3D Data Science is changing how industries from healthcare and manufacturing to entertainment and urban planning work with data. Analyzing, manipulating, and visualizing data in three dimensions exposes depth and structure that 2D representations flatten or lose. This guide covers the essential systems, tools, and techniques used in 3D Data Science, providing an overview for data scientists, engineers, and researchers who want to apply them.

1. Understanding 3D Data Science

What is 3D Data Science?

3D Data Science is the study and application of methods for processing, analyzing, and visualizing data that exists in three dimensions. This type of data can be spatial, volumetric, or multi-dimensional, and it often requires specialized tools and techniques for effective analysis. Unlike traditional 2D data, which lies in a flat plane, 3D data captures the depth and structure of objects or environments, providing a richer understanding of the subject matter.

This field merges various disciplines, including computer graphics, spatial analysis, machine learning, and data visualization, to handle and interpret data in three dimensions. 3D Data Science encompasses a wide range of data types such as 3D point clouds, meshes, voxel grids, and even multi-dimensional time-series data with spatial components.

In practical terms, 3D Data Science is used to create digital models of physical objects or environments, simulate real-world processes, and enhance the accuracy of predictions in fields like healthcare, manufacturing, and urban planning. This approach not only improves the ability to analyze complex structures but also enhances the ways we visualize and interact with data, leading to more informed decisions and innovative solutions across various industries.

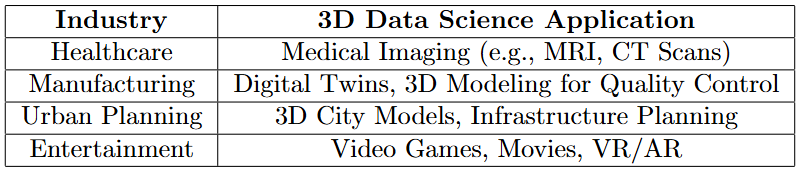

2. Applications of 3D Data Science

Revolutionizing Healthcare with 3D Medical Imaging

In the healthcare industry, 3D Data Science plays a pivotal role, particularly in medical imaging. Techniques such as MRI and CT scans produce image volumes from which detailed 3D models of organs, tissues, and bones can be reconstructed. These models help doctors diagnose conditions more accurately and plan treatments with greater precision. For instance, surgeons can use 3D reconstructions of patient anatomy to rehearse complex procedures before entering the operating room, which can help reduce the risk of complications.

Enhancing Manufacturing with 3D Scanning and Modeling

Manufacturing has seen a significant transformation thanks to 3D Data Science. The use of 3D scanning and modeling in product design allows engineers to create highly detailed digital prototypes, which can be analyzed and refined before moving to production. This process not only speeds up the design phase but also enhances quality control by identifying potential flaws early on. Additionally, 3D data is instrumental in reverse engineering, enabling manufacturers to reconstruct and improve existing products with remarkable accuracy.

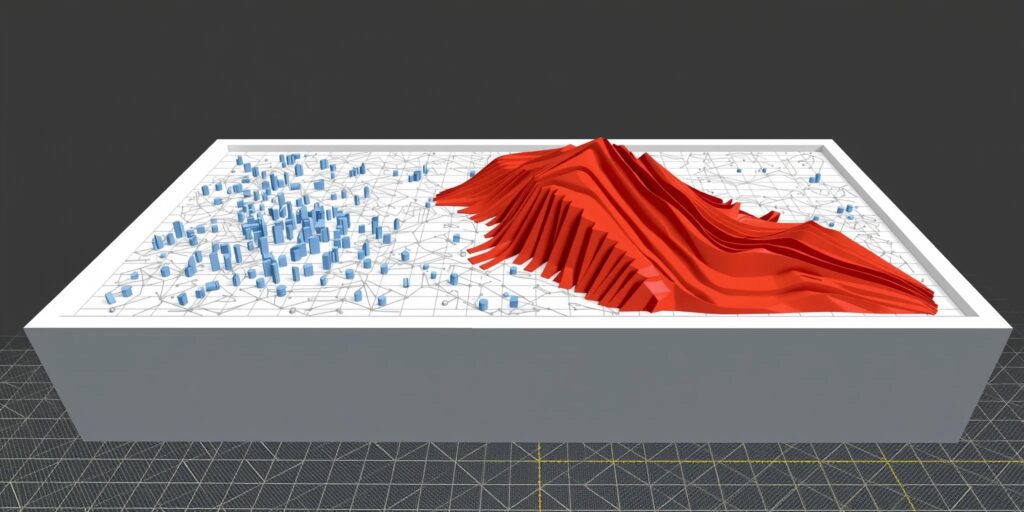

Optimizing Urban Planning with 3D City Models

Urban planning is another domain where 3D Data Science is making a significant impact. By creating 3D models of cities, urban planners can simulate the effects of new infrastructure projects, such as buildings, roads, and bridges, on the environment and existing cityscape. These simulations help planners make informed decisions about land use, transportation systems, and disaster management. Moreover, 3D city models are essential for environmental simulations, such as predicting flood zones or analyzing air quality, leading to more sustainable and resilient urban developments.

Elevating Entertainment with Immersive 3D Experiences

The entertainment industry relies heavily on 3D Data Science to create captivating experiences. In video games and movies, 3D models and environments are the foundation of immersive storytelling. 3D animation and motion capture technologies enable the creation of lifelike characters and realistic scenes, enhancing the audience’s experience. Additionally, virtual reality (VR) and augmented reality (AR) platforms leverage 3D data to create interactive worlds that blur the line between fiction and reality, providing users with a deeper level of engagement.

3. Key Systems in 3D Data Science

3.1. 3D Scanning Systems

3D scanning systems are the backbone of 3D Data Science, enabling the capture of real-world objects and environments in digital form. These systems vary in their methodologies but all share the goal of accurately recording the geometry, structure, and sometimes the color of objects in three dimensions.

LiDAR (Light Detection and Ranging) is a prominent 3D scanning technology that uses laser pulses to measure distances to a target. LiDAR is widely used in geographic information systems (GIS) for creating high-resolution topographic maps and in autonomous vehicles for navigation and obstacle detection. The precision and range of LiDAR make it indispensable for capturing detailed 3D data over large areas.
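
The ranging principle behind LiDAR can be sketched in a few lines. This is a minimal illustration, not how any particular sensor's firmware works: the function names `pulse_range` and `to_cartesian` are hypothetical, and the sketch assumes an idealized pulse with known beam angles and no atmospheric or timing corrections.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact, by SI definition)


def pulse_range(round_trip_seconds: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_cartesian(distance: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range measurement plus beam angles into an (x, y, z) point."""
    horizontal = distance * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = distance * math.sin(elevation_rad)
    return (x, y, z)


# A pulse that returns after 200 nanoseconds hit something roughly 30 m away.
d = pulse_range(200e-9)
point = to_cartesian(d, math.radians(90.0), 0.0)
```

Repeating this conversion for every pulse in a sweep is what turns a stream of timing measurements into a point cloud.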

Photogrammetry is another essential 3D scanning technique that involves taking multiple photographs of an object or scene from different angles and processing them to generate a 3D model. This method is particularly popular in fields like architecture, archaeology, and gaming due to its cost-effectiveness and the ability to capture complex textures and details.

Structured Light Scanners use a different approach by projecting a pattern of light onto an object and capturing the deformation of this pattern with a camera system. This technique is excellent for capturing the fine geometry of objects, making it ideal for industrial design, quality control, and biometrics. Structured Light Scanners offer high accuracy and are often used in reverse engineering and medical applications.

3.2. 3D Data Management Systems

3D data management systems are crucial for storing, processing, and analyzing the vast amounts of data generated by 3D scanning systems. These systems are designed to handle the unique challenges posed by 3D data, such as the complexity and size of datasets.

Point Cloud Databases are specialized systems for managing 3D point cloud data, which are collections of data points sampled from the surface of an object or environment. They are typically used alongside tools such as PDAL (Point Data Abstraction Library), a library for translating, filtering, and processing point cloud data, and Potree, a web-based renderer for viewing large point clouds in a browser. These tools are essential for applications in urban planning, forestry, and construction, where large-scale 3D models must be managed efficiently.

Volumetric Data Systems are designed to handle 3D data that represents volumes or spaces, such as medical imaging data. In healthcare, DICOM (Digital Imaging and Communications in Medicine) is the standard for storing and exchanging medical images, covering both the file format and the network protocol. VTK (Visualization Toolkit) is an open-source library for processing and visualizing volumetric data across various fields, including biomedical research and engineering. Together, such standards and libraries allow users to explore the internal structures of objects, which is critical for applications like tumor detection and material analysis.

4. Essential Tools for 3D Data Science

4.1. Data Processing Tools

Data processing is a critical step in 3D Data Science, involving the manipulation and refinement of raw 3D data to prepare it for analysis and visualization. Several tools are available to help professionals handle this complex data efficiently.

MeshLab is a powerful open-source tool specifically designed for processing and editing 3D triangular meshes. It provides a comprehensive suite of tools for cleaning, repairing, and optimizing 3D models. MeshLab is widely used in fields such as cultural heritage preservation and 3D printing, where precise control over mesh structure is essential.

CloudCompare is another key tool, specializing in the processing of 3D point clouds. Originally designed for comparing dense point clouds, such as those acquired with a laser scanner, CloudCompare has become a go-to solution for various applications, including photogrammetry and geospatial analysis. It offers features like point cloud registration, filtering, and comparison, making it a strong choice for those working with large-scale 3D datasets.

Blender, while best known as a 3D modeling and animation software, also offers robust capabilities for data visualization and simulation. Its open-source nature and extensive scripting capabilities make it a flexible tool for integrating 3D data into complex pipelines. Blender is frequently used in scientific visualization, architectural design, and virtual reality projects.

4.2. Data Analysis and Machine Learning Tools

3D Data Science increasingly relies on machine learning and deep learning techniques to extract insights from complex datasets. Several tools and libraries are optimized for working with 3D data in these contexts.

TensorFlow Graphics is a library built on top of TensorFlow for 3D deep learning. It provides differentiable graphics layers, such as camera models, reflectance models, and mesh convolutions, that can be combined into networks for tasks such as 3D object recognition, scene understanding, and rendering. This library is particularly valuable in computer vision and augmented reality applications, where understanding the spatial structure of the environment is crucial.

PyTorch3D is a library built on PyTorch, tailored for handling 3D data. It supports various 3D computer vision tasks, including reconstruction, recognition, and rendering. With its flexible API and integration with the broader PyTorch ecosystem, PyTorch3D is ideal for researchers and developers working on cutting-edge 3D AI applications.

Open3D is another versatile library designed for rapid development in 3D data processing. It includes a rich set of features for machine learning, computer vision, and visualization. Open3D is particularly well-suited for robotics, autonomous vehicles, and 3D mapping projects, where real-time processing and analysis are essential.

4.3. Visualization Tools

Visualization is a crucial aspect of 3D Data Science, enabling users to explore and interpret complex 3D datasets effectively. Several tools are designed to facilitate high-quality 3D visualization across various platforms.

ParaView is an open-source, multi-platform data analysis and visualization application. It’s particularly adept at handling large 3D datasets, making it ideal for use in fields like climate science, fluid dynamics, and material science. ParaView supports a wide range of visualization techniques, from volume rendering to surface mapping, and can be extended with custom plugins for specialized needs.

Unity 3D is a real-time development platform that is widely used in gaming but also has powerful capabilities for creating interactive 3D visualizations. Its versatility makes it a popular choice for applications in education, architecture, and medical training, where engaging, real-time interaction with 3D data is critical.

Plotly is a graphing library whose 3D chart types, including 3D scatter, surface, and mesh plots, enable the creation of interactive, publication-quality 3D plots. It is accessible to data scientists and developers who need to integrate 3D visualization into web applications or dashboards. It is particularly useful in fields like finance, engineering, and scientific research, where clear and interactive visual representation of 3D data is essential for communicating insights.

5. 3D Data Science Techniques

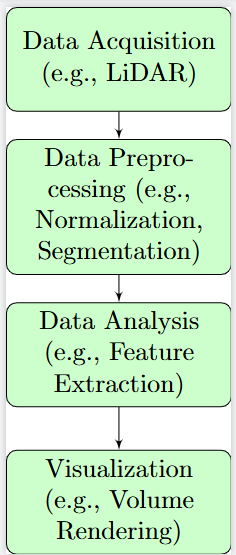

5.1. 3D Data Preprocessing

Preprocessing is a critical step in 3D Data Science, ensuring that data is prepared and standardized before any analysis or modeling is performed. Several key techniques are involved in this process:

Normalization is the practice of adjusting the position and scale of 3D data so that different datasets share a common reference frame. This is particularly important when combining data from multiple sources, such as LiDAR scans and photogrammetry, which may use different units and origins. Normalization makes datasets comparable and easier to analyze together; precisely aligning overlapping scans is a related step usually called registration.
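
One common form of normalization, centering a point cloud on its centroid and scaling it to fit inside a unit sphere, can be sketched as follows. This is an illustrative assumption about what "a common scale" means here; other conventions (unit cube, metric units) are equally valid, and the function name `normalize_unit_sphere` is hypothetical.

```python
import math


def normalize_unit_sphere(points):
    """Centre points on their centroid and scale so the farthest lies at radius 1."""
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    centered = [tuple(p[i] - centroid[i] for i in range(3)) for p in points]
    radius = max(math.sqrt(sum(c * c for c in q)) for q in centered)
    if radius == 0.0:  # all points coincide; nothing to scale
        return centered
    return [tuple(c / radius for c in q) for q in centered]


cloud = [(10.0, 0.0, 0.0), (14.0, 0.0, 0.0), (12.0, 2.0, 0.0), (12.0, -2.0, 0.0)]
unit = normalize_unit_sphere(cloud)
```

After this step, two scans of the same object taken at different distances or in different units occupy the same numeric range, which is what most learning models expect.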

Segmentation involves dividing a 3D model or dataset into distinct, meaningful parts. This technique is crucial in applications like medical imaging, where it is used to isolate and analyze specific anatomical structures such as organs, bones, or tumors. Segmentation enhances the focus on relevant areas of a dataset, making subsequent analysis more targeted and efficient.
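
A very simple classical approach to segmentation, thresholding followed by connected-component labelling, gives a feel for the idea. It is only a sketch: production medical segmentation typically relies on far more sophisticated methods, and the function name `label_components`, the nested-list volume layout, and the threshold value are assumptions made for illustration.

```python
from collections import deque


def label_components(volume, threshold):
    """Label 6-connected groups of voxels whose value is >= threshold.

    `volume` is a nested list indexed as volume[z][y][x].
    Returns a dict mapping (z, y, x) -> component id (1, 2, ...).
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    labels = {}
    next_id = 0
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                if volume[z][y][x] < threshold or (z, y, x) in labels:
                    continue
                # Start a new segment and flood-fill it breadth-first.
                next_id += 1
                labels[(z, y, x)] = next_id
                queue = deque([(z, y, x)])
                while queue:
                    cz, cy, cx = queue.popleft()
                    for dz, dy, dx in neighbours:
                        nz, ny, nx = cz + dz, cy + dy, cx + dx
                        inside = 0 <= nz < depth and 0 <= ny < height and 0 <= nx < width
                        if inside and (nz, ny, nx) not in labels and volume[nz][ny][nx] >= threshold:
                            labels[(nz, ny, nx)] = next_id
                            queue.append((nz, ny, nx))
    return labels


# Two bright blobs separated by dark voxels in a 1 x 3 x 5 volume.
volume = [[
    [9, 9, 0, 0, 8],
    [9, 0, 0, 0, 8],
    [0, 0, 0, 0, 0],
]]
segments = label_components(volume, threshold=5)
```

Each resulting label identifies one spatially connected region, which can then be measured or analyzed on its own.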

Feature Extraction is the process of identifying and quantifying important characteristics of 3D data, such as edges, surfaces, and textures. These features are essential for various tasks, including object recognition, scene understanding, and quality control in manufacturing. Feature extraction distills large datasets into more manageable, informative elements that can be used in machine learning models or other analyses.
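
As a small example of a geometric feature, the sketch below computes the unit normal and area of a single mesh triangle from the cross product of two of its edges. Per-face normals and areas are only one of many possible features, and the function name `face_normal_and_area` is a hypothetical choice for this illustration.

```python
import math


def face_normal_and_area(a, b, c):
    """Unit normal and area of triangle (a, b, c) from the cross product of two edges."""
    u = tuple(b[i] - a[i] for i in range(3))
    v = tuple(c[i] - a[i] for i in range(3))
    cross = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    length = math.sqrt(sum(x * x for x in cross))
    if length == 0.0:
        raise ValueError("degenerate triangle: vertices are collinear")
    normal = tuple(x / length for x in cross)
    return normal, length / 2.0  # the cross product's length is twice the area


normal, area = face_normal_and_area((0, 0, 0), (1, 0, 0), (0, 1, 0))
```

Comparing the normals of neighbouring faces is one simple way to find sharp edges, since a large angle between them indicates a crease in the surface.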

5.2. Machine Learning in 3D

Machine learning is transforming how 3D data is analyzed and interpreted, with several specialized techniques emerging to handle the complexities of three-dimensional datasets.

3D Convolutional Neural Networks (3D CNNs) extend the 2D CNNs widely used in image processing to 3D data such as volumetric images. They slide their kernels along three axes instead of two, which makes them effective for tasks such as 3D object detection, medical image analysis, and video analysis, where time serves as the third axis. These networks are particularly valuable in fields like healthcare and autonomous driving, where understanding the depth and structure of data is crucial.
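
The core operation of a 3D CNN layer can be written out naively to show what "convolving across three dimensions" means. This sketch assumes a single channel, stride 1, and no padding, and uses plain nested lists for clarity; deep learning libraries implement the same arithmetic far more efficiently. The function name `conv3d_valid` is hypothetical.

```python
def conv3d_valid(volume, kernel):
    """Naive 3D cross-correlation (what deep learning libraries call convolution),
    stride 1 and no padding. Inputs are nested lists indexed [z][y][x]."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for z in range(depth - kd + 1):
        plane = []
        for y in range(height - kh + 1):
            row = []
            for x in range(width - kw + 1):
                total = 0.0
                # Weighted sum of the kd x kh x kw neighbourhood starting at (z, y, x).
                for dz in range(kd):
                    for dy in range(kh):
                        for dx in range(kw):
                            total += volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                row.append(total)
            plane.append(row)
        out.append(plane)
    return out


# A 3x3x3 volume of ones filtered with a 2x2x2 averaging kernel.
volume = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
kernel = [[[0.125] * 2 for _ in range(2)] for _ in range(2)]
features = conv3d_valid(volume, kernel)
```

The output here is a 2x2x2 grid, one value per position where the kernel fits entirely inside the volume. A trained network learns the kernel values instead of fixing them by hand.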

Graph Neural Networks (GNNs) are another advanced technique, particularly useful for analyzing 3D meshes where data is structured as graphs rather than regular grids. GNNs can effectively model the relationships between different points in a mesh, making them ideal for tasks like 3D shape analysis, mesh segmentation, and graph-based 3D reconstruction. GNNs are often used in computer graphics and robotics, where understanding the connections and interactions within a 3D structure is essential.
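
The basic building block of many GNNs, one round of message passing over mesh connectivity, can be sketched as below. This is a deliberately stripped-down illustration: it uses mean aggregation with no learned weights or nonlinearity, which a real GNN layer would include, and the function names `mesh_adjacency` and `message_passing_step` are hypothetical.

```python
def mesh_adjacency(num_vertices, faces):
    """Build vertex neighbour sets from triangle faces (each face is 3 vertex indices)."""
    neighbours = [set() for _ in range(num_vertices)]
    for a, b, c in faces:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))
    return neighbours


def message_passing_step(features, neighbours):
    """One round of mean aggregation: each vertex averages its own feature vector
    with the mean of its neighbours' vectors. A trained GNN would apply learned
    weight matrices and a nonlinearity here; both are omitted in this sketch."""
    updated = []
    for v, own in enumerate(features):
        if not neighbours[v]:
            updated.append(list(own))
            continue
        dim = len(own)
        count = len(neighbours[v])
        mean = [sum(features[n][i] for n in neighbours[v]) / count for i in range(dim)]
        updated.append([(own[i] + mean[i]) / 2.0 for i in range(dim)])
    return updated


# Two triangles sharing the edge (1, 2); one scalar feature per vertex.
faces = [(0, 1, 2), (1, 3, 2)]
adjacency = mesh_adjacency(4, faces)
features = [[4.0], [0.0], [0.0], [0.0]]
smoothed = message_passing_step(features, adjacency)
```

After one step, information from vertex 0 has reached its direct neighbours but not vertex 3; stacking more steps lets each vertex see a wider neighbourhood of the mesh.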

Reinforcement Learning is increasingly applied in scenarios where 3D simulations are used to train agents, such as in robotics. In these environments, an agent learns to perform tasks by interacting with a 3D simulation of the real world, receiving feedback in the form of rewards or penalties. This approach is valuable in fields where physical experimentation is costly or dangerous, as it allows for extensive training and optimization in a virtual setting.
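
To make the reward-driven learning loop concrete, the sketch below shows a single update step of tabular Q-learning, one of the simplest reinforcement learning algorithms, on a toy 3D grid. The choice of Q-learning, the grid world, the learning rate, and the discount factor are all assumptions for illustration; robotics work generally uses physics simulators and function approximation instead of a lookup table.

```python
def q_update(q_table, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update for a single simulated transition."""
    best_next = max(q_table.get((next_state, a), 0.0) for a in actions)
    old = q_table.get((state, action), 0.0)
    q_table[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q_table[(state, action)]


# States are voxel coordinates in a simulated 3D grid; actions are unit moves.
ACTIONS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
q = {}
# The agent moved up from (0, 0, 0) to the goal cell (0, 0, 1) and received reward 1.
value = q_update(q, (0, 0, 0), (0, 0, 1), 1.0, (0, 0, 1), ACTIONS)
```

Repeating this update over many simulated episodes gradually raises the estimated value of actions that lead toward the reward, without any physical trial.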

5.3. 3D Data Visualization Techniques

Visualization is a key aspect of 3D Data Science, helping to transform complex datasets into understandable and actionable insights. Several visualization techniques are particularly important for interpreting 3D data:

Heatmaps in 3D are used to represent the intensity or density of data points across a 3D space. These visualizations are useful in fields like geospatial analysis, urban planning, and medical imaging, where it’s important to understand the distribution and concentration of certain features within a volume. 3D heatmaps provide a clear, intuitive way to visualize patterns that might be difficult to discern in raw data.
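
The data behind a 3D heatmap is usually a per-voxel count or intensity. A minimal sketch of computing point density on a regular grid follows; the function name `voxel_density` and the voxel size are hypothetical, and rendering the counts as colors is left to whichever visualization tool is in use.

```python
import math
from collections import Counter


def voxel_density(points, voxel_size):
    """Count how many points fall in each cubic voxel of edge length `voxel_size`."""
    counts = Counter()
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        counts[key] += 1
    return counts


points = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.9), (1.5, 0.2, 0.1), (0.9, 0.9, 0.9), (-0.5, 0.0, 0.0)]
density = voxel_density(points, voxel_size=1.0)
hottest_voxel, hottest_count = density.most_common(1)[0]
```

Using `math.floor` instead of `int` keeps negative coordinates in the correct voxel, since `int` truncates toward zero.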

Surface Rendering is a technique used to create 3D surfaces from data points or volumetric data. This method is widely used in medical imaging to generate 3D representations of organs, bones, and other anatomical structures. Surface rendering allows for detailed exploration of the outer contours of objects, making it easier to identify abnormalities or specific features of interest.

Volume Rendering goes beyond surface representations to visualize data inside a 3D space, capturing internal structures and relationships within a volume. This technique is essential for understanding complex, layered data such as human organs, geological formations, or fluid dynamics. Volume rendering provides a more comprehensive view of 3D data, enabling deeper insights into the internal composition and interactions within a dataset.
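
One widely used way to turn a volume into an image is to march a ray through it and blend the samples front to back. The sketch below shows that compositing step for a single ray. It assumes the samples have already been mapped to an intensity and an opacity (the job of a transfer function), and the function name `composite_ray` and the termination threshold are illustrative choices.

```python
def composite_ray(samples):
    """Front-to-back alpha compositing of (intensity, opacity) samples along one ray.

    Returns the accumulated intensity and the accumulated opacity.
    """
    color = 0.0
    alpha = 0.0
    for intensity, opacity in samples:
        weight = (1.0 - alpha) * opacity  # light not yet blocked by nearer samples
        color += weight * intensity
        alpha += weight
        if alpha >= 0.999:  # early ray termination: the ray is effectively opaque
            break
    return color, alpha


# Three samples along a ray, nearest first: faint haze, a semi-opaque layer, a bright core.
ray = [(0.2, 0.1), (0.5, 0.5), (1.0, 1.0)]
pixel, coverage = composite_ray(ray)
```

Because nearer samples only partially block those behind them, internal structures contribute to the final pixel, which is what distinguishes volume rendering from surface rendering.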

6. Best Practices in 3D Data Science

6.1. Ensuring Data Integrity

In 3D Data Science, maintaining data integrity is paramount. 3D data must accurately represent the real-world objects or environments it models, as even minor errors can lead to significant inaccuracies in analysis and decision-making. To ensure data integrity, it’s essential to use high-quality data acquisition methods, such as precise 3D scanning techniques like LiDAR or photogrammetry. Regularly validating and verifying data against known standards or real-world measurements can also help maintain accuracy. In applications like medical imaging or urban planning, where decisions based on 3D data can have serious consequences, rigorous quality control is non-negotiable.

6.2. Performance Optimization

3D data processing is often computationally intensive, requiring substantial resources for tasks like rendering, analysis, and machine learning. To address these challenges, performance optimization techniques are crucial. One common approach is downsampling, which reduces the size of 3D datasets by selecting a representative subset of data points, thus decreasing computational load without significantly sacrificing detail. Additionally, employing efficient data structures such as octrees for spatial partitioning or k-d trees for nearest neighbor searches can drastically improve processing speed. Optimizing code, leveraging parallel computing, and utilizing GPUs for intensive calculations are also effective strategies for managing the computational demands of 3D Data Science.
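
Voxel-grid downsampling is one common way to thin a point cloud: all points that fall in the same grid cell are replaced by their centroid. The sketch below is a dependency-free illustration of that idea; the function name `voxel_downsample` is hypothetical, and point cloud libraries offer optimized equivalents.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points inside each voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(p[i] for p in group) / len(group) for i in range(3))
        for group in buckets.values()
    ]


dense = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (0.4, 0.0, 0.0), (2.0, 2.0, 2.0)]
sparse = voxel_downsample(dense, voxel_size=1.0)
```

The voxel size sets the trade-off directly: larger voxels mean fewer output points and less fine detail.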

6.3. Interdisciplinary Collaboration

3D Data Science is inherently interdisciplinary, often requiring collaboration between computer scientists, engineers, and domain-specific experts. For instance, in healthcare applications, data scientists may need to work closely with medical professionals to ensure that 3D models and analyses are clinically relevant and accurate. Similarly, in manufacturing or urban planning, collaboration with mechanical engineers or architects can provide essential insights that guide the interpretation and application of 3D data. Effective collaboration across disciplines ensures that 3D Data Science projects are grounded in domain-specific knowledge, leading to more robust and applicable results. Fostering communication, using interdisciplinary tools and platforms, and creating cross-functional teams are all critical for successful collaboration.

7. Future Trends in 3D Data Science

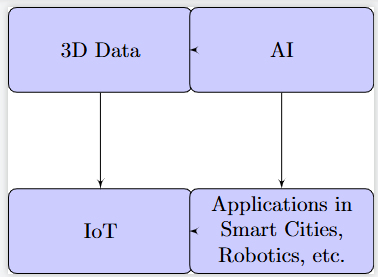

7.1. Integration with AI and IoT

The future of 3D Data Science is deeply intertwined with advancements in Artificial Intelligence (AI) and the Internet of Things (IoT). As 3D data becomes more prevalent, its integration with AI will enable the development of more intelligent and autonomous systems. For instance, in smart cities, 3D models of urban environments, combined with real-time data from IoT devices, will allow for sophisticated monitoring and management of infrastructure, traffic, and public services. In robotics, 3D data coupled with AI algorithms will enhance robots’ ability to navigate and interact with complex environments, leading to more autonomous and adaptive systems. This synergy between 3D Data Science, AI, and IoT promises to revolutionize industries by creating systems that can learn, adapt, and optimize in real-time.

7.2. Advances in 3D Data Acquisition

Emerging technologies are set to push the boundaries of 3D data acquisition, offering greater precision and detail. Quantum LiDAR, which is still largely at the research stage, is one such advancement; it aims to deliver higher-resolution 3D scans with greater accuracy and over longer distances than traditional LiDAR systems. If it matures, this technology could be transformative for fields like autonomous driving and geospatial analysis, where the need for precise environmental mapping is critical. Similarly, photogrammetry techniques continue to evolve, enabling faster and more accurate 3D modeling from images. These advances are expected to reduce the cost and complexity of 3D data collection, making it more accessible and enabling new applications across a broader range of industries.

7.3. Virtual Reality (VR) and Augmented Reality (AR)

Virtual Reality (VR) and Augmented Reality (AR) are poised to become increasingly reliant on 3D Data Science for creating immersive and interactive experiences. In consumer applications, VR and AR technologies are already being used for gaming, education, and virtual tourism, with 3D data playing a central role in rendering realistic environments and objects. In the industrial sector, these technologies are being applied to training simulations, remote collaboration, and product design. As 3D data science continues to evolve, the fidelity and interactivity of VR and AR experiences will improve, leading to more seamless integration of digital and physical worlds. This trend is expected to expand the use of VR and AR across industries, making them indispensable tools for both consumer and professional applications.

Setting Up a 3D Data Science Project

Embarking on a 3D Data Science project requires careful planning and the right tools and techniques to ensure success. Here’s a step-by-step guide to help you set up your project from start to finish.

1. Define the Project Scope and Objectives

1.1. Identify the Problem

- Determine the core problem you want to solve using 3D data. For instance, are you developing a model for medical imaging, urban planning, or 3D object detection?

- Set clear objectives: What do you hope to achieve? Improved accuracy, faster processing times, or new insights?

1.2. Establish the Project’s Scope

- Define the boundaries of your project: What specific data will you use? What tools and methods are applicable?

- Identify any constraints: Budget, time, hardware limitations, or data availability.

2. Assemble the Team and Resources

2.1. Build a Multidisciplinary Team

- Data Scientists: Experts in machine learning and data processing.

- Domain Experts: Specialists in the field you are targeting, such as medical professionals or urban planners.

- Engineers/Developers: For setting up the data pipelines, developing tools, and integrating models.

- Project Manager: To oversee progress, manage resources, and ensure that objectives are met on time.

2.2. Gather Necessary Tools and Software

- 3D Data Acquisition Tools: LiDAR, photogrammetry systems, or 3D scanners.

- Processing Software: Tools like Blender, MeshLab, and CloudCompare for handling and refining raw data.

- Data Management Systems: Set up databases such as Point Cloud Databases or Volumetric Data Systems to store and query your data.

- Analysis Tools: Choose machine learning libraries like TensorFlow Graphics, PyTorch3D, or Open3D for analysis and modeling.

3. Data Acquisition and Preprocessing

3.1. Collect the Data

- Primary Data: Use 3D scanning tools like LiDAR or structured light scanners to capture the data you need.

- Secondary Data: Supplement with publicly available datasets or previously collected data relevant to your project.

3.2. Preprocess the Data

- Normalization: Ensure all datasets are in a consistent scale and reference frame.

- Segmentation: Divide the 3D models into meaningful parts, especially important in medical imaging or object recognition.

- Feature Extraction: Identify and isolate key features such as edges, surfaces, and textures.

4. Model Development and Training

4.1. Choose the Appropriate Models

- 3D Convolutional Neural Networks (3D CNNs): For analyzing volumetric data or performing object detection in 3D space.

- Graph Neural Networks (GNNs): Ideal for 3D meshes and tasks where data is best represented as graphs.

- Reinforcement Learning: If your project involves training agents in simulated 3D environments, such as robotics.

4.2. Train the Model

- Data Splitting: Divide your data into training, validation, and test sets.

- Model Training: Use libraries like TensorFlow Graphics or PyTorch3D to train your models on the prepared data.

- Hyperparameter Tuning: Experiment with different parameters to optimize model performance.
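
The data-splitting step above can be sketched as follows. The 70/15/15 proportions, the fixed seed, and the `scan_...` identifiers are assumptions for illustration, and `split_dataset` is a hypothetical helper, not part of any library named in this guide.

```python
import random


def split_dataset(items, train=0.7, val=0.15, seed=0):
    """Shuffle items reproducibly and cut them into train, validation, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Hypothetical scan identifiers standing in for real files.
scans = [f"scan_{i:03d}" for i in range(20)]
train_set, val_set, test_set = split_dataset(scans)
```

When several scans come from the same patient, site, or object, it is usually safer to split by that group instead of by individual scan, so that near-duplicates do not leak between the training and test sets.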

5. Visualization and Analysis

5.1. Visualize the Data and Results

- Use tools like ParaView, Unity 3D, or Plotly 3D to create interactive and insightful visualizations of your data and model outputs.

- Surface Rendering and Volume Rendering: Essential for interpreting medical images, geological data, or any other complex 3D structures.

5.2. Analyze the Results

- Evaluate Model Performance: Use metrics suited to the task, such as accuracy, precision, recall, and F1-score for classification, or overlap measures such as IoU and the Dice coefficient for segmentation.

- Interpret Visualizations: Ensure that the visualizations align with the project’s objectives and provide actionable insights.
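
The evaluation metrics mentioned above can be computed directly from predicted and true labels. The sketch below handles the binary case on flattened voxel masks; it also reports intersection over union (IoU), which is added here as an assumption because it is a common overlap measure for segmentation. The function name `binary_metrics` and the sample masks are hypothetical.

```python
def binary_metrics(predicted, actual):
    """Precision, recall, F1, and IoU for two equal-length sequences of 0/1 labels."""
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Flattened voxel masks: 1 marks a voxel labelled as part of the structure.
predicted = [1, 1, 1, 0, 0, 0, 1, 0]
actual = [1, 1, 0, 0, 0, 1, 1, 0]
scores = binary_metrics(predicted, actual)
```

Plain accuracy is omitted on purpose: in volumetric data the background usually dominates, so a model that predicts "empty" everywhere can score high accuracy while finding nothing.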

6. Performance Optimization

6.1. Optimize Data Processing

- Downsampling: Reduce the size of your datasets to improve processing speed while retaining essential information.

- Efficient Data Structures: Implement structures like octrees or k-d trees to improve data access and processing times.
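
To illustrate why spatial data structures help, here is a minimal k-d tree with a nearest-neighbour query. It is a teaching sketch under simplifying assumptions (a static point set, nested dictionaries as nodes, recursion instead of an explicit stack); the function names are hypothetical, and production code would normally use an existing library implementation.

```python
def build_kdtree(points, depth=0):
    """Recursively split points on x, y, z in turn; returns nested dicts or None."""
    if not points:
        return None
    axis = depth % 3
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return {
        "point": ordered[mid],
        "axis": axis,
        "left": build_kdtree(ordered[:mid], depth + 1),
        "right": build_kdtree(ordered[mid + 1:], depth + 1),
    }


def squared_distance(a, b):
    """Squared Euclidean distance; avoids a square root when only comparing."""
    return sum((a[i] - b[i]) ** 2 for i in range(3))


def nearest(node, target, best=None):
    """Return the stored point closest to `target`, pruning subtrees that cannot win."""
    if node is None:
        return best
    if best is None or squared_distance(node["point"], target) < squared_distance(best, target):
        best = node["point"]
    diff = target[node["axis"]] - node["point"][node["axis"]]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, target, best)
    # Only cross the splitting plane if a closer point could lie on the other side.
    if diff * diff < squared_distance(best, target):
        best = nearest(far, target, best)
    return best


cloud = [(2, 3, 1), (5, 4, 7), (9, 6, 2), (4, 7, 9), (8, 1, 5), (7, 2, 6)]
tree = build_kdtree(cloud)
closest = nearest(tree, (9, 2, 4))
```

The pruning test is where the speed-up comes from: whole subtrees are skipped whenever the splitting plane is farther away than the best match found so far.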

6.2. Optimize Model Performance

- Parallel Processing: Use GPU acceleration or distributed computing to speed up model training and inference.

- Model Pruning: Simplify the model by removing unnecessary parameters or layers to increase efficiency with little or no loss of accuracy.
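
Magnitude pruning is one simple pruning strategy: the weights with the smallest absolute values are set to zero on the assumption that they contribute least. The sketch below applies it to a flat list of weights. The function name `magnitude_prune`, the sample weights, and the 50% sparsity level are hypothetical, and in practice a pruned model is usually fine-tuned and re-evaluated afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(layer, sparsity=0.5)
```

The surviving weights keep their original positions and values, so the layer's shape is unchanged; the efficiency gain comes from storing or computing with the resulting sparse structure.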

7. Deployment and Maintenance

7.1. Deploy the Model

- Integrate the trained model into a production environment using API services or embed it into applications (e.g., web-based tools or mobile apps).

- Ensure that the deployment setup can handle real-time data processing if required.

7.2. Continuous Monitoring and Updates

- Monitor Model Performance: Keep track of model accuracy and performance post-deployment. Adjust as needed based on new data or changing conditions.

- Iterate and Improve: Regularly update the model and processing pipelines with new data and improved algorithms to maintain or enhance performance.

8. Documentation and Reporting

8.1. Document the Process

- Keep detailed records of the project scope, methodologies, tools used, and results obtained.

- Ensure that all team members have access to the documentation for future reference and project continuity.

8.2. Report Findings

- Prepare reports or presentations to communicate the results and insights gained from the project.

- Tailor the reports to different stakeholders, providing high-level summaries for executives and detailed analysis for technical teams.

9. Future Planning

9.1. Plan for Scalability

- Consider how your project can be scaled up in the future, either by incorporating more data, extending to new applications, or integrating with other systems.

9.2. Explore Future Trends

- Stay updated with emerging technologies such as Quantum LiDAR, advanced photogrammetry, and their potential impact on your projects.

Setting up a 3D Data Science project involves multiple stages, from defining the problem and assembling a team to deploying and maintaining the solution. By following these steps and choosing tools and techniques that fit the problem, you can keep your project well-organized and efficient and improve its chances of success.

Conclusion

3D Data Science is not just a niche field; it is a rapidly expanding frontier that blends traditional data science with cutting-edge spatial analysis and visualization techniques. As industries continue to evolve and embrace the power of 3D data, the ability to process, analyze, and visualize information in three dimensions will become increasingly critical. The tools, systems, and techniques discussed in this guide offer a solid foundation for anyone looking to delve into this exciting area.

Professionals across various sectors, from healthcare and manufacturing to urban planning and entertainment, can use 3D Data Science to unlock deeper insights, enhance decision-making, and drive innovation. Whether you are an experienced data scientist or just beginning your journey, developing expertise in 3D Data Science is likely to be a significant advantage as the demand for advanced 3D data processing and analysis continues to rise.

Further Reading and Resources

For those looking to dive deeper into 3D Data Science, here are some valuable resources:

- Point Cloud Library (PCL): Explore this comprehensive library for 3D point cloud processing, which is essential for handling LiDAR data and 3D reconstruction.

- Blender: Learn about Blender, a powerful open-source tool for 3D modeling, animation, and simulation. It’s widely used in various industries for creating and visualizing complex 3D models.

- TensorFlow Graphics: Discover TensorFlow Graphics, a specialized library within TensorFlow that focuses on 3D deep learning models, particularly useful in computer vision and graphics.

- Open3D: Delve into Open3D, an open-source library designed for rapid development in 3D data processing, including machine learning and visualization.

- ParaView: Learn about ParaView, an open-source, multi-platform application for 3D data analysis and visualization, ideal for handling large datasets.

- Unity 3D: Explore Unity 3D, a real-time development platform not just for gaming, but also for creating interactive 3D visualizations in various industrial applications.