Random Forest has firmly established itself as a powerful tool in the world of machine learning, offering a robust way to make accurate predictions across a variety of applications. But to truly appreciate its capabilities, it’s essential to delve deeper into its underlying mechanics and explore how it can be fine-tuned for specific use cases.

The Foundation: Decision Trees and Bagging

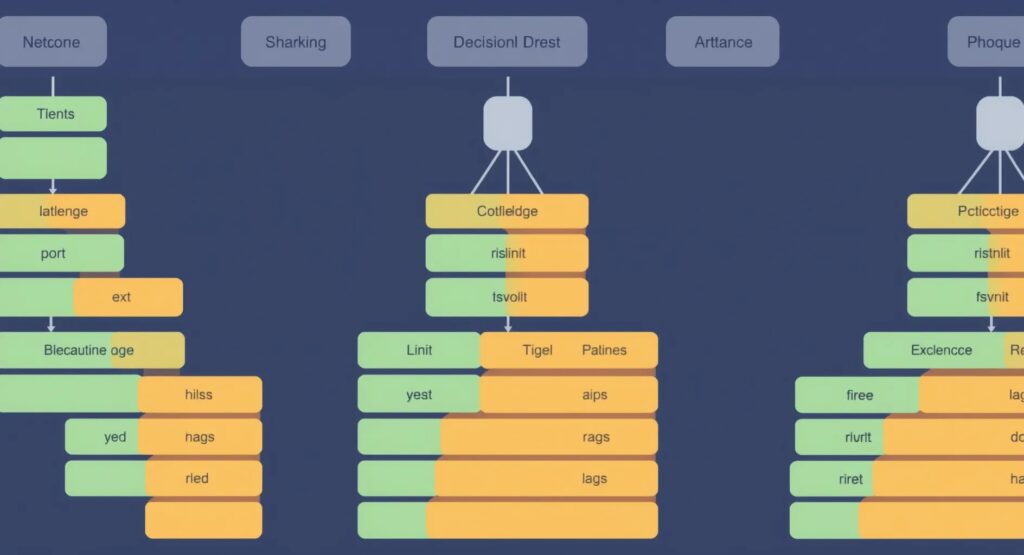

To understand Random Forest, we first need to grasp the basics of decision trees and bagging (Bootstrap Aggregating). A decision tree is a simple yet powerful model that makes decisions by splitting data into branches based on feature values, leading to a prediction at the leaf nodes. However, decision trees are prone to overfitting—especially with complex datasets—because they can become too specific to the training data.
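Here is a minimal pure-Python sketch of what "splitting on feature values" means. It shows how a classification tree might choose a threshold for a single numeric feature using Gini impurity, which is the default split criterion for scikit-learn's tree classifiers. The helpers `gini` and `best_threshold` and the toy age/purchase data are hypothetical illustrations. A real tree runs this search over every candidate feature and then recurses into each child node.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Try each midpoint between sorted distinct values of one feature and
    return (threshold, weighted_child_impurity) for the lowest-impurity split.
    Returns (None, parent_impurity) if no split improves on the parent."""
    distinct = sorted(set(values))
    best = (None, gini(labels))  # baseline: do not split at all
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        # Child impurities weighted by the share of rows each child receives
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


ages = [22, 25, 47, 52, 46, 56]
bought = ["no", "no", "yes", "yes", "yes", "yes"]
print(best_threshold(ages, bought))  # (35.5, 0.0)
```

In this example, splitting at age 35.5 separates the two classes perfectly. The weighted impurity falls from about 0.444 at the parent to 0.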

Bagging is an ensemble technique designed to reduce variance and improve the stability of models like decision trees. It trains several versions of a predictor, each on a different resampled copy of the training data, and then aggregates their predictions. Random Forest applies bagging by building many decision trees on different bootstrap samples. A bootstrap sample is the same size as the training set and is drawn at random with replacement, so a given row may appear several times in one sample and not at all in another.
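Bootstrap sampling takes only a few lines of code. The sketch below uses hypothetical helper names and only the standard-library `random` module. It draws one bootstrap sample of row indices per tree and lists that tree's out-of-bag rows, meaning the rows never drawn for it. Random Forest implementations can use these out-of-bag rows to estimate generalization error without a separate validation set.

```python
import random


def bootstrap_indices(n_samples, rng):
    """Draw n_samples row indices uniformly *with replacement*."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def out_of_bag(n_samples, in_bag):
    """Rows that were never drawn for this tree (its out-of-bag set)."""
    drawn = set(in_bag)
    return [i for i in range(n_samples) if i not in drawn]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n = 10
for tree_id in range(3):
    sample = bootstrap_indices(n, rng)
    print(tree_id, sorted(sample), "OOB:", out_of_bag(n, sample))
```

For large datasets, each bootstrap sample contains about 1 - 1/e, or roughly 63.2%, of the distinct rows. The remaining rows, about 36.8%, are out-of-bag for that tree.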

The Power of Randomization

One of the key innovations of Random Forest is the introduction of feature randomness. In traditional decision trees, each node is split by evaluating all possible features and selecting the one that provides the best split. However, Random Forest only considers a random subset of features at each node. This additional layer of randomness serves to further decorrelate the trees, making the ensemble more resilient to overfitting.

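Because of this random feature selection, each tree in the forest may focus on different aspects of the data. Consider a dataset with one very strong predictor. With plain bagging, almost every tree would split on that feature first, and the trees would end up highly correlated. Restricting each split to a random subset of features gives other features a chance to be used. The combined predictions are then less dominated by any single feature or pattern, which improves the robustness of the ensemble.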
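Per-node feature subsampling can be sketched in the same style. `candidate_features` is a hypothetical helper that draws, without replacement, the feature indices a single node is allowed to consider. By default it uses about the square root of the number of features, which is a common choice for classification. The node would then run its usual best-split search over only these features, and every node draws a fresh subset.

```python
import math
import random


def candidate_features(n_features, rng, max_features=None):
    """Return the sorted feature indices one node may consider.

    Defaults to about sqrt(n_features), a common choice for classification.
    """
    if max_features is None:
        max_features = max(1, int(math.sqrt(n_features)))
    # Sampling without replacement: each feature appears at most once per node
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(42)
for node in range(3):
    # Each node gets its own independent draw of 4 out of 16 features
    print("node", node, "considers features", candidate_features(16, rng))
```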

Random Forest is a machine learning algorithm designed to address one of the biggest problems with decision trees: variance. In simple terms, variance is a tree's tendency to be overly sensitive to the particular training data it sees, which leads to overfitting. A decision tree might perform exceptionally well on the data it was trained on yet fail to generalize to new, unseen data.

How Random Forests Solve the Variance Problem

Random Forests tackle this issue by introducing two key concepts: ensemble learning and randomization.

- Ensemble Learning:

- Random Forests are an ensemble of multiple decision trees, usually created from different subsets of the training data. Instead of relying on a single decision tree, which could be highly sensitive to specific data points (high variance), Random Forests combine the outputs of multiple trees. By aggregating the predictions from these trees, Random Forests produce a more stable and reliable output that is less likely to overfit.

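- For classification tasks, the Random Forest algorithm takes a majority vote among the trees to determine the final prediction. For regression tasks, it averages the predictions of all trees. This is Breiman's original formulation. scikit-learn's classifier instead averages the trees' predicted class probabilities and picks the class with the highest average.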

- Randomization:

- Random Forests introduce randomness in two ways: first, by creating each tree using a different subset of the training data (a technique known as bootstrap sampling), and second, by selecting a random subset of features at each split in the tree. This randomization ensures that the trees in the forest are diverse, further reducing the risk of overfitting and ensuring that the final model generalizes better to new data.
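The two aggregation rules above are short enough to show directly. These are minimal sketches with hypothetical names. Each function takes the list of individual tree predictions for one input.

```python
from collections import Counter
from statistics import mean


def majority_vote(tree_predictions):
    """Classification: the most common class label across trees."""
    return Counter(tree_predictions).most_common(1)[0][0]


def average(tree_predictions):
    """Regression: the mean of the trees' numeric predictions."""
    return mean(tree_predictions)


print(majority_vote(["spam", "ham", "spam", "spam", "ham"]))  # spam
print(average([210.0, 195.5, 202.5, 199.0]))  # 201.75
```

In this sketch, a tied vote goes to the label that appears first in the list, because `Counter.most_common` keeps first-encountered order for equal counts.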

Random forests are very flexible and can be used for both classification and regression tasks. They are robust to overfitting, especially with a large number of trees.

— from Scikit-learn Documentation

Why Reducing Variance Matters

Reducing variance matters because it lets a model perform well on new, unseen data and not only on the training data. A high-variance model may capture noise and quirks that are specific to the training data and do not hold in the broader population. Such a model performs poorly once it is deployed in real-world scenarios. Random Forest combines the ensemble approach with randomization to address this problem, which makes it a dependable default for many prediction tasks.
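A standard identity makes the variance argument concrete. As a simplifying assumption, suppose the predictions $T_1(x), \dots, T_B(x)$ of $B$ trees at an input $x$ are identically distributed, each with variance $\sigma^2$, and every pair has correlation $\rho$. The variance of their average is then:

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
= \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
= \rho\sigma^2 + \frac{1-\rho}{B}\sigma^2
$$

Adding trees shrinks the second term, but the first term stays fixed. For example, with $\sigma^2 = 1$ and $\rho = 0.3$, going from $B = 10$ to $B = 100$ lowers the variance only from $0.37$ to $0.307$. The floor $\rho\sigma^2$ can be lowered only by making the trees less correlated, which is what bootstrap sampling and random feature selection aim to do. The average also has the same expected value as a single tree, so averaging does not change the bias.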

Fine-Tuning Random Forest: Hyperparameters and Their Impact

While Random Forest performs well out of the box, fine-tuning its hyperparameters can lead to even better results, especially for complex tasks. Some of the most critical hyperparameters include:

- Number of Trees (n_estimators): Adding trees generally improves performance up to a point, after which the gains level off. More trees do not make the forest overfit, but each additional tree adds training time, prediction time, and memory.

- Maximum Depth of Trees (max_depth): Controlling the depth of the trees can prevent overfitting. Shallow trees might not capture all the nuances of the data, while very deep trees might overfit.

- Minimum Samples Split (min_samples_split) and Leaf (min_samples_leaf): These parameters control the minimum number of samples required to split an internal node or to be at a leaf node. By increasing these values, the model becomes less sensitive to noise.

- Number of Features to Consider (max_features): This controls how many features are considered when looking for the best split. Lowering this value increases randomness and diversity among trees, which can improve generalization. Setting it too low, however, can make each individual tree weaker.

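- Bootstrap Sampling (bootstrap): Enabled by default in scikit-learn. If you disable it, every tree is trained on the entire dataset, and the trees differ only through the random feature selection at each split. The trees then become more correlated, which weakens the ensemble's variance reduction, although this setting can be useful in certain scenarios. Out-of-bag error estimates (oob_score) also require bootstrap sampling.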
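The helpers below are a rough sketch of what several of these settings control. Their names are hypothetical, and they are not scikit-learn functions. `resolve_max_features` converts a max_features value into a feature count, following the rules scikit-learn documents for its forests. The other two helpers check the max_depth, min_samples_split, and min_samples_leaf limits before a node is split. In recent scikit-learn versions, RandomForestClassifier defaults to max_features="sqrt" and RandomForestRegressor defaults to 1.0 (all features). The default max_depth=None lets trees grow until their leaves are pure or too small to split.

```python
import math


def resolve_max_features(max_features, n_features):
    """Turn a max_features setting into a feature count (hypothetical helper
    mirroring scikit-learn's documented semantics)."""
    if max_features is None:
        return n_features
    if max_features == "sqrt":
        return max(1, int(math.sqrt(n_features)))
    if max_features == "log2":
        return max(1, int(math.log2(n_features)))
    if isinstance(max_features, float):  # fraction of all features
        return max(1, int(max_features * n_features))
    return int(max_features)  # an explicit integer count


def may_split(n_node_samples, depth, max_depth=None, min_samples_split=2):
    """Is a node eligible for splitting under max_depth/min_samples_split?"""
    if max_depth is not None and depth >= max_depth:
        return False
    return n_node_samples >= min_samples_split


def split_allowed(n_left, n_right, min_samples_leaf=1):
    """A candidate split must leave at least min_samples_leaf rows per child."""
    return n_left >= min_samples_leaf and n_right >= min_samples_leaf


print(resolve_max_features("sqrt", 30), resolve_max_features(0.5, 30))  # 5 15
```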

Understanding Feature Importance

One of the strengths of Random Forest is that it shows which features matter most for its predictions. Feature importance is a score that indicates how much a particular feature contributes to the model's predictive power. Two measures are common:

- Mean decrease in impurity sums how much each feature's splits reduce Gini impurity or entropy across all trees. scikit-learn exposes this measure as feature_importances_.
- Permutation importance measures how much the model's accuracy drops when that feature's values are randomly shuffled.
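Permutation importance takes only a few lines of pure Python. The model here is a hypothetical stand-in: any function that maps a row to a label would work, such as a trained forest's prediction for a single row. `accuracy` and `permutation_importance` are illustrative helpers. In practice, you should run the evaluation on held-out or out-of-bag data. scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == y for row, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, feature, rng, n_repeats=5):
    """Average drop in accuracy after shuffling one feature column."""
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)  # break the link between this feature and the label
        shuffled = [row[:feature] + [value] + row[feature + 1:]
                    for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats


def toy_model(row):
    """Hypothetical stand-in for a trained forest: uses only feature 0."""
    return int(row[0] > 0.5)


data_rng = random.Random(0)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [int(r[0] > 0.5) for r in rows]
for f in (0, 1):
    print(f, permutation_importance(toy_model, rows, labels, f, random.Random(1)))
```

The toy model ignores the second feature, so shuffling that feature leaves accuracy unchanged and its importance is exactly 0. Shuffling the first feature drops accuracy to roughly chance level.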

By analyzing feature importance, data scientists can gain a deeper understanding of the data, potentially identifying key drivers of the target variable. This is particularly valuable in fields like healthcare, finance, and marketing, where understanding the underlying factors driving predictions is just as important as the predictions themselves.

Random forests are an example of an ensemble method, where many individual models (trees) are combined to produce a stronger model. This ensemble approach helps in reducing variance without increasing bias, making Random Forests a powerful tool in machine learning.

— Andrew Ng

Random Forest in Action: Real-World Applications

Random Forest is used extensively in various domains, thanks to its versatility and robustness. Here are a few detailed examples:

1. Healthcare: Disease Prediction and Diagnosis

In healthcare, Random Forest models are used to predict the likelihood of diseases such as diabetes, heart disease, and cancer. From patient data such as age, lifestyle, and genetic information, Random Forest can classify patients into risk categories. The model’s feature importance scores also show which factors the model relies on most, which can help doctors with diagnosis and treatment planning. These scores describe the model's behavior, however, and do not prove causation.

2. Finance: Fraud Detection and Risk Management

Financial institutions use Random Forest for fraud detection, analyzing transaction data to find unusual patterns that may indicate fraudulent activity. The model handles large volumes of data and many variables at once, which makes it well suited to this task. Random Forest is also used in credit risk assessment, where it predicts the likelihood of default from an individual’s credit history, income, and other financial indicators.

3. Marketing: Customer Segmentation and Recommendation Systems

In marketing, Random Forest can assign customers to predefined segments based on their behavior, preferences, and demographics. Discovering the segments in the first place is usually done with unsupervised methods such as clustering. Segment assignments let businesses target their marketing more effectively, which can improve conversion rates. Random Forest models can also be one component of a recommendation system. For example, a model can predict how likely a user is to engage with a product or piece of content, based on that user's past behavior and the behavior of similar users.

Challenges and Considerations

Despite its many strengths, Random Forest is not without challenges. One potential drawback is that it can be computationally intensive, especially with a large number of trees or very deep trees. Training and prediction times can be lengthy, and the model can consume substantial memory.

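Categorical data also needs care. Decision trees can in principle split on categorical values directly, but many implementations, including scikit-learn’s forests, require categorical features to be numerically encoded first. Datasets with many high-cardinality categorical features cause two problems:

- One-hot encoding them greatly increases the number of columns.
- Impurity-based feature importance tends to be biased toward features with many distinct values.

Random Forest can also struggle when the target variable is highly imbalanced. In that case, techniques like SMOTE (Synthetic Minority Over-sampling Technique) or class weighting can improve performance.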
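Class weighting is simple to illustrate. The function below is a hypothetical helper that reproduces the formula scikit-learn documents for class_weight="balanced", n_samples / (n_classes * class_count). scikit-learn's forests also offer "balanced_subsample", which recomputes these weights on each tree's bootstrap sample. SMOTE works differently: it resamples the data itself and is provided by separate libraries such as imbalanced-learn.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count), so rare
    classes count for more in the split criterion."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


labels = ["legit"] * 95 + ["fraud"] * 5
print(balanced_class_weights(labels))
```

With 95 legitimate and 5 fraudulent transactions, each fraud row gets weight 10.0 and each legitimate row about 0.53. Both classes therefore contribute the same total weight (50) to the split criterion.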

Conclusion: Maximizing the Potential of Random Forest

Random Forest is a powerful, flexible, and reliable machine learning model that excels in various tasks, from classification to regression. By leveraging its ensemble approach, Random Forest mitigates the risks of overfitting and enhances predictive accuracy. Its ability to handle large datasets and complex interactions between features makes it a go-to solution for many data scientists.

However, to fully unleash the potential of Random Forest, one must understand its mechanics, carefully tune its hyperparameters, and consider its limitations. By doing so, you can tailor the model to your specific needs, whether you’re predicting customer behavior, assessing credit risk, or detecting fraud.

Explore the deeper intricacies of Random Forest through hands-on experimentation, and you’ll find that it’s not just a tool—but a powerful ally in the quest to make data-driven decisions.

Frequently Asked Questions about Random Forest

1. What is Random Forest, and how does it work?

Random Forest is an ensemble learning method that creates multiple decision trees from different subsets of a dataset. Each tree in the forest gives a prediction, and the final output is determined by averaging (for regression) or taking the majority vote (for classification) of these predictions. This process helps improve accuracy and reduces the risk of overfitting compared to using a single decision tree.

2. Why is Random Forest considered better than a single decision tree?

A single decision tree can be highly sensitive to noise and variations in the training data, which often leads to overfitting. Random Forest mitigates this by building many trees and aggregating their predictions. Aggregation smooths out the individual trees' errors, mainly by reducing variance, and produces a more accurate and generalizable model.

3. What are the main advantages of using Random Forest?

Random Forest offers several advantages:

- High accuracy: It often provides better predictive performance than individual decision trees.

- Robustness: The ensemble approach makes it more resistant to overfitting.

- Handling large datasets: It works efficiently with large datasets and can manage thousands of input variables without variable deletion.

- Feature importance: It provides insights into the relative importance of each feature in the dataset.

4. What are some common applications of Random Forest?

Random Forest is widely used across various domains, including:

- Fraud detection: Identifying fraudulent activities in financial transactions.

- Credit risk assessment: Predicting the likelihood of loan defaults.

- Portfolio management: Providing predictions, for example of asset returns or risk, that inform investors' data-driven decisions.

- Healthcare: Diagnosing diseases and predicting patient outcomes.

- Marketing: Customer segmentation and recommendation systems.

5. How do you fine-tune a Random Forest model?

Fine-tuning a Random Forest involves adjusting several hyperparameters:

- Number of Trees (n_estimators): Increasing the number of trees can improve accuracy but requires more computation.

- Maximum Depth (max_depth): Limiting the depth of trees helps prevent overfitting.

- Minimum Samples Split and Leaf (min_samples_split, min_samples_leaf): Setting these to higher values can make the model less sensitive to noise.

- Number of Features (max_features): This controls the randomness in selecting features for each split, which can improve generalization.

6. What are the limitations of Random Forest?

While Random Forest is powerful, it has some limitations:

- Computationally intensive: Training and prediction times can be long, especially with a large number of trees.

- Memory consumption: It can require significant memory, particularly with large datasets.

- Not ideal for highly imbalanced datasets: Without adjustments, it may not perform well when the target classes are imbalanced.

- Less interpretable: While it provides feature importance, the overall model is less interpretable compared to simpler models like single decision trees.

7. How does Random Forest handle missing data?

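How missing data is handled depends on the implementation:

- Breiman and Cutler's original Random Forest offers a quick fill: the median for numeric features and the most frequent value for categorical ones. It also offers a more refined iterative imputation, weighted by the forest's proximities between samples.
- Surrogate splits are a CART technique for single trees and are not used by most Random Forest implementations.
- Recent versions of scikit-learn (1.4 and later) let their forests handle missing values natively. At each split, the forest learns which child node should receive samples with missing values.

Even so, imputing missing values before training is often a sensible default, and implementations without native support require it.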
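Imputing before training can be as simple as a quick median/mode fill. The hypothetical `rough_fill` helper below treats a dataset as a dict of columns, with None marking missing entries. It fills numeric columns with the median and other columns with the most frequent value. It ignores relationships between features, which proximity-based or model-based imputation tries to exploit.

```python
from statistics import median, mode


def rough_fill(columns):
    """Replace None in numeric columns with the column median and in
    non-numeric columns with the most frequent value.

    Raises statistics.StatisticsError if a column has no observed values.
    """
    filled = {}
    for name, values in columns.items():
        present = [v for v in values if v is not None]
        if all(isinstance(v, (int, float)) for v in present):
            fill = median(present)
        else:
            fill = mode(present)
        filled[name] = [fill if v is None else v for v in values]
    return filled


data = {
    "income": [40.0, None, 55.0, 61.0],
    "region": ["north", "south", None, "north"],
}
print(rough_fill(data))
```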

8. Can Random Forest be used for both classification and regression tasks?

Yes, Random Forest is versatile and can be used for both classification (categorical output) and regression (continuous output) tasks. The difference lies in how the final prediction is made—by taking the majority vote in classification or the average of the predictions in regression.

9. How does Random Forest determine feature importance?

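Random Forest usually measures feature importance in one of two ways:

- Mean decrease in impurity measures how much each feature's splits reduce the overall impurity, such as Gini impurity or entropy. Each reduction is weighted by the number of samples reaching that split, and the results are averaged over the trees.
- Permutation importance measures how much the model’s accuracy drops when that feature's values are randomly shuffled, ideally on held-out or out-of-bag data.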
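The impurity-based measure can be sketched as follows. The sketch assumes each split of a trained tree is recorded as a tuple of its feature, node size, and node and child impurities. This is a hypothetical representation; real libraries store this information inside the tree object. Each split's impurity decrease is weighted by the share of training samples that reach it. The decreases are then summed per feature and normalized, and the forest's score is the average over trees. This mirrors how scikit-learn computes `feature_importances_`.

```python
from collections import defaultdict


def impurity_importance(splits, n_total):
    """Mean-decrease-in-impurity importance for one tree.

    Each split is (feature, n_node, node_impurity, n_left, left_impurity,
    n_right, right_impurity). Decreases are weighted by the fraction of the
    n_total training samples reaching the node, summed per feature, and
    normalized to sum to 1.
    """
    totals = defaultdict(float)
    for feature, n, imp, n_l, imp_l, n_r, imp_r in splits:
        totals[feature] += (n * imp - n_l * imp_l - n_r * imp_r) / n_total
    grand_total = sum(totals.values())
    return {f: v / grand_total for f, v in totals.items()}


def forest_importance(per_tree):
    """Average per-tree importances; a feature unused by a tree counts as 0."""
    features = {f for tree in per_tree for f in tree}
    return {f: sum(t.get(f, 0.0) for t in per_tree) / len(per_tree) for f in features}


# Hypothetical tree: root splits on "age", its left child splits on "income"
tree = [("age", 100, 0.5, 60, 0.3, 40, 0.2),
        ("income", 60, 0.3, 30, 0.1, 30, 0.2)]
print(impurity_importance(tree, n_total=100))
```

For this toy tree, "age" scores about 0.727 (a weighted decrease of 0.24) and "income" about 0.273 (0.09).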

10. When should I choose Random Forest over other machine learning models?

You should consider using Random Forest when:

- You have a large dataset with many features.

- Overfitting is a concern, and you need a model with strong generalization capabilities.

- You need to understand which features are most important in making predictions.

- You require a model that works well with complex, non-linear relationships in the data.
