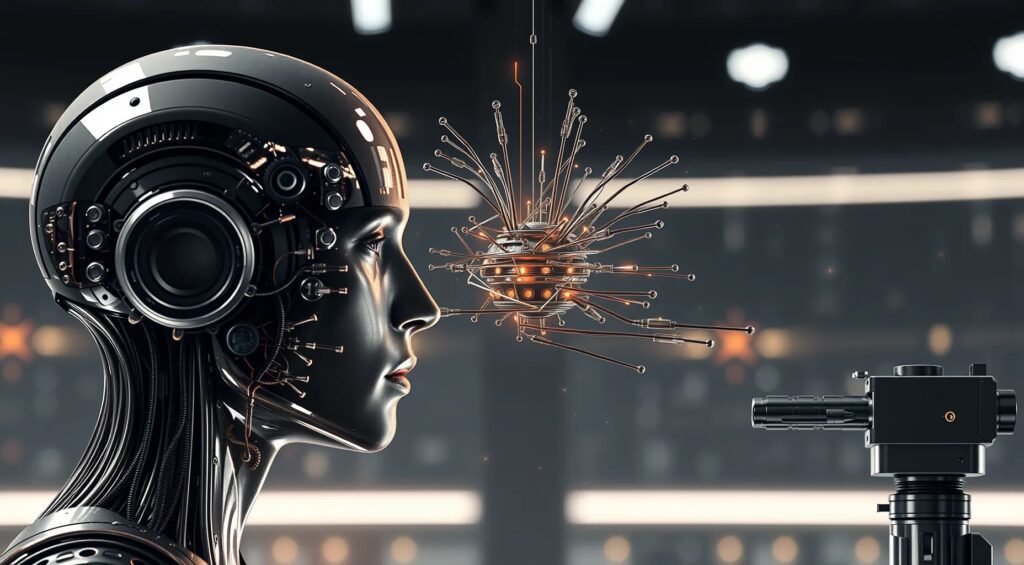

Artificial Intelligence (AI) is revolutionizing how we live, work, and interact. Its potential to enhance productivity, streamline healthcare, and create new opportunities seems boundless. Yet, amid this progress, AI systems frequently overlook or misinterpret the needs and experiences of people with disabilities, leading to biased outcomes that exacerbate the existing challenges faced by this community.

Understanding AI Bias in the Context of Disability

AI bias occurs when systems, trained on datasets that lack diversity, produce skewed outcomes. This is especially problematic for the disabled community, where biases can manifest in areas such as employment, healthcare, and accessibility. The data used to train AI models often fails to represent the full spectrum of human abilities, leading to algorithms that are unable to serve disabled individuals effectively.

For example, AI-driven hiring platforms may screen out candidates with disabilities because the algorithms, designed without inclusive data, equate certain traits with lower productivity. In healthcare, AI systems might misdiagnose patients with disabilities, particularly if those disabilities are underrepresented or misunderstood in the training data. Even in daily life, AI-powered accessibility tools like voice recognition or automated captions can fail, leaving users frustrated and excluded.

AI Bias: A Deeper Dive into Its Roots

To fully grasp the impact of AI on the disabled community, it’s crucial to understand how AI bias originates. AI systems are trained on vast datasets, which ideally should reflect the diversity of human experience. However, many of these datasets are skewed and contain a disproportionate amount of data from non-disabled individuals. This results in AI models that perform well for the majority but poorly for those whose experiences and needs differ from the norm.

Data collection practices play a pivotal role in perpetuating this bias. Often, the data does not include sufficient examples of disabled individuals or adequately account for the diversity within the disabled community itself. For instance, wheelchair users may have different requirements from those with visual impairments, yet AI systems frequently lump them together, leading to one-size-fits-all solutions that fail to meet the unique needs of each group.
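One way to make this kind of gap visible is a representation check on the dataset before any training happens. The sketch below is a minimal illustration rather than a standard tool: the group labels, the `representation_gaps` helper, and the reference shares are all hypothetical placeholders, and real reference figures would have to come from a population source appropriate to the system being built.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares):
    """Compare each group's share of the dataset with a reference share.

    Returns {group: observed_share - reference_share}; a negative value
    means the group is underrepresented relative to the reference.
    """
    if not group_labels:
        raise ValueError("group_labels must not be empty")
    counts = Counter(group_labels)
    total = len(group_labels)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical dataset of 1,000 records, labeled by disability category.
labels = ["none"] * 960 + ["mobility"] * 25 + ["vision"] * 10 + ["speech"] * 5

# Placeholder reference shares (assumed for illustration, not real statistics).
reference = {"none": 0.85, "mobility": 0.07, "vision": 0.05, "speech": 0.03}

gaps = representation_gaps(labels, reference)
underrepresented = sorted(group for group, gap in gaps.items() if gap < 0)
print(underrepresented)
```

Keeping the categories separate, instead of collapsing them into a single "disabled" flag, is deliberate here: a dataset can look adequate in aggregate while still containing almost no examples from one specific group.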

Moreover, the very metrics used to evaluate AI performance can be biased. If these metrics do not consider the experiences of disabled individuals, the systems deemed “successful” may still fail a significant portion of the population. This issue is particularly pronounced in areas such as employment and healthcare, where biased AI can have life-altering consequences.
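To see how a single headline metric can hide this, consider accuracy reported once for everyone versus accuracy reported per group. The following is a small sketch on made-up numbers; the `accuracy_by_group` helper and the toy records are assumptions introduced purely for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return (overall_accuracy, {group: accuracy}).

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {group: correct[group] / totals[group] for group in totals}
    return overall, per_group


# Hypothetical evaluation set: 90 non-disabled users and 10 disabled users.
records = (
    [("non_disabled", 1, 1)] * 86   # correct predictions
    + [("non_disabled", 1, 0)] * 4  # errors
    + [("disabled", 1, 1)] * 4      # correct predictions
    + [("disabled", 1, 0)] * 6      # errors
)

overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this toy example the system is right 90 percent of the time overall, yet right only 40 percent of the time for disabled users. A system judged on the overall figure alone would pass, which is exactly the failure described above.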

Employment Discrimination: The Silent Gatekeeper

AI is increasingly used in hiring processes, from resume screening to video interviews. While this automation aims to remove human bias, it often introduces new forms of discrimination, particularly against disabled candidates.

Resume screening algorithms may filter out candidates based on gaps in employment history or the absence of certain experiences, which might be common among individuals with disabilities due to periods of medical leave or limited access to traditional work environments. These systems, designed with the able-bodied in mind, often fail to recognize the alternative skills and perspectives that disabled individuals bring to the workplace.

AI-powered video interviews pose another challenge. These systems analyze facial expressions, body language, and tone of voice to assess a candidate’s suitability. However, for those with physical disabilities, neurological conditions, or speech impairments, these algorithms can misinterpret or penalize behavior that deviates from the “norm.” For example, an AI might incorrectly assess a candidate with Parkinson’s disease as nervous or lacking confidence, simply because their movements or speech patterns differ from those of non-disabled individuals.

Healthcare: When AI Fails the Most Vulnerable

The healthcare sector is another area where AI bias can have devastating effects. AI-driven diagnostic tools and treatment recommendations are increasingly relied upon to improve efficiency and accuracy. However, these systems are often trained on datasets that underrepresent disabled individuals, leading to potential misdiagnoses and inappropriate treatment plans.

Consider AI algorithms used in medical imaging. These systems are trained to recognize patterns in X-rays, MRIs, and other scans. However, if the training data lacks sufficient examples of scans from disabled individuals, whose anatomy may vary because of their conditions, the AI might fail to interpret the images correctly, leading to incorrect or missed diagnoses.

In the case of chronic illness management, AI systems designed to predict disease progression or recommend treatments may not account for the complexities of managing multiple disabilities. A person with a mobility impairment, for example, may not be able to follow standard exercise recommendations, yet the AI may not offer alternative suggestions tailored to their capabilities.

Accessibility and the Limitations of AI

One of the promises of AI is to enhance accessibility, providing disabled individuals with tools that enable greater independence and participation in society. However, when these tools are designed without a deep understanding of the users they are meant to serve, they often fall short.

Voice recognition technology is a prime example. While it offers hands-free interaction for those with mobility impairments, it often struggles to accurately recognize the speech patterns of individuals with speech disabilities. This limitation is not just frustrating; it can render the technology effectively unusable, perpetuating the very exclusion it was designed to eliminate.

Similarly, AI-driven mobility aids like self-navigating wheelchairs or robotic prosthetics must be meticulously calibrated to individual users. When these systems are based on generic models, they can fail to accommodate the unique physical and functional needs of different users, leading to discomfort or even injury.

The Intersectionality of Disability and Other Marginalized Identities

The impact of AI bias is further complicated when disability intersects with other marginalized identities, such as race, gender, and socioeconomic status. For disabled individuals who are also people of color, for instance, AI systems may doubly fail them due to biases in both disability representation and racial representation.

In healthcare, a Black woman with a disability might face compounded bias. AI systems trained predominantly on data from white, non-disabled individuals may not only misdiagnose her condition but also overlook the specific ways in which her race and disability interact, leading to suboptimal or even harmful treatment recommendations.

Socioeconomic status adds another layer of complexity. AI systems that recommend job placements or educational opportunities might not consider the unique barriers faced by disabled individuals from low-income backgrounds, such as limited access to assistive technology or specialized training. This can result in recommendations that are not only unattainable but also reinforce the cycle of poverty and marginalization.

Toward Inclusive AI: A Call for Ethical Design

Addressing the pervasive issue of AI bias requires a fundamental shift in how AI systems are designed, developed, and deployed. Inclusive design must be at the heart of AI development, ensuring that the needs of disabled individuals are considered from the ground up.

This begins with diversifying the data used to train AI models. Data collection efforts must actively seek out and include a broad range of experiences and abilities, ensuring that AI systems are exposed to the full spectrum of human diversity. This also means challenging the assumptions that often underlie data collection practices, such as the notion that non-disabled experiences can serve as proxies for disabled experiences.

Involving disabled individuals in the AI development process is another crucial step. This goes beyond token consultation; it requires meaningful collaboration, where disabled people have a direct role in shaping the technologies that affect their lives. By embedding their insights and experiences into the design process, AI systems can be better tailored to meet their actual needs.

Regulatory frameworks must also evolve to address the specific challenges posed by AI bias. This includes establishing standards for data diversity, requiring transparency in AI decision-making, and implementing regular audits to detect and mitigate bias. These measures will help ensure that AI systems are held to the highest ethical standards, prioritizing fairness and accessibility for all.
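What a recurring audit might check can be sketched in a few lines. The example below compares selection rates between groups for a hypothetical screening system and flags any group whose rate falls well below that of a reference group. The function names, the toy decisions, and the 0.8 threshold are assumptions for illustration; the threshold mirrors the "four-fifths" rule of thumb from US employment-selection guidance and is not offered here as a legal test.

```python
def selection_rates(decisions):
    """Return {group: share of candidates selected}.

    Each decision is a (group, selected) pair, where selected is a bool.
    """
    counts = {}
    for group, selected in decisions:
        seen, chosen = counts.get(group, (0, 0))
        counts[group] = (seen + 1, chosen + int(selected))
    return {group: chosen / seen for group, (seen, chosen) in counts.items()}


def impact_ratios(rates, reference_group):
    """Divide every group's selection rate by the reference group's rate."""
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has a selection rate of zero")
    return {group: rate / reference_rate for group, rate in rates.items()}


# Hypothetical screening outcomes (made-up numbers).
decisions = (
    [("non_disabled", True)] * 50 + [("non_disabled", False)] * 50
    + [("disabled", True)] * 6 + [("disabled", False)] * 14
)

THRESHOLD = 0.8  # assumed audit threshold, see note above

rates = selection_rates(decisions)
ratios = impact_ratios(rates, reference_group="non_disabled")
flagged = [group for group, ratio in ratios.items() if ratio < THRESHOLD]
print(flagged)
```

A check like this is only a starting point. It detects a disparity in outcomes but says nothing about its cause, so a flagged result should trigger a closer review rather than serve as a verdict on its own.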

Conclusion: Building a Future Where AI Works for Everyone

The silent discrimination of AI bias against the disabled community is a significant yet often overlooked issue. As AI continues to permeate every aspect of our lives, it is imperative that we take proactive steps to address these biases. By embracing inclusive design, diversifying data, and strengthening regulatory oversight, we can build AI systems that are not only innovative but also equitable and just.

The future of AI should be one where all individuals, regardless of their abilities, are empowered to thrive. This vision requires a collective commitment to inclusivity and ethical responsibility, ensuring that technology advances in a way that uplifts rather than excludes. The time to act is now, before the silent discrimination of AI bias becomes a permanent barrier to equality and inclusion.
