What is Homomorphic Encryption and Why Does It Matter?

Data breaches seem to happen daily, so protecting sensitive information has never been more important. Homomorphic encryption (HE) offers a game-changing solution: it's a cryptographic technique that allows computations on encrypted data, meaning you can process data without ever seeing it in plain text!

Sound wild? It kind of is. It means that even if hackers get their hands on your encrypted data, they can't do much with it without the secret key. The big difference is that data stays protected even during analysis or computation, a stage where it traditionally had to be decrypted and was therefore a major vulnerability.

Homomorphic encryption is particularly exciting for industries like finance and healthcare, where data confidentiality is crucial. It enables complex data-driven tasks while keeping privacy intact.

Federated Learning: AI Without Data Centralization

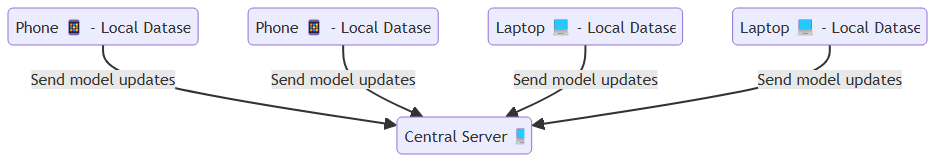

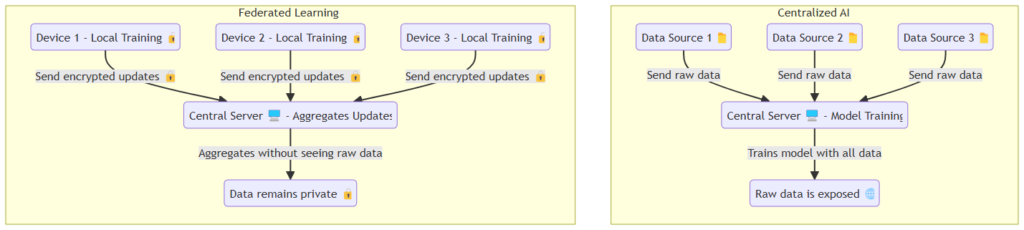

Now, federated learning (FL) takes privacy protection a step further. Imagine training an AI model without having to pull all the data into one central server. Instead of sending your data to a central point, FL allows the AI to learn directly from your device—whether it’s your smartphone, laptop, or IoT gadget—by keeping your data local. This is huge because, traditionally, training AI meant feeding it tons of data housed in one place, often exposing it to privacy risks.

Federated learning's decentralization makes it a strong fit for privacy-conscious sectors. It lets devices improve a shared AI model while the raw data never leaves the device. For industries looking to balance innovation with privacy, FL is a breakthrough.

How Homomorphic Encryption Protects Sensitive Information

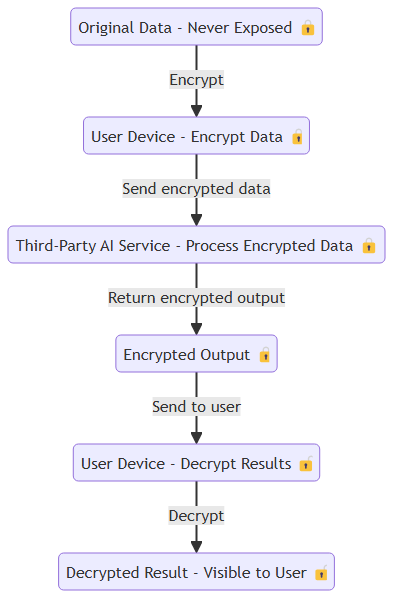

Here’s where homomorphic encryption really shines. Normally, when you need to analyze encrypted data, you have to decrypt it first. That’s like opening a locked door and hoping no one barges in. With HE, the data stays locked! You can run all your computations without ever exposing the raw data.
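To make "computing on locked data" concrete, here is a toy sketch of the Paillier cryptosystem, a well-known additively homomorphic scheme: it supports adding encrypted numbers, not arbitrary computation. Paillier is a swapped-in illustration rather than a scheme the text specifies, and the fixed primes are an assumption chosen for readability. They are far too small to be secure; real keys use primes of 1024 bits or more.

```python
import math
import secrets

# Toy parameters: two well-known primes. Far too small to be secure.
P, Q = 999_983, 1_000_003


def keygen(p=P, q=Q):
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)               # valid because the generator is fixed at g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1   # fresh randomness for every ciphertext
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    # g^m mod n^2 simplifies to (1 + m*n) when g = n + 1
    return (1 + m * n) * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n, c1, c2):
    # Multiplying ciphertexts adds the hidden plaintexts
    return c1 * c2 % (n * n)


n, priv = keygen()
c_a = encrypt(n, 120)
c_b = encrypt(n, 75)
c_sum = add_encrypted(n, c_a, c_b)     # computed without decrypting either input
print(decrypt(n, priv, c_sum))         # 195
```

Whoever runs `add_encrypted` only handles large, randomized integers; only the holder of `priv` learns that the total is 195.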

For instance, in healthcare, hospitals can securely analyze patient data to identify trends, all without risking personal data exposure. In finance, companies can perform detailed analytics on customer transactions without compromising privacy. The beauty of homomorphic encryption is that it delivers both privacy and utility. Conventional encryption protects data at rest and in transit, but the data must be decrypted before anyone can compute on it.

The Magic Behind Federated Learning: How It Works

Federated learning sounds complex, but it’s pretty simple in concept. Instead of centralizing data for AI model training, the model is sent to each user’s device. The device uses its local data to train the model and sends updates back to a central server. What’s important is that the data never leaves the user’s device—only the model updates do.

These updates are then aggregated, so the AI improves without ever directly accessing the raw data. For example, imagine your smartphone contributing to a predictive text AI. Your typing patterns help train the AI without ever sharing your actual texts. It’s privacy by design.
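The loop described above can be sketched in a few lines. This is a minimal single-process simulation assuming federated averaging (each client's model is weighted by its number of local examples), a tiny linear model, and synthetic client datasets. The names `local_train` and `fed_avg` are hypothetical; real systems run each client on a separate device.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of SGD on one client's private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y      # gradient of 0.5 * squared error w.r.t. prediction
            w -= lr * err * x
            b -= lr * err
    return (w, b)                      # only these numbers leave the client


def fed_avg(updates):
    """Average client models, weighting each by its number of examples."""
    total = sum(count for _, count in updates)
    w = sum(model[0] * count for model, count in updates) / total
    b = sum(model[1] * count for model, count in updates) / total
    return (w, b)


# Synthetic private datasets for three clients, drawn from y = 2x - 1
random.seed(0)
clients = []
for size in (20, 50, 30):
    xs = [random.uniform(-1, 1) for _ in range(size)]
    clients.append([(x, 2.0 * x - 1.0) for x in xs])

global_model = (0.0, 0.0)
for _ in range(10):                    # 10 communication rounds
    updates = [(local_train(global_model, data), len(data)) for data in clients]
    global_model = fed_avg(updates)

print(global_model)                    # close to (2.0, -1.0)
```

The server only ever receives `(w, b)` pairs and example counts, never the `(x, y)` records.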

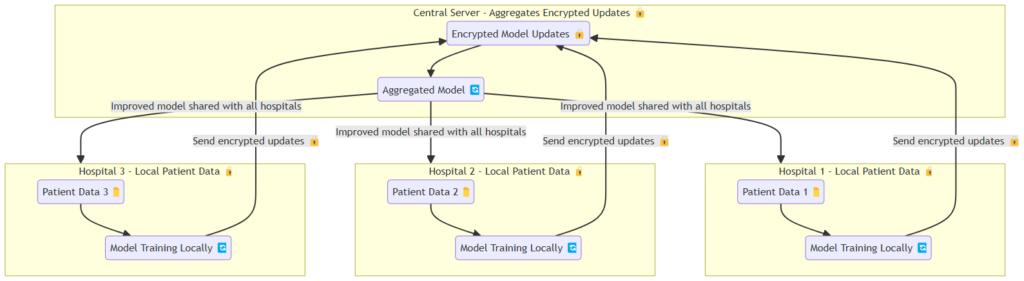

FL is a particularly great fit for industries that require both personalization and data security, like health apps or financial services. The challenge, however, lies in ensuring that the updates themselves don’t inadvertently leak information, which is where homomorphic encryption steps in.

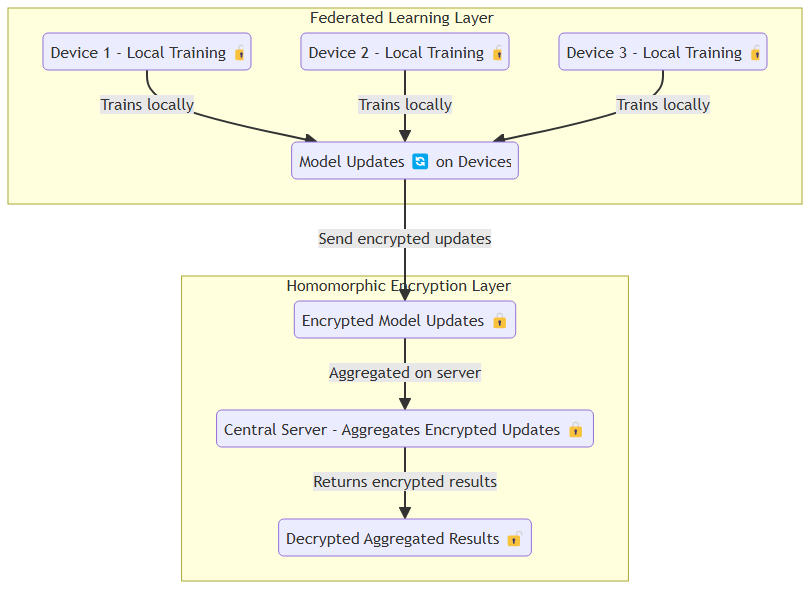

Why Combine Homomorphic Encryption and Federated Learning?

Combining homomorphic encryption with federated learning is like putting a vault inside a vault. Federated learning keeps your raw data on your device, and homomorphic encryption keeps the model updates that do leave the device encrypted, even while the server combines them. Together, they offer a supercharged solution for secure, privacy-first AI.

The reason this pairing is so powerful is that both methods tackle different aspects of privacy. Federated learning decentralizes data storage, reducing the risk of large-scale breaches, while homomorphic encryption ensures that any data used for analysis is still secure, even if someone tries to intercept it.

This combo is especially important as more industries turn to AI for personalized services. Data-driven industries, like healthcare and finance, benefit immensely from this union because they can offer cutting-edge AI solutions without compromising sensitive customer data.

Real-World Applications of Federated Learning in AI

Federated learning has already made its way into several industries, and its applications are expanding fast. One of the most notable areas is smartphone technology. Ever noticed how your phone seems to improve in predicting what you’re typing, or in suggesting content? That’s thanks to federated learning. Your phone is continuously learning from your data, refining its algorithms without ever sending your personal information to the cloud. Google’s Gboard, for instance, uses federated learning to improve its autocorrect and predictive text features.

In healthcare, federated learning is being used to analyze patient data while keeping sensitive health information private. Hospitals in different locations can collaborate on AI research to improve disease detection or treatment recommendations, all without exposing patient records. Another sector where it is being actively researched is self-driving cars, where federated learning could help vehicles learn from one another's experiences without sending raw data to a central database. The more vehicles contribute model updates, the smarter and safer autonomous driving systems could become.

Homomorphic Encryption’s Impact on Data Security

When it comes to data security, homomorphic encryption is nothing short of revolutionary. In sectors like financial services, where sensitive customer data is constantly being analyzed, HE ensures that even if the data is intercepted, it remains encrypted. The computations happen on encrypted data, and results are decrypted after the fact, meaning no one gets to see the underlying data during the process.

This capability is a massive win for businesses that rely on heavy analytics. Think about credit card companies: in principle, they could analyze transaction patterns to detect fraud without ever decrypting the customer's financial data during the analysis. This not only safeguards privacy but can also help meet strict regulatory requirements for data protection.
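As a rough sketch of how such an analysis could work, assume a simple linear fraud score computed by an analytics service that holds plaintext model weights but only ever receives encrypted transaction features. It reuses the toy Paillier scheme (insecure demo primes), whose ciphertexts can be added together and multiplied by plaintext integers. The features, weights, and function names are hypothetical, and real fraud models are far more complex.

```python
import math
import secrets

P, Q = 999_983, 1_000_003              # toy primes: insecure, illustration only


def keygen(p=P, q=Q):
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    return n, (lam, pow(lam, -1, n))   # generator fixed at g = n + 1


def encrypt(n, m):
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return (1 + (m % n) * n) * pow(r, n, n2) % n2   # m % n encodes negatives


def decrypt_signed(n, priv, c):
    lam, mu = priv
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m  # upper half of Z_n represents negatives


def encrypted_dot(n, enc_features, weights):
    """Compute Enc(sum of w_i * x_i) from Enc(x_i) and plaintext integer weights."""
    n2 = n * n
    acc = 1                            # 1 is an encryption of 0
    for c, w in zip(enc_features, weights):
        acc = acc * pow(c, w, n2) % n2 # Enc(x)^w = Enc(w*x); multiplying adds
    return acc


# Data owner: integer-scaled features (amount in dollars, transactions in the
# last hour, account age in days), encrypted before they leave the bank
n, priv = keygen()
features = [950, 4, 12]
enc_features = [encrypt(n, x) for x in features]

# Analytics service: sees only ciphertexts and its own hypothetical weights
weights = [1, 50, -2]
enc_score = encrypted_dot(n, enc_features, weights)

# Data owner decrypts the result and applies its own threshold
print(decrypt_signed(n, priv, enc_score))   # 1126
```

Negative weights work because Python's three-argument `pow` (3.8+) accepts a negative exponent when the base is invertible modulo n².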

In research and government sectors, homomorphic encryption is paving the way for collaborations between organizations that need to share data while keeping it confidential. This is particularly important in fields like biotechnology, where secure sharing of data can accelerate breakthroughs in personalized medicine.

Overcoming Challenges: Federated Learning’s Scalability Issues

Despite its promise, federated learning has its own set of challenges, particularly when it comes to scalability. Coordinating updates from millions of devices and then aggregating them into a usable form isn’t a walk in the park. The communication costs of sending updates back and forth can also become high, especially when you’re working with resource-limited devices like smartphones.

There’s also the issue of model accuracy. When data is spread across thousands or millions of devices, the data quality varies significantly. Devices may also have differing capabilities, leading to uneven training of the AI model. To combat these issues, companies are investing in compression techniques and model optimization to reduce the amount of data that needs to be transferred, making the process more efficient.
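Two common ways to shrink an update before upload are top-k sparsification (send only the largest-magnitude entries) and quantization (send low-precision codes instead of 32-bit floats). The sketch below combines both. The 8-bit uniform quantizer, the sample update, and the function names are illustrative assumptions, not any particular company's method.

```python
def top_k_sparsify(update, k):
    """Keep only the k entries with the largest magnitude, as {index: value}."""
    largest = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    return {i: update[i] for i in sorted(largest)}


def quantize_8bit(values):
    """Map floats onto integer codes 0..255 using a uniform grid."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 255 or 1.0     # avoid dividing by zero if all values are equal
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    return [lo + c * scale for c in codes]


update = [0.02, -0.9, 0.001, 0.45, -0.003, 0.3, 0.0005, -0.6]
sparse = top_k_sparsify(update, k=3)               # {1: -0.9, 3: 0.45, 7: -0.6}
codes, lo, scale = quantize_8bit(list(sparse.values()))
# The client uploads 3 indices, 3 one-byte codes, and two floats (lo, scale)
restored = dict(zip(sparse, dequantize(codes, lo, scale)))
print(codes)                                       # [0, 255, 57]
```

The trade-off is accuracy: dropped entries are lost for this round (some schemes accumulate them locally for later rounds), and each kept value is off by at most half a quantization step.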

However, these challenges aren’t show-stoppers. The benefits of federated learning—especially in terms of data privacy—far outweigh the hurdles. Researchers are constantly exploring new ways to make it scalable, while homomorphic encryption plays a role in ensuring that even when data updates are being shared, privacy remains intact.

The Role of Homomorphic Encryption in Regulatory Compliance

In an era where data privacy laws like GDPR and CCPA dictate the way companies handle user information, homomorphic encryption provides a valuable tool. These regulations require companies to apply appropriate safeguards to personal data, including when it is used for analytics or AI training. Since homomorphic encryption allows computations on encrypted data, it aligns well with these stringent privacy requirements.

Take healthcare as an example. Under regulations like HIPAA, patient information must be protected, even when used for research or improving services. Homomorphic encryption ensures that healthcare providers can continue to develop AI-driven solutions while staying compliant with the law. Similarly, in the finance sector, it ensures compliance with laws governing customer data protection, without slowing down innovation in areas like fraud detection or risk management.

For companies in regions with strict data regulations, adopting homomorphic encryption isn’t just about security—it’s about avoiding fines and reputational damage due to non-compliance. As more countries introduce data protection laws, homomorphic encryption will likely become an essential tool in the privacy toolkit.

Can Homomorphic Encryption Solve Federated Learning’s Privacy Concerns?

While federated learning is a step forward in protecting privacy, it’s not without concerns. One of the biggest worries is whether data updates sent from devices could leak information. Even if the raw data never leaves the device, model updates might carry traces of the original data, creating a privacy risk.
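A tiny worked example shows why plaintext updates can leak. Assume a linear model with a bias term, squared-error loss, and an update computed on a single record. The weight gradient is then the error times the input, and the bias gradient is the error itself, so dividing one by the other returns the private input exactly. The record values and helper name are hypothetical; with large batches and deep networks reconstruction is harder, but research on gradient inversion attacks has shown it can still succeed in some settings.

```python
def linear_grad(w, b, x, y):
    """Gradients of 0.5 * (w.x + b - y)^2 for a single record."""
    pred = sum(wi * xi for wi, xi in zip(w, x)) + b
    err = pred - y
    grad_w = [err * xi for xi in x]    # dL/dw_i = err * x_i
    grad_b = err                       # dL/db   = err
    return grad_w, grad_b


# On the device: one private record (hypothetical heart rate, smoker flag, systolic BP)
private_x = [72.0, 1.0, 130.0]
private_y = 1.0
w, b = [0.01, 0.2, -0.005], 0.1
grad_w, grad_b = linear_grad(w, b, private_x, private_y)

# Anyone who sees this plaintext update can invert it (requires grad_b != 0)
recovered_x = [g / grad_b for g in grad_w]
print(recovered_x)                     # approximately [72.0, 1.0, 130.0]
```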

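This is where homomorphic encryption comes in as a game-changer. By encrypting the updates themselves, it ensures that even if someone intercepts the model updates, they won't be able to extract useful information, and the aggregation server can combine encrypted updates without seeing any individual one. Combining federated learning with homomorphic encryption closes a major gap: raw data stays on the device, and updates stay encrypted in transit and during aggregation. The final aggregated model is still decrypted and used, though, so it is often paired with techniques like differential privacy to limit what the model itself can reveal.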
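A minimal sketch of encrypted aggregation, assuming the toy Paillier scheme (additively homomorphic, insecure demo primes), fixed-point encoding with four decimal places, and a separate key holder that is not the aggregation server. If the server held the private key, encryption would not hide individual updates from it. The server multiplies ciphertexts, which adds the underlying updates, and only the sum is ever decrypted. The names and parameters are hypothetical; production secure-aggregation protocols differ in many details, such as key management and handling clients that drop out.

```python
import math
import secrets

P, Q = 999_983, 1_000_003              # toy primes: insecure, illustration only
SCALE = 10_000                         # fixed-point encoding: 4 decimal places


def keygen(p=P, q=Q):
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    return n, (lam, pow(lam, -1, n))   # generator fixed at g = n + 1


def encrypt(n, m):
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return (1 + (m % n) * n) * pow(r, n, n2) % n2


def decrypt_signed(n, priv, c):
    lam, mu = priv
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m


def encrypt_update(n, update):
    """Client side: fixed-point encode each float, then encrypt it."""
    return [encrypt(n, round(v * SCALE)) for v in update]


def aggregate(n, encrypted_updates):
    """Server side: multiply ciphertexts position by position, which adds the updates."""
    n2 = n * n
    total = [1] * len(encrypted_updates[0])        # encryptions of 0
    for enc in encrypted_updates:
        total = [t * c % n2 for t, c in zip(total, enc)]
    return total


n, priv = keygen()                     # private key held by a separate key holder
client_updates = [[0.12, -0.05, 0.30],
                  [0.10, -0.07, 0.26],
                  [0.14, -0.03, 0.34]]
encrypted = [encrypt_update(n, u) for u in client_updates]
enc_sum = aggregate(n, encrypted)      # the server never sees an individual update

# Key holder decrypts only the sum, then averages
average = [decrypt_signed(n, priv, c) / SCALE / len(client_updates) for c in enc_sum]
print(average)                         # approximately [0.12, -0.05, 0.3]
```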

For companies concerned about privacy violations or regulatory non-compliance, this combination offers a far stronger solution than either technique alone. With encrypted updates, federated learning becomes a much more robust privacy-preserving technology, supporting both innovation and protection in one neat package.

Enhancing Machine Learning with Privacy-Preserving Technologies

Machine learning has become integral to businesses, but concerns about data privacy and security breaches have pushed the need for more privacy-preserving technologies. This is where the powerful combination of homomorphic encryption and federated learning really shines, offering the ability to develop smarter AI systems without sacrificing data security.

In traditional machine learning, companies collect massive amounts of user data to train their models. However, as more data flows into central systems, the risks of data leakage or unauthorized access increase. With federated learning, companies can still benefit from diverse data sets, but that data remains with the user. By layering homomorphic encryption on top, the data is not just decentralized: the model updates that leave each device are encrypted as well, so the server never sees them in plain text.

Imagine you’re running a health app that helps people manage chronic conditions like diabetes. Using federated learning, the app can learn from individual users’ data to improve its recommendations while keeping that data local. And with homomorphic encryption, any data shared for the model’s updates is fully protected, making it nearly impossible for unauthorized parties to exploit it.

Big Tech’s Adoption of Homomorphic Encryption and Federated Learning

It’s no surprise that tech giants like Google, Apple, and Microsoft are at the forefront of adopting homomorphic encryption and federated learning. These companies have realized that securing customer data isn’t just a regulatory requirement—it’s key to maintaining trust in their products and services. Google, for example, has incorporated federated learning into its Gboard and other smartphone applications, ensuring that the user experience is enhanced without exposing personal information.

Apple’s focus on user privacy has made it a pioneer in integrating federated learning into its ecosystem. Their use of FL for predictive text and other features in iOS ensures that users benefit from AI-driven improvements while their data remains protected on their devices. With privacy becoming a hot-button issue, Big Tech companies know they have to go beyond basic encryption methods. They’re increasingly exploring how homomorphic encryption can make federated learning even more secure, preventing potential vulnerabilities when model updates are transmitted.

Microsoft has also stepped into the arena. Its Azure Confidential Computing services protect sensitive data while it is being processed in the cloud by running workloads inside hardware-based trusted execution environments (secure enclaves). Separately, Microsoft Research develops Microsoft SEAL, an open-source homomorphic encryption library. Together, these efforts aim to make the cloud an environment where data stays protected even when it is used for complex machine learning tasks.

The Future of AI and Privacy: Predictions and Trends

As the need for privacy-preserving AI grows, we can expect a significant rise in the adoption of homomorphic encryption and federated learning. These technologies are no longer niche—they’re fast becoming the standard for industries that deal with sensitive data like healthcare, finance, and law. One exciting trend is how these technologies will evolve to handle real-time data processing. Imagine a future where smart cities can monitor traffic patterns and predict emergencies using AI without ever invading individual privacy.

Another future trend will be the development of more efficient algorithms for homomorphic encryption, as current methods can still be resource-intensive. As encryption methods become more streamlined, we’ll see faster adoption in devices like wearables and IoT technologies, enabling real-time privacy-preserving analytics. Similarly, federated learning will move beyond smartphones and start impacting larger infrastructures, like connected vehicles, smart factories, and even medical research networks.

Overall, the future of AI will be defined by how well we can balance innovation with ethical data usage. The combination of homomorphic encryption and federated learning points toward a more privacy-centric approach to AI, where users can trust that their personal information will remain secure while benefiting from the advancements in machine learning.

Barriers to Mainstream Adoption of Privacy-Enhanced AI

Despite the enormous potential of homomorphic encryption and federated learning, there are several barriers to mainstream adoption. One of the key challenges is computational efficiency. Homomorphic encryption, while incredibly secure, is still resource-heavy and can slow down operations. This means companies need to invest in more powerful hardware and optimize their algorithms to handle the encrypted data without sacrificing performance.

Federated learning has its own hurdles too, primarily around communication costs. With so many devices involved in the training process, sending model updates back and forth can put a strain on network resources. The need to ensure model consistency—making sure the AI learns effectively despite data from vastly different devices—adds another layer of complexity. It’s a balancing act between privacy and practicality, and many industries are still figuring out how to implement these technologies at scale without compromising on performance.

Another obstacle is the lack of standardization. While tech giants like Google and Apple have embraced these methods, smaller companies often lack the resources to develop privacy-enhancing technologies from scratch. There’s also a learning curve for organizations to fully understand the benefits and limitations of homomorphic encryption and federated learning. Widespread adoption will likely hinge on the development of open-source tools and frameworks that make it easier for companies to integrate these technologies into their operations.

Are Homomorphic Encryption and Federated Learning the Future of AI?

With privacy concerns escalating and the public demanding more transparent data practices, it’s hard to imagine a future where homomorphic encryption and federated learning aren’t central to AI development. These technologies represent a major shift towards data sovereignty, allowing individuals and organizations to maintain control over their data while still contributing to and benefiting from AI advancements.

The growing regulatory landscape, driven by laws like GDPR and CCPA, will only accelerate the push towards these privacy-preserving methods. In fact, we may soon see laws that mandate the use of federated learning or homomorphic encryption in industries that handle sensitive data, as the risks of data breaches continue to mount.

Looking forward, privacy-first AI isn’t just a trend—it’s the future of ethical AI development. As machine learning continues to evolve, the demand for solutions that protect privacy while enabling innovation will only grow. The union of homomorphic encryption and federated learning may very well become the gold standard for AI systems that respect user privacy without compromising on performance.

Collaborative AI: How Businesses Can Benefit from Privacy Innovations

Privacy-enhancing technologies like homomorphic encryption and federated learning don’t just protect user data—they open up new opportunities for collaborative AI. Businesses across various industries can collaborate on AI development without compromising sensitive information. For example, companies in the healthcare sector can share insights gained from patient data, improving diagnostic tools and treatment plans, while keeping personal health information completely secure.

In financial services, organizations can collaborate on fraud detection systems without sharing raw customer data. Each bank or credit card company can run its own data through an AI model and then securely send encrypted updates back to a central system. This collaborative approach lets the model learn from a broader dataset without exposing sensitive financial information. The result is smarter, faster AI solutions across an entire industry.

By leveraging these privacy-first technologies, businesses can innovate and create competitive advantages without risking data breaches. Collaboration, previously hindered by privacy concerns, becomes possible on a global scale, accelerating growth in AI capabilities across sectors like transportation, retail, and insurance.

The Ethical Implications of Data Privacy in AI

As AI systems become more powerful, the ethical implications of data privacy can’t be ignored. Technologies like homomorphic encryption and federated learning offer solutions, but they also raise questions. For instance, if companies and governments can process vast amounts of data without technically “seeing” it, who decides how this data is used?

There’s also the issue of informed consent. Are users fully aware of how their data is being processed and by whom? While federated learning keeps data on individual devices, the AI is still being trained based on personal data. Transparency becomes crucial. Users need to understand how privacy-preserving AI operates and have control over their participation in these systems.

Furthermore, the deployment of AI models trained through federated learning raises questions about bias. If data from certain groups or regions isn’t included because of resource limitations or privacy concerns, could that skew the AI’s predictions? As these technologies evolve, ensuring fairness and accountability in their deployment will be essential to maintaining trust in AI systems.

Homomorphic encryption and federated learning present exciting possibilities for improving data privacy in decentralized AI, but as with any breakthrough, there’s a need for thoughtful consideration of their ethical impacts. These technologies are poised to shape the future of AI—transforming how we interact with machine learning in ways that prioritize security without sacrificing innovation.

FAQs

What industries benefit the most from these technologies?

Healthcare, finance, and telecommunications are the key industries benefiting from homomorphic encryption and federated learning. They need to perform complex computations on highly sensitive data while complying with strict privacy laws. Other industries, like smart home technology and automotive (self-driving cars), also use these technologies to improve AI without compromising user privacy.

What are the challenges of homomorphic encryption?

The main challenge of homomorphic encryption is its high computational cost. Computing on encrypted data is typically orders of magnitude slower than computing on plaintext, and ciphertexts are much larger than the data they protect, so HE needs more time, memory, and computing power. However, researchers are working on more efficient algorithms to reduce these barriers.

How is federated learning different from traditional machine learning?

In traditional machine learning, all data is centralized for model training, increasing the risk of data breaches and privacy concerns. In federated learning, data stays on the user’s device, and only model updates (based on local data) are shared with a central server, significantly enhancing privacy protection.

Are there any drawbacks to federated learning?

Yes, federated learning can face issues like communication overhead, as model updates need to be sent from millions of devices. Additionally, the AI model’s accuracy can suffer due to differences in data quality across various devices, which can create inconsistent learning outcomes.

Is homomorphic encryption secure against all types of attacks?

Homomorphic encryption is highly secure, especially when used correctly. However, like all cryptographic systems, its security depends on the strength of the encryption scheme and the key management practices. It provides strong protection but is not entirely invulnerable, especially if encryption standards are not followed properly.

How does federated learning help with regulatory compliance?

Since federated learning keeps sensitive data on the device and doesn’t require the transfer of raw data to a central server, it helps organizations comply with data privacy regulations such as GDPR and CCPA. This decentralized approach ensures that personal data remains under the control of the user.

What is the difference between differential privacy and homomorphic encryption?

Differential privacy adds carefully calibrated random noise to data or to the results of computations so that individual users cannot be identified from the output, while homomorphic encryption allows computations to be performed on encrypted data without revealing the data itself. Both techniques protect privacy but in different ways, and they are often used together.
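To see the contrast concretely, here is a minimal sketch of the Laplace mechanism, a standard way to answer a counting query with differential privacy. The noise scale is the query's sensitivity (1 for a count) divided by the privacy parameter epsilon. The dataset, threshold, and epsilon value are illustrative assumptions. Unlike homomorphic encryption, the result is released in plain text, but it is deliberately noisy.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential draws with mean `scale` is Laplace(0, scale)
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records, predicate, epsilon, rng):
    """Return a noisy count satisfying epsilon-differential privacy."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1                    # one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
ages = [23, 67, 45, 71, 34, 62, 58, 80, 29, 66]
noisy = dp_count(ages, lambda age: age > 60, epsilon=0.5, rng=rng)
print(round(noisy, 2))                 # the true answer is 5; the noisy value varies with the seed
```

A smaller epsilon means more noise and stronger privacy; a larger one means a more accurate but less private answer.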

Is federated learning slower than centralized machine learning?

It can be. Since federated learning requires model updates from multiple devices and has to handle varied network conditions, it might be slower compared to centralized systems. However, improvements in communication protocols and model optimization are reducing these delays.

How do businesses implement federated learning?

Businesses typically implement federated learning using specialized frameworks like Google's TensorFlow Federated or OpenMined's PySyft. These frameworks provide building blocks for distributing models, aggregating updates, and, depending on the setup, securing communication between devices and the server.

Can homomorphic encryption be applied in real-time systems?

Although homomorphic encryption is still resource-heavy, advancements are being made to make it more practical for real-time applications. Currently, its use in real-time systems is limited, but as the technology improves, it could be more widely implemented in areas like financial trading and healthcare diagnostics.

What is the future of homomorphic encryption and federated learning?

The future looks promising for both technologies. As demand for privacy-preserving AI grows, more industries will adopt these methods. Researchers are working on making homomorphic encryption more efficient and federated learning more scalable, which will lead to widespread use in consumer tech, smart cities, and global data collaboration.

What are the benefits of federated learning in healthcare?

Federated learning allows hospitals, clinics, and medical researchers to collaborate on AI model development without sharing sensitive patient data. This approach enhances medical research by allowing institutions to work together on improving disease prediction, diagnostics, and personalized treatments, all while complying with strict data privacy regulations like HIPAA. It enables healthcare providers to build robust AI systems from a decentralized pool of data, increasing model accuracy and ensuring that patient privacy is maintained.

How does homomorphic encryption impact financial services?

In the financial sector, homomorphic encryption enables secure analysis of encrypted financial data. Banks and financial institutions can perform risk assessments, detect fraud, and analyze customer behavior without ever decrypting the underlying data. This protects sensitive information like account details and transaction histories even during data analysis, making it invaluable for industries that handle confidential financial data.

How does federated learning help in AI personalization?

Federated learning enables AI systems to be personalized for individual users without compromising their privacy. For example, a health app can tailor recommendations based on the user’s data while keeping that data stored on the user’s device. Similarly, smartphones can improve their predictive text or voice recognition by learning from the user’s input locally and sending only model updates (without any personal data) to a central server. This ensures that personalization is achieved without sacrificing user data privacy.

What are the communication costs in federated learning?

One of the challenges of federated learning is the communication overhead involved. Since updates from numerous devices need to be sent back to a central server, this can result in high data transfer costs, especially when dealing with large-scale AI models. However, techniques like update compression and asynchronous training are being explored to reduce these communication costs, making federated learning more scalable and efficient.
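As a rough illustration of asynchronous training, the sketch below assumes a server that merges each client model as soon as it arrives and down-weights stale contributions (models trained from an older global version) with a polynomial discount. The mixing rate `alpha`, exponent `a`, and class name are hypothetical choices in the spirit of published asynchronous FL schemes, not a standard.

```python
class AsyncServer:
    """Hypothetical server that mixes in client models as they arrive."""

    def __init__(self, weights, alpha=0.6, a=0.5):
        self.weights = list(weights)
        self.version = 0
        self.alpha = alpha             # base mixing rate for a fresh update
        self.a = a                     # how sharply staleness is penalized

    def pull(self):
        """A client downloads the current model and remembers its version."""
        return list(self.weights), self.version

    def push(self, client_weights, base_version):
        """Merge a client model, discounting it by how stale its base version is."""
        staleness = self.version - base_version
        mix = self.alpha / (1 + staleness) ** self.a
        self.weights = [(1 - mix) * s + mix * c
                        for s, c in zip(self.weights, client_weights)]
        self.version += 1
        return mix


server = AsyncServer([0.0, 0.0])
_, v_a = server.pull()                 # clients A and B both start from version 0
_, v_b = server.pull()
mix_a = server.push([1.0, 1.0], v_a)   # A arrives first: staleness 0
mix_b = server.push([1.0, 1.0], v_b)   # B arrives second: staleness 1, smaller weight
print(mix_a, round(mix_b, 3))          # 0.6 0.424
```

Because no round waits for the slowest device, fast clients are never idle; the staleness discount limits how much an outdated model can drag the global one backward.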

How can homomorphic encryption be improved?

Homomorphic encryption, while highly secure, currently faces limitations in terms of speed and resource consumption. To improve its practicality, researchers are focusing on more efficient encryption algorithms that require less computational power. Partially homomorphic encryption (schemes that support only one operation, such as addition or multiplication, and are therefore much cheaper) and optimizations in secure multiparty computation are also being explored to strike a balance between security and performance, especially for real-time applications in finance and healthcare.
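Textbook RSA is a classic example of a partially homomorphic scheme: multiplying two ciphertexts yields an encryption of the product of the plaintexts, but there is no way to add them. The sketch uses the standard small teaching parameters (p = 61, q = 53, e = 17), which are wildly insecure. Note also that RSA as deployed uses randomized padding such as OAEP, which removes this homomorphic property.

```python
# Textbook RSA with toy teaching parameters (insecure; illustration only)
p, q = 61, 53
n = p * q                              # 3233
phi = (p - 1) * (q - 1)                # 3120
e = 17
d = pow(e, -1, phi)                    # 2753


def encrypt(m: int) -> int:
    return pow(m, e, n)


def decrypt(c: int) -> int:
    return pow(c, d, n)


c1, c2 = encrypt(7), encrypt(11)
c_prod = c1 * c2 % n                   # multiply ciphertexts only
print(decrypt(c_prod))                 # 77 == 7 * 11 (valid while the product is < n)
```

Supporting a single operation is exactly what keeps such schemes cheap; fully homomorphic schemes that allow both addition and multiplication pay for that flexibility in speed and ciphertext size.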

Resources

- Google AI Blog: Federated Learning

- Google’s official blog post introducing federated learning and how it’s applied in mobile AI development (e.g., Gboard).

Link: Google AI: Federated Learning

- Microsoft Research: Homomorphic Encryption

- An in-depth guide to Microsoft’s work on homomorphic encryption and its applications in secure data computation.

Link: Microsoft Research: Homomorphic Encryption

- OpenMined: Federated Learning

- A community-driven initiative offering tutorials and resources on privacy-preserving AI, including federated learning and differential privacy.

Link: OpenMined Resources

- IBM Research: Fully Homomorphic Encryption

- IBM’s research and practical examples of homomorphic encryption use cases in sectors like finance and healthcare.

Link: IBM Homomorphic Encryption

- The Alan Turing Institute: Ethics of Data Science

- A resource focusing on the ethical implications of privacy-preserving technologies like federated learning and how they relate to data protection laws.

Link: Turing Institute Data Ethics

- NIST (National Institute of Standards and Technology): Post-Quantum Cryptography & Homomorphic Encryption

- NIST’s publications on encryption standards and future trends in data privacy technology.

Link: NIST Publications on Cryptography

- Privacy International: Data Privacy & AI

- A non-profit resource on global data privacy trends, regulatory impacts, and the intersection of AI and personal privacy.

Link: Privacy International AI Resources
