What Are Autoencoders and Why Do They Matter?

Autoencoders are neural networks designed to learn efficient representations of data. Their core purpose is to compress data into a smaller, more manageable form and then reconstruct it as closely as possible.

This process of encoding and decoding seems simple, but the implications are vast.

Initially, autoencoders were just seen as tools for dimensionality reduction. However, their true potential goes far beyond shrinking data. They act as unsupervised learning mechanisms that discover hidden patterns. These networks don’t just reduce the noise in a dataset; they highlight its essence, making it cleaner and more insightful.

In today’s data-rich environment, the ability to extract meaningful information with minimal supervision is invaluable. Whether you’re working with images or time-series data, or trying to detect anomalies, autoencoders provide a powerful solution. But to truly appreciate them, we need to explore how they function beyond their initial purpose.

Dimensionality Reduction: The Starting Point for Autoencoders

At their simplest, autoencoders excel at dimensionality reduction. They take high-dimensional input data, like a massive dataset of images, and compress it into a lower-dimensional space. This compressed version retains the most important features while discarding redundancies.
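As a rough illustration of this compression step, here is a minimal sketch in NumPy. The toy dataset, the layer sizes, the learning rate, and the names `encode`, `decode`, and `reconstruction_loss` are all assumptions made for this example. A real project would more likely rely on a framework such as PyTorch or Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy data: 200 samples with 8 features that really vary along 2 directions.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
X = latent @ mixing + 0.05 * rng.normal(size=(200, 8))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each feature

input_dim, code_dim = X.shape[1], 2
W_enc = 0.1 * rng.normal(size=(input_dim, code_dim))  # encoder weights
W_dec = 0.1 * rng.normal(size=(code_dim, input_dim))  # decoder weights


def encode(x):
    """Compress inputs into the low-dimensional code."""
    return np.tanh(x @ W_enc)


def decode(code):
    """Map codes back to the original feature space."""
    return code @ W_dec


def reconstruction_loss(x):
    """Mean squared error between inputs and their reconstructions."""
    return float(np.mean((decode(encode(x)) - x) ** 2))


initial_loss = reconstruction_loss(X)
learning_rate = 0.05
for _ in range(3000):
    code = encode(X)
    residual = decode(code) - X
    grad_out = 2.0 * residual / residual.size  # d(loss)/d(reconstruction)
    grad_dec = code.T @ grad_out
    grad_enc = X.T @ ((grad_out @ W_dec.T) * (1.0 - code ** 2))  # tanh derivative
    W_dec -= learning_rate * grad_dec
    W_enc -= learning_rate * grad_enc

codes = encode(X)  # shape (200, 2): the compressed representation
final_loss = reconstruction_loss(X)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

Each 8-feature sample ends up described by just 2 numbers in `codes`, and the drop in reconstruction loss indicates how much of the original information those 2 numbers retain.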

For instance, think about how much raw pixel data exists in a high-resolution image. Much of it is redundant, or simply not needed for a task like facial recognition. Autoencoders can distill this data into a small set of key components, saving time, storage, and computational power.

But dimensionality reduction is just the tip of the iceberg. While this ability has made autoencoders popular in areas like data compression or preprocessing for machine learning models, it’s their deeper capabilities that really set them apart. From there, we dive into feature extraction, anomaly detection, and even complex generative models.

Moving Past Simple Compression: Autoencoders as Feature Extractors

One of the most exciting roles of autoencoders lies in their ability to act as feature extractors. By forcing data through a “bottleneck” (the compressed layer), they learn the most crucial aspects of the input. This distilled information can be more insightful than the original high-dimensional data.

For example, imagine using autoencoders for image recognition. The network may learn to focus on defining shapes or textures, rather than irrelevant details like background noise. Once you’ve trained an autoencoder, the encoded features it extracts can serve as inputs for further analysis or machine learning models.

In fact, this ability makes them particularly powerful when dealing with unsupervised tasks. With minimal human guidance, autoencoders can automatically generate meaningful, abstract representations of complex datasets—whether it’s financial market patterns, voice recognition data, or even human genomic sequences.

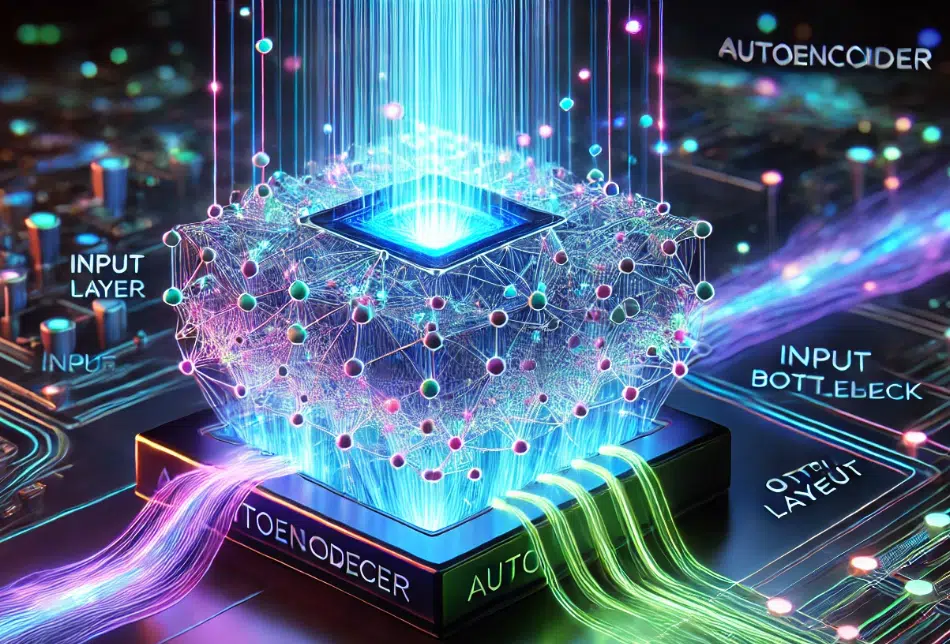

Understanding the Architecture: Encoder, Bottleneck, and Decoder

At the heart of every autoencoder is a three-part structure: the encoder, the bottleneck, and the decoder. Understanding how these components work can shed light on why autoencoders are so effective.

- Encoder: This section takes the raw input data and transforms it into a compressed, lower-dimensional format. It captures the essence of the data by filtering out unimportant features.

- Bottleneck: This is the compressed representation. Think of it as the “middle” where the data is at its most concise form—yet still rich in valuable information. This small set of dimensions contains all the necessary patterns the network has learned to extract.

- Decoder: The decoder takes the information in the bottleneck and tries to recreate the original input. A well-trained autoencoder will rebuild the input with minimal loss, demonstrating its ability to store essential information.
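To make the three parts concrete, the following sketch passes a small batch through an untrained encoder and decoder and prints the shape at each stage. The layer sizes (784, 64, 16), the ReLU activation, and the helper names are assumptions chosen for illustration, not a prescribed design.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, hidden_dim, bottleneck_dim = 784, 64, 16  # assumed sizes


def dense(in_dim, out_dim):
    """Create randomly initialized weights and zero biases for one layer."""
    return 0.05 * rng.normal(size=(in_dim, out_dim)), np.zeros(out_dim)


def relu(x):
    return np.maximum(x, 0.0)


enc_hidden, enc_out = dense(input_dim, hidden_dim), dense(hidden_dim, bottleneck_dim)
dec_hidden, dec_out = dense(bottleneck_dim, hidden_dim), dense(hidden_dim, input_dim)


def encoder(x):
    """Raw input -> compressed code."""
    (W1, b1), (W2, b2) = enc_hidden, enc_out
    return relu(x @ W1 + b1) @ W2 + b2


def decoder(z):
    """Compressed code -> attempted reconstruction of the input."""
    (W1, b1), (W2, b2) = dec_hidden, dec_out
    return relu(z @ W1 + b1) @ W2 + b2


x = rng.random(size=(5, input_dim))  # a batch of 5 flattened inputs
z = encoder(x)                       # the bottleneck representation
x_hat = decoder(z)                   # the reconstruction

print(x.shape, z.shape, x_hat.shape)  # (5, 784) (5, 16) (5, 784)
```

The weights here are random, so the reconstruction is meaningless until the network is trained. The point is only the shape of the data as it narrows to the bottleneck and widens again.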

The encoder-decoder structure is deceptively simple, but within it lies the power to perform a range of complex data processing tasks. And once this architecture is in place, it’s possible to experiment with variations and improvements—like variational autoencoders (VAEs).

Variational Autoencoders (VAE): A Step Beyond Standard Models

While standard autoencoders learn a deterministic mapping of inputs, variational autoencoders (VAEs) add a probabilistic twist. In a VAE, the bottleneck isn’t just a fixed encoding—it’s a distribution from which values can be sampled. This subtle difference opens up entirely new possibilities, especially in the realm of generative models.

A VAE doesn’t just learn to reconstruct the input data. It learns a probability distribution that describes the data, meaning it can generate new data similar to the input. This capability has significant implications for fields like data augmentation, where you may want to create synthetic data that closely resembles real-world data for training purposes.
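The two ingredients that set a VAE apart can be sketched in a few lines: sampling a latent code from the distribution the encoder predicts (the reparameterization trick from Kingma and Welling's paper), and a KL-divergence term that keeps that distribution close to a standard normal prior. The function names and the example values of `mu` and `log_var` below are assumptions for illustration. In a real VAE both would be outputs of the encoder network.

```python
import numpy as np


def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, I), one value per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)


rng = np.random.default_rng(0)

# Assumed encoder outputs for two inputs with a 2-dimensional latent space.
mu = np.array([[0.0, 0.0], [1.0, -2.0]])
log_var = np.zeros((2, 2))  # log-variance of 0 means unit variance

z = sample_latent(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
print(kl)  # first entry is zero: that distribution already matches the prior
```

During training the KL term is added to the reconstruction loss. At generation time, new samples come from drawing `z` directly from the prior and passing it through the decoder.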

The use of VAEs has grown particularly in image generation tasks, where they can create new, lifelike images from a trained model. But beyond visual data, the implications are vast—from generating more realistic speech patterns to simulating complex environments in reinforcement learning.

By embracing the variability in data, VAEs show how autoencoders can move past their traditional boundaries into more creative, generative areas.

The Role of Autoencoders in Data Denoising

One of the most practical applications of autoencoders lies in data denoising. Often, real-world data is noisy—whether it’s blurry images, background static in audio, or random fluctuations in sensor readings. Autoencoders, particularly denoising autoencoders, can clean up this data by learning to ignore noise while focusing on essential patterns.

Here’s how it works: you train the autoencoder with noisy input data and the corresponding clean version as the target output. The model learns to map noisy data to its clean counterpart. When you input noisy data into the trained autoencoder, it reconstructs a cleaner version, essentially filtering out the unwanted noise.
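The training setup just described can be sketched as follows. This is a deliberately small linear autoencoder in NumPy, and the toy signal, noise level, learning rate, and the `make_pairs` helper are assumptions for this example. The key detail is that the loss compares the output with the clean target, not with the noisy input.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy signal: 8 features driven by 2 underlying factors.
mixing = np.array([[1, 1, 1, 1, 0, 0, 0, 0],
                   [0, 0, 0, 0, 1, 1, 1, 1]], dtype=float)
noise_std = 0.3


def make_pairs(n_samples):
    """Return (noisy input, clean target) pairs."""
    clean = rng.normal(size=(n_samples, 2)) @ mixing
    noisy = clean + noise_std * rng.normal(size=clean.shape)
    return noisy, clean


noisy_train, clean_train = make_pairs(500)

W_enc = 0.1 * rng.normal(size=(8, 2))  # encoder weights
W_dec = 0.1 * rng.normal(size=(2, 8))  # decoder weights

for _ in range(2000):
    code = noisy_train @ W_enc
    residual = code @ W_dec - clean_train  # compare against the CLEAN target
    grad_out = 2.0 * residual / residual.size
    grad_dec = code.T @ grad_out
    grad_enc = noisy_train.T @ (grad_out @ W_dec.T)
    W_dec -= 0.05 * grad_dec
    W_enc -= 0.05 * grad_enc

# Evaluate on fresh noisy samples the model has not seen.
noisy_test, clean_test = make_pairs(200)
denoised = (noisy_test @ W_enc) @ W_dec
error_before = float(np.mean((noisy_test - clean_test) ** 2))
error_after = float(np.mean((denoised - clean_test) ** 2))
print(f"MSE vs clean signal: noisy={error_before:.4f}, denoised={error_after:.4f}")
```

Because the bottleneck only has room for the two underlying factors, most of the random noise cannot pass through it, which is why the denoised output should sit closer to the clean signal than the noisy input does.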

This technique is especially useful in image processing. For example, if you’re working with old, damaged photos, a denoising autoencoder can help restore them by removing scratches, blurs, or other distortions. It’s also valuable in speech recognition systems that need to filter out background noise to better understand spoken commands.

How Autoencoders Empower Anomaly Detection

Autoencoders are also widely used in anomaly detection. This is because they learn the “normal” patterns in data, which makes deviations stand out. Once trained on a dataset of typical, non-anomalous data, an autoencoder struggles to reconstruct anything that doesn’t fit this pattern, so an unusually high reconstruction error reveals an anomaly.
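A minimal sketch of this idea scores each sample by its reconstruction error and flags anything above a threshold learned from normal data. To keep the example short, the "trained autoencoder" is replaced by a linear one fitted in closed form with SVD (equivalent to PCA). That substitution, the toy data, and the 99th-percentile threshold are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed "normal" data: 6 features that lie close to a 2-D plane.
basis = np.array([[1, 0, 1, 0, 1, 0],
                  [0, 1, 0, 1, 0, 1]], dtype=float)
normal_data = rng.normal(size=(300, 2)) @ basis + 0.05 * rng.normal(size=(300, 6))

# Stand-in for training: fit a 2-unit linear autoencoder via SVD.
mean = normal_data.mean(axis=0)
_, _, Vt = np.linalg.svd(normal_data - mean, full_matrices=False)
components = Vt[:2]


def reconstruction_error(x):
    """Per-sample mean squared error after encoding and decoding."""
    centered = x - mean
    codes = centered @ components.T       # encode
    reconstruction = codes @ components   # decode
    return np.mean((centered - reconstruction) ** 2, axis=1)


# Anything reconstructed worse than 99% of the normal data is flagged.
threshold = np.percentile(reconstruction_error(normal_data), 99)

typical = np.array([[1.0, -1.0, 1.0, -1.0, 1.0, -1.0]])   # follows the usual pattern
unusual = np.array([[3.0, 3.0, -3.0, -3.0, 3.0, -3.0]])   # breaks the usual pattern
samples = np.vstack([typical, unusual])

flags = reconstruction_error(samples) > threshold
print(flags)
```

The choice of threshold controls the trade-off between missed anomalies and false alarms, so in practice it is usually tuned on held-out data.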

For instance, in fraud detection, the system learns what a standard transaction looks like. When faced with an unusual pattern—like a sudden, massive purchase—it flags the transaction as an anomaly. Similarly, autoencoders are used in industries like manufacturing, where machines continuously generate performance data. If an autoencoder detects data that falls outside the norm, it can signal a potential failure or malfunction.

Anomaly detection with autoencoders has proven to be highly effective in areas like cybersecurity (identifying unusual network activity), finance, and healthcare (detecting abnormal medical conditions). It’s particularly valuable in environments where the cost of missing an anomaly is high.

Autoencoders in Image Reconstruction: Pushing Visual Data to New Heights

Image reconstruction is another area where autoencoders shine. Beyond cleaning up noisy data, they can reconstruct missing or damaged parts of an image. In fact, this technology has been employed in medical imaging, art restoration, and even satellite imagery, where reconstructing missing visual information can be critical.

The decoder component of an autoencoder is responsible for this reconstruction. After the encoder compresses the data into its core features, the decoder attempts to rebuild the input image based on what it’s learned. When parts of the image are missing, an autoencoder trained on similar images with masked regions can often fill in plausible content, although fine detail may not match the original exactly.

This capability extends to more creative applications, such as image generation. For example, autoencoders have been used to generate synthetic faces or landscapes by learning patterns from real images. Similar techniques also appear in photo editing tools that restore or enhance old photos.

Time-Series Data: A Growing Frontier for Autoencoders

Time-series data—such as stock prices, heart rate monitors, or weather patterns—has its own set of challenges. Since it’s sequential, patterns change over time, and these shifts must be accounted for. Autoencoders can capture these time-based dependencies, making them highly effective for processing temporal data.

For example, in financial forecasting, autoencoders can analyze historical price movements and extract key patterns, helping predict future trends. In healthcare, they can monitor patient data over time, spotting subtle changes that could signal a problem. And in smart home systems, they can learn typical patterns of energy use or temperature regulation, helping optimize efficiency and detect unusual spikes.

Additionally, recurrent autoencoders—which incorporate recurrent neural networks (RNNs) into the autoencoder structure—are particularly well-suited for time-series data. These models can capture the dependencies between time steps, improving accuracy in prediction and anomaly detection.
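Whichever architecture is used, a long series is usually cut into fixed-length windows before it is fed to the model. The helper below is one simple way to do that. The function name, window length, and step size are assumptions for this sketch.

```python
import numpy as np


def sliding_windows(series, window, step=1):
    """Split a 1-D series into overlapping windows of equal length."""
    series = np.asarray(series, dtype=float)
    if window > len(series):
        raise ValueError("window is longer than the series")
    starts = range(0, len(series) - window + 1, step)
    return np.stack([series[s:s + window] for s in starts])


readings = np.arange(10)  # stand-in for sensor readings 0, 1, ..., 9
windows = sliding_windows(readings, window=4, step=2)
print(windows.shape)  # (4, 4): four windows of four time steps each
print(windows[-1])    # the last window covers readings 6 to 9
```

Each row can then be treated as one training example, either flattened for a dense autoencoder or kept as a sequence for a recurrent one.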

Deep Autoencoders: Unpacking Complex, Layered Data

While basic autoencoders are powerful, deep autoencoders take data processing to the next level. They consist of multiple layers in both the encoder and decoder, allowing the network to learn more complex, hierarchical representations of the data. This is especially useful when dealing with high-dimensional datasets that have intricate relationships between variables.
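One way to picture the difference is to write out the layer sizes of a shallow and a deep autoencoder and count their parameters. The sizes below (784 inputs, hidden layers of 256 and 64, a 16-unit bottleneck) and the helper names are assumptions chosen for illustration.

```python
def mirrored_layer_sizes(input_dim, encoder_hidden, bottleneck_dim):
    """Return encoder sizes and the mirrored decoder sizes."""
    encoder = [input_dim, *encoder_hidden, bottleneck_dim]
    decoder = encoder[::-1]
    return encoder, decoder


def count_parameters(sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


shallow_enc, shallow_dec = mirrored_layer_sizes(784, [], 16)
deep_enc, deep_dec = mirrored_layer_sizes(784, [256, 64], 16)

shallow_total = count_parameters(shallow_enc) + count_parameters(shallow_dec)
deep_total = count_parameters(deep_enc) + count_parameters(deep_dec)

print(deep_enc, deep_dec)  # [784, 256, 64, 16] [16, 64, 256, 784]
print(shallow_total)       # 25888
print(deep_total)          # 437664
```

Both networks compress to the same 16 values, but the deep version has roughly 17 times as many parameters in this example, which gives it more capacity and also makes it more demanding to train.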

Deep autoencoders are also applied in fields like natural language processing (NLP), where capturing subtle patterns in text requires several layers of abstraction. For example, lower layers may pick up character-level and word-level regularities, while deeper layers can capture phrase-level and sentence-level meaning.

Similarly, in image processing, deep autoencoders can capture not just simple features like edges or textures but also more abstract concepts like object recognition. As the layers go deeper, the model uncovers increasingly sophisticated features, making deep autoencoders ideal for tasks like facial recognition, autonomous driving, and scientific data analysis.

These advanced models require more training data and computational resources, but the payoff is significant. They offer an unmatched ability to represent complex data in a way that’s both compressed and rich in information, making them indispensable in big data environments.

The Intersection of Autoencoders and Generative Models

Autoencoders don’t just stop at reducing dimensions or filtering data—they have a fascinating relationship with generative models. Specifically, autoencoders can be used to create entirely new data, making them part of the broader family of generative algorithms. Variational autoencoders (VAEs), as discussed earlier, are particularly relevant here.

The idea of generating data might sound futuristic, but it’s already applied in fields like artificial image synthesis and music generation. Once trained on a specific dataset, a generative autoencoder can produce new, similar examples based on the patterns it has identified. For instance, after learning from a collection of human faces, a VAE can generate new images of faces that never actually existed, although its samples are often blurrier than those from other generative models.

In addition to VAEs, generative adversarial networks (GANs) can be paired with autoencoders to boost their data generation capabilities. This combination of autoencoders with generative models opens up exciting possibilities for creating data-driven art, synthetic data for training AI, or even virtual environments for simulations and games.

Data Augmentation and Autoencoders: Creating More From Less

Data augmentation, the practice of creating additional training examples from existing ones, is essential when working with limited datasets. In many machine learning applications, a shortage of data can be a significant challenge. Autoencoders can help by generating additional samples based on the existing dataset.

For example, imagine you’re developing a machine learning model to recognize handwritten digits, but you only have a few hundred samples to train on. An autoencoder can generate more handwritten digits by slightly altering the existing ones, providing new training examples that still reflect the original dataset’s properties.

In image processing, classic augmentation applies hand-written transformations such as rotating, flipping, or adding noise to an image. Autoencoder-based augmentation instead perturbs or samples the compressed code and decodes it, producing variations that still preserve the image’s core features. This ability to expand a dataset is particularly valuable in fields like medicine, where it’s often difficult to gather large amounts of labeled data. Augmented data can help boost model accuracy and reduce overfitting, so that models generalize better to new, unseen examples.

Challenges and Limitations in Using Autoencoders

While autoencoders offer many benefits, they also come with some limitations. One of the primary challenges is that they require large amounts of data to be truly effective. If the training dataset is too small, the model may struggle to generalize, resulting in overfitting—where the model performs well on training data but poorly on new data.

Another limitation is that autoencoders tend to work best when the data has clear, consistent patterns. For example, they do well on data with strong regularities, such as natural images, but can struggle when samples share little common structure. Training a successful autoencoder also requires careful tuning of hyperparameters like the size of the bottleneck layer. If the bottleneck is too small, important features may be lost. If it’s too large, the autoencoder may simply copy its input and fail to learn a useful compressed representation.

Additionally, interpretability can be a concern. Unlike decision trees or simple statistical models, autoencoders are neural networks with many layers, making it difficult to understand exactly how they arrive at their conclusions. This “black box” nature means that while they may provide accurate results, understanding the why behind those results isn’t always straightforward.

The Future of Autoencoders: From Theory to Real-World Applications

Autoencoders have already proven their worth in a variety of fields, but their future looks even more promising. Researchers are exploring new ways to improve autoencoder efficiency, reduce computational costs, and apply them in more real-world scenarios. For instance, the fusion of autoencoders with transfer learning could significantly reduce the amount of training data needed.

Another exciting area is their potential for multi-modal data processing—where different types of data, like text, images, and audio, are processed simultaneously. Imagine an autoencoder that can take a photo, generate a caption, and synthesize an appropriate background sound. These multi-modal autoencoders could be used in virtual reality environments, content creation, or even complex decision-making systems where different data sources need to be combined.

Furthermore, as AI becomes increasingly important in fields like autonomous driving and robotics, autoencoders could play a critical role in helping machines understand and react to their environments. Their ability to filter noise, detect anomalies, and create representations of the world will be essential as AI systems become more integrated into our everyday lives.

Training Autoencoders: Pitfalls and Best Practices

Training an autoencoder is no simple task. It requires careful consideration of the architecture and hyperparameters. One common pitfall is the temptation to create too large or complex a model. When the model has too many parameters relative to the amount of training data, it becomes prone to overfitting.

To avoid this, a common technique is dropout, which randomly deactivates a fraction of neurons during training to prevent the model from becoming overly reliant on any one feature. Another strategy is to employ regularization methods, such as L1 or L2 weight penalties, which add a cost for overly complex models.
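Both ideas are small enough to sketch directly. The version of dropout below is the common "inverted" form, which rescales the surviving activations so their expected value is unchanged. The L2 penalty is one example of a regularization term. The function names, the dropout rate, and the penalty strength are assumptions for illustration.

```python
import numpy as np


def dropout(activations, rate, rng):
    """Zero out a random fraction of activations and rescale the rest."""
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob


def l2_penalty(weights, strength):
    """Sum of squared weights, scaled by a regularization strength."""
    return strength * sum(float(np.sum(w ** 2)) for w in weights)


rng = np.random.default_rng(0)

hidden = np.ones((4, 5))                      # stand-in for hidden-layer activations
dropped = dropout(hidden, rate=0.2, rng=rng)  # entries are now either 0 or 1.25

weights = [np.array([[1.0, 2.0]]), np.array([3.0])]
penalty = l2_penalty(weights, strength=0.01)  # 0.01 * (1 + 4 + 9)
print(dropped)
print(penalty)
```

Dropout is applied only during training and switched off at inference time, while the penalty is simply added to the reconstruction loss before computing gradients.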

Choosing the correct size for the bottleneck layer is another key decision. If it’s too small, the model might not capture all the necessary features. If it’s too large, it can fail to effectively compress the data, defeating the purpose of using an autoencoder in the first place.
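A practical way to explore this trade-off is to sweep over several bottleneck sizes and compare the reconstruction error. To keep the sketch fast, it uses the closed-form solution for a linear autoencoder (the top principal directions from SVD) instead of training a network for each size. That shortcut, the toy data with 3 underlying factors, and the range of sizes are assumptions for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed toy data: 10 features generated from 3 underlying factors plus noise.
X = rng.normal(size=(300, 3)) @ rng.normal(size=(3, 10))
X += 0.1 * rng.normal(size=X.shape)
X -= X.mean(axis=0)

# Stand-in for training: the best linear autoencoder with k units spans the
# top-k principal directions, which SVD provides directly.
_, _, Vt = np.linalg.svd(X, full_matrices=False)


def reconstruction_error(k):
    """Mean squared reconstruction error with a bottleneck of size k."""
    components = Vt[:k]
    reconstruction = (X @ components.T) @ components
    return float(np.mean((X - reconstruction) ** 2))


errors = {k: reconstruction_error(k) for k in range(1, 7)}
for k, err in errors.items():
    print(f"bottleneck={k}: reconstruction MSE={err:.4f}")
```

The error can only go down as the bottleneck grows, so the useful signal is where the improvement levels off. With a nonlinear autoencoder the same sweep applies, but each size has to be trained separately and compared on validation data.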

Finally, training a model on clean data first, and gradually introducing noisier data, can help improve the autoencoder’s robustness in real-world applications. This is a form of curriculum learning, in which training moves from easier to harder examples. It can help the model learn progressively more robust representations for tasks like denoising, anomaly detection, or data reconstruction.
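One simple way to implement such a schedule is to compute a noise level per epoch: zero for the first few epochs, then a linear ramp up to a maximum. The function name, the linear ramp, and the example values are assumptions for this sketch. Other schedules are equally possible.

```python
def noise_std_for_epoch(epoch, total_epochs, max_noise_std, clean_epochs=0):
    """Noise level for a given epoch: clean first, then a linear ramp."""
    if total_epochs <= clean_epochs:
        raise ValueError("total_epochs must exceed clean_epochs")
    if epoch < clean_epochs:
        return 0.0
    progress = (epoch - clean_epochs + 1) / (total_epochs - clean_epochs)
    return max_noise_std * min(progress, 1.0)


schedule = [
    noise_std_for_epoch(epoch, total_epochs=10, max_noise_std=0.4, clean_epochs=2)
    for epoch in range(10)
]
print(schedule)
```

Inside a training loop, the value for the current epoch would be used as the standard deviation of the noise added to each clean batch before it is passed to the autoencoder.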

Applications of Autoencoders in Healthcare, Finance, and Beyond

The flexibility of autoencoders makes them incredibly useful across various industries, with healthcare and finance being two key areas where they are already making a big impact. In healthcare, autoencoders are helping with tasks like medical imaging, where they’re used to identify patterns in CT scans, MRIs, and X-rays. By learning from thousands of images, autoencoders can highlight abnormalities, detect diseases like cancer early on, or even enhance the quality of scans that may have been distorted by equipment malfunctions or patient movement.

Beyond imaging, they also assist in predictive analytics. For example, by analyzing patient data over time, autoencoders can predict potential health risks or detect early signs of deterioration. Hospitals are leveraging this ability to monitor patients in intensive care units (ICUs), where timely detection of subtle changes can be lifesaving.

In finance, autoencoders are particularly effective for fraud detection. Financial transactions tend to follow certain patterns, and any deviations from these patterns may indicate fraudulent activity. By analyzing vast amounts of transaction data, autoencoders learn the “normal” behavior and flag anything unusual. This automatic detection is crucial for real-time fraud prevention, especially in high-volume environments like credit card processing or stock trading.

But autoencoders aren’t confined to these industries. In e-commerce, they’re used for recommendation systems, predicting what users will want to buy next based on their browsing history. In manufacturing, they help with predictive maintenance, detecting equipment failures before they happen by analyzing sensor data.

Will Autoencoders Evolve Further? Emerging Research Trends to Watch

As machine learning continues to advance, so too will the development of autoencoders. Researchers are already exploring ways to improve their scalability and efficiency, particularly as we deal with ever-larger datasets. One area of interest is unsupervised pretraining, where autoencoders are used to learn basic representations of data before fine-tuning them for specific tasks.

Another exciting trend is the integration of autoencoders with reinforcement learning. Imagine a robot that uses autoencoders to map out its environment—compressing visual or sensor data to better understand its surroundings—and then makes decisions based on this learned representation. This combination could enable more intelligent autonomous systems in industries like logistics, self-driving cars, and even space exploration.

In terms of architecture, we’re seeing more experimentation with hybrid models that combine autoencoders with other advanced techniques like transformers. These hybrid systems aim to capture both short-term and long-term dependencies in data, making them especially useful for tasks like natural language processing and sequence-to-sequence modeling.

Finally, explainability is becoming a key focus. As the demand for transparency in AI grows, efforts are being made to design autoencoders that not only perform well but are also interpretable. This will be crucial as these models are deployed in sensitive areas like medicine and law, where understanding how a model arrives at a decision is just as important as the decision itself.

The future of autoencoders is bright, and their evolution will undoubtedly continue to push the boundaries of what’s possible in data processing and AI.

Frequently Asked Questions (FAQ) About Autoencoders

1. What is an autoencoder?

An autoencoder is a type of neural network designed to learn a compressed representation of input data. It has two main components: an encoder, which compresses the data, and a decoder, which reconstructs it. The goal is to reduce the data into a smaller dimension while retaining important features.

2. How do autoencoders perform dimensionality reduction?

Autoencoders reduce dimensionality by learning to represent the data in a compressed form, which is produced at the bottleneck layer. Unlike traditional techniques like PCA, autoencoders can capture complex, non-linear relationships in the data, making them more powerful for high-dimensional datasets like images or time-series data.

3. What are autoencoders used for?

Autoencoders are versatile and can be used in:

- Dimensionality reduction

- Data denoising

- Anomaly detection

- Feature extraction

- Image and time-series data reconstruction

- Generative models to create new data samples

4. What is the difference between an autoencoder and a variational autoencoder (VAE)?

While both aim to compress and reconstruct data, variational autoencoders (VAEs) introduce a probabilistic element: the encoder outputs a distribution over possible encodings rather than a single fixed encoding. This allows VAEs to generate new data by sampling from this distribution and decoding the result, making them suitable for tasks like data augmentation and generative modeling.

5. How do autoencoders help in data denoising?

Autoencoders can be trained to filter out noise by using noisy input data and clean output data during training. The network learns to ignore irrelevant noise and reconstruct a clean version of the input, which is useful in applications like medical imaging or audio enhancement.

6. Can autoencoders be used for anomaly detection?

Yes, autoencoders are great for anomaly detection. They learn the “normal” patterns in a dataset, and when something doesn’t fit this pattern (an anomaly), the autoencoder has difficulty reconstructing it, leading to higher reconstruction errors, which flag the anomaly.

7. What are the challenges of using autoencoders?

Some challenges include:

- Overfitting: Especially when the model is too complex or the dataset is too small.

- Interpretability: Autoencoders are neural networks, so understanding exactly how they learn patterns can be difficult.

- Data requirements: They often require large amounts of training data to perform well.

8. Are autoencoders unsupervised learning models?

Yes, autoencoders are typically used in unsupervised learning because they don’t require labeled data. They learn from the data itself by compressing and reconstructing the input, rather than learning a specific mapping from input to output like in supervised learning.

9. Can autoencoders generate new data?

Yes, variational autoencoders (VAEs) are specifically designed to generate new data. By learning the underlying distribution of the data, VAEs can create realistic new examples similar to those they’ve been trained on.

10. What industries use autoencoders?

Autoencoders are widely used in industries such as:

- Healthcare: For medical imaging, early disease detection, and predictive analytics.

- Finance: Fraud detection, risk management, and market predictions.

- Manufacturing: Predictive maintenance and anomaly detection in sensor data.

- E-commerce: Product recommendations and customer behavior analysis.

11. What is a deep autoencoder?

A deep autoencoder consists of multiple layers in both the encoder and decoder. This allows the network to learn more complex representations of the data, making it highly effective for tasks involving high-dimensional data, such as image classification or speech recognition.

12. What are denoising autoencoders?

A denoising autoencoder is a variation of the basic autoencoder model designed to remove noise from data. By training the model with noisy input and clean output, it learns to reconstruct the original, noise-free version of the data.

13. How do autoencoders compare to principal component analysis (PCA)?

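Both autoencoders and PCA can perform dimensionality reduction, but autoencoders are more flexible because they can capture non-linear relationships in data. PCA is limited to linear transformations. In fact, an autoencoder with purely linear layers trained on mean squared error learns the same subspace that PCA finds, so the extra power comes from non-linear activations and deeper architectures. Autoencoders also have the advantage of being highly customizable through deep learning architectures.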

14. Are autoencoders prone to overfitting?

Yes, like many machine learning models, autoencoders can overfit if they have too many parameters relative to the size of the training data. Techniques like dropout, early stopping, and regularization can help reduce the risk of overfitting.
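Early stopping in particular is easy to sketch: track the validation loss after each epoch and stop once it has failed to improve for a set number of epochs. The function name, the `patience` and `min_delta` parameters, and the loss values below are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.41, 0.43, 0.50]
best_epoch, stop_epoch = early_stopping_epoch(losses, patience=3)
print(best_epoch, stop_epoch)  # 3 6
```

In a real training loop the weights from the best epoch would be saved and restored when training stops.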

15. How do you choose the bottleneck size in an autoencoder?

Choosing the size of the bottleneck layer requires balancing between too much compression (leading to information loss) and too little compression (which defeats the purpose of dimensionality reduction). Experimenting with different sizes and using techniques like cross-validation can help optimize this choice.

Research Papers on Autoencoders

- “Auto-Encoding Variational Bayes” by Kingma and Welling: A seminal paper on variational autoencoders (VAEs), introducing the probabilistic approach to autoencoders.

- “Anomaly Detection Using Autoencoders with Nonlinear Dimensionality Reduction” by Sakurada and Yairi: This paper details the use of autoencoders for anomaly detection in time-series data.