Why Fake News is a Growing Problem

Information travels faster than ever. Fake news spreads like wildfire across social media platforms, creating confusion and influencing public opinion.

Misinformation and disinformation are increasingly used to manipulate audiences, especially during critical events like elections, public health crises, or geopolitical tensions.

From viral conspiracy theories to false headlines, the sheer volume of information makes it difficult to discern what’s real. This growing problem has led to a surge in research and the development of technologies aimed at fighting misinformation.

Topic modeling, particularly through Latent Dirichlet Allocation (LDA), is emerging as a valuable tool in this battle.

The Challenge of Identifying Fake News

Detecting fake news isn’t as simple as fact-checking isolated claims. The speed and volume of online content make it impossible for manual efforts to keep up. Complicating things further, fake news articles often blend factual elements with falsehoods, making them hard to spot at a glance.

Fake news detection requires advanced methods that analyze not only content but also the context and the patterns within large datasets. This is where LDA shines. By automatically identifying topics and trends in massive text datasets, LDA can help narrow down where misleading information is likely to be found, so that fact-checkers can focus their attention there.

Introduction to Latent Dirichlet Allocation (LDA)

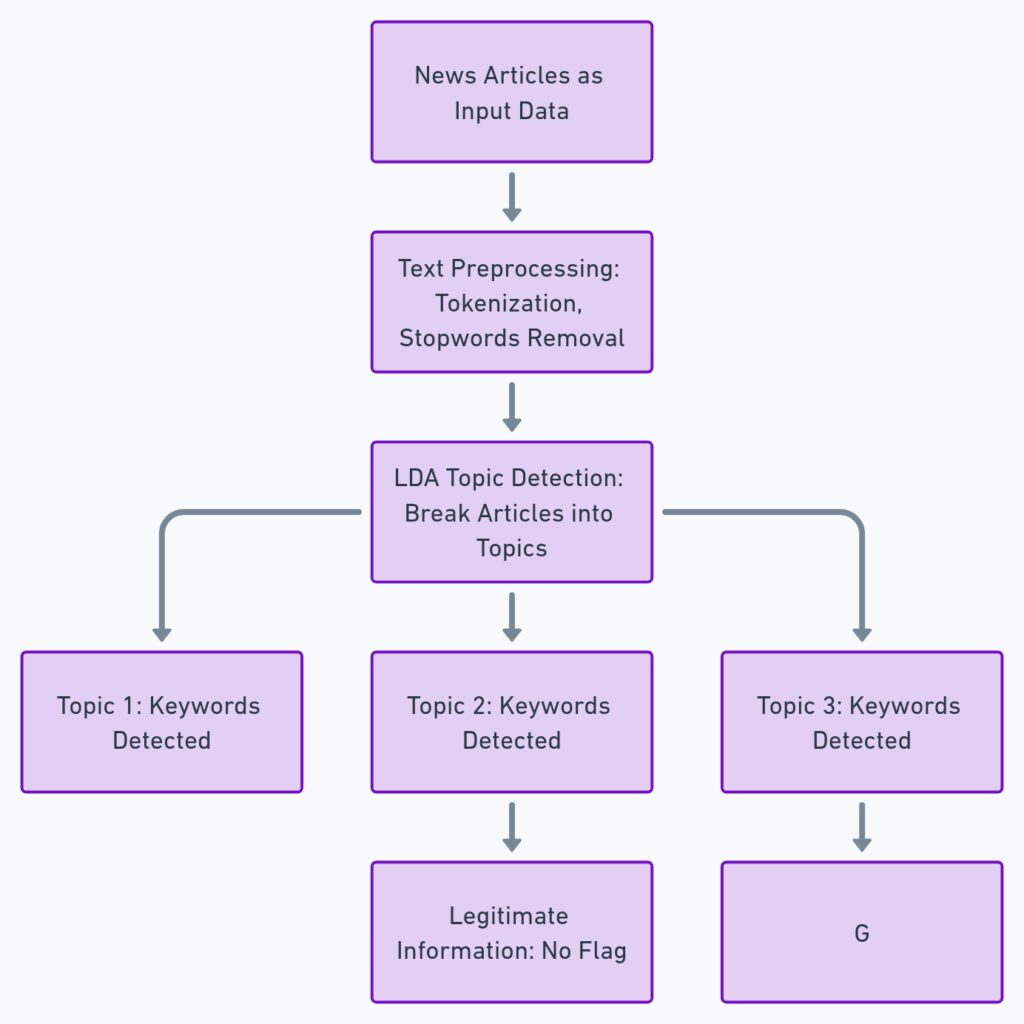

Latent Dirichlet Allocation (LDA) is a statistical model used for topic discovery in large collections of documents. In the context of fake news, LDA can be employed to analyze textual data across articles, social media posts, or news sites.

By analyzing how words group together in documents, LDA uncovers hidden topics that give insight into the overall content without needing direct human supervision.

Each document is considered a mixture of topics, and each topic is defined by a distribution of words. LDA finds these hidden topics and helps us understand the broader patterns present in the dataset, which can be essential in identifying sources of misinformation.
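To make the "mixture of topics" idea concrete, here is a minimal, dependency-free sketch of LDA fitted by collapsed Gibbs sampling. Collapsed Gibbs sampling is one of several standard inference methods; Gensim's LdaModel and scikit-learn's LatentDirichletAllocation use variational inference instead. The function name lda_gibbs, the toy corpus, and the values of the hyperparameters alpha and beta are illustrative assumptions. A real pipeline would use a library implementation and far more data.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Illustrative helper (not a library API): fit LDA by collapsed Gibbs sampling.

    docs: list of token lists. Returns (theta, phi, vocab), where theta[d][k]
    estimates P(topic k | document d) and phi[k][w] estimates P(word w | topic k).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics

    n_dk = [[0] * K for _ in docs]      # tokens in doc d assigned to topic k
    n_kw = [[0] * V for _ in range(K)]  # times word w is assigned to topic k
    n_k = [0] * K                       # total tokens assigned to topic k
    z = []                              # current topic of every token

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid, k = word_id[w], z[d][i]
                # Take the token out of the counts before resampling it.
                n_dk[d][k] -= 1
                n_kw[k][wid] -= 1
                n_k[k] -= 1
                # P(z = t | rest) proportional to
                # (n_dt + alpha) * (n_tw + beta) / (n_t + V * beta)
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][wid] + beta) / (n_k[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][wid] += 1
                n_k[k] += 1

    theta = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    phi = [[(n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in range(V)]
           for k in range(K)]
    return theta, phi, vocab


# Hypothetical toy corpus: two "mainstream" and two "conspiracy" snippets.
docs = [
    "health agency publishes vaccine trial results".split(),
    "vaccine safety data released by health agency".split(),
    "secret cure hidden by elites exposed".split(),
    "elites hide secret cure from public".split(),
]
theta, phi, vocab = lda_gibbs(docs, num_topics=2)
for k, topic in enumerate(phi):
    top = sorted(range(len(vocab)), key=lambda w: -topic[w])[:4]
    print(f"topic {k}:", [vocab[w] for w in top])
```

Each row of theta is one document's topic mixture, and each row of phi is one topic's word distribution. On a corpus this tiny, the topics found can vary with the random seed.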

How LDA Helps Identify Misinformation

LDA can be a powerful tool in fake news detection because it breaks down complex datasets into more understandable parts—topics. When applied to news articles, LDA can surface unusual patterns, such as topics or themes that deviate from those covered in credible news sources.

For instance, while legitimate news outlets may focus on events like policy changes or scientific findings, fake news stories might have topics centered around conspiracy theories or fear-mongering. LDA surfaces these topics without knowing which ones are misleading. Once analysts recognize the topics commonly associated with misinformation, articles or sources that devote a large share of their content to them can be flagged.
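"Deviates from credible sources" can be made measurable in several ways. One option, sketched here as an assumption rather than a method the article prescribes, compares each article's LDA topic mixture with the average mixture of articles from credible outlets. The comparison uses the Jensen-Shannon divergence, and articles above a cutoff are flagged. The threshold of 0.2 and the toy mixtures are hypothetical; in practice the cutoff would be tuned on labeled data.

```python
import math

def mean_distribution(rows):
    """Element-wise average of several topic distributions."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def kl_divergence(p, q):
    """KL(p || q) in nats; terms where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric and bounded by ln 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

def flag_deviant(article_thetas, credible_thetas, threshold=0.2):
    """Indices of articles whose topic mix sits far from the credible baseline.
    The default threshold is a hypothetical value."""
    baseline = mean_distribution(credible_thetas)
    return [i for i, theta in enumerate(article_thetas)
            if js_divergence(theta, baseline) > threshold]

# Hypothetical 3-topic mixtures, e.g. [politics, science, conspiracy].
credible = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1]]
incoming = [[0.65, 0.25, 0.10], [0.05, 0.15, 0.80]]
print(flag_deviant(incoming, credible))  # → [1]
```

The Jensen-Shannon divergence is used here instead of plain KL divergence because it stays finite even when one distribution assigns zero probability to a topic.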

Moreover, LDA output can be evaluated for topic coherence, which measures how often a topic's top words actually appear together in documents. Fake news stories may produce disjointed or incoherent topics that don’t align with the narratives seen in trustworthy media outlets, which can further help to spot misleading content.
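Coherence is computed per topic, not per article. A common variant is UMass coherence, which scores a topic's top words by how often they appear in the same documents. The following is a plain-Python sketch using toy documents and two-word topics, both assumptions for illustration. Gensim's CoherenceModel offers the same measure via coherence="u_mass".

```python
import math

def umass_coherence(top_words, docs):
    """UMass coherence of one topic (illustrative helper).

    top_words: the topic's highest-probability words, most probable first.
    docs: reference documents as token lists; assumes every top word occurs
    in at least one of them (true when docs is the training corpus).
    Higher (less negative) scores indicate a more coherent topic.
    """
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words):
        # Number of documents that contain all of the given words.
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for i in range(1, len(top_words)):
        for j in range(i):
            # log((D(w_i, w_j) + 1) / D(w_j)), with w_j ranked above w_i
            score += math.log((doc_freq(top_words[i], top_words[j]) + 1)
                              / doc_freq(top_words[j]))
    return score

# Hypothetical reference documents.
docs = [["vaccine", "trial", "safety"],
        ["vaccine", "safety", "data"],
        ["cure", "secret", "plot"]]
print(umass_coherence(["vaccine", "safety"], docs))  # words that co-occur
print(umass_coherence(["vaccine", "plot"], docs))    # words that never co-occur
```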

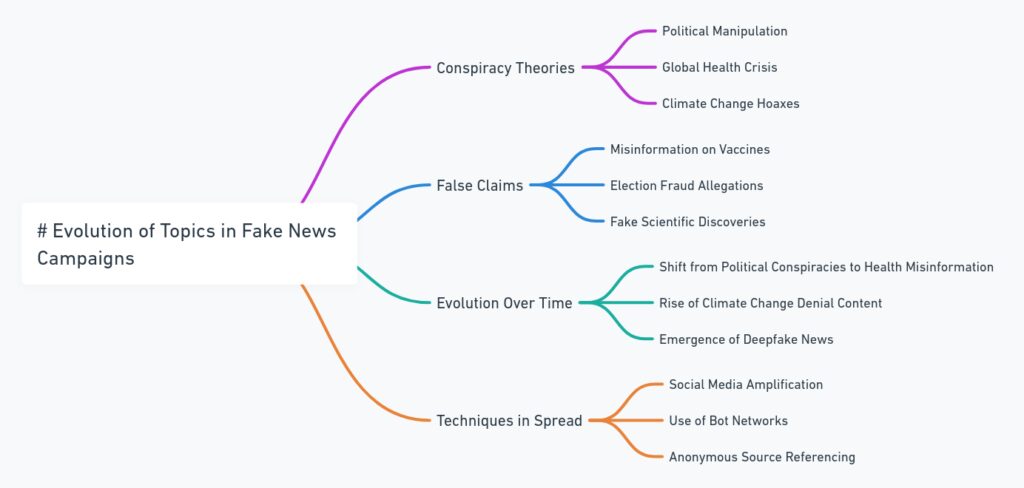

Topic Modeling in the Age of Fake News

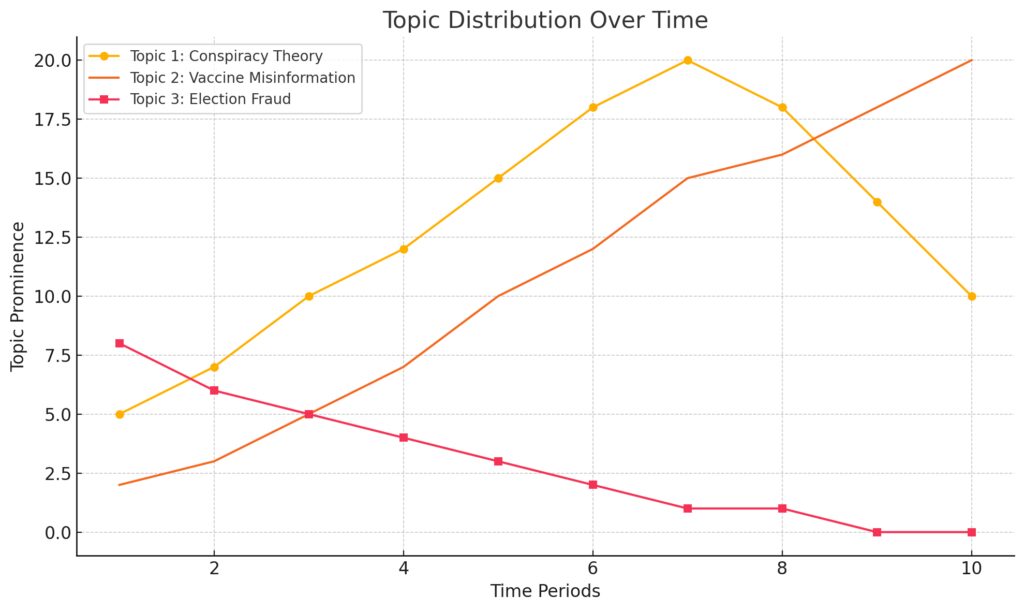

The role of topic modeling has become increasingly critical in our effort to combat the spread of misinformation. Fake news often comes in waves, with particular topics being pushed forward by actors seeking to manipulate the conversation. By using LDA, researchers can track these topics and analyze how they evolve over time.

For example, during major events like pandemics or elections, misinformation campaigns tend to follow specific patterns. By continuously applying LDA to data streams—whether from news sources, social media, or forums—it’s possible to detect emerging misinformation topics before they spiral out of control.
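A simple way to detect emerging topics in a stream, offered here as an illustrative assumption, compares each topic's average share between consecutive time windows and flags topics that grow quickly. This only works if every window's documents are scored with the same fitted model, so that a topic index means the same thing in every window. The growth factor and minimum share are hypothetical.

```python
def topic_prevalence(thetas):
    """Average share of each topic across the documents in one time window."""
    return [sum(col) / len(thetas) for col in zip(*thetas)]

def emerging_topics(windows, growth=2.0, min_share=0.1):
    """Topics whose prevalence in the newest window is at least `growth` times
    the previous window's and also at least `min_share` (both hypothetical).

    windows: chronological list of windows, each a list of per-document
    topic distributions inferred with the SAME fitted LDA model.
    """
    prev = topic_prevalence(windows[-2])
    curr = topic_prevalence(windows[-1])
    return [k for k, (p, c) in enumerate(zip(prev, curr))
            if c >= min_share and c >= growth * p]

# Hypothetical topic mixtures for two days of posts.
monday = [[0.80, 0.15, 0.05], [0.70, 0.25, 0.05]]
tuesday = [[0.50, 0.20, 0.30], [0.40, 0.20, 0.40]]
print(emerging_topics([monday, tuesday]))  # → [2]
```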

This proactive approach offers a way to slow the spread of fake news before it becomes viral.

Detecting Patterns in News Stories with LDA

Fake news articles often have distinguishing patterns in terms of language use, topic shifts, and structure. LDA addresses the topical side of these patterns: by inferring a topic distribution for each article, it lets analysts compare the content of suspicious news articles with that of legitimate ones.

For instance, fake news might frequently introduce fringe topics like conspiracy theories, false medical advice, or fear-based narratives that aren’t prominent in credible sources. By analyzing topics across a wide range of articles, LDA can highlight these inconsistencies and flag sources that may be spreading misinformation.
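Flagging sources rather than single articles can be sketched by averaging, for each source, the probability mass its articles put on topics an analyst has labeled as fringe. LDA does not produce those labels itself. The source names, topic labels, and 0.5 threshold are hypothetical.

```python
from collections import defaultdict

def fringe_share_by_source(articles, fringe_topics):
    """Average probability mass each source puts on analyst-labeled fringe topics.

    articles: list of (source, theta) pairs, theta being an article's topic mix.
    fringe_topics: indices of topics an analyst labeled as fringe after
    reading their top words (the labels are an assumption, not LDA output).
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for source, theta in articles:
        totals[source] += sum(theta[k] for k in fringe_topics)
        counts[source] += 1
    return {source: totals[source] / counts[source] for source in totals}

def flag_sources(articles, fringe_topics, threshold=0.5):
    """Sources whose average fringe share exceeds a hypothetical threshold."""
    shares = fringe_share_by_source(articles, fringe_topics)
    return sorted(s for s, share in shares.items() if share > threshold)

# Hypothetical sources; topic 2 was labeled "conspiracy" by an analyst.
articles = [
    ("dailynews.example", [0.8, 0.1, 0.1]),
    ("dailynews.example", [0.7, 0.2, 0.1]),
    ("truthleaks.example", [0.1, 0.3, 0.6]),
    ("truthleaks.example", [0.2, 0.1, 0.7]),
]
print(flag_sources(articles, fringe_topics={2}))  # → ['truthleaks.example']
```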

This ability to capture both content patterns and distribution shifts makes LDA a crucial asset in identifying news articles that are out of sync with trusted journalism.

Real-World Applications of LDA in Fake News Detection

LDA has already been employed in several real-world scenarios to tackle misinformation. Social media platforms and news aggregators use topic modeling to analyze massive streams of content, identifying suspect posts or articles that could be spreading misleading information.

For example, during the 2016 US Presidential Election, researchers used LDA to track fake news sources spreading conspiracy theories about candidates. Similarly, health organizations have used LDA to monitor the spread of misinformation on COVID-19, allowing them to respond to dangerous myths before they gain significant traction.

By analyzing articles and posts in near real-time, organizations can flag content for fact-checking and limit its spread to wider audiences.

Challenges in Applying LDA to Misinformation

While LDA is effective at detecting topics, applying it to the complex world of fake news poses several challenges. One key issue is that fake news is often designed to mimic real news, making the distinction between the two difficult. LDA can uncover patterns, but distinguishing between legitimate and illegitimate sources based on topics alone requires additional layers of analysis.

Another challenge lies in the dynamic nature of misinformation. Fake news topics can shift rapidly, especially during fast-moving events like elections or pandemics. LDA models trained on older datasets may struggle to keep up with new narratives introduced by misinformation campaigns, making continuous model updates necessary.
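One possible signal that a trained model is falling behind is the share of incoming words missing from its vocabulary. This is a heuristic assumption, not an established rule. A fitted LDA model has a fixed vocabulary, so it cannot represent words coined for a new narrative at all. The vocabulary, documents, function names, and 15% trigger are illustrative.

```python
def oov_rate(new_docs, vocabulary):
    """Share of tokens in new documents that are missing from the model's vocabulary."""
    tokens = [w for doc in new_docs for w in doc]
    if not tokens:
        return 0.0
    return sum(1 for w in tokens if w not in vocabulary) / len(tokens)

def needs_refresh(new_docs, vocabulary, max_oov=0.15):
    """Hypothetical retraining trigger: too many words the model cannot represent."""
    return oov_rate(new_docs, vocabulary) > max_oov

# Hypothetical vocabulary of a model trained earlier, and this week's posts.
trained_vocab = {"vaccine", "trial", "safety", "data", "election", "ballot"}
this_week = [["vaccine", "microchip", "tracking"],
             ["ballot", "harvesting", "scheme"]]
print(oov_rate(this_week, trained_vocab))       # 4 of 6 tokens are unseen
print(needs_refresh(this_week, trained_vocab))  # → True
```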

Moreover, the language used in fake news can be highly varied. Misinformation may rely on sensationalism, clickbait, or subtle distortions of fact, creating an environment where low-quality content is blended with high-impact fake narratives. This variability complicates the task of LDA, which treats each document as a bag of words and works best when documents are long and consistent enough to reveal clear word co-occurrence patterns. Fake news datasets can violate those conditions.

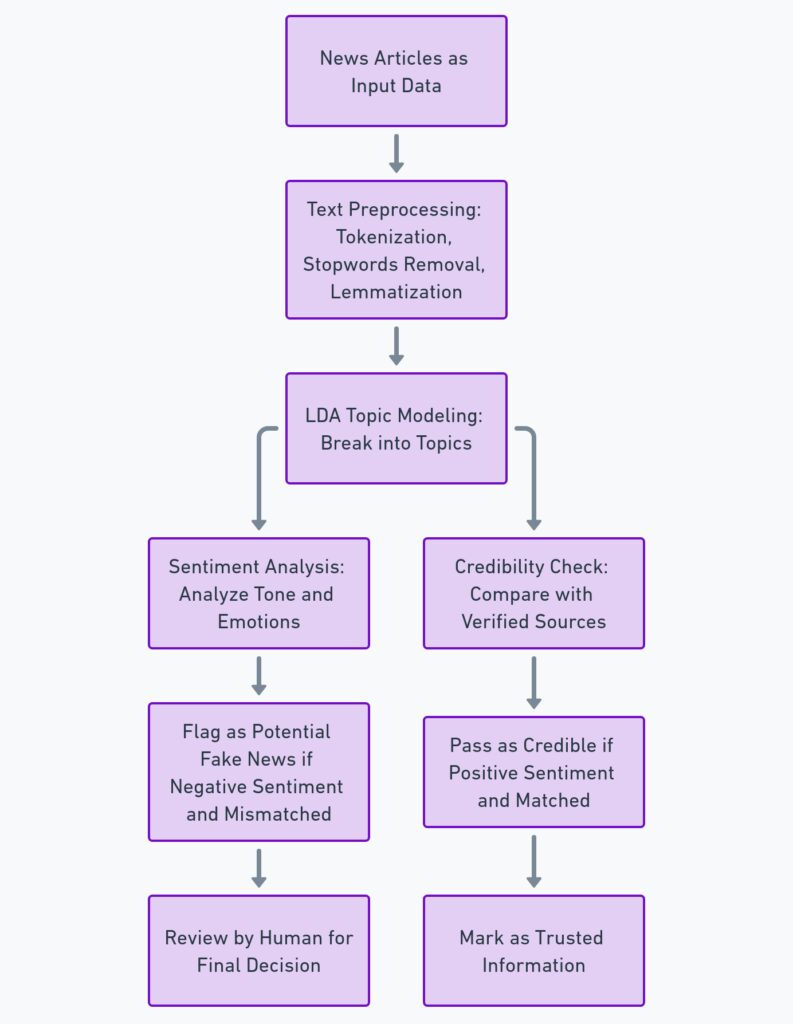

Combining LDA with Sentiment and Credibility Analysis

To improve the effectiveness of fake news detection, LDA is often combined with other techniques like sentiment analysis and credibility analysis. Sentiment analysis helps detect whether articles or posts contain unusually high levels of negative or emotionally charged language, which is a common hallmark of fake news.

For example, many fake news stories rely on triggering fear, outrage, or shock to drive engagement. By pairing LDA with sentiment analysis, you can filter content that not only contains unusual topics but also exhibits extreme emotional tones. This combination helps isolate stories that might be deliberately inciting fear or anger.
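The pairing can be sketched as a two-condition filter: an article is queued for review only if an LDA-based anomaly score and an emotional-intensity score both exceed their thresholds. The tiny fear/outrage lexicon, the function names, and both thresholds are hypothetical stand-ins. A real system would use a trained sentiment model or an established lexicon such as NLTK's VADER.

```python
# Hypothetical toy fear/outrage lexicon, standing in for a real sentiment model.
EMOTION_LEXICON = {"shocking", "terrifying", "outrage", "panic", "deadly", "evil"}

def emotional_intensity(tokens):
    """Fraction of tokens found in the emotion lexicon (0.0 for empty input)."""
    if not tokens:
        return 0.0
    return sum(1 for t in tokens if t.lower() in EMOTION_LEXICON) / len(tokens)

def flag_for_review(tokens, topic_anomaly, anomaly_threshold=0.2, emotion_threshold=0.1):
    """Flag only articles that are BOTH topically unusual and emotionally charged.

    topic_anomaly: any LDA-based score, e.g. the divergence of the article's
    topic mix from a credible baseline. Both thresholds are hypothetical.
    """
    return topic_anomaly > anomaly_threshold and emotional_intensity(tokens) > emotion_threshold

alarming = "shocking report reveals deadly secret they hide".split()
calm = "agency publishes quarterly vaccine safety report".split()
print(flag_for_review(alarming, topic_anomaly=0.31))  # → True
print(flag_for_review(calm, topic_anomaly=0.31))      # → False
```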

Credibility analysis is another important layer. Instead of relying solely on LDA to detect suspicious topics, systems can assess the source credibility. By factoring in the reputation of the news outlet, social media influencers, or bloggers, systems can refine the results and prioritize content from trusted sources while flagging dubious ones.

LDA for Social Media Misinformation Detection

Social media platforms are fertile ground for the spread of misinformation. LDA can be an excellent tool for analyzing social media content, where fake news often spreads in viral patterns. On platforms like Twitter, users may share or retweet content without verifying its credibility, allowing misinformation to propagate rapidly.

By applying LDA to tweets, posts, and hashtags, researchers can identify which topics are trending and isolate emerging clusters of fake news. For instance, LDA could help detect bots or troll farms that are seeding fake stories by analyzing the language patterns and topics they promote.

Hashtag analysis using LDA can further pinpoint misinformation campaigns that focus on key phrases or buzzwords. This is especially useful during global events, where misinformation campaigns often target trending hashtags or create their own to mislead the public.
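Individual posts are often too short for LDA to find reliable word co-occurrences. A common workaround pools posts that share a hashtag into one pseudo-document per hashtag and runs LDA on the pools. The following sketch shows only the pooling step. The example posts and hashtags are invented for illustration, and the simple whitespace tokenization is an assumption.

```python
import re
from collections import defaultdict

HASHTAG = re.compile(r"#(\w+)")

def pool_by_hashtag(posts):
    """Merge posts sharing a hashtag into one pseudo-document per hashtag.

    Hashtags are lower-cased and stripped from the text; a post with several
    hashtags contributes its words to each pool; posts without one are skipped.
    """
    pools = defaultdict(list)
    for text in posts:
        tags = {tag.lower() for tag in HASHTAG.findall(text)}
        words = HASHTAG.sub(" ", text).lower().split()
        for tag in tags:
            pools[tag].extend(words)
    return dict(pools)

# Hypothetical posts.
posts = [
    "Miracle cure suppressed! #CureCover",
    "They hide the cure #curecover #plandemic",
    "New vaccine data released #health",
]
for tag, words in sorted(pool_by_hashtag(posts).items()):
    print(tag, words)
```

Each pooled word list can then be passed to an LDA implementation as one document.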

Dynamic Topic Shifts: Tracking Evolving Misinformation

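One of the strengths of LDA-based analysis is its ability to track topic shifts over time. Standard LDA has no notion of time, so this is done either by applying one model to successive time windows or by using extensions such as dynamic topic models. In the context of fake news detection, this ability is crucial because misinformation campaigns evolve. What starts as a false claim may expand into an entire web of conspiracy theories or new narratives.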

LDA models can track how certain topics emerge, gain traction, and eventually fade. By monitoring these shifts, fact-checkers and researchers can anticipate the next wave of misinformation before it hits a larger audience.

For example, LDA can reveal how a small conspiracy theory grows into a larger fake news phenomenon by identifying key changes in the way certain words or phrases are used over time. This allows organizations to stay ahead of the curve by responding before the misinformation reaches its peak.
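Suppose the same topic's word distribution is available at two points in time, for example from a dynamic topic model or from models whose topics have been aligned across windows. The words gaining probability fastest then show how the narrative is changing. The vocabulary and probabilities are hypothetical.

```python
def rising_words(phi_before, phi_after, vocab, top_n=3):
    """Words whose probability within ONE topic grew most between two periods.

    phi_before / phi_after: that topic's word distributions over the same
    vocabulary, taken from time-aligned models (an assumption of this sketch).
    """
    gains = sorted(((after - before, word) for before, after, word
                    in zip(phi_before, phi_after, vocab)), reverse=True)
    return [word for gain, word in gains[:top_n] if gain > 0]

# Hypothetical word distributions for one topic, a month apart.
vocab = ["vaccine", "trial", "chip", "tracking", "safety"]
before = [0.40, 0.30, 0.05, 0.05, 0.20]
after = [0.30, 0.10, 0.30, 0.20, 0.10]
print(rising_words(before, after, vocab))  # → ['chip', 'tracking']
```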

Leveraging LDA with Machine Learning for Accuracy

While LDA excels at identifying topics, its ability to combat misinformation increases significantly when combined with machine learning algorithms. Machine learning classifiers like Support Vector Machines (SVMs) or Random Forests can use the topic distributions generated by LDA as features to build more accurate fake news detection systems.

For instance, after LDA generates topics, machine learning models can learn to distinguish between patterns typical of fake news and those of credible news. This combination increases accuracy because the machine learning model can make more nuanced distinctions based on patterns that go beyond just topic similarity.
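The following dependency-free sketch uses LDA topic distributions as classifier features. It swaps in plain logistic regression trained by gradient descent in place of the SVMs or Random Forests named above. scikit-learn's SVC and RandomForestClassifier would accept the same feature rows. The topic mixtures, labels, and training settings are hypothetical.

```python
import math

def predict_proba(weights, bias, x):
    """Sigmoid of the linear score: estimated probability that x is fake."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(features, labels, lr=0.5, epochs=500):
    """Batch gradient-descent logistic regression (1 = fake, 0 = credible)."""
    n, dim = len(features), len(features[0])
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = predict_proba(weights, bias, x) - y  # d(log loss)/dz
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

# Hypothetical LDA topic mixtures (features) with known labels.
thetas = [[0.80, 0.10, 0.10], [0.70, 0.20, 0.10], [0.75, 0.15, 0.10],
          [0.10, 0.20, 0.70], [0.20, 0.10, 0.70], [0.15, 0.15, 0.70]]
labels = [0, 0, 0, 1, 1, 1]
weights, bias = train_logistic(thetas, labels)
print(round(predict_proba(weights, bias, [0.05, 0.05, 0.90]), 2))
```

In a real system, the topic features would typically be concatenated with other signals before training, and the model would be evaluated on held-out articles.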

Moreover, ensemble methods, which combine multiple machine learning models, can further improve detection by integrating sentiment analysis, source credibility scores, and topic models into a single system. This comprehensive approach helps the system capture more of the complexity of fake news stories, which tends to produce more reliable results.
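A simple ensemble of this kind is soft voting. Each component (a topic-based classifier, a sentiment model, a source-credibility score) outputs a probability that an item is fake, and the system takes a weighted average. The component names, scores, and weights are hypothetical. In practice the weights would be tuned or learned on validation data.

```python
def soft_vote(scores, weights):
    """Weighted average of per-component probabilities that an item is fake."""
    total = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total

# Hypothetical outputs for one article, each already scaled to [0, 1].
scores = {
    "topic_classifier": 0.82,  # classifier trained on LDA topic features
    "sentiment": 0.64,         # emotional-intensity model
    "source_risk": 0.90,       # 1 - source credibility score
}
weights = {"topic_classifier": 0.5, "sentiment": 0.2, "source_risk": 0.3}
print(round(soft_vote(scores, weights), 3))  # → 0.808
```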

Case Studies: LDA in Action Against Misinformation

Several real-world examples highlight how LDA has been successfully used to detect and combat fake news. One significant case involved the use of LDA for election misinformation monitoring. Researchers applied LDA to social media data during the 2016 U.S. Presidential Election, where they uncovered distinct topics around conspiracy theories and disinformation campaigns targeting political candidates. By isolating these emerging topics early, fact-checkers were able to debunk false claims before they went viral.

Similarly, during the COVID-19 pandemic, LDA was used to monitor the spread of health-related misinformation. Health organizations and academic researchers applied LDA models to analyze large datasets of social media posts and news articles. They found recurring themes around false cures, vaccine misinformation, and conspiracy theories about the virus’s origin. This analysis allowed them to quickly respond to these narratives by issuing fact-based corrections and guiding public awareness efforts.

Another example is the #Pizzagate conspiracy theory, where researchers applied LDA to thousands of posts and articles. LDA revealed that certain keywords and topics associated with the conspiracy were being amplified by bots and misinformation networks. This insight helped researchers understand how the conspiracy was being spread and provided a roadmap for slowing its viral momentum.

Ethical Considerations in Using AI to Combat Fake News

While the use of LDA and machine learning to combat misinformation has tremendous potential, it also raises ethical questions. One major concern is the potential for censorship. Automatically flagging and removing content based on topic patterns could unintentionally silence legitimate voices, particularly those discussing controversial but factual topics.

Another ethical issue is algorithmic bias. If LDA models are trained on biased datasets, they could inadvertently reinforce those biases, disproportionately targeting certain viewpoints or communities. Ensuring that the data used to train these models is diverse and representative is critical to preventing misuse.

Moreover, privacy concerns arise when applying LDA to social media content. Even though LDA doesn’t rely on personal data, its application to vast amounts of publicly shared content can make people feel surveilled. It’s important that tools used for misinformation detection respect user privacy while balancing the need for public safety and truth.

Tools and Platforms for LDA in Fake News Detection

Several platforms and tools are available for implementing LDA in misinformation detection:

- Gensim: A Python library that provides an easy-to-use interface for applying LDA to text data. It’s widely used in academic and industry settings for topic modeling on large datasets, making it a reliable choice for analyzing fake news.

- MISP (originally the Malware Information Sharing Platform): MISP is a platform for sharing structured threat intelligence rather than a topic-modeling tool. It is traditionally used for cybersecurity, but it can also be used to share information about misinformation campaigns, so patterns identified with LDA can be recorded and exchanged through it.

- Hugging Face: Primarily known for transformer models, Hugging Face provides pretrained text classifiers and embedding models that can be used alongside LDA topic features for analyzing social media and news content.

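- Google BigQuery: BigQuery is a powerful platform for storing and analyzing large datasets, including data streamed in near real-time. It does not offer LDA as a built-in model, but it can hold and query both the raw text and the topic distributions produced by an LDA model. That makes it useful for tracking topic changes and misinformation patterns across vast amounts of text data.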

The Future of LDA in Combating Misinformation

As misinformation continues to evolve, so too must the tools we use to fight it. The future of LDA in this space will likely involve deeper integration with neural networks and natural language processing (NLP) models. Neural topic models, which combine deep learning with traditional LDA, may provide more sophisticated topic analysis by capturing complex relationships between words and topics that simple probabilistic models might miss.

We can also expect to see more focus on real-time misinformation tracking. With the help of online LDA and streaming topic models, organizations will be able to stay one step ahead of misinformation campaigns by constantly updating their models to reflect the latest data trends.
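The core of online LDA, as described by Hoffman et al., is a stochastic update. After each mini-batch t, the topic-word parameters lambda are blended with an estimate lambda_hat computed from that mini-batch alone, using a step size rho_t = (tau0 + t)^(-kappa). The following sketch shows only this blending step. Computing lambda_hat is omitted, and the default values and function names are illustrative. In scikit-learn, the two constants appear as learning_offset and learning_decay on LatentDirichletAllocation, which also provides partial_fit for mini-batches. In Gensim's LdaModel they appear as offset and decay.

```python
def learning_rate(t, tau0=1.0, kappa=0.7):
    """Step size rho_t = (tau0 + t) ** -kappa for mini-batch number t (from 0).

    Hoffman et al. use kappa in (0.5, 1] to ensure convergence; a larger tau0
    slows down the earliest updates.
    """
    return (tau0 + t) ** -kappa

def blend_topics(lam, lam_hat, t, tau0=1.0, kappa=0.7):
    """Online update lambda <- (1 - rho_t) * lambda + rho_t * lambda_hat.

    lam: current K x V topic-word variational parameters.
    lam_hat: estimate from the newest mini-batch alone (its E-step is omitted).
    """
    rho = learning_rate(t, tau0, kappa)
    return [[(1 - rho) * a + rho * b for a, b in zip(row, row_hat)]
            for row, row_hat in zip(lam, lam_hat)]

# Hypothetical one-topic, two-word parameters.
lam = [[1.0, 1.0]]
lam_hat = [[3.0, 5.0]]
print(blend_topics(lam, lam_hat, t=3, tau0=1.0, kappa=0.5))  # → [[2.0, 3.0]]
```

Because rho_t shrinks as t grows, later mini-batches nudge the topics rather than replace them. The model therefore adapts to new narratives without being retrained from scratch.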

Cross-lingual LDA models are also a promising area of development. As misinformation spreads globally, tools that can handle multilingual datasets will be crucial for identifying patterns across different languages and regions.

Finally, the increasing collaboration between AI developers, journalists, and policy makers will shape the ethical frameworks and regulations for using LDA in fighting fake news, ensuring these technologies are applied responsibly and transparently.

LDA’s potential in fake news detection and misinformation combat is significant, but it must be combined with other techniques and used thoughtfully to address both the technical and ethical challenges that come with it.

Resources for Understanding and Implementing LDA in Fake News Detection

- Gensim for Topic Modeling

Gensim is a widely used Python library for performing topic modeling, including LDA. It’s a powerful tool for applying LDA to large text datasets and is ideal for identifying topics in news articles or social media data.

- Scikit-Learn: LDA Implementation

Scikit-learn provides an easy-to-use LDA implementation suited to small and medium datasets. It is the LatentDirichletAllocation class in sklearn.decomposition, not to be confused with LinearDiscriminantAnalysis, which shares the abbreviation. It can be used to get started with topic modeling for fake news detection and is well-suited for academic and experimental purposes.

- Online LDA by Matthew Hoffman

This foundational research paper on online LDA (Hoffman, Blei, and Bach, "Online Learning for Latent Dirichlet Allocation") explains how LDA models can be updated incrementally as new batches of documents arrive. It’s highly useful for understanding how to track topics in fast-moving data streams like social media.

- Introduction to LDA by Blei, Ng, and Jordan

The original paper introducing Latent Dirichlet Allocation (Journal of Machine Learning Research, 2003) provides the theoretical background needed to understand the model before applying it to fake news detection.

- COVID-19 Misinformation Detection with LDA

This research paper covers how LDA was applied to detect COVID-19 misinformation by analyzing trending topics on social media and other platforms.

- Detecting Fake News on Social Media Using Machine Learning

A comprehensive research study that explores the role of LDA in detecting fake news when combined with machine learning techniques like sentiment analysis and classification models.

- Hugging Face for Text Classification

Hugging Face offers pretrained transformer models and libraries for text classification, which can be combined with LDA topic features for misinformation detection on social media platforms.