Understanding the Role of Reward Shaping in PPO

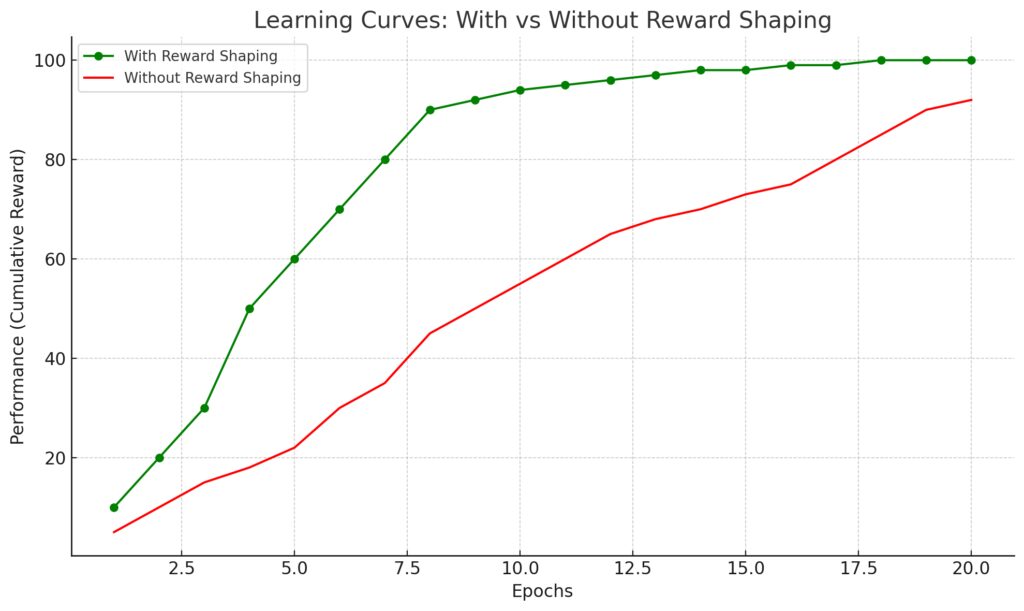

In Proximal Policy Optimization (PPO), reward shaping plays an essential role in steering agents toward desired behaviors.

Shaping supplements the environment’s reward with additional signals that help an agent connect its actions to outcomes in dynamic, complex environments. Done well, it can significantly accelerate learning and improve performance.

However, reward shaping isn’t without its difficulties. Finding the right balance between guiding the agent and letting it learn independently is crucial.

In highly complex environments, such as robotics simulations or strategy-based video games, this challenge becomes even more evident. Designers must find the sweet spot where rewards drive effective learning without distorting the ultimate objective.

The Balance Between Exploration and Exploitation

One of the most delicate challenges in reward shaping is achieving a good balance between exploration and exploitation. On the one hand, exploration encourages the agent to discover new behaviors and strategies. On the other, exploitation pushes the agent to maximize rewards by leveraging what it has already learned.

Poorly shaped rewards can lead to agents overly exploiting what they know, failing to explore potential improvements. In contrast, too much emphasis on exploration can result in wasted efforts, with agents trying out random strategies without clear direction.

Reward shaping must delicately manage this trade-off, especially in complex environments where long-term goals often outweigh immediate gains.

Delayed Rewards and Long-Term Goals

In some tasks, rewards are not immediate, creating an additional challenge for the agent. Delayed rewards are common in scenarios where the overall objective is spread across multiple actions or stages. For example, consider a task in autonomous driving—navigating through a series of intersections to reach a final destination.

In these cases, reward shaping must account for the entire sequence of decisions, not just the short-term outcomes. If the agent gets too focused on rewards tied to immediate steps, it may ignore the broader, long-term strategy required to achieve success.

Fine-tuning objectives to reflect intermediate progress without overshadowing the final goal becomes a key challenge.

Avoiding Reward Hacking and Unintended Behaviors

One of the more notorious pitfalls of reward shaping is the potential for reward hacking. Agents are highly goal-driven, and they may find ways to exploit the reward structure in ways the designer didn’t anticipate. For instance, an agent learning to walk might discover that stumbling forward repeatedly still earns it the same reward as walking properly, leading to strange and unintended behaviors.

Avoiding this requires carefully crafted reward systems that encourage the correct behavior without introducing loopholes. Multiple rounds of testing and adjustment are often needed to fine-tune objectives, ensuring the agent learns in a way that aligns with the designer’s true goals.

Scaling Reward Shaping in Multi-Agent Systems

Reward shaping becomes even more complex in multi-agent environments, where agents must consider not only their own goals but also how their actions affect others. This is particularly evident in cooperative tasks, where agents work together toward a common goal, and in competitive tasks, where they work against one another.

In cooperative settings, shaping rewards that promote collaboration without sacrificing individual learning is tricky. If the rewards are too group-focused, individual agents might fail to learn their unique strengths. In competitive environments, the challenge is to balance fairness with aggression, ensuring that agents learn strategies that work within the context of competitive games or other multi-agent ecosystems.

Understanding the Role of Reward Shaping in PPO

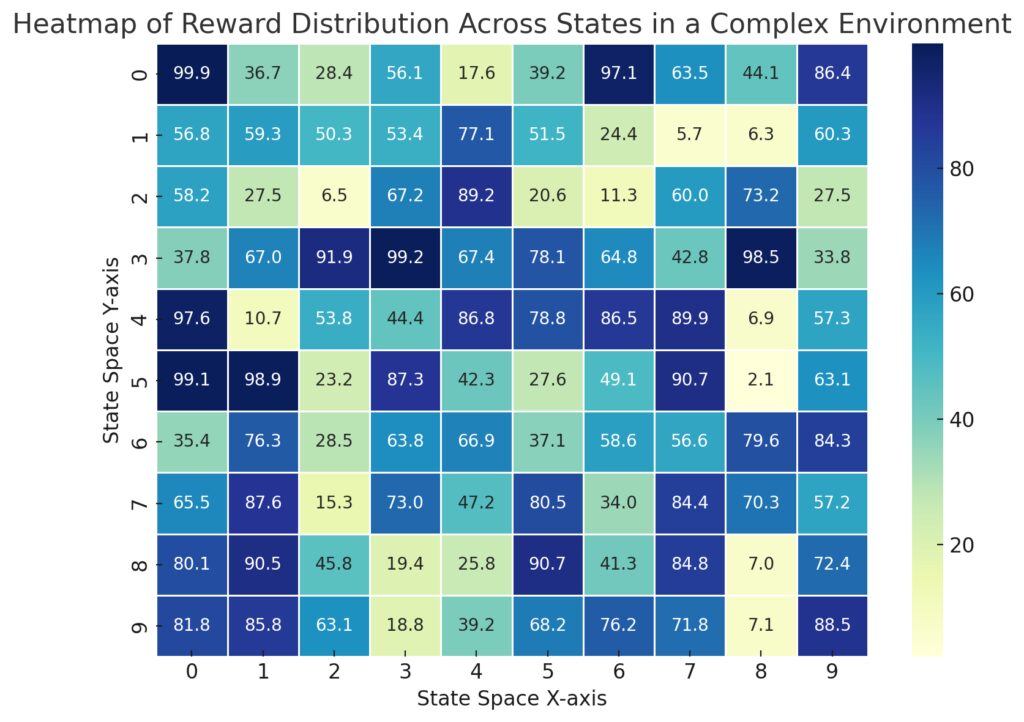

In Proximal Policy Optimization (PPO), reward shaping is essential for guiding agents toward desired behaviors within complex environments. It acts as a scaffold between an agent’s learning process and the goals it’s supposed to achieve. Shaping rewards effectively can speed up learning, but there are considerable challenges in getting it right. Misalignment between rewards and objectives can derail the agent’s learning or make it pursue undesirable strategies.

As environments grow more intricate—whether in robotics simulations, strategy-based video games, or other multi-task setups—reward shaping becomes trickier.

The rewards must strike a fine balance: too sparse, and the agent will struggle to learn; too dense, and the agent may become reliant on short-term gains, missing the larger objective.

The Balance Between Exploration and Exploitation

A core challenge in reward shaping is achieving the right balance between exploration and exploitation. Exploration drives the agent to discover new behaviors and strategies, which is crucial for learning in dynamic environments. Meanwhile, exploitation refers to refining what has already been learned to maximize immediate rewards.

However, this trade-off isn’t easy to manage. If the reward system pushes too heavily toward exploitation, the agent may miss out on more optimal strategies that could be found through exploration. Conversely, if exploration is overemphasized, the agent may waste time on unproductive behaviors.

In complex environments like those seen in robotics or multi-level gaming, designing rewards that foster both exploration and exploitation is key to maintaining agent progress.
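Besides the reward itself, PPO implementations commonly manage this trade-off with an entropy bonus added to the training objective, which rewards the policy for keeping its action distribution spread out. The sketch below is a minimal illustration of that idea for a discrete action space. The function names and the coefficient value are assumptions chosen for the example, not part of any particular library.

```python
import math


def categorical_entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def objective_with_entropy(clipped_surrogate, probs, entropy_coef=0.01):
    """Objective to maximize: surrogate term plus a weighted entropy bonus.

    entropy_coef is an illustrative value; larger values favor exploration.
    """
    return clipped_surrogate + entropy_coef * categorical_entropy(probs)


uniform = [0.25, 0.25, 0.25, 0.25]  # exploratory policy: high entropy
peaked = [0.97, 0.01, 0.01, 0.01]   # near-deterministic policy: low entropy

print(categorical_entropy(uniform), categorical_entropy(peaked))
```

Raising the coefficient pushes the agent toward exploration, and lowering it lets the shaped reward dominate, so the two are usually tuned together.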

Delayed Rewards and Long-Term Objectives

Certain environments introduce delayed rewards, where the impact of an action is not immediately visible. This can pose significant challenges for PPO agents. If the agent doesn’t receive enough feedback from intermediate actions, it may fail to learn how these individual steps contribute to the ultimate goal.

For example, in tasks like autonomous navigation, the final reward might be reaching the destination safely. However, each decision made along the way contributes to that success. Designing intermediate rewards that guide the agent through the process without leading to fixation on short-term successes is crucial. The fine-tuning process must emphasize long-term strategic thinking over immediate rewards to align the agent with broader objectives.
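One established way to add intermediate rewards without changing which policy is optimal is potential-based reward shaping, a technique the article does not name but which fits the navigation example. The sketch below assumes a hypothetical grid world with `(x, y)` positions and uses negative Manhattan distance to the goal as the potential. Both choices are illustrative.

```python
def potential(position, goal):
    """Hypothetical potential: negative Manhattan distance to the goal."""
    return -(abs(goal[0] - position[0]) + abs(goal[1] - position[1]))


def shaped_reward(env_reward, state, next_state, goal, gamma=0.99):
    """Environment reward plus the shaping term gamma * phi(s') - phi(s)."""
    shaping = gamma * potential(next_state, goal) - potential(state, goal)
    return env_reward + shaping


goal = (3, 3)
toward = shaped_reward(0.0, (0, 0), (1, 0), goal)  # step closer to the goal
away = shaped_reward(0.0, (1, 0), (0, 0), goal)    # step back from the goal

print(toward, away)
```

Steps toward the goal earn a small positive signal and steps away earn a negative one, while the final reward from the environment is left untouched.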

Avoiding Reward Hacking and Unintended Outcomes

Reward hacking is a common problem in reinforcement learning. When an agent finds loopholes in the reward system, it may adopt strategies that maximize its reward in unintended ways. This can lead to bizarre behaviors, where the agent is technically meeting the objectives, but not in the way the designer intended.

For instance, an agent trained to walk efficiently might figure out that constantly stumbling forward technically achieves movement, earning the same reward as walking properly. To combat this, reward shaping must be carefully designed to encourage the intended behavior while closing off potential loopholes. This often requires multiple testing iterations, where developers observe, adjust, and re-calibrate rewards to align with the designer’s goals.
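The walking example can be made concrete with a small sketch. It assumes a hypothetical per-step record with forward velocity, torso height, and a fall flag. The thresholds and penalty sizes are made-up values that would need tuning against observed behavior.

```python
from dataclasses import dataclass


@dataclass
class WalkerStep:
    forward_velocity: float  # metres per second toward the target
    torso_height: float      # metres above the ground
    fell: bool               # True if the torso touched the ground


def naive_reward(step):
    """Rewards forward motion only, so stumbling pays as well as walking."""
    return step.forward_velocity


def guarded_reward(step, min_height=0.8, posture_bonus=0.5, fall_penalty=5.0):
    """Forward motion plus an upright bonus, minus a penalty for falling."""
    reward = step.forward_velocity
    if step.torso_height >= min_height:
        reward += posture_bonus
    if step.fell:
        reward -= fall_penalty
    return reward


walking = WalkerStep(forward_velocity=1.0, torso_height=1.0, fell=False)
stumbling = WalkerStep(forward_velocity=1.0, torso_height=0.4, fell=True)

print(naive_reward(walking), naive_reward(stumbling))
print(guarded_reward(walking), guarded_reward(stumbling))
```

The naive version cannot tell the two behaviors apart. The guarded version separates them, although each added term is itself a new surface the agent might exploit, which is why repeated observation is still needed.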

Scaling Reward Shaping in Multi-Agent Systems

Multi-agent environments introduce another layer of complexity in reward shaping. Each agent must consider not only its rewards but also how its actions affect others. In cooperative settings, agents must work together, while in competitive settings, they’re trying to outmaneuver one another.

In cooperative multi-agent settings, shaping rewards that promote teamwork without overshadowing individual learning paths is challenging. In competitive settings, on the other hand, agents need to learn how to balance pursuing their own success against disrupting opponents’ strategies.

Balancing these rewards requires understanding the individual roles within the group dynamic and adjusting the rewards to maintain healthy competition or collaboration.
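One simple way to express the trade-off between group and individual incentives in a cooperative setting is to blend each agent's own reward with the team average. This is a sketch under assumptions: the `team_weight` parameter and the use of a plain mean are illustrative choices, not a method taken from the article.

```python
def blended_rewards(individual_rewards, team_weight=0.5):
    """Mix each agent's own reward with the team mean.

    team_weight = 0.0 gives purely individual rewards;
    team_weight = 1.0 gives every agent the same team reward.
    """
    if not 0.0 <= team_weight <= 1.0:
        raise ValueError("team_weight must be between 0 and 1")
    team_mean = sum(individual_rewards) / len(individual_rewards)
    return [
        (1.0 - team_weight) * reward + team_weight * team_mean
        for reward in individual_rewards
    ]


print(blended_rewards([1.0, 0.0, 2.0], team_weight=0.5))
```

A weight near 1 risks the problem described above, where individual agents get little signal about their own contribution. A weight near 0 gives them no incentive to cooperate.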

The Impact of Sparse Rewards on Learning

In many complex environments, rewards can be sparse, meaning the agent receives feedback only occasionally. This poses a significant challenge for PPO agents, as sparse rewards slow down the learning process. Without frequent signals, it becomes difficult for the agent to understand how its actions lead to positive outcomes.

In these cases, fine-tuning intrinsic rewards—internal motivators based on the agent’s own progress—can keep the learning process alive. This method helps bridge the gap between sparse external rewards and the agent’s need for consistent feedback.

For example, an agent navigating through a maze may receive an external reward only when it reaches the exit, but intrinsic rewards for progressing in the right direction give it a steady signal in the meantime and help it learn more effectively.

Designing effective intrinsic rewards can prevent agents from stalling in their learning process, allowing them to make incremental progress even when external signals are limited.
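A common form of intrinsic reward is a novelty bonus that shrinks as a state is visited more often. Note that this is a different signal from the progress-based bonus in the maze example: it rewards visiting unfamiliar states rather than moving toward the exit. The sketch assumes states are hashable (for instance, grid coordinates), and the class name and `scale` value are hypothetical.

```python
import math
from collections import defaultdict


class CountBonus:
    """Intrinsic bonus of scale / sqrt(visit count) for each state."""

    def __init__(self, scale=0.1):
        self.scale = scale
        self.visits = defaultdict(int)

    def __call__(self, state):
        self.visits[state] += 1
        return self.scale / math.sqrt(self.visits[state])


bonus = CountBonus(scale=0.1)


def total_reward(env_reward, state):
    """Sparse environment reward plus the intrinsic novelty bonus."""
    return env_reward + bonus(state)


# The same maze cell pays less each time it is revisited.
print([round(total_reward(0.0, (0, 0)), 3) for _ in range(3)])
```

Because the bonus decays with repeated visits, it fades into the background once the agent has covered the maze, leaving the external reward to drive the final policy.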

Tackling Uncertainty in Reward Functions

In real-world applications, environments often contain uncertainty or stochastic elements, where outcomes may vary unpredictably. These uncertain environments make reward shaping especially challenging. For instance, in a financial trading simulation, market conditions can fluctuate wildly, meaning that the same action may yield very different results depending on external factors.

To cope with this, it’s essential to build robust reward structures that account for environmental variability. One strategy is to reward agents for learning flexible behaviors that adapt well to change, rather than rigid strategies that perform only under ideal conditions. Fine-tuning rewards to encourage resilience and adaptability can help agents thrive in stochastic environments where certainty is a luxury.

Designers may also introduce noise into the reward system during training to prepare the agent for real-world uncertainty. This approach helps agents learn to cope with inconsistent feedback while still progressing toward their objectives.
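Reward noise injection can be sketched in a few lines. The example assumes zero-mean Gaussian noise with a hypothetical standard deviation `sigma`. A seeded generator is used so that runs are repeatable.

```python
import random


def noisy_reward(reward, rng, sigma=0.1):
    """Return the reward perturbed by zero-mean Gaussian noise."""
    return reward + rng.gauss(0.0, sigma)


rng = random.Random(0)  # seeded for reproducibility
samples = [noisy_reward(1.0, rng) for _ in range(10_000)]
mean = sum(samples) / len(samples)

print(min(samples), max(samples), mean)
```

Because the noise has zero mean, the expected reward is unchanged. Only the variance of the feedback increases, and `sigma` controls how much inconsistency the agent has to tolerate.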

Shaping Rewards for Hierarchical Tasks

In environments where tasks are hierarchical—involving multiple steps or stages—reward shaping becomes even more intricate. Consider an agent tasked with assembling a product on a factory line. The task requires several sequential actions, each building on the previous one. If the agent completes one stage correctly but fails in the next, overall progress can be hindered.

In such cases, breaking down rewards into sub-objectives becomes vital. Each step of the task should be tied to its own rewards, allowing the agent to focus on individual goals without losing sight of the larger objective. Fine-tuning rewards in hierarchical tasks ensures that the agent understands not only how to complete individual steps but also how those steps connect to the bigger picture.
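The factory-line example could be sketched as a set of ordered stage rewards plus a larger bonus for finishing the whole task. The stage names and reward values below are hypothetical and serve only to illustrate the structure.

```python
# Hypothetical assembly stages, listed in the order they must be completed.
STAGE_REWARDS = {
    "pick_part": 1.0,
    "align_part": 1.0,
    "fasten_part": 1.0,
}
COMPLETION_BONUS = 10.0  # kept larger than all stage rewards combined


def episode_reward(completed_stages):
    """Sum stage rewards in order, stopping at the first missed stage."""
    total = 0.0
    for stage, reward in STAGE_REWARDS.items():
        if stage not in completed_stages:
            break
        total += reward
    else:
        # The loop finished without a break, so every stage was completed.
        total += COMPLETION_BONUS
    return total


print(episode_reward({"pick_part"}))
print(episode_reward({"pick_part", "align_part", "fasten_part"}))
```

Keeping the completion bonus larger than the sum of the stage rewards is one way to stop the agent from treating an early stage as the goal in itself.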

Dynamic Environments and Evolving Rewards

Many environments evolve over time, requiring agents to adapt to new conditions. Dynamic environments—like online multiplayer games or evolving ecosystems—demand flexible reward systems that can shift alongside changing goals or circumstances. Static reward structures may fail in such settings, as they don’t adjust to the evolving nature of the tasks.

One approach is to introduce dynamic rewards that change based on the agent’s progress or the current state of the environment. For example, in a game setting, early rewards might focus on basic skills like movement, while later rewards shift to more advanced objectives, like strategic decision-making.

Fine-tuning rewards in this way ensures that agents remain challenged and continue to learn even as the environment evolves.

Designing reward structures that evolve over time can significantly improve performance in dynamic environments, as agents become better equipped to handle new challenges as they arise.
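The game example, where early rewards favor movement and later rewards favor strategy, can be expressed as a weight schedule. This sketch assumes a hypothetical `progress` value between 0 and 1 (for instance, the fraction of training completed) and a linear shift between the two components.

```python
def reward_weights(progress):
    """Shift weight linearly from movement to strategy as progress grows."""
    progress = min(max(progress, 0.0), 1.0)  # clamp to [0, 1]
    return {"movement": 1.0 - progress, "strategy": progress}


def dynamic_reward(movement_score, strategy_score, progress):
    """Weighted sum of the two components under the current schedule."""
    weights = reward_weights(progress)
    return (weights["movement"] * movement_score
            + weights["strategy"] * strategy_score)


for progress in (0.0, 0.5, 1.0):
    print(progress, dynamic_reward(2.0, 4.0, progress))
```

A linear schedule is only one option. A step schedule tied to measured skill would fit the same interface.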

Ethical Considerations in Reward Shaping

When developing agents for real-world applications, ethical considerations in reward shaping become paramount. In fields like healthcare or autonomous driving, agents must not only perform efficiently but also adhere to strict safety and ethical standards. Poorly shaped rewards could lead agents to prioritize short-term gains over important factors like human safety or fairness.

For example, in the development of autonomous vehicles, agents must learn to navigate traffic safely, but if rewards are too focused on efficiency, the vehicle might make risky decisions to save time. Fine-tuning rewards to incorporate ethical guidelines ensures that agents learn in ways that align with societal values, not just technical objectives.

This requires careful collaboration between engineers, ethicists, and domain experts to build reward systems that emphasize both task success and ethical responsibility, creating a balance between optimization and safety.

Overcoming the Challenges of Reward Redundancy

In certain cases, reward shaping can unintentionally introduce redundancy, where multiple rewards are given for similar or overlapping tasks. This can confuse the agent, causing it to become overly fixated on easy, repetitive actions to maximize rewards, rather than truly learning and improving its performance.

For example, if an agent is rewarded for reaching a destination and also for minimizing time, it is effectively paid twice for the same behavior and might focus solely on the shortest path, ignoring other important factors like safety or energy use. This kind of redundancy in rewards dilutes the learning process. Fine-tuning is necessary to remove these overlaps and ensure that each reward targets a distinct and meaningful aspect of the task.

To avoid redundancy, developers must carefully evaluate which behaviors should be rewarded and how these rewards can complement each other, rather than compete or overlap.
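One rough diagnostic for overlap is to log each reward component per episode and check how strongly pairs of components correlate. This is offered as an assumption about how such an audit might be done, not as a method from the article, and the logged values below are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences.

    Returns 0.0 if either sequence is constant (a convention for this sketch).
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0.0 or spread_y == 0.0:
        return 0.0
    return cov / (spread_x * spread_y)


# Hypothetical per-episode logs of two reward components.
arrival_reward = [1.0, 2.0, 3.0, 4.0]
time_reward = [2.0, 4.0, 6.0, 8.0]

print(pearson(arrival_reward, time_reward))
```

A correlation close to 1 suggests the two terms reward the same behavior and one of them could be dropped or redefined. A low correlation does not prove the terms are independent, so this is a starting point rather than a test.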

Tailoring Rewards for Generalization vs. Specialization

Another key challenge in reward shaping for PPO agents is the tension between generalization and specialization. Generalized agents are more adaptable to various tasks and environments, while specialized agents excel at very specific, narrowly defined tasks.

In complex environments like robotics or autonomous systems, it can be tempting to reward specialized behavior that works well in a particular situation. However, this may lead to poor performance when conditions change. If agents are too specialized, they may struggle to generalize their knowledge to new or slightly different scenarios.

Fine-tuning rewards to strike a balance between generalization and specialization ensures that agents are both adaptable and capable of excelling in specific contexts. This can be achieved by incorporating varied tasks and environments during training, rewarding adaptability alongside task completion.

The Role of Human Intuition in Reward Shaping

Despite advances in artificial intelligence and reinforcement learning, human intuition still plays a crucial role in reward shaping. Designers often need to anticipate how an agent will interpret rewards and adjust them based on observed behavior.

While algorithms like PPO can optimize policies effectively, they still rely on humans to craft the underlying reward functions. Designers must constantly test and refine these systems to ensure that the agent behaves as intended. In many cases, it’s a process of trial and error—observing how the agent interacts with the environment and making adjustments to the reward structure.

Leveraging domain expertise is essential when fine-tuning rewards, especially in specialized fields like healthcare, finance, or autonomous systems, where nuanced understanding is necessary to guide agents appropriately.

Reward Shaping for Multi-Objective Tasks

In multi-objective tasks, agents are required to balance competing goals, making reward shaping particularly difficult. For instance, in autonomous drones, agents may need to optimize both speed and stability—two objectives that can sometimes conflict. If the reward structure heavily emphasizes one objective, the agent may neglect the other, leading to imbalanced behavior.

Fine-tuning reward shaping in multi-objective tasks involves creating a hierarchical or weighted reward system that allows the agent to prioritize based on the context. For example, in an emergency, speed might take precedence, whereas in normal conditions, stability would be the primary focus. Designers need to carefully craft these rewards to reflect the dynamic nature of the task at hand, ensuring the agent can seamlessly switch priorities based on the environment.
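A context-dependent weighted reward for the drone example might look like the sketch below. The mode names, the weights, and the assumption that both scores are normalized to the range 0 to 1 are illustrative.

```python
# Hypothetical weights per operating mode; each pair sums to 1.
WEIGHTS = {
    "normal": {"speed": 0.2, "stability": 0.8},
    "emergency": {"speed": 0.8, "stability": 0.2},
}


def drone_reward(speed_score, stability_score, mode="normal"):
    """Weighted sum of normalized speed and stability scores for the mode."""
    weights = WEIGHTS[mode]
    return (weights["speed"] * speed_score
            + weights["stability"] * stability_score)


fast_unstable = (1.0, 0.2)  # (speed_score, stability_score)
slow_stable = (0.3, 1.0)

for mode in WEIGHTS:
    print(mode, drone_reward(*fast_unstable, mode=mode),
          drone_reward(*slow_stable, mode=mode))
```

Under normal conditions the slow, stable flight scores higher. In an emergency the ranking flips, which is the priority switch the weighted scheme is meant to produce.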

Reward Shaping in Real-World, High-Stakes Environments

In high-stakes environments, like medical decision-making or financial trading, reward shaping takes on a new level of importance. Mistakes in these domains can have serious consequences, meaning that reward structures must be carefully calibrated to avoid risky or unethical behaviors.

Agents in these environments must prioritize accuracy, safety, and ethical considerations over efficiency. For example, a financial trading algorithm that seeks to maximize profits at all costs might inadvertently exploit loopholes or take unsustainable risks. Similarly, a medical AI agent needs to balance the speed of diagnosis with accuracy, ensuring it doesn’t misdiagnose due to a poorly calibrated reward system.

Fine-tuning these rewards requires continuous monitoring and adjustment to reflect both the goals of the system and the ethical boundaries within which it operates.

Relevant Resources for Understanding Reward Shaping in PPO

“Proximal Policy Optimization Algorithms”

Authored by John Schulman et al., this is the foundational paper that introduced PPO. It details the theoretical framework and implementation of PPO in complex environments.

Available at: arXiv PPO Paper

“Reward Shaping in Reinforcement Learning”

A comprehensive overview of how reward shaping can be used effectively to guide reinforcement learning agents toward desired behavior, outlining various strategies to avoid common pitfalls.

Available at: Springer Reward Shaping

OpenAI Baselines: PPO Implementation

The OpenAI Baselines repository includes a popular implementation of PPO in Python, making it an excellent resource for practical learning and experimentation with reward shaping techniques.

Available at: OpenAI Baselines

“Intrinsic Motivation in Reinforcement Learning”

This article explores how intrinsic rewards can be used to keep agents motivated in environments with sparse or delayed rewards, providing insights into how to fine-tune reward structures for long-term learning.

Available at: Intrinsic Motivation Research