In the world of machine learning and data science, we often hear about complex models that make impressive predictions and classifications. But have you ever wondered what fuels these models beneath the surface?

Enter latent variables—the hidden, unobservable factors that can make or break a model’s performance. While they don’t present themselves directly, they play a crucial role in understanding and improving how machine learning works.

In this article, we’ll break down the concept of latent variables, their role in machine learning, and how they influence the accuracy and interpretability of models.

What Are Latent Variables?

Latent variables are variables that are not directly observed but are inferred from other observed variables. They are the underlying structures or patterns that help explain the relationships within a dataset. Think of them as the hidden drivers that influence the behavior of the data without being explicitly recorded.

For example, in a study on mental health, observable variables might include responses to a survey about mood, sleep patterns, and energy levels. The latent variable here could be depression, which influences all these observed responses but isn’t directly measured.

Latent Variables in Everyday Life

To make it even clearer, let’s use a common analogy. Imagine you are trying to determine someone’s overall happiness (latent variable) by observing their daily habits like how often they smile, their interactions with friends, and their sleep schedule. These observable behaviors give clues to the hidden variable—happiness—that we can’t measure directly.

In machine learning, latent variables work similarly. They help models identify patterns that aren’t immediately visible but impact the results of predictive tasks.

The Role of Latent Variables in Machine Learning Models

Machine learning models, especially in unsupervised learning and deep learning, rely heavily on latent variables to make sense of complex datasets. Because a few latent variables can summarize the variation in many observed features, they give models a more abstract view of the data, which can help them generalize to new examples. Let’s explore where they come into play.

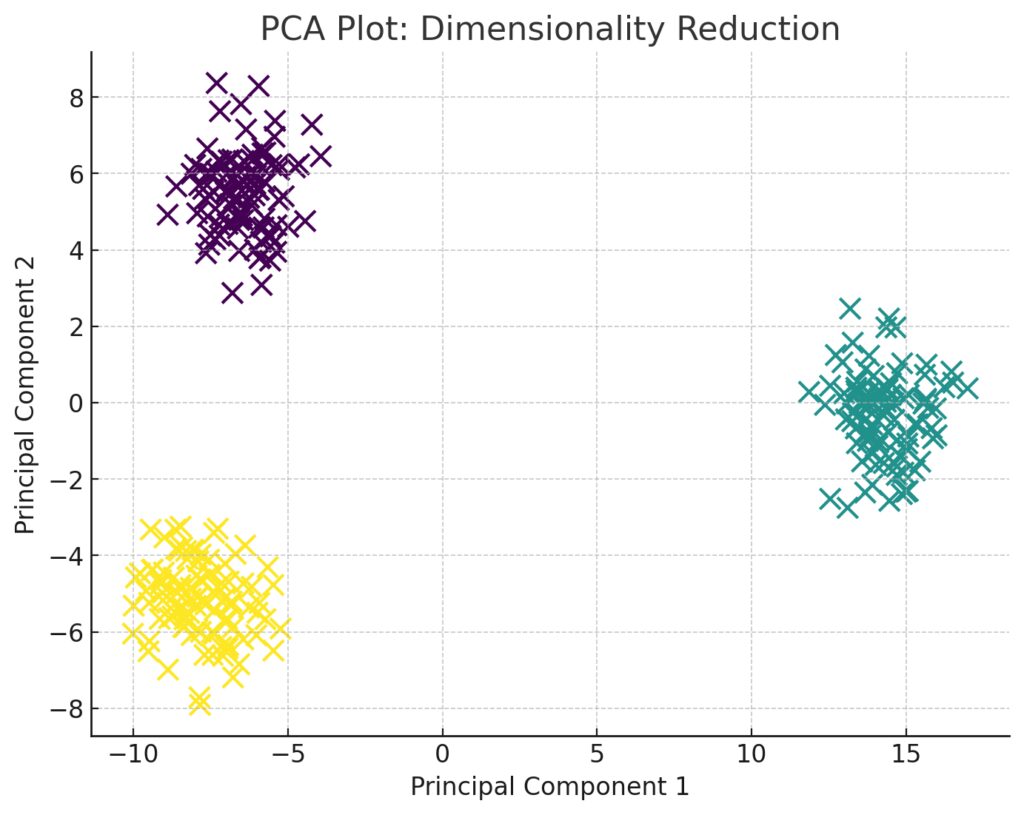

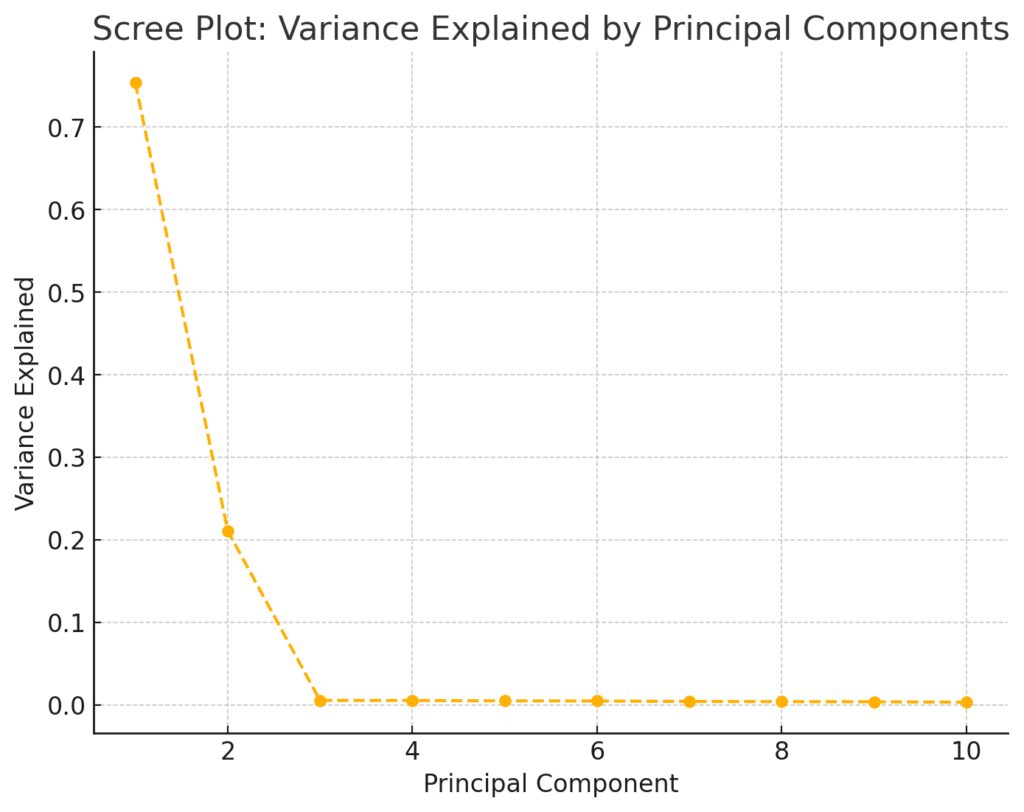

Dimensionality Reduction: Revealing Hidden Structure

In datasets with many features, such as images or text, reducing the number of variables while retaining meaningful patterns is essential. Principal Component Analysis (PCA) and Singular Value Decomposition (SVD) are two common methods for uncovering latent variables by reducing the dimensionality of a dataset. Rather than selecting a subset of the original features, they find a small number of components (linear combinations of the original features) that capture most of the variance. These components often act as latent variables that simplify the data while preserving its essence.
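To make this concrete, here is a minimal NumPy sketch of PCA computed through the SVD of mean-centered data. It is an illustration rather than a production implementation: the function name `pca_svd` and the synthetic two-factor dataset are hypothetical, and in practice a library estimator such as scikit-learn's `PCA` would typically be used.

```python
import numpy as np

def pca_svd(X, k):
    """Project X (n_samples x n_features) onto its top-k principal components."""
    # PCA is defined on mean-centered data
    X_centered = X - X.mean(axis=0)
    # Thin SVD: X_centered = U @ diag(S) @ Vt
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:k]                    # principal directions, shape (k, n_features)
    scores = X_centered @ components.T     # latent coordinates, shape (n_samples, k)
    explained = S**2 / np.sum(S**2)        # fraction of variance per component
    return scores, components, explained[:k]

# Hypothetical data: 5 observed features driven by 2 hidden factors plus small noise
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.05 * rng.normal(size=(500, 5))

scores, components, explained = pca_svd(X, k=2)
print(scores.shape)       # (500, 2)
print(explained.sum())    # close to 1: two components capture almost all the variance
```

The rows of `components` are the principal directions, and `scores` holds each sample's coordinates in the 2-D latent space. Because the synthetic data were generated from two hidden factors, the first two components account for nearly all of the variance.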

Comparison of Different Latent Variable Techniques

| Technique | Key Features | Applications | Best Suited For | Type of Data |
|---|---|---|---|---|
| Principal Component Analysis (PCA) | Reduces dimensionality by finding principal components (latent variables) that capture maximum variance; orthogonal linear transformation of the data | Data compression; noise reduction; feature extraction for machine learning models | High-dimensional data where you want to reduce features while retaining key patterns | Continuous (numerical) data |
| Autoencoders | Neural-network-based dimensionality reduction; encodes data into a lower-dimensional latent space and reconstructs it | Anomaly detection; data reconstruction (e.g., images); denoising (denoising autoencoders) | Complex, high-dimensional data such as images, audio, or text | Structured or unstructured data (images, text, etc.) |
| Factor Analysis | Identifies underlying latent factors that explain correlations among observed variables; assumes observed variables are linear combinations of latent factors plus noise | Psychometrics; economics; social sciences (e.g., personality tests) | Data where you aim to uncover latent factors affecting responses or behaviors | Mainly continuous data such as survey scales or test scores (categorical responses need specialized variants) |
| Latent Dirichlet Allocation (LDA) | Unsupervised technique for finding latent topics in text; models each document as a mixture of topics and each topic as a distribution over words | Topic modeling; text classification; document clustering | Large text corpora where you are looking for hidden themes across documents | Text data (documents, articles) |
| Hidden Markov Models (HMMs) | Model sequential data using hidden states (latent variables) and observable outputs; probabilistic transitions between states | Speech recognition; time-series analysis; natural language processing | Sequential data where latent states govern observable outcomes | Time-series or sequential data (speech, biological sequences) |
| Variational Autoencoders (VAEs) | Similar to autoencoders but with a probabilistic latent space; can generate new data points by sampling from the latent space | Data generation (e.g., creating new images); representation learning; anomaly detection | Complex datasets where data generation or probabilistic inference is required | Structured or unstructured data (e.g., images, audio) |

Latent Variables in Neural Networks

Neural networks, especially deep learning architectures like autoencoders, make extensive use of latent variables. Autoencoders compress the input data into a lower-dimensional latent space, often referred to as the bottleneck layer. This hidden layer captures the essential characteristics of the input data in a condensed form, helping the model reconstruct the original input during training.

For example, in an autoencoder trained on handwritten digits, the latent space may encode abstract properties such as stroke thickness, slant, or the presence of loops and curves, which together characterize a particular digit.
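The bottleneck idea can be sketched with a deliberately minimal linear autoencoder trained by plain gradient descent in NumPy. This is a simplifying assumption rather than a typical architecture: real autoencoders use nonlinear layers and a deep learning framework, and a linear autoencoder trained with squared error spans, at its optimum, the same subspace as PCA. The data, layer sizes, learning rate, and step count are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data: 8 observed features generated from a hidden 2-D code plus noise
Z_true = rng.normal(size=(1000, 2))
X = Z_true @ rng.normal(size=(2, 8)) + 0.05 * rng.normal(size=(1000, 8))
X -= X.mean(axis=0)

d, k = X.shape[1], 2                     # input width, bottleneck width
W_enc = 0.1 * rng.normal(size=(d, k))    # encoder: input -> latent code
W_dec = 0.1 * rng.normal(size=(k, d))    # decoder: latent code -> reconstruction
lr = 0.05

def reconstruction_loss(X, W_enc, W_dec):
    """Mean squared error between X and its reconstruction through the bottleneck."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial_loss = reconstruction_loss(X, W_enc, W_dec)
for step in range(3000):
    H = X @ W_enc                        # latent codes (bottleneck activations)
    err = H @ W_dec - X                  # reconstruction error
    # Gradients of the mean squared error (averaged over all entries of X)
    grad_dec = 2 * H.T @ err / X.size
    grad_enc = 2 * X.T @ (err @ W_dec.T) / X.size
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

final_loss = reconstruction_loss(X, W_enc, W_dec)
codes = X @ W_enc                        # each row is a 2-D latent representation
print(initial_loss, final_loss)
```

After training, `codes` is the compressed representation the decoder uses to rebuild each input; swapping the matrix products for nonlinear layers is what lets practical autoencoders capture curved structure that PCA cannot.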

Latent Variables in Probabilistic Models

In probabilistic models, such as Bayesian networks or Hidden Markov Models (HMMs), latent variables play a critical role in managing uncertainty. These models treat unobserved quantities (for example, the hidden state of an HMM at each time step) as random variables and infer a probability distribution over them from the observed data, which improves the model’s ability to make predictions even with incomplete or noisy data.
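As an illustration of how an HMM reasons about its hidden states, the sketch below implements the forward algorithm, which computes the probability of an observation sequence by summing over all possible hidden-state paths and also yields the filtered belief over the current hidden state. The two-state weather model and its probabilities are hypothetical values chosen for the example.

```python
import numpy as np

def forward(pi, A, B, obs):
    """Forward algorithm for a discrete hidden Markov model.

    pi:  initial hidden-state probabilities, shape (S,)
    A:   transition matrix, A[i, j] = P(state j at t+1 | state i at t), shape (S, S)
    B:   emission matrix, B[i, o] = P(observation o | state i), shape (S, O)
    obs: sequence of observation indices
    Returns P(obs) and the filtered beliefs P(state_t | obs_1..t) for every t.
    """
    alpha = pi * B[:, obs[0]]            # joint P(state_1, obs_1)
    alphas = [alpha]
    for o in obs[1:]:
        # Sum over the previous hidden state, then weight by the new emission
        alpha = (alpha @ A) * B[:, o]
        alphas.append(alpha)
    alphas = np.array(alphas)
    likelihood = alphas[-1].sum()        # marginalize out the final hidden state
    filtered = alphas / alphas.sum(axis=1, keepdims=True)
    return likelihood, filtered

# Hypothetical two-state weather model: hidden states 0 = rainy, 1 = sunny;
# observations 0 = walk, 1 = shop, 2 = clean
pi = np.array([0.6, 0.4])
A = np.array([[0.7, 0.3],
              [0.4, 0.6]])
B = np.array([[0.1, 0.4, 0.5],
              [0.6, 0.3, 0.1]])
obs = [0, 1, 2]

likelihood, filtered = forward(pi, A, B, obs)
print(likelihood)   # ≈ 0.033612, the probability of observing walk, shop, clean
print(filtered)     # belief over the hidden weather after each observation
```

This unscaled version can underflow on long sequences; practical implementations rescale `alpha` at each step or work in log space.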

Why Are Latent Variables Important?

Latent variables are not just abstract concepts; they have practical implications in improving machine learning models. Understanding these hidden drivers can lead to more interpretable, efficient, and accurate models.

Improving Model Interpretability

One of the biggest challenges in machine learning is the black-box nature of many models, where it’s hard to understand how or why the model makes certain predictions. By identifying latent variables, we can better interpret how features are related to the outcome. For example, in topic modeling, latent variables can represent topics in a large corpus of documents, helping users understand the underlying themes in the data.

Enhancing Generalization and Reducing Overfitting

Latent variables can help machine learning models generalize better by capturing the fundamental structure of the data rather than memorizing specific examples. This can reduce the risk of overfitting, where the model performs well on training data but fails on new, unseen data. By focusing on these hidden relationships, models can make more robust predictions in a variety of applications.

How to Identify Latent Variables in Your Data

Identifying latent variables is a challenging but crucial step in many machine learning tasks. While these variables are, by definition, not directly observable, there are techniques that can help infer their presence.

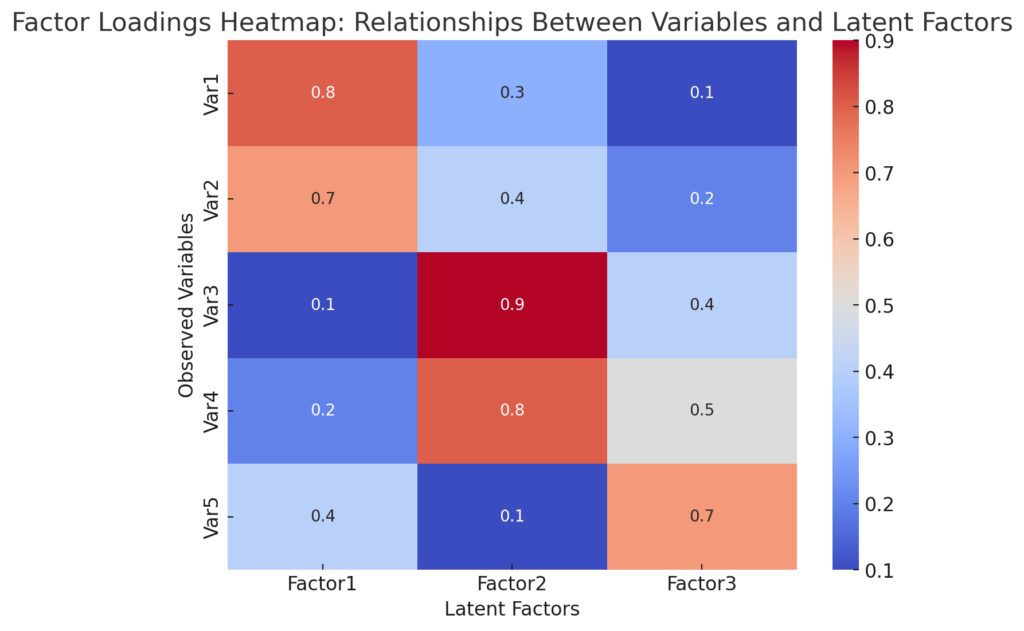

Factor Analysis and Latent Variable Models

Factor analysis is one of the main statistical techniques used to identify latent variables. It seeks to explain the observed variables in terms of a few latent factors that account for the correlations among the observed data. This technique is often used in psychometrics, economics, and social sciences to reveal hidden influences.
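A compact way to see factor analysis at work is to fit the classic linear-Gaussian factor model, x = W z + μ + ε with z ~ N(0, I) and ε ~ N(0, diag(ψ)), by expectation-maximization. The sketch below is a minimal NumPy version under those assumptions; the function name `factor_analysis_em`, the iteration count, and the simulated one-factor dataset are hypothetical. Libraries such as scikit-learn provide a tested `FactorAnalysis` estimator for real work.

```python
import numpy as np

def factor_analysis_em(X, k, n_iter=500, seed=0):
    """Fit x = W z + mu + eps, z ~ N(0, I_k), eps ~ N(0, diag(psi)), by EM."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    Xc = X - X.mean(axis=0)
    S = Xc.T @ Xc / n                          # sample covariance
    W = rng.normal(scale=0.1, size=(d, k))     # factor loadings
    psi = np.diag(S).copy()                    # unique (noise) variance of each variable
    for _ in range(n_iter):
        # E-step: posterior over the latent factors given each observation
        Wt_Psi_inv = W.T / psi                 # W^T Psi^{-1}, shape (k, d)
        G = np.linalg.inv(np.eye(k) + Wt_Psi_inv @ W)   # posterior covariance of z
        Ez = Xc @ (G @ Wt_Psi_inv).T           # posterior means of z, shape (n, k)
        Ezz = n * G + Ez.T @ Ez                # sum over samples of E[z z^T]
        # M-step: update loadings, then noise variances (floored to stay positive)
        W = (Xc.T @ Ez) @ np.linalg.inv(Ezz)
        psi = np.maximum(np.diag(S - W @ (Ez.T @ Xc) / n), 1e-6)
    return W, psi

# Hypothetical survey-like data: 4 unit-variance items driven by one hidden factor
rng = np.random.default_rng(42)
true_loadings = np.array([0.9, 0.8, 0.7, 0.6])
z = rng.normal(size=(2000, 1))
noise = rng.normal(size=(2000, 4)) * np.sqrt(1 - true_loadings**2)
X = z @ true_loadings[None, :] + noise

W, psi = factor_analysis_em(X, k=1)
print(np.round(W.ravel(), 2), np.round(psi, 2))
```

Because the sign of a latent factor is arbitrary, the recovered loadings may all come out negative; their magnitudes should be close to the simulated values, and `psi` estimates the variance each item does not share with the factor.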

Clustering and Grouping Techniques

In unsupervised learning, techniques like k-means clustering or Gaussian Mixture Models (GMMs) can help identify latent groups within the data. The cluster or mixture component that each point belongs to is itself a discrete latent variable, representing subgroups or hidden categories that aren’t explicitly labeled in the data.
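The idea that group membership is a hidden variable is explicit in a Gaussian mixture model. The minimal sketch below fits a two-component, one-dimensional GMM with expectation-maximization in NumPy; the function name `gmm_em_1d`, the quantile-based initialization, and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def gmm_em_1d(x, n_iter=200):
    """EM for a two-component 1-D Gaussian mixture.

    The latent variable is each point's unobserved component label.
    """
    # Crude initialization from the data's quartiles
    means = np.percentile(x, [25, 75])
    variances = np.full(2, x.var())
    weights = np.full(2, 0.5)
    for _ in range(n_iter):
        # E-step: responsibilities r[i, c] = P(component c | x_i)
        dens = weights * np.exp(-(x[:, None] - means) ** 2 / (2 * variances)) \
            / np.sqrt(2 * np.pi * variances)
        r = dens / dens.sum(axis=1, keepdims=True)
        # M-step: re-estimate the mixture parameters from the soft assignments
        nk = r.sum(axis=0)
        weights = nk / len(x)
        means = (r * x[:, None]).sum(axis=0) / nk
        variances = (r * (x[:, None] - means) ** 2).sum(axis=0) / nk
    return weights, means, variances, r

# Hypothetical data drawn from two hidden groups
rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-2.0, 0.5, 300), rng.normal(3.0, 1.0, 700)])

weights, means, variances, r = gmm_em_1d(x)
labels = r.argmax(axis=1)     # most probable hidden group for each point
print(weights, means, np.sqrt(variances))
```

Unlike k-means, which assigns each point to exactly one cluster, the responsibilities `r` express how uncertain the model is about each point's hidden group.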

Deep Learning and Representation Learning

In more advanced applications, deep learning methods like autoencoders or variational autoencoders (VAEs) can learn complex representations of data in a latent space. These learned representations capture the essence of the data, allowing for tasks like image generation, data compression, or anomaly detection.
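Two ingredients distinguish a VAE's latent space from that of a plain autoencoder: the encoder outputs a distribution (a mean and a log-variance per latent dimension), and training penalizes the KL divergence between that distribution and a standard normal prior. The NumPy sketch below shows only these two pieces, the reparameterization trick for sampling and the closed-form KL term. The encoder outputs are hypothetical numbers; a full VAE would also need an encoder, a decoder, and a reconstruction loss trained in a deep learning framework.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (the reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)

rng = np.random.default_rng(0)
# Hypothetical encoder outputs for one input with a 3-D latent space
mu = np.array([0.5, -1.0, 0.0])
log_var = np.array([-0.5, 0.0, 0.2])

z_samples = reparameterize(np.tile(mu, (10000, 1)), np.tile(log_var, (10000, 1)), rng)
print(z_samples.mean(axis=0))              # close to mu
print(kl_to_standard_normal(mu, log_var))  # ≈ 0.689
```

Writing the sample as a deterministic function of `mu`, `log_var`, and independent noise is what allows gradients to flow back into the encoder during training.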

Challenges and Limitations of Latent Variables

While latent variables offer many advantages, working with them can be challenging.

Difficult to Interpret

Latent variables, by their very nature, can be hard to interpret. Even though they help models perform better, understanding what a latent variable represents in real-world terms isn’t always straightforward. This can be especially problematic in fields where model interpretability is crucial, such as healthcare or finance.

Risk of Oversimplification

Sometimes, relying on latent variables can lead to oversimplification. By reducing data to its latent structure, important nuances might be lost. This can negatively impact the accuracy of the model, especially in complex datasets where detailed information matters.
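One way to see this trade-off is to measure how much variance is discarded when data are compressed to k latent dimensions. The NumPy sketch below uses a truncated SVD (the best rank-k linear reconstruction) on synthetic data with three hidden factors of decreasing strength; the data and the helper name `variance_lost` are hypothetical.

```python
import numpy as np

def variance_lost(Xc, k):
    """Fraction of total variance not captured by the best rank-k reconstruction of Xc."""
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    Xk = (U[:, :k] * S[:k]) @ Vt[:k]       # best rank-k approximation (Eckart-Young)
    return np.sum((Xc - Xk) ** 2) / np.sum(Xc ** 2)

rng = np.random.default_rng(3)
# Hypothetical data: 10 observed features driven by three hidden factors of decreasing strength
latent = rng.normal(size=(1000, 3)) * np.array([3.0, 1.5, 0.8])
X = latent @ rng.normal(size=(3, 10))
Xc = X - X.mean(axis=0)

losses = [variance_lost(Xc, k) for k in range(1, 5)]
for k, lost in enumerate(losses, start=1):
    print(f"k={k}: {lost:.3f} of the variance discarded")
```

Keeping only one or two dimensions discards the weaker factors, while three dimensions reconstruct this noise-free data almost perfectly. On real data the curve rarely drops so cleanly, which is why choosing the latent dimensionality is a judgment call.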

Conclusion

Latent variables are the hidden drivers behind many machine learning models, acting as the key to uncovering deeper patterns in data. From dimensionality reduction to deep learning, they play an essential role in making complex models more efficient and generalizable. However, while latent variables can help in building more powerful models, they also introduce challenges, particularly in interpretation and potential oversimplification.

By understanding latent variables and how to identify them, data scientists and machine learning practitioners can enhance the performance, interpretability, and robustness of their models—ensuring that they not only perform well but also provide valuable insights.

FAQs

What is the difference between observed and latent variables?

Observed variables are those that are directly measurable and recorded, such as height, age, or temperature. Latent variables, on the other hand, are not directly measurable but can be inferred from observed data. For example, in a psychological survey, mood and sleep might be observed variables, while mental health conditions like anxiety or depression could be latent variables.

Why are latent variables important in machine learning models?

Latent variables help machine learning models by:

- Improving generalization: They capture the underlying patterns in the data, reducing overfitting to specific examples.

- Enhancing interpretability: Identifying latent variables can help explain complex relationships within the data.

- Reducing dimensionality: They simplify datasets, allowing models to focus on the most important information.

What is an example of a latent variable in real-world applications?

In marketing, customer satisfaction is a latent variable that cannot be directly measured but can be inferred from observed behaviors such as repeat purchases, customer feedback, and interaction with customer service. Similarly, in healthcare, stress levels can be a latent variable inferred from sleep patterns, blood pressure, and other observable health metrics.

How can latent variables be identified in a dataset?

There are several techniques to identify latent variables, including:

- Factor analysis: A statistical method that explains observed variables through latent factors.

- Dimensionality reduction: Techniques like PCA and SVD reduce data dimensions to reveal latent patterns.

- Clustering methods: In unsupervised learning, techniques like k-means clustering group data points into clusters, and each point's cluster membership acts as a discrete latent variable.

- Deep learning: Autoencoders and variational autoencoders (VAEs) are neural network architectures that automatically learn latent representations of data.

What is the role of latent variables in deep learning?

In deep learning, latent variables are learned representations that help the model extract and compress essential information from high-dimensional data. For instance, in an autoencoder, input data (like images) is transformed into a lower-dimensional latent space, capturing important features, which is then used to reconstruct the original data.

What are the limitations of using latent variables in machine learning?

While latent variables are powerful, they come with certain challenges:

- Interpretation difficulties: It can be hard to understand what latent variables represent in real-world terms.

- Oversimplification: By focusing on latent variables, important details in the data might be missed, leading to a loss of accuracy, especially in complex datasets.

How do latent variables improve model performance?

Latent variables help models by capturing abstract patterns or hidden relationships in the data, leading to better generalization. This reduces the risk of overfitting and improves the model’s ability to handle new, unseen data. They also allow models to focus on essential features rather than noisy, redundant information.

Can latent variables be directly observed or measured?

No, latent variables cannot be directly observed or measured. They are inferred from observable data, often through statistical or machine learning techniques like factor analysis, PCA, or deep learning methods like autoencoders.