Gradient boosting has come a long way since its inception, revolutionizing the way machine learning models are built for complex prediction tasks.

If you’ve ever wondered how the techniques evolved from traditional methods to today’s leading frameworks like XGBoost, LightGBM, and CatBoost, you’re in the right place.

In this post, we’ll explore the evolution of gradient boosting, highlighting the innovations that led to its widespread adoption and dominance in machine learning competitions.

What is Gradient Boosting?

The Basics of Boosting

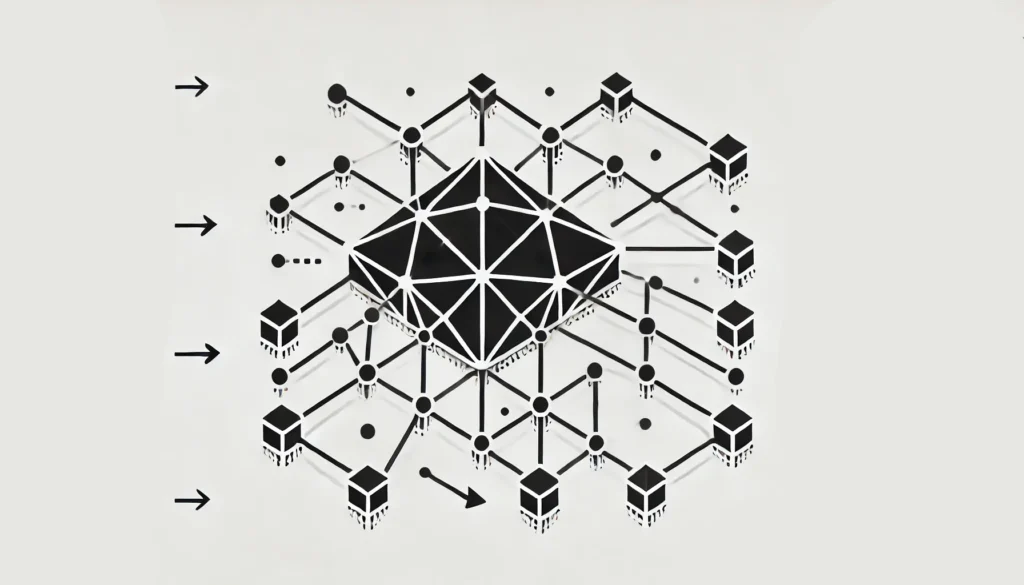

Boosting is a machine learning ensemble technique that combines many weak learners, usually decision trees, into a stronger predictive model. A weak learner is a model that performs only slightly better than random guessing. Boosting trains models sequentially, and each new model focuses on correcting the errors made by the ensemble built so far. Over many rounds, these models “boost” the performance of the ensemble as a whole.

Gradient Boosting Defined

Gradient boosting builds on this idea by treating training as gradient descent on a loss function. At each round it computes the gradient of the loss with respect to the current predictions, fits a new tree to the negative gradient (the so-called pseudo-residuals), and adds that tree, scaled by a learning rate, to the ensemble. For squared-error loss, the negative gradient is simply the residual between the target and the current prediction. Because any differentiable loss can be plugged in, the same procedure handles both classification and regression problems, making it a highly versatile tool in a data scientist’s arsenal.
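To make the idea concrete, here is a minimal from-scratch sketch in Python. It is not how any of the libraries discussed below are implemented: it assumes squared-error loss, a single numeric input feature, and decision stumps (one-split trees) as weak learners, and all function names are hypothetical. Each round fits a stump to the current residuals, which are the negative gradient for this loss, and adds it with a small learning rate.

```python
# Gradient boosting for regression with squared-error loss, written from scratch.
# Sketch only: 1-D inputs, decision stumps as weak learners, no third-party libraries.

def fit_stump(xs, residuals):
    """Fit a one-split tree to the residuals; return (threshold, left_value, right_value)."""
    pairs = sorted(zip(xs, residuals))
    n = len(pairs)
    total = sum(r for _, r in pairs)
    best = None
    left_sum = 0.0
    for i in range(1, n):
        left_sum += pairs[i - 1][1]
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # can only split between distinct x values
        right_sum = total - left_sum
        # Maximizing this score is equivalent to minimizing the split's squared error.
        score = left_sum ** 2 / i + right_sum ** 2 / (n - i)
        if best is None or score > best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (score, threshold, left_sum / i, right_sum / (n - i))
    if best is None:  # all x values identical: fall back to a constant
        mean = total / n
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    base = sum(ys) / len(ys)  # start from a constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # For the loss (y - F)^2 / 2, the negative gradient is the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Take a shrunken step in the direction of the fitted stump.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, x):
    return model["base"] + model["learning_rate"] * sum(
        predict_stump(s, x) for s in model["stumps"]
    )


# Usage: learn y = x^2 on a small grid.
xs = [i / 10 for i in range(50)]
ys = [x * x for x in xs]
model = gradient_boost(xs, ys)
```

Because the learning rate shrinks every step, many rounds are needed, but for this loss no round can increase the training error. Real libraries put deeper trees, regularization, and much faster split finding on top of this same loop.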

The Rise of Traditional Gradient Boosting

Friedman’s Breakthrough

The foundational formalization of gradient boosting came from Jerome Friedman in his 2001 paper, “Greedy Function Approximation: A Gradient Boosting Machine.” This paper laid the groundwork for modern implementations by framing boosting as gradient descent in function space, where each new tree takes a step that reduces the prediction error.

Traditional Gradient Boosting Machines (GBMs) used decision trees as their base learners and employed simple but effective techniques to improve accuracy. However, these early implementations were slow on large datasets and required a lot of computational resources, because finding the best split meant scanning every sorted value of every feature.

Pros and Cons of Traditional GBM

Traditional gradient boosting methods, while effective, came with some significant downsides:

Pros:

- Accurate on structured (tabular) data

- Powerful for both regression and classification tasks

- Individual trees are easy to inspect, and feature-importance scores summarize what the ensemble relies on

Cons:

- Slow to train, especially on large datasets

- Prone to overfitting if not properly tuned

- Limited scalability due to computational inefficiency

This is where more modern implementations like XGBoost, LightGBM, and CatBoost made their entrance, addressing these limitations head-on.

XGBoost: The Game Changer

What is XGBoost?

XGBoost (eXtreme Gradient Boosting), created by Tianqi Chen and first released in 2014, is perhaps the most famous advancement in gradient boosting; its design was described in a 2016 paper by Chen and Carlos Guestrin. It rapidly gained popularity due to its strong performance in competitions and real-world tasks. XGBoost improved upon traditional GBMs with several innovations that significantly boosted speed and accuracy.

Key Innovations of XGBoost

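- Regularization: XGBoost adds L1 and L2 penalties on the leaf weights, plus a penalty on the number of leaves, directly to its training objective to prevent overfitting, a common problem in earlier versions of gradient boosting.

- Sparsity Awareness: For every split, XGBoost learns a default direction that rows with missing values follow, so it can handle missing data and sparse inputs without imputation.

- Parallel Processing: Trees are still built one after another, but XGBoost parallelizes the search for the best split across features within each tree, which reduces training time and makes larger datasets feasible.

- Tree Pruning: XGBoost grows each tree to its maximum depth and then prunes back splits whose loss reduction falls below a threshold (the gamma parameter), reducing overfitting and improving generalization.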
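The regularization, pruning, and sparsity ideas above all show up in how XGBoost scores a candidate split. The Python sketch below follows the gain formula from the XGBoost paper, computed from the sums of first-order gradients (G) and second-order gradients, or Hessians (H), in each child. Here lam and gamma stand in for XGBoost's lambda and gamma parameters, the L1 term is left out, and the missing-value helper is a simplified, hypothetical illustration of the default-direction idea rather than library code.

```python
# Sketch of XGBoost-style split scoring from gradient (G) and Hessian (H) sums.
# lam: L2 penalty on leaf weights; gamma: minimum loss reduction to keep a split.
# The L1 penalty (XGBoost's alpha) is omitted to keep the formulas short.

def leaf_weight(G, H, lam):
    """Optimal leaf value for a leaf with gradient sum G and Hessian sum H."""
    return -G / (H + lam)


def leaf_score(G, H, lam):
    return G * G / (H + lam)


def split_gain(GL, HL, GR, HR, lam, gamma):
    """Loss reduction of a split; a negative value means the split should be pruned."""
    parent = leaf_score(GL + GR, HL + HR, lam)
    children = leaf_score(GL, HL, lam) + leaf_score(GR, HR, lam)
    return 0.5 * (children - parent) - gamma


def gain_with_default_direction(GL, HL, GR, HR, G_missing, H_missing, lam, gamma):
    """Try routing rows with missing values left, then right; keep the better option."""
    left = split_gain(GL + G_missing, HL + H_missing, GR, HR, lam, gamma)
    right = split_gain(GL, HL, GR + G_missing, HR + H_missing, lam, gamma)
    return (left, "left") if left >= right else (right, "right")
```

A split whose gain turns negative once gamma is subtracted is exactly the kind of split that pruning removes, and a larger lam shrinks both the leaf weights and the gains.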

Performance Impact

XGBoost’s innovations made it a go-to tool for many machine learning practitioners. It offered faster training times, reduced overfitting, and better generalization compared to earlier methods. It became a staple in data science competitions on platforms like Kaggle, where accuracy and performance are paramount.

LightGBM: Speed and Efficiency

Introduction to LightGBM

Developed by Microsoft, LightGBM (Light Gradient Boosting Machine) took the advancements of XGBoost a step further. Its focus was on improving speed and scalability for large datasets, which made it a strong contender for big data applications.

LightGBM’s Key Features

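- Leaf-Wise Tree Growth: Unlike traditional depth-wise (level-by-level) growth, LightGBM grows trees leaf-wise, always splitting the leaf that yields the largest loss reduction. For the same number of leaves this usually reduces loss more, which can substantially speed up training on large datasets, though it can overfit small ones unless num_leaves or max_depth is limited.

- Histogram-Based Decision Trees: Instead of evaluating every distinct value of a continuous feature as a split candidate, LightGBM buckets each feature into a fixed number of discrete bins and searches for splits over the bin boundaries, which reduces memory consumption and speeds up the process.

- GPU Acceleration: LightGBM can also train on GPUs (usually with a GPU-enabled build), which can speed up training further on large datasets and high-dimensional data.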
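Histogram-based split finding can be sketched in Python under simplifying assumptions: one numeric feature, equal-width bins, and squared-error gradients with every Hessian equal to 1. LightGBM chooses bin boundaries more carefully; max_bin here only borrows the name of its parameter. The point is that after one pass to build the histogram, the split search loops over at most max_bin - 1 boundaries instead of every distinct value, and each value is stored as a one-byte bin index rather than an eight-byte float.

```python
# Sketch of histogram-based split finding for a single numeric feature.
# Assumptions: equal-width bins, hessian = 1 per row, no regularization.

def build_bins(values, max_bin=16):
    """Map each value to an equal-width bin index stored in one byte (max_bin <= 256)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / max_bin or 1.0  # guard against a constant feature
    codes = bytearray(min(int((v - lo) / width), max_bin - 1) for v in values)
    return codes, lo, width


def best_histogram_split(codes, gradients, max_bin=16):
    """Scan bin boundaries; rows with bin index <= the returned bin go to the left child."""
    grad_hist = [0.0] * max_bin
    count_hist = [0] * max_bin
    for b, g in zip(codes, gradients):  # one pass over the rows builds the histogram
        grad_hist[b] += g
        count_hist[b] += 1
    G, N = sum(grad_hist), len(codes)
    best_bin, best_gain = None, 0.0
    GL, NL = 0.0, 0
    for b in range(max_bin - 1):  # at most max_bin - 1 candidates, however many rows
        GL += grad_hist[b]
        NL += count_hist[b]
        NR = N - NL
        if NL == 0 or NR == 0:
            continue
        # Proportional to the squared-error loss reduction of splitting after bin b.
        gain = GL ** 2 / NL + (G - GL) ** 2 / NR - G ** 2 / N
        if gain > best_gain:
            best_bin, best_gain = b, gain
    return best_bin, best_gain
```

The returned bin converts back to a threshold in the feature's original units as lo + (best_bin + 1) * width.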

Where LightGBM Excels

LightGBM shines in scenarios where datasets are large and computational efficiency is critical. Leaf-wise growth can produce deep, unbalanced trees that are harder to inspect, a trade-off that is usually acceptable when training speed matters most, for example when models must be retrained frequently on large or fast-changing data.

CatBoost: Handling Categorical Data with Ease

The CatBoost Revolution

While XGBoost and LightGBM improved gradient boosting for many applications, they traditionally expected categorical features to be converted into numbers first, for example through one-hot or label encoding, which becomes awkward when there are many categories. Enter CatBoost, a gradient boosting library developed by Yandex and designed to handle categorical data more effectively.

Key Features of CatBoost

- Handling Categorical Features: CatBoost natively supports categorical features without needing to manually convert them to numerical values. During training it replaces each category with an ordered target statistic: a running average of the target computed only from rows that precede the current row in a random permutation, which keeps a row’s own label from leaking into its encoding.

- Symmetric Tree Building: CatBoost builds symmetric (oblivious) decision trees, in which every node at the same depth uses the same split condition. This results in faster inference and simpler, balanced trees.

- Built-in Overfitting Detection: Like XGBoost, CatBoost includes regularization (for example, L2 regularization of leaf values via l2_leaf_reg), and it also has a built-in overfitting detector that can stop training once the metric on a validation set stops improving.
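The ordered target statistic can be sketched in a few lines of Python. This is a simplified version assuming a single random permutation and a numeric target; prior and a are hypothetical smoothing values, and CatBoost itself uses several permutations and can also combine categorical features. Each row is encoded only from rows that come before it, so its own label never contributes to its encoding.

```python
import random

# Simplified ordered target statistics for one categorical column.
# encoding = (sum of earlier targets in the same category + a * prior) / (earlier count + a)

def ordered_target_stats(categories, targets, prior=0.5, a=1.0, seed=0):
    order = list(range(len(categories)))
    random.Random(seed).shuffle(order)  # one random permutation of the rows
    sums, counts = {}, {}
    encoded = [0.0] * len(categories)
    for i in order:
        c = categories[i]
        s, n = sums.get(c, 0.0), counts.get(c, 0)
        encoded[i] = (s + a * prior) / (n + a)  # uses only rows seen earlier
        sums[c] = s + targets[i]  # row i's target becomes visible to later rows
        counts[c] = n + 1
    return encoded


print(ordered_target_stats(["red", "blue", "red", "red"], [1, 0, 0, 1]))
```

The first occurrence of any category falls back to the prior, and later occurrences drift toward that category’s observed target rate.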

Ideal Use Cases for CatBoost

CatBoost is particularly useful when working with datasets that have a high number of categorical variables. It also performs well on small to medium-sized datasets, making it ideal for industries like finance or healthcare, where categorical data often plays a significant role.

Choosing the Right Tool: XGBoost, LightGBM, or CatBoost?

When to Use XGBoost

- You have a small to medium-sized dataset.

- Overfitting is a concern, and you want strong regularization techniques.

- Your data is clean and does not have many categorical variables.

When to Use LightGBM

- You’re working with large datasets and need fast training times.

- You have access to GPU resources and need to take advantage of hardware acceleration.

- Interpretability is less of a concern compared to speed.

When to Use CatBoost

- Your dataset includes a lot of categorical variables.

- You need fast inference times for real-time applications.

- You want a model that handles small datasets efficiently.

Conclusion

The evolution of gradient boosting has brought us powerful tools like XGBoost, LightGBM, and CatBoost, each tailored to specific needs. From boosting predictive accuracy to speeding up computation and handling complex data types, these frameworks have reshaped the landscape of machine learning. Choosing the right tool depends on your dataset, your hardware resources, and the specific challenges of your project.

As gradient boosting continues to evolve, it’s clear that these tools will remain key players in the data science toolkit, driving innovations across industries.

FAQs

How is XGBoost different from traditional gradient boosting?

XGBoost improves on traditional gradient boosting by incorporating regularization to prevent overfitting, using parallel processing for faster training, and employing pruning techniques to enhance generalization. It also handles missing data better and can process large datasets efficiently.

When should I use LightGBM over XGBoost?

LightGBM is ideal for large datasets where training speed and memory efficiency are crucial. It uses leaf-wise tree growth and histogram-based decision splitting to reduce memory usage and computation time, making it faster than XGBoost in many cases, especially on large datasets.

What makes CatBoost different from XGBoost and LightGBM?

CatBoost is specifically designed to handle datasets with a lot of categorical features without needing extensive preprocessing. Its symmetric tree-building algorithm and built-in overfitting detection make it particularly useful for datasets with mixed data types or when working with categorical data is challenging.

Can XGBoost, LightGBM, and CatBoost all be used for classification and regression tasks?

Yes, all three libraries—XGBoost, LightGBM, and CatBoost—support both classification and regression tasks. They are versatile and can be applied to a wide range of problems, including ranking, recommendation systems, and more.

Which tool should I choose for small datasets?

For small datasets, CatBoost is often the preferred choice due to its efficient handling of categorical data and its ability to avoid overfitting. However, XGBoost can also work well if the dataset is well-structured and overfitting is a concern.

How does LightGBM achieve faster training times?

LightGBM achieves faster training times in part by using leaf-wise tree growth rather than depth-wise growth. At each step it splits the single leaf that gives the greatest reduction in loss, which typically reaches a given training loss with fewer splits. It also uses a histogram-based algorithm that evaluates splits over a small number of bins instead of every distinct feature value, which reduces the memory footprint and speeds up split finding.

Is GPU acceleration supported by these frameworks?

Yes, both XGBoost and LightGBM support GPU acceleration, making them ideal for training models on large datasets with high-dimensional features. CatBoost also supports GPU training but is often chosen for its efficiency with categorical features rather than raw computational speed.

How do these tools prevent overfitting?

- XGBoost uses L1 and L2 regularization to penalize overly complex models.

- LightGBM has regularization parameters and also uses early stopping techniques to avoid overfitting.

- CatBoost includes built-in overfitting detection and applies symmetric tree construction, which helps reduce model complexity and overfitting.

Which algorithm should I use for high-dimensional data?

For high-dimensional data, LightGBM is often preferred due to its ability to handle large datasets with numerous features efficiently. The leaf-wise growth and histogram-based optimization methods make it faster and more scalable for these tasks.

Can these tools be used in real-time prediction systems?

Yes. All three can serve low-latency predictions, but CatBoost is often singled out for real-time systems because of its fast inference. Its symmetric trees can be evaluated with a fixed sequence of comparisons, and because it handles categorical features itself, raw categorical inputs do not need a separate encoding step before prediction.
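To see why symmetric trees are cheap to evaluate, here is a Python sketch of inference with a hand-written, hypothetical oblivious tree (not a trained CatBoost model). Because every level shares one (feature, threshold) test, prediction is just d comparisons whose results form the bits of a leaf index, with no walking through a node structure.

```python
# Inference with a symmetric ("oblivious") tree of depth d and 2**d leaves.
# The splits and leaf values below are hypothetical, chosen only for illustration.

def oblivious_predict(splits, leaf_values, row):
    index = 0
    for level, (feature, threshold) in enumerate(splits):
        if row[feature] > threshold:
            index |= 1 << level  # set bit `level` when this level's test is true
    return leaf_values[index]


splits = [(0, 2.5), (1, 10.0)]  # depth 2: feature 0 at level 0, feature 1 at level 1
leaf_values = [0.1, 0.4, -0.2, 0.7]  # one value per combination of test outcomes

print(oblivious_predict(splits, leaf_values, [3.0, 5.0]))
```

The fixed, branch-free shape also makes it straightforward to evaluate many rows at once, which is part of why this layout suits low-latency serving.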

Do these algorithms require extensive hyperparameter tuning?

While XGBoost, LightGBM, and CatBoost perform well out of the box, optimal performance usually requires some hyperparameter tuning. Tuning parameters such as the learning rate, tree depth, and regularization strength can help improve accuracy and prevent overfitting for specific datasets.

Can these boosting algorithms handle missing data?

Yes, all three algorithms can handle missing data. XGBoost has built-in sparsity awareness to handle missing values effectively, while LightGBM and CatBoost can also handle missing data during the training process without extensive preprocessing.

What is boosting in machine learning?

Boosting is an ensemble technique where multiple weak models (often decision trees) are combined to create a stronger, more accurate model. In boosting, models are trained sequentially, with each new model trying to correct the mistakes of the previous ones, improving overall performance.

Why is XGBoost so popular in machine learning competitions?

XGBoost is popular in competitions because it delivers high accuracy with relatively fast training times. Features like regularization, built-in handling of missing values, and parallel processing make it versatile and powerful on structured (tabular) data, which made it a go-to method in many Kaggle competitions.

Is LightGBM more memory-efficient than XGBoost?

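Generally, yes, especially compared with XGBoost’s original exact split-finding method. LightGBM stores each feature value as a small integer bin index rather than a full floating-point number and accumulates gradient statistics per bin, so it needs less memory than approaches that keep pre-sorted feature values, particularly for large datasets with many features. XGBoost has since added a similar histogram-based method, which narrows the gap.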

Does CatBoost require one-hot encoding for categorical variables?

No, CatBoost does not require one-hot encoding for categorical variables. Unlike other algorithms that need categorical variables to be converted to numerical values, CatBoost can natively handle these variables, making it easier to work with datasets that have high-cardinality categorical features.

How does LightGBM’s leaf-wise growth differ from traditional depth-wise growth?

In traditional depth-wise growth, trees are grown level by level, so all leaves at a given depth are split before the tree goes deeper. LightGBM’s leaf-wise growth instead splits whichever leaf offers the highest loss reduction, allowing it to reduce the training error more quickly. This approach often yields faster training and more accurate models, but it can produce deep, unbalanced trees that overfit if not carefully controlled.
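Here is a Python sketch of leaf-wise growth, assuming a single numeric feature and squared-error loss; num_leaves borrows the name of LightGBM’s parameter, and the helper names are hypothetical. A priority queue always hands back the leaf with the largest loss reduction, whereas a depth-wise grower would split every leaf at the current level before moving deeper.

```python
import heapq

# Leaf-wise tree growth: repeatedly split the single leaf with the largest gain.

def best_split(xs, ys, idx):
    """Best threshold for rows `idx` by squared-error reduction; None if no split exists."""
    rows = sorted(idx, key=lambda i: xs[i])
    total, n = sum(ys[i] for i in rows), len(rows)
    best, left_sum = None, 0.0
    for k in range(1, n):
        left_sum += ys[rows[k - 1]]
        if xs[rows[k - 1]] == xs[rows[k]]:
            continue  # can only split between distinct x values
        gain = left_sum ** 2 / k + (total - left_sum) ** 2 / (n - k) - total ** 2 / n
        if best is None or gain > best[0]:
            best = (gain, rows[:k], rows[k:])
    return best


def grow_leaf_wise(xs, ys, num_leaves=4):
    """Return the final leaves as lists of row indices."""
    leaves = []  # leaves that cannot be split usefully
    heap = []  # candidate leaves: (-gain, tie_breaker, left_rows, right_rows)
    counter = 0

    def push(idx):
        nonlocal counter
        split = best_split(xs, ys, idx)
        if split is None or split[0] <= 0:
            leaves.append(idx)
        else:
            heapq.heappush(heap, (-split[0], counter, split[1], split[2]))
            counter += 1

    push(list(range(len(xs))))
    # Current leaf count is len(leaves) + len(heap); each split adds exactly one leaf.
    while heap and len(leaves) + len(heap) < num_leaves:
        _, _, left, right = heapq.heappop(heap)  # the highest-gain leaf
        push(left)
        push(right)
    # Candidates still in the heap were never split, so they remain leaves.
    leaves.extend(left + right for _, _, left, right in heap)
    return leaves


print(grow_leaf_wise(list(range(8)), [0, 0, 0, 0, 1, 1, 5, 9], num_leaves=4))
```

Capping num_leaves is what keeps this greedy strategy from carving the data into tiny, overfit leaves.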

Can I use XGBoost, LightGBM, or CatBoost with Python?

Yes, all three libraries—XGBoost, LightGBM, and CatBoost—have comprehensive Python APIs that are widely used in the data science community. They are also available in other programming languages like R, Java, and C++, making them accessible to a wide range of developers.

Do these algorithms support multi-class classification?

Yes, all three boosting algorithms support multi-class classification tasks. They adapt their loss functions to handle multiple classes, allowing them to be used in problems where there are more than two target labels.
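A common approach for K classes (used by XGBoost and LightGBM, for example) is to keep one raw score per class, turn the scores into probabilities with softmax, and build one tree per class in each round. The Python sketch below computes the per-class gradient and diagonal Hessian of softmax cross-entropy that such trees would use; the function names are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multiclass_grad_hess(scores, label):
    """Gradient and diagonal Hessian of softmax cross-entropy w.r.t. the raw scores.

    grad_k = p_k - y_k and hess_k = p_k * (1 - p_k), where y is the one-hot label.
    """
    probs = softmax(scores)
    grads = [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]
    hess = [p * (1.0 - p) for p in probs]
    return grads, hess


print(multiclass_grad_hess([0.0, 0.0, 0.0], label=1))
```

The gradients always sum to zero across classes: the true class’s score is pushed up while the others are pushed down in proportion to their predicted probabilities.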

How do I prevent overfitting with LightGBM?

To prevent overfitting with LightGBM, you can:

- Use early stopping, which halts training when the model’s performance on the validation set starts to deteriorate.

- Tune hyperparameters like num_leaves, max_depth, min_data_in_leaf, and the regularization parameters (lambda_l1, lambda_l2) to control the complexity of the model.

- Use cross-validation to find the optimal combination of parameters.
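Early stopping boils down to a simple rule over the validation losses recorded after each boosting round. This Python sketch uses a hypothetical patience argument and a precomputed list of losses; LightGBM exposes the same idea through its early_stopping_round parameter.

```python
def early_stopping_round(val_losses, patience=3):
    """Return the best round, stopping once `patience` rounds pass without improvement."""
    best_round, best_loss = 0, float("inf")
    for round_idx, loss in enumerate(val_losses):
        if loss < best_loss:
            best_round, best_loss = round_idx, loss
        elif round_idx - best_round >= patience:
            break  # no improvement for `patience` rounds: stop training here
    return best_round


losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 0.5]
print(early_stopping_round(losses, patience=3))
```

With a small patience, training stops before ever seeing the late improvement at the end of this list; a larger patience tolerates noisy validation curves at the cost of extra rounds.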

Does CatBoost outperform XGBoost for categorical data?

Yes, CatBoost often outperforms XGBoost when working with datasets that have many categorical variables. Its native handling of categorical data eliminates the need for preprocessing like one-hot encoding or label encoding, which can lead to better performance and more accurate models without additional work.

How does regularization work in XGBoost?

In XGBoost, regularization is added directly to the training objective and applied to the leaf weights (the output values) of each tree. L1 regularization (the alpha parameter) pushes small leaf weights to exactly zero, L2 regularization (the lambda parameter) penalizes large weights, and an additional penalty per leaf (gamma) discourages unnecessary splits. Together, these keep the model simpler and less prone to overfitting.
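Written out, the penalized objective for a new tree looks roughly as follows. This follows the second-order approximation in the XGBoost paper, with an added α term for the L1 option; g_i and h_i are the first and second derivatives of the loss for row i at the current prediction, I_j is the set of rows in leaf j, w_j is that leaf’s weight, and T is the number of leaves (constant terms are dropped).

```latex
\mathcal{L}^{(t)} \approx \sum_{j=1}^{T} \Big[ G_j w_j + \tfrac{1}{2}(H_j + \lambda)\, w_j^2 + \alpha\, |w_j| \Big] + \gamma T,
\qquad G_j = \sum_{i \in I_j} g_i, \quad H_j = \sum_{i \in I_j} h_i

% Minimizing each leaf independently gives a soft-thresholded weight:
w_j^{*} = -\frac{\operatorname{sign}(G_j)\, \max\big(|G_j| - \alpha,\ 0\big)}{H_j + \lambda}
```

When |G_j| ≤ α the optimal weight is exactly zero, which is the sparsity effect of L1; with α = 0 it reduces to -G_j / (H_j + λ), and γ charges a fixed cost for every extra leaf.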

What is a weak learner in gradient boosting?

A weak learner is a model that performs slightly better than random guessing. In gradient boosting, weak learners are typically shallow decision trees, and the goal is to sequentially combine them to create a strong ensemble model by correcting the errors of the previous learners.

Can boosting algorithms handle unbalanced datasets?

Yes, boosting algorithms like XGBoost, LightGBM, and CatBoost can handle unbalanced datasets. They allow for class weights to be adjusted, ensuring that the model doesn’t bias predictions toward the majority class. XGBoost, for example, has a scale_pos_weight parameter that adjusts the weight of positive samples in binary classification.
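XGBoost’s documentation suggests sum(negative instances) / sum(positive instances) as a typical starting value for scale_pos_weight. The Python sketch below computes that ratio and uses a hypothetical weighted log loss to show how up-weighting positives by that ratio gives both classes the same total weight.

```python
import math

def suggested_scale_pos_weight(labels):
    """Ratio of negative to positive examples (labels are 0/1; needs at least one positive)."""
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    return neg / pos


def weighted_log_loss(labels, probs, pos_weight):
    """Binary log loss where each positive row counts `pos_weight` times (illustrative)."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(labels, probs):
        w = pos_weight if y == 1 else 1.0
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
        weight_sum += w
    return total / weight_sum


labels = [0] * 9 + [1]  # 9 negatives, 1 positive
weight = suggested_scale_pos_weight(labels)
print(weight, weighted_log_loss(labels, [0.5] * 10, weight))
```

Treat the ratio as a starting point to tune rather than a fixed rule, since the best value also depends on the metric you care about.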

How do boosting algorithms compare to random forests?

Boosting algorithms focus on sequentially building models where each tree corrects the errors of the previous one, while random forests build independent trees in parallel. Boosting generally produces models with higher accuracy but can be more prone to overfitting. Random forests are easier to train and tune but may not achieve the same level of accuracy on complex tasks.

What are the advantages of using gradient boosting over deep learning?

While deep learning excels in tasks involving image recognition, natural language processing, and unstructured data, gradient boosting models often outperform deep learning on tabular or structured datasets. They require less data preprocessing, are easier to interpret, and can handle missing values more efficiently than many deep learning models.