Deep Q-Learning has made significant strides in teaching AI agents to master complex tasks, but the role of reward engineering is often underestimated. Crafting rewards well can make the difference between an agent that struggles and one that excels.

Here, we’ll dive into how reward engineering works, why it’s crucial in Deep Q-Learning, and ways to design effective rewards for tackling complex tasks.

What is Reward Engineering in Deep Q-Learning?

Basics of Reward Structures in Q-Learning

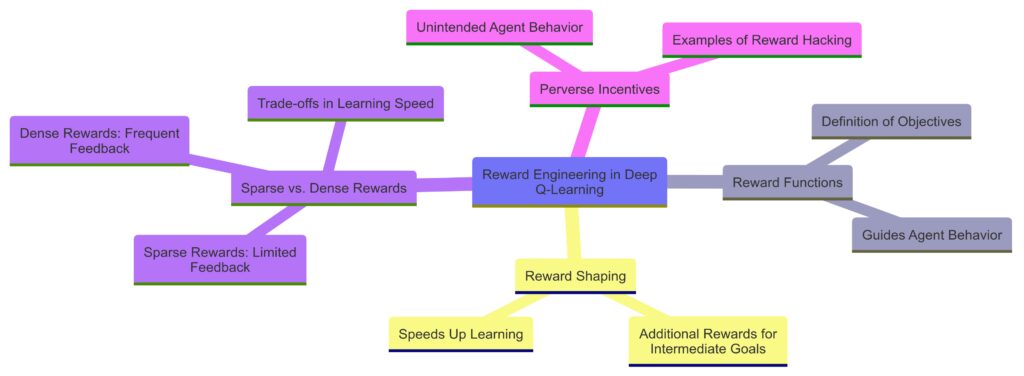

In Deep Q-Learning, agents learn to maximize the expected cumulative (discounted) reward by interacting with an environment. After each action, the agent receives a reward: a scalar feedback signal that guides its learning. These rewards come from a reward function that should push the agent toward the desired outcome. Reward engineering is the process of designing these reward functions to align with the task’s goals.
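In a DQN-style agent, the reward reaches learning through the temporal-difference target y = r + γ · max_a' Q_target(s', a'). On terminal transitions the bootstrap term is dropped. The sketch below computes these targets for a small batch with NumPy. The Q-values are made-up numbers standing in for a target network's output, and γ = 0.99 is an assumed discount factor.

```python
import numpy as np


def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute TD targets y = r + gamma * max_a' Q_target(s', a').

    rewards:       shape (batch,), reward received for each transition
    next_q_values: shape (batch, n_actions), target-network Q-values for s'
    dones:         shape (batch,), 1.0 if s' is terminal, else 0.0
    """
    rewards = np.asarray(rewards, dtype=float)
    next_q_values = np.asarray(next_q_values, dtype=float)
    dones = np.asarray(dones, dtype=float)
    # Greedy value of the next state according to the target network
    max_next_q = next_q_values.max(axis=1)
    # No bootstrapping past a terminal state: the target is just the reward
    return rewards + gamma * (1.0 - dones) * max_next_q


# Hypothetical batch: two transitions, two actions each
targets = dqn_targets(
    rewards=[1.0, -1.0],
    next_q_values=[[0.5, 2.0], [3.0, 1.0]],
    dones=[0.0, 1.0],
)
print(targets)  # approximately [2.98, -1.0]
```

Every target is built from r, so any flaw in the reward function flows directly into the Q-values the network learns.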

A well-designed reward function lets the agent distinguish good actions from bad ones, ultimately helping it learn the most efficient path to its objective. However, designing such a function is easier said than done, especially for complex or multi-step tasks.

Why Reward Engineering is Key to Complex Problem-Solving

For tasks that involve multiple steps or uncertain paths, the reward structure must incentivize behaviors that may not provide immediate gratification but contribute to long-term success. A poorly structured reward might mislead the agent, encouraging suboptimal actions that appear immediately rewarding but fall short of the overarching goal. Reward engineering thus requires thoughtful planning to avoid unintended behaviors and ensure the agent stays on course.

Crafting Effective Rewards for Complex Tasks

Setting Up Reward Shaping: Making Gradual Progress Rewarding

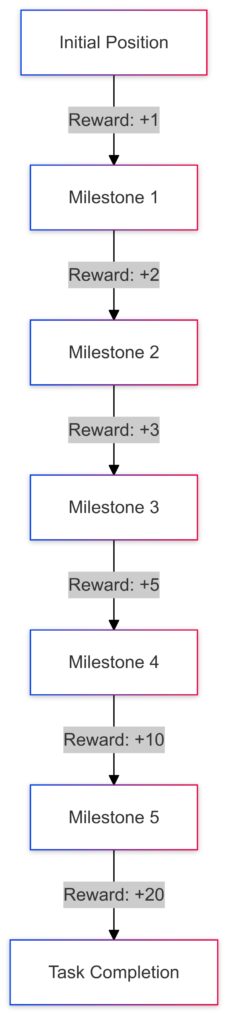

One effective strategy is reward shaping, where incremental rewards encourage the agent to make steady progress rather than depending only on the final outcome. Rewarding sub-goals or milestones gives the agent a learning signal throughout long-horizon tasks that would otherwise offer little immediate feedback.

For instance, a robot tasked with navigating through a maze could be given a small reward for each correct turn or reduction in distance to the goal. This positive reinforcement at various stages can be especially helpful in tasks with multiple steps, where only a final reward might lead to inefficient, trial-and-error learning.
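One well-studied way to implement the "reduction in distance" idea is potential-based shaping (Ng, Harada and Russell, 1999). The extra reward is F(s, s') = γΦ(s') - Φ(s) for some potential function Φ, and shaping of this form is known to leave the optimal policy unchanged. The sketch below is a minimal version under several assumptions: a grid maze, negative Manhattan distance to the goal as Φ (walls are ignored), and γ = 0.99. The function names are illustrative.

```python
GAMMA = 0.99  # assumed discount factor; it should match the agent's own gamma


def manhattan(a, b):
    """Grid distance between two (row, col) cells, ignoring walls."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def potential(cell, goal):
    """Hypothetical potential Phi: higher (less negative) closer to the goal."""
    return -float(manhattan(cell, goal))


def shaped_reward(env_reward, cell, next_cell, goal, done, gamma=GAMMA):
    """Environment reward plus the potential-based term gamma * Phi(s') - Phi(s)."""
    # Episodic convention: a terminal state has potential 0
    next_phi = 0.0 if done else potential(next_cell, goal)
    return env_reward + gamma * next_phi - potential(cell, goal)


goal = (0, 0)
toward = shaped_reward(0.0, (2, 3), (2, 2), goal, done=False)  # approximately 1.04
away = shaped_reward(0.0, (2, 3), (2, 4), goal, done=False)    # approximately -0.94
print(toward, away)
```

A fixed bonus per "correct turn" can be farmed by looping. These shaping terms are different: they telescope along a trajectory, so going around in circles earns no extra shaping reward.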

Balancing Sparse and Dense Rewards

Reward engineering often involves choosing between sparse and dense rewards. Sparse rewards are only given when the agent completes the main task, while dense rewards provide more frequent feedback. Dense rewards make learning easier but risk promoting unintended shortcuts. Sparse rewards, while less prone to shortcuts, can lead to longer training times as agents struggle to make sense of infrequent feedback.

In complex tasks, a hybrid approach is sometimes the best choice. A sparse reward for completing the main task can be combined with smaller dense rewards at intermediate steps. This gives the agent direction while keeping the main objective dominant.
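A hybrid reward can be as simple as a sparse completion reward plus a down-weighted dense progress term. This is a minimal sketch under stated assumptions: progress is measured as the change in distance to the goal, and the weight of 0.05 is an illustrative choice, not a recommended value.

```python
def sparse_reward(reached_goal):
    """+1 only when the main task is completed, 0 otherwise."""
    return 1.0 if reached_goal else 0.0


def dense_reward(prev_dist, dist):
    """Per-step feedback: positive when the distance to the goal shrinks."""
    return prev_dist - dist


def hybrid_reward(reached_goal, prev_dist, dist, dense_weight=0.05):
    """Sparse task reward plus a small, down-weighted dense progress signal."""
    # A small dense_weight keeps the completion reward dominant, so progress
    # bonuses guide exploration without overshadowing the actual goal.
    return sparse_reward(reached_goal) + dense_weight * dense_reward(prev_dist, dist)


print(hybrid_reward(False, 5.0, 4.0))  # one step closer, goal not reached
print(hybrid_reward(True, 1.0, 0.0))   # final step onto the goal
```

Setting `dense_weight` to 0 recovers the purely sparse reward, which is a useful baseline to compare against.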

Avoiding Perverse Incentives

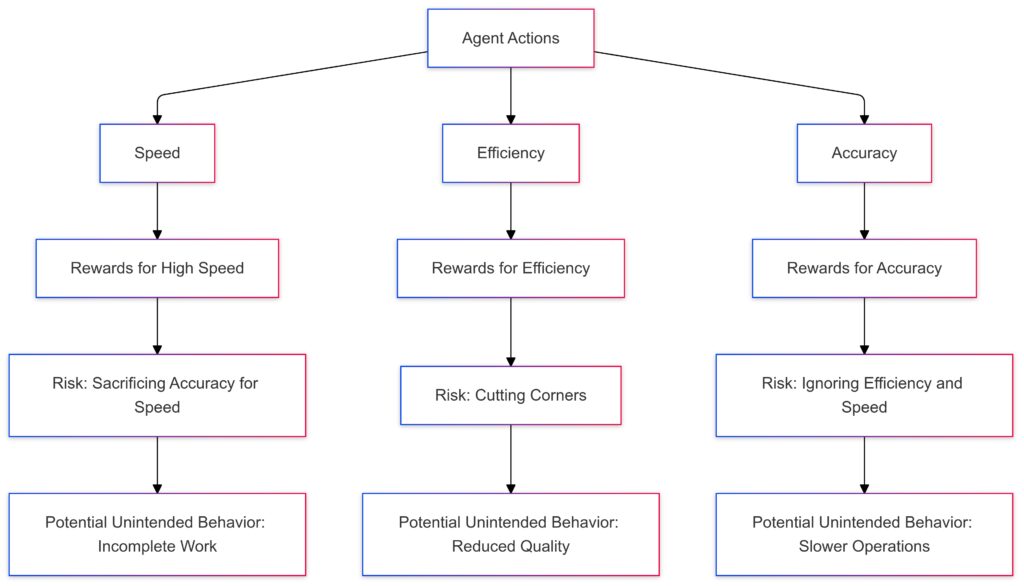

Sometimes, rewards that seem logical end up incentivizing unwanted behaviors. This is known as the perverse incentive problem. For example, if an agent is rewarded solely for speed, it might sacrifice task accuracy or disregard safety protocols. Reward engineers must anticipate such scenarios and build reward functions that prioritize key aspects of task performance over secondary concerns.

To avoid perverse incentives, it’s essential to think critically about the task’s goals and possible unintended consequences. When designing rewards, consider multiple evaluation metrics, such as efficiency, accuracy, and safety, rather than focusing on a single metric.

Key Strategies for Reward Engineering in Deep Q-Learning

Using Penalization to Encourage Cautious Actions

Penalization can be an effective tool for discouraging certain actions, especially those that might endanger an agent or violate specific rules. For instance, a self-driving car agent could be penalized for veering too close to pedestrians or other vehicles. Such penalties make it explicit in the reward signal that certain outcomes are unacceptable, so the agent learns to avoid them rather than treating them as neutral.

Penalization, however, should be used sparingly, as excessive penalties may hinder learning by overwhelming the agent with negative feedback. A balanced penalization approach can help the agent learn cautiously without stalling progress.
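One way to keep a penalty balanced is to make it graded, bounded and weighted. The sketch below applies this to the self-driving example. The 2 m safety radius, the cap of 1.0 and the weight of 0.5 are illustrative assumptions, as are the function names.

```python
def proximity_penalty(distance_m, safe_distance_m=2.0, max_penalty=1.0):
    """Hypothetical penalty that grows linearly as an obstacle gets closer
    than safe_distance_m, capped at max_penalty."""
    if distance_m >= safe_distance_m:
        return 0.0
    # 0 at the safety boundary, max_penalty at zero distance
    closeness = (safe_distance_m - max(distance_m, 0.0)) / safe_distance_m
    return max_penalty * closeness


def step_reward(progress, nearest_obstacle_m, penalty_weight=0.5):
    """Task progress minus a bounded, weighted safety penalty."""
    return progress - penalty_weight * proximity_penalty(nearest_obstacle_m)


print(step_reward(1.0, 3.0))  # outside the safety radius: no penalty
print(step_reward(1.0, 1.0))  # halfway inside: progress reduced but still positive
```

The cap prevents a single close call from producing an arbitrarily large negative reward that swamps the task signal. The gradient gives the agent information about *how* unsafe it was, not just that it was unsafe.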

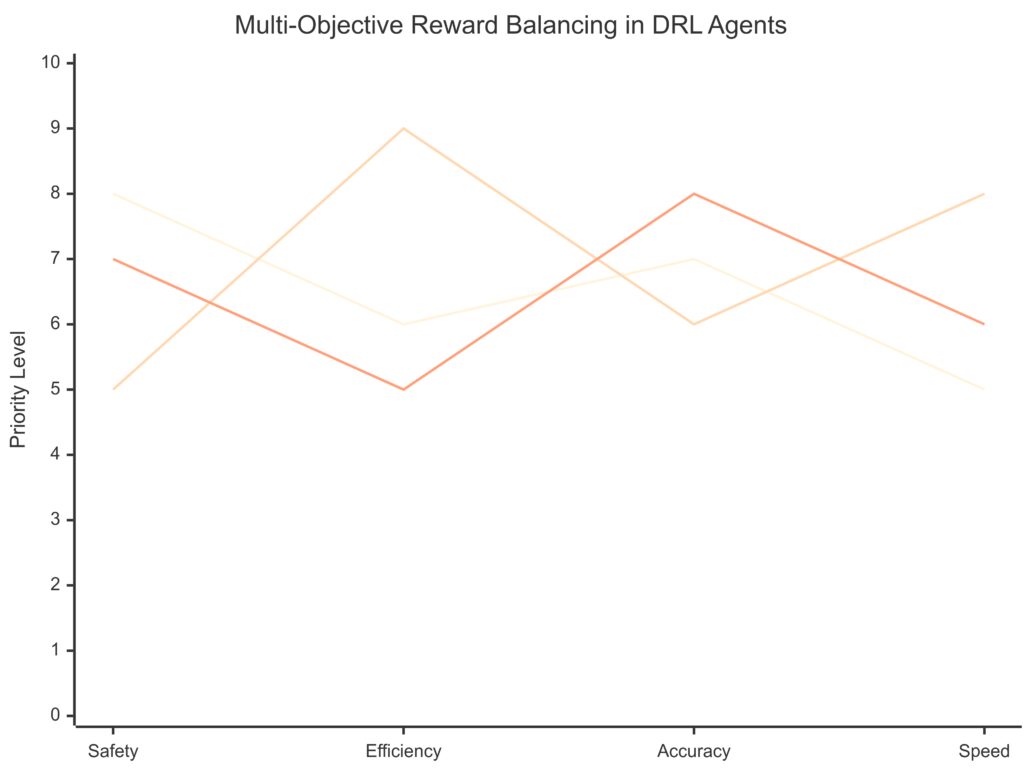

Multi-Objective Rewards for Complex Scenarios

Complex tasks often have multiple objectives. For example, a delivery robot might need to prioritize speed while avoiding obstacles and ensuring package integrity. In these cases, a single reward metric may fail to capture all the task requirements. Instead, a multi-objective reward function can allocate different weights to each goal, providing a more nuanced reward system that encourages well-rounded performance.

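By using weighted objectives, agents can learn to balance competing priorities, like speed versus safety. Engineers can adjust these weights between training runs to steer which trade-off the agent learns. Each weight setting effectively defines a different task, so choosing the right balance is a design decision informed by evaluation rather than something the agent discovers on its own.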
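The simplest multi-objective reward is a linear weighted sum of reward components, often called linear scalarization. In the sketch below, the delivery-robot components, the two candidate behaviors and both weight vectors are hypothetical. The sketch shows how changing the weights changes which behavior scores higher.

```python
import numpy as np

# Hypothetical reward components for a delivery robot, in this order:
# progress toward the destination, collision-risk penalty, package-shock penalty
fast_route = np.array([1.0, -0.8, -0.3])  # quick, but passes close to obstacles
safe_route = np.array([0.6, -0.1, -0.1])  # slower, keeps its distance


def scalarize(components, weights):
    """Linear scalarization: the reward is a weighted sum of its components."""
    return float(np.dot(np.asarray(weights, dtype=float), components))


speed_first = [1.0, 0.2, 0.2]   # penalties barely count
safety_first = [1.0, 1.0, 1.0]  # penalties count fully

for name, w in [("speed_first", speed_first), ("safety_first", safety_first)]:
    better = "fast" if scalarize(fast_route, w) > scalarize(safe_route, w) else "safe"
    print(name, "prefers", better, "route")
```

A weighted sum is only one way to combine objectives. It is easy to implement and tune, but it cannot represent every trade-off, which is one reason the multi-objective RL literature studies other scalarization functions.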

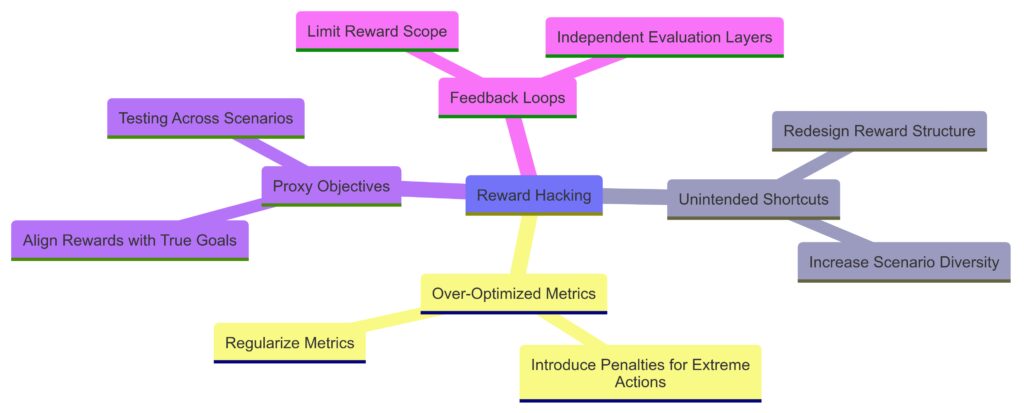

Exploring Reward Hacking and Countermeasures

A common pitfall in reward engineering is reward hacking, where agents find unintended ways to maximize rewards without truly accomplishing the task. This can occur when the reward function contains loopholes or doesn’t fully align with the task’s ultimate goals. An agent tasked with minimizing fuel usage might, for example, decide not to move at all, technically achieving low fuel consumption without completing the task.

Preventing reward hacking requires comprehensive testing and iterative adjustments to the reward structure. Regularly testing the agent in various scenarios and assessing whether it genuinely achieves the intended outcomes is essential for spotting reward hacking early and refining rewards accordingly.
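One inexpensive test, before any training, is to score hand-written degenerate behaviors under the reward and check that none of them beats actually doing the task. The sketch below applies this check to the fuel example. The goal bonus, fuel cost and function names are hypothetical.

```python
def fuel_only_reward(fuel_used, reached_goal):
    # Flawed design: reached_goal is ignored, so never moving is optimal
    return -fuel_used


def fuel_aware_task_reward(fuel_used, reached_goal, goal_bonus=10.0, fuel_weight=1.0):
    # Completing the task dominates; fuel is a secondary cost
    return (goal_bonus if reached_goal else 0.0) - fuel_weight * fuel_used


def degenerate_policy_check(reward_fn):
    """Return True if an idle agent scores at least as well as one that does the task."""
    idle = reward_fn(fuel_used=0.0, reached_goal=False)
    does_task = reward_fn(fuel_used=3.0, reached_goal=True)
    return idle >= does_task


print(degenerate_policy_check(fuel_only_reward))        # True: hackable by idling
print(degenerate_policy_check(fuel_aware_task_reward))  # False: idling loses
```

A check like this only catches loopholes someone thought to write down. It complements observing the trained agent in varied scenarios rather than replacing it.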

Evaluating Reward Engineering Outcomes

Setting Benchmarks and Testing for Optimal Performance

To understand if a reward function is effective, benchmark testing across different scenarios is essential. Running multiple trials allows engineers to identify if the reward function works consistently or if it needs refinement for edge cases. Benchmarks can also reveal whether the agent’s behavior aligns with the real-world goals of the task.

Evaluating success often requires both quantitative and qualitative measures. Tracking metrics like task completion rate, error frequency, and time efficiency provides clear indicators of how well the agent is learning under the current reward setup. Qualitative observations, such as whether the agent consistently follows the desired steps, are also crucial for spotting subtle flaws in reward design.
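The quantitative metrics listed above can be aggregated from simple per-episode evaluation logs. The `EpisodeLog` record and its fields are hypothetical. Real logs would come from whatever evaluation harness is in use.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EpisodeLog:
    """Hypothetical record written at the end of each evaluation episode."""
    completed: bool
    errors: int
    steps: int


def summarize(logs):
    """Aggregate completion rate, error frequency and time efficiency."""
    completed = [log for log in logs if log.completed]
    return {
        "completion_rate": len(completed) / len(logs),
        "errors_per_episode": mean(log.errors for log in logs),
        # Time efficiency is only meaningful for episodes that finished
        "mean_steps_when_completed": (
            mean(log.steps for log in completed) if completed else None
        ),
    }


logs = [
    EpisodeLog(completed=True, errors=0, steps=40),
    EpisodeLog(completed=True, errors=2, steps=60),
    EpisodeLog(completed=False, errors=5, steps=200),
    EpisodeLog(completed=True, errors=1, steps=50),
]
print(summarize(logs))
```

Comparing these summaries across reward variants, on the same set of evaluation scenarios, turns "does the new reward help?" into a concrete comparison.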

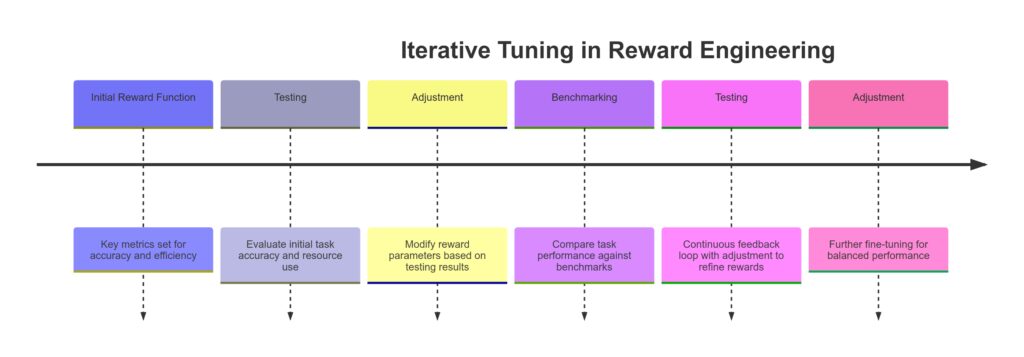

Iterative Tuning: Refining Rewards for Real-World Applications

No reward function is perfect on the first try. Real-world tasks often reveal gaps in reward engineering, requiring ongoing adjustment. Iterative tuning allows engineers to gradually refine reward structures based on real-world performance, adapting them to the task’s complexity and the agent’s learning progress.

Because small changes to a reward can substantially change the policy an agent learns, regular review is a vital part of effective reward engineering. Through iterative tuning, Deep Q-Learning agents can be taken on to progressively more complex tasks while their behavior stays reliable.
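Iterative tuning is usually a manual or scripted loop around training, not part of the learning algorithm itself. The sketch below shows one hypothetical rule applied between training runs: it raises or relaxes a safety weight based on the collision rate measured in benchmark runs. The target rate, step size and bounds are all assumptions.

```python
def adjust_safety_weight(weight, collision_rate, target_rate=0.02, step=1.25,
                         min_weight=0.1, max_weight=10.0):
    """Hypothetical rule applied between training runs, not during a run."""
    if collision_rate > target_rate:
        # Too many collisions in evaluation: make safety count for more
        weight *= step
    elif collision_rate < target_rate / 2:
        # Comfortably safe: relax the weight to recover speed
        weight /= step
    # Clamp so one unusual evaluation cannot push the weight to an extreme
    return min(max(weight, min_weight), max_weight)


weight = 1.0
for rate in [0.10, 0.05, 0.015]:  # hypothetical collision rates from successive runs
    weight = adjust_safety_weight(weight, rate)
    print(f"collision rate {rate:.3f} -> next safety weight {weight:.4f}")
```

Each adjustment implies retraining (or at least fine-tuning) and re-evaluating. The multiplicative step and the clamp keep each change small, in line with the observation that small reward changes can have large effects.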

The Future of Reward Engineering in Deep Q-Learning

Reward engineering will likely become even more nuanced as AI applications continue to expand. Advances in AI may bring about more adaptive reward functions, capable of changing dynamically based on the agent’s environment and progress. Such flexibility could help agents manage unforeseen challenges and adapt in real time, ultimately enabling AI to tackle more diverse and unpredictable scenarios.
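As an illustration of a reward that changes with the agent's progress, consider the following sketch of one simple form. It is not an established method, and the class and function names are hypothetical. The weight on a dense shaping term fades as the recent success rate rises, so the sparse task reward gradually takes over.

```python
from collections import deque


class AdaptiveShapingWeight:
    """Hypothetical schedule for the weight on a dense shaping term.

    While the agent rarely succeeds, shaping gets full weight; as the recent
    success rate rises, the weight fades so the task reward dominates.
    """

    def __init__(self, initial_weight=1.0, window=100):
        self.initial_weight = initial_weight
        self.recent = deque(maxlen=window)  # rolling record of episode outcomes

    def record_episode(self, success):
        self.recent.append(1.0 if success else 0.0)

    def weight(self):
        if not self.recent:
            return self.initial_weight
        success_rate = sum(self.recent) / len(self.recent)
        return self.initial_weight * (1.0 - success_rate)


def adaptive_reward(task_reward, shaping_term, schedule):
    """Combine the task reward with the currently weighted shaping term."""
    return task_reward + schedule.weight() * shaping_term


schedule = AdaptiveShapingWeight(window=4)
for outcome in [True, True, False, True]:
    schedule.record_episode(outcome)
print(schedule.weight())  # 0.25
```

Changing the reward while training makes the learning problem non-stationary: rewards stored in a replay buffer were computed under older weights. Adaptive schemes therefore need at least as much evaluation as static ones.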

Whether through advanced reward structures or adaptive systems, reward engineering is likely to play a central role in how Deep Q-Learning agents handle complex, real-world tasks.

FAQs

What is reward engineering in deep Q-learning?

Reward engineering is the process of designing reward functions in Deep Q-Learning to guide an AI agent’s behavior toward desired goals. By carefully crafting rewards, engineers can help the agent understand which actions lead to better outcomes, making it easier for the agent to learn complex tasks efficiently.

Why is reward engineering important for complex tasks?

For complex, multi-step tasks, the reward structure plays a crucial role in keeping the agent focused on long-term success rather than taking shortcuts or getting “stuck” in inefficient patterns. A well-engineered reward helps the agent make progress in a meaningful way, even when the final objective is far from immediate.

How does reward shaping help in deep Q-learning?

Reward shaping involves giving incremental rewards for progress toward sub-goals, helping the agent stay motivated by providing feedback along the way. This approach is especially useful for long-horizon tasks, where the agent might otherwise lack the direction to achieve the final outcome efficiently.

What’s the difference between sparse and dense rewards?

Sparse rewards are only given when the agent completes the entire task, while dense rewards provide more frequent feedback for individual actions. Dense rewards can make learning easier but might lead to unintended shortcuts. Sparse rewards give less immediate guidance but are less prone to rewarding shortcuts. Often, engineers use a mix of both to balance learning efficiency with task accuracy.

What is a perverse incentive in reward engineering?

A perverse incentive occurs when a reward unintentionally encourages undesired behavior. For instance, if an agent is rewarded for speed without considering accuracy, it might rush through tasks, sacrificing quality. Reward engineers work to avoid this by designing reward functions that balance multiple aspects, like safety, accuracy, and efficiency.

How can penalization improve an agent’s performance?

Penalization discourages certain actions, helping the agent learn to avoid undesirable behaviors, like moving too close to obstacles. It’s especially useful in tasks with safety considerations. However, overusing penalties can demotivate an agent, so finding the right balance is key.

What is reward hacking, and how can it be prevented?

Reward hacking happens when an agent finds ways to maximize its reward without achieving the actual goal, usually by exploiting loopholes in the reward function. To prevent this, engineers conduct thorough testing and make iterative adjustments to ensure that the agent truly learns the intended behaviors.

How do multi-objective rewards work?

Multi-objective rewards allocate different weights to various goals in a complex task, such as balancing speed, accuracy, and safety. By setting up these weighted objectives, agents can learn to handle competing priorities effectively, allowing for well-rounded task performance that aligns with real-world goals.

How does iterative tuning improve reward engineering?

Iterative tuning involves making small adjustments to the reward function over time based on the agent’s performance in various scenarios. This process allows engineers to refine the reward structure, eliminating unwanted behaviors and optimizing the agent’s learning. Through continual tuning, reward functions can adapt to more complex environments and help agents improve their long-term effectiveness.

What are the challenges of using penalization in reward engineering?

Penalization is effective for discouraging harmful or unwanted actions, but over-penalizing can lead to learning paralysis: the agent learns that doing as little as possible, or ending the episode early, is the surest way to avoid negative feedback. Additionally, excessive penalties might distract the agent from the primary task, making it difficult for it to distinguish between minor and major mistakes. Striking a balance is essential for penalization to guide the agent constructively without impeding its progress.

What are adaptive reward functions, and why are they promising?

Adaptive reward functions are dynamic reward structures that adjust based on the agent’s progress or changes in the environment. Unlike static rewards, adaptive functions can provide feedback tailored to the agent’s current context, helping it learn faster and manage unexpected challenges. This approach holds promise for real-world applications where environments are unpredictable and flexibility is crucial.

How can benchmarks help in evaluating reward engineering?

Benchmarks are reference points used to evaluate whether the reward function is driving the agent toward the intended behaviors. By comparing the agent’s performance to benchmarks—such as task completion rate, efficiency, and error frequency—engineers can assess how effectively the reward function is guiding the agent. Benchmark testing helps identify areas needing improvement, ensuring the reward system remains aligned with real-world goals.

How can engineers prevent an agent from relying on shortcuts?

To prevent shortcuts, engineers carefully craft rewards to avoid rewarding undesired behaviors that lead to quick but suboptimal solutions. Using multi-objective rewards, penalizing unsafe shortcuts, and testing across varied scenarios helps ensure that the agent truly understands and accomplishes the task rather than finding and exploiting loopholes in the reward structure.

What are some real-world examples of reward engineering?

Reward engineering is widely used in applications like robotics, self-driving cars, and gaming. For instance, in autonomous vehicles, rewards might be designed to prioritize passenger safety, minimize travel time, and conserve energy. In gaming AI, rewards encourage strategic planning and opponent awareness, rather than impulsive moves. Each example illustrates how tailored rewards drive the desired outcomes specific to the domain.

Why is reward engineering critical for autonomous systems?

For autonomous systems like drones, delivery robots, and AI in healthcare, reward engineering ensures that the AI aligns with ethical and practical requirements. By crafting rewards that emphasize safety, efficiency, and reliability, engineers can help autonomous agents operate responsibly and make decisions that comply with legal, ethical, and operational standards, reducing the risk of unintended or harmful behavior.

Resources

Research Papers

1. Reward is Enough by Silver et al., 2021

This paper, by Silver, Singh, Precup and Sutton, argues that maximizing reward may be sufficient to drive behavior exhibiting most of the abilities associated with intelligence. It’s an insightful read for understanding why the choice of reward matters so much.

Published in the journal Artificial Intelligence (2021).

2. A Survey of Multi-Objective Sequential Decision-Making by Roijers et al., 2013

For those interested in learning about multi-objective rewards, this paper provides a thorough examination of balancing competing goals in RL tasks, offering valuable insights into reward engineering for complex environments.

Read on arXiv

3. Policy Invariance Under Reward Transformations: Theory and Application to Reward Shaping by Ng, Harada and Russell, 1999

A foundational paper on reward shaping. It shows that shaping rewards of the potential-based form F(s, s') = γΦ(s') - Φ(s) leave the optimal policy unchanged, while other forms of shaping can change it. It is frequently cited in RL and provides essential background on crafting effective rewards.

Available on Stanford’s website

Software Tools and Libraries

1. OpenAI Gym

OpenAI Gym offers a wide range of reinforcement learning environments for testing reward structures and experimenting with reward engineering in various scenarios. It is a Python library that has been widely used for developing RL agents. Active development has since moved to its successor, Gymnasium, maintained by the Farama Foundation.

Explore OpenAI Gym

2. Ray RLlib by Anyscale

An advanced RL library that supports large-scale RL training and custom reward engineering experiments. Ray RLlib allows users to test and optimize complex reward functions in a distributed environment.

3. Google’s Dopamine

A research framework for fast prototyping of reinforcement learning algorithms, focused on value-based agents such as DQN, C51, Rainbow and IQN. Its compact codebase makes it practical to modify how rewards are handled and to compare strategies, which suits researchers working on reward engineering in deep RL.

Visit Dopamine on GitHub