The Synthetic Minority Over-sampling Technique (SMOTE) is commonly used to address imbalanced data in machine learning, especially for classification tasks where one class may be significantly underrepresented.

However, SMOTE can impact model interpretability and explainability, creating potential trade-offs for data scientists. Here, we’ll dive into the nuances of how SMOTE affects these two critical aspects of machine learning models.

What is SMOTE and How Does It Work?

Understanding SMOTE’s Role in Data Balancing

SMOTE handles class imbalance by generating synthetic examples in the minority class. It creates each new data point by interpolating between an existing minority instance and one of its k nearest minority-class neighbors, so the synthetic points resemble real minority instances and give the model more minority examples to learn from.

For instance, in a fraud detection system where fraudulent transactions are underrepresented, SMOTE adds synthetic fraud cases to balance the data. This often improves the model’s performance on the minority class, especially in metrics like recall and F1-score.
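SMOTE is usually described as interpolating between a minority sample and one of its nearest minority-class neighbors. The sketch below shows that core idea in NumPy. The function name `smote_sketch` and the toy data are assumptions made for illustration; this is not the imbalanced-learn implementation, which handles more edge cases.

```python
import numpy as np


def smote_sketch(X_min, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between minority samples.

    Hypothetical helper for illustration only.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)

    # Pairwise Euclidean distances between minority samples.
    diff = X_min[:, None, :] - X_min[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbor
    neighbors = np.argsort(dist, axis=1)[:, :k]  # k nearest minority neighbors

    base = rng.integers(0, n, size=n_new)  # random base sample per new point
    pick = rng.integers(0, k, size=n_new)  # random neighbor of that base sample
    nbr = neighbors[base, pick]
    gap = rng.random((n_new, 1))  # interpolation factor in [0, 1)

    # Each synthetic point lies on the segment between base and neighbor.
    return X_min[base] + gap * (X_min[nbr] - X_min[base])


rng = np.random.default_rng(42)
X_minority = rng.normal(loc=2.0, scale=0.5, size=(20, 2))  # toy minority class
X_synth = smote_sketch(X_minority, n_new=80)
print(X_synth.shape)
```

Because every synthetic point sits on a segment between two real minority points, none of them falls outside the range already covered by the minority class. They add density, not new information.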

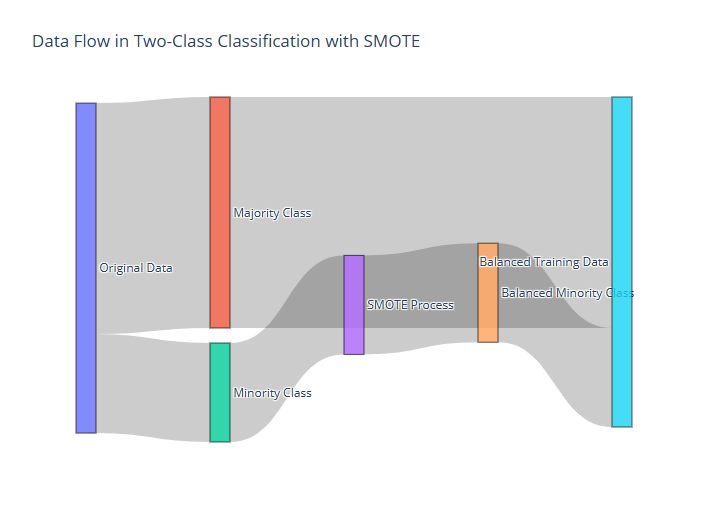

Nodes: Represent the main components: Original Data, Majority and Minority Classes, SMOTE, and Balanced Data.

Source and Target Flows: Define the direction of data flow (e.g., from Original Data to Majority and Minority Classes, from SMOTE to Balanced Minority Class).

Values: Indicate the size of each flow to reflect data distribution (e.g., original distribution and balanced outcome post-SMOTE).

How SMOTE Changes Data Distribution

While effective, SMOTE inherently alters the data distribution by introducing synthetic samples. These generated points can introduce artificial patterns that do not occur in the original data. Because each synthetic point lies on a line segment between two minority samples, some can land in regions that are also occupied by majority samples, particularly in high-dimensional data. This blurs class boundaries, which has implications for interpretability and explainability down the line.

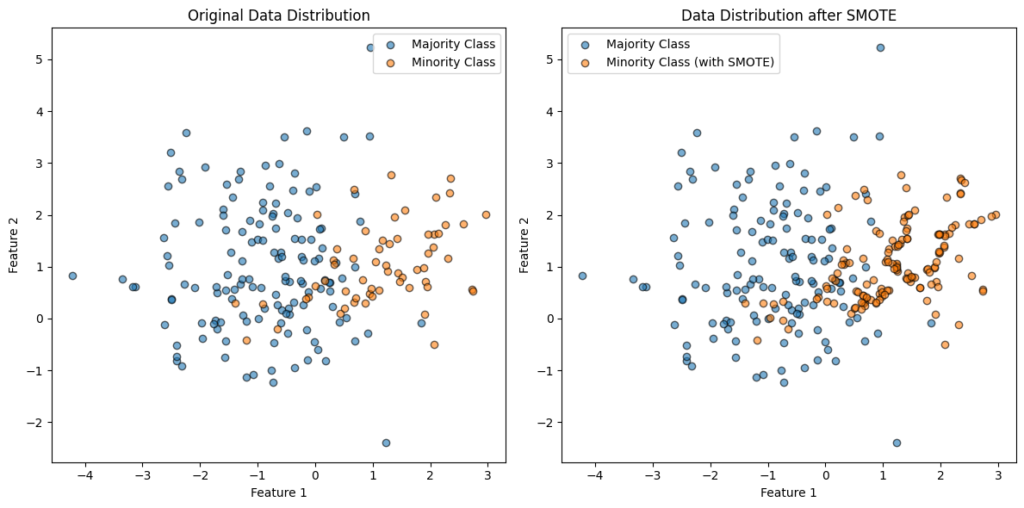

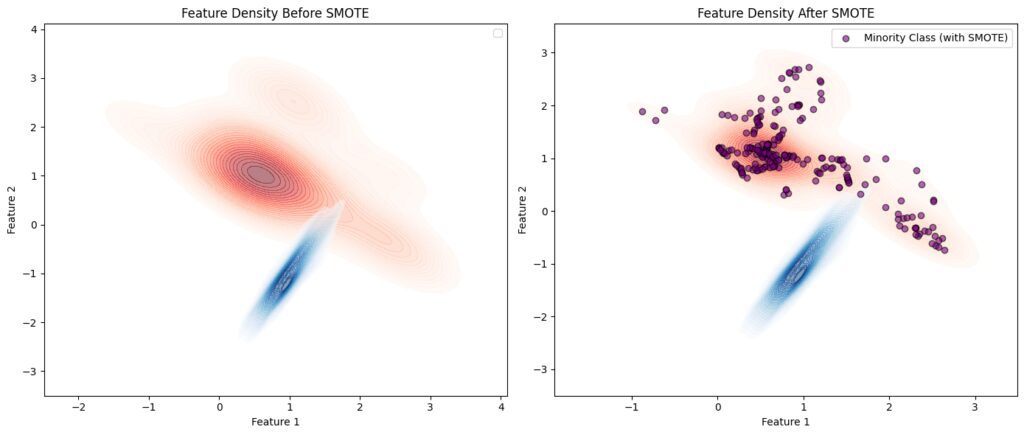

Left Plot: Shows the original data distribution with an imbalanced minority class.

Right Plot: Displays the data distribution after applying SMOTE, where synthetic samples increase the density and boundary similarity of the minority class to the majority.

How SMOTE Impacts Model Interpretability

Complex Models and Interpretability Challenges

When using SMOTE, data becomes less straightforward. Some models, like decision trees and logistic regression, may become harder to interpret due to the added complexity of synthetic data. With SMOTE, boundaries between classes can become fuzzier, making the feature importance less clear.

For example, decision trees might develop more complex rules to account for these synthetic points, making the tree deeper or the feature importance less intuitive.

Higher-Dimensional Spaces and Interpretability

SMOTE relies on k-nearest neighbors (k-NN) to pick the points it interpolates between, and that step becomes less dependable in high-dimensional data. As the number of dimensions grows, distances between points tend to become more alike, so the “nearest” neighbors are barely closer than any other point. This is one face of the “curse of dimensionality”. Synthetic samples built from such neighbors may not align with real-world observations, which makes relationships between variables harder to interpret.
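The effect of dimensionality on nearest-neighbor search can be illustrated with a small experiment. The sketch below uses uniformly random points (an assumption chosen for simplicity, not real data) and measures the relative contrast between the farthest and nearest point from a query. The helper name `relative_contrast` is hypothetical.

```python
import numpy as np


def relative_contrast(n_points, n_dims, seed=0):
    """Return (max - min) / min of the distances from one query point to the rest."""
    rng = np.random.default_rng(seed)
    X = rng.random((n_points, n_dims))  # uniform points in the unit hypercube
    d = np.linalg.norm(X[1:] - X[0], axis=1)  # distances from the first point
    return (d.max() - d.min()) / d.min()


contrasts = {dims: relative_contrast(500, dims) for dims in (2, 20, 200, 2000)}
for dims, contrast in contrasts.items():
    print(f"{dims:>5} dims: relative contrast = {contrast:.2f}")
```

A shrinking contrast means the k neighbors SMOTE selects are hardly more similar to the base point than the rest of the minority class, so interpolating between them is less likely to produce a plausible sample.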

KDE Plot (Kernel Density Estimate): Used to estimate the feature density for both classes.

Before SMOTE: The minority class has sparse regions, highlighted by lighter red concentrations.

After SMOTE: SMOTE fills these sparse regions with synthetic samples, increasing the minority class’s density where it was previously sparse.

Effects of SMOTE on Explainability of Model Predictions

Difficulty in Explaining Predictions with Synthetic Data

One of the key challenges is that explainability methods, like LIME or SHAP, rely on approximating how different features contribute to a model’s predictions. When SMOTE is applied, these interpretability tools may need to work with synthetic data that doesn’t represent actual, observed instances. This can make explanation artifacts less reliable.

For instance, LIME (Local Interpretable Model-agnostic Explanations) samples instances around a given point to understand its contribution to predictions. If the data point is synthetic, LIME might produce explanations that don’t reflect the original data distribution, potentially confusing stakeholders.

Model Transparency and Stakeholder Trust

Stakeholders are often interested in how models arrive at their predictions, especially in fields like healthcare, finance, or law. When synthetic data influences model predictions, explaining specific decisions can be challenging. In certain fields, regulators may scrutinize models that use synthetic data more heavily. Ensuring transparency while using SMOTE can be critical but difficult when synthetic examples drive some of the model’s behavior.

Mitigating Interpretability Challenges with SMOTE

Balancing Synthetic and Real Data in Training

One strategy is to limit SMOTE usage, balancing the number of synthetic samples with real samples. This helps retain some natural class distinctions, preventing the synthetic data from overwhelming real data points. For instance, instead of generating synthetic samples to completely balance classes, we might create only enough samples to reach a predefined threshold, like a 2:1 class ratio.

Employing Alternative Sampling Methods

Techniques like ADASYN (Adaptive Synthetic Sampling) generate more synthetic samples around minority points that are harder to learn, meaning those with many majority-class neighbors. This concentrates the synthetic data near the decision boundary rather than spreading it evenly, which makes ADASYN a useful option to compare against SMOTE when interpretability is key.

Interpretable Model Alternatives to Using SMOTE

Leveraging Cost-Sensitive Models

An alternative to applying SMOTE is to use cost-sensitive models that penalize misclassifying the minority class more heavily. Instead of synthetically balancing the dataset, these models prioritize correctly identifying minority instances by adjusting the decision boundary or increasing the cost associated with minority class errors.

For instance, in fraud detection, assigning a higher penalty to incorrectly classifying fraudulent transactions can prompt the model to identify fraud without oversampling. This way, the original data structure is preserved, and the interpretability remains more natural, as the model is simply more responsive to the minority class without introducing synthetic points.

Using Explainable Algorithms for Imbalanced Data

Some inherently interpretable models like decision trees, rule-based models, or logistic regression with regularization can handle imbalanced data with greater transparency. When paired with threshold adjustments or class weighting, these models can still perform well without SMOTE.

For example, logistic regression can apply class weights to counterbalance imbalances without altering the data itself. This preserves interpretability, as the model’s decisions are still based on the original data distribution, making it easier to explain feature importance and individual predictions.
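As a rough illustration of class weighting and threshold adjustment, the sketch below fits scikit-learn’s `LogisticRegression` with and without `class_weight="balanced"` and also lowers the decision threshold on the unweighted model. The synthetic dataset and the 0.2 threshold are assumptions chosen for the example.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Stand-in imbalanced data; no resampling is applied anywhere below.
X, y = make_classification(
    n_samples=4000, n_features=6, n_informative=4, n_redundant=0,
    weights=[0.95, 0.05], random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

plain = LogisticRegression(max_iter=1000).fit(X_train, y_train)
# "balanced" reweights errors inversely to class frequency.
weighted = LogisticRegression(class_weight="balanced", max_iter=1000).fit(
    X_train, y_train
)

recall_plain = recall_score(y_test, plain.predict(X_test))
recall_weighted = recall_score(y_test, weighted.predict(X_test))

# Threshold adjustment: flag the minority class at a lower probability cutoff.
proba = plain.predict_proba(X_test)[:, 1]
recall_low_threshold = recall_score(y_test, (proba >= 0.2).astype(int))

print(f"recall, unweighted:         {recall_plain:.2f}")
print(f"recall, class_weight:       {recall_weighted:.2f}")
print(f"recall, threshold at 0.2:   {recall_low_threshold:.2f}")
```

Both models are trained only on observed rows, so their coefficients can be explained against the original data.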

Analyzing SMOTE’s Impact on Feature Importance

Inflated Feature Importance Due to Synthetic Data

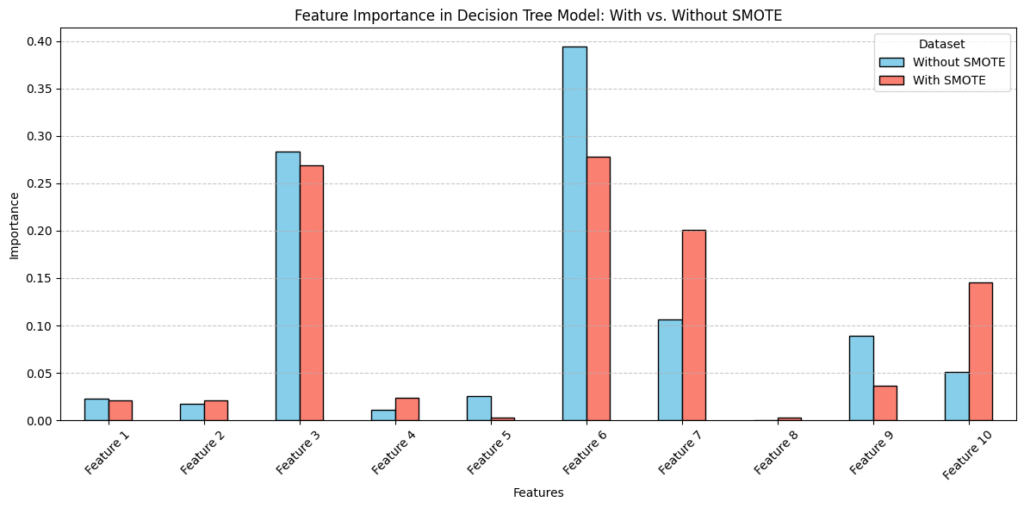

One significant concern with SMOTE is that feature importance metrics may become skewed. With synthetic samples added, certain features might appear more or less important than they truly are. This is particularly relevant for tree-based algorithms like random forests, which can misrepresent the influence of features as they adapt to synthetic data points.

For example, if SMOTE disproportionately generates synthetic samples around particular feature values, those features may gain undue importance. This inflation can distort feature importance results, potentially leading to incorrect interpretations.

Data Generation: Creates an imbalanced dataset.

Decision Tree Training: Trains models on the original and SMOTE-augmented data.

Feature Importance Comparison: Visualizes the impact of SMOTE on feature importance with a side-by-side bar chart.

Mitigating Feature Importance Skew with Post-SMOTE Adjustments

To reduce this issue, analysts can retrain the model on the real data alone and compare its feature importances with those of the SMOTE-trained model. Large shifts point to features whose importance depends on the synthetic samples. Alternatively, model-agnostic techniques such as permutation importance or SHAP can be computed on held-out real data, so the scores reflect how the model behaves on observed instances rather than on synthetic ones.

Another approach is to use cross-validation carefully, splitting data before applying SMOTE so synthetic samples don’t leak across folds. This can give more reliable feature importance scores and help identify features truly critical to the model’s performance.

How SMOTE Impacts Global and Local Explainability Tools

Challenges in Explaining Black-Box Models with SMOTE

Global explainability tools like SHAP (SHapley Additive exPlanations) or PDPs (Partial Dependence Plots) may encounter challenges in explaining models trained with SMOTE. Since SMOTE can blend data points at class boundaries, it might obscure the global patterns that these tools reveal. This makes interpreting global feature effects less intuitive because synthetic points can mask the true relationships between variables and target classes.

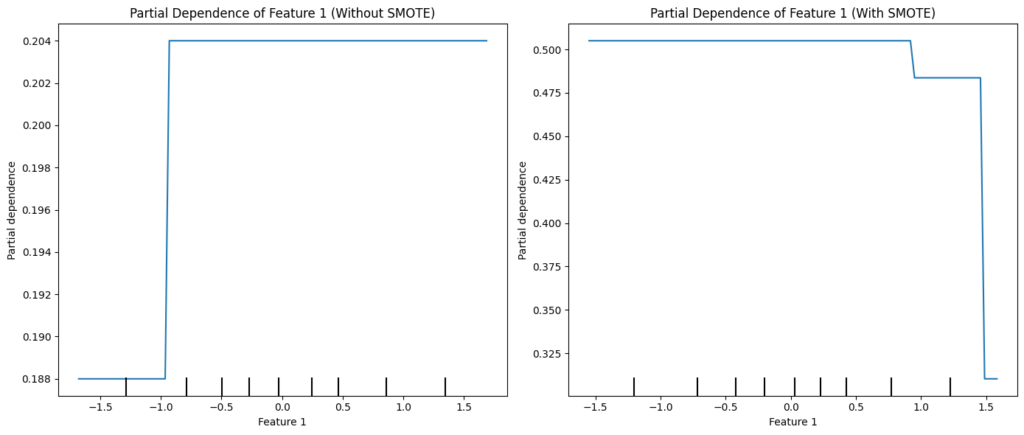

For instance, a partial dependence plot might show that a certain feature is highly predictive for a minority class, but this could be an artifact from SMOTE’s synthetic points rather than a genuine trend in the data.

The left PDP (without SMOTE) shows the original feature’s effect on predictions.

The right PDP (with SMOTE) displays the potential shifts in the feature’s importance and impact due to synthetic samples, which can alter interpretation of feature significance.

Addressing Local Interpretability with Counterfactual Explanations

For local interpretability, where individual predictions are explained, counterfactual explanations can offer a workaround. Counterfactuals describe minimal changes required for a model to alter its prediction. By assessing how features change for real samples rather than synthetic ones, counterfactuals can help maintain explainability even when SMOTE is applied.

For instance, if a model predicts fraud for a given transaction, a counterfactual approach could show how slightly altering transaction amount or frequency could result in a different prediction, bypassing reliance on synthetic samples.
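A very simple counterfactual search can be sketched as a grid walk along a single feature of a real sample. The function `single_feature_counterfactual` below is a hypothetical helper, and the data and model are stand-ins. Dedicated counterfactual libraries search over several features at once and add plausibility constraints that this sketch omits.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def single_feature_counterfactual(model, x, feature, step, max_steps=1000):
    """Smallest grid change to one feature that flips the prediction, or None."""
    original = model.predict(x.reshape(1, -1))[0]
    for n in range(1, max_steps + 1):
        for direction in (+1, -1):
            candidate = x.copy()
            candidate[feature] += direction * n * step
            if model.predict(candidate.reshape(1, -1))[0] != original:
                return direction * n * step
    return None


X, y = make_classification(
    n_samples=2000, n_features=4, n_informative=3, n_redundant=0,
    weights=[0.9, 0.1], random_state=0,
)
model = LogisticRegression(class_weight="balanced", max_iter=1000).fit(X, y)

# Start from a real (observed) sample that the model assigns to the minority class.
idx = np.flatnonzero(model.predict(X) == 1)[0]
x = X[idx].copy()

# Walk along the feature with the largest coefficient, in small steps.
feature = int(np.argmax(np.abs(model.coef_[0])))
step = 0.05 * X[:, feature].std()
delta = single_feature_counterfactual(model, x, feature, step)
print(f"changing feature {feature} by {delta} flips the prediction")
```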

Practical Steps to Balance SMOTE with Interpretability

Evaluating SMOTE’s Necessity in Model Pipeline

Before applying SMOTE, carefully consider if alternative strategies or smaller degrees of oversampling could achieve similar benefits without compromising interpretability. By limiting SMOTE to high-risk cases or using milder versions of oversampling, the model can often retain better transparency.

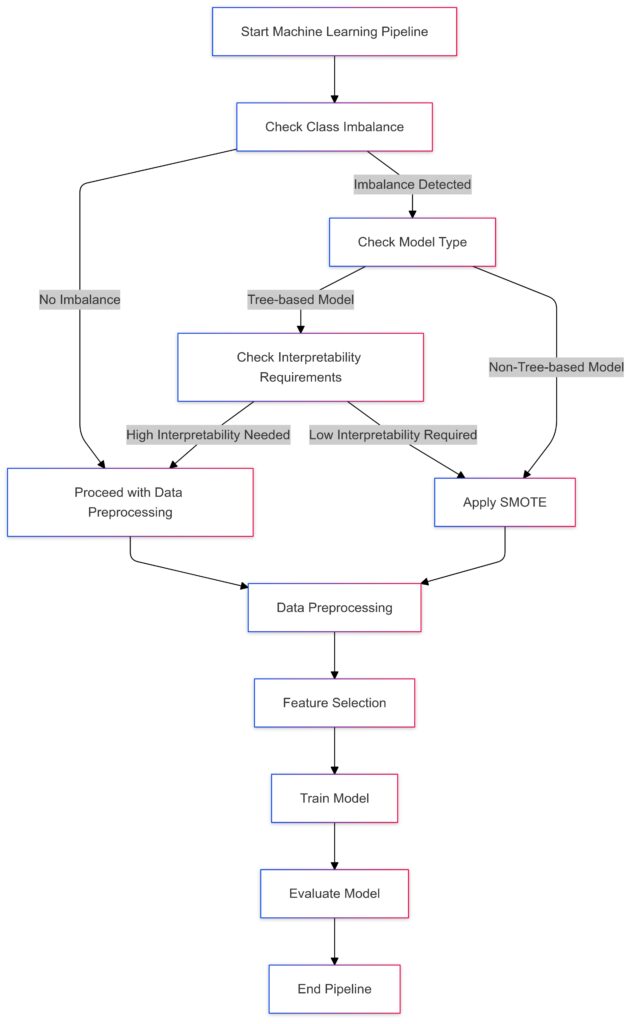

Check Class Imbalance: Determines if the dataset is imbalanced.

Model Type: If the model is tree-based, consider interpretability requirements first. If it is not tree-based, or interpretability needs are low, apply SMOTE.

Pipeline Continuation: Proceeds with data preprocessing, feature selection, model training, and evaluation.

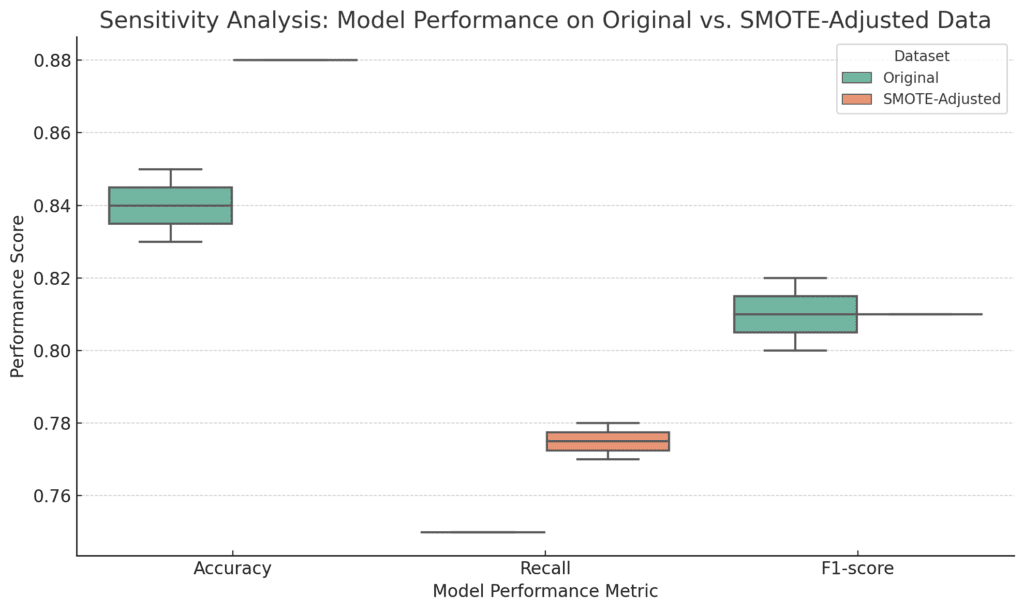

Conducting Sensitivity Analysis Post-SMOTE

After training a model with SMOTE, conducting a sensitivity analysis can help understand how much synthetic data affects predictions and explanations. Testing the model on subsets of the original (non-synthetic) data and comparing explanations can reveal whether SMOTE has altered feature importance or decision boundaries in unintended ways.

This can also involve assessing how much SMOTE-driven explanations align with domain knowledge, ensuring that they still make logical sense for stakeholders and use cases.

Variation in Metrics: Each metric shows performance distribution for both datasets, helping visualize the impact of SMOTE.

Insight on Synthetic Data: Differences in metric scores indicate sensitivity to synthetic data, showing potential improvements in Recall and F1-score with SMOTE.

These strategies can help maximize model performance with SMOTE while preserving interpretability. However, data scientists should weigh the benefits and trade-offs of SMOTE carefully, especially for sensitive applications where model transparency is essential. By being mindful of these impacts, SMOTE can be used in a balanced way, allowing for more robust models without losing interpretability or explainability.

FAQs

What are alternatives to SMOTE for handling imbalanced data?

Alternatives include cost-sensitive learning, which penalizes misclassifications of the minority class more heavily, and threshold adjustments to prioritize accurate minority class identification. You can also use sampling techniques that focus on minority samples likely to improve model performance, like ADASYN.

How can I evaluate if SMOTE is necessary in my model?

You can evaluate SMOTE’s necessity by analyzing performance metrics without SMOTE and only using it if there’s a marked improvement in minority class recall, F1-score, or overall balanced accuracy. Consider model type and interpretability requirements before applying SMOTE, as it’s not always the ideal choice.

Does SMOTE affect global vs. local explainability differently?

Yes, SMOTE can impact global explainability by altering class boundaries in the dataset, potentially masking or exaggerating feature effects across the entire model. Local explainability, where individual predictions are explained, might also be affected since SMOTE samples may not represent real-world instances, making local explanations less reliable.

Is there a way to use SMOTE while retaining interpretability?

Yes, you can limit the amount of synthetic data created or apply SMOTE only to high-risk cases. Additionally, using inherently interpretable models with SMOTE can help keep explanations more aligned with real-world data, balancing interpretability and performance.

How does SMOTE interact with high-dimensional data?

In high-dimensional spaces, SMOTE can face challenges due to the curse of dimensionality. When synthetic samples are created in many dimensions, they may not accurately represent realistic data points, leading to sparse or inconsistent patterns in the minority class. This can distort model training and make interpretability more complex, as patterns in high-dimensional space are often harder to interpret even without SMOTE.

Can SMOTE lead to overfitting in models?

Yes, SMOTE can contribute to overfitting, especially if a high number of synthetic samples are created in an already small dataset. By adding synthetic data points that closely resemble existing minority samples, models may learn from repeated patterns rather than generalizable features. Overfitting is particularly a risk in models that tend to memorize individual data points, like decision trees.

What kinds of models work best with SMOTE for balanced performance and interpretability?

Tree-based algorithms, like decision trees and random forests, often perform well with SMOTE since they can use synthetic samples to develop better minority class representations. However, interpretable linear models (e.g., logistic regression with class weighting) may sometimes be preferable to SMOTE for interpretability. The model choice depends on whether balanced accuracy or transparency is the primary goal.

How can I mitigate interpretability issues when using SMOTE?

To maintain interpretability with SMOTE, consider balancing the quantity of synthetic data with real data and applying SMOTE selectively, such as only for classes with extreme imbalances. You can also test feature importance and model explanations on both SMOTE-adjusted and original datasets to compare consistency and adjust the approach accordingly.

Is it possible to identify when SMOTE is creating misleading patterns?

Yes, performing sensitivity analyses or cross-validation checks on synthetic vs. real data can help identify if SMOTE is adding unrealistic patterns. If model metrics significantly differ between SMOTE-adjusted and original data, this may indicate that SMOTE is introducing artifacts, and it might be worth testing alternative oversampling techniques.

Does SMOTE affect interpretability for neural networks?

While neural networks tend to rely on many complex feature interactions, SMOTE can still impact interpretability by altering class boundaries. However, in complex networks where direct interpretability is already challenging, SMOTE’s impact may be less noticeable. Tools like SHAP or Integrated Gradients can help reveal if the synthetic data is affecting certain feature interactions disproportionately.

How does SMOTE influence model trustworthiness and stakeholder confidence?

SMOTE can sometimes reduce trustworthiness because synthetic samples may not represent real-world observations, raising questions about the reliability of model predictions. For applications requiring high transparency, such as finance or healthcare, extensive validation and explainability analysis are essential to ensure that SMOTE doesn’t reduce stakeholder confidence by introducing ambiguous or artificial patterns.

Resources

Articles and Guides

- Imbalanced-Learn Documentation

The Imbalanced-Learn library documentation provides comprehensive guides on SMOTE and other resampling techniques for imbalanced data. This is a good resource for understanding how SMOTE is implemented and how it interacts with different machine learning algorithms.

Read more at Imbalanced-Learn documentation

- “A Survey of Classification Techniques in Data Mining”

This paper offers insights into various data-balancing techniques, including SMOTE, and discusses their effectiveness across different classification models. Useful for understanding the theoretical foundation of SMOTE and its practical limitations.

Read the paper on ResearchGate

- SMOTE: Synthetic Minority Over-sampling Technique

The original SMOTE research paper by Chawla et al. (2002) introduces the technique, explains its mechanics, and demonstrates its impact on model performance. An essential resource for those looking to understand SMOTE in-depth.

Read on SpringerLink

Tutorials and Code Examples

- Machine Learning Mastery: Handling Imbalanced Data with SMOTE

This tutorial provides practical examples of applying SMOTE in Python with Imbalanced-Learn, covering setup, implementation, and how SMOTE affects model performance. Great for hands-on understanding.

Visit the Machine Learning Mastery tutorial

- KDnuggets: When to Use SMOTE or Undersampling in Imbalanced Datasets

This article explains when SMOTE is appropriate versus other methods, such as undersampling, and covers the advantages and drawbacks for model interpretability.

Read on KDnuggets