Understanding Fine-Tuning and Prompt Engineering

What Is Fine-Tuning?

Fine-tuning involves further training a pre-trained AI model on additional, domain-specific data to adapt it for specialized tasks. This method requires a custom dataset and computational resources.

Fine-tuned models excel in niche applications like legal document analysis or medical diagnostics.

However, the process is resource-intensive. You’ll need expertise in machine learning, labeled data, and time for iterations. When done right, the results are deeply customized models that outperform generic AI for your needs.
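
To make the "labeled data" requirement concrete, here is a minimal sketch of preparing training examples as JSON Lines, a format many fine-tuning tools accept. The field names `prompt` and `completion` and the sample clauses are assumptions for illustration; check the schema your training tool actually expects.

```python
import json

# Hypothetical labeled examples; in practice these come from your own domain data.
examples = [
    {"prompt": "Classify the clause: 'Either party may terminate with 30 days notice.'",
     "completion": "termination"},
    {"prompt": "Classify the clause: 'The supplier shall indemnify the buyer against all claims.'",
     "completion": "indemnification"},
]

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)

def validate(records):
    """Return the indices of records with a missing or empty field."""
    bad = []
    for i, r in enumerate(records):
        if not r.get("prompt", "").strip() or not r.get("completion", "").strip():
            bad.append(i)
    return bad

jsonl_text = to_jsonl(examples)
print(jsonl_text)
print("invalid records:", validate(examples))
```

Validating before training is cheap insurance: a handful of empty labels can quietly degrade a small dataset.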

What Is Prompt Engineering?

Prompt engineering optimizes the inputs you give to an AI to maximize performance without altering the model itself. It’s like crafting the perfect question to get the answer you need. By tweaking wording, structure, or providing examples, you can improve results without training or coding.

This method is fast and cost-effective, making it ideal for general use cases or exploratory tasks. However, output quality depends on the model’s inherent abilities, so you are limited to what the model can already do.

When to Choose Fine-Tuning

For Highly Specialized Use Cases

Fine-tuning is your go-to when your application demands specificity. For example, if you need a model to detect fraud patterns in financial data, a fine-tuned AI can learn those intricate details from your dataset.

In these cases, the trade-offs of time and resources are worthwhile because the model is tailored to your problem, improving both accuracy and consistency.

When Scalability Matters

If you’re deploying AI across a large enterprise, automation and reproducibility become critical. Because the desired behavior is trained into the model rather than restated in every prompt, fine-tuned models tend to give more consistent results across teams and workflows.

For instance, chatbots in customer support often rely on fine-tuned models to handle diverse, industry-specific queries effectively.

When Long-Term Control Is Needed

Fine-tuning a model that you host yourself gives you ownership of the result. This is crucial if your industry demands control over data or if you want to reduce reliance on external providers.

For industries like healthcare or finance, where data sensitivity is a concern, keeping the training data and the fine-tuned model inside your own infrastructure makes compliance and security requirements easier to meet.

When to Opt for Prompt Engineering

For Quick Prototyping

If you need immediate results or are testing ideas, prompt engineering is the way to go. You can quickly craft questions and adjust responses without lengthy training cycles.

For example, startups exploring AI for market research or content creation can use prompt engineering to test use cases before committing to fine-tuning.

To Save on Costs and Resources

Prompt engineering is ideal if you lack the budget or expertise for fine-tuning. Instead of creating a new dataset, you’re leveraging the existing knowledge of a pre-trained model with minimal effort.

For example, using prompt techniques to generate summaries, create email templates, or draft reports saves significant time and money.

When Flexibility Is Key

Prompt engineering allows you to adapt on the fly. Need a different tone or style? Just tweak the wording. This flexibility makes it an excellent choice for content-related tasks or non-critical workflows where perfection isn’t mandatory.

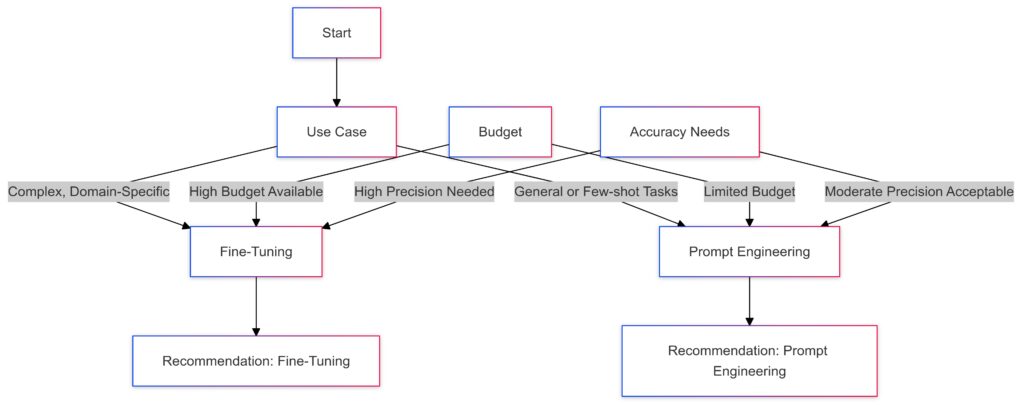

Key Differences Between Fine-Tuning and Prompt Engineering

Use Case:

- Complex, domain-specific tasks: fine-tuning, for customized solutions.

- General or few-shot tasks: prompt engineering, for versatility.

Budget:

- High budget available: fine-tuning, which requires computational resources and expertise.

- Limited budget: prompt engineering, which is cost-effective.

Accuracy Needs:

- High precision needed: fine-tuning, for exacting standards.

- Moderate precision acceptable: prompt engineering, for adequate flexibility.

How Fine-Tuning and Prompt Engineering Work Together

Bridging the Gap Between Customization and Flexibility

Fine-tuning and prompt engineering don’t have to be opposing approaches. In fact, combining them can often yield the best results. Fine-tune a model for its core task, then use prompt engineering to adjust the behavior or output for specific scenarios.

For instance, a fine-tuned model trained on legal contracts might excel at identifying clauses. However, by carefully crafting prompts, you can direct it to highlight only termination clauses or flag ambiguous terms in a user-friendly manner.

Building Efficiency in Iterative Development

For projects where you’re still defining the requirements, start with prompt engineering. Once patterns emerge—such as repeated types of queries or consistent shortcomings—shift toward fine-tuning. This ensures you’re investing in fine-tuning only when you have a clear picture of what’s needed.

This approach works especially well in customer service bots. Begin with general models and prompts. Over time, gather data on common customer issues to fine-tune the model for greater efficiency.
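
One rough way to spot the "patterns" mentioned above is to tally logged queries by category and flag the ones that are both frequent and poorly served by prompting alone. The log format, category names, and thresholds below are hypothetical; treat this as a sketch, not a rule.

```python
from collections import Counter

# Hypothetical log: (query category, whether the prompted model's answer was acceptable).
query_log = [
    ("billing", True), ("billing", False), ("billing", False),
    ("shipping", True), ("shipping", True),
    ("returns", False), ("billing", False),
]

def fine_tuning_candidates(log, min_count=3, max_success_rate=0.5):
    """Return (category, count, success rate) for frequent, poorly answered categories."""
    totals = Counter(category for category, _ in log)
    successes = Counter(category for category, ok in log if ok)
    candidates = []
    for category, total in totals.items():
        rate = successes[category] / total
        if total >= min_count and rate <= max_success_rate:
            candidates.append((category, total, rate))
    # Most frequent categories first.
    return sorted(candidates, key=lambda c: c[1], reverse=True)

print(fine_tuning_candidates(query_log))
```

Here only "billing" qualifies: it appears four times and was answered acceptably once. Categories that are rare or already well served stay with prompt engineering.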

Enhancing User Experiences with Personalization

While fine-tuning establishes a robust base, prompt engineering allows you to tweak interactions dynamically. This combination is particularly valuable for AI tools in education, entertainment, or content personalization.

For example, an educational app could use a fine-tuned model for accurate subject knowledge while relying on prompt engineering to adjust tone—encouraging for young learners and more technical for advanced users.
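
The tone switch described here can be sketched as a small prompt template. The audience keys and the instruction wording are made up for illustration; the resulting string would be sent to whichever model (fine-tuned or not) your app uses.

```python
# Hypothetical tone instructions keyed by audience; the wording is illustrative only.
TONE_BY_AUDIENCE = {
    "young_learner": "Use short sentences, everyday words, and an encouraging tone.",
    "advanced": "Use precise technical vocabulary and assume prior knowledge.",
}

def build_prompt(question, audience):
    """Prepend an audience-specific tone instruction to the learner's question."""
    if audience not in TONE_BY_AUDIENCE:
        raise ValueError(f"unknown audience: {audience!r}")
    tone = TONE_BY_AUDIENCE[audience]
    return f"{tone}\n\nQuestion: {question}\nAnswer:"

print(build_prompt("Why is the sky blue?", "young_learner"))
```

Keeping the tone instructions in one table means you can adjust the style for an audience without touching the model or the rest of the app.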

Potential Challenges with Both Approaches

Overfitting Risks in Fine-Tuning

Fine-tuning involves the risk of overfitting, where the model becomes too focused on the training data, losing its general applicability. For instance, a model fine-tuned on a specific legal database may fail when encountering new legal jargon or jurisdictions.

To mitigate this, always ensure diverse, high-quality datasets during fine-tuning. Regularly test the model on real-world examples outside the training set.
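
A simple way to act on this advice is to hold back part of your data and compare accuracy on the training set with accuracy on the held-out set. The sketch below uses a deliberately bad "model" that only memorizes its training examples; the data, the 20% split, and the function names are assumptions for illustration.

```python
import random

def split_holdout(examples, holdout_fraction=0.2, seed=0):
    """Shuffle and split examples into (train, holdout) lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]

def accuracy(predict, examples):
    """Fraction of (input, label) pairs that predict() labels correctly."""
    if not examples:
        raise ValueError("no examples to score")
    return sum(1 for x, y in examples if predict(x) == y) / len(examples)

def generalization_gap(predict, train, holdout):
    """Train accuracy minus held-out accuracy; a large positive gap suggests overfitting."""
    return accuracy(predict, train) - accuracy(predict, holdout)

# Hypothetical data and a stand-in model that memorizes its training set.
data = [(f"clause {i}", "termination" if i % 2 else "payment") for i in range(10)]
train, holdout = split_holdout(data)
memory = dict(train)

def memorizer(text):
    return memory.get(text, "unknown")

print(generalization_gap(memorizer, train, holdout))  # prints 1.0
```

The memorizer is perfect on data it has seen and useless on anything else, so the gap is as large as it can be. A real fine-tuned model will fall somewhere in between, and tracking this gap over training runs shows whether it is drifting toward memorization.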

Dependence on Human Skill in Prompt Engineering

Prompt engineering relies heavily on the user’s ability to craft effective inputs. Poorly worded or ambiguous prompts can lead to subpar outputs. This makes it less reliable in situations requiring high precision.

One way to overcome this is few-shot prompting, where you include a few worked examples in the prompt to show the AI the pattern you expect.
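
Including examples in the prompt can be as simple as string assembly. This sketch builds such a prompt from an instruction, a few labeled examples, and the new input; the `Input:`/`Output:` layout and the sample messages are assumptions, and other layouts work too.

```python
def few_shot_prompt(instruction, shots, query):
    """Build a prompt from an instruction, worked examples, and the new input."""
    parts = [instruction.strip(), ""]
    for text, label in shots:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    # Leave the final output blank for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)

# Hypothetical labeled examples for a sentiment task.
shots = [
    ("The package arrived two weeks late.", "negative"),
    ("Support resolved my issue in minutes.", "positive"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each customer message as positive or negative.",
    shots,
    "The item was damaged.",
)
print(prompt)
```

Because the examples show the expected output format, the model has less room to misread a loosely worded instruction.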

Resource and Maintenance Costs in Both

Fine-tuning incurs upfront costs for data preparation and training, while prompt engineering may require constant monitoring and adjustments. Balancing the two methods ensures you’re not overspending in one area while neglecting another.

Tools and Techniques to Optimize Both

Tools for Fine-Tuning

- Hugging Face Transformers: Offers tools for training and deploying fine-tuned models with ease.

- Google Cloud AI Platform: Provides resources for fine-tuning at scale.

- OpenAI’s API Fine-Tuning: Allows customization of GPT models with proprietary data.

Each tool supports transfer learning, which reduces the data and time required by leveraging pre-trained capabilities.

Prompt Engineering Frameworks

- LangChain: Simplifies designing prompts for complex workflows, integrating them with multiple models.

- Prompt Layer: Tracks the performance of prompts across tasks to identify the most effective ones.

- AI21 Studio: Offers a playground for real-time experimentation with prompts.

Using these frameworks ensures consistency and helps you refine inputs over time.

Tools and Platforms for Fine-Tuning and Prompt Engineering

Fine-Tuning Tools: Hugging Face, OpenAI API, Google Vertex AI. Output: customized models tailored to specific requirements.

Prompt Engineering Tools: LangChain, Prompt Layer, AI21 Studio. Output: optimized inputs for better performance without modifying the underlying model.

Examples and Use Cases for Fine-Tuning and Prompt Engineering

Fine-Tuning in Action

Industry-Specific Chatbots

Imagine a healthcare chatbot designed to assist patients with symptoms and scheduling appointments. Fine-tuning the model on healthcare data that is handled in line with HIPAA helps the bot understand medical terminology, patient concerns, and regulations specific to healthcare.

A fine-tuned model here reduces ambiguity, providing precise answers like, “A fever of 102°F may warrant a doctor’s visit,” instead of general advice. The investment pays off in trustworthiness and functionality, which is essential in regulated industries.

Fraud Detection Models

In banking and finance, fraud detection systems require an acute understanding of patterns in large transaction datasets. Fine-tuning can train the AI to identify subtle anomalies, like unusually frequent transactions or deviations from spending habits, that generic models might miss.

The outcome? A solution that balances sensitivity (catching fraud) with specificity (avoiding false positives), tailored to a company’s unique operations.
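
Sensitivity and specificity are easy to compute once you have true labels and model predictions. The sketch below uses a tiny made-up set of eight transactions (1 = fraud, 0 = legitimate); the data is purely illustrative.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels where 1 means fraud."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # fraud caught
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fraud missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # legitimate, left alone
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legitimate, wrongly flagged
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one fraud and one legitimate example")
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical labels and predictions for eight transactions.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]

sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f} specificity={spec:.2f}")  # sensitivity=0.67 specificity=0.80
```

Here the model catches two of three fraud cases and wrongly flags one of five legitimate transactions. Which of the two numbers matters more depends on the cost of a missed fraud versus a blocked customer.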

Prompt Engineering in Action

Creative Writing Assistance

For tasks like storytelling or content generation, prompt engineering shines. By carefully framing a prompt such as, “Write a suspenseful paragraph introducing a mysterious character in a forest,” the model produces contextually rich outputs without needing additional training.

This flexibility is crucial for creative fields, where adaptability and tone shifts are frequent.

Customer Support with Context-Specific Responses

In customer service, you might use a generic AI model to answer FAQs. Through prompt engineering, you can ensure the model generates polite and empathetic responses, like:

- Prompt: “Respond to a customer who received the wrong product with an apology and a promise to replace it.”

- Output: “We deeply regret this mistake and will send the correct item immediately. Thank you for your understanding.”

This method saves time while maintaining a professional tone.

Metrics for Choosing Between Fine-Tuning and Prompt Engineering

Performance Goals

- If your task demands higher accuracy and task-specific behavior, fine-tuning is the clear winner. Use it when the stakes are high, like in clinical diagnostics or compliance workflows.

- When speed and generality suffice, such as for drafting social media captions or basic summarization, prompt engineering meets the need effectively.

Scalability Considerations

For scalable solutions like integrating AI into a corporate environment, fine-tuning ensures consistency. But if your team regularly updates content or processes, prompt engineering provides the agility to adapt in real time.

Cost and Resource Constraints

Fine-tuning is resource-intensive, requiring technical teams and computational power. If budgets are tight, prompt engineering can still deliver impressive results without the added infrastructure.

Future Trends in AI Customization

Advances in Fine-Tuning

Emerging tools are making low-resource fine-tuning more accessible. Techniques like LoRA (Low-Rank Adaptation) freeze the pre-trained weights and train small low-rank matrices added to selected layers, which sharply reduces the number of trainable parameters and the computational overhead. This opens up opportunities for smaller businesses to explore fine-tuned solutions.
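
To see where LoRA's savings come from, compare parameter counts. Instead of updating a full weight matrix of shape d_out × d_in, LoRA keeps it frozen and learns an update that is the product of two thin matrices, of shapes d_out × r and r × d_in. The 4096 × 4096 layer and rank 8 below are illustrative numbers, not taken from any specific model.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_in * d_out           # updating the whole matrix
    lora = rank * (d_in + d_out)  # two thin matrices: d_out x rank and rank x d_in
    return full, lora

full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(full, lora, lora / full)  # 16777216 65536 0.00390625
```

For this layer, the low-rank update trains about 0.4% of the parameters that full fine-tuning would. The savings apply to trainable parameters and optimizer state; the frozen base model still has to fit in memory.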

Automated Prompt Optimization

Prompt engineering is evolving with tools that use AI to optimize prompts for you. For example, AutoPrompt uses a gradient-guided search over labeled input-output pairs to construct prompts automatically. Approaches like this reduce dependency on human expertise.

Hybrid Solutions for Maximum Efficiency

Expect to see hybrid solutions where fine-tuning handles the specialized groundwork, while prompt engineering adjusts output for contextual nuance. Such systems could revolutionize industries like e-learning, where a base model delivers accurate content, but prompts tailor it to each learner’s style.

Fine-Tuning vs. Prompt Engineering: Making the Right Choice

Choosing between fine-tuning and prompt engineering boils down to your goals, resources, and the complexity of your tasks. Fine-tuning delivers precision and scalability for specialized applications, ensuring long-term efficiency and control. In contrast, prompt engineering thrives on flexibility and quick adaptation, perfect for exploratory or creative tasks.

However, the two aren’t mutually exclusive. By combining a fine-tuned model’s robust foundation with the contextual adaptability of prompt engineering, you can unlock the full potential of AI customization. This hybrid approach balances accuracy, cost-effectiveness, and user-friendly interaction.

Whether you’re building an AI-powered chatbot, streamlining workflows, or crafting compelling content, understanding when and how to apply each method ensures you achieve the best outcomes for your unique needs. By leveraging the right tool for the job, you’re setting the stage for smarter, more tailored AI solutions.

FAQs

How do I combine fine-tuning and prompt engineering?

Use fine-tuning to build a strong foundation and prompt engineering to adapt it to specific contexts. For example:

- A fine-tuned model trained to summarize technical research papers can be adjusted with prompts like, “Summarize this for a non-technical audience.”

- In customer service, fine-tune a model for the company’s FAQs, then use prompts to adapt tone: “Respond politely to a frustrated customer.”

This blend delivers both accuracy and flexibility.

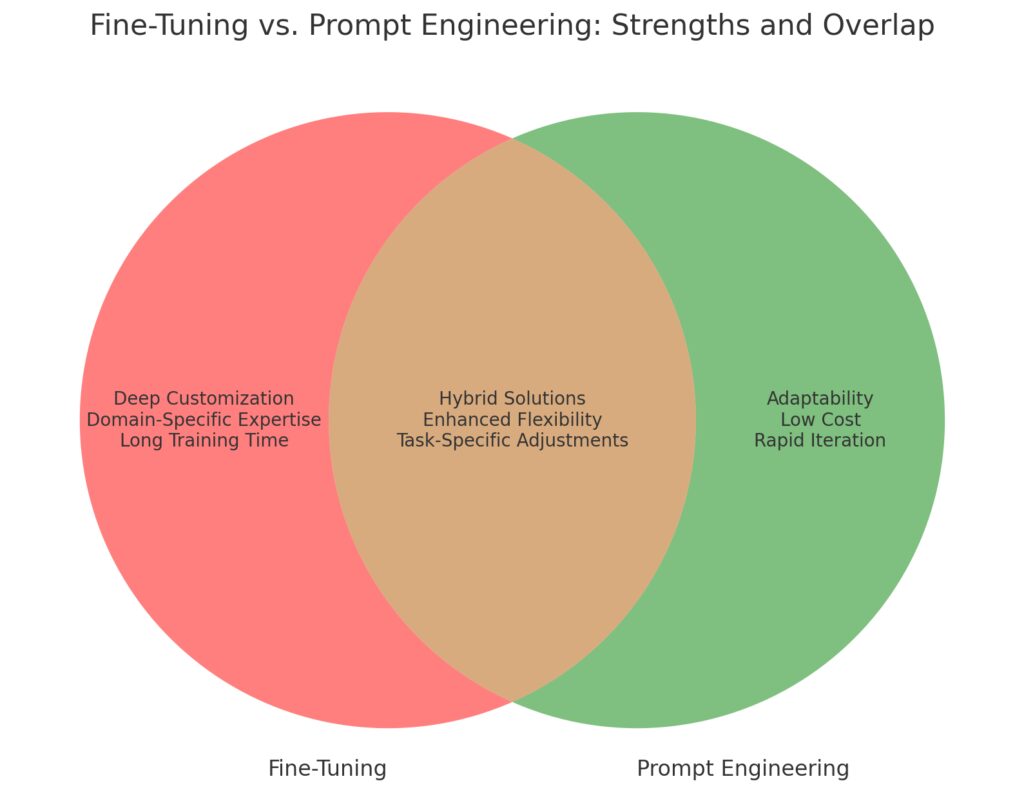

Fine-Tuning: Deep Customization, Domain-Specific Expertise, Long Training Time

Prompt Engineering: Adaptability, Low Cost, Rapid Iteration

Overlap (Hybrid Solutions): Enhanced Flexibility, Task-Specific Adjustments

Are there any cost differences between the two approaches?

Yes, fine-tuning is resource-intensive and involves:

- Preparing datasets.

- Using computational resources for training.

- Hiring experts for implementation.

Prompt engineering is cost-effective, as it only requires creativity and experimentation with input phrasing. For example:

- Fine-tuning: Training a legal model might cost tens of thousands of dollars once data preparation, compute, and expert time are included.

- Prompt engineering: Spending time refining inputs like, “Summarize this contract’s key risks” costs far less and requires no training.

Choose based on your budget and the complexity of your project.

How does fine-tuning enhance model performance?

Fine-tuning improves a model’s accuracy and specialization by training it on domain-specific datasets. This process aligns the model’s outputs with precise needs.

Example Use Cases:

- Healthcare AI: A model fine-tuned with radiology images can detect abnormalities in X-rays better than a general-purpose AI.

- E-commerce: A fine-tuned recommendation engine trained on historical customer purchase data will suggest products tailored to user preferences.

Fine-tuning makes the model better at specific tasks, but it requires time, expertise, and resources.

How can prompt engineering achieve similar results without fine-tuning?

Prompt engineering cleverly structures inputs to extract better responses from a general model. You’re essentially guiding the AI to deliver the answers you want.

Examples:

- Instead of fine-tuning a chatbot for customer queries, you can prompt: “Respond empathetically to a customer saying their package was delayed.”

- For summarizing technical documents, use: “Explain the following in simple terms suitable for a high school student.”

This method saves time and costs but may require trial and error to perfect the prompt.

Can I use fine-tuning and prompt engineering together?

Absolutely! Combining the two creates a robust yet adaptable AI system.

Example Workflow:

- Fine-tune a legal model to understand contract terms, regulatory compliance, and risk analysis.

- Use prompt engineering to adjust outputs dynamically:

- “Focus on clauses related to termination and penalties.”

- “Rewrite this clause in plain English for a client.”

This approach ensures the AI is specialized and can cater to diverse use cases on demand.

How do I know if fine-tuning is worth the cost?

Fine-tuning is worth the investment if:

- High accuracy is critical. For instance, in medical diagnostics or fraud detection, errors can have serious consequences.

- The model will be used frequently. A fine-tuned AI can significantly reduce time and effort in repetitive workflows, justifying the upfront cost.

However, if your use case is temporary or exploratory—such as testing marketing ideas—prompt engineering is more cost-effective.

Example Comparison:

- Fine-tuning: A retail company builds a recommendation model that learns from millions of transactions.

- Prompt engineering: A startup uses prompt tweaks for basic product suggestions without creating a new dataset.
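
A back-of-the-envelope break-even calculation can help with this decision. The sketch assumes a fine-tuned model is cheaper per request than a prompt-engineered one (for instance because its prompts can be shorter), which will not always be true; all dollar figures are hypothetical.

```python
import math

def break_even_requests(upfront_cost, prompt_cost_per_1k, tuned_cost_per_1k):
    """Requests needed to recover fine-tuning's upfront cost, or None if it never pays off.

    Costs per 1,000 requests are in the same currency as upfront_cost.
    """
    saving_per_1k = prompt_cost_per_1k - tuned_cost_per_1k
    if saving_per_1k <= 0:
        return None  # the fine-tuned model is not cheaper per request
    return math.ceil(upfront_cost * 1000 / saving_per_1k)

# Hypothetical: $5,000 upfront, $12 vs. $4 per 1,000 requests.
print(break_even_requests(5000, 12, 4))  # 625000
```

With these numbers, fine-tuning pays for itself after 625,000 requests. A temporary or exploratory project is unlikely to reach that volume, which is why prompt engineering usually wins there.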

What tools or platforms can I use for fine-tuning and prompt engineering?

Fine-Tuning Tools:

- Hugging Face: Provides pre-trained models and tools to fine-tune them with minimal setup.

- OpenAI Fine-Tuning API: Enables custom GPT models with private data for personalization.

- Google Vertex AI: A platform for large-scale fine-tuning projects with built-in data management.

Prompt Engineering Frameworks:

- LangChain: Ideal for chaining multiple prompts and managing workflows.

- Prompt Layer: Tracks and compares the effectiveness of different prompts for a task.

- AI21 Studio Playground: A sandbox for real-time experimentation with model prompts.

These platforms streamline the customization process and improve productivity.

What are the risks of relying solely on fine-tuning or prompt engineering?

Fine-Tuning Risks:

- Overfitting: The model might become too specialized, failing to generalize to new data. Example: A fraud detection AI trained on one bank’s data may miss schemes in another region.

- High Costs: Requires datasets, skilled personnel, and infrastructure, making it unsuitable for small-scale projects.

Prompt Engineering Risks:

- Inconsistency: Outputs depend on how well you frame the prompt. Poorly crafted inputs can lead to inaccurate or irrelevant results.

- Limited Scope: You can only optimize what the pre-trained model already knows. If the base model lacks domain knowledge, even the best prompt won’t help.

Mitigating these risks often involves a hybrid approach, using fine-tuning for foundational knowledge and prompt engineering for contextual adjustments.

How do I measure the success of each method?

Fine-Tuning Success Metrics:

- Accuracy: Does the model provide domain-specific, error-free results?

- Consistency: Are outputs stable and reproducible across similar inputs?

- Efficiency Gains: Does it streamline operations or improve productivity?

Prompt Engineering Success Metrics:

- Prompt Effectiveness: Does tweaking the prompt significantly improve the output?

- Adaptability: Can the model handle various tasks without additional training?

- Speed: How quickly can you craft usable outputs?

Example Metrics in Practice:

- Fine-tuned fraud detection: A 20% drop in false positives.

- Prompt-engineered chatbot: Responses improved from 70% helpful to 90% with prompt refinement.
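
A figure like "70% helpful" implies a repeatable test: run each prompt variant over the same set of messages and score the responses. The sketch below shows that loop with a stand-in model and a crude keyword check so it runs offline; `fake_model`, `mentions_apology`, and both templates are hypothetical, and a real evaluation would call your actual model and use human or rubric-based judgments.

```python
def helpful_rate(template, model, messages, is_helpful):
    """Share of test messages whose model response is judged helpful."""
    if not messages:
        raise ValueError("need at least one test message")
    hits = sum(1 for m in messages if is_helpful(model(template.format(message=m))))
    return hits / len(messages)

# Stand-in for a real model call (hypothetical behavior, for illustration only).
def fake_model(prompt):
    if "empathetic" in prompt.lower():
        return "We are sorry for the trouble and will fix this right away."
    return "Your request has been logged."

# Crude stand-in for a helpfulness judgment.
def mentions_apology(response):
    return "sorry" in response.lower()

messages = ["My package was delayed.", "I received the wrong product."]
baseline = "Reply to this customer: {message}"
refined = "Reply in an empathetic tone to this customer: {message}"

for name, template in [("baseline", baseline), ("refined", refined)]:
    print(name, helpful_rate(template, fake_model, messages, mentions_apology))
```

Keeping the test messages fixed across variants is the important part: it makes the before-and-after numbers comparable.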

Resources

Fine-Tuning Resources

Courses and Tutorials

- Hugging Face Course: Learn to fine-tune transformers with Python and TensorFlow/PyTorch.

- Coursera’s “Custom Models, Layers, and Loss Functions with TensorFlow”: A great starting point for fine-tuning and model customization.

Research Papers

- “Transfer Learning for NLP” by Sebastian Ruder: A foundational work covering fine-tuning concepts and best practices.

- “Fine-Tuning Pretrained Language Models: Weight Initializations, Data Orders, and Early Stopping”: Delves into technical nuances of fine-tuning strategies.

Tools and Platforms

- Hugging Face Transformers: Offers pretrained models ready for fine-tuning.

- OpenAI Fine-Tuning API: Simplifies customizing GPT models with your data.

- Google Vertex AI: Scalable fine-tuning capabilities for large datasets.

Prompt Engineering Resources

Courses and Tutorials

- Prompting 101 by OpenAI: A free guide to crafting prompts for OpenAI models.

- LangChain Tutorials: Walk you through using prompts in multi-step workflows for complex tasks.

Communities and Blogs

- Prompt Engineering GitHub Repository: A curated list of techniques and examples.

- AI21 Studio Blog: Offers tips and insights for effective prompt writing.

Tools and Platforms

- AI21 Studio: Experiment with prompts and track results in a user-friendly playground.

- Prompt Layer: Helps you manage, optimize, and track prompt performance.

- LangChain: A framework to integrate prompts into workflows and build apps around them.