The rapid development of AI models often feels like a race to be the biggest, but is scaling always the right choice? While massive datasets and sprawling architectures have dominated headlines, a growing movement emphasizes a data-centric approach to AI.

Let’s dive into why refining your data, rather than just adding more of it, might be the smarter strategy.

The Myth That Bigger Data Is Better Data

Quality vs. Quantity: Striking the Right Balance

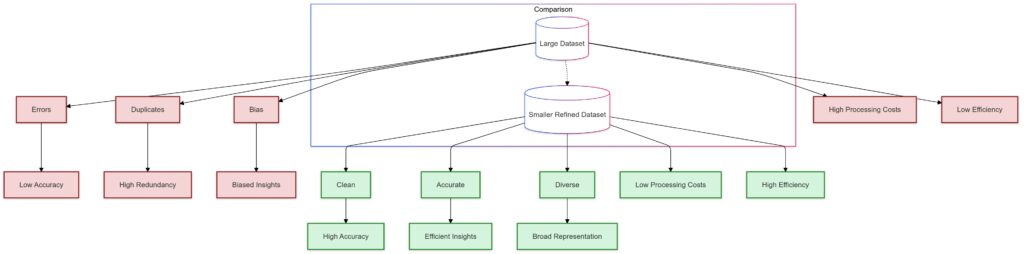

It’s tempting to believe that bigger datasets automatically lead to better results. But the truth? Quality often outweighs quantity. Large datasets can be riddled with duplicates, errors, and irrelevant noise. This extra baggage doesn’t improve models; it muddies them.

By focusing on clean, relevant, and diverse data, organizations create AI systems that are more robust, accurate, and adaptable. Think of it as perfecting a recipe rather than adding random ingredients.

The Hidden Costs of Massive Datasets

Bigger datasets bring storage, computing, and maintenance challenges. Training large models consumes enormous resources, increasing both environmental impact and costs. A smaller, well-curated dataset can be significantly more efficient, and often just as effective or more so.

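Moreover, more data does not automatically mean better generalization. When a large dataset is noisy or full of duplicates, a high-capacity model can memorize the errors and spurious patterns along with the real signal (a form of overfitting), making it less reliable in real-world scenarios.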

Why a Data-Centric Approach Works

Focusing on Data Refinement

A data-centric mindset shifts the focus to improving data quality rather than endlessly tweaking model architecture. Andrew Ng, one of the most prominent advocates of this approach, has argued that much of a model's success depends on the quality of its data. This means:

- Identifying and correcting errors.

- Adding edge cases for better robustness.

- Balancing datasets to reduce bias.
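The first of these steps can start with a few lines of pandas. The sketch below is a minimal illustration, assuming a labeled table with one or more input columns; the helper name `find_label_issues` and the ticket data are hypothetical. It flags exact duplicates, identical inputs that carry conflicting labels, and the share of each class.

```python
import pandas as pd

def find_label_issues(df: pd.DataFrame, feature_cols: list, label_col: str) -> dict:
    """Flag exact duplicates, conflicting labels, and class imbalance in a labeled table."""
    # Exact duplicates (same features and same label) add weight without adding information.
    duplicates = df[df.duplicated(subset=feature_cols + [label_col], keep="first")]
    # Identical inputs carrying different labels usually point to an annotation error.
    labels_per_input = df.groupby(feature_cols)[label_col].transform("nunique")
    conflicts = df[labels_per_input > 1]
    # Share of each class, to spot imbalance before training.
    class_share = df[label_col].value_counts(normalize=True)
    return {"duplicates": duplicates, "conflicts": conflicts, "class_share": class_share}

# Hypothetical support-ticket data, for illustration only.
tickets = pd.DataFrame({
    "text": ["refund please", "refund please", "great product", "great product", "late delivery"],
    "label": ["complaint", "complaint", "praise", "complaint", "complaint"],
})
label_report = find_label_issues(tickets, ["text"], "label")
```

Here the second "refund please" row is a duplicate and the two "great product" rows conflict. Whether to drop, relabel, or escalate them is a judgment call best left to someone who knows the domain.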

The Power of Human Feedback

Human-in-the-loop AI systems rely on experts to label, validate, and refine data. This creates a feedback loop that hones both data quality and model performance. In sectors like healthcare and finance, this targeted approach ensures AI outcomes are reliable and fair.
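One common way to build that feedback loop is to let the model handle confident predictions and route uncertain ones to an expert. This is a minimal sketch assuming predicted class probabilities are already available; the function name `route_for_review` and the 0.8 threshold are hypothetical and would be tuned to each team's risk tolerance.

```python
import numpy as np

def route_for_review(probabilities: np.ndarray, threshold: float = 0.8):
    """Split predictions into auto-accepted and human-review queues by confidence.

    `probabilities` has shape (n_samples, n_classes).
    """
    confidence = probabilities.max(axis=1)         # top-class probability per sample
    predicted = probabilities.argmax(axis=1)       # model's predicted class
    auto_accept = np.flatnonzero(confidence >= threshold)
    needs_review = np.flatnonzero(confidence < threshold)
    return predicted, auto_accept, needs_review

probs = np.array([[0.95, 0.05], [0.55, 0.45], [0.10, 0.90], [0.70, 0.30]])
predicted, auto_accept, needs_review = route_for_review(probs)
# Expert-corrected labels for the reviewed items would then be added back to the training set.
```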

Rethinking the Role of Big Models

Do Bigger Models Always Perform Better?

Bigger models may excel at handling vast data, but they’re not immune to flaws. They amplify biases present in their datasets, require complex deployment strategies, and often exhibit diminishing returns beyond a certain scale.

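For example, recent systems like ChatGPT and DALL·E owe much of their success to training techniques and carefully curated data, not to sheer size alone. ChatGPT, in particular, was fine-tuned on human-written demonstrations and human rankings of model responses.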

Specialized Models: Lean and Mean

Smaller, specialized models are gaining traction as a cost-effective alternative. By training on specific domains with clean, curated data, these models often outperform larger ones on niche tasks.

In the AI world, precision often beats power.

The Environmental Impact of Scaling

The Carbon Footprint of Big AI

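Training gigantic AI models isn’t just expensive; it’s environmentally taxing. For the largest language models, such as GPT-3, published estimates of a single training run reach hundreds of tonnes of CO2-equivalent, comparable to the per-passenger emissions of hundreds of flights.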

Adopting a data-centric approach reduces unnecessary resource usage, aligning AI practices with sustainability goals.
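A back-of-the-envelope estimate multiplies hardware power, training time, data-center overhead (PUE, power usage effectiveness), and grid carbon intensity. Every number below is a hypothetical input rather than a measurement, and the formula ignores embodied emissions from manufacturing the hardware; it only shows how cutting compute, for example by training on a smaller curated dataset, scales the footprint.

```python
def training_emissions_kg(n_gpus: int, gpu_power_kw: float, hours: float,
                          pue: float, grid_kg_per_kwh: float) -> float:
    """Rough CO2e estimate (kg) for one training run; all inputs are assumptions."""
    # Facility energy: accelerator draw times runtime, inflated by cooling/overhead (PUE).
    energy_kwh = n_gpus * gpu_power_kw * hours * pue
    return energy_kwh * grid_kg_per_kwh

# Hypothetical run: 512 GPUs at 0.4 kW for 30 days, PUE 1.1, grid at 0.4 kg CO2e/kWh.
full_run = training_emissions_kg(512, 0.4, 30 * 24, 1.1, 0.4)
# Same setup if a curated dataset needed only a quarter of the GPU-hours.
curated_run = training_emissions_kg(512, 0.4, 30 * 24 / 4, 1.1, 0.4)
```

Because the estimate is linear in hours, a run that needs a quarter of the GPU-hours emits a quarter of the CO2e under these assumptions (about 64.9 versus 16.2 tonnes here).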

Energy-Efficient Solutions

Smaller datasets and smarter training pipelines can lower energy consumption, often without sacrificing results. This approach helps organizations scale responsibly.

Building More Ethical AI

Fighting Bias with Better Data

One of the major criticisms of large AI systems is their propensity to amplify biases. Data-centric development addresses this by prioritizing diversity and fairness during curation. When the focus shifts to refining and balancing data, models tend to perform more consistently across the groups they serve.

Transparency and Trust

By concentrating on data quality, teams can better explain their models’ outputs, fostering trust among stakeholders. A refined dataset also enables more effective audits for ethical compliance.

Practical Steps to Embrace Data-Centric AI

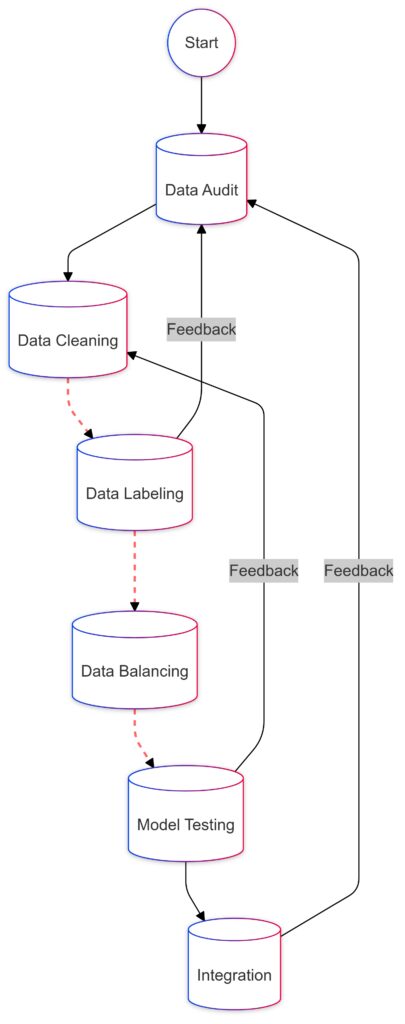

Start with a Data Audit

The first step in adopting a data-centric AI strategy is understanding your existing datasets. Ask yourself:

- Are there errors or inconsistencies?

- Does the data represent all key scenarios or edge cases?

- Are there any biases in the data that might affect the outcomes?

A thorough audit reveals the gaps that need fixing. Data profiling tools and libraries such as pandas can help identify and correct these issues.
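A first-pass audit can be a short pandas function. This sketch assumes a tabular dataset with a label column; the `audit` helper, the column names, and the transaction data are hypothetical. It reports duplicate rows, the share of missing values per column, the label distribution, and columns that never vary (and so carry no signal).

```python
import pandas as pd

def audit(df: pd.DataFrame, label_col: str) -> dict:
    """Summarize common data problems: duplicates, missing values, label balance."""
    return {
        "n_rows": len(df),
        "duplicate_rows": int(df.duplicated().sum()),
        "missing_share": df.isna().mean(),  # fraction of missing values, per column
        "label_share": df[label_col].value_counts(normalize=True, dropna=False),
        # Columns with a single value (including all-missing) cannot help the model.
        "constant_columns": [c for c in df.columns if df[c].nunique(dropna=False) <= 1],
    }

transactions = pd.DataFrame({
    "amount": [25.0, 310.0, 120.0, None, 25.0],
    "currency": ["USD"] * 5,
    "is_fraud": [0, 0, 1, 0, 0],
})
audit_report = audit(transactions, "is_fraud")
```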

Invest in Annotation and Labeling

The quality of your labels can make or break an AI model. Collaborating with domain experts ensures that annotations are accurate and meaningful. Even minor mislabeling can skew results, so precision is key.

Annotation platforms like Label Studio or Amazon SageMaker Ground Truth streamline this process, but human oversight remains critical for nuanced tasks.
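One way to check whether labels are precise enough is to have two annotators label the same items and measure their agreement. Cohen's kappa is a common choice because it corrects raw agreement for the agreement expected by chance. This is a plain-Python sketch; the radiology-style labels are hypothetical, and scikit-learn's `cohen_kappa_score` computes the same statistic.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both annotators independently pick the same class.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1:  # both used one identical class throughout; agreement is trivial
        return 1.0
    return (observed - expected) / (1 - expected)

annotator_a = ["tumor", "normal", "tumor", "normal", "tumor", "normal"]
annotator_b = ["tumor", "normal", "normal", "normal", "tumor", "normal"]
kappa = cohen_kappa(annotator_a, annotator_b)
# Items the annotators disagree on are natural candidates for expert adjudication.
disagreements = [i for i, (a, b) in enumerate(zip(annotator_a, annotator_b)) if a != b]
```

Here raw agreement is 5/6, but chance agreement is 0.5, so kappa is 2/3. Low kappa on a task usually means the labeling guidelines need work before more data is labeled.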

Iterative Data Improvements

AI isn’t a “set it and forget it” field. Periodically revisiting your datasets and refining them based on model performance ensures continual improvement. This includes adding new data points, removing irrelevant information, and correcting misclassifications.
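Correcting misclassifications at scale starts with finding them. A simple approach is to score every training example with a model that never saw it (out-of-fold predictions) and flag examples whose given label receives low probability. This is a simplified take on the idea behind confident learning, not a full implementation; it assumes scikit-learn, integer labels 0..k-1, a hypothetical 0.2 threshold, and synthetic data with deliberately flipped labels.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

def find_suspect_labels(X: np.ndarray, y: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Indices whose given label gets low out-of-fold predicted probability."""
    # Each sample is scored by a model trained on the other folds, so it cannot memorize itself.
    proba = cross_val_predict(LogisticRegression(), X, y, cv=5, method="predict_proba")
    given_label_proba = proba[np.arange(len(y)), y]  # columns follow sorted labels 0..k-1
    return np.flatnonzero(given_label_proba < threshold)

# Synthetic data: two well-separated clusters, then three simulated annotation mistakes.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-3, 0.5, size=(50, 2)), rng.normal(3, 0.5, size=(50, 2))])
y = np.array([0] * 50 + [1] * 50)
flipped = [3, 27, 71]
y[flipped] = 1 - y[flipped]
suspects = find_suspect_labels(X, y)
```

Flagged examples are candidates for review, not automatic relabeling: on real data, some will be genuine edge cases the model simply has not learned yet.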

Balancing Diversity and Representativeness

To reduce bias, ensure your data captures the diversity of real-world scenarios. For instance, a facial recognition system trained on images predominantly of one demographic will perform poorly for others.

Striving for balance and representativeness builds AI models that are both fair and effective.
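A practical check for representativeness is to break evaluation results down by group instead of reporting a single overall accuracy. This sketch assumes each evaluation example has a group label; the groups "A" and "B" and the predictions are hypothetical placeholders.

```python
import pandas as pd

def accuracy_by_group(groups, y_true, y_pred) -> pd.DataFrame:
    """Accuracy and sample count per group, so gaps between groups are visible."""
    frame = pd.DataFrame({"group": groups,
                          "correct": [t == p for t, p in zip(y_true, y_pred)]})
    summary = frame.groupby("group")["correct"].agg(["mean", "size"])
    summary.columns = ["accuracy", "n"]
    return summary

# Hypothetical evaluation results; "A" and "B" stand in for demographic groups.
groups = ["A", "A", "A", "A", "B", "B"]
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0]
summary = accuracy_by_group(groups, y_true, y_pred)
gap = summary["accuracy"].max() - summary["accuracy"].min()
```

Overall accuracy here is 5/6, which hides that group B sits at 0.5 on only two examples. The `n` column matters as much as the accuracy: a small group is a signal to collect more data for it, not just to retune the model.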

Tools and Techniques for Data-Centric AI

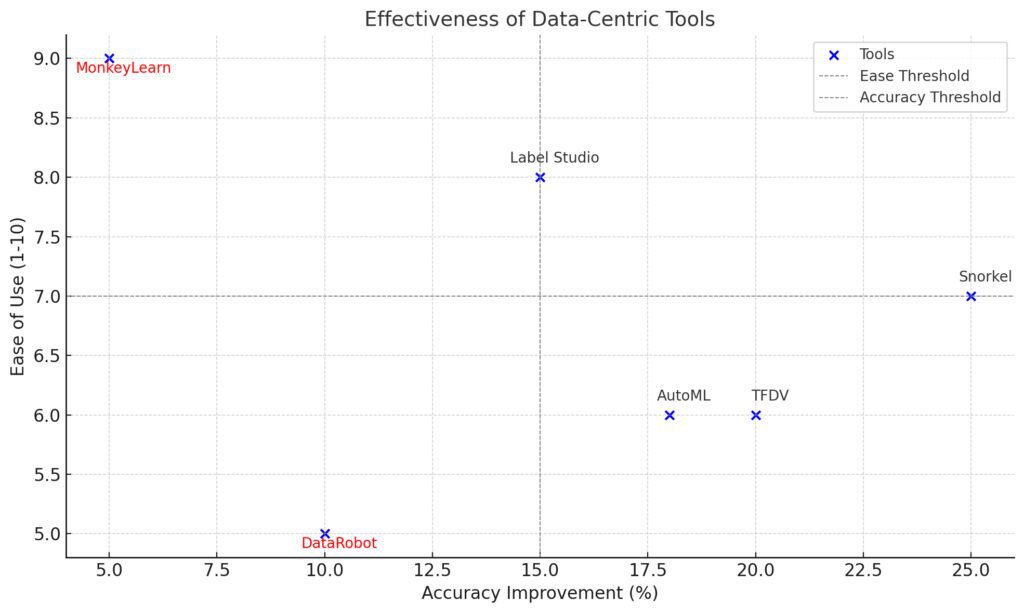

Data Validation Tools

Validation tools like TensorFlow Data Validation (TFDV) automatically detect anomalies and imbalances in datasets. By catching these early, you save time and resources during model training.
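The core idea behind schema-based validation is to describe what valid data looks like once, then check every new batch against that description before it reaches training. The sketch below is a hand-rolled pandas stand-in that illustrates the idea; it does not use or mimic TFDV's actual API, and the schema, column names, and batch are hypothetical.

```python
import pandas as pd

# Hypothetical schema; TFDV can infer a comparable schema from training-data statistics.
SCHEMA = {
    "age": {"min": 0, "max": 120},
    "country": {"allowed": {"US", "DE", "FR"}},
}

def validate(batch: pd.DataFrame, schema: dict) -> list:
    """Return human-readable anomaly messages for a new data batch."""
    anomalies = []
    for col, rules in schema.items():
        if col not in batch.columns:
            anomalies.append(f"{col}: column missing")
            continue
        n_missing = int(batch[col].isna().sum())
        if n_missing:
            anomalies.append(f"{col}: {n_missing} missing value(s)")
        values = batch[col].dropna()
        if "min" in rules and (values < rules["min"]).any():
            anomalies.append(f"{col}: {int((values < rules['min']).sum())} value(s) below {rules['min']}")
        if "max" in rules and (values > rules["max"]).any():
            anomalies.append(f"{col}: {int((values > rules['max']).sum())} value(s) above {rules['max']}")
        if "allowed" in rules:
            unexpected = set(values) - rules["allowed"]
            if unexpected:
                anomalies.append(f"{col}: unexpected values {sorted(unexpected)}")
    return anomalies

new_batch = pd.DataFrame({"age": [34, 151, 27], "country": ["US", "BR", "DE"]})
issues = validate(new_batch, SCHEMA)
```

Whether "BR" is an error or a sign that the schema should grow (a new market, for instance) is exactly the kind of decision that should be made deliberately rather than discovered after training.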

Synthetic Data Generation

When real-world data is scarce or difficult to obtain, synthetic data offers a viable alternative. Generative models such as GANs (Generative Adversarial Networks) can simulate realistic scenarios and produce training data that reduces exposure of real records, although a generator that memorizes its training set can still leak private information.

Synthetic data is particularly useful in industries like healthcare, where patient data is often sensitive and limited.
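GANs are one option, but for small tabular datasets a much simpler technique is often tried first: SMOTE-style interpolation, which creates new rare-class examples on the line segments between a real example and one of its nearest neighbours. The sketch below implements that idea in NumPy; it is not a GAN, the helper name and data are hypothetical, and because synthetic points sit close to real ones it offers no privacy guarantee on its own.

```python
import numpy as np

def interpolate_minority(X_minority: np.ndarray, n_new: int, k: int = 3, seed: int = 0) -> np.ndarray:
    """SMOTE-style oversampling: new points between a sample and a random near neighbour."""
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances within the minority class.
    dists = np.linalg.norm(X_minority[:, None, :] - X_minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                      # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]        # k nearest neighbours per point
    base = rng.integers(0, len(X_minority), size=n_new)  # real sample to start from
    partner = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                         # position along the segment, in [0, 1)
    return X_minority[base] + gap * (X_minority[partner] - X_minority[base])

# Hypothetical rare-class records with two numeric features.
rare_cases = np.array([[1.0, 2.0], [1.2, 2.1], [0.9, 1.8], [1.1, 2.3], [1.3, 1.9]])
synthetic = interpolate_minority(rare_cases, n_new=20)
```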

Active Learning Approaches

Active learning focuses on feeding a model the most informative data points. Instead of labeling and training on a vast dataset indiscriminately, active learning identifies the examples the model is least certain about and prioritizes those for labeling and retraining.

Frameworks like modAL can guide this iterative process.
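The loop itself is small enough to sketch directly. The example below uses plain scikit-learn with least-confidence sampling; it does not reproduce modAL's API. The pool is synthetic, and a known labeling rule stands in for the human annotator who would normally supply labels on request.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

def least_confident(model, X_candidates: np.ndarray, batch_size: int) -> np.ndarray:
    """Positions (within X_candidates) of samples with the lowest top-class probability."""
    confidence = model.predict_proba(X_candidates).max(axis=1)
    return np.argsort(confidence)[:batch_size]

rng = np.random.default_rng(1)
X_pool = rng.normal(size=(200, 2))
# Simulated oracle: in practice these labels come from a human annotator on request.
y_oracle = (X_pool[:, 0] + X_pool[:, 1] > 0).astype(int)

# Seed set with five examples of each class so the first model can be trained.
labeled = np.flatnonzero(y_oracle == 0)[:5].tolist() + np.flatnonzero(y_oracle == 1)[:5].tolist()
for _ in range(5):
    model = LogisticRegression().fit(X_pool[labeled], y_oracle[labeled])
    unlabeled = np.setdiff1d(np.arange(len(X_pool)), labeled)
    picks = unlabeled[least_confident(model, X_pool[unlabeled], batch_size=10)]
    labeled.extend(picks.tolist())  # "query the oracle" for just these ten labels
```

After five rounds only 60 of the 200 points have been labeled, and most of them sit near the decision boundary, where a label is most informative.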

Challenges of Data-Centric AI

The Time-Intensive Nature of Data Refinement

Curating high-quality data demands significant time and effort. Teams often grapple with balancing this process against the pressure to deploy AI solutions quickly.

However, investing upfront in data quality reduces retraining cycles and costly errors in the long term.

Scaling Without Losing Focus

As projects grow, maintaining a data-centric approach becomes more complex. It requires strong collaboration between data scientists, engineers, and domain experts.

Regular check-ins, robust data management policies, and clear documentation help teams stay aligned.

Organizational Resistance to Change

Shifting from a model-centric to a data-centric mindset can meet resistance. Traditional success metrics, like model size or complexity, may overshadow the importance of data quality.

Advocating for this approach requires education and clear demonstrations of its benefits, such as improved accuracy and reduced bias.

Real-World Applications of Data-Centric AI

Healthcare: Enhancing Diagnostic Accuracy

In healthcare, data-centric AI is revolutionizing diagnosis and treatment plans. Medical imaging systems, for instance, often rely on annotated datasets of X-rays, MRIs, and CT scans.

When curated correctly, this data can reduce false positives and improve diagnostic accuracy. For example:

- Mayo Clinic improved cancer detection models by including edge cases like atypical tumor presentations.

- AI systems in telemedicine are now trained on diverse demographic data to better identify conditions across populations.

The result? More equitable and precise healthcare outcomes.

Autonomous Vehicles: Navigating Complex Scenarios

Autonomous vehicle systems thrive on edge cases—rare scenarios like extreme weather or unusual road conditions. Instead of endlessly collecting millions of hours of driving footage, data-centric strategies target specific gaps, ensuring robustness.

Companies like Tesla and Waymo prioritize refining datasets to include these rare events, reducing risks in real-world applications.

By focusing on the right data, these systems can improve on safety benchmarks while using fewer resources.

Finance: Fighting Fraud with Focused Data

In the finance industry, fraud detection models require datasets that are both accurate and up-to-date. Simply adding more data often leads to diminishing returns.

Data-centric techniques like anomaly detection and balanced sampling help:

- Capture rare fraudulent patterns.

- Avoid over-representation of non-fraudulent transactions, which could skew results.

Banks such as JPMorgan Chase, along with fintech firms, are adopting cleaner, curated data pipelines to build smarter fraud detection systems.
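Balanced sampling can be as simple as keeping every fraud case and a random subset of legitimate transactions. This is a minimal NumPy sketch of random undersampling, assuming fraud is labeled 1; the helper name, the 4:1 ratio, and the label counts are hypothetical.

```python
import numpy as np

def undersample_majority(y: np.ndarray, ratio: float = 1.0, seed: int = 0) -> np.ndarray:
    """Row indices keeping every fraud case (label 1) and `ratio` times as many legitimate ones."""
    rng = np.random.default_rng(seed)
    fraud = np.flatnonzero(y == 1)
    legit = np.flatnonzero(y == 0)
    n_keep = min(len(legit), int(ratio * len(fraud)))
    kept_legit = rng.choice(legit, size=n_keep, replace=False)  # sample without duplicates
    return np.sort(np.concatenate([fraud, kept_legit]))

y = np.array([0] * 990 + [1] * 10)  # 1% fraud, a typical imbalance
train_idx = undersample_majority(y, ratio=4.0)
```

Resampling should apply only to the training split; evaluating on data with the real class mix keeps metrics honest. Reweighting, for example `class_weight="balanced"` in many scikit-learn classifiers, is an alternative that discards no data.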

Retail: Personalization at Scale

E-commerce platforms thrive on personalization, but bias in data can alienate users. Rather than relying only on ever-larger browsing histories, companies like Amazon and Shopify also invest in refining product datasets and feedback loops.

This ensures product recommendations reflect individual preferences without amplifying stereotypes or irrelevant trends.

Education: Tailored Learning Experiences

AI-driven education platforms, like Duolingo or Coursera, increasingly rely on data-centric methods to personalize learning. Instead of scaling indiscriminately, they refine data to:

- Adapt to various learning styles.

- Incorporate diverse cultural and linguistic examples.

This approach creates systems that are inclusive, adaptive, and more effective for a global audience.

The Future of Data-Centric AI

Industry-Wide Collaboration

The shift to data-centric AI is likely to encourage collaboration between organizations, enabling the sharing of best practices for refining datasets. Open-source projects and data consortiums could play a pivotal role in improving AI outcomes.

Scaling Responsibly

As AI continues to scale, focusing on data quality ensures systems remain sustainable, ethical, and impactful. Responsible scaling emphasizes achieving better performance, not just bigger models.

FAQs

How is data-centric AI different from model-centric AI?

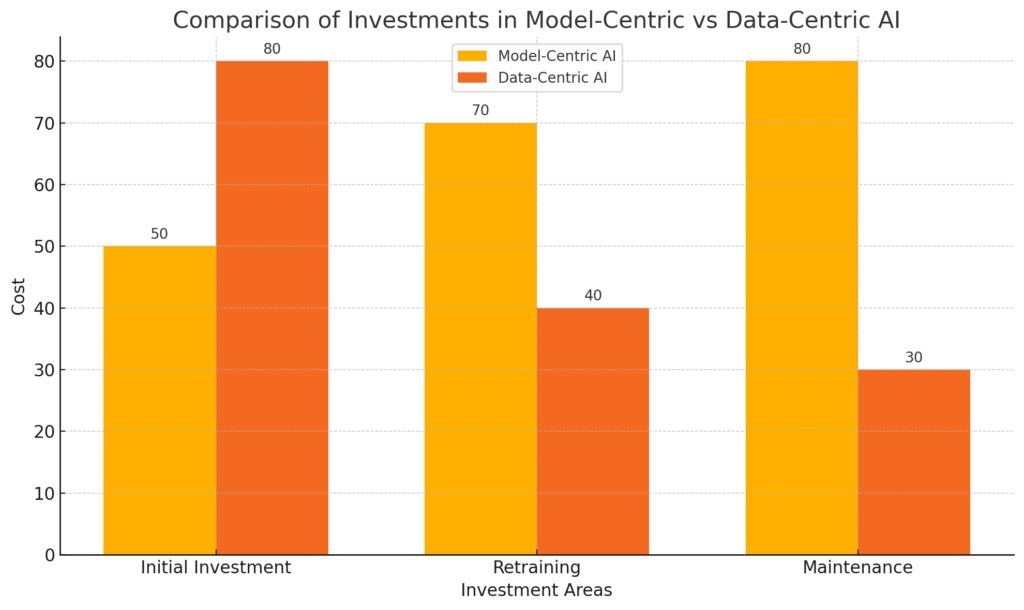

Model-centric AI focuses on improving the architecture and algorithms of AI systems. The primary goal is to enhance performance by optimizing the model itself.

In contrast, data-centric AI shifts the focus to refining the quality, balance, and diversity of the data used to train these models. For example:

- A model-centric approach to improving facial recognition might involve creating a larger neural network.

- A data-centric approach would ensure the dataset includes diverse skin tones and lighting conditions to reduce bias.

This shift results in smarter, more reliable AI systems without requiring larger, more complex models.

Can smaller datasets outperform larger ones?

Yes! Smaller, high-quality datasets often outperform larger, unrefined ones.

For instance, a fraud detection system trained on a curated dataset of 10,000 balanced and accurate transactions may perform better than one trained on a noisy, biased dataset of 1 million transactions.

By removing irrelevant or erroneous data, the system learns faster and generalizes better to new scenarios.

What industries benefit most from data-centric AI?

Almost every industry can benefit from this approach, but some examples include:

- Healthcare: Enhancing diagnostic accuracy by refining patient data and addressing biases in medical imaging.

- Finance: Improving fraud detection by curating datasets to reflect rare but critical patterns.

- Retail: Creating personalized recommendations through clean, representative data of user behaviors.

Is it more expensive to focus on data refinement?

Not necessarily! While refining data requires upfront investment, it can save costs in the long run.

For example:

- Training on clean, curated data reduces the need for constant retraining or debugging.

- Fewer errors in production mean lower costs for maintenance and issue resolution.

Additionally, tools like TensorFlow Data Validation and synthetic data generators help streamline the process.

How does data-centric AI improve fairness and inclusivity?

By prioritizing data quality, this approach ensures datasets are more representative of diverse populations and edge cases.

Take an example in natural language processing:

- A sentiment analysis tool trained with balanced data from different dialects and cultures performs more equitably.

- Without this refinement, the model might disproportionately misinterpret non-standard English phrases.

Data-centric AI identifies and addresses these gaps, fostering more ethical and inclusive outcomes.

How does data-centric AI address bias?

Bias arises when a dataset overrepresents or underrepresents certain groups or scenarios. A data-centric approach actively identifies and corrects these imbalances.

For instance:

- In hiring systems, curated datasets ensure job applicant data reflects diversity across gender, ethnicity, and socioeconomic backgrounds.

- For autonomous vehicles, edge cases like pedestrians using wheelchairs or jaywalking are included to ensure equitable safety measures.

By targeting biases in the data, organizations create more inclusive and fair AI systems.

What tools are available for data-centric AI?

Several tools and frameworks support data-centric workflows:

- Label Studio: For labeling and annotating datasets.

- TensorFlow Data Validation (TFDV): To detect data anomalies and ensure consistency.

- Amazon SageMaker Ground Truth: To streamline data labeling and refinement.

- GANs (Generative Adversarial Networks): For generating synthetic data to fill gaps or create edge cases.

Using these tools helps teams save time while improving data quality.

What’s the role of synthetic data in data-centric AI?

Synthetic data acts as a supplement when real-world data is hard to obtain or insufficient. It is particularly useful for edge cases or privacy-sensitive fields like healthcare.

Example applications include:

- Training autonomous vehicles using simulated traffic scenarios.

- Generating anonymized patient data for medical research to comply with privacy laws.

By carefully generating synthetic data, teams enhance their models without compromising quality or ethics.

How can small organizations adopt data-centric AI?

Smaller organizations can thrive with data-centric AI by focusing on efficiency and targeted improvements. They don’t need vast resources to get started.

Steps include:

- Conducting a simple data audit to spot errors or imbalances.

- Using open-source tools like pandas or scikit-learn for preprocessing.

- Leveraging synthetic data to fill critical gaps when real-world data is limited.

By concentrating on a small, clean dataset, smaller teams can achieve competitive results without the costs of large-scale training.

What are some examples of data-centric AI in action?

- Retail: An e-commerce platform improves its recommendation system by balancing product categories in the training dataset.

- Social Media: Platforms reduce content moderation errors by refining datasets to include diverse language nuances and slang.

- Healthcare: A diagnostic AI tool improves predictions by addressing missing data in rare disease cases.

These real-world examples showcase how targeted data improvements can lead to transformative results.

Can data-centric AI work with legacy systems?

Yes, data-centric AI can integrate with legacy systems by focusing on enhancing data pipelines. Rather than overhauling the system, teams refine the input data to improve the outputs.

For example:

- A bank using an old fraud detection model updates its datasets to include recent transaction patterns, improving accuracy without redesigning the system.

- A manufacturing firm improves predictive maintenance by removing noisy sensor data from legacy machines.

This compatibility makes data-centric AI an attractive approach for modernizing existing workflows.
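Removing noisy sensor data is a good example of a fix that touches only the input pipeline. One standard technique is a Hampel-style filter: compare each reading with the median of its neighbours and drop it if it is far away in units of the local median absolute deviation (MAD). This sketch assumes readings arrive as a pandas Series; the window size, the 3-sigma cut-off, and the temperature log are hypothetical.

```python
import numpy as np
import pandas as pd

def drop_sensor_spikes(readings: pd.Series, window: int = 5, n_sigmas: float = 3.0) -> pd.Series:
    """Hampel-style filter: drop readings far from the local median, in robust units."""
    rolling = readings.rolling(window, center=True, min_periods=1)
    local_median = rolling.median()
    # MAD inside each window; 1.4826 rescales it to a standard deviation for Gaussian noise.
    local_mad = rolling.apply(lambda w: np.median(np.abs(w - np.median(w))), raw=True) * 1.4826
    is_spike = (readings - local_median).abs() > n_sigmas * local_mad
    return readings[~is_spike]

# Hypothetical temperature log from a legacy machine, with one glitch.
temps = pd.Series([20.1, 20.3, 20.2, 95.0, 20.4, 20.2, 20.3])
clean_temps = drop_sensor_spikes(temps)
```

In a window where the signal is perfectly constant the MAD is zero, so any change at all would be flagged; production pipelines often add a small floor to the MAD for that reason.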

What role does human expertise play in data-centric AI?

Human expertise is vital for identifying nuances that automated tools might miss. Domain experts ensure data quality by:

- Labeling datasets accurately, especially in specialized fields like medicine or law.

- Validating models’ performance on real-world scenarios to ensure reliability.

For instance, radiologists collaborating with AI teams help refine medical imaging datasets, leading to better diagnostic tools.

How does data-centric AI support regulatory compliance?

Regulations like the GDPR and CCPA set strict rules for how personal data is collected and processed, and the GDPR also restricts certain fully automated decisions. Data-centric AI supports compliance by:

- Removing sensitive or personally identifiable information from datasets.

- Ensuring datasets are balanced to reduce discriminatory outcomes.

For example, a financial AI model trained under data-centric principles is better positioned to make equitable loan decisions while meeting legal standards for transparency and fairness.
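Removing identifiers before training can look like the sketch below: direct identifiers are dropped, and a join key is replaced with a keyed hash (HMAC-SHA256) so records can still be linked without exposing the raw ID. The field names and the placeholder key are hypothetical. Note that this is pseudonymization, not anonymization: under the GDPR, pseudonymized data generally still counts as personal data, so it reduces risk rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical placeholder; a real key would come from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def strip_pii(record: dict, drop=("name", "email"), hash_fields=("customer_id",)) -> dict:
    """Drop direct identifiers and pseudonymize join keys in a single record."""
    cleaned = {k: v for k, v in record.items() if k not in drop}
    for field in hash_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]))
    return cleaned

row = {"customer_id": "C-1042", "name": "Ana Silva", "email": "ana@example.com", "loan_amount": 12000}
clean_row = strip_pii(row)
```

A keyed hash is used instead of a plain SHA-256 because short, structured IDs can be recovered from an unkeyed hash by simply hashing every possible ID.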

Resources

Tools for Data-Centric AI

- Label Studio: An open-source tool for annotating and labeling datasets, supporting complex workflows and domain-specific requirements.

- TensorFlow Data Validation (TFDV): Designed for anomaly detection and schema validation to maintain dataset consistency.

- Snorkel: Facilitates programmatic data labeling, making it easier to create and refine large datasets efficiently.

- Great Expectations: A robust tool for automating data quality checks, ensuring datasets meet defined standards before training.

- Amazon SageMaker Ground Truth: Helps streamline labeling tasks with features like active learning and workforce management.