3D rendering has seen groundbreaking advancements, but few are as exciting as Neural Radiance Fields (NeRFs). This innovative approach to rendering promises to redefine how we perceive and interact with 3D environments.

Why are NeRFs being hailed as a game-changer for dynamic 3D rendering?

What Are Neural Radiance Fields?

A Quick Overview of NeRF Technology

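NeRFs are AI-driven models that reconstruct highly realistic 3D scenes from a set of 2D photographs taken from known camera positions. A neural network learns, for every point in space, how much light it emits in each viewing direction (radiance) and how opaque it is (density). Rendering a new view then means accumulating those values along camera rays, effectively reconstructing a virtual environment.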

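In simpler terms, NeRFs synthesize 3D visuals from flat images, making it possible to view a captured scene from viewpoints that were never photographed. This isn’t just impressive; it’s efficient, because a photorealistic scene can be captured with an ordinary camera instead of being modeled by hand.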
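To make that mapping concrete, here is a toy sketch of the function a NeRF learns: a 3D position and a viewing direction go in, a color and a density come out. The weights are random placeholders, the layer sizes are arbitrary, and `radiance_field` is a hypothetical name. The original network is a deeper fully connected MLP (eight 256-unit layers) that also applies positional encoding to its inputs. The one structural detail this sketch does mirror is that density depends only on position, while color also depends on the viewing direction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical random weights; a real NeRF learns these from photographs.
HIDDEN = 64
W_pos = rng.normal(scale=0.5, size=(3, HIDDEN))          # position -> features
W_sigma = rng.normal(scale=0.5, size=(HIDDEN, 1))        # features -> density
W_rgb = rng.normal(scale=0.5, size=(HIDDEN + 3, 3))      # features + direction -> color


def radiance_field(x, d):
    """Map points x (N, 3) and unit view directions d (N, 3) to
    colors (N, 3) in [0, 1] and densities (N,) >= 0."""
    h = np.maximum(x @ W_pos, 0.0)                       # ReLU features from position only
    sigma = np.maximum(h @ W_sigma, 0.0)[:, 0]           # density ignores the view direction
    logits = np.concatenate([h, d], axis=1) @ W_rgb      # color also sees the direction
    rgb = 1.0 / (1.0 + np.exp(-logits))                  # sigmoid keeps colors in [0, 1]
    return rgb, sigma


# The same point seen from two directions.
x = np.array([[0.1, 0.2, 0.3], [0.1, 0.2, 0.3]])
d = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]])
rgb, sigma = radiance_field(x, d)
```

Querying one point from two directions yields the same density but different colors, which is how a NeRF represents view-dependent effects such as highlights.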

How NeRFs Differ From Traditional 3D Rendering

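Traditional 3D rendering methods rely on explicitly modeled polygon meshes, materials, and lights, and building those assets by hand can be time-consuming. NeRFs take a different route: they learn the scene as a continuous function and render it by marching rays through that function, querying the neural network at sample points along each ray.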

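Because the scene is learned rather than hand-modeled, NeRFs are especially appealing for applications like gaming, VR, and simulation, although the original method is far too slow for real-time rendering and faster variants remain an active research area.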
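Ray-based rendering starts by turning every pixel into a ray. Below is a minimal pinhole-camera sketch, assuming the camera looks down its local -z axis with +y up (the convention of the original NeRF synthetic data) and a 4x4 camera-to-world matrix. The function name and pixel-center convention are illustrative; real datasets may use other camera conventions.

```python
import numpy as np


def get_rays(height, width, focal, cam_to_world):
    """Return per-pixel ray origins and unit directions, each of shape (H, W, 3).

    Assumes a pinhole camera looking down its local -z axis with +y up,
    and a 4x4 camera-to-world transform.
    """
    # Pixel-center coordinates: i runs along columns (x), j along rows (y).
    i, j = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5, indexing="xy")
    # Direction of each pixel's ray in camera coordinates.
    dirs = np.stack([(i - width * 0.5) / focal,
                     -(j - height * 0.5) / focal,
                     -np.ones_like(i)], axis=-1)
    rotation = cam_to_world[:3, :3]
    origin = cam_to_world[:3, 3]
    rays_d = dirs @ rotation.T                             # rotate into world space
    rays_d /= np.linalg.norm(rays_d, axis=-1, keepdims=True)
    rays_o = np.broadcast_to(origin, rays_d.shape)         # every ray starts at the camera
    return rays_o, rays_d


origins, directions = get_rays(3, 3, 1.0, np.eye(4))
```

With an identity pose, the center pixel of a 3x3 image looks straight down -z; moving the camera only changes the ray origins.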

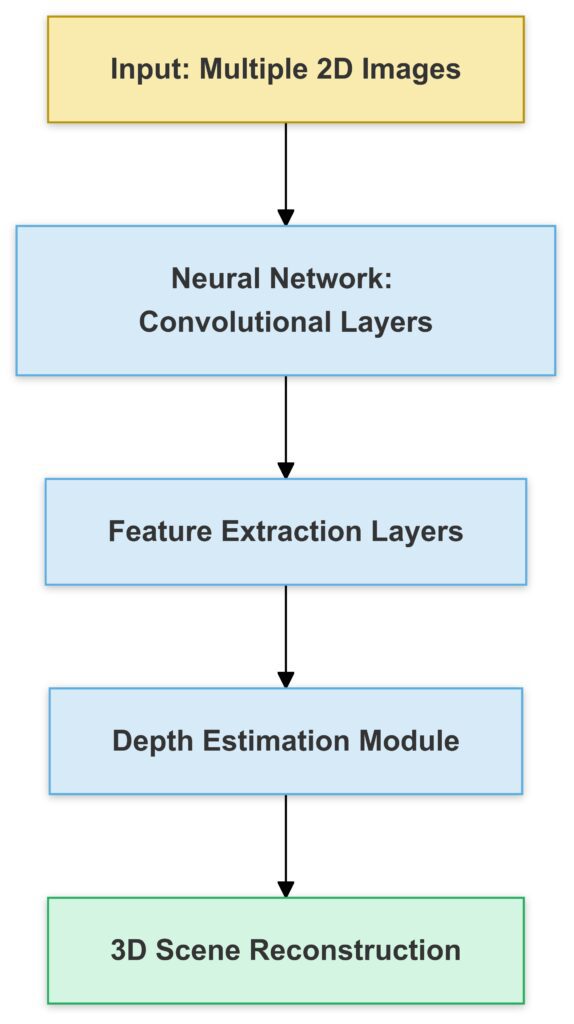

Input Stage:

- Multiple 2D images feed into the neural network.

Neural Network Processing:

- Convolutional Layers: Extract features from the images.

- Feature Extraction Layers: Identify key details for depth and structure.

- Depth Estimation Module: Generate spatial depth information.

Output Stage:

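- 3D Scene Reconstruction: Produces a detailed 3D model.

This diagram is a simplified, generic pipeline. In the original NeRF formulation, the network is a fully connected multilayer perceptron (MLP) rather than a convolutional network: it takes a 3D position and a viewing direction, returns a color and a volume density, and is trained separately for each scene from posed photographs. Depth is not produced by a dedicated module; it emerges from where the learned density is high along each camera ray.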

Why NeRFs Are Disrupting the 3D Industry

Faster Scene Creation

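NeRFs can dramatically shorten the path from a real scene to a photorealistic digital version: instead of modeling geometry and materials by hand, you photograph the scene and let the network fit it. The networks themselves have also become much faster. The original method took roughly one to two days to train a single scene on one GPU and rendered far too slowly for interactive use.

For example, accelerated variants such as Nvidia’s Instant Neural Graphics Primitives can fit a scene within minutes instead of hours, an efficiency leap that’s hard to ignore.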
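One ingredient behind the speed of Instant Neural Graphics Primitives is a multiresolution hash encoding: learned feature vectors are stored in small hash tables at several grid resolutions, and a tiny MLP decodes them, so the large MLP of the original NeRF is no longer needed. The sketch below shows only the indexing step, using the spatial hash described in that paper (XOR of integer grid coordinates multiplied by per-axis primes, modulo the table size). The parameter defaults are illustrative, not the paper's settings; the learned feature tables and trilinear interpolation are omitted; and the paper skips hashing at coarse levels where a dense grid already fits in the table, whereas this sketch hashes every level.

```python
import numpy as np

# Per-axis primes from the Instant-NGP paper (the first axis uses 1).
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(cell, table_size):
    """Hash integer 3D grid coordinates into [0, table_size).

    Python ints do not overflow, but with a power-of-two table size the
    result matches a 32-bit unsigned implementation.
    """
    h = 0
    for coord, prime in zip(cell, PRIMES):
        h ^= int(coord) * prime
    return h % table_size


def hash_indices(point, base_resolution=16, growth=1.5, levels=4, table_size=2**14):
    """For a point in the unit cube, return the hash-table slot of the
    lower voxel corner at each resolution level (hypothetical defaults)."""
    slots = []
    for level in range(levels):
        resolution = int(np.floor(base_resolution * growth ** level))
        cell = np.floor(np.asarray(point) * resolution).astype(int)
        slots.append(spatial_hash(cell, table_size))
    return slots


slots = hash_indices([0.3, 0.7, 0.5])
```

Because nearby points share voxel corners, they share learned features, and only a small decoder network has to run per sample.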

Superior Visual Quality

Thanks to their learned, view-dependent representation, NeRFs can produce visuals that feel almost lifelike. Fine texture variations and view-dependent effects such as specular highlights are captured well, and the shadows and ambient lighting present in the photos are reproduced as part of the scene’s appearance.

This quality makes NeRFs an attractive option for quality-sensitive fields like cinematography and architectural visualization.

Key Applications of Neural Radiance Fields

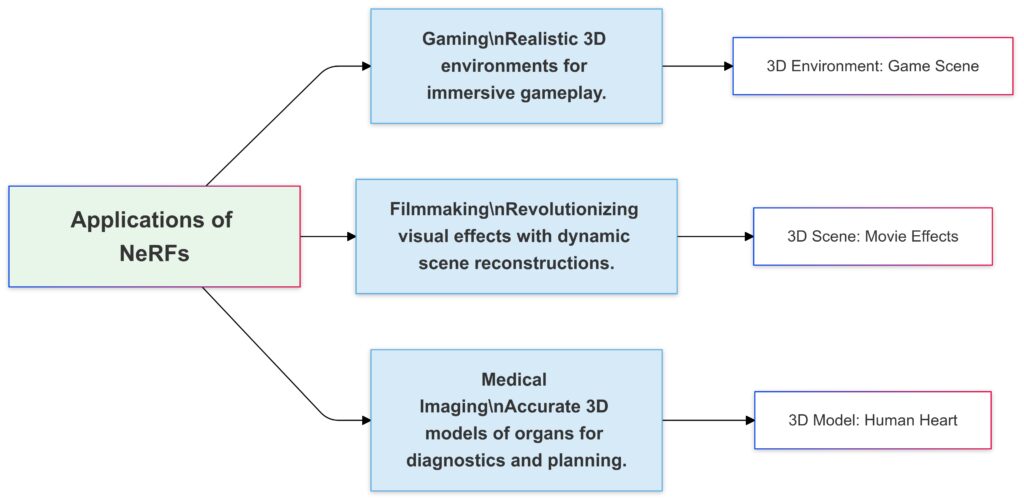

Revolutionizing Gaming and VR

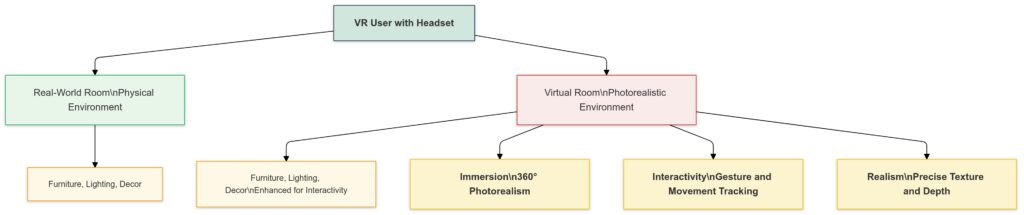

Imagine gaming environments where every detail feels tangible and immersive. NeRFs bring that closer to reality: as real-time variants mature, they could make VR worlds richer and more responsive.

Moreover, this tech could help create vast, seamless virtual spaces without compromising performance.

Advancing Medical Imaging

NeRF-style models are also being explored for reconstructing 3D models of organs or tissues from medical scans, for example when only a limited number of views is available. If these methods mature, they could open new avenues for diagnostics and surgical planning.

At a glance, the main application areas are:

- Gaming: Realistic 3D environments for immersive gameplay (example: a 3D-rendered game scene).

- Filmmaking: Enhances visual effects with dynamic scene reconstructions (example: a reconstructed movie scene).

- Medical Imaging: Creates accurate 3D models of organs for diagnostics and planning (example: a 3D heart model).

Challenges Facing NeRF Adoption

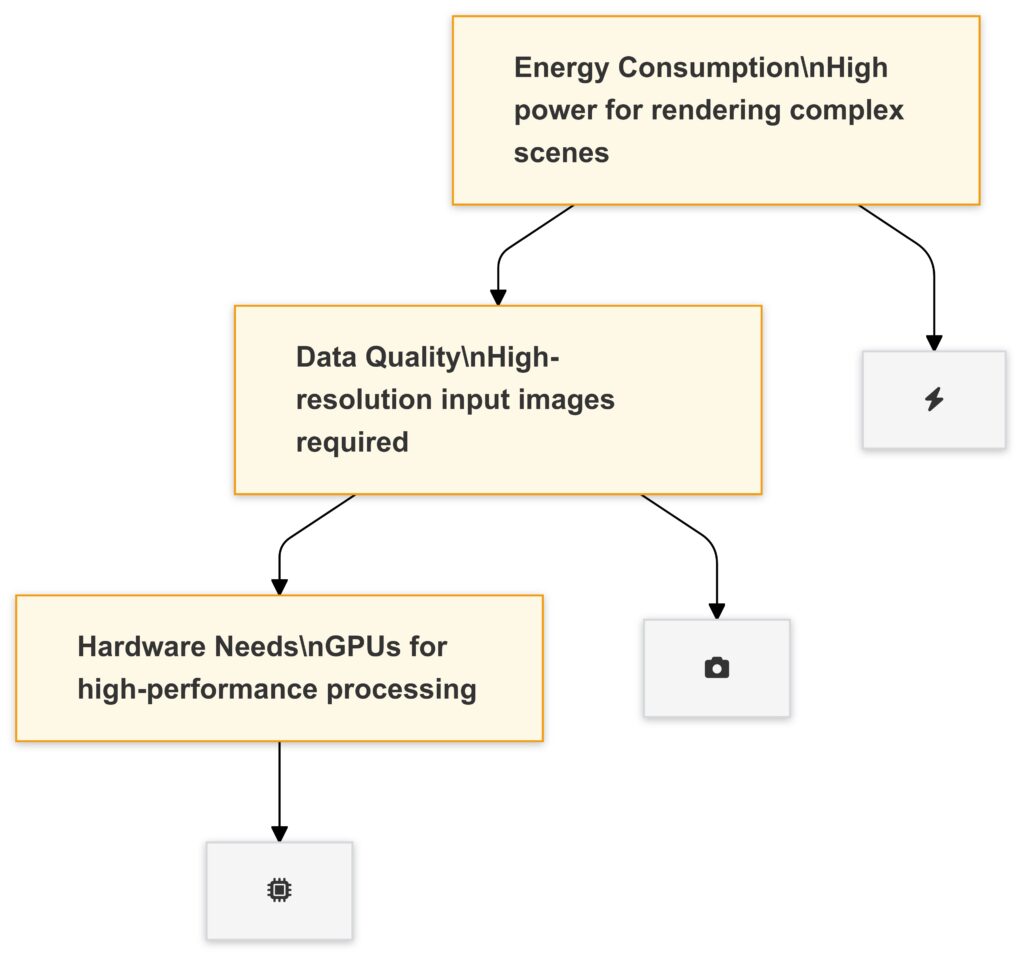

Diagram details: the layers represent the hierarchical needs of NeRF technology, with an icon marking each requirement.

Computational Power Demands

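While NeRFs simplify many aspects of content creation, they require significant computational resources. The network itself is relatively small, but rendering one image means evaluating it at hundreds of points along every pixel’s ray, and training repeats this over many thousands of iterations. Powerful GPUs are therefore still a necessity, posing a barrier for widespread use.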
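A quick back-of-the-envelope count shows where that cost comes from. The original NeRF samples 64 points per ray for a coarse network and 128 more for a fine network, and the fine network is evaluated on all 192 samples, so each ray costs 256 network evaluations. The helper below is hypothetical and ignores batching and any acceleration structures.

```python
def network_queries_per_image(height, width, n_coarse=64, n_fine=128):
    """Count MLP evaluations needed to render one image with NeRF-style
    hierarchical sampling: one ray per pixel, a coarse pass over n_coarse
    samples, then a fine pass over n_coarse + n_fine samples."""
    rays = height * width
    per_ray = n_coarse + (n_coarse + n_fine)
    return rays * per_ray


queries = network_queries_per_image(800, 800)
print(f"{queries:,} network evaluations per 800x800 image")  # 163,840,000
```

Training performs this kind of work on batches of rays for hundreds of thousands of iterations, which is why a capable GPU matters.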

Data Dependency

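NeRFs thrive on well-captured, high-quality data: many sharp, consistently lit photos with good coverage of the scene, plus an accurate camera position for each photo (typically estimated with structure-from-motion tools such as COLMAP). Poor input data leads to inaccurate or incomplete reconstructions, which can limit their effectiveness in certain scenarios.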

Even with these challenges, the potential of NeRFs remains undeniable. As technology advances, these hurdles are expected to diminish.

How NeRFs Are Transforming Real-World Industries

NeRFs are not just theoretical; they’re already beginning to reshape various fields. Their ability to create high-quality 3D models from ordinary photographs makes them versatile and valuable.

Revolutionizing Film and Media Production

Cinematic visuals often demand extensive rendering, taking time and resources. NeRFs can ease this by capturing photorealistic 3D environments directly from photos or footage of real locations.

Filmmakers can use them to recreate sets digitally, adjust camera angles after the fact, or restage shots without expensive reshoots. The potential result: reduced production costs and more creative flexibility.

Improving Robotics and Automation

For robots, understanding the physical world is critical. Researchers are exploring NeRFs as a way to give robots detailed 3D maps of their surroundings, which could improve navigation and task execution.

This could be particularly impactful in industries like logistics, where precision and adaptability are key.

The Role of AI in Powering NeRFs

Neural Networks as the Core of NeRFs

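NeRFs rely on deep learning, but in a specific way: a separate network is trained for each scene by rendering pixels from the current network and comparing them with the captured photos. Minimizing that difference forces the network to learn how light and matter are distributed in the scene, which yields realistic 3D reconstructions.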
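The rendering step inside that training loop is classic volume rendering. Along each ray, the color of sample i is weighted by its opacity, 1 − exp(−σᵢδᵢ), where δᵢ is the spacing to the next sample, and by the transmittance Tᵢ, the fraction of light that survives all earlier samples. The weighted colors are summed into a pixel and compared with the photo using a mean squared error. This NumPy sketch runs only the forward pass and uses hypothetical names; actual training would use an automatic-differentiation framework so the loss can be back-propagated into the network.

```python
import numpy as np


def render_ray(sigmas, colors, t_vals):
    """Composite samples along one ray into a pixel color.

    sigmas: (N,) densities, colors: (N, 3) RGB, t_vals: (N,) sample depths.
    Returns the pixel color (3,) and per-sample weights (N,).
    """
    # Spacing between samples; the last segment is treated as effectively infinite.
    deltas = np.append(np.diff(t_vals), 1e10)
    alphas = 1.0 - np.exp(-sigmas * deltas)               # opacity of each segment
    # Transmittance: probability the ray reaches sample i without being absorbed.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    return (weights[:, None] * colors).sum(axis=0), weights


def photometric_loss(rendered, target):
    """Mean squared error between rendered and captured pixel colors."""
    return float(np.mean((rendered - target) ** 2))


t_vals = np.array([1.0, 2.0, 3.0])
sigmas = np.array([0.0, 1000.0, 0.0])                     # only the middle sample is solid
colors = np.array([[0, 0, 1], [1, 0, 0], [0, 1, 0]], dtype=float)
pixel, weights = render_ray(sigmas, colors, t_vals)
loss = photometric_loss(pixel, np.array([1.0, 0.0, 0.0]))
```

The solid red sample absorbs all the light, so the pixel comes out red and matches a red target exactly.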

Trained this way, NeRF networks can represent complex lighting, reflections, and fine textures, giving them an edge in photorealism over many traditional reconstruction techniques.
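One reason a small network can capture fine texture is positional encoding. The original paper maps each input coordinate p to sines and cosines at frequencies 2⁰π through 2^(L−1)π, using L = 10 for positions and L = 4 for viewing directions. The sketch below groups all sines before all cosines rather than interleaving them as the paper's formula does; the ordering does not matter to the network that consumes the features.

```python
import numpy as np


def positional_encoding(p, num_freqs):
    """Map each coordinate to sin/cos features at frequencies 2^k * pi.

    p: (N, D) array. Returns an array of shape (N, D * 2 * num_freqs).
    """
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi                    # 2^0 pi ... 2^(L-1) pi
    angles = p[:, :, None] * freqs                                   # (N, D, L)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)  # (N, D, 2L)
    return enc.reshape(p.shape[0], -1)


points = np.random.default_rng(1).uniform(-1.0, 1.0, size=(5, 3))
pos_features = positional_encoding(points, 10)   # 3 coords x 2 x 10 = 60 features
dir_features = positional_encoding(points, 4)    # 3 coords x 2 x 4 = 24 features
```

The high-frequency features let nearby points produce very different outputs, which a plain MLP on raw coordinates struggles to do.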

The Importance of Training Data

NeRFs require many diverse, high-resolution images, each with a known camera pose, to train effectively. The better the training data, the more accurate the final 3D model.
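Reconstruction quality is commonly measured by rendering camera views held out from training and comparing them with the real photos. Peak signal-to-noise ratio (PSNR) is the most common such score in NeRF papers, usually reported alongside SSIM and LPIPS. A minimal version for images scaled to [0, 1]:

```python
import numpy as np


def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images with values in [0, max_value]."""
    mse = np.mean((np.asarray(rendered, float) - np.asarray(reference, float)) ** 2)
    if mse == 0:
        return float("inf")               # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


reference = np.zeros((4, 4, 3))
rendered = np.full((4, 4, 3), 0.1)        # every channel off by 0.1
score = psnr(rendered, reference)         # MSE of 0.01 gives 20 dB
```

Higher is better: each additional 10 dB corresponds to a tenfold drop in mean squared error.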

As data collection improves, the potential applications for NeRFs will expand even further.

What’s Next for NeRF Technology?

Scaling Up Accessibility

Current challenges like hardware demands and data quality are being addressed through innovations like optimized algorithms and cloud-based solutions. Soon, NeRFs could become accessible to smaller businesses and creators.

Potential for Real-Time Applications

Researchers are actively working to make NeRFs viable for real-time rendering. Imagine live 3D reconstructions during video calls or instant VR scene creation—NeRFs could bring these possibilities to life.

Conclusion: NeRFs as the Future of 3D Rendering

Neural Radiance Fields are rapidly reshaping the 3D rendering landscape. By learning scenes directly from photographs, NeRFs deliver striking visual quality, and newer variants are steadily closing the efficiency gap.

From transforming industries like film, gaming, and robotics to pushing the boundaries of medical imaging, their potential is remarkably broad. While challenges like computational power and data requirements persist, ongoing advancements are steadily overcoming these hurdles.

As we look ahead, it’s clear that NeRFs will play a pivotal role in shaping how we interact with digital and virtual worlds. Whether you’re a developer, designer, or just a tech enthusiast, the evolution of NeRF technology is something to watch and embrace.

FAQs

What are the limitations of NeRFs?

NeRFs require significant computational power to train and operate effectively, often needing high-end GPUs. They also rely heavily on the quality of input images: poor data leads to subpar reconstructions.

For example, trying to create a NeRF model from blurry or inconsistent images can result in an incomplete or inaccurate 3D representation.

Are NeRFs used in real-time applications?

The original NeRF is used mostly for offline rendering, because evaluating the network hundreds of times per pixel makes real-time rendering a challenge. However, accelerated variants such as Instant Neural Graphics Primitives and PlenOctrees reach interactive frame rates, and research toward real-time use continues.

Imagine a future where VR environments or augmented reality apps generate dynamic, interactive 3D scenes in real time. This is the direction NeRF technology is heading.

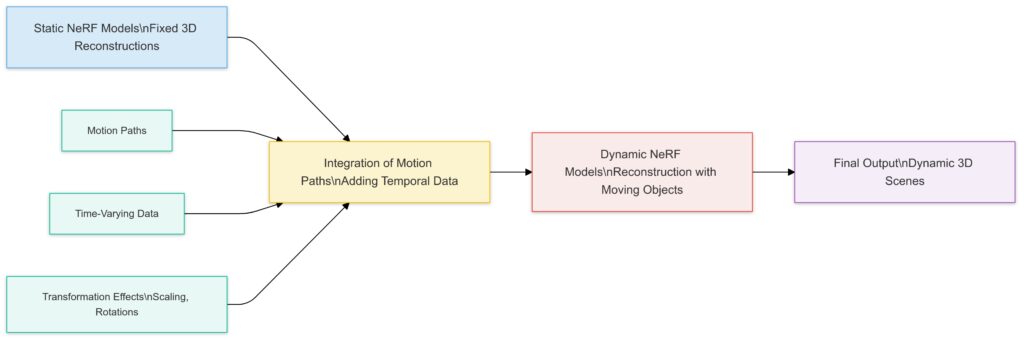

Can NeRFs handle dynamic scenes with moving objects?

Yes. Traditional NeRFs assume a static scene, but researchers have developed extensions for dynamic scenes involving moving objects or changes over time. Dynamic NeRFs such as D-NeRF add time as an input so the model can capture motion.

For instance, a Dynamic NeRF could model a bustling street scene, capturing moving cars and pedestrians with remarkable detail.
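D-NeRF's core idea can be sketched in a few lines: a deformation field maps each point observed at time t back to a shared canonical scene, and a static radiance field is queried there. In D-NeRF both parts are neural networks and the canonical field also returns color; here they are replaced by hypothetical analytic stand-ins (a ball that slides along the x-axis), and only density is modeled.

```python
import numpy as np


def canonical_field(x):
    """Hypothetical static scene: a soft ball of radius 0.5 at the origin.
    Returns densities (N,) for points x of shape (N, 3)."""
    return 10.0 * np.maximum(0.0, 0.5 - np.linalg.norm(x, axis=-1))


def deformation(x, t):
    """Hypothetical motion: the scene slides along +x by t units.
    Returns the offset that maps a point at time t into the canonical frame.
    The offset is zero at t = 0, so time 0 is the canonical configuration."""
    offset = np.zeros_like(x)
    offset[:, 0] = -t
    return offset


def dynamic_density(x, t):
    """D-NeRF-style query: warp into canonical space, then look up the static field."""
    return canonical_field(x + deformation(x, t))


pts = np.array([[0.0, 0.0, 0.0], [0.3, 0.0, 0.0]])
at_start = dynamic_density(pts, 0.0)
later = dynamic_density(pts, 0.3)     # the ball's center has moved to x = 0.3
```

Splitting motion from appearance this way lets every frame share one canonical model instead of learning a separate scene per time step.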

How do NeRFs improve virtual reality experiences?

NeRFs can create highly immersive VR environments by generating photorealistic 3D scenes from real-world images. This capability makes virtual spaces more realistic and responsive to user movement.

For example, museums can use NeRFs to create virtual tours of exhibits, letting users explore in lifelike detail from their homes.

Are NeRFs accessible to small businesses or independent creators?

While the technology is still evolving, platforms are emerging to make NeRFs more accessible. Open-source frameworks and cloud-based tools reduce the need for expensive hardware, enabling smaller teams to experiment with NeRFs.

For example, an indie game developer could use a NeRF tool to capture real objects as 3D assets without a large budget, typically converting the result into a mesh for use in a game engine.

How do NeRFs contribute to scientific research?

NeRFs are attracting interest in fields like astronomy, archaeology, and biology. They can help researchers reconstruct detailed 3D models from image collections, offering new insights into complex phenomena.

For instance, astronomers could use NeRFs to model celestial bodies based on telescope images, while archaeologists might reconstruct ancient ruins from fragmented photos.

Can NeRFs recreate environments with complex lighting and reflections?

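Largely, yes. Because a NeRF’s color output depends on the viewing direction, it can reproduce view-dependent effects such as specular highlights and many reflections, while the shadows and shading present in the photos are baked into the learned appearance. Mirror-like and transparent surfaces remain difficult for the original formulation, and the lighting cannot be changed after capture without specialized extensions.

For instance, a NeRF reconstruction of a building on a sunny day would reproduce the highlights and shadows visible in the photos, although large glass facades, with their sharp mirror reflections, are among the harder cases.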

Are NeRFs compatible with other 3D rendering tools?

Yes, NeRF outputs can often be integrated with traditional 3D tools. Although NeRFs store a scene as a neural network rather than as a polygon mesh, the learned density can be converted into meshes or point clouds that animation pipelines and game engines understand.

For example, filmmakers might use NeRFs to generate realistic background scenes, which are then blended with traditional character animations in software like Blender or Unreal Engine.
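One simple way to move a NeRF into conventional tools is to sample its density on a regular grid, keep the occupied points, and export them in a standard format such as PLY. Marching cubes on the same grid is the usual route to a full mesh; this sketch stops at a point cloud. `density_fn` stands in for a trained model's density query, `sphere_density` is a hypothetical placeholder scene, and the grid bounds, resolution, and threshold are assumptions that need tuning per scene.

```python
import numpy as np


def density_to_points(density_fn, resolution=32, bound=1.0, threshold=1.0):
    """Sample a density function on a regular grid over [-bound, bound]^3 and
    keep the grid points whose density exceeds `threshold`."""
    axis = np.linspace(-bound, bound, resolution)
    grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
    sigma = density_fn(grid)
    return grid[sigma > threshold]


def write_ascii_ply(points, path):
    """Write points as a minimal ASCII PLY file (vertices only)."""
    header = "\n".join([
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ])
    with open(path, "w") as f:
        f.write(header + "\n")
        for x, y, z in points:
            f.write(f"{x:.6f} {y:.6f} {z:.6f}\n")


def sphere_density(x):
    """Hypothetical stand-in for a trained NeRF: a solid ball of radius 0.5."""
    return 10.0 * np.maximum(0.0, 0.5 - np.linalg.norm(x, axis=-1))


points = density_to_points(sphere_density, resolution=21)
# write_ascii_ply(points, "scene_points.ply")  # export for use in other 3D tools
```

A real export would usually also sample color at each kept point and choose the threshold by inspecting the density distribution.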

How do NeRFs contribute to the gaming industry?

NeRFs bring a new level of realism and efficiency to game design by creating photorealistic environments from real-world photos. This reduces time spent on manual asset creation and allows for dynamic scene exploration.

Imagine a game where NeRFs let developers scan real-world locations and integrate them seamlessly into gameplay, making virtual worlds indistinguishable from reality.

Can NeRFs help in creating virtual twins of real-world objects?

Yes, NeRFs are excellent for creating digital twins of objects and environments. These virtual replicas can be used in industries like manufacturing, architecture, and urban planning for visualization, testing, or training purposes.

For example, engineers might use NeRFs to create a digital twin of a factory floor, enabling them to simulate equipment layouts and workflows before making changes in the physical space.

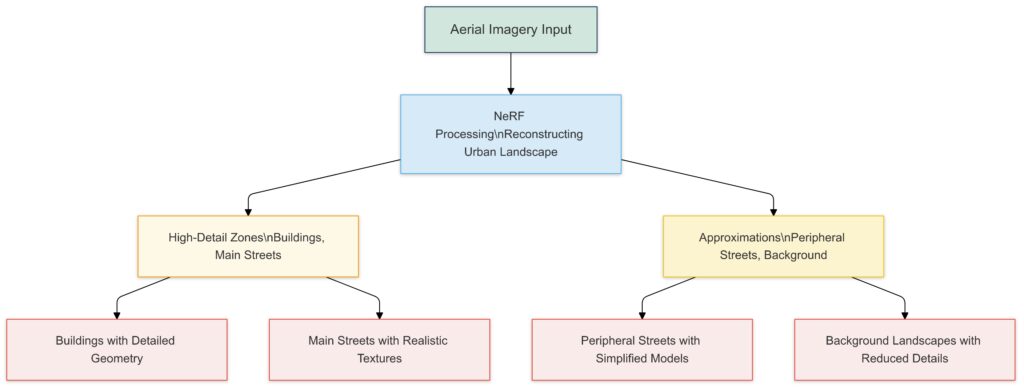

Can NeRFs be used for large-scale reconstructions, like entire cities?

Yes, NeRFs can scale to large and complex environments, such as urban areas or natural landscapes. Approaches such as Block-NeRF split a large scene into many smaller NeRFs trained on separate regions, and combining multiple datasets or aerial imagery can produce expansive 3D models with high levels of detail.

For example, city planners could use NeRFs to visualize proposed infrastructure changes or simulate the impact of new developments on existing neighborhoods.

How do NeRFs handle occluded or hidden parts of a scene?

NeRFs can only infer what the input images constrain. Partially occluded regions that are visible from some viewpoints are reconstructed well, but areas never seen in any image are essentially unconstrained, so reconstructions there are unreliable.

For instance, if only the front of a building appears in the provided images, a standard NeRF has no real information about the back and will produce little more than blur or noise there. Researchers are working on adding learned priors, for example by conditioning the network on features from the input images, to better handle such scenarios.
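Volume rendering itself explains why hidden regions stay vague. Each sample's contribution to a pixel is weighted by the light that reaches it, so material behind an opaque surface receives almost no weight, and therefore almost no training signal from views in which it is hidden. The density values in this sketch are made up for illustration.

```python
import numpy as np


def sample_weights(sigmas, deltas):
    """Volume-rendering weight of each sample: transmittance times opacity."""
    alphas = 1.0 - np.exp(-sigmas * deltas)
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    return trans * alphas


# Hypothetical ray: empty space, a dense "front wall" at samples 3-4,
# then more material hidden behind it.
sigmas = np.array([0.0, 0.0, 0.0, 50.0, 50.0, 5.0, 5.0, 5.0])
deltas = np.full_like(sigmas, 0.1)
weights = sample_weights(sigmas, deltas)
```

Almost all of the weight lands on the first wall sample, while the samples behind it contribute only a tiny fraction, so their colors are barely constrained by this view.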

What role do NeRFs play in augmented reality (AR)?

NeRFs are a natural fit for AR applications, where realistic 3D objects or environments need to integrate seamlessly with real-world views. By reconstructing spaces or objects, they enable AR devices to anchor virtual elements with precision.

For example, furniture retailers could use NeRFs to let customers visualize how a new sofa would look in their living room through an AR app.

Resources for Learning More About Neural Radiance Fields (NeRFs)

Research Papers and Technical Insights

- Original NeRF Paper: “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis” by Mildenhall et al. This groundbreaking paper introduces the concept of NeRFs and provides in-depth technical details about their implementation and capabilities.

- Dynamic NeRFs: “D-NeRF: Neural Radiance Fields for Dynamic Scenes.” This paper explores extensions of NeRFs for handling motion and time-varying scenes.

- Instant Neural Graphics Primitives: Nvidia’s work on optimizing NeRFs for real-time applications.

Open-Source Implementations

- NeRF Codebase on GitHub: A variety of NeRF implementations and tutorials are available, such as the popular GitHub NeRF Repository. These are great for developers who want hands-on experience with building and training NeRF models.

- PlenOctrees: A technique and implementation that converts a trained NeRF into an octree-based representation for real-time view synthesis.

Tutorials and Online Courses

- NeRF Tutorials on YouTube: Channels like Two Minute Papers and CodeEmporium offer accessible tutorials and breakdowns of NeRF technology.

- Machine Learning Mastery Blogs: Tutorials and guides on how to train neural networks for computer vision tasks, often touching on concepts related to NeRFs.

Industry News and Applications

- AI and Graphics Blogs: Nvidia’s Developer Blog and AI-focused publications often discuss the latest advancements in NeRFs.

- arXiv Sanity Preserver: A tool for browsing, searching, and filtering recent arXiv papers, useful for keeping up with research on NeRFs and related topics.

Tools and Platforms

- Blender Add-ons for NeRFs: Blender plugins are now available to integrate NeRFs into 3D workflows.

- Colab Notebooks: Many NeRF projects offer prebuilt Colab notebooks where you can test the models directly in your browser.