What is Topic Modeling?

Topic modeling is a family of unsupervised statistical methods for uncovering latent themes in a collection of documents. It groups words that tend to co-occur into topics and describes each document in terms of those topics, which makes it useful for exploring, organizing, and classifying large text collections.

Imagine you have thousands of articles to sort through. Topic modeling provides a structured way to group them based on similarities in their content. This approach has been widely adopted in content curation, recommendation systems, and academic research.

Its appeal lies in being unsupervised, meaning you don’t need labeled data—it simply works with the raw text corpus. But while effective, its limitations have become more evident in recent years.

Latent Dirichlet Allocation (LDA) Explained

LDA, introduced by Blei, Ng, and Jordan in 2003, is the cornerstone of traditional topic modeling. It operates on the assumption that:

- Documents are mixtures of topics.

- Topics are distributions over words.
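The two assumptions above can be read as a recipe for generating a document. The following is a minimal sketch of that recipe using only the standard library. The two topics and their word probabilities are hand-written for illustration (in LDA proper they are themselves drawn from a Dirichlet prior and then inferred from data), and names such as `generate_document` are hypothetical.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw one sample from a Dirichlet distribution via normalized Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(topics, doc_alpha, length, rng):
    """Pick a topic mixture for the document, then a topic and a word per position."""
    theta = sample_dirichlet(doc_alpha, rng)   # the document is a mixture of topics
    words = []
    for _ in range(length):
        z = rng.choices(range(len(topics)), weights=theta)[0]   # choose a topic
        topic = topics[z]                                       # a distribution over words
        word = rng.choices(list(topic), weights=list(topic.values()))[0]
        words.append(word)
    return theta, words

# Hypothetical, hand-written topics (assumption: not learned from any corpus).
TOPICS = [
    {"football": 0.4, "team": 0.3, "score": 0.3},   # a "sports" topic
    {"bank": 0.4, "loan": 0.3, "interest": 0.3},    # a "finance" topic
]

rng = random.Random(0)
theta, words = generate_document(TOPICS, doc_alpha=[0.5, 0.5], length=8, rng=rng)
print([round(p, 2) for p in theta])   # topic proportions for this document
print(words)                          # words drawn from the mixed topics
```

Fitting LDA runs this story in reverse: given only the words, it estimates the topic mixtures and the topics that most plausibly produced them.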

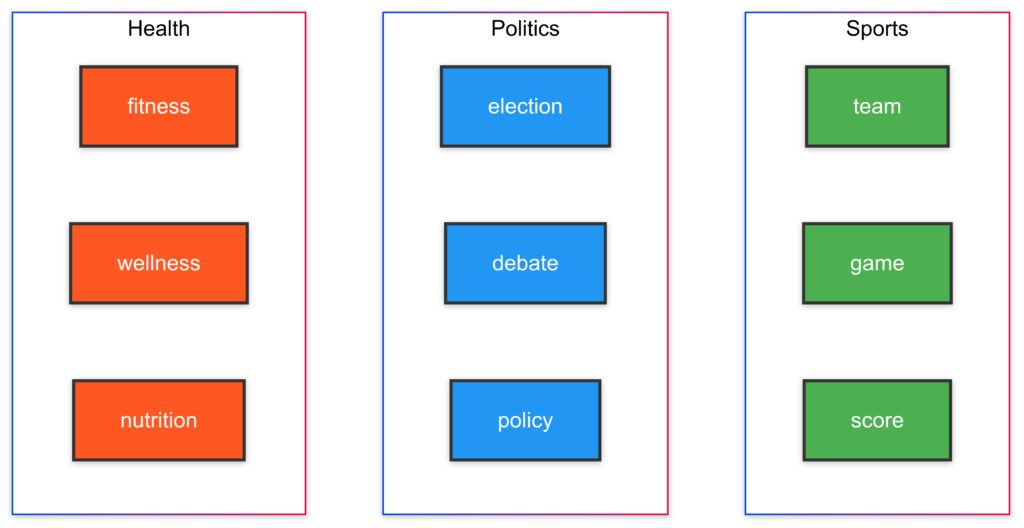

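LDA infers two sets of probabilities from the corpus: a distribution over topics for each document, and a distribution over words for each topic. Words that tend to co-occur end up with high probability in the same topic. For example, a sports topic might assign high probabilities to words like “football,” “team,” and “score,” and a document about sports would place most of its weight on that topic.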

LDA’s simplicity and explainability make it a go-to model for smaller datasets or academic studies. It’s been widely used in market research, summarization, and sentiment analysis.

Limitations of LDA in Modern Contexts

Despite its utility, LDA faces hurdles in today’s complex NLP landscape.

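- Scalability Issues: Classic batch inference (collapsed Gibbs sampling or variational EM) makes repeated passes over the whole corpus, so training cost grows with corpus size, vocabulary size, and the number of topics.

- Semantic Contexts: Every surface form is a single vocabulary entry, so LDA has no built-in notion of word sense. A polysemous word such as “bank” (river or financial) can only be disambiguated indirectly, through the words it co-occurs with.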

- Sparse Representations: It relies on bag-of-words models, ignoring word order and syntax.

As the demands for contextualized and scalable NLP solutions grow, these shortcomings make LDA less competitive.
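To make the bag-of-words limitation concrete, here is a small standard-library sketch. The `bag_of_words` helper is a hypothetical stand-in for a real vectorizer. It shows that two sentences with opposite meanings collapse to the same representation, and that both senses of “bank” share one vocabulary entry.

```python
from collections import Counter

def bag_of_words(text):
    """Lowercase, split on whitespace, and count tokens; word order is discarded."""
    return Counter(text.lower().split())

a = bag_of_words("dog bites man")
b = bag_of_words("man bites dog")
print(a == b)   # True: the two sentences are indistinguishable as bags of words

river = bag_of_words("the river bank flooded")
money = bag_of_words("the bank approved the loan")
print(river["bank"], money["bank"])   # 1 1: both senses are counted under the same key
```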

The Rise of Embedding Models

Embedding models represent words and phrases as dense vectors in high-dimensional space. This innovation stems from methods like word2vec and GloVe, which transformed how NLP handles word relationships.

Key Features:

- Capturing semantics via vector proximity.

- Learning embeddings directly from raw text.

- Enabling deeper context understanding.

Embedding models gained significant traction with their ability to handle large vocabularies while preserving semantic relationships.
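“Vector proximity” is usually measured with cosine similarity. The sketch below uses three hand-picked, 3-dimensional vectors purely for illustration. They are assumptions, not real word2vec or GloVe values, and real embeddings typically have tens to hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy vectors (assumption: chosen by hand, not learned).
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.8, 0.9, 0.1],
    "chair": [0.1, 0.0, 0.9],
}

sim_royal = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
sim_chair = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["chair"])
print(round(sim_royal, 3), round(sim_chair, 3))   # the first value is much larger
```

In a trained model, the same comparison is what powers nearest-neighbor lookups such as “find the words most similar to king.”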

How Transformers Changed the Game

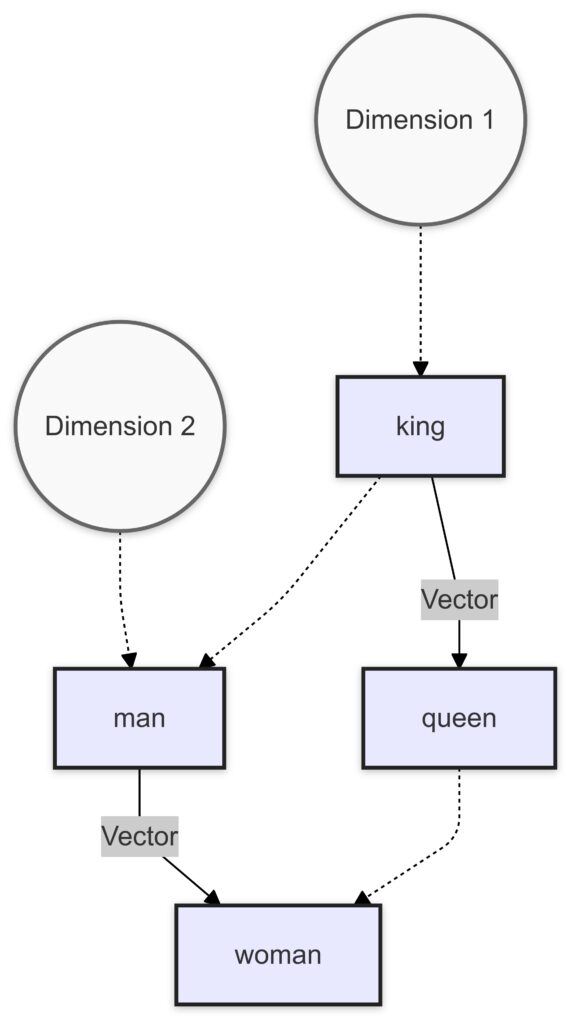

Contextual word embeddings showing semantic relationships and gender analogy.

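The Transformer architecture, introduced by Vaswani et al. in “Attention Is All You Need” (2017) and popularized by models like BERT and GPT, brought major advances to NLP. Unlike LDA, which reduces each document to word counts regardless of where the words appear, Transformers use self-attention to model every token in the context of the whole input sequence.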

Core Benefits:

- Contextualized Embeddings: Words like “bat” are interpreted differently based on their context.

- Scalability: Self-attention processes all tokens of a sequence in parallel, which maps well onto GPUs and TPUs and makes very large corpora tractable.

- Transfer Learning: Pretrained models can be fine-tuned for specific tasks, making them highly versatile.

These capabilities have elevated Transformers to dominate applications like chatbots, search engines, and summarization tools.
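The idea behind contextualized embeddings can be sketched with a stripped-down version of self-attention. This toy uses hand-picked 2-dimensional input vectors and identity projections. Real Transformers apply learned query, key, and value projections, multiple heads, and many stacked layers, so treat this as an illustration of the mechanism rather than of BERT itself.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (a simplification)."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this token to every token in the sequence, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)])
    return outputs

# Hypothetical static (context-free) vectors, chosen by hand.
STATIC = {
    "bat":   [1.0, 1.0],
    "swing": [2.0, 0.0],   # sports-like direction
    "cave":  [0.0, 2.0],   # animal-like direction
}

def contextual_vector(sentence, target):
    vectors = [STATIC[w] for w in sentence]
    return self_attention(vectors)[sentence.index(target)]

sports_bat = contextual_vector(["swing", "bat"], "bat")
animal_bat = contextual_vector(["cave", "bat"], "bat")
print([round(x, 2) for x in sports_bat])   # [1.5, 0.5]
print([round(x, 2) for x in animal_bat])   # [0.5, 1.5]
```

The same input vector for “bat” ends up in two different places depending on its neighbor, which is the property that static embeddings and bag-of-words counts lack.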

Comparing LDA and Embedding Models

Performance on Large Datasets

When it comes to large datasets, LDA’s training procedure becomes a bottleneck. Batch inference revisits every document on each iteration, so training becomes increasingly expensive and time-consuming as the data grows.

In contrast, Transformer-based embedding models parallelize well. A pretrained model such as BERT embeds a document in a single forward pass, and batches of documents can be processed in parallel on GPUs. New documents can be embedded without retraining, so the pipeline adapts to new data without being re-engineered.

For instance, while LDA may struggle with millions of reviews on an e-commerce site, a Transformer-based model can quickly analyze, categorize, and extract insights.

Semantic Understanding: A Critical Gap

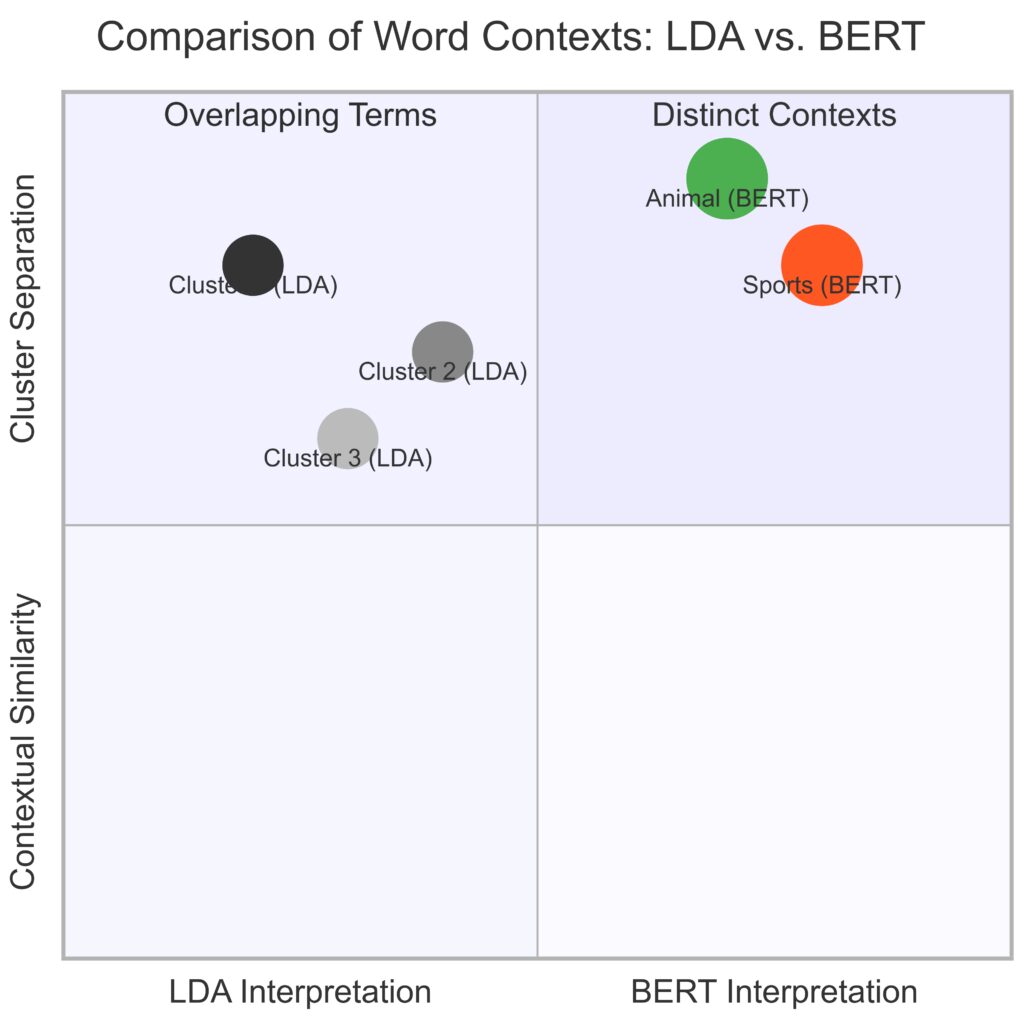

Contextual differentiation of word meanings in BERT compared to LDA’s probabilistic grouping.

LDA’s inability to understand nuanced semantics is one of its most significant drawbacks. It treats words purely as statistical occurrences, often missing subtle meanings or relationships.

Embedding models bridge this gap with contextual embeddings. They can differentiate between polysemous words (e.g., “bank” as a financial institution or riverbank) and consider the sentence context to infer the correct meaning.

Example: In a phrase like “batter swings the bat,” LDA might group “bat” with flying mammals. On the other hand, embedding models understand its sports-related context. This context awareness is crucial in tasks like sentiment analysis and conversational AI.

Interpretability in NLP Models

Interpretability is an area where LDA still has an edge. Its probabilistic nature makes it relatively straightforward to map topics to words, allowing users to clearly see what each topic represents.

Embedding models, while powerful, often lack this clarity. Their dense vector representations and multi-layered architectures can seem like a “black box,” making it harder to explain why a model made a particular decision.

In scenarios where model transparency is vital, such as legal or healthcare applications, LDA might still be preferred despite its limitations.

Speed and Computational Requirements

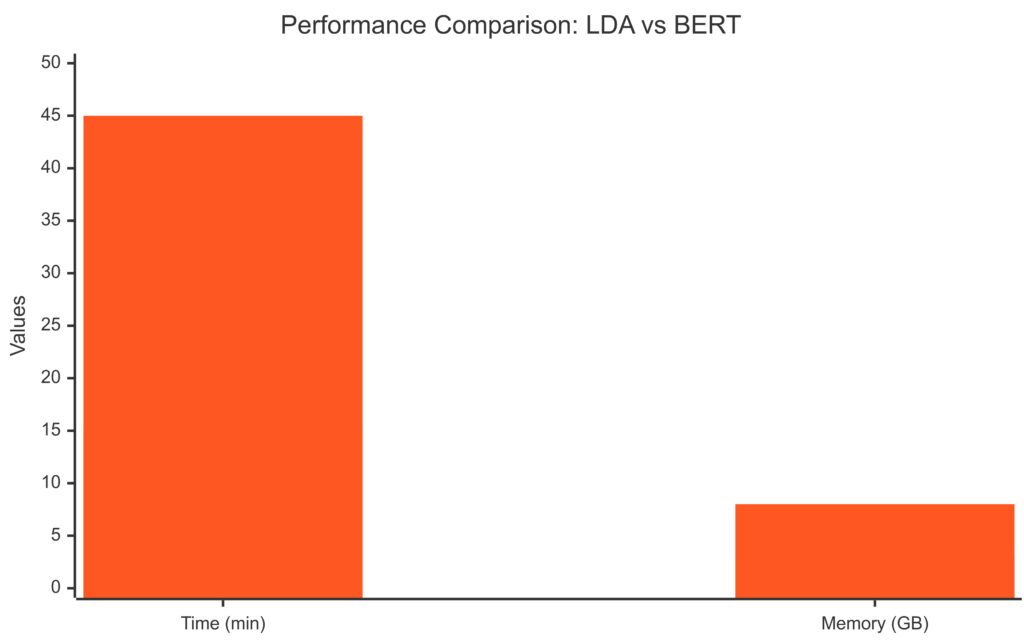

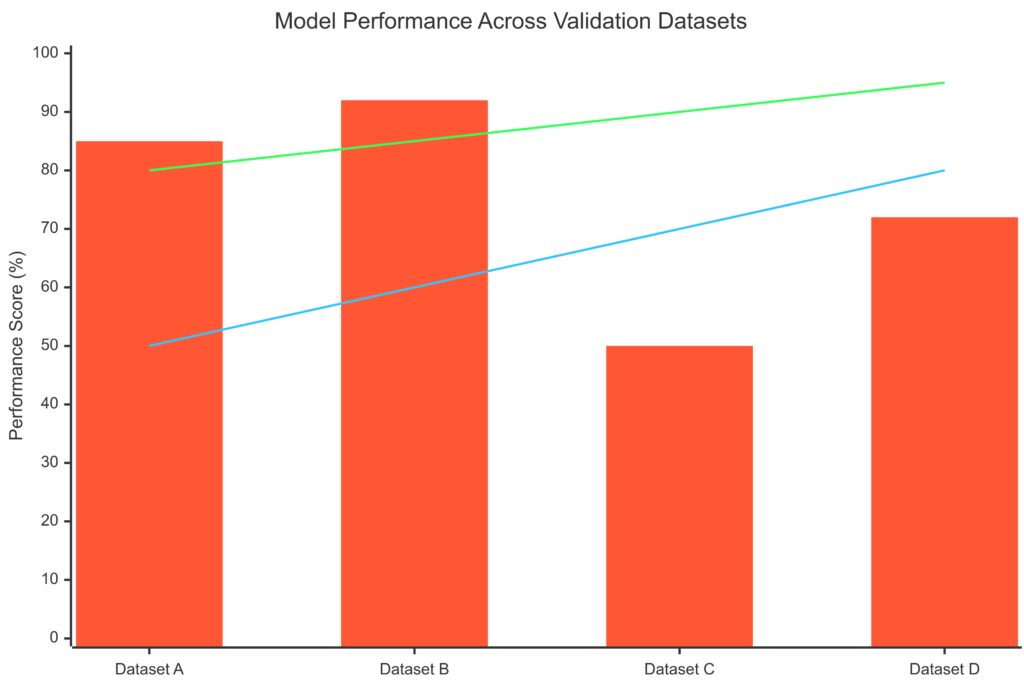

Performance comparison of LDA and BERT in terms of processing time and memory consumption.

LDA has lower computational requirements compared to modern embedding models. It can be implemented with basic CPU resources, making it ideal for settings with limited infrastructure.

Embedding models, especially Transformers, demand substantial computational power and memory. Training the largest language models can require thousands of GPUs and weeks of runtime, although exact figures for proprietary models such as GPT-4 are not public. Even deploying them for inference often requires optimization techniques like distillation or quantization to improve efficiency.
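As a rough illustration of what quantization does, the sketch below applies symmetric 8-bit quantization to a handful of made-up weights. This is one simple scheme among several. Production toolchains add per-channel scales, calibration, and other refinements, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs else 1.0   # guard against all-zero input
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.823, -1.27, 0.034, 0.5, -0.446]      # hypothetical float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)                      # [82, -127, 3, 50, -45]
print(max_error <= scale / 2 + 1e-12)   # True: rounding error is at most half a step
```

Each weight now fits in one byte instead of four, at the cost of a small, bounded rounding error.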

For small-to-medium datasets or resource-constrained environments, LDA’s simplicity and speed still make it a practical choice.

Multilingual and Cross-Domain Applications

LDA’s performance drops significantly when dealing with multilingual corpora or domain-specific texts. It typically requires separate preprocessing for each language, which can be cumbersome.

Embedding models are not multilingual by default, but multilingual variants are widely available. Models like mBERT (Multilingual BERT) and XLM-R (XLM-RoBERTa) were pretrained on text in roughly 100 languages, so a single model can represent text from different languages in a shared vector space.

This cross-domain flexibility has allowed embedding models to dominate fields like translation, cross-lingual search, and global sentiment analysis.

Future Trends and LDA’s Relevance

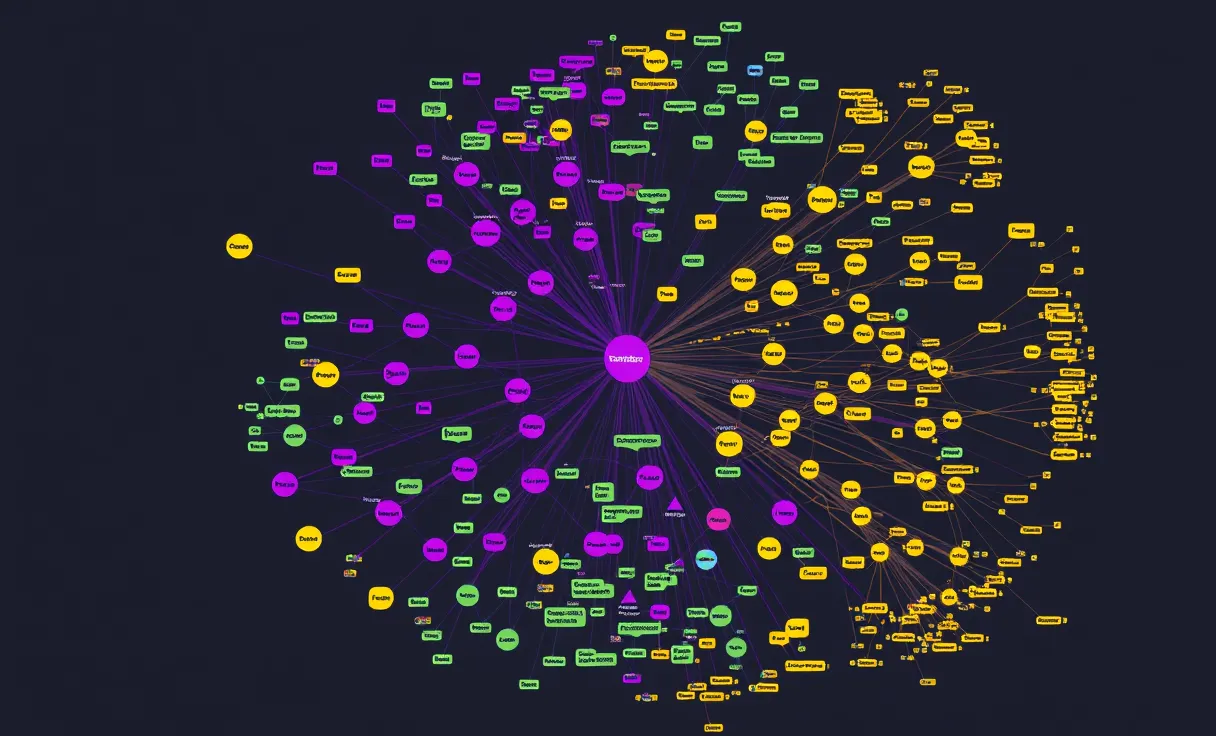

Evolving Hybrid Approaches

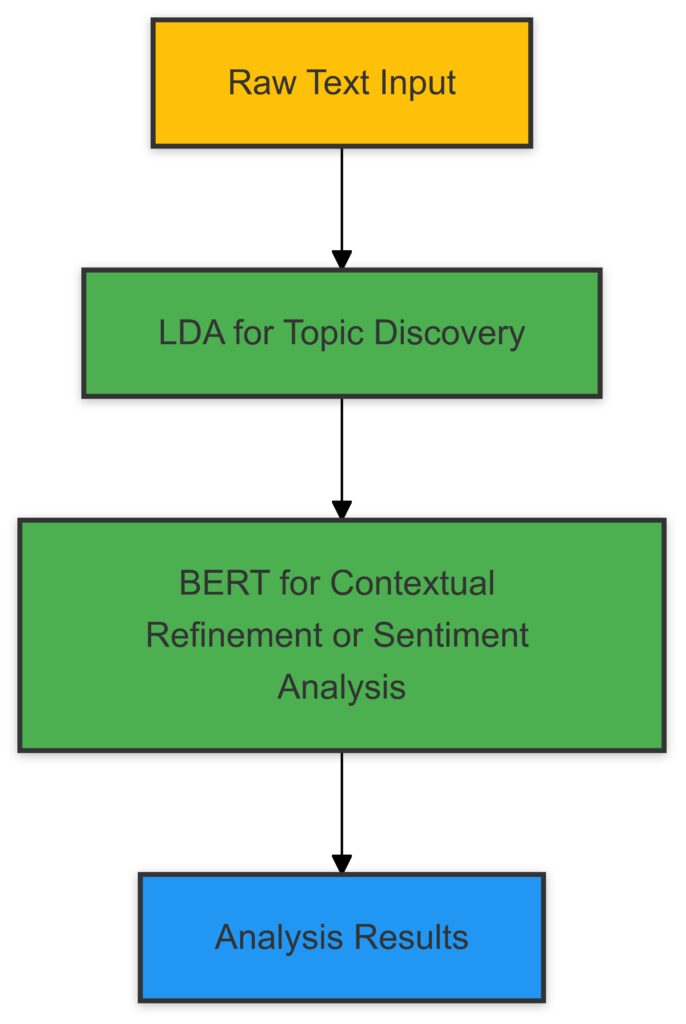

A hybrid NLP pipeline combining topic modeling and contextual embeddings for enhanced analysis.

The future of NLP isn’t just about choosing between LDA and embedding models—it’s about combining the best of both worlds. Hybrid approaches are emerging as powerful tools for tackling complex problems.

For instance, some systems use LDA for initial topic identification and then employ embedding models like BERT to refine results or analyze the context within each topic. This layered approach balances LDA’s interpretability with embedding models’ depth and scalability.

Example Use Case: In legal document analysis, LDA can cluster documents by topic, and embedding models can provide detailed contextual analysis within each cluster.
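A two-stage pipeline of this kind can be sketched as follows. All inputs are hypothetical: in a real system, `topic_weights` would come from a fitted LDA model and `embedding` from a sentence-embedding model. Here both are hard-coded so the control flow is easy to follow.

```python
import math
from collections import defaultdict

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

# Hypothetical documents with made-up LDA topic weights and embeddings.
documents = [
    {"id": "contract_a", "topic_weights": [0.9, 0.1], "embedding": [0.9, 0.1, 0.0]},
    {"id": "contract_b", "topic_weights": [0.8, 0.2], "embedding": [0.8, 0.3, 0.1]},
    {"id": "contract_c", "topic_weights": [0.7, 0.3], "embedding": [0.1, 0.9, 0.2]},
    {"id": "patent_a",   "topic_weights": [0.2, 0.8], "embedding": [0.0, 0.2, 0.9]},
    {"id": "patent_b",   "topic_weights": [0.1, 0.9], "embedding": [0.1, 0.1, 0.8]},
]

# Stage 1: coarse, interpretable grouping by each document's dominant LDA topic.
groups = defaultdict(list)
for doc in documents:
    weights = doc["topic_weights"]
    groups[weights.index(max(weights))].append(doc)

# Stage 2: within each topic, rank documents by embedding similarity to the group centroid.
for topic_id, members in sorted(groups.items()):
    center = centroid([d["embedding"] for d in members])
    ranked = sorted(members, key=lambda d: cosine(d["embedding"], center), reverse=True)
    print(topic_id, [d["id"] for d in ranked])
```

Documents that rank low within their topic are candidates for closer review, since the embeddings suggest they differ from their LDA neighbors.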

LDA in Niche Applications

Despite its limitations, LDA still holds relevance in specific scenarios:

- Small Datasets: For datasets too small to train embedding models effectively, LDA provides a practical and interpretable solution.

- Academic Research: LDA is often used in studies that prioritize simplicity and transparency over state-of-the-art accuracy.

- Low-Resource Environments: Its lower computational needs make it suitable for use in under-resourced settings or edge devices.

LDA’s enduring utility highlights that simpler models aren’t obsolete—they’re just better suited to particular niches.

The Ethical Edge: LDA’s Simplicity

The simplicity of LDA offers an ethical advantage. Because each topic is an explicit list of weighted words, researchers and practitioners can inspect what the model has learned, which makes unintended biases easier to spot and correct.

Embedding models, on the other hand, can perpetuate biases present in training data without users fully understanding how or why. This “black box” nature has raised concerns in areas like hiring algorithms and predictive policing.

For tasks that demand maximum explainability and fairness, LDA remains a trustworthy option, especially when accuracy is secondary to transparency.

Transformers and Beyond

Transformers like BERT and GPT represent the current zenith of NLP, but advancements continue to emerge. Models like ChatGPT and T5 are expanding the boundaries of contextual understanding, while innovations like LoRA (Low-Rank Adaptation) and quantization are making these models more efficient.
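The efficiency gain from LoRA comes down to simple arithmetic: instead of updating a full weight matrix, it trains two thin matrices whose product has the same shape. The sketch below counts trainable parameters for a single 768 × 768 matrix (768 is BERT-base’s hidden size). The rank of 8 is an illustrative assumption, not a recommendation.

```python
def lora_parameter_counts(d_out, d_in, rank):
    """Compare trainable parameters: full weight matrix vs. a rank-r LoRA update."""
    full = d_out * d_in              # fine-tuning every entry of W
    lora = rank * (d_out + d_in)     # B is d_out x r, A is r x d_in; W stays frozen
    return full, lora

full, lora = lora_parameter_counts(d_out=768, d_in=768, rank=8)
print(full, lora, f"{lora / full:.2%}")   # 589824 12288 2.08%
```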

Looking ahead, next-gen Transformer models may address current limitations like resource demands and lack of interpretability, further solidifying their dominance in NLP.

Is LDA Still Relevant Today?

In a world dominated by Transformer-based models, LDA might seem like an underdog. However, its simplicity, interpretability, and practicality in low-resource scenarios ensure it still has a role to play.

For tasks requiring transparency, small datasets, or quick results, LDA remains a viable option. But for large-scale, nuanced, and multilingual tasks, embedding models are the clear winners.

Ultimately, the choice depends on the specific use case, resources, and priorities—proving that both LDA and embedding models have unique strengths to offer.

Conclusion: Balancing Simplicity and Sophistication in NLP

Latent Dirichlet Allocation (LDA) and embedding models, particularly Transformer-based architectures, represent two distinct approaches to solving natural language processing challenges.

LDA thrives in situations where interpretability, simplicity, and lower computational costs are critical. It’s a reliable choice for smaller datasets, academic research, or environments with limited resources. Its transparent probabilistic foundation ensures ease of understanding, even for non-experts.

On the other hand, embedding models like BERT and GPT dominate tasks requiring deep semantic understanding, scalability, and contextualized representations. These models power modern applications such as conversational AI, recommendation systems, and cross-lingual tools, redefining the boundaries of NLP capabilities.

While Transformers have undoubtedly overshadowed LDA in most contexts, hybrid models and specialized use cases demonstrate that LDA is far from obsolete. The choice between these approaches ultimately depends on the project’s scale, goals, and constraints.

As NLP evolves, both LDA and embedding models will continue to influence the field—one offering a foundation of simplicity, the other pushing the boundaries of sophistication. The future likely lies in integrating the strengths of both, ensuring flexibility and effectiveness across diverse applications.

FAQs

Can LDA and embedding models be used together?

Yes! Hybrid approaches are increasingly popular. Combining LDA’s topic clustering with embedding models’ contextual analysis creates robust pipelines.

Example Use Case: In customer review analysis, LDA can group reviews by topics like “price” or “quality.” Then, embedding models can provide detailed sentiment insights within each topic.

How do embedding models handle multilingual text compared to LDA?

Embedding models, especially mBERT and XLM-R, are designed for multilingual tasks. They learn representations across languages, allowing them to process and compare multilingual data seamlessly.

In contrast, LDA must preprocess text separately for each language, requiring significant manual effort to make it work across different languages.

Example: A Transformer-based model could analyze customer feedback in English, Spanish, and Mandarin simultaneously, while LDA would struggle to maintain consistency across languages.

Why are Transformers considered revolutionary in NLP?

Transformers revolutionized NLP by replacing recurrence with self-attention, which lets a model process all positions of a sequence in parallel and produce contextualized embeddings at scale. This efficiency allows models like GPT-4 and BERT to handle tasks such as machine translation, text summarization, and question answering far more accurately than earlier approaches.

Key Example: In text generation, GPT can write entire articles with human-like coherence, while older models (like LDA) would merely cluster related words without producing meaningful text.

Is LDA suitable for large datasets?

LDA can process large datasets, but it is slower and less scalable compared to embedding models. It may struggle with high-dimensional, complex data. For tasks involving millions of documents, embedding models are a better choice due to their efficiency and ability to handle noise.

How do embedding models represent words and sentences?

Embedding models use dense vectors in a high-dimensional space to represent words or sentences. Similar words appear closer together in this space. For example:

- The words “king” and “queen” are closer in vector space than “king” and “chair.”

- Embedding models like BERT also consider sentence context, so “bank” in “river bank” differs from “bank” in “financial bank.”

This contextualization is a game-changer for tasks like machine translation and conversational AI.

What are the ethical concerns with embedding models?

Embedding models can inadvertently amplify biases present in their training data. For example, word associations like “doctor” with men and “nurse” with women reflect societal stereotypes. These biases can influence applications in hiring, policing, or other sensitive areas.

In comparison, LDA’s simpler design offers greater transparency, which can help mitigate some ethical concerns in smaller-scale projects.

Can LDA handle streaming data effectively?

Standard batch LDA struggles with streaming data because inference assumes access to the entire corpus, so incorporating new documents often means retraining from scratch, which can be resource-intensive. Online variational inference (Hoffman, Blei, and Bach, 2010) relaxes this by updating the model one mini-batch at a time, though the vocabulary and the number of topics stay fixed.

For streaming or continuously updating datasets, online LDA, dynamic topic models, or embedding models are better suited. For instance, a pretrained Transformer can embed new documents as they arrive without any retraining, and can be fine-tuned periodically on newer data.
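Retraining from scratch is not the only option in every library. As a hedged sketch, scikit-learn’s `LatentDirichletAllocation` exposes `partial_fit` for mini-batch updates. The batches and the fixed vocabulary below are made up for illustration. A fixed vocabulary is needed because the topic-word matrix cannot grow new columns between updates.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Made-up mini-batches standing in for a document stream.
batches = [
    ["the team won the football match", "the bank approved the loan"],
    ["the striker scored a goal", "interest rates on the loan increased"],
]

# Hypothetical fixed vocabulary, declared up front so every batch has the same columns.
vocabulary = ["team", "football", "match", "goal", "striker",
              "bank", "loan", "interest", "rates"]
vectorizer = CountVectorizer(vocabulary=vocabulary)

lda = LatentDirichletAllocation(n_components=2, random_state=0)
for batch in batches:
    counts = vectorizer.transform(batch)   # words outside the vocabulary are ignored
    lda.partial_fit(counts)                # incremental update, no full retraining

print(lda.components_.shape)               # (2, 9): topics x vocabulary words
```

The trade-off is that genuinely new terms are invisible to the model until it is rebuilt with an extended vocabulary.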

What preprocessing steps are needed for LDA versus embedding models?

LDA typically requires extensive preprocessing:

- Removing stop words.

- Tokenization.

- Lemmatization or stemming.

These steps ensure the bag-of-words representation isn’t cluttered with irrelevant terms.
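The three steps above can be sketched with the standard library alone. The stop-word list is a small hypothetical sample, and `naive_stem` is a toy suffix stripper standing in for a real stemmer or lemmatizer such as those shipped with NLTK or spaCy.

```python
import re

# Small hypothetical stop-word list (assumption: real lists are much longer).
STOP_WORDS = {"the", "a", "an", "and", "is", "was", "were", "of", "to", "in", "it"}

def naive_stem(token):
    """Toy suffix stripper; keeps at least three characters of the stem."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    tokens = re.findall(r"[a-z]+", text.lower())          # tokenization
    tokens = [t for t in tokens if t not in STOP_WORDS]   # stop-word removal
    return [naive_stem(t) for t in tokens]                # stemming

print(preprocess("The players were passing the ball and scored two goals."))
# ['player', 'pass', 'ball', 'scor', 'two', 'goal']
```

The clipped stem “scor” is typical of stemmers. Lemmatization would return the dictionary form “score” instead, at the cost of a heavier dependency.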

Embedding models, especially Transformer-based ones, need far less manual preprocessing. Models like BERT apply their own subword tokenizer (WordPiece) to nearly raw text and keep stop words, word order, and inflections, since these carry contextual information.

Example: In analyzing reviews, LDA may need “good” and “better” to be reduced to their root forms, while embedding models inherently understand their relationship.

How does scalability differ between LDA and embedding models?

LDA’s performance deteriorates as datasets grow because it must compute probabilistic distributions for all documents and topics. Embedding models scale more efficiently, especially with GPU or TPU acceleration.

Example: Processing a corpus of millions of tweets:

- LDA would take hours or days to converge.

- Embedding models like DistilBERT (a lightweight Transformer) could process and analyze the data in a fraction of the time.

Do embedding models require labeled data to work effectively?

Not necessarily. Many embedding models are pretrained on unlabeled data using self-supervised learning techniques. For example, BERT was trained on vast corpora of text without explicit labels.

However, for specific tasks (e.g., classification or summarization), embedding models benefit from fine-tuning on labeled datasets. LDA, being unsupervised, requires no labeled data at any stage.
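Self-supervision means the training labels are carved out of the text itself. The sketch below builds one masked-word training example from a raw sentence. It is a simplification of BERT’s masked language modeling objective, which masks about 15% of subword tokens and applies additional replacement rules. The helper name is hypothetical.

```python
import random

MASK = "[MASK]"

def make_masked_example(sentence, rng):
    """Hide one token; the hidden token itself becomes the training label."""
    tokens = sentence.split()
    position = rng.randrange(len(tokens))
    label = tokens[position]
    masked = tokens[:position] + [MASK] + tokens[position + 1:]
    return " ".join(masked), label

rng = random.Random(0)
original = "the bank approved the loan"
masked_text, label = make_masked_example(original, rng)
print(masked_text, "->", label)   # the model is trained to predict the label from context
```

No human annotation is involved at any point, which is why such models can be pretrained on very large unlabeled corpora.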

Are there lightweight alternatives to Transformers for smaller projects?

Yes! If Transformers are overkill for your project, consider simpler embedding models like:

- word2vec or GloVe for basic word embeddings.

- FastText for efficient text classification.

These models are lightweight and can run on modest hardware, making them suitable for small-scale projects or prototyping.

For example, a local business analyzing 1,000 customer reviews might use GloVe embeddings paired with a simple classifier, skipping the complexity of Transformer architectures.

Resources

Beginner-Friendly Guides and Tutorials

- Topic Modeling with LDA

  - Official LDA Paper by Blei, Ng, and Jordan: The foundational paper explaining Latent Dirichlet Allocation (technical but essential reading).

  - Towards Data Science: A Beginner’s Guide to Topic Modeling with Python: Practical, hands-on tutorial for implementing LDA.

- Understanding Embedding Models

  - Google’s Word2Vec Explainer: A comprehensive introduction to word embeddings.

  - Stanford’s GloVe Documentation: Overview and downloadable pre-trained embeddings.

  - BERT Explained: NLP’s Transformer: Visual and easy-to-digest explanation of BERT and Transformers.

Advanced Resources

- Books

  - Speech and Language Processing by Dan Jurafsky and James H. Martin: Covers LDA, embeddings, and modern NLP techniques.

  - Deep Learning for Natural Language Processing by Palash Goyal et al.: Focuses on embedding models and neural architectures.

- Papers on Transformers

  - Attention Is All You Need: The landmark paper introducing the Transformer architecture.

  - BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding: A deep dive into the mechanics of BERT.

Tools and Libraries

- Python Libraries for LDA

  - Gensim: Gensim Documentation: Popular library for building LDA models.

  - Scikit-learn: LDA Implementation: Easy-to-use implementation of LDA in Python.

- Libraries for Embedding Models

  - Hugging Face Transformers: Transformers Library: A hub for pre-trained models like BERT and GPT.

  - spaCy: spaCy NLP Library: Includes word embeddings and supports Transformer pipelines.

Online Courses and Tutorials

- Coursera

  - Natural Language Processing Specialization: Offered by DeepLearning.AI. Covers embedding models and modern NLP.

- YouTube Channels

  - StatQuest with Josh Starmer: Beginner-friendly explanations of machine learning and statistics concepts.

  - Jay Alammar: Visual guides to Transformers and BERT.

Datasets for Experimentation

- 20 Newsgroups Dataset: Sklearn’s 20 Newsgroups: A classic dataset for experimenting with topic modeling.

- Common Crawl: Common Crawl Data: Massive web-scraped dataset ideal for testing embedding models.

- Kaggle Datasets: Kaggle: Offers a variety of datasets for NLP tasks.

Communities and Forums

- Reddit

  - r/MachineLearning: Discussions, papers, and resources on LDA and embeddings.

  - r/NLP: Focused on natural language processing advancements.

- GitHub Repositories

  - Hugging Face Model Hub: Pre-trained Transformer models.

  - LDA Notebook Examples: Practical examples of topic modeling with Gensim.