Why Domain-Specific Dataset Curation Matters

Achieving Relevant and Accurate Results

When fine-tuning OpenAI models, the dataset shapes the model’s understanding of the domain. A generic dataset won’t cut it if you’re aiming for precision in specific fields like medicine, finance, or engineering. By curating a focused dataset, you’re ensuring the model reflects the nuances of your domain.

Think about it: a well-prepared dataset is like a perfectly tailored suit—everything fits just right. In AI terms, this means relevant outputs and fewer inaccuracies.

Reducing Noise in Model Outputs

Noise refers to irrelevant, misleading, or incorrect information in the model’s responses. With a poorly curated dataset, you’ll see more noise creeping in, making outputs less reliable. By selecting high-quality, focused data, you set the tone for clarity and relevance.

Increasing Fine-Tuning Efficiency

A refined dataset minimizes wasted computation during training. Instead of wading through irrelevant information, the model learns from fewer, more relevant examples, saving time and resources while achieving better results.

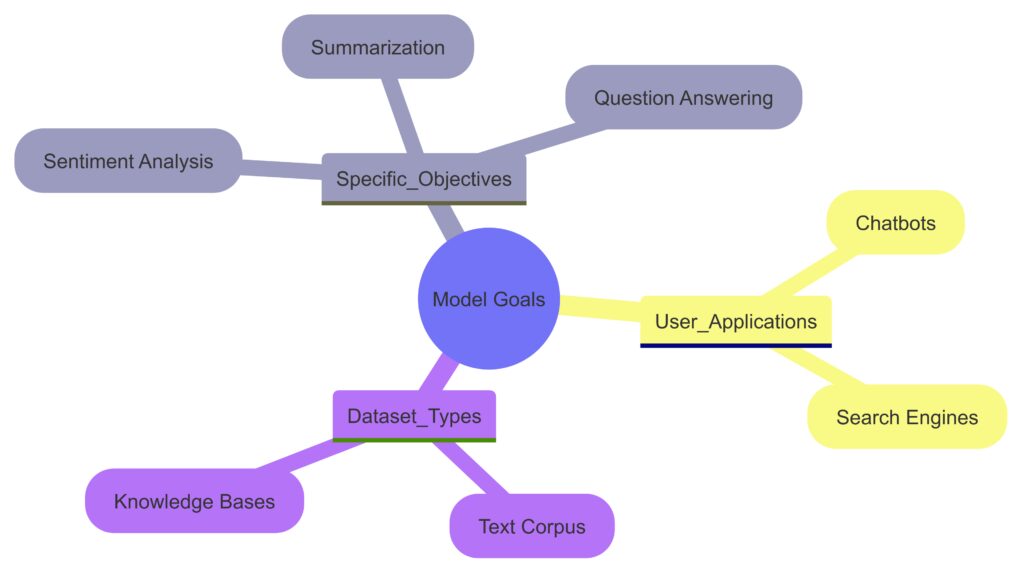

Defining Goals for Fine-Tuning

User Applications like Chatbots and Search Engines.

Dataset Types like Text Corpus and Knowledge Bases.

Clarify Your Objective

Before gathering data, ask: What do I want my model to achieve? Whether it’s summarization, sentiment analysis, or question answering, your dataset should align with this purpose.

For example, if you’re building a model for legal document review, include case law, statutes, and contracts. Avoid adding news articles or general commentary—they’ll dilute your results.

Map Your Use Case

Consider your target users and applications. Will your fine-tuned model serve researchers, general users, or niche professionals? This helps you define the scope and specificity of your dataset.

Balance Broadness and Specificity

A narrow focus ensures depth, but too much specificity can make your model less adaptable. For instance, a dataset on “European contract law” is specific enough to excel in its domain but broad enough to handle a range of tasks within that context.

Sources for Building Quality Datasets

Crowdsourced Data balances cost and availability but has moderate accuracy.

Open Data Repositories are highly available but costlier.

Proprietary Data has the highest accuracy but lower availability and high cost.

Primary Domain-Specific Sources

Identify the gold standards in your domain. For example:

- Medical field: Peer-reviewed journals, clinical guidelines.

- Finance: Annual reports, market analyses.

- Education: Textbooks, verified online resources.

When curating, prioritize reliability and credibility over convenience.

Crowdsourced or Open Data Repositories

Open data platforms like Kaggle, Data.gov, or PubMed offer domain-specific datasets. However, review these sources critically, because quality varies widely.

Company-Specific Data

If you’re creating a model for internal use, leverage proprietary data such as reports, user feedback, or customer interactions. This gives you a unique edge, ensuring your dataset reflects real-world use cases.

Avoiding Low-Quality Sources

Steer clear of user-generated content like random blogs or unverified forums. While it might be tempting to bulk up your dataset, these sources often introduce biases and inaccuracies.

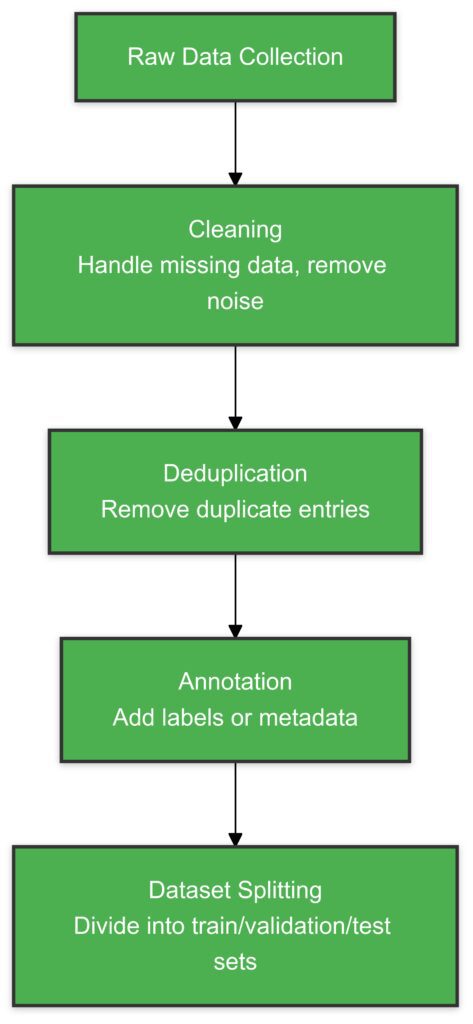

Preprocessing Your Dataset

Key preprocessing steps to transform raw data into a well-structured and usable dataset for fine-tuning.

Cleaning and Formatting

Raw data is often messy. Use techniques like:

- Deduplication: Remove repeated entries.

- Standardization: Ensure consistent formats for dates, units, and terminology.

- Noise Removal: Exclude incomplete, irrelevant, or misleading data.

A clean dataset is the foundation of successful fine-tuning.
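The three cleaning steps above can be sketched with the Python standard library alone. This is a minimal illustration, not a complete pipeline: the helper names (`normalize_text`, `clean_records`) and the 20-character cutoff are assumptions chosen for the example, and real projects usually need domain-specific rules on top.

```python
import re
import unicodedata


def normalize_text(text: str) -> str:
    """Standardize Unicode forms, collapse whitespace, and trim the ends."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_records(records: list[str], min_chars: int = 20) -> list[str]:
    """Return normalized, de-duplicated records, dropping very short ones."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for raw in records:
        text = normalize_text(raw)
        if len(text) < min_chars:   # noise removal: too short to be useful
            continue
        key = text.casefold()       # deduplication ignores case differences
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = [
    "The  lessee shall pay rent monthly.\n",
    "the lessee shall pay rent monthly.",
    "N/A",
    "Either party may terminate with 30 days' notice.",
]
cleaned = clean_records(raw)
```

Here the second entry is dropped as a case-insensitive duplicate and "N/A" is dropped as noise, leaving two records.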

Annotating and Labeling

For supervised tasks, annotations are key. Ensure high-quality, consistent labels for categories, sentiments, or intents. Consider involving domain experts to reduce labeling errors.

Handling Imbalanced Data

An unbalanced dataset skews results. If one category dominates, the model might struggle with underrepresented categories. Use data augmentation or oversampling to level the playing field.
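As a rough sketch of oversampling, the snippet below duplicates minority-class examples at random until every class matches the largest one. The `oversample` helper and the `label` field name are assumptions for illustration; the fraud labels are made-up sample data.

```python
import random
from collections import Counter, defaultdict


def oversample(examples, label_key="label", seed=0):
    """Duplicate minority-class examples until every class matches the largest."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for ex in examples:
        by_label[ex[label_key]].append(ex)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced


data = [{"text": f"ok {i}", "label": "non-fraud"} for i in range(8)]
data += [{"text": f"bad {i}", "label": "fraud"} for i in range(2)]
balanced = oversample(data)
counts = Counter(ex["label"] for ex in balanced)
```

Apply this to the training split only. Oversampling before splitting would place copies of the same example in both training and test data and inflate your test scores.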

Splitting Data Strategically

Divide your dataset into training, validation, and test sets. A good rule of thumb:

- 70% for training

- 15% for validation

- 15% for testing

Holding out validation and test data lets you detect overfitting and check that the model generalizes to examples it has not seen.
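A 70/15/15 split can be sketched in a few lines. The `split_dataset` helper below is an assumption for illustration: it shuffles once with a fixed seed so the split is reproducible, then slices the shuffled list.

```python
import random


def split_dataset(examples, train=0.70, val=0.15, seed=42):
    """Shuffle once, then slice into train/validation/test partitions."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (
        items[:n_train],
        items[n_train:n_train + n_val],
        items[n_train + n_val:],   # whatever remains becomes the test set
    )


train_set, val_set, test_set = split_dataset(range(100))
```

If your records are grouped (for example, several excerpts from the same contract), consider splitting by group instead so that near-identical text does not end up on both sides of the split.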

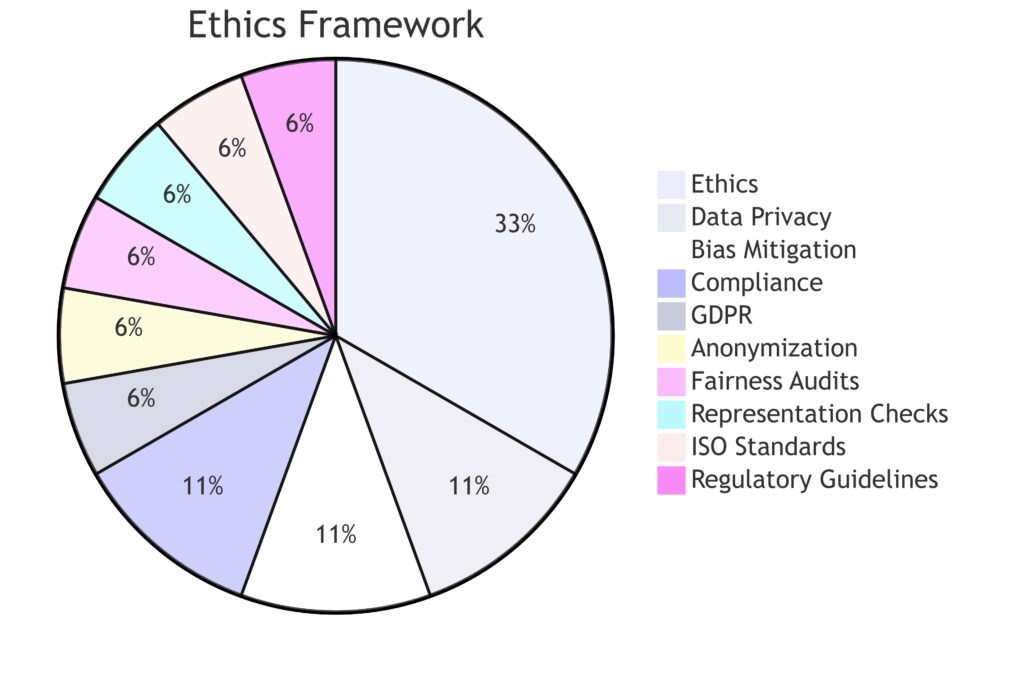

Ensuring Ethical and Legal Compliance

Core ethical considerations and legal requirements in dataset curation for responsible AI development.

Respect Copyright Laws

When curating a dataset, verify that each source is either in the public domain or licensed for your intended use. This avoids legal headaches and maintains ethical integrity.

Avoid Bias in Data Selection

Bias in, bias out. Be mindful of potential biases in your dataset. For instance:

- In hiring-related datasets, avoid over-representing one demographic.

- For medical models, include data from diverse populations to ensure fair outcomes.

Data Privacy and Security

If your dataset includes sensitive information, like personal data or proprietary details, anonymize it. Techniques like k-anonymity can help reduce re-identification risk.

Failing to prioritize privacy might not only break trust but also violate laws like GDPR or CCPA.
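To make k-anonymity concrete: a table is k-anonymous if every combination of quasi-identifier values (attributes that could be combined to single someone out) is shared by at least k rows. The sketch below only measures k; the `k_anonymity` helper, the column names, and the sample rows are all assumptions for illustration.

```python
from collections import Counter


def k_anonymity(rows, quasi_identifiers):
    """Return the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


rows = [
    {"age_band": "30-39", "zip3": "941", "diagnosis": "asthma"},
    {"age_band": "30-39", "zip3": "941", "diagnosis": "diabetes"},
    {"age_band": "40-49", "zip3": "100", "diagnosis": "asthma"},
    {"age_band": "40-49", "zip3": "100", "diagnosis": "flu"},
    {"age_band": "40-49", "zip3": "100", "diagnosis": "asthma"},
]
k = k_anonymity(rows, ["age_band", "zip3"])
```

Here the smallest group has two rows, so the table is 2-anonymous with respect to age band and ZIP prefix. If k comes out as 1, at least one person is uniquely identifiable from those columns, and you would generalize values further (wider age bands, shorter ZIP prefixes) or suppress the outlying rows.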

Common Pitfalls in Dataset Curation

Using Overly Generalized Data

A generic dataset may include irrelevant examples, leading to poor fine-tuning outcomes. Imagine training a customer support chatbot with Wikipedia data—it won’t deliver the specific tone or answers users expect. Stick to domain-specific sources instead.

Neglecting Diverse Scenarios

Your dataset should cover a range of possible use cases. For instance, an insurance model shouldn’t only handle simple claims but also include edge cases like complex litigation or disputes.

Failing to include diverse examples leads to a model that’s brittle when faced with the unexpected.

Overfitting on Training Data

A dataset that’s too narrow or repetitive risks overfitting. This happens when the model memorizes exact patterns instead of generalizing. A practical example? Overfitting would make a legal model struggle with documents it hasn’t explicitly seen before.

Underestimating Data Quality Issues

Poor-quality data often flies under the radar during curation but wreaks havoc during fine-tuning. Typos, inconsistencies, and irrelevant records all dilute the model’s performance.

Ignoring Dataset Size

Too small? Your model won’t learn enough. Too large? Training becomes costly and time-intensive. Aim for balance—enough data to capture domain nuances but not so much that training efficiency suffers.

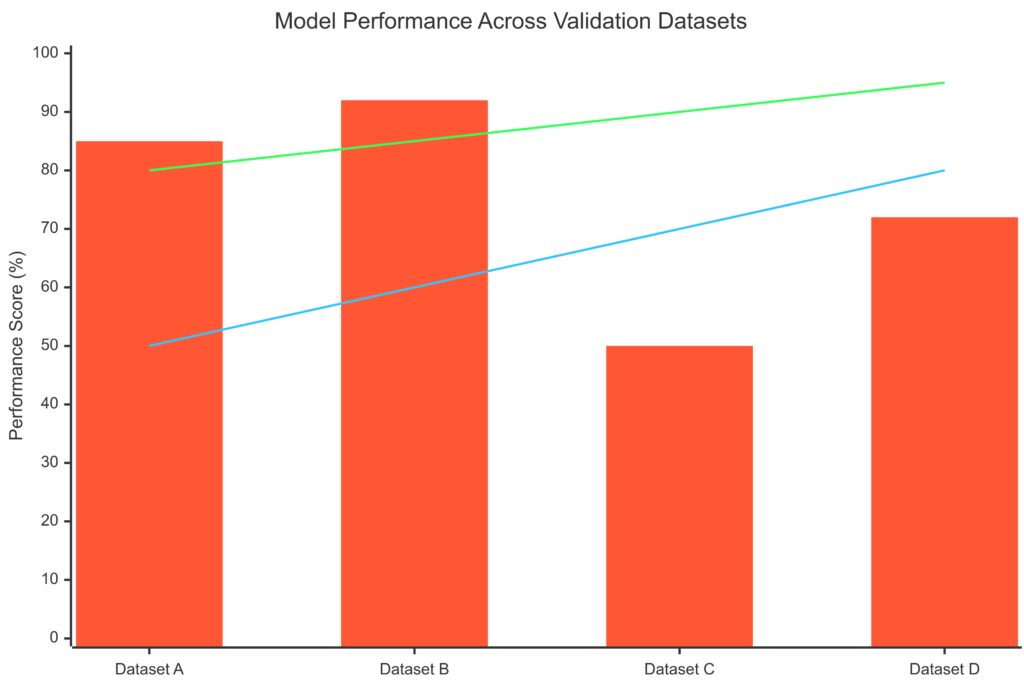

Evaluating Dataset Effectiveness

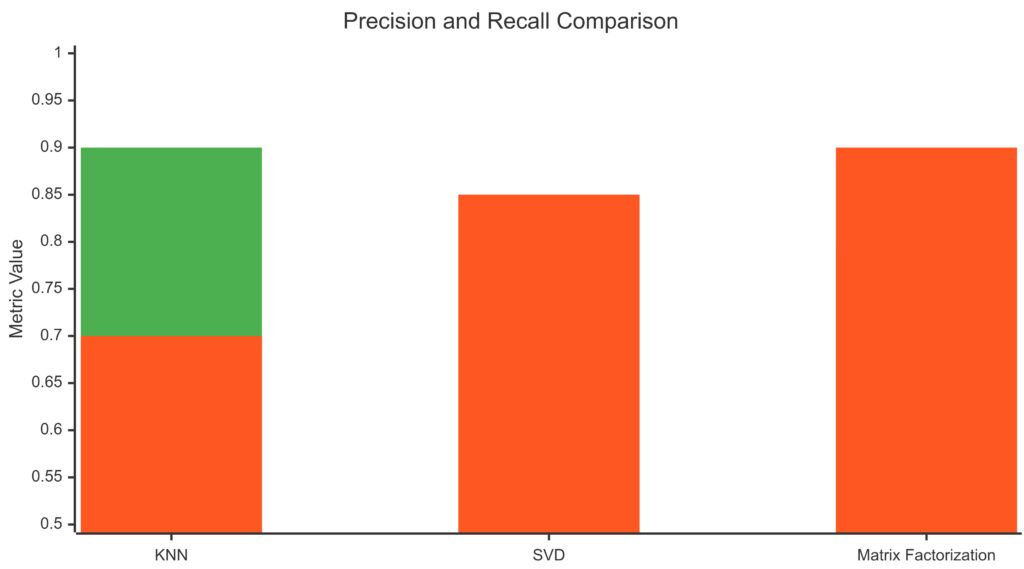

Tracking Model Performance Metrics

After fine-tuning, measure how well your model performs on validation and test datasets. Key metrics include:

- Accuracy: How often are the outputs correct?

- Precision and Recall: For classification tasks, precision is the share of predicted positives that are correct, and recall is the share of actual positives the model finds.

- Perplexity: For language models, lower perplexity means the model assigns higher probability to held-out text, which usually tracks fluency.

If metrics fall short, revisit your dataset—chances are, it’s lacking or skewed.
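For reference, the metrics above reduce to a few lines of arithmetic. This sketch assumes you already have gold labels, model predictions, and (for perplexity) per-token natural-log probabilities; the function names and sample labels are illustrative only.

```python
import math


def accuracy(y_true, y_pred):
    """Share of predictions that match the gold label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive):
    """Precision and recall for one class treated as the positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


y_true = ["fraud", "ok", "ok", "fraud", "ok"]
y_pred = ["fraud", "ok", "fraud", "ok", "ok"]
acc = accuracy(y_true, y_pred)
prec, rec = precision_recall(y_true, y_pred, positive="fraud")
ppl = perplexity([math.log(0.5)] * 4)
```

In this toy case accuracy is 0.6 while precision and recall for "fraud" are both 0.5, which shows why accuracy alone can hide weak performance on the class you care about.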

Conducting Domain-Specific Tests

Test your fine-tuned model with real-world tasks. For example:

- In healthcare, ask the model to recommend treatments and compare its answers to clinical guidelines.

- In finance, have it summarize market trends and verify against expert analyses.

Feedback from domain experts can also uncover hidden flaws in your dataset.

Iterative Improvement

Fine-tuning isn’t a one-and-done process. Update your dataset regularly with new, high-quality data, especially as your domain evolves. This keeps the model relevant and sharp.

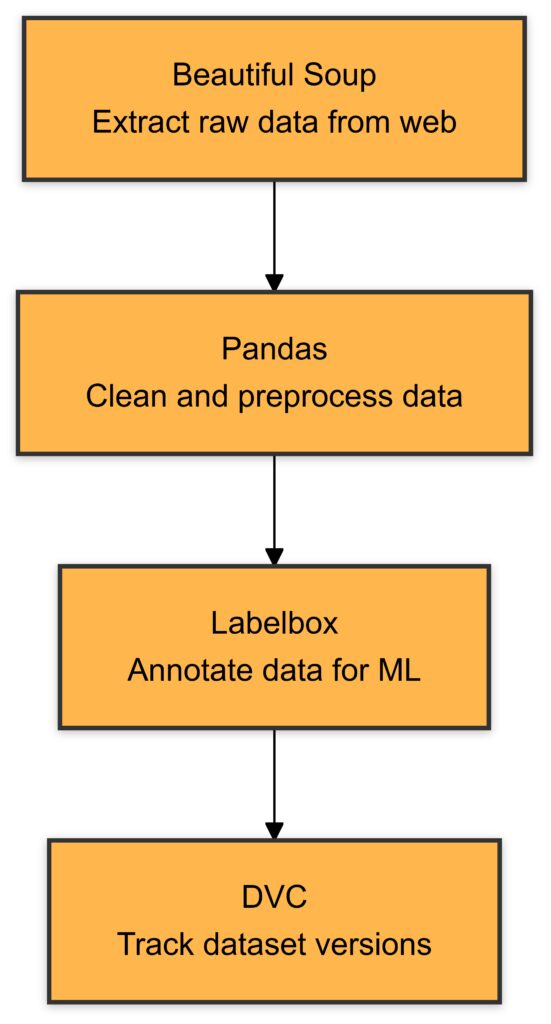

Leveraging Tools and Technologies for Dataset Curation

Tools and technologies streamline dataset curation, from collection to management, for domain-specific fine-tuning.

Automated Data Collection Tools

Manual dataset curation is time-consuming. Automated tools streamline the process by scraping, sorting, and categorizing domain-specific data. Some popular options include:

- Beautiful Soup: Ideal for scraping structured data from websites.

- Google Cloud Dataflow: For processing large-scale datasets.

- Pandas: A versatile library for cleaning and preprocessing datasets.

These tools reduce human error and help ensure consistent formatting.

Annotation Platforms

For tasks requiring labeled data, use annotation platforms to assign labels efficiently. Labelbox and Prodigy allow domain experts to annotate datasets while tracking quality.

Built-in model assistance accelerates the process by suggesting labels based on patterns in already-annotated data. This reduces repetitive work and speeds up fine-tuning preparation.

Version Control for Datasets

Tools like DVC (Data Version Control) or Git LFS help track changes to datasets. This is especially useful when iterating on fine-tuning projects. You can roll back to previous versions or compare performance across different dataset versions.
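To see why content-based versioning is useful, here is a small standard-library stand-in. It is not how any particular tool works internally; `dataset_fingerprint` is a hypothetical helper that hashes each record and then hashes the sorted record hashes, so the fingerprint changes when content changes but not when rows are merely reordered.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Order-independent SHA-256 over the canonical JSON form of each record."""
    record_hashes = sorted(
        hashlib.sha256(
            json.dumps(r, sort_keys=True, ensure_ascii=False).encode("utf-8")
        ).hexdigest()
        for r in records
    )
    return hashlib.sha256("\n".join(record_hashes).encode("utf-8")).hexdigest()


v1 = [{"text": "Clause A", "label": "contract"}, {"text": "Ruling B", "label": "case"}]
v1_reordered = list(reversed(v1))
v2 = v1 + [{"text": "Statute C", "label": "statute"}]

same = dataset_fingerprint(v1) == dataset_fingerprint(v1_reordered)
changed = dataset_fingerprint(v1) != dataset_fingerprint(v2)
```

Logging a fingerprint like this next to each fine-tuning run lets you tell later which dataset version produced which model.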

Scaling Dataset Curation

Modular Dataset Design

Instead of creating a single monolithic dataset, divide it into modular subsets by topic or task. For instance:

- A legal chatbot dataset can have separate modules for contracts, litigation, and compliance.

- A healthcare model could include subsets for cardiology, oncology, and pharmacology.

This makes updates easier and ensures scalability for future expansions.

Active Learning for Dataset Growth

Active learning identifies examples where the model struggles most, then prioritizes them for labeling. This helps expand the dataset intelligently while avoiding redundant data.

For example:

- If a customer support bot misclassifies refund-related queries, add more refund examples to its dataset.

- In medical models, focus on conditions that generate ambiguous predictions.
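One common way to find the examples a model struggles with is uncertainty sampling: rank unlabeled examples by the entropy of the predicted class probabilities and send the most uncertain ones to annotators. The sketch below assumes you can obtain class probabilities from your classifier; the function names, example IDs, and probabilities are made up for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """predictions: list of (example_id, class_probabilities) pairs."""
    ranked = sorted(predictions, key=lambda item: entropy(item[1]), reverse=True)
    return [example_id for example_id, _ in ranked[:budget]]


predictions = [
    ("q1", [0.98, 0.01, 0.01]),   # confident
    ("q2", [0.40, 0.35, 0.25]),   # very uncertain
    ("q3", [0.55, 0.44, 0.01]),   # torn between two classes
    ("q4", [0.90, 0.05, 0.05]),   # fairly confident
]
to_label = select_for_labeling(predictions, budget=2)
```

With a budget of two, the examples selected are `q2` and `q3`, the two with the flattest probability distributions.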

Crowdsourced Data Collection

Platforms like Amazon Mechanical Turk or CrowdFlower (later renamed Figure Eight and now part of Appen) enable rapid dataset scaling. However, set strict quality controls, since crowdsourced labels can vary in accuracy. Combine this approach with expert validation for best results.

Data Augmentation Techniques

When data is scarce, use augmentation to artificially increase variety. Techniques include:

- Synonym Replacement: Replace words with synonyms to diversify text.

- Paraphrasing: Use tools like OpenAI’s own models to generate alternate phrasing for sentences.

- Noise Injection: Add minor errors to simulate real-world conditions (e.g., typos or missing words).
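Two of these techniques are simple enough to sketch without external libraries. The synonym table below is a hypothetical hand-written one (in practice you would draw it from a domain glossary or a thesaurus resource), and the typo function is one simple form of noise injection: swapping two adjacent characters.

```python
import random

# Hypothetical synonym table for a customer-support domain.
SYNONYMS = {"refund": ["reimbursement"], "order": ["purchase"]}


def replace_synonyms(text, synonyms, rng):
    """Replace every word that has an entry in the synonym table."""
    words = text.split()
    return " ".join(rng.choice(synonyms[w]) if w in synonyms else w for w in words)


def inject_typo(text, rng):
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


rng = random.Random(7)   # fixed seed so augmented data is reproducible
original = "I want a refund for my order"
variant = replace_synonyms(original, SYNONYMS, rng)
noisy = inject_typo(original, rng)
```

Review a sample of augmented text by hand before training on it, because blind synonym replacement can change meaning in specialized domains.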

Testing Dataset Robustness

Measuring dataset robustness by evaluating model performance across diverse validation datasets and tasks.

Cross-Domain Testing

Test a model fine-tuned on your dataset across different but related tasks to check versatility. For instance:

- A model fine-tuned for summarization should still handle question answering within the same domain reasonably well.

- In financial models, test both historical trends and real-time predictions.

If the model struggles, refine or expand your dataset.

Benchmark Against Established Datasets

Compare your model’s performance on a domain-specific benchmark dataset, such as:

- SQuAD for QA models.

- MedQA for healthcare-related tasks.

- Financial PhraseBank for finance.

This shows how your model compares with published results and helps identify gaps in your dataset.

Adversarial Testing

Expose the model to challenging examples to test robustness. For instance, provide ambiguous or contradictory inputs and observe how well the model handles them.

- In legal datasets, include hypothetical cases.

- In e-commerce datasets, test with slang or informal phrasing.

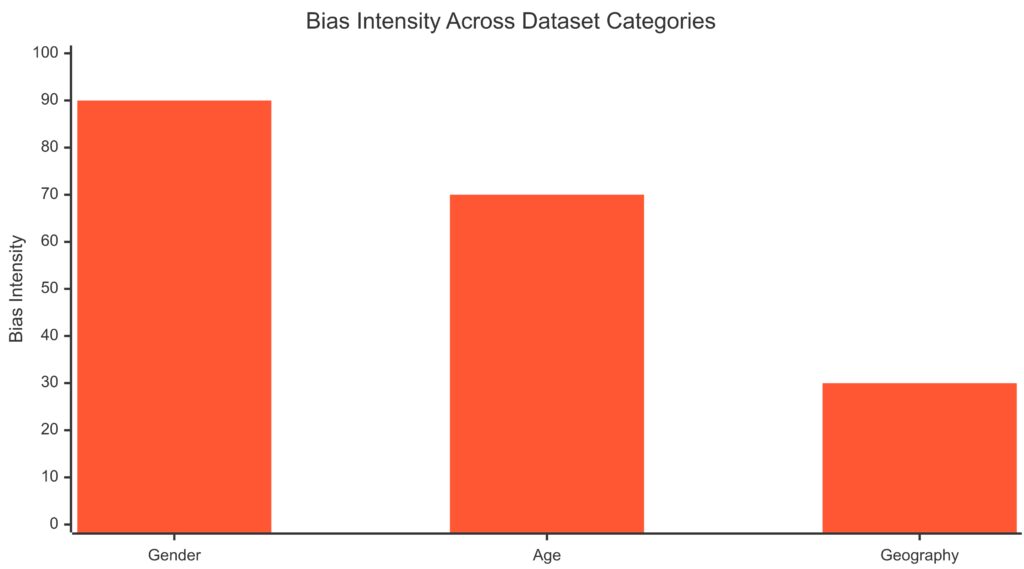

Handling Biases in Dataset Curation

Identifying and addressing bias in datasets to ensure fair and accurate AI models.

Identifying Biases

Biases often stem from unbalanced datasets or the overrepresentation of specific groups. Common pitfalls include:

- Gender or racial bias in hiring-related datasets.

- Geographic bias in healthcare datasets, favoring data from developed countries.

Perform regular audits to uncover patterns in predictions that signal underlying biases.
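A basic audit can start with per-group selection rates: the share of positive predictions each group receives. The sketch below is a plain-Python illustration with made-up group labels and predictions; the gap between the highest and lowest rate is often called the demographic parity difference.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]   # 1 = shortlisted, 0 = rejected

rates = selection_rates(groups, predictions)
gap = max(rates.values()) - min(rates.values())
```

Group A is shortlisted 75% of the time and group B 25%, a gap of 0.5. A large gap is a signal to investigate rather than proof of unfairness, since base rates can legitimately differ between groups.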

Correcting Biases

To reduce bias:

- Balance your dataset by including diverse demographics, geographies, or viewpoints.

- Use tools like Fairlearn to measure and mitigate disparities in model outputs.

Addressing bias isn’t just ethical—it improves your model’s performance across diverse real-world scenarios.

Maintaining Dataset Quality Over Time

Continuous Monitoring

Domains evolve, and your dataset needs to keep up. Schedule periodic reviews to:

- Remove outdated data.

- Add emerging trends or terms.

- Adapt to new legal or regulatory requirements.

For instance, a financial model might require updates to reflect changes in tax laws or market regulations.

Feedback Loops

Incorporate user feedback to refine your dataset. If users identify recurring errors, trace them back to gaps or flaws in your data.

For example:

- A chatbot consistently misunderstanding refund requests signals a need for better representation of refund-related phrases.

Real-World Examples of Dataset Curation

Healthcare: Building a Clinical Decision Model

When creating a fine-tuned model for healthcare, data quality and diversity are critical. For example, curating a dataset to predict patient diagnoses involves:

- Primary Sources: Electronic Health Records (EHR), clinical trial results, and research publications from platforms like PubMed.

- Challenges: EHR data often contains duplicates, inconsistencies, or missing fields. Preprocessing techniques, like imputation and deduplication, are essential.

- Outcome: A carefully curated dataset ensures the model provides accurate and explainable predictions, reducing the risk of misdiagnoses.

Finance: Analyzing Market Sentiment

In the finance domain, building a sentiment analysis model for stock predictions involves:

- Data Sources: Financial news, investor forums, and stock market reports.

- Preprocessing: Filter out irrelevant data, such as non-financial topics, and normalize terminologies (e.g., USD vs. $).

- Outcome: A focused dataset can enable the model to predict market trends with higher confidence, benefiting traders and analysts.

Legal: Automating Document Review

For legal professionals, models trained on contracts and case law need curated datasets that include:

- High-Quality Sources: Statutory texts, past judgments, and annotated contracts.

- Challenges: Legal language varies greatly by jurisdiction. Modular datasets segmented by region (e.g., US vs. EU) address this issue.

- Outcome: The model speeds up document review processes, saving time and reducing errors.

Domain-Specific Nuances in Dataset Curation

Handling Specialized Terminology

Every domain has its jargon. A general-purpose dataset won’t capture terms like “fiduciary duty” (law) or “angioplasty” (medicine). Include domain-specific glossaries to ensure accuracy.

For instance:

- In healthcare, synonyms like “heart attack” and “myocardial infarction” should be linked.

- In IT, abbreviations like API or SDK need consistent context across data entries.
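Linking synonyms can be as simple as mapping each variant to one canonical term before training. The glossary below is a tiny hypothetical example, and `normalize_terms` is an illustrative helper; short abbreviations are left out on purpose, since case-insensitive matching on two-letter strings produces false hits.

```python
import re

# Hypothetical glossary: variant -> canonical term.
GLOSSARY = {
    "heart attack": "myocardial infarction",
    "high blood pressure": "hypertension",
}


def normalize_terms(text, glossary):
    """Replace each glossary variant with its canonical term, ignoring case."""
    lookup = {variant.lower(): canonical for variant, canonical in glossary.items()}
    # Longest variants first so multi-word terms win over shorter overlaps.
    variants = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(v) for v in variants) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(lambda m: lookup[m.group(0).lower()], text)


result = normalize_terms(
    "Patient had a Heart Attack and high blood pressure.", GLOSSARY
)
```

Whether to rewrite variants or keep them and add the canonical term alongside depends on your task. If users will type "heart attack", the model should still see that phrasing during training.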

Incorporating Contextual Variability

A dataset for weather-related insurance claims might need to account for:

- Cultural Differences: “Hurricane” vs. “Cyclone.”

- Temporal Variations: Seasonal trends in claim frequency.

These nuances ensure the model adapts well across contexts.

Regional and Cultural Considerations

Models trained on US-centric datasets might struggle elsewhere. For example:

- Healthcare models must include data from diverse populations to avoid diagnostic disparities.

- Legal models need jurisdiction-specific terminology and case law.

Avoiding Overfitting to a Domain

Incorporating External Data Sources

While domain-specific data is crucial, adding external general knowledge ensures the model generalizes better. For instance:

- A financial analysis model can benefit from general economic indicators, not just stock data.

- A legal chatbot improves by understanding broader conversational contexts, not just statutes.

Testing in Multitask Scenarios

Fine-tune your model to handle secondary tasks within the domain. For example:

- A medical diagnostic model could also assist in prescription generation.

- A customer service chatbot might also manage FAQ responses alongside ticket creation.

This ensures broader usability without over-specializing.

Case Studies: Successes and Lessons Learned

OpenAI’s Fine-Tuning with Codex

OpenAI built Codex by fine-tuning a GPT model on source code so it could handle programming tasks across languages. The success stemmed from:

- Data Sources: Publicly available code repositories like GitHub.

- Preprocessing: Deduplication of identical code snippets and normalization of formatting.

- Key Insight: Broad coverage across languages made Codex versatile, while domain-specific data (e.g., Python) enhanced performance for common tasks.

Legal Document Review by Casetext

Casetext developed CoCounsel, an AI legal assistant trained on millions of legal documents. Lessons included:

- Data Selection: Using authoritative legal texts while avoiding informal commentary.

- Challenge: Biases in case law led to unexpected model outputs. Addressed by diversifying the dataset.

- Outcome: A tool capable of drafting contracts and summarizing legal precedents with professional accuracy.

Future Directions for Dataset Curation

Dynamic Dataset Updating

Fine-tuned models benefit from continuous updates. Consider automated pipelines that scrape and preprocess new data periodically. For instance:

- A model trained on financial regulations should regularly ingest updates from regulators like the SEC.

- Medical models should reflect the latest treatment guidelines and research.

Leveraging Multimodal Data

Combine text, images, and other formats to enrich datasets. Examples:

- Healthcare models using radiology reports (text) alongside X-rays (images).

- E-commerce models incorporating product descriptions, reviews, and images.

Ethical AI Frameworks

As fine-tuning grows, ethical considerations will remain pivotal. Future best practices include:

- Transparent data sourcing with clear audit trails.

- Public disclosure of datasets used for high-stakes applications.

With these real-world examples, nuanced practices, and lessons, you’re now equipped to tackle domain-specific dataset curation with confidence. Whether for healthcare, finance, or law, precision, diversity, and ethical rigor are your guiding stars!

Conclusion

Fine-tuning OpenAI models for domain-specific tasks is an art that blends technical expertise with thoughtful planning. Curating datasets isn’t just about gathering data—it’s about crafting a foundation for meaningful, impactful AI applications. By adhering to the tips outlined here and avoiding common pitfalls, you’ll create models that excel in accuracy, adaptability, and ethical responsibility.

FAQs

What are the risks of using crowdsourced data?

Crowdsourced data can vary in quality, introducing errors or inconsistencies. For instance:

- A crowdsourced dataset on product reviews might include spam or biased ratings.

- Annotators might misinterpret domain-specific tasks without proper training.

Mitigate these risks by employing quality controls, such as cross-checking or having domain experts review a subset of the data.

How often should I update my dataset?

Update datasets regularly to stay current with domain trends. For instance:

- A financial model should include data on recent economic changes or policy shifts.

- A legal model might need updates as new case law or statutes emerge.

Automating updates with tools like Google Cloud Dataflow ensures your dataset remains fresh.

Can I use multilingual datasets for fine-tuning?

Yes, especially if your application requires it. For instance:

- A global customer support bot benefits from datasets in multiple languages.

- A legal model might require English and regional language documents to handle jurisdiction-specific queries.

Ensure the data is balanced across languages and includes accurate translations to avoid skewing results.

How do I curate datasets for emerging fields with limited data?

In emerging fields like quantum computing or blockchain regulation, start by:

- Collecting open-access publications, patents, or whitepapers.

- Scraping niche platforms, such as specialized forums or research preprint servers.

- Generating synthetic data using AI tools to fill gaps.

For example, in quantum computing, simulate basic quantum circuits to augment real-world datasets.

What role do domain experts play in dataset curation?

Domain experts provide crucial input, such as:

- Validating dataset quality and relevance.

- Annotating complex data accurately (e.g., identifying rare diseases in medical records).

- Detecting gaps or biases within datasets.

For instance, in a legal dataset, a lawyer might flag missing precedents or incorrect classifications. Collaborating with experts ensures the model reflects real-world expectations.

How can I identify overfitting during fine-tuning?

Signs of overfitting include:

- High accuracy on the training set but poor performance on validation/test sets.

- Outputs that mirror the training data instead of generalizing to unseen inputs.

For example, a legal model that has memorized the contracts in its training set might reproduce their clauses verbatim yet stumble on a contract with unfamiliar wording. Mitigate this by adding more varied examples, training for fewer epochs, or using regularization techniques during training.

Should I use synthetic data for fine-tuning?

Synthetic data is helpful when real data is scarce. For instance:

- Generate anonymized customer interaction scenarios for a chatbot.

- Create simulated medical records to augment training data.

However, use synthetic data carefully—it may lack nuances found in real-world data. Always test the model against a validation set of real data.

What’s the ideal balance between training, validation, and test datasets?

A standard split is:

- 70% training: For the model to learn patterns.

- 15% validation: To tune parameters and avoid overfitting.

- 15% test: To measure performance objectively.

For example, in an e-commerce chatbot, shuffle all query types (FAQs, nuanced queries, and edge cases) before splitting so that each of the three sets reflects the same mix.

Can I fine-tune a model with multilingual or multimodal datasets?

Yes, but the approach varies:

- Multilingual: Ensure balanced representation across languages. Avoid overloading with one dominant language.

- Multimodal: Align data types (e.g., captions paired with images).

For example, a model analyzing social media trends might combine tweets (text), memes (images), and hashtags (metadata) for a comprehensive view.

How do I ensure privacy and security when curating sensitive datasets?

Implement strategies like:

- Anonymization: Remove identifiers like names or addresses from data.

- Encryption: Secure sensitive datasets during storage and transfer.

- Access Control: Limit dataset access to authorized personnel only.

For example, a healthcare dataset might replace patient names with unique, anonymous IDs. Adhering to privacy laws like GDPR or HIPAA is crucial.
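Replacing names with stable IDs can be sketched with a keyed hash. Everything here is illustrative: the field names, the `patient_` prefix, and the placeholder key are assumptions, and the key should come from a secrets manager rather than source code.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Derive a stable ID from a value using HMAC-SHA256 with a secret key."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "patient_" + digest[:12]


def anonymize_record(record, secret_key, id_field="name", drop_fields=("address",)):
    """Drop direct identifiers and replace the ID field with a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key != id_field and key not in drop_fields
    }
    cleaned["patient_id"] = pseudonymize(record[id_field], secret_key)
    return cleaned


key = b"replace-with-a-secret-from-your-vault"   # placeholder, not a real key
record = {"name": "Jane Doe", "address": "1 Main St", "diagnosis": "asthma"}
safe = anonymize_record(record, key)
```

The same name always maps to the same ID, so records for one patient stay linked. Note that this is pseudonymization rather than full anonymization: anyone holding the key can recompute the mapping, and free-text fields may still contain identifying details.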

What’s the role of transfer learning in domain-specific fine-tuning?

Transfer learning involves starting with a pre-trained model and fine-tuning it on a domain-specific dataset. Benefits include:

- Reduced Training Time: Leverage existing knowledge to accelerate results.

- Improved Performance: Start from a strong baseline, especially when domain data is limited.

For example, a GPT model pre-trained on a general text corpus can quickly adapt to legal drafting tasks with a curated dataset of contracts and precedents.

How do I address dataset imbalance?

Imbalance occurs when certain categories dominate. For instance:

- A fraud detection model might encounter more “non-fraud” than “fraud” examples.

- A medical dataset might include more data on common diseases than rare ones.

Strategies to address this:

- Use oversampling or data augmentation to boost minority categories.

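- Apply techniques like SMOTE (Synthetic Minority Over-sampling Technique) for balanced training data. SMOTE interpolates between numeric feature vectors, so for text it applies to embeddings or other numeric features rather than raw strings.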

Are pre-built datasets always reliable?

Not always. While pre-built datasets (e.g., from Kaggle or UCI Machine Learning Repository) save time, they may:

- Contain errors or outdated information.

- Lack domain-specific nuances or context.

For example, a pre-built dataset for financial modeling might focus on US stock data, missing insights for global markets. Always review, clean, and tailor pre-built datasets to your use case.

What’s the impact of dataset quality on AI ethics?

High-quality datasets ensure fairness and minimize harm. Poor-quality datasets can:

- Perpetuate biases, leading to discriminatory outcomes.

- Erode trust in AI systems.

For example, a biased hiring model trained on skewed data could unfairly exclude qualified candidates from underrepresented groups. Ethical curation involves balancing diversity and ensuring transparency about data sources.

What is the importance of dataset diversity in fine-tuning?

Dataset diversity ensures the model performs well across varied scenarios and user demographics. Without it:

- A model trained only on English text might fail in multilingual environments.

- A healthcare model trained on data from one region might misdiagnose patients from another.

For instance, include data from multiple regions, ethnic groups, or contexts to make predictions more robust and inclusive.

How do I decide between public and proprietary data for fine-tuning?

Public Data is ideal for general-purpose applications, offering broad coverage but less specificity. Use it for tasks like sentiment analysis or general NLP.

Proprietary Data is tailored for specialized domains like legal compliance or internal business processes. It ensures relevancy but requires careful handling to maintain privacy and compliance.

For example, a financial institution might use proprietary client transaction data for risk modeling but supplement it with public economic reports for broader context.

What are some red flags to watch for during dataset curation?

Common issues include:

- Incomplete Data: Missing fields, such as unlabelled records in annotated datasets.

- Bias: Overrepresentation of specific demographics, geographies, or viewpoints.

- Outdated Information: Older datasets might not reflect current trends or knowledge.

For example, a legal dataset containing outdated laws could lead to incorrect model outputs. Regularly audit your dataset to avoid these pitfalls.

How do I handle domain-specific jargon in the dataset?

Define a glossary of terms for your domain and standardize terminology throughout the dataset. For example:

- In medicine, ensure consistency between terms like “diabetes mellitus” and “DM.”

- In finance, align variations of terms like “P&L” and “Profit and Loss.”

You can also pre-train the model on domain-specific texts (e.g., journals, manuals) to build its familiarity with the jargon.

Can I use OpenAI’s APIs to preprocess or clean my dataset?

Yes, OpenAI’s models can assist in preprocessing tasks, such as:

- Text Summarization: Condense long entries into concise formats.

- Classification: Automatically categorize data into relevant groups.

- Error Correction: Fix grammar or spelling inconsistencies in the dataset.

For example, use GPT models to clean user-generated content by removing irrelevant text and formatting data uniformly.

How can I validate a dataset before fine-tuning?

Validation involves assessing whether the dataset aligns with your goals. Steps include:

- Content Review: Ensure data reflects the intended domain and task (e.g., no off-topic examples).

- Test Subsets: Train the model on a small sample to identify any glaring issues.

- Cross-Validation: Divide data into subsets and train on different combinations to measure consistency.

For instance, before fine-tuning a legal chatbot, test it on common legal queries to ensure accuracy.
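Part of the content review can be automated with a structural check. The sketch below assumes your training file uses the chat-style JSONL layout that OpenAI's fine-tuning guide describes, with one JSON object per line containing a `messages` list of `role` and `content` pairs. The `validate_line` helper and its rules are illustrative, so check the current documentation for the authoritative format.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_line(line: str) -> list[str]:
    """Return a list of problems found in one JSONL line (empty if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(record, dict):
        return ["record is not a JSON object"]
    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i}: not an object")
            continue
        if msg.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str) or not msg["content"].strip():
            problems.append(f"message {i}: empty content")
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems


good = json.dumps({"messages": [
    {"role": "user", "content": "What is a tort?"},
    {"role": "assistant", "content": "A civil wrong that causes harm or loss."},
]})
bad = json.dumps({"messages": [{"role": "user", "content": ""}]})

good_problems = validate_line(good)
bad_problems = validate_line(bad)
```

Running a check like this over every line before uploading catches malformed records early, when they are cheap to fix.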

What is the role of metadata in dataset curation?

Metadata adds context and structure to your dataset. Examples include:

- Timestamps: Useful for temporal analysis, such as financial forecasting.

- Source Information: Indicates credibility and origin (e.g., peer-reviewed journals vs. blogs).

- Annotations: Labels or categories that guide supervised learning tasks.

For instance, a healthcare dataset with metadata about patient demographics enables better population-specific predictions.

How do I balance dataset size and training efficiency?

A large dataset improves model generalization but increases training time and cost. To strike a balance:

- Remove duplicates and irrelevant examples to reduce size.

- Use active learning to prioritize examples that improve model performance.

- Fine-tune on a subset, then expand if needed.

For example, start with 50,000 entries for a sentiment analysis model, evaluate results, and scale up only if necessary.

Can I reuse a fine-tuned model for other related tasks?

Yes, transfer learning allows you to adapt a fine-tuned model to related tasks. For instance:

- A sentiment analysis model fine-tuned on customer reviews can be adjusted for survey feedback.

- A healthcare diagnostic model can be repurposed for medical chatbot applications.

Fine-tune the model on a smaller dataset specific to the new task to preserve domain knowledge while adding new functionality.

How do I curate datasets for low-resource languages?

For low-resource languages, consider:

- Community Contributions: Engage native speakers to annotate and validate data.

- Transliteration and Translation: Leverage existing datasets in related high-resource languages by translating them.

- Synthetic Data: Use machine translation and language models to generate additional data.

For example, translate a dataset in English to Swahili, then validate accuracy with native speakers to build a quality corpus.

Should I include adversarial examples in my dataset?

Yes, adversarial examples test your model’s resilience and adaptability. For instance:

- In sentiment analysis, include mixed-tone reviews (e.g., “Great product, terrible shipping”).

- In customer service datasets, add queries with typos or slang to simulate real-world interactions.

Adversarial examples ensure the model doesn’t break down when faced with unexpected inputs.

How do I decide when to stop adding to my dataset?

Stop expanding your dataset when:

- Model performance plateaus: Additional data doesn’t improve validation metrics.

- Task objectives are met: The model consistently achieves desired accuracy or reliability.

- Cost outweighs benefit: Acquiring and processing more data becomes inefficient.

For example, if your chatbot achieves 95% accuracy in handling customer queries, further data might yield diminishing returns.
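The plateau criterion can be turned into a simple check. This sketch assumes you record one validation score each time you grow the dataset; `has_plateaued`, the `patience` window, and the `min_delta` threshold are illustrative choices, not established defaults.

```python
def has_plateaued(scores, patience=2, min_delta=0.005):
    """True if none of the last `patience` scores beats the earlier best
    by at least `min_delta`."""
    if len(scores) <= patience:
        return False   # not enough history to judge
    best_before = max(scores[:-patience])
    return all(score - best_before < min_delta for score in scores[-patience:])


# Validation accuracy after each round of adding data (made-up numbers).
val_accuracy = [0.81, 0.88, 0.92, 0.923, 0.924]
stop = has_plateaued(val_accuracy)
```

Here the last two rounds improve on 0.92 by less than half a percentage point each, so the check suggests that further data collection is unlikely to pay off.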

Resources

General Dataset Repositories

- Kaggle: A vast repository of datasets across domains like healthcare, finance, and education.

- UCI Machine Learning Repository: A trusted source for classic datasets, especially in research-oriented tasks.

- Google Dataset Search: A meta-search engine for finding publicly available datasets on nearly any topic.

- AWS Open Data Registry: Includes domain-specific datasets, often useful for large-scale tasks.

Domain-Specific Sources

Healthcare

- PubMed: A database of biomedical literature, ideal for research and clinical model fine-tuning.

- MIMIC-III and MIMIC-IV: Open datasets containing de-identified electronic health records from ICU patients.

- OMOP Common Data Model: Standardizes healthcare data for research and AI applications.

Legal

- Casetext Corpus: A repository of annotated legal cases for AI training.

- CourtListener: Offers free access to millions of legal opinions across jurisdictions.

- Harvard Law School Library Data: Contains case law data useful for training legal models.

Finance

- Yahoo Finance API: Access historical stock prices, market data, and financial reports.

- Quandl: A rich source of economic and financial datasets.

- Financial PhraseBank: A dataset of annotated financial sentiment phrases.

Tools for Data Curation

- Beautiful Soup: A Python library for web scraping structured data.

- DVC (Data Version Control): Tracks changes and versions of datasets, similar to Git.

- Labelbox: A tool for labeling and annotating datasets, particularly for supervised learning tasks.

- Prodigy: An annotation tool designed for machine learning workflows, with support for active learning.

- Snorkel: A system for programmatically creating and managing training datasets with weak supervision.

Preprocessing and Cleaning Tools

- OpenRefine: A powerful tool for cleaning messy data, especially tabular datasets.

- Pandas: A Python library for data manipulation and cleaning, ideal for preprocessing large datasets.

- NLTK and spaCy: Libraries for natural language processing tasks like tokenization, lemmatization, and text cleaning.

Ethical and Privacy Resources

- Fairlearn: A Python library for measuring and mitigating AI bias.

- DeID: A suite of tools for anonymizing datasets, useful for privacy-sensitive domains like healthcare.

- OpenAI Guidelines on Fine-Tuning: Insights on preparing and managing datasets ethically and effectively.

- GDPR and CCPA Compliance Resources: For understanding privacy laws when using sensitive data.