Artificial intelligence (AI) has come a long way, but one of its biggest challenges remains data labeling. Traditional AI models rely on supervised learning, which requires massive amounts of manually labeled data. However, a new approach—self-supervised learning (SSL)—is revolutionizing AI training.

SSL allows AI models to learn from vast amounts of unlabeled data, reducing dependence on human annotation. But how exactly does it work? And why do experts believe it could be the future of AI? Let’s dive in!

What is Self-Supervised Learning?

Breaking Down the Concept

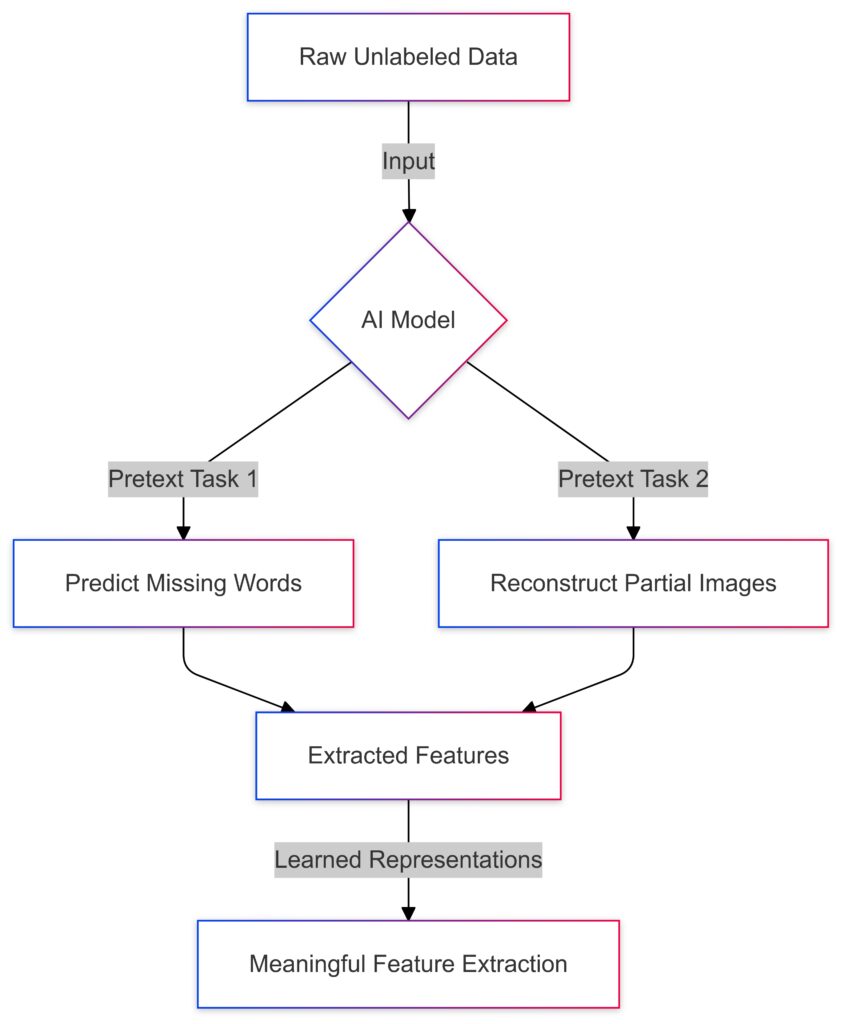

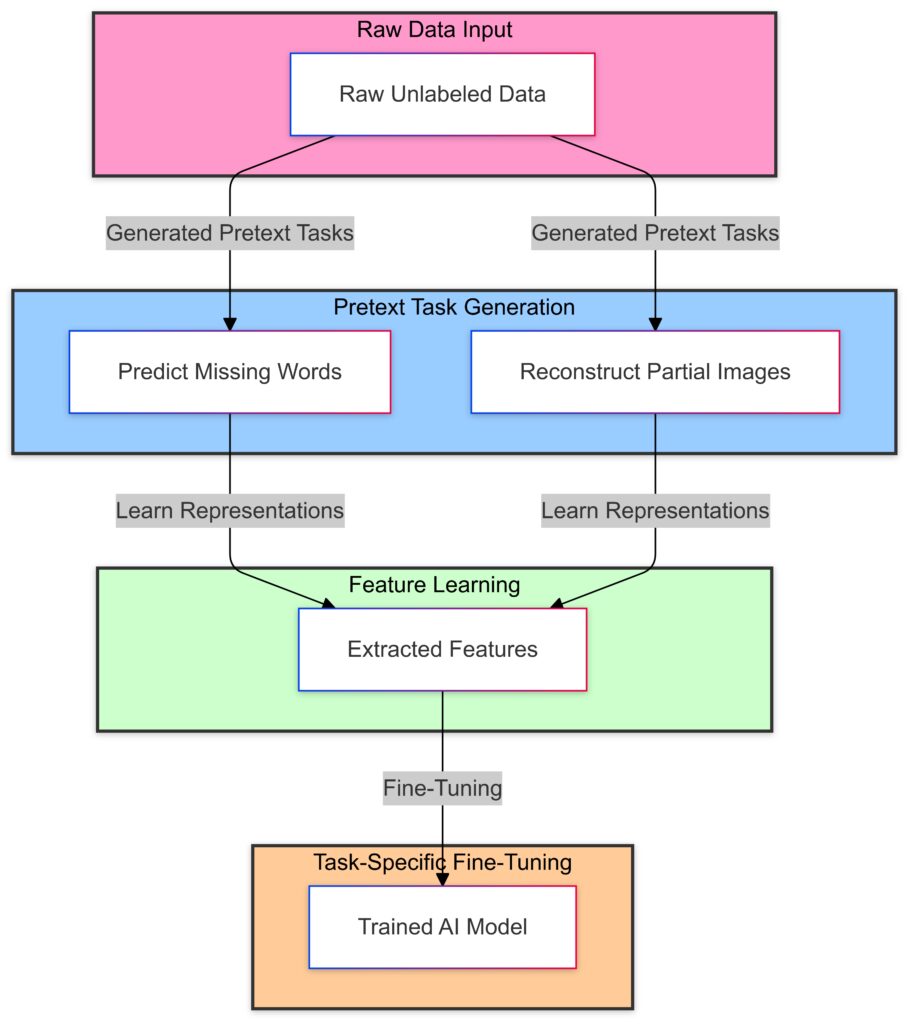

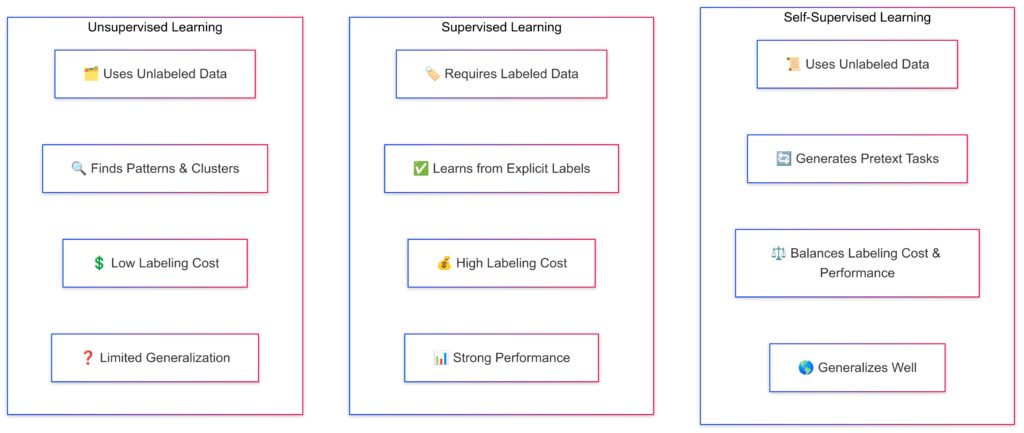

Self-supervised learning is a subset of unsupervised learning where an AI model generates its own labels from raw data. Unlike traditional supervised learning, which relies on external annotations, SSL derives meaning from the structure of the data itself.

In essence, SSL models create pretext tasks—small learning objectives that help them understand patterns before tackling real-world problems. This process helps AI develop generalized knowledge, making it more efficient and adaptable.
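
To make the idea of a model "generating its own labels" concrete, here is a minimal sketch of an image inpainting pretext task. It assumes an image is just a nested list of pixel values, and the helper name `make_inpainting_pair` is made up for illustration. The point is only that the training target comes from the data itself, not from an annotator.

```python
import random

def make_inpainting_pair(image, patch_size, rng):
    """Hide a square patch; return (masked image, target patch, top-left corner)."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - patch_size + 1)
    left = rng.randrange(width - patch_size + 1)
    # The "label" is simply the block of pixels we are about to hide.
    target = [row[left:left + patch_size] for row in image[top:top + patch_size]]
    masked = [row[:] for row in image]  # copy so the original stays untouched
    for r in range(top, top + patch_size):
        for c in range(left, left + patch_size):
            masked[r][c] = None  # None marks a hidden pixel
    return masked, target, (top, left)

# Hypothetical 4x4 grayscale "image" used only for this demo.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
masked, target, corner = make_inpainting_pair(image, patch_size=2, rng=random.Random(0))
```

A model trained on such pairs would take `masked` as input and be scored on how well it reconstructs `target`.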

Why It Matters in AI Development

The biggest limitation of supervised learning is its need for labeled data, which is expensive and time-consuming to produce. Self-supervised learning removes most of this bottleneck by letting models pre-train on massive, unlabeled datasets, so labels are needed only for a comparatively small fine-tuning step.

This approach not only improves scalability but also enables AI to learn in a more human-like manner—through experience rather than explicit instruction.

How Does Self-Supervised Learning Work?

Pretext Tasks: AI’s Training Exercises

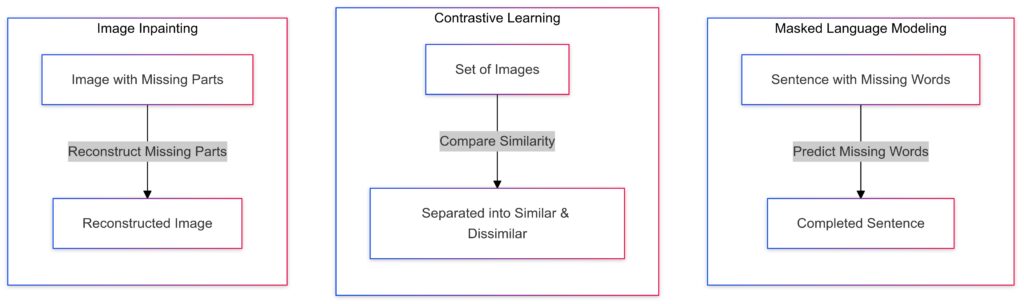


SSL models train by solving pretext tasks. These tasks are designed to help AI understand relationships and structures in data. Some common examples include:

- Image inpainting: Predicting missing parts of an image.

- Contrastive learning: Distinguishing between similar and different data points.

- Masked language modeling (MLM): Predicting masked-out words in a sentence (as in BERT; GPT models instead predict the next word).

These tasks teach AI to recognize patterns and structures before being fine-tuned for specific applications.
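
As a rough illustration of the masked language modeling task listed above, the sketch below hides random words and records the originals as labels. It is deliberately simplified: whitespace tokenization, the `mask_tokens` helper, and the 30% masking rate are assumptions for the demo. BERT, for instance, selects about 15% of tokens and does not always replace them with the mask token.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob, rng):
    """Return (masked tokens, labels), where labels maps position -> original token."""
    masked, labels = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model sees the mask token...
            labels[i] = tok       # ...and must predict the hidden word
        else:
            masked.append(tok)
    return masked, labels

sentence = "self supervised models learn from raw text".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, rng=random.Random(1))
```

Every sentence in an unlabeled corpus can be turned into training examples this way, which is why no human annotation is needed for pre-training.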

Feature Learning Without Labels

One of the biggest advantages of SSL is that it helps AI learn feature representations autonomously. Instead of relying on predefined labels, the model discovers important features on its own.

For example, self-supervised models for speech recognition learn phonetic structures before being trained for speech-to-text conversion. This drastically reduces the need for labeled datasets.

Key Advantages of Self-Supervised Learning

1. Eliminates the Need for Large Labeled Datasets

One of the main reasons SSL is gaining traction is its ability to learn from raw, unlabeled data. This makes AI training more scalable and removes the dependency on expensive human annotation.

2. More Efficient & Scalable Training

With SSL, AI models can train on millions of unstructured data points without waiting for human annotation, which removes a major bottleneck in preparing training data. This is particularly useful for companies dealing with big data.

3. Improved Generalization & Adaptability

Since self-supervised models learn from unstructured data, they generalize better across different tasks. This adaptability is crucial for applications like natural language processing (NLP), robotics, and healthcare AI.

4. Accelerates AI Research & Development

By reducing the need for labeled data, SSL accelerates AI research, enabling faster development of cutting-edge models in fields like computer vision, speech recognition, and autonomous systems.

Real-World Applications of Self-Supervised Learning

Transforming Natural Language Processing (NLP)

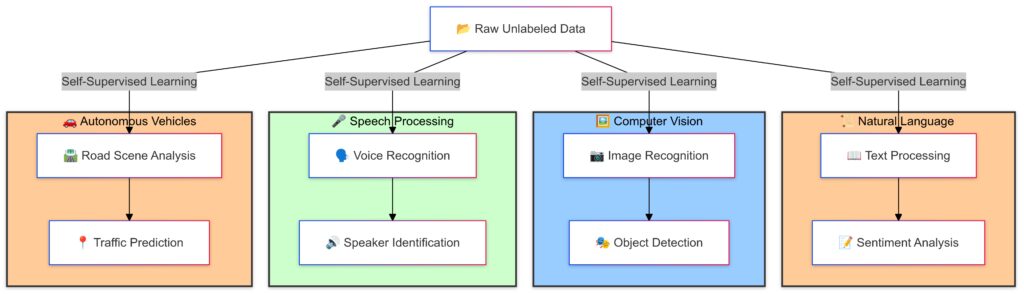

Modern NLP models such as BERT, GPT, and T5 rely on self-supervised objectives: masked language modeling and next-sentence prediction for BERT, next-word prediction for GPT, and masked-span reconstruction for T5. These methods allow AI to understand and generate human-like text with minimal labeled data.

Revolutionizing Computer Vision

In computer vision, SSL enables AI to understand images without explicit labels. Techniques like contrastive learning help models learn object representations, which improves performance on tasks like image recognition and segmentation.

Advancing Autonomous Vehicles

Self-supervised learning is crucial for autonomous driving. AI models learn from vast amounts of sensor data without relying on manually labeled road conditions. This allows self-driving cars to predict and react to real-world scenarios more efficiently.

Challenges and Limitations of Self-Supervised Learning

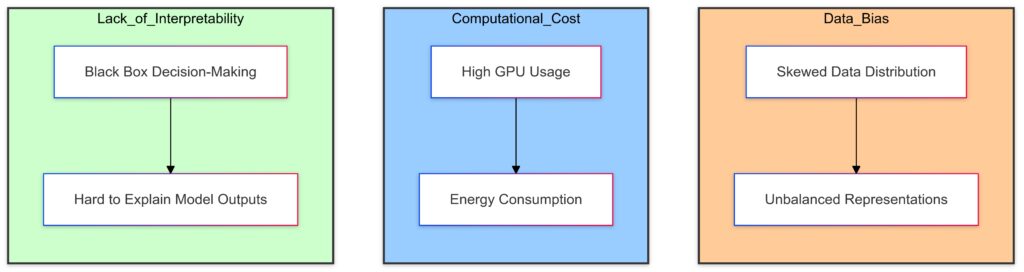

1. Computational Power Requirements

While SSL reduces the need for labeled data, it demands massive computing resources. Training large self-supervised models requires high-performance GPUs or TPUs, making it expensive for smaller organizations.

Additionally, the sheer volume of data processed in SSL can lead to longer training times, especially when compared to traditional supervised learning with labeled datasets.

2. Risk of Learning Unhelpful Patterns

Self-supervised models don’t always distinguish useful patterns from irrelevant ones. Without human supervision, there’s a risk of the AI learning biased, redundant, or misleading features.

For example, in medical imaging, an SSL model might focus on background artifacts rather than the actual disease indicators. Fine-tuning and rigorous validation are necessary to ensure meaningful learning.

3. Difficulty in Designing Effective Pretext Tasks

Pretext tasks are the foundation of SSL, but creating the right ones is tricky. If the task is too simple, the model won’t learn useful representations. If it’s too complex, the model might struggle to generalize.

Researchers constantly experiment with new task designs, balancing complexity and utility to achieve optimal performance.

4. Lack of Interpretability

One of the biggest concerns in AI is interpretability—understanding how and why a model makes decisions. SSL models, especially deep neural networks, can behave like “black boxes,” making it difficult to trace their learning process.

For industries like finance and healthcare, this lack of transparency can be a significant barrier to adoption.

Self-Supervised Learning vs. Other AI Training Methods

How SSL Compares to Supervised Learning

| Factor | Self-Supervised Learning | Supervised Learning |
|---|---|---|
| Data Labeling | No manual labeling required | Requires large labeled datasets |
| Scalability | Highly scalable due to unlabeled data | Limited by data labeling costs |
| Generalization | Learns broad representations | Often task-specific |
| Computational Cost | High for training but efficient in the long run | Lower for small datasets but expensive for big ones |

SSL is ideal for scenarios where labeled data is scarce, while supervised learning remains useful for highly specialized tasks requiring precise labels.

Self-Supervised vs. Unsupervised Learning

Classic unsupervised methods focus on clustering and pattern detection without any prediction target, whereas SSL turns raw data into a prediction problem by generating its own labels. This makes SSL more effective for complex tasks like language understanding and image recognition.

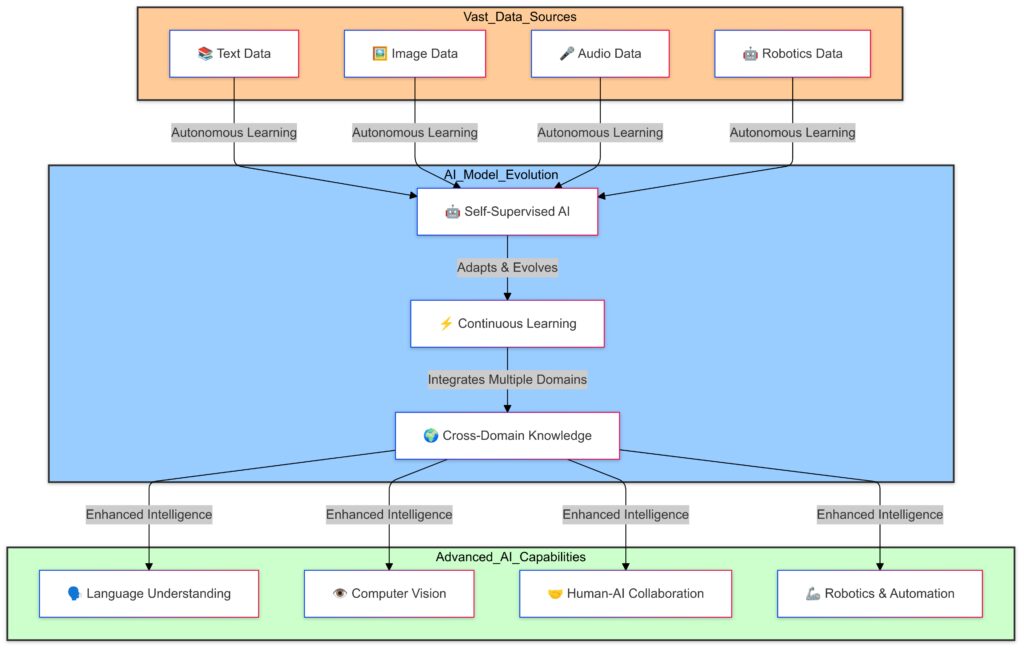

The Future of AI with Self-Supervised Learning

1. Paving the Way for Artificial General Intelligence (AGI)

Many researchers believe SSL is a critical step toward AGI, meaning AI that can learn and reason across multiple domains like a human. Because self-supervised models learn from raw data rather than curated labels, proponents argue that their training resembles human learning more closely than supervised training does.

2. Enhancing AI Efficiency Across Industries

From healthcare to cybersecurity, SSL is set to transform multiple industries. In biomedicine, AI models are already analyzing vast amounts of genomic data without requiring human annotations.

In finance, self-supervised models help detect fraudulent transactions by learning normal spending behaviors and identifying anomalies.

3. A More Sustainable AI Training Approach

As the demand for AI grows, SSL offers a more sustainable solution by minimizing the reliance on manually curated datasets. This not only speeds up development but also makes AI more accessible to researchers worldwide.

Key Innovations Driving Self-Supervised Learning Forward

1. Transformers and Large-Scale Language Models

The rise of transformer architectures like BERT, GPT, and T5 has fueled the success of self-supervised learning. These models use objectives such as masked language modeling (MLM) and next-word prediction to learn from vast amounts of text without labeled data.

- BERT: Learns contextual word representations by predicting missing words.

- GPT: Trains using next-word prediction, making it highly effective for text generation.

- CLIP (OpenAI): Uses a contrastive objective on image-caption pairs to align text and image representations, making AI better at understanding multimodal data.

These advancements have revolutionized NLP, search engines, and AI-generated content.
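
The next-word prediction objective mentioned for GPT can be illustrated with a toy stand-in: a bigram counter rather than a transformer. The function names and the three-sentence corpus below are invented for the demo. What carries over to real models is that every (word, next word) pair in raw text is a free training example.

```python
from collections import Counter, defaultdict

def train_next_word_model(corpus):
    """Count which word follows which across all sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Each adjacent pair supplies an input (current) and its label (following).
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None

corpus = [
    "the model predicts the next word",
    "the model learns from raw text",
    "the next word is the label",
]
model = train_next_word_model(corpus)
print(predict_next(model, "next"))  # prints "word"
```

A large language model replaces the counting table with a neural network and a far longer context, but the source of supervision is the same.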

2. Contrastive Learning for Computer Vision

One of the most impactful innovations in self-supervised learning is contrastive learning. This technique trains models to differentiate between similar and dissimilar data points without explicit labels.

- SimCLR and MoCo: Use data augmentations to create different views of the same image, helping AI learn representations without labels.

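- DINO (by Facebook AI): A closely related method that uses self-distillation instead of a contrastive loss. A student network learns to match a teacher network's output on different views of the same image, producing features that generalize well across datasets.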

This approach has led to significant improvements in image classification, object detection, and even medical imaging analysis.
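
For readers who want to see what "differentiating between similar and dissimilar data points" looks like as a loss function, here is a minimal sketch of an InfoNCE-style contrastive loss in plain Python. It is a simplified, one-directional variant and not the exact NT-Xent loss used in SimCLR. The embeddings are hand-written stand-ins for what an encoder would output for two augmented views of three images.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def info_nce_loss(anchors, positives, temperature=0.5):
    """Mean loss where anchors[i] should match positives[i] and no other positives[j]."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Cross-entropy with the matching view treated as the correct class
        # (log-sum-exp computed in a numerically stable way).
        max_logit = max(logits)
        log_sum = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        total += log_sum - logits[i]
    return total / len(anchors)

# Hypothetical embeddings: view A and view B of the same three images.
view_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
view_b = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]
shuffled_b = [view_b[1], view_b[2], view_b[0]]  # deliberately mismatched pairs

aligned_loss = info_nce_loss(view_a, view_b)
shuffled_loss = info_nce_loss(view_a, shuffled_b)
```

The loss is lower when matching views sit close together and non-matching views sit far apart, so minimizing it pushes the encoder toward representations that capture image content rather than augmentation details.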

3. Self-Supervised Speech and Audio Processing

Speech recognition has historically relied on supervised learning, but SSL is changing the game. Models like wav2vec 2.0 (by Meta AI) use self-supervised techniques to learn speech representations without transcripts.

- wav2vec 2.0: Trains on raw audio waveforms and improves speech-to-text accuracy, even for low-resource languages.

- HuBERT: Predicts cluster assignments (the "hidden units") for masked audio frames, learning acoustic and language-like structure from unlabeled speech.

These innovations are expanding voice AI capabilities, especially for applications like virtual assistants, transcription services, and accessibility tools.

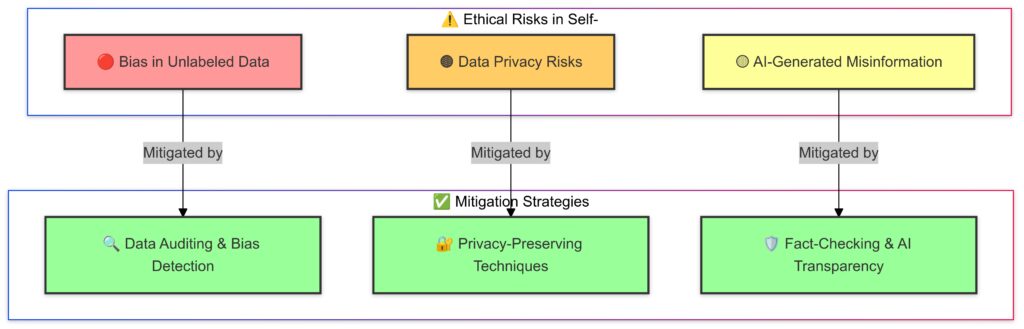

Ethical Considerations in Self-Supervised Learning

1. Bias in Unlabeled Data

Since SSL models learn from unstructured web data, they risk inheriting biases present in that data. If the training data is skewed, the AI might reinforce gender, racial, or cultural biases in its predictions.

- Example: A resume-screening model trained on biased hiring data might favor male candidates for technical roles.

- Solution: Researchers are developing bias detection tools and fairer data sampling techniques to mitigate these risks.

2. Data Privacy Concerns

Self-supervised models often train on publicly available data, which raises concerns about user privacy and consent.

- Scraping web data without explicit permission can lead to ethical dilemmas.

- Companies are exploring federated learning to train AI without exposing sensitive user data.

3. The Risk of Misinformation and Deepfakes

As SSL models become more advanced, they can be used to generate realistic fake content, including deepfake videos, misleading news articles, and synthetic voices.

- Social media platforms are developing AI-powered detection systems to combat misinformation.

- Governments are considering regulations to control the misuse of AI-generated content.

Final Thoughts: Is Self-Supervised Learning the Future?

Self-supervised learning is already transforming AI across multiple domains—from language models and computer vision to speech recognition and autonomous systems. By reducing reliance on labeled data, SSL is making AI more scalable, efficient, and accessible.

However, challenges like bias, computational cost, and ethical concerns must be addressed to ensure responsible deployment. If researchers and developers continue refining these models, SSL could very well be the key to unlocking artificial general intelligence (AGI).

What do you think? Will self-supervised learning reshape the future of AI? Let’s discuss!

FAQs

Can self-supervised learning work with small datasets?

Yes, but SSL is most effective with large-scale data. Since it learns patterns without human labeling, having more data improves its generalization ability.

For small datasets, techniques like transfer learning can help. A model can first be pre-trained with SSL on a massive unlabeled corpus (e.g., ImageNet images with their labels ignored, or Wikipedia text) and then fine-tuned on a smaller dataset for specific tasks.

What are some real-world applications of self-supervised learning?

Self-supervised learning is already transforming multiple industries:

- Healthcare: AI models pre-train on unlabeled MRI scans, so learning to detect diseases requires far fewer labeled images.

- Finance: Fraud detection systems learn spending patterns to flag unusual transactions.

- Autonomous Vehicles: Self-driving cars analyze road conditions without human-labeled datasets.

- Search Engines: Google Search uses BERT, a model pre-trained with self-supervised objectives, to better interpret queries and improve relevance.

Why is self-supervised learning important for artificial general intelligence (AGI)?

AGI requires AI to learn like humans, adapting to multiple tasks without explicit supervision. SSL enables models to develop generalized knowledge by identifying meaningful patterns from raw data, making it a stepping stone toward human-like intelligence.

For instance, humans learn language by exposure, not labeled datasets—similar to how GPT models learn by predicting words in vast text corpora.

Does self-supervised learning completely eliminate the need for labeled data?

Not entirely. SSL reduces reliance on manual labeling but still requires some labeled data for fine-tuning.

For example, GPT models are pre-trained using SSL on vast unlabeled text but later fine-tuned with human-provided data (e.g., supervised fine-tuning and Reinforcement Learning from Human Feedback, RLHF) to improve quality and safety.

What are the biggest challenges in implementing self-supervised learning?

Several challenges exist:

- Computational cost: Training SSL models requires massive computing power.

- Data biases: Learning from unfiltered web data may introduce undesirable biases.

- Interpretability: Understanding why a model makes decisions remains difficult.

- Task design complexity: Creating effective pretext tasks requires expertise.

Despite these hurdles, SSL is evolving rapidly and shaping the next generation of AI models.

How does self-supervised learning handle noisy or low-quality data?

SSL models are designed to learn robust representations, but noisy or low-quality data can still introduce problems. To address this:

- Data augmentation techniques like random cropping, rotation, and noise injection help models generalize better.

- Contrastive learning forces the model to differentiate between similar and dissimilar examples, reducing the impact of noisy data.

- Denoising autoencoders reconstruct original data from corrupted versions, making them useful in image and text applications.

For example, wav2vec 2.0 in speech recognition improves performance even in noisy environments, such as call centers or outdoor recordings.
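
The noise injection and denoising ideas above boil down to building (corrupted input, clean target) pairs from unlabeled data. The sketch below shows that step for a one-dimensional signal. The `make_denoising_pair` helper and the Gaussian noise level are illustrative assumptions, and the model that would learn to undo the corruption is left out.

```python
import random

def make_denoising_pair(signal, noise_std, rng):
    """Return (corrupted input, clean target) for a denoising objective."""
    # Add independent Gaussian noise to every sample of the signal.
    corrupted = [x + rng.gauss(0.0, noise_std) for x in signal]
    return corrupted, list(signal)

# Hypothetical clean signal, e.g. a short stretch of audio samples.
clean = [0.0, 0.5, 1.0, 0.5, 0.0]
noisy, target = make_denoising_pair(clean, noise_std=0.1, rng=random.Random(42))
```

Because the clean version is always available as the target, the model gets supervision for free while being forced to ignore the kind of noise it will meet in real recordings.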

What industries will benefit the most from self-supervised learning?

SSL has the potential to disrupt several industries:

- Healthcare: AI learns to identify tumors in medical scans without extensive labeled datasets.

- E-commerce: Personalized product recommendations improve as AI understands customer preferences from unlabeled behavior data.

- Cybersecurity: AI models detect anomalous behavior in network traffic, identifying potential cyber threats without pre-labeled attack patterns.

- Education: Adaptive learning platforms use SSL to personalize content based on student interactions.

Can self-supervised learning be combined with reinforcement learning?

Yes! Combining self-supervised learning (SSL) with reinforcement learning (RL) can create more intelligent AI systems.

- SSL helps RL models learn better feature representations before interacting with an environment.

- This reduces the number of trial-and-error cycles needed, making RL training faster and more efficient.

For example, in robotics, SSL helps robots understand visual and sensory inputs before being fine-tuned with RL for specific tasks like grasping objects or navigating obstacles.

Is self-supervised learning better than transfer learning?

Not necessarily. The two are complementary rather than competing, and the right mix depends on the use case.

- Self-supervised learning is a way to pre-train a model on unlabeled data, producing general-purpose representations that scale with the amount of raw data available.

- Transfer learning reuses a pre-trained model (whether it was pre-trained with labels or with SSL) on a new task, making it efficient for domain-specific problems.

For example, a medical AI team with limited imaging data might fine-tune an ImageNet-trained model instead of running SSL pre-training from scratch.

What role does self-supervised learning play in multimodal AI?

Multimodal AI models learn from multiple data types, such as text, images, and audio. SSL is crucial in this area because it allows models to:

- Align different data types (e.g., linking images with captions).

- Learn cross-modal representations from naturally occurring pairs (such as images and their captions) without manually annotated class labels.

A prime example is OpenAI’s CLIP, which learns to associate text with images, enabling models to perform zero-shot image classification without task-specific labeled training examples.
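
Zero-shot classification with a CLIP-style model can be sketched as a nearest-caption lookup: embed the image, embed one caption per candidate class, and pick the caption with the highest cosine similarity. The three-dimensional embeddings below are hand-written placeholders, not outputs of CLIP. A real system would obtain them from trained image and text encoders.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def zero_shot_classify(image_embedding, text_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(
        text_embeddings,
        key=lambda caption: cosine(image_embedding, text_embeddings[caption]),
    )

# Hypothetical embeddings standing in for encoder outputs.
text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

print(zero_shot_classify(image_embedding, text_embeddings))  # prints "a photo of a dog"
```

New classes can be added just by writing new captions, which is what makes the approach "zero-shot": no classifier has to be retrained on labeled examples of the new class.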

How can businesses adopt self-supervised learning today?

Businesses can start by:

- Leveraging pre-trained SSL models (e.g., BERT for NLP, SimCLR for vision).

- Experimenting with custom SSL tasks tailored to their datasets.

- Combining SSL with existing AI pipelines to improve efficiency.

For instance, a company in customer service can use self-supervised NLP models to improve chatbots by training them on unstructured conversation logs instead of manually labeled support tickets.
