Machine learning (ML) model training can be time-consuming and complex, requiring expertise in data preprocessing, feature engineering, model selection, and hyperparameter tuning. AutoML (Automated Machine Learning) simplifies this by automating many of these tasks, making ML accessible to non-experts while improving efficiency for experienced data scientists.

This guide explores how AutoML works, its key components, and how to implement it effectively.

Understanding AutoML and Its Benefits

What is AutoML?

AutoML refers to tools and techniques that automate the end-to-end process of applying machine learning to real-world problems. It helps users build models without deep expertise in ML algorithms and without tuning hyperparameters by hand.

AutoML platforms use algorithms to:

- Clean and preprocess data

- Select the best model architecture

- Tune hyperparameters

- Evaluate performance

Why Use AutoML?

AutoML provides several advantages:

- Faster Model Development: Automates repetitive tasks like feature selection and tuning.

- Lower Barrier to Entry: Helps users without ML expertise deploy models effectively.

- Optimized Performance: Searches many candidate models and settings to find a strong one with minimal manual intervention.

- Scalability: Many platforms can distribute the search across machines, handling large datasets and many candidate models.

Popular AutoML Platforms

Many tools and frameworks support AutoML, including:

- Google AutoML (Cloud-based, user-friendly)

- H2O.ai AutoML (Open-source, highly customizable)

- AutoKeras (Deep learning-focused)

- TPOT (Tree-based Pipeline Optimization Tool; evolves scikit-learn pipelines with genetic programming)

- Microsoft Azure AutoML (Enterprise-level automation)

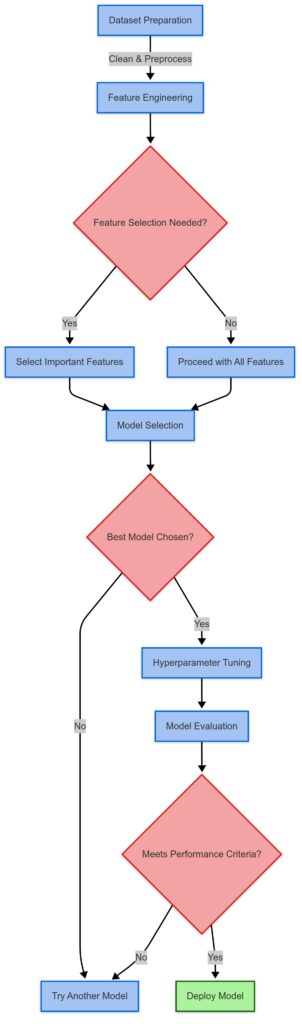

Key Steps in Automating Model Training with AutoML

Step 1: Preparing Your Dataset

AutoML tools require a well-structured dataset. This involves:

- Cleaning Data: Handling missing values, duplicates, and inconsistencies.

- Feature Engineering: Creating meaningful input variables if necessary.

- Splitting Data: Dividing into training, validation, and test sets.
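A minimal sketch of the splitting step, assuming the data fits in memory as a Python list of rows. The 70/15/15 proportions and the fixed seed are illustrative choices, not requirements of any AutoML tool; many tools accept a single training frame and create validation folds themselves.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle a copy of `rows` and cut it into train, validation and test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(list(range(100)))
print(len(train), len(val), len(test))
```

Keep the test set untouched until the final evaluation; if the search can see it, the reported score will be optimistic.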

Step 2: Choosing an AutoML Framework

Your choice depends on the problem type:

- For classification/regression: TPOT, H2O.ai, Google AutoML

- For deep learning tasks: AutoKeras, Google AutoML Vision

- For enterprise applications: Azure AutoML, Google Cloud AutoML

Step 3: Configuring AutoML

Most AutoML platforms allow users to specify:

- Target variable: The outcome you’re predicting.

- Evaluation metric: Accuracy, RMSE, F1-score, etc.

- Compute constraints: Time limits, hardware selection (CPU/GPU).
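The three settings above can be captured in a small, validated configuration object. This is a hypothetical sketch: the class and metric names are illustrative, although `max_runtime_secs` and `max_models` loosely mirror parameters of the same name in H2O AutoML. The `greater_is_better` flag matters because a search must maximize accuracy or F1 but minimize RMSE.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative metric names; each real platform spells these its own way.
SUPPORTED_METRICS = {"accuracy", "f1", "auc", "rmse"}
LOWER_IS_BETTER = {"rmse"}

@dataclass(frozen=True)
class AutoMLConfig:
    """Hypothetical run configuration; not any vendor's actual API."""
    target: str                       # column the model should predict
    metric: str = "accuracy"          # used to rank candidate models
    max_runtime_secs: float = 3600.0  # overall time budget for the search
    max_models: Optional[int] = None  # optional cap on candidates tried
    use_gpu: bool = False             # hardware selection

    def __post_init__(self):
        if self.metric not in SUPPORTED_METRICS:
            raise ValueError(f"unsupported metric: {self.metric!r}")
        if self.max_runtime_secs <= 0:
            raise ValueError("max_runtime_secs must be positive")

    @property
    def greater_is_better(self) -> bool:
        return self.metric not in LOWER_IS_BETTER

config = AutoMLConfig(target="churned", metric="f1", max_runtime_secs=900)
```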

Step 4: Running the AutoML Process

AutoML tools handle:

- Feature selection and transformation

- Model selection (e.g., decision trees, neural networks, ensembles)

- Hyperparameter optimization using algorithms like Bayesian optimization or genetic algorithms.

This process might take minutes to hours depending on dataset size and complexity.
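The search loop itself can be illustrated with random search, a simpler strategy than the Bayesian or genetic methods named above but with the same shape: sample a configuration, score it, keep the best, and stop when the time or trial budget runs out. The `toy_objective` below is a hypothetical stand-in for "train a model and return its validation score".

```python
import math
import random
import time

def random_search(objective, sample_params, budget_secs=5.0, max_trials=200, seed=0):
    """Evaluate random configurations until the time or trial budget is exhausted.

    `objective(params)` must return a validation score where higher is better.
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_secs
    best_params, best_score, trials = None, -math.inf, 0
    while trials < max_trials and time.monotonic() < deadline:
        params = sample_params(rng)
        score = objective(params)
        trials += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, trials

def sample_params(rng):
    # Hypothetical search space for a tree-boosting model.
    return {"max_depth": rng.randint(2, 12), "learning_rate": rng.uniform(0.01, 0.3)}

def toy_objective(params):
    # Stand-in score that peaks at max_depth=6, learning_rate=0.1.
    return -((params["max_depth"] - 6) ** 2) - 100 * (params["learning_rate"] - 0.1) ** 2

best, score, n_trials = random_search(toy_objective, sample_params, budget_secs=1.0)
```

In a real run each call to the objective trains a model, so the time budget, not `max_trials`, is usually the binding limit.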

Step 5: Evaluating and Deploying the Best Model

Once AutoML finds the best model, it provides performance metrics. You can:

- Interpret results (confusion matrix, ROC curve, feature importance).

- Export the model for deployment in production (e.g., as a REST API or cloud service).

- Fine-tune manually if needed for additional performance improvements.
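The confusion matrix and the metrics derived from it are simple to compute by hand, which helps when checking numbers an AutoML leaderboard reports. A minimal sketch for a binary classifier, assuming labels are encoded as 0/1:

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    counts = Counter()
    for t, p in zip(y_true, y_pred):
        if p == positive:
            counts["tp" if t == positive else "fp"] += 1
        else:
            counts["fn" if t == positive else "tn"] += 1
    return counts

def precision_recall_f1(y_true, y_pred, positive=1):
    c = confusion_counts(y_true, y_pred, positive)
    precision = c["tp"] / (c["tp"] + c["fp"]) if c["tp"] + c["fp"] else 0.0
    recall = c["tp"] / (c["tp"] + c["fn"]) if c["tp"] + c["fn"] else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_counts(y_true, y_pred))     # tp=3, tn=3, fp=1, fn=1
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```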

Advanced AutoML Techniques for Better Model Training

AutoML is powerful, but understanding its advanced techniques can further optimize your models. This section covers how to fine-tune AutoML, handle custom models, and integrate it into production workflows.

Customizing AutoML for Specific Use Cases

While AutoML automates many tasks, sometimes you need custom configurations to improve results.

Using Custom Feature Engineering

AutoML handles feature selection, but manually creating domain-specific features can enhance model performance.

- Use domain knowledge to generate meaningful variables.

- Apply dimensionality reduction (PCA, LDA) for high-dimensional data.

- Engineer interaction terms that capture relationships between features.
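A small sketch of both kinds of hand-made features, assuming each row is a dict of numeric columns. The column names (`debt`, `income`) and the ratio feature are hypothetical examples of domain knowledge, such as a lender's debt-to-income ratio.

```python
from itertools import combinations

def add_interactions(row, features):
    """Return a copy of `row` with pairwise product features named like 'a*b'."""
    out = dict(row)
    for a, b in combinations(features, 2):
        out[f"{a}*{b}"] = row[a] * row[b]
    return out

def add_ratio(row, numerator, denominator, name):
    """Return a copy of `row` with a domain-specific ratio (None if the denominator is 0)."""
    out = dict(row)
    out[name] = row[numerator] / row[denominator] if row[denominator] else None
    return out

row = {"debt": 20000, "income": 80000}
print(add_ratio(row, "debt", "income", "debt_to_income"))
# {'debt': 20000, 'income': 80000, 'debt_to_income': 0.25}
```

The new columns are then passed to the AutoML tool alongside the originals, which leaves it free to decide whether they help.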

Defining Search Space for Hyperparameter Tuning

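Tools such as TPOT (through its configuration dictionary) and H2O (through algorithm include/exclude lists in AutoML, and explicit grids in its grid search) let users constrain the search space, limiting unnecessary computation.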

- Set ranges for hyperparameters (e.g., learning rate: 0.01–0.1).

- Choose specific algorithms to explore (e.g., random forest, XGBoost).

- Adjust early stopping criteria to prevent overfitting.
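The three controls above can be sketched in plain Python. The search space is hypothetical; the learning rate is sampled log-uniformly so that 0.01–0.02 is explored as often as 0.05–0.1. The early-stopping rule (stop after `patience` checks without improvement) is one common variant, not the exact rule any particular tool uses.

```python
import math
import random

# Hypothetical search space; names and ranges are illustrative.
SEARCH_SPACE = {
    "algorithm": ["random_forest", "xgboost"],
    "learning_rate": (0.01, 0.1),  # sampled log-uniformly; ignored by random forest
    "max_depth": (3, 10),          # inclusive integer range
}

def sample(space, rng):
    low, high = space["learning_rate"]
    return {
        "algorithm": rng.choice(space["algorithm"]),
        "learning_rate": math.exp(rng.uniform(math.log(low), math.log(high))),
        "max_depth": rng.randint(*space["max_depth"]),
    }

class EarlyStopping:
    """Signal a stop once validation loss fails to improve by `min_delta` for `patience` checks."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_rounds = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best, self.bad_rounds = val_loss, 0
        else:
            self.bad_rounds += 1
        return self.bad_rounds >= self.patience

stopper = EarlyStopping(patience=3)
decisions = [stopper.should_stop(loss) for loss in [1.0, 0.8, 0.81, 0.82, 0.83]]
```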

Ensemble Learning with AutoML

Many AutoML frameworks build ensemble models for better generalization.

- Stacking ensembles: Combine predictions from multiple models.

- Boosting techniques: XGBoost, LightGBM, and CatBoost for improved accuracy.

- Bagging methods: Random Forest for robust predictions.
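Two ensemble building blocks can be shown in a few lines. `blend` takes a weighted average of each model's predicted probabilities; this is the simplest way to combine models and is a blend rather than true stacking, which would train a meta-model on the base models' held-out predictions. `bootstrap_sample` draws the resampled training set that bagging gives each base model. Both functions are illustrative sketches.

```python
import random

def blend(prob_lists, weights=None):
    """Weighted average of per-model positive-class probabilities."""
    n_models = len(prob_lists)
    weights = weights or [1.0 / n_models] * n_models
    total = sum(weights)
    return [
        sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
        for i in range(len(prob_lists[0]))
    ]

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement, as bagging does for each base model."""
    return [rng.choice(rows) for _ in rows]

model_a = [0.2, 0.8]  # hypothetical probabilities from two models on two samples
model_b = [0.4, 0.6]
print(blend([model_a, model_b]))               # roughly [0.3, 0.7]
print(blend([model_a, model_b], weights=[3, 1]))  # roughly [0.25, 0.75]
```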

Handling Custom Models in AutoML Pipelines

If the default models don’t fit your needs, some AutoML tools allow custom model integration.

AutoKeras for Neural Networks

- Lets users define a custom architecture search space by composing building blocks.

- Supports transfer learning for tasks like image classification.

- Provides multi-input models for complex datasets.

H2O AutoML with Custom Models

- Lets users train their own H2O models and combine them with AutoML's models in a stacked ensemble.

- Offers Python and R APIs for fine-tuning models post-training.

- Supports custom loss functions for non-standard ML problems.

Google Cloud AutoML with Custom Training

- Users can bring their own TensorFlow or PyTorch training code as custom training jobs.

- Custom training jobs can still use the platform's managed hyperparameter tuning.

- Integrates with Google AI Platform (now Vertex AI) for scalable deployment.

Integrating AutoML into Production Workflows

Once AutoML finds the best model, the next step is deploying and maintaining it.

Exporting and Deploying Models

- Convert models to ONNX, TensorFlow SavedModel, or PMML formats for compatibility.

- Deploy models using Docker and Kubernetes for scalable inference.

- Use serverless deployment with Google Cloud Functions, AWS Lambda, or Azure Functions.

Automating Model Retraining

Data distributions shift over time, so deployed models need periodic retraining.

- Set up scheduled AutoML runs (e.g., weekly updates).

- Use ML pipelines (Kubeflow, Airflow) to retrain models automatically.

- Implement data drift detection to trigger retraining when input patterns change.
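Drift detection can be sketched with the Population Stability Index (PSI), one common drift statistic among several (the Kolmogorov-Smirnov test is another). This standard-library sketch bins a reference sample into equal-width bins and compares bin proportions with a new sample. The 0.2 threshold is a widely quoted rule of thumb rather than a standard, and `needs_retraining` is a hypothetical helper that would feed a scheduler such as Airflow or Kubeflow.

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index of `actual` relative to the reference `expected`."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values into edge bins
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def needs_retraining(reference, current, threshold=0.2):
    """Hypothetical trigger: retrain when PSI exceeds the chosen threshold."""
    return psi(reference, current) > threshold

reference = [i / 100 for i in range(1000)]  # feature values seen at training time
shifted = [v + 5 for v in reference]        # same feature after a large shift
```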

Monitoring and Optimization

After deployment, monitor model performance:

- Use MLflow or Weights & Biases to track model metrics.

- Set up real-time anomaly detection to catch prediction errors.

- Continuously update AutoML models based on new data trends.

Real-World Applications, AutoML Limitations, and Best Practices

AutoML is being adopted across industries because it makes machine learning more accessible. However, it has limitations, and good results depend on following best practices. This section covers practical applications, challenges, and strategies to get the most out of AutoML.

Real-World Applications of AutoML

AutoML is used across multiple industries to solve complex problems efficiently.

Healthcare: Disease Prediction & Medical Imaging

- AutoML assists in predicting diseases like diabetes and heart conditions from patient data.

- Used in medical imaging (Google AutoML Vision, AutoKeras) for tumor detection and anomaly classification.

- Enables faster drug discovery by analyzing chemical compound structures.

Finance: Fraud Detection & Risk Analysis

- Detects fraudulent transactions using anomaly detection models.

- Assesses credit risk by analyzing historical loan repayment patterns.

- AutoML-powered algorithmic trading helps optimize stock market strategies.

Retail & E-commerce: Personalization & Demand Forecasting

- Enhances recommendation engines (e.g., Amazon Personalize for product suggestions).

- Predicts customer churn and optimizes marketing campaigns.

- Improves inventory management with demand forecasting models.

Manufacturing: Quality Control & Predictive Maintenance

- Identifies defective products in real-time using AutoML-powered image recognition.

- Implements predictive maintenance, reducing downtime and repair costs.

- Enhances supply chain optimization with intelligent demand prediction.

Marketing & Customer Service: NLP & Chatbots

- Trains natural language processing (NLP) models, such as intent classifiers, that power chatbots.

- Helps analyze customer sentiment from reviews and social media data.

- Automates email classification and lead scoring for sales teams.

Limitations and Challenges of AutoML

Despite its advantages, AutoML has some limitations that users should be aware of.

Limited Interpretability

- Many AutoML models, especially deep learning-based ones, operate as black boxes.

- Harder to explain decisions in regulated industries like healthcare and finance.

- Solutions: Use SHAP (SHapley Additive Explanations) or LIME to interpret model outputs.

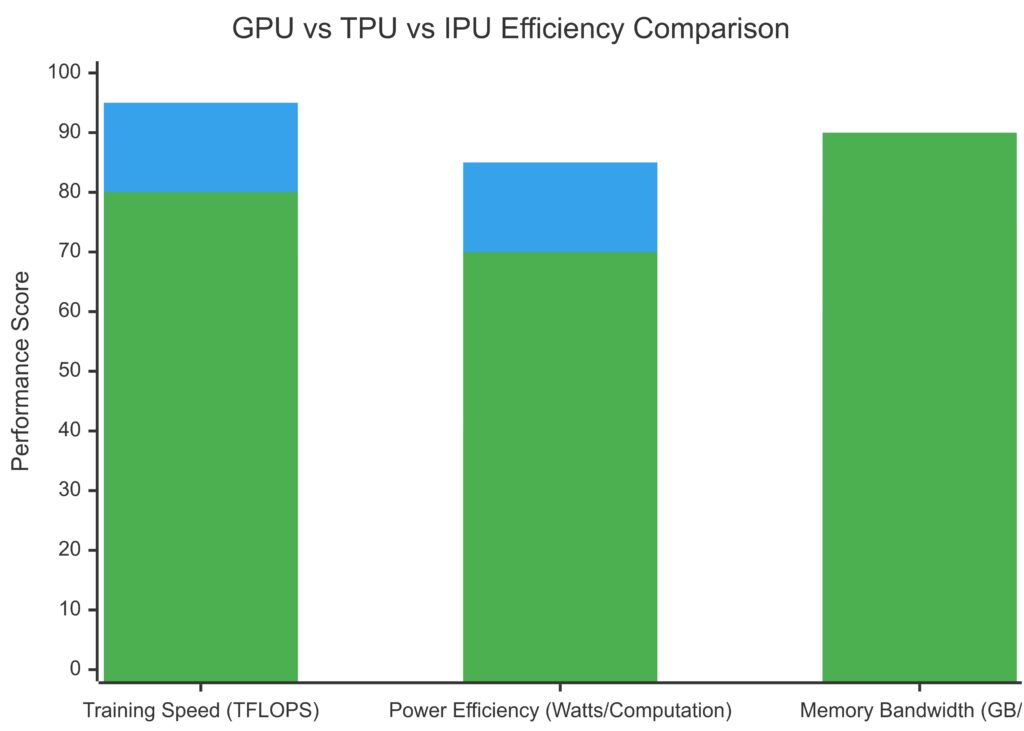

Computational Costs & Time Constraints

- Some AutoML processes can take hours or days to find the best model.

- Large datasets demand substantial compute, and deep learning searches typically need GPUs or TPUs.

- Solutions: Set time limits on model search and use efficient AutoML frameworks like H2O.ai.

Limited Customization in Some Platforms

- Certain AutoML platforms, like Google AutoML, have predefined model architectures with limited tuning options.

- Users may need to integrate custom models manually for more control.

Data Quality Dependency

- AutoML works best with clean, structured data; it doesn’t fix poor-quality datasets.

- Solutions: Conduct thorough data preprocessing before running AutoML.

Best Practices for Using AutoML Effectively

To maximize the benefits of AutoML, follow these best practices.

1. Start with High-Quality Data

- Perform data cleaning (handle missing values, outliers).

- Use feature engineering to create relevant variables.

- Normalize or standardize numerical data when necessary.
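A minimal sketch of standardization (z-scores). The key detail is that the mean and standard deviation are computed on the training data only and then reused for validation, test, and production inputs; computing them on the full dataset leaks information from the evaluation data into training.

```python
import statistics

def fit_standardizer(train_values):
    """Learn the mean and population standard deviation from training data only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values) or 1.0  # guard against constant columns
    return mean, std

def transform(values, mean, std):
    return [(v - mean) / std for v in values]

train_col = [2, 4, 4, 4, 5, 5, 7, 9]
mean, std = fit_standardizer(train_col)  # mean 5.0, std 2.0
print(transform([5, 7, 1], mean, std))   # [0.0, 1.0, -2.0]
```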

2. Choose the Right AutoML Tool for Your Use Case

- For business users: Google AutoML, Azure AutoML.

- For open-source flexibility: H2O.ai, TPOT.

- For deep learning applications: AutoKeras.

3. Define Business Goals Before Running AutoML

- Clarify the problem statement (classification, regression, time series).

- Select the right evaluation metric (e.g., accuracy, F1-score, RMSE).

- Set constraints on runtime and computing resources.

4. Monitor and Maintain Models Post-Deployment

- Use ML monitoring tools like MLflow or Weights & Biases.

- Set up automated retraining pipelines.

- Continuously check for data drift to ensure model relevance.

5. Balance Automation with Human Expertise

- While AutoML automates tasks, human oversight is crucial for ethical AI.

- Data scientists should validate model outputs before deployment.

Final Thoughts

AutoML is transforming machine learning by reducing the technical barriers and accelerating model development. From healthcare to finance, it enables businesses to leverage AI with minimal expertise.

However, while it simplifies many tasks, AutoML isn’t a one-size-fits-all solution. Understanding its limitations, best practices, and customization options is key to unlocking its full potential.


FAQs

Is AutoML suitable for small datasets?

AutoML works best with medium to large datasets, as smaller datasets may result in overfitting or unreliable predictions. However, some platforms like H2O.ai and TPOT use advanced techniques such as cross-validation and ensemble learning to improve performance on limited data.

A startup with only 1,000 customer records might struggle to achieve strong generalization using AutoML, whereas a dataset with 100,000 records would yield more robust insights.

How does AutoML deal with missing data?

Most AutoML platforms automatically handle missing values using techniques such as:

- Mean or median imputation for numerical data, and mode imputation for categorical data.

- Dropping features with excessive missing values.

- Using models that can handle missing data, such as XGBoost and LightGBM.

For example, H2O's tree-based algorithms (GBM, DRF) learn at each split which branch missing values should follow, while its GLM uses mean imputation by default.
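The first two strategies in the list above can be sketched with the standard library. The sketch assumes columns are stored as lists with `None` marking a missing value; the 50% drop threshold and the column names are illustrative.

```python
import statistics

def impute(columns, max_missing_frac=0.5):
    """Fill gaps column by column; drop columns that are mostly missing.

    `columns` maps column name -> list of values, with None for missing entries.
    """
    cleaned = {}
    for name, values in columns.items():
        present = [v for v in values if v is not None]
        missing_frac = 1 - len(present) / len(values) if values else 1.0
        if not present or missing_frac > max_missing_frac:
            continue  # drop features with excessive missing values
        if all(isinstance(v, (int, float)) for v in present):
            fill = statistics.median(present)  # numerical: median is robust to outliers
        else:
            fill = statistics.mode(present)    # categorical: most frequent value
        cleaned[name] = [fill if v is None else v for v in values]
    return cleaned

data = {
    "age": [30, None, 40, 50],
    "city": ["NY", "LA", None, "NY"],
    "notes": [None, None, None, "x"],
}
print(impute(data))
# {'age': [30, 40, 40, 50], 'city': ['NY', 'LA', 'NY', 'NY']}
```

As with scaling, the fill values should be computed on training data and reused at prediction time.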

Can AutoML models be integrated into existing applications?

Yes, most AutoML frameworks allow exporting models in formats such as ONNX, TensorFlow SavedModel, PMML, or pickle, making them compatible with various applications. They can be deployed via APIs, cloud services, or embedded systems.

A financial institution might integrate an AutoML-powered fraud detection model into its real-time transaction monitoring system using an API hosted on AWS Lambda or Google Cloud Functions.

Does AutoML replace data scientists?

No, AutoML enhances productivity but does not eliminate the need for data scientists. While it automates tasks like feature selection, model selection, and hyperparameter optimization, human expertise is essential for:

- Defining business problems and selecting relevant features.

- Ensuring fairness and avoiding biased models.

- Interpreting and improving model predictions.

For example, an AutoML system might build an accurate loan approval model, but a data scientist must verify that it doesn’t discriminate against specific demographics.

Which industries benefit the most from AutoML?

AutoML is widely used across various industries:

- Healthcare: Disease prediction, medical imaging analysis.

- Finance: Fraud detection, credit scoring, risk assessment.

- Retail & E-commerce: Product recommendations, customer segmentation.

- Manufacturing: Predictive maintenance, defect detection.

For example, in medical imaging, AutoML helps classify X-rays and MRI scans, assisting radiologists in diagnosing diseases more accurately.

Can AutoML results be trusted without manual tuning?

AutoML provides a strong baseline model, but manual tuning can improve results, especially for complex tasks. Some platforms, like H2O.ai, allow further hyperparameter adjustments after AutoML completes its optimization process.

An insurance company using AutoML to detect fraudulent claims may need to manually adjust classification thresholds to minimize false positives and false negatives.
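Threshold adjustment can be made explicit by assigning a cost to each error type and choosing the score cutoff that minimizes total cost on a validation set. The costs below are hypothetical; in practice they come from the business, for example the average loss from a missed fraudulent claim versus the cost of investigating a legitimate one.

```python
def best_threshold(y_true, scores, fp_cost=1.0, fn_cost=5.0):
    """Return (threshold, cost) minimizing fp_cost * FP + fn_cost * FN.

    A sample is predicted positive when its score is >= threshold.
    """
    candidates = sorted(set(scores)) + [float("inf")]  # inf = predict nothing positive
    best_t, best_cost = None, float("inf")
    for t in candidates:
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        fn = sum(1 for y, s in zip(y_true, scores) if s < t and y == 1)
        cost = fp * fp_cost + fn * fn_cost
        if cost < best_cost:
            best_t, best_cost = t, cost
    return best_t, best_cost

y_val = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(best_threshold(y_val, scores))                           # (0.35, 1.0)
print(best_threshold(y_val, scores, fp_cost=10, fn_cost=1))    # (0.8, 1.0)
```

Making false negatives expensive pushes the threshold down (catch more fraud); making false positives expensive pushes it up.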

What are the limitations of AutoML?

AutoML is powerful, but it has certain limitations:

- Computational costs: Training complex models on large datasets requires high-performance hardware.

- Limited interpretability: Some models, especially deep learning-based ones, function as black boxes with low explainability.

- Data dependency: AutoML doesn’t fix poor-quality data—it requires well-structured, clean datasets.

For example, a banking system using AutoML for loan approvals must ensure that the data does not contain historical biases, as AutoML alone will not detect or correct them.

How can beginners get started with AutoML?

- Choose an AutoML platform based on your needs (Google AutoML for cloud-based solutions, TPOT for Python users, H2O.ai for enterprise applications).

- Prepare a clean dataset with relevant features.

- Define an objective (classification, regression, time series forecasting).

- Run AutoML and analyze the best-performing model.

- Deploy the model and monitor its performance.

For a hands-on start, Google Cloud AutoML allows users to upload labeled data, train models, and deploy them via API without needing coding experience.

How does AutoML perform hyperparameter tuning?

AutoML uses automated search algorithms such as Bayesian optimization, grid search, random search, and genetic algorithms to find the best hyperparameters for a given model.

For instance, TPOT (Tree-based Pipeline Optimization Tool) uses genetic programming to evolve entire pipelines, jointly choosing preprocessing steps, the model, and hyperparameters such as learning rate, tree depth, and regularization strength.
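The evolutionary idea can be sketched as a plain genetic algorithm over a fixed set of hyperparameters. This is simpler than what TPOT does (TPOT evolves tree-shaped pipelines), but it uses the same ingredients: a population, selection, crossover, and mutation. `SPACE` and `toy_fitness` are hypothetical; in practice fitness would be a cross-validated score.

```python
import random

# Hypothetical search space: allowed values for each hyperparameter.
SPACE = {
    "max_depth": [2, 3, 4, 5, 6, 8, 10],
    "learning_rate": [0.01, 0.03, 0.1, 0.3],
    "l2": [0.0, 0.1, 1.0, 10.0],
}

def toy_fitness(ind):
    # Stand-in score (higher is better), best at depth 6, lr 0.1, l2 1.0.
    return -(abs(ind["max_depth"] - 6) + 10 * abs(ind["learning_rate"] - 0.1) + abs(ind["l2"] - 1.0))

def evolve(fitness, space, pop_size=20, generations=15, mutation_rate=0.2, seed=0):
    rng = random.Random(seed)
    pop = [{k: rng.choice(v) for k, v in space.items()} for _ in range(pop_size)]
    for _ in range(generations):
        next_pop = sorted(pop, key=fitness, reverse=True)[:2]  # elitism: keep the 2 best
        while len(next_pop) < pop_size:
            # Tournament selection: the fitter of two random individuals becomes a parent.
            p1 = max(rng.sample(pop, 2), key=fitness)
            p2 = max(rng.sample(pop, 2), key=fitness)
            # Uniform crossover: each hyperparameter is inherited from either parent.
            child = {k: (p1 if rng.random() < 0.5 else p2)[k] for k in space}
            # Mutation: occasionally resample a hyperparameter at random.
            for k, values in space.items():
                if rng.random() < mutation_rate:
                    child[k] = rng.choice(values)
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=fitness)

best = evolve(toy_fitness, SPACE)
```

Elitism guarantees the best score never gets worse from one generation to the next, at the cost of some population diversity.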

Does AutoML work for time series forecasting?

Yes, many AutoML tools support time series forecasting. AutoTS searches over forecasting models automatically, Prophet (from Meta, formerly Facebook) automates trend and seasonality fitting, and cloud services such as Azure AutoML offer a dedicated forecasting task.

For example, a retail company using AutoML for sales forecasting might have the tool compare ARIMA, LSTM, and gradient boosting models while engineering seasonal and lag-based features.
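Lag features are what let a tabular model such as gradient boosting learn from a time series, and a seasonal naive forecast is the baseline any AutoML forecaster should beat. A minimal sketch of both; the lag choices and season length are illustrative (for daily retail data, lag 7 and a weekly season are typical starting points).

```python
def make_lag_features(series, lags=(1, 7)):
    """Turn a univariate series into (features, target) rows using lagged values."""
    max_lag = max(lags)
    rows = []
    for t in range(max_lag, len(series)):
        features = {f"lag_{k}": series[t - k] for k in lags}
        rows.append((features, series[t]))
    return rows

def seasonal_naive_forecast(series, season_length, horizon):
    """Baseline forecast: repeat the value observed one season earlier."""
    n = len(series)
    return [series[n - season_length + (h % season_length)] for h in range(horizon)]

rows = make_lag_features(list(range(10)), lags=(1, 3))
print(rows[0])  # ({'lag_1': 2, 'lag_3': 0}, 3)
print(seasonal_naive_forecast([1, 2, 3, 4, 1, 2, 3, 4], season_length=4, horizon=6))
# [1, 2, 3, 4, 1, 2]
```

When splitting such rows for validation, keep them in time order; a random shuffle would let the model train on the future.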

How does AutoML handle imbalanced datasets?

AutoML uses techniques like:

- Oversampling (SMOTE) – Synthetic Minority Over-sampling Technique to balance classes.

- Undersampling – Reducing instances of the majority class to avoid bias.

- Class weighting – Adjusting the importance of different classes during training.

For example, in fraud detection, where fraudulent transactions are rare, AutoML may use SMOTE to generate synthetic fraud cases, ensuring the model doesn’t overlook them.
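Two of the techniques above in miniature. `smote` implements the core SMOTE idea, placing each synthetic point at a random position on the segment between a minority sample and one of its k nearest minority neighbours; production implementations such as imbalanced-learn's add more options. `balanced_class_weights` uses the common heuristic weight = n_samples / (n_classes × count). Both are illustrative sketches with numeric tuples as feature vectors.

```python
import math
import random
from collections import Counter

def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority samples by interpolating toward nearby neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic

def balanced_class_weights(labels):
    """weight_c = n_samples / (n_classes * count_c): rare classes get larger weights."""
    counts = Counter(labels)
    n, n_classes = len(labels), len(counts)
    return {c: n / (n_classes * cnt) for c, cnt in counts.items()}

fraud_cases = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_cases = smote(fraud_cases, n_new=10)
weights = balanced_class_weights([0] * 90 + [1] * 10)  # {0: ~0.556, 1: 5.0}
```

Resampling should be applied to the training split only, so that validation scores still reflect the real class balance.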

Can AutoML be used for NLP (Natural Language Processing)?

Yes. Depending on the platform, AutoML supports tasks such as text classification, sentiment analysis, and entity extraction. Google AutoML Natural Language and AutoKeras allow users to train NLP models without deep learning expertise.

For instance, a customer support chatbot powered by AutoML can classify user queries as billing issues, technical support, or product inquiries, routing them to the appropriate department.

Is there a risk of AutoML overfitting?

Yes, AutoML can overfit, especially when:

- The dataset is too small.

- The model complexity is too high.

- The training time is too long without early stopping.

To prevent overfitting, AutoML often includes:

- Cross-validation techniques.

- Regularization methods (L1, L2 penalties).

- Ensemble learning (bagging, boosting).

For example, deep learning-based AutoML tools such as AutoKeras can include dropout layers in the architectures they search over, which helps reduce overfitting in image classification tasks.
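Cross-validation, the first safeguard listed above, can be sketched as k-fold index generation: every sample is used for validation exactly once, and averaging the fold scores gives a less optimistic estimate than a single split. `fit_and_score` is a hypothetical callback that would train on the training indices and score on the validation indices.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs covering every sample exactly once in validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if f < n_samples % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size

def cross_val_score(fit_and_score, n_samples, k=5):
    """Average the per-fold scores returned by fit_and_score(train_idx, val_idx)."""
    scores = [fit_and_score(train, val) for train, val in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

folds = list(k_fold_indices(12, k=5))
print([len(val) for _, val in folds])  # [3, 3, 2, 2, 2]
```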

How scalable is AutoML for big data?

AutoML scales well when deployed on cloud-based platforms like Google Cloud AutoML, AWS SageMaker Autopilot, and Azure AutoML, which support distributed computing.

For instance, a telecom company analyzing millions of customer interactions can run H2O.ai AutoML on a Spark cluster via Sparkling Water, distributing computations across multiple nodes for faster model training.

Can AutoML generate explainable AI models?

Some AutoML tools provide model interpretability using:

- SHAP (Shapley Additive Explanations) – Measures the impact of each feature.

- LIME (Local Interpretable Model-Agnostic Explanations) – Creates simplified local approximations of complex models.

- Feature importance rankings – Highlights the most influential variables.

For example, in a loan approval model, SHAP values can show whether income or credit score had a higher influence on approval decisions.
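To show what a Shapley value measures, the sketch below computes exact Shapley values for a single prediction by enumerating every feature subset, replacing "absent" features with values from one baseline applicant. The SHAP library instead uses faster approximations (such as KernelSHAP or TreeSHAP) and a background dataset, so this is a conceptual illustration only. `loan_score` and both applicants are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for predict(x) relative to predict(baseline).

    Enumerates all feature subsets, so it is only practical for a handful of features.
    """
    features = list(x)
    n = len(features)

    def value(subset):
        # Features in `subset` take the applicant's values; the rest take baseline values.
        return predict({f: (x[f] if f in subset else baseline[f]) for f in features})

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

def loan_score(p):
    # Hypothetical linear approval score.
    return 0.5 * p["income"] / 100_000 + 0.4 * p["credit_score"] / 850 - 0.3 * p["debt_ratio"]

applicant = {"income": 80_000, "credit_score": 720, "debt_ratio": 0.3}
average = {"income": 50_000, "credit_score": 650, "debt_ratio": 0.4}
phi = shapley_values(loan_score, applicant, average)
# Income contributes about 0.15, credit score about 0.033, debt ratio about 0.03.
```

The values always add up to the difference between this applicant's score and the baseline score, which is what makes them a fair split of the prediction across features.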

What are the key differences between Google AutoML, H2O AutoML, and TPOT?

- Google AutoML: Best for non-coders, fully cloud-based, integrates with Google services.

- H2O AutoML: Open-source, supports deep learning and scalable big data analysis.

- TPOT: Python-based, best for optimizing ML pipelines with genetic programming.

For example, a startup without ML expertise might use Google AutoML, while a data scientist optimizing a Kaggle competition model may prefer TPOT or H2O.ai AutoML for more flexibility.

Can AutoML handle real-time predictions?

Yes, AutoML models can be deployed as APIs for real-time inference. Cloud platforms like AWS SageMaker, Google AI Platform (now Vertex AI), and Azure ML support real-time prediction endpoints.

For instance, an e-commerce platform using AutoML for product recommendations can deploy a model via a REST API, serving personalized suggestions within milliseconds.
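The shape of such a REST endpoint can be sketched with Python's built-in `http.server`. This is an illustration only: `predict` is a hypothetical stand-in for a loaded AutoML model, and a production service would use a proper web framework or the cloud provider's managed endpoint rather than this single-threaded server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def predict(features):
    # Hypothetical stand-in for a loaded model: a fixed linear score.
    weights = {"recency_days": -0.02, "past_purchases": 0.3}
    return sum(weights.get(name, 0.0) * value for name, value in features.items())

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body = json.dumps({"score": predict(payload["features"])}).encode()
            status = 200
        except (ValueError, KeyError, TypeError, AttributeError):
            body = json.dumps({"error": "expected a JSON body with a 'features' object"}).encode()
            status = 400
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silenced for the example; a real service should log requests

def serve(port=8000):
    """Block and serve predictions at http://127.0.0.1:<port>/predict."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

A client would POST a body such as `{"features": {"recency_days": 10, "past_purchases": 4}}` to `/predict` and receive `{"score": ...}` in response.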

How does AutoML compare to traditional machine learning approaches?

| Feature | AutoML | Traditional ML |
|---|---|---|
| Model Selection | Automated | Manual |
| Hyperparameter Tuning | Automated | Manual |
| Feature Engineering | Partially Automated | Manual |
| Interpretability | Varies | Higher |
| Customizability | Limited | High |
| Required Expertise | Low | High |

For example, a business analyst with no ML experience could use Google AutoML, whereas a data scientist working on custom NLP models might prefer manually designing algorithms in TensorFlow or PyTorch.

How much does AutoML cost?

AutoML pricing varies by provider:

- Google AutoML: Pay-per-use model, costs depend on training and prediction hours.

- H2O AutoML: Free open-source version, enterprise support available.

- AWS SageMaker Autopilot: Charges based on compute resources.

For example, a small startup might start with the free version of H2O AutoML, whereas a large corporation needing enterprise support might invest in Google AutoML or Azure ML.

Can AutoML be used for reinforcement learning?

Most AutoML tools focus on supervised and unsupervised learning, but research is evolving toward automated reinforcement learning (AutoRL).

For example, research systems such as Google Brain's AutoRL, and tools such as Ray Tune for tuning RLlib agents, aim to automate the design and tuning of reinforcement learning for tasks like robotic control and trading strategies.

Resources

Official Documentation & Platforms

- Google Cloud AutoML – A cloud-based AutoML platform for vision, NLP, and structured data.

- Microsoft Azure AutoML – Enterprise-grade AutoML for various ML tasks.

- AWS SageMaker Autopilot – Amazon’s AutoML tool that automates model training and deployment.

- H2O.ai AutoML – Open-source AutoML framework for data scientists.

- TPOT (Tree-based Pipeline Optimization Tool) – An AutoML tool using genetic algorithms for ML pipeline optimization.

- AutoKeras – Open-source deep learning AutoML library built on TensorFlow & Keras.

Online Courses & Tutorials

- Coursera – Automated Machine Learning by Google Cloud – Covers Google AutoML’s capabilities.

- Udacity – Machine Learning with AutoML – A practical course on implementing AutoML.

- Fast.ai – Practical Deep Learning – Introduces automation techniques for deep learning.

- H2O.ai Learning Resources – Guides and webinars on H2O AutoML.

Books & Research Papers

- “Automated Machine Learning: Methods, Systems, Challenges” by Frank Hutter, Lars Kotthoff & Joaquin Vanschoren (eds.) – Comprehensive book covering AutoML techniques and tools.

- “AutoML: A Survey of the State-of-the-Art” (Research Paper) – Overview of AutoML advancements in ML and deep learning.

- “Hands-On Automated Machine Learning” by Sibanjan Das & Umit Mert Cakmak – Practical guide on using AutoML in real-world projects.

Communities & Forums

- Kaggle AutoML Discussions – Active community discussing AutoML techniques and challenges.

- Stack Overflow AutoML Questions – Troubleshooting and solutions for AutoML-related queries.

- Reddit r/MachineLearning – Discussions on the latest AutoML research and industry trends.

Code Repositories & Examples

- Google AutoML Samples on GitHub – Code examples for training and deploying models.

- H2O AutoML Tutorials – Hands-on tutorials for H2O AutoML users.

- AutoKeras GitHub – Deep learning AutoML implementations.

- TPOT Example Pipelines – Sample pipelines optimized with TPOT.