Unveiling the Power of Supervised Learning in Anomaly Detection

Introduction to Supervised Learning

Supervised learning methods for anomaly detection require a labeled dataset in which anomalies are explicitly marked. The algorithm learns to distinguish normal from anomalous instances from these labels, which effectively turns anomaly detection into a binary classification problem. This approach is widely used wherever labeled examples are available, with fraud detection the best-known case.

Key Algorithms in Supervised Learning

Classification Algorithms

Random Forest: A tree-based ensemble method that combines multiple decision trees to improve classification performance. Each tree is trained on a bootstrap sample of the data and considers a random subset of features at each split; the final decision is made by aggregating the predictions of all the trees, typically by majority vote.

- Advantages: More resistant to overfitting than a single decision tree, can handle large datasets, and provides feature importance scores.

- Disadvantages: Can be computationally intensive and less interpretable than single decision trees.
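To make the bagging-and-voting idea concrete, here is a toy sketch in plain Python. It is not a full Random Forest: each "tree" is a one-split decision stump, and the transaction features (amount, distance from home) and all function names are made up for illustration.

```python
import random
from collections import Counter


def fit_stump(rows, labels, features):
    """Pick the one-feature threshold split with the fewest training errors."""
    best = None
    for f in features:
        values = sorted({row[f] for row in rows})
        # Candidate thresholds are midpoints between consecutive observed values.
        thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
        for t in thresholds:
            for left_label in (0, 1):
                errors = sum(
                    (left_label if row[f] <= t else 1 - left_label) != y
                    for row, y in zip(rows, labels)
                )
                if best is None or errors < best[0]:
                    best = (errors, f, t, left_label)
    return best[1:]  # (feature index, threshold, label predicted on the left side)


def predict_stump(stump, row):
    f, t, left_label = stump
    return left_label if row[f] <= t else 1 - left_label


def fit_forest(rows, labels, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample and a random subset of features."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    k = max(1, int(n_features ** 0.5))  # features considered per stump
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        features = rng.sample(range(n_features), k)
        forest.append(
            fit_stump([rows[i] for i in idx], [labels[i] for i in idx], features)
        )
    return forest


def predict_forest(forest, row):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(predict_stump(stump, row) for stump in forest)
    return votes.most_common(1)[0][0]


# Hypothetical transactions: [amount, distance from home in km]; label 1 = fraud.
rows = [[12, 2], [25, 1], [40, 3], [18, 5], [33, 2], [50, 4], [22, 1], [45, 3],
        [900, 800], [950, 850], [990, 820], [920, 870]]
labels = [0] * 8 + [1] * 4

forest = fit_forest(rows, labels)
print(predict_forest(forest, [30, 3]), predict_forest(forest, [940, 830]))
```

In practice a library implementation with full-depth trees would be used; the sketch only shows where the randomness enters (rows and features) and how the votes are combined.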

Support Vector Machines (SVM): A classification algorithm that constructs a hyperplane in a high-dimensional space to separate different classes. For anomaly detection, it maximizes the margin between normal and anomalous data points.

- Advantages: Effective in high-dimensional spaces and works well when there is a clear margin of separation.

- Disadvantages: A linear SVM is less effective when classes are not linearly separable; kernel functions can address this, but results are sensitive to the choice of kernel and its parameters.
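The margin idea can be sketched with a linear SVM trained by subgradient descent on the regularized hinge loss, `lam/2 * ||w||^2 + max(0, 1 - y * (w·x + b))`. This is a minimal illustration under stated assumptions: the data is made up and already scaled, labels are -1 (normal) and +1 (anomalous), and the hyperparameters `lam`, `lr`, and `epochs` are arbitrary choices rather than recommended values.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Fit w, b by per-sample subgradient steps on the regularized hinge loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Point is inside the margin or misclassified: hinge term is active.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Point is safely outside the margin: only the regularizer acts.
                w = [wj - lr * lam * wj for wj in w]
    return w, b


def predict(w, b, x):
    """Return +1 (anomalous) or -1 (normal) depending on the side of the hyperplane."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical, already-scaled two-feature data; +1 marks the anomalous class.
X = [[-1.0, -1.2], [-0.8, -1.0], [-1.1, -0.9], [-0.9, -1.1],
     [1.0, 0.9], [1.2, 1.1], [0.9, 1.0]]
y = [-1, -1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(X, y)
print(predict(w, b, [-1.0, -1.0]), predict(w, b, [1.0, 1.0]))
```

A kernel SVM replaces the dot product with a kernel function so the boundary can be nonlinear; that is where the sensitivity to kernel parameters comes from.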

Real-world Application: Credit Card Fraud Detection

Context: Credit card companies face significant financial losses due to fraudulent transactions. Detecting such fraud in real time is critical to mitigating these losses.

Implementation: Supervised learning algorithms like Random Forest and SVM are trained on historical transaction data, which includes labels indicating whether each transaction is fraudulent or legitimate.

- Data Preparation: The dataset includes features such as transaction amount, time, location, and merchant details, along with fraud labels.

- Model Training: The algorithms learn patterns associated with fraudulent transactions from the labeled data. Random Forest can provide insights into which features are most indicative of fraud, while SVM aims to create a clear boundary between normal and fraudulent transactions.

- Detection: Once trained, these models can score new transactions and predict the likelihood of fraud in real time, allowing the company to flag and investigate suspicious activity promptly.

Advantages of Supervised Learning in Fraud Detection:

- Accuracy: Labeled examples of past fraud let the model learn the specific patterns it needs to detect, which generally yields strong detection performance on known fraud types.

- Speed: Models can quickly process and classify new transactions, making them suitable for real-time applications.

- Feature Insights: Algorithms like Random Forest provide feature importance, helping to understand which transaction attributes are most critical for fraud detection.

Challenges:

- Class Imbalance: Fraudulent transactions are much rarer than legitimate ones, leading to class imbalance in the training data. Techniques such as oversampling the minority class, undersampling the majority class, or evaluating with metrics suited to rare classes (precision, recall, and the area under the precision-recall curve) instead of plain accuracy can help address this issue.

- Evolving Patterns: Fraudsters continually change their tactics, so the models need to be regularly updated with new data to maintain their effectiveness.
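The class imbalance point is easy to see with a small example. The sketch below uses made-up labels (2 fraud cases in 100 transactions) and two illustrative helper functions; it shows why plain accuracy is misleading and how random oversampling rebalances a training set.

```python
import random


def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for the positive (fraud) class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def oversample_minority(rows, labels, minority=1, seed=0):
    """Duplicate random minority rows until both classes are the same size.

    Assumes at least one minority row is present.
    """
    rng = random.Random(seed)
    minority_rows = [r for r, y in zip(rows, labels) if y == minority]
    n_majority = len(labels) - len(minority_rows)
    extra = [rng.choice(minority_rows) for _ in range(n_majority - len(minority_rows))]
    return rows + extra, labels + [minority] * len(extra)


# Hypothetical labels: 98 legitimate transactions and 2 fraudulent ones.
y_true = [0] * 98 + [1] * 2

# A useless model that calls every transaction legitimate still looks accurate.
y_pred = [0] * 100
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall = precision_recall(y_true, y_pred)
print(accuracy, precision, recall)  # 0.98 0.0 0.0

# Oversampling the fraud rows gives the learner a balanced training set.
rows = [[i] for i in range(100)]
balanced_rows, balanced_labels = oversample_minority(rows, y_true)
print(balanced_labels.count(0), balanced_labels.count(1))  # 98 98
```

Oversampling should be applied only to the training split; evaluating on duplicated fraud rows would inflate the reported metrics.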

By leveraging supervised learning methods like Random Forest and SVM, credit card companies can significantly enhance their ability to detect and prevent fraudulent transactions, thereby reducing financial losses and protecting customers.

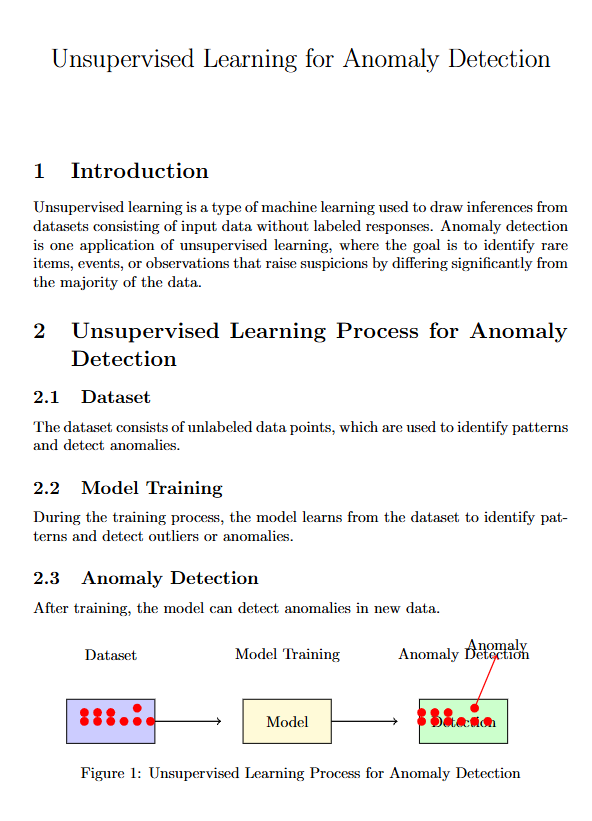

Exploring the World of Unsupervised Learning for Anomaly Detection

Understanding Unsupervised Learning

Unsupervised learning methods do not require labeled data. These algorithms model the normal behavior of the data and identify deviations from this model as anomalies. This makes them particularly useful in scenarios where labeled datasets are scarce.

Prominent Unsupervised Learning Algorithms

Isolation Forest: This algorithm isolates observations by randomly selecting a feature and then randomly selecting a split value between the minimum and maximum values of that feature. Because anomalies are few and different, they tend to be isolated in fewer splits than normal points, so a short average path length across many random trees signals an anomaly.

- Advantages: Efficient for high-dimensional data, and works well with large datasets.

- Disadvantages: May struggle with datasets having subtle anomalies or highly overlapping distributions.
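The isolation idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the complete algorithm: it follows a single point down randomly built splits and averages the depth at which it ends up alone, but it omits the path-length adjustment for unsplit nodes and the normalization into an anomaly score. The data, function names, and default parameters are assumptions for the example.

```python
import random


def path_length(point, data, rng, depth=0, max_depth=8):
    """Count the random splits needed before `point` is alone (or depth is capped)."""
    if len(data) <= 1 or depth >= max_depth:
        return depth
    f = rng.randrange(len(point))            # pick a random feature
    lo = min(row[f] for row in data)
    hi = max(row[f] for row in data)
    if lo == hi:                              # nothing left to split on this feature
        return depth
    split = rng.uniform(lo, hi)               # pick a random split value
    # Keep only the rows that fall on the same side of the split as the point.
    if point[f] < split:
        side = [row for row in data if row[f] < split]
    else:
        side = [row for row in data if row[f] >= split]
    return path_length(point, side, rng, depth + 1, max_depth)


def average_path_length(point, data, n_trees=100, sample_size=256, seed=0):
    """Average the isolation depth over many random trees built on subsamples."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_trees):
        sample = rng.sample(data, min(sample_size, len(data)))
        total += path_length(point, sample, rng)
    return total / n_trees


# Hypothetical data: a dense 10 x 10 grid of normal points plus one distant outlier.
normal = [[x, y] for x in range(10) for y in range(10)]
outlier = [50, 50]
data = normal + [outlier]

outlier_depth = average_path_length(outlier, data)
normal_depth = average_path_length([5, 5], data)
print(outlier_depth, normal_depth)  # the outlier needs far fewer splits
```

The distant point is usually cut off by the very first split, while a point in the middle of the cluster survives many splits, which is the behavior the algorithm exploits.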

One-Class SVM: A variant of the SVM algorithm, trained only on normal data. It learns a decision function that encompasses the normal data points and identifies anything outside this boundary as anomalous.

- Advantages: Effective in high-dimensional space and can handle nonlinear boundaries using kernel functions.

- Disadvantages: Sensitive to the choice of hyperparameters and kernel type.

Autoencoders: A type of neural network used to learn efficient representations of data, typically for dimensionality reduction. For anomaly detection, an autoencoder is trained on normal data to reconstruct it. Data points that cannot be accurately reconstructed are considered anomalies.

- Advantages: Can capture complex patterns in data and are highly flexible.

- Disadvantages: Require significant computational resources and can be prone to overfitting.

Real-world Applications

Network Intrusion Detection:

Context: Protecting computer networks from unauthorized access and cyberattacks is critical for maintaining data security and integrity.

Implementation: Isolation Forest and One-Class SVM are used to detect unusual network traffic patterns that might indicate a cyberattack. These models are trained on normal network behavior data.

- Data Preparation: The dataset includes features such as packet size, flow duration, and source/destination IP addresses.

- Detection:

  - Isolation Forest isolates anomalies by considering data points that are few and different, effectively flagging unusual patterns in network traffic.

  - One-Class SVM learns the normal traffic pattern and flags deviations from this pattern as potential intrusions.

Advantages:

- Speed: Both algorithms can process real-time data to detect anomalies quickly.

- Scalability: These methods can handle large volumes of network traffic data.

Manufacturing Quality Control:

Context: Ensuring product quality and detecting defects early in the production process is vital for maintaining manufacturing standards and reducing waste.

Implementation: Autoencoders are used to monitor production processes. The autoencoder is trained on data from normal operational conditions.

- Data Preparation: The dataset includes sensor readings, machine settings, and operational parameters during normal production.

- Detection: The autoencoder is trained to reconstruct normal operational data accurately. If the reconstruction error for a new data point exceeds a chosen threshold, it indicates a potential defect or anomaly in the manufacturing process.
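The thresholding step can be sketched independently of the network itself. In the example below, the `reconstruct` function is a deliberately crude stand-in for a trained autoencoder (it returns the per-feature mean of the normal data), the sensor readings are made up, and the rule "mean error plus three standard deviations" is one common heuristic assumed for illustration, not a threshold prescribed by the text.

```python
import statistics


def reconstruction_error(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(train_rows, reconstruct, k=3.0):
    """Set the threshold at mean + k standard deviations of the errors on normal data."""
    errors = [reconstruction_error(row, reconstruct(row)) for row in train_rows]
    return statistics.mean(errors) + k * statistics.pstdev(errors)


def is_anomaly(row, reconstruct, threshold):
    return reconstruction_error(row, reconstruct(row)) > threshold


# Hypothetical sensor readings from normal production: [temperature, pressure].
normal_rows = [[20.0, 1.00], [20.5, 1.02], [19.8, 0.99],
               [20.2, 1.01], [19.9, 0.98], [20.1, 1.00]]

# Stand-in for a trained autoencoder: always reconstructs the average normal reading.
feature_means = [statistics.mean(column) for column in zip(*normal_rows)]


def reconstruct(row):
    return feature_means


threshold = fit_threshold(normal_rows, reconstruct)
print(is_anomaly([20.1, 1.01], reconstruct, threshold))  # typical reading
print(is_anomaly([27.0, 1.40], reconstruct, threshold))  # overheated, over-pressure
```

With a real autoencoder, `reconstruct` would be the model's forward pass, and the threshold would typically be tuned on a validation set to balance missed defects against false alarms.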

Advantages:

- Accuracy: Autoencoders can capture complex patterns and identify subtle anomalies that might be missed by simpler methods.

- Adaptability: The model can be retrained regularly to adapt to changes in production processes.

By leveraging unsupervised learning methods like Isolation Forest, One-Class SVM, and Autoencoders, organizations can effectively detect anomalies in various real-world scenarios, enhancing security, quality control, and operational efficiency.

Real-World Case Studies in Anomaly Detection

Yahoo S5 Dataset

Description: Yahoo released the S5 dataset to help with anomaly detection in time series. This dataset has multiple time series with labeled anomalies, making it a great benchmark for testing anomaly detection algorithms.

Methods Applied:

- Isolation Forest: We used Isolation Forest to find anomalies by isolating points that deviated from the normal pattern. It flagged unusual web traffic effectively.

- One-Class SVM: We trained One-Class SVM on normal web traffic data to set a decision boundary. The algorithm successfully spotted anomalies outside this boundary.

Effectiveness: Both methods worked well on the S5 dataset, accurately spotting sudden changes and unusual patterns in web traffic. Catching such changes early helps surface potential issues before they escalate.

KDD Cup 1999 Dataset

Description: The KDD Cup 1999 dataset contains network connection records labeled either as normal or as one of several attack types. It’s widely used to test and compare different anomaly detection algorithms.

Methods Applied:

- Supervised Learning: We used algorithms like Random Forest and SVM, trained on labeled data, to distinguish normal activity from intrusions. These methods accurately classified different types of network intrusions.

- Unsupervised Learning: We applied Isolation Forest and One-Class SVM to model normal network behavior and spot deviations as potential intrusions. These methods effectively detected new or unknown intrusions.

Effectiveness: Using both supervised and unsupervised methods on the KDD Cup 1999 dataset showed their strengths. Supervised methods were accurate with known intrusions, while unsupervised methods found new anomalies effectively.

NASA’s Space Shuttle O-ring Data

Description: This dataset includes information on O-ring performance in NASA’s space shuttles. Detecting O-ring failures early is crucial for the safety and reliability of space missions.

Methods Applied:

- Autoencoders: We used autoencoders for unsupervised anomaly detection. Trained on normal operational data, the autoencoder learned efficient representations. High reconstruction errors indicated potential failures.

Effectiveness: The autoencoder accurately flagged anomalies in O-ring performance. By spotting conditions that might lead to failure, a model of this kind can support the safety and reliability of space missions. This shows the potential of unsupervised learning for detecting subtle anomalies in critical areas.

Conclusion

These case studies show how versatile and effective supervised and unsupervised machine learning methods are for anomaly detection. Whether it’s web traffic monitoring, network security, manufacturing quality control, or aerospace safety, these methods offer strong solutions for spotting and addressing anomalies, ensuring smooth and secure operations.

For more insights into these methods, check out