The Growing Threat

AI-generated disinformation is rapidly becoming a major concern in political campaigns. Artificial intelligence can now produce convincing fake content, from images and videos to entire news articles, and this capability poses significant challenges for democracies worldwide. Such fabrications undermine public trust and distort the democratic process by shaping voter perceptions and behavior.

Notable Instances

Countries like Argentina and Slovakia have already experienced the impact of AI-generated disinformation.

Argentina

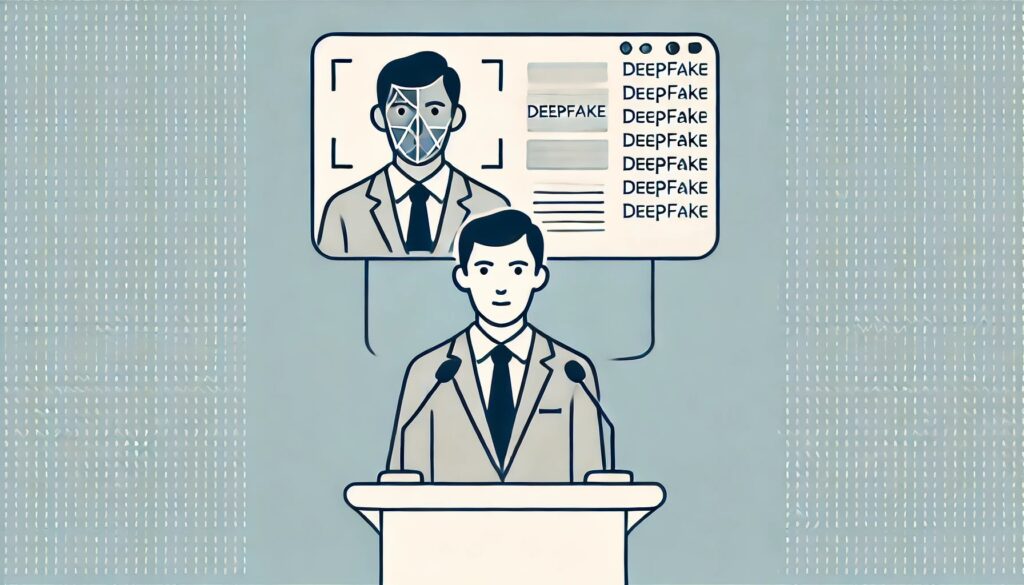

In Argentina, during the 2023 presidential election, deepfake technology was used to create videos of political figures saying things they never said. One particularly damaging video showed a candidate making derogatory remarks about a specific ethnic group. Although the video was quickly debunked, it had already been shared thousands of times, damaging the candidate's reputation and fueling public outrage.

Slovakia

In Slovakia, a fabricated audio recording surfaced days before the September 2023 parliamentary elections. The clip purported to capture Michal Šimečka, leader of the opposition party Progressive Slovakia, discussing with a journalist how to rig the vote. It circulated widely on social media during the pre-election moratorium, when media and politicians are restricted in what they can say about the campaign, which made it harder to rebut before polling day. Despite efforts to expose it as AI-generated, the disinformation campaign succeeded in sowing doubt and deepening division among voters.

The Complexity of Detection

One of the primary challenges posed by AI-generated content is its realism. As generation techniques improve, distinguishing authentic material from fabricated material becomes increasingly difficult. This is especially problematic during election campaigns, where the stakes are high and misinformation spreads rapidly. Traditional fact-checking is often too slow to keep pace, so defenders end up reacting to disinformation after it has spread rather than preventing it.

Potential Impacts on Democracy

The proliferation of AI-generated disinformation poses a significant threat to the integrity of elections. By flooding social media and other platforms with false information, bad actors can undermine public trust in the electoral process. This erosion of trust can lead to lower voter turnout, increased political polarization, and a general cynicism towards democratic institutions. It can also lead voters to elect candidates on the strength of manipulated narratives rather than informed judgment.

Experts Warn of Worse to Come

Experts warn that AI and deepfake technologies will likely be more sophisticated and pervasive in upcoming elections. As these tools become more accessible and affordable, the potential for misuse grows, and with it the risk to the democratic process. Future elections could see a surge in highly realistic and damaging fake content, making it harder still to separate truth from falsehood.

Combatting the Threat

Addressing the issue of AI-generated disinformation requires a multi-faceted approach:

Technological Solutions

- Advanced Detection Tools: Developing sophisticated algorithms to identify and flag AI-generated content. These tools can analyze metadata, check for inconsistencies in visuals and audio, and compare content against trusted databases to verify authenticity.

- Blockchain Verification: Using blockchain technology to verify the authenticity of digital content. Blockchain can provide a transparent and immutable ledger that tracks the origin and modifications of digital files, making it easier to trace back and authenticate original content.
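To make the ledger idea concrete, here is a minimal sketch of a hash-chained provenance log, assuming a single in-memory log rather than a real distributed blockchain (which would add replication and consensus across many parties). Each entry stores the SHA-256 fingerprint of an original file and the hash of the previous entry, so a lookup plays the role of "comparing content against a trusted database" and any retroactive edit breaks the chain. The class names `LedgerEntry` and `ProvenanceLedger` and the `source` field are hypothetical, chosen only for illustration.

```python
import hashlib
import json
import time
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LedgerEntry:
    content_hash: str  # SHA-256 of the registered original file's bytes
    source: str        # hypothetical field: who published the original
    prev_hash: str     # entry_hash of the previous entry, linking the chain
    timestamp: float
    entry_hash: str = ""

    def compute_hash(self) -> str:
        # Hash every field except entry_hash itself, in a canonical order.
        payload = json.dumps(
            {
                "content_hash": self.content_hash,
                "source": self.source,
                "prev_hash": self.prev_hash,
                "timestamp": self.timestamp,
            },
            sort_keys=True,
        ).encode()
        return hashlib.sha256(payload).hexdigest()


class ProvenanceLedger:
    """Append-only, hash-chained log of original content fingerprints."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[LedgerEntry] = []

    def register(self, data: bytes, source: str) -> LedgerEntry:
        prev = self.entries[-1].entry_hash if self.entries else self.GENESIS
        entry = LedgerEntry(hashlib.sha256(data).hexdigest(), source, prev, time.time())
        entry.entry_hash = entry.compute_hash()
        self.entries.append(entry)
        return entry

    def lookup(self, data: bytes) -> Optional[LedgerEntry]:
        # Exact-match check: any byte-level change yields a different digest.
        digest = hashlib.sha256(data).hexdigest()
        for entry in self.entries:
            if entry.content_hash == digest:
                return entry
        return None

    def is_intact(self) -> bool:
        # Recompute every hash and confirm each entry points at its predecessor.
        prev = self.GENESIS
        for entry in self.entries:
            if entry.prev_hash != prev or entry.compute_hash() != entry.entry_hash:
                return False
            prev = entry.entry_hash
        return True


if __name__ == "__main__":
    ledger = ProvenanceLedger()
    original = b"official campaign video bytes"
    ledger.register(original, "candidate-press-office")
    print(ledger.lookup(original) is not None)                 # True
    print(ledger.lookup(b"doctored video bytes") is not None)  # False
    ledger.entries[0].source = "someone-else"  # tamper with recorded history
    print(ledger.is_intact())                                  # False
```

One design caveat: an exact cryptographic hash only confirms byte-identical copies, so a legitimately re-encoded or resized video would fail the lookup. This is one reason detection tools also rely on perceptual or content-based comparison rather than file hashes alone.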

Policy and Regulation

- Stronger Regulations: Implementing laws that hold platforms accountable for the spread of disinformation. Governments can enforce stricter penalties on social media companies and other digital platforms that fail to take adequate measures to prevent the spread of false information.

- International Cooperation: Countries working together to create a unified front against AI-generated disinformation. This includes sharing intelligence, best practices, and technological advancements to create a global standard for combating disinformation.

Public Awareness

- Media Literacy Campaigns: Educating the public on how to identify fake content. These campaigns can teach individuals how to critically evaluate the information they consume, recognize common signs of disinformation, and verify sources before sharing content.

- Transparency: Promoting transparency in how information is disseminated and consumed. Encouraging media outlets and social media platforms to disclose the origins of their content and the algorithms they use can help build trust and accountability.
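One way a platform could disclose a file's origin in a verifiable form is to attach a signed provenance label that binds the content's fingerprint to statements about where it came from and whether AI was involved. The sketch below assumes a hypothetical label format (`content_sha256`, `origin`, `ai_generated`) and uses HMAC with a shared secret from Python's standard library purely so it runs without extra dependencies; real provenance standards such as C2PA instead use certificate-based public-key signatures, so that anyone can verify a label without holding the signer's secret.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, standing in for a publisher's signing credential.
SIGNING_KEY = b"hypothetical-outlet-secret"


def _canonical(record: dict) -> bytes:
    # Serialize with sorted keys so signer and verifier hash identical bytes.
    return json.dumps(record, sort_keys=True).encode()


def make_label(content: bytes, origin: str, ai_generated: bool, key: bytes) -> dict:
    """Build a provenance label tied to the exact bytes of `content`."""
    record = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "origin": origin,
        "ai_generated": ai_generated,
    }
    record["signature"] = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return record


def verify_label(content: bytes, label: dict, key: bytes) -> bool:
    """Check that the label matches this content and has not been altered."""
    record = {k: v for k, v in label.items() if k != "signature"}
    if record.get("content_sha256") != hashlib.sha256(content).hexdigest():
        return False  # label was made for different content
    expected = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, label.get("signature", ""))


if __name__ == "__main__":
    clip = b"synthetic campaign ad bytes"
    label = make_label(clip, "Example News Desk", True, SIGNING_KEY)
    print(verify_label(clip, label, SIGNING_KEY))  # True
    label["ai_generated"] = False  # someone strips the AI disclosure
    print(verify_label(clip, label, SIGNING_KEY))  # False
```

Because the signature covers the `ai_generated` flag, quietly removing an AI disclosure invalidates the label, which is the accountability property the transparency measure aims for.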

Government and Organizational Responses

Governments and organizations are increasingly recognizing the threat posed by AI-generated disinformation and are taking steps to address it:

- Legislation: Several countries are drafting and implementing laws aimed at curbing the spread of deepfakes and AI-generated disinformation. For example, the European Union’s Digital Services Act includes provisions to hold tech companies accountable for content moderation.

- Task Forces and Committees: Governments are establishing special task forces and committees to study the impact of AI on elections and recommend policies to mitigate its risks. These bodies often include experts in technology, law, and media.

- Public-Private Partnerships: Collaboration between government agencies and private tech companies is essential. Initiatives like the Partnership on AI bring together stakeholders to develop best practices for the ethical use of AI and for combating disinformation.

- Educational Programs: Organizations such as the International Fact-Checking Network are expanding their efforts to educate the public and journalists on identifying and countering disinformation.

Looking Ahead

As elections approach in various parts of the world, it is crucial to remain vigilant about the potential for AI-generated disinformation. As the technology behind AI continues to evolve, so too must our strategies for mitigating its negative impacts. Advances in AI detection, coupled with robust policy frameworks and heightened public awareness, will be key to preserving the integrity of our electoral processes.

Conclusion

The rise of AI-generated disinformation in political campaigns is a pressing issue that demands immediate and sustained attention. By leveraging technology, implementing robust policies, and educating the public, we can work towards safeguarding the integrity of our elections and, by extension, our democracies. The challenge is significant, but with a coordinated and comprehensive approach, it is one that can be effectively addressed.

Further Reading

For more information on how AI is shaping political campaigns and the measures being taken to combat disinformation, visit the following resources: