What is Class Imbalance in Machine Learning?

Class imbalance occurs when one class in a dataset heavily outweighs another. In simple terms, your machine learning algorithm is exposed to many examples from one class (say, spam emails) and very few from another (non-spam).

This imbalance can lead to skewed model predictions.

Imbalanced datasets are common in real-world scenarios, from medical diagnosis to fraud detection. Often, the minority class holds more importance in these cases, but traditional algorithms tend to ignore it.

Why? Because the model can still achieve high accuracy by favoring the majority class.

Why Imbalanced Datasets are Problematic

When dealing with highly imbalanced datasets, the model tends to “overlearn” from the majority class, and accuracy becomes deceptive. If 95% of the samples belong to the majority class, an algorithm can score 95% accuracy while ignoring the minority class entirely. This can be catastrophic in critical applications, like diagnosing diseases or detecting fraud, where minority class predictions matter most.

Traditional metrics like accuracy don’t tell the whole story. Instead, precision, recall, and F1 scores offer better insight into how well your model handles the minority class.
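To make the gap between accuracy and minority-class metrics concrete, here is a minimal sketch in plain Python. The helper name `minority_metrics` and the 95/5 split are assumptions chosen for illustration, not taken from any particular dataset.

```python
def minority_metrics(y_true, y_pred, positive=1):
    """Return accuracy plus precision, recall and F1 for the positive (minority) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)

    accuracy = correct / len(y_true)
    # Report 0.0 when a ratio is undefined (no predicted or no actual positives).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical data: 95 majority samples (0) and 5 minority samples (1).
y_true = [0] * 95 + [1] * 5
# A "model" that always predicts the majority class.
y_pred = [0] * 100

metrics = minority_metrics(y_true, y_pred)
print(metrics)
```

In this example the always-majority predictor reaches 95% accuracy while its recall and F1 on the minority class are both zero.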

The Basics of Boosting Algorithms

Boosting is a popular ensemble technique where multiple weak learners are combined to create a strong classifier. Each weak learner is trained sequentially, with the model focusing on mistakes from the previous round. The end result is a robust model that can handle more complex data patterns.

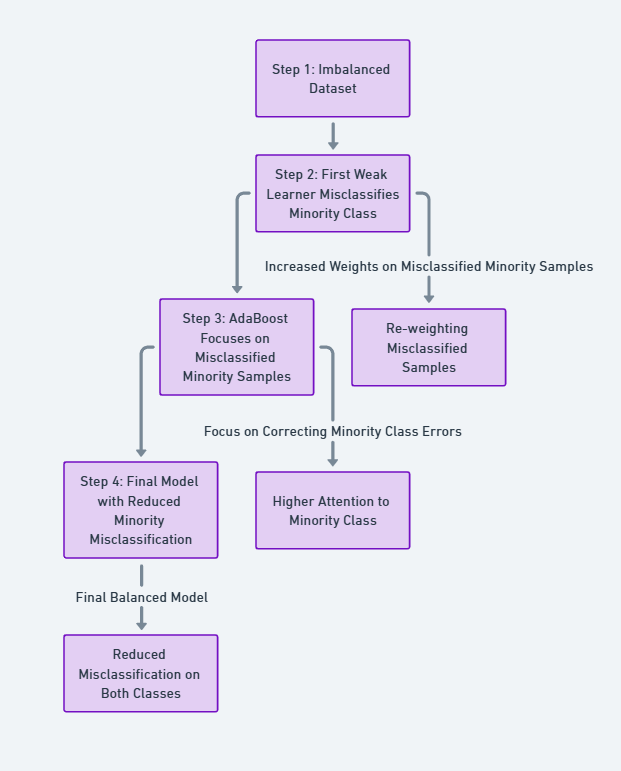

AdaBoost, short for Adaptive Boosting, is one of the most well-known algorithms in this family. The principle behind AdaBoost is simple: increase the weights of the misclassified data points, forcing the model to improve performance on harder-to-classify samples. But can it handle imbalanced datasets?

AdaBoost: A Quick Overview

AdaBoost uses a combination of decision stumps (one-level decision trees) as weak learners. At each stage, it identifies the samples the previous weak learner misclassified and assigns higher weights to them. These weighted errors drive the next weak learner to focus more on these hard-to-classify samples.
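The re-weighting step can be sketched in a few lines of plain Python. This follows the standard discrete AdaBoost update for binary labels; the function name `adaboost_round` and the ten-sample example are assumptions for illustration, and the weak learner itself is left out (only its mistakes are given).

```python
import math


def adaboost_round(weights, misclassified):
    """One discrete AdaBoost re-weighting step.

    weights       -- current sample weights, summing to 1
    misclassified -- booleans, True where the weak learner was wrong
    Returns (alpha, new_weights).
    """
    # Weighted error of the weak learner on the current distribution.
    error = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    if not 0.0 < error < 0.5:
        raise ValueError("weak learner must beat chance and make at least one error")

    # Vote strength of this learner: lower error gives a larger alpha.
    alpha = 0.5 * math.log((1.0 - error) / error)

    # Raise the weights of mistakes, lower the weights of correct predictions.
    raw = [w * math.exp(alpha if wrong else -alpha)
           for w, wrong in zip(weights, misclassified)]
    total = sum(raw)
    return alpha, [w / total for w in raw]


# Hypothetical round: 10 equally weighted samples, the stump misses the last two.
weights = [0.1] * 10
misclassified = [False] * 8 + [True] * 2

alpha, new_weights = adaboost_round(weights, misclassified)
print(round(alpha, 4))
print([round(w, 4) for w in new_weights])
```

Here each misclassified sample moves from a weight of 0.1 to 0.25, while each correctly classified sample drops to 0.0625, so the two mistakes together hold half of the total weight in the next round.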

However, while AdaBoost works well for balanced datasets, class imbalance presents a challenge. The model may still focus too much on the majority class and neglect minority samples.

How AdaBoost Addresses Class Imbalance

AdaBoost has a built-in mechanism to handle misclassified samples, and this is where it shows promise for imbalanced datasets. By automatically increasing the weight of misclassified instances (often from the minority class), AdaBoost can be trained to give more attention to the underrepresented class.

Yet, this isn’t always enough for highly imbalanced data. The minority class might be so small that even after weighting, the model’s overall predictions remain skewed. For this reason, modifications like cost-sensitive learning or hybrid methods are often required to boost performance further.

Handling Minority Class: Key Challenges

The minority class is often the more important class in imbalanced datasets, but that’s exactly what makes it tricky. When there are significantly fewer samples for this class, it becomes difficult for the model to learn meaningful patterns. The model tends to classify new samples as belonging to the majority class since it’s seen far more examples of it during training.

This creates a vicious cycle. The minority class gets overlooked, predictions lean heavily towards the majority, and critical events—such as fraudulent transactions or rare diseases—are missed. Addressing these challenges is crucial if we want models that are both accurate and equitable.

Weighted Sampling in AdaBoost

To handle this, AdaBoost can be combined with class-aware sample weighting. In imbalanced datasets, minority class samples can be assigned higher initial weights. As the algorithm then adjusts the importance of each misclassified sample, underrepresented examples that keep being misclassified gain further influence. Essentially, these underrepresented examples receive more attention during each iteration of boosting, forcing the algorithm to learn from them more carefully.

In cases where class imbalance is severe, adjusting the weight manually to ensure minority class samples are adequately represented can make all the difference. But, even this tactic has limitations, so further enhancement techniques might be required.
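One simple way to set weights manually is to give each class the same total weight before boosting starts. The sketch below assumes that scheme; the helper `balanced_initial_weights` is hypothetical. Weights of this kind could then be passed as initial sample weights, for example through the `sample_weight` argument that scikit-learn’s `AdaBoostClassifier.fit` accepts.

```python
from collections import Counter


def balanced_initial_weights(labels):
    """Give every class the same total weight, split evenly among its samples."""
    counts = Counter(labels)
    n_classes = len(counts)
    return [1.0 / (n_classes * counts[label]) for label in labels]


# Hypothetical labels: 8 majority samples (0) and 2 minority samples (1).
labels = [0] * 8 + [1] * 2
weights = balanced_initial_weights(labels)

majority_total = sum(w for w, y in zip(weights, labels) if y == 0)
minority_total = sum(w for w, y in zip(weights, labels) if y == 1)
print(weights[0], weights[-1])
print(majority_total, minority_total)
```

With this 8:2 split, each majority sample starts at 0.0625 and each minority sample at 0.25, so both classes carry a total weight of 0.5.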

Combining AdaBoost with SMOTE for Better Results

While AdaBoost is a powerful algorithm on its own, pairing it with SMOTE (Synthetic Minority Over-sampling Technique) often yields better results on imbalanced datasets. SMOTE works by creating synthetic instances of the minority class, essentially amplifying its presence in the dataset.

When these generated samples are fed into AdaBoost, the algorithm has more minority examples to work with, reducing the bias toward the majority class. This hybrid approach often improves recall on the minority class without needing major alterations to AdaBoost’s framework.
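The core idea behind SMOTE, interpolating between a minority sample and one of its nearest minority neighbours, can be sketched as follows. This is a simplified illustration, not the imbalanced-learn implementation; the function name `smote_sketch`, its parameters, and the four example points are assumptions. In practice the synthetic samples would be generated from the training split only and then passed to AdaBoost together with the original data.

```python
import math
import random


def smote_sketch(minority, n_synthetic, k=3, seed=0):
    """Create synthetic minority points by interpolating between neighbours.

    minority    -- list of feature tuples from the minority class (at least two)
    n_synthetic -- number of synthetic points to generate
    k           -- number of nearest minority neighbours to choose from
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # Rank the other minority points by Euclidean distance to the base point.
        others = sorted((p for p in minority if p is not base),
                        key=lambda p: math.dist(base, p))
        neighbour = rng.choice(others[:k])
        # Place the new point at a random position on the segment base -> neighbour.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Hypothetical minority samples with two features each.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_sketch(minority, n_synthetic=6)
print(len(new_points))
```

Because every synthetic point lies on a line segment between two real minority samples, the new data stays inside the region the minority class already occupies.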

Modifying AdaBoost: Cost-Sensitive Learning

Another strategy to combat class imbalance is cost-sensitive learning. In this variation, a higher “cost” is assigned to misclassifying a minority class sample compared to a majority class one. AdaBoost can be modified to account for these higher misclassification costs during training. By doing so, the model is forced to minimize errors on the minority class, ensuring it doesn’t prioritize majority class performance.

This technique pushes the algorithm to treat misclassifying rare events as more serious than misclassifying common ones, making it particularly useful in fraud detection or healthcare applications.
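As a rough illustration of the idea, the sketch below scales the weight increase of each misclassified sample by a per-sample cost. It is a simplified assumption for this article, not a faithful implementation of any published variant (methods such as AdaCost or AdaC2 differ in the details); the function name and the 5:1 cost ratio are hypothetical.

```python
import math


def cost_sensitive_round(weights, misclassified, costs):
    """Re-weight samples, scaling each mistake by its misclassification cost.

    costs -- per-sample cost, e.g. larger for minority-class samples
    """
    error = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    if not 0.0 < error < 0.5:
        raise ValueError("weak learner must beat chance and make at least one error")
    alpha = 0.5 * math.log((1.0 - error) / error)

    raw = []
    for w, wrong, cost in zip(weights, misclassified, costs):
        if wrong:
            raw.append(cost * w * math.exp(alpha))  # costly mistakes grow faster
        else:
            raw.append(w * math.exp(-alpha))
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical data: 8 majority samples (0) followed by 2 minority samples (1).
labels = [0] * 8 + [1] * 2
weights = [0.1] * 10
# The weak learner misses one majority sample (index 0) and one minority sample (index 9).
misclassified = [True] + [False] * 8 + [True]
# Assumed costs: a minority mistake is five times as costly as a majority one.
costs = [5.0 if y == 1 else 1.0 for y in labels]

new_weights = cost_sensitive_round(weights, misclassified, costs)
print([round(w, 4) for w in new_weights])
```

Both mistakes gain weight, but the misclassified minority sample ends up at 0.625 compared with 0.125 for the misclassified majority sample, so the next weak learner is pushed hardest toward the costly error.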

Evaluation Metrics: Gauging AdaBoost Performance on Imbalanced Data

One of the biggest pitfalls in handling imbalanced data is over-reliance on accuracy as a metric. It’s tempting to assume a model that achieves 90% accuracy is performing well, but for imbalanced datasets, this can be misleading. If the majority class dominates the dataset, a model might achieve high accuracy simply by predicting everything as belonging to that class.

To properly evaluate AdaBoost’s performance, use precision, recall, and F1-score computed for the minority class. These metrics focus specifically on the model’s ability to correctly classify the minority class, giving a better sense of how well AdaBoost is addressing the imbalance. ROC curves and AUC (Area Under the Curve) scores are also helpful: the ROC curve plots the true positive rate against the false positive rate across decision thresholds, and AUC summarizes it in a single number.
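AUC has a convenient interpretation: it is the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition; the function name `roc_auc` and the four example scores are assumptions for illustration, and the pairwise loop is only practical for small datasets.

```python
def roc_auc(y_true, scores):
    """AUC as the chance that a random positive outranks a random negative."""
    positives = [s for y, s in zip(y_true, scores) if y == 1]
    negatives = [s for y, s in zip(y_true, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one sample of each class")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical labels and model scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(y_true, scores)
print(auc)  # 0.75: three of the four positive/negative pairs are ranked correctly
```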

Avoiding Overfitting in Imbalanced Datasets

One of the biggest risks when using AdaBoost with imbalanced datasets is overfitting. Because AdaBoost increases the weights of misclassified instances, it can sometimes focus too intensely on specific, hard-to-classify samples, especially in cases where there’s heavy class imbalance. This can lead to the model learning noise in the data rather than meaningful patterns.

To avoid overfitting, regularization techniques can be applied. Limiting the number of weak learners or adjusting learning rates can help the model generalize better without becoming too focused on anomalies or rare patterns in the minority class. Cross-validation is also essential for ensuring the model performs well across various data subsets.
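With very few minority samples, plain random folds can end up with no minority examples at all, which makes validation scores meaningless. A common remedy, assumed here rather than stated above, is stratified splitting. The sketch below deals each class’s samples round-robin into folds; `stratified_folds` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=5, seed=0):
    """Split sample indices into folds that keep each class's share roughly equal."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class's samples round-robin so every fold gets its share.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


# Hypothetical labels: 45 majority samples (0) and 5 minority samples (1).
labels = [0] * 45 + [1] * 5
folds = stratified_folds(labels, n_folds=5)
for fold in folds:
    minority_count = sum(labels[i] for i in fold)
    print(len(fold), minority_count)
```

In this example every fold holds 10 samples, exactly one of which belongs to the minority class.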

Comparison of AdaBoost with Other Imbalance Techniques

AdaBoost isn’t the only algorithm designed to tackle class imbalance, so how does it stack up against other methods?

- Random Forests: Random Forests build many trees on bootstrap samples of the data, but they have no built-in mechanism for class imbalance and lack the focus on hard-to-classify samples that AdaBoost offers. However, they tend to be more robust when the dataset is noisy, reducing the risk of overfitting.

- SMOTE: As mentioned earlier, SMOTE is often combined with other algorithms, including AdaBoost, to handle extreme imbalances. SMOTE is a resampling step rather than a classifier, so on its own it lacks the adaptive learning process of AdaBoost and always needs to be paired with a model.

- XGBoost: An optimized gradient boosting implementation, XGBoost incorporates regularization directly and often performs better than AdaBoost on imbalanced datasets, thanks to its regularized trees and tunable learning rate. It’s considered a more flexible tool in many cases.

A comparison of AdaBoost and other common techniques used to handle class imbalance:

| Method | Description | Advantages | Disadvantages | Best Use Cases |
|---|---|---|---|---|
| AdaBoost | A boosting algorithm that focuses on misclassified instances by adjusting their weights in each iteration | Naturally emphasizes hard-to-classify samples, including minority class; easy to implement and interpret | Prone to overfitting on noisy data; struggles with severe imbalance without modification | Small to medium-sized datasets; cases where interpretability matters (e.g., fraud detection) |
| Random Forest | Ensemble learning technique using multiple decision trees, with random feature selection at each split | Handles noisy data well; robust and less prone to overfitting than AdaBoost | Lacks a focus on hard-to-classify samples like AdaBoost; doesn’t naturally address imbalance issues | General classification problems; datasets where noise is prevalent |
| SMOTE (Synthetic Minority Over-sampling Technique) | Oversampling technique that creates synthetic samples for the minority class | Increases the presence of the minority class; reduces overfitting caused by simple oversampling | Can create unrealistic or redundant data; doesn’t address majority class issues | Works well with AdaBoost and other algorithms; datasets with extreme imbalance |
| Cost-Sensitive Learning | Algorithm is modified to assign a higher cost to misclassifying minority class samples | Prioritizes correct classification of minority class; flexible and adaptable to different algorithms | More complex to implement; needs careful tuning of cost values | Situations where misclassifying the minority class is highly problematic (e.g., medical diagnosis) |
| XGBoost | An optimized boosting algorithm with built-in regularization | More robust to overfitting than AdaBoost; handles complex, large-scale datasets well | Computationally expensive; requires more hyperparameter tuning | Large, high-dimensional datasets; cases where performance is more important than interpretability (e.g., predictive maintenance) |
| Undersampling | Reduces the size of the majority class by randomly selecting fewer samples | Simple to implement; helps balance the dataset without adding synthetic data | Can discard valuable information from the majority class; not suitable for extremely imbalanced datasets | Small datasets where reducing sample size won’t cause significant information loss |
| Deep Learning | Neural networks that can learn complex representations of data | Can automatically learn complex patterns; effective on large datasets | Needs a lot of data and tuning to handle imbalanced datasets; overfitting is common on small datasets | Large-scale problems like image recognition and text classification, and cases where deep learning is preferred |

Overall, AdaBoost remains a strong contender when paired with sampling techniques or cost-sensitive approaches, particularly for imbalanced classification tasks on simpler, structured datasets.

Real-World Applications of AdaBoost in Imbalanced Data

AdaBoost has been successfully applied in several real-world scenarios involving imbalanced datasets. In medical diagnostics, for instance, models often need to identify rare but critical conditions, such as specific types of cancer. By focusing on misclassified instances, AdaBoost helps ensure that these rare cases receive the attention they deserve.

Similarly, in fraud detection, where fraudulent transactions are few and far between, AdaBoost can be used to home in on these rare events. By emphasizing errors in classifying fraudulent cases, it trains the model to better recognize unusual patterns that might indicate fraud.

The algorithm has also found use in predictive maintenance in industrial settings, where failures are rare but expensive, and in detecting network intrusions, where false positives need to be minimized while still catching threats.

Pros and Cons of Using AdaBoost for Class Imbalance

Pros:

- AdaBoost naturally increases the weight of hard-to-classify instances, making it a good fit for class imbalance.

- The sequential learning process allows the model to adapt to challenging patterns in the data.

- When combined with techniques like SMOTE or cost-sensitive learning, AdaBoost’s performance on imbalanced datasets improves significantly.

Cons:

- AdaBoost is prone to overfitting, especially on noisy data.

- It struggles with extremely imbalanced datasets unless modified or paired with other techniques.

- The algorithm’s reliance on weak learners (usually decision stumps) can limit its performance on more complex problems, especially when compared to newer methods like XGBoost.

Fine-Tuning AdaBoost for Imbalanced Datasets

Fine-tuning AdaBoost for imbalanced datasets often requires adjustments in both the model parameters and the data preparation process. Here are a few strategies to improve performance:

- Adjusting the Learning Rate: Lowering AdaBoost’s learning rate can help prevent overfitting by ensuring that each weak learner contributes less to the final model. This allows the algorithm to correct errors without becoming too sensitive to small fluctuations in the data.

- Limiting the Number of Weak Learners: While adding more weak learners can improve performance, too many can lead to overfitting. For imbalanced datasets, it’s often better to stop boosting earlier, ensuring the model remains generalizable.

- Rebalancing the Dataset: Applying techniques like undersampling the majority class or oversampling the minority class (as with SMOTE) can give AdaBoost a more balanced training set, allowing it to learn better patterns from the minority class.

By carefully tuning these parameters, AdaBoost can become a powerful tool for handling imbalanced datasets, especially when classifying critical but rare events.
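To see why a lower learning rate softens each boosting step, the sketch below computes how much of the total weight lands on misclassified samples after one update. It assumes the learning rate simply scales each learner’s vote (alpha) in the standard discrete AdaBoost update; the function name is hypothetical.

```python
import math


def misclassified_mass_after_update(error, learning_rate):
    """Share of total weight held by misclassified samples after one update.

    error         -- weighted error of the weak learner (0 < error < 0.5)
    learning_rate -- shrinkage factor applied to the learner's vote (alpha)
    """
    alpha = learning_rate * 0.5 * math.log((1.0 - error) / error)
    wrong = error * math.exp(alpha)           # mass on mistakes, scaled up
    right = (1.0 - error) * math.exp(-alpha)  # mass on correct samples, scaled down
    return wrong / (wrong + right)


# Hypothetical weak learner with a weighted error of 0.2.
for rate in (1.0, 0.5, 0.1):
    print(rate, round(misclassified_mass_after_update(0.2, rate), 3))
```

With a learning rate of 1.0 the misclassified samples jump from 20% to 50% of the total weight; at 0.5 they rise only to about 33%, so a few hard (possibly noisy) samples dominate the next round less.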

When to Choose AdaBoost Over Other Techniques

While AdaBoost is a versatile and powerful tool for tackling imbalanced datasets, it isn’t always the best choice. When should you choose AdaBoost over other methods?

- When simplicity is key: AdaBoost is relatively easy to implement and interpret. Unlike more complex algorithms like XGBoost, it doesn’t require extensive hyperparameter tuning.

- When your dataset isn’t too large: AdaBoost works best with smaller to moderately sized datasets. With large datasets, its sequential learning process can become computationally expensive.

- When you’re working with structured data: AdaBoost performs well with structured data, especially when there’s a clear distinction between classes that the model can focus on.

However, in scenarios involving large datasets, unstructured data (like text or images), or extreme class imbalance, alternative methods like XGBoost or deep learning techniques might offer better performance.

Future Directions: AdaBoost in Deep Learning Context

As the landscape of machine learning evolves, there’s growing interest in how boosting algorithms like AdaBoost can be integrated with deep learning models. Traditionally, AdaBoost has been associated with decision trees or simpler classifiers, but new research is exploring how boosting can complement neural networks. This fusion could be particularly useful in addressing imbalanced datasets, where deep learning sometimes struggles to balance class representation.

One approach is to use AdaBoost as a pre-training phase, helping neural networks focus on difficult examples early in the learning process. Another idea is to develop hybrid models where AdaBoost refines the outputs of a deep network, correcting errors in underrepresented classes. These new techniques could help build models that combine the strengths of deep learning with the adaptability and precision of boosting.

Moreover, ensemble learning methods are gaining traction in handling massive datasets with high complexity and imbalance. Boosting could potentially play a role in creating more balanced, reliable deep learning systems by refining how neural networks process minority class instances, especially in fields like image recognition, natural language processing, and large-scale fraud detection.

Looking ahead, AdaBoost’s integration into deep learning frameworks might open up new ways to handle class imbalance, especially as AI systems tackle increasingly complex real-world problems. It’s an exciting direction for anyone grappling with imbalanced datasets in both classical machine learning and cutting-edge AI applications.

Resources

- AdaBoost Overview
  - AdaBoost’s original paper by Yoav Freund and Robert Schapire: AdaBoost paper (1996). This is the foundational paper that introduced AdaBoost and explained how the algorithm works.

- Imbalanced Datasets
  - Imbalanced-learn library documentation: imbalanced-learn Documentation. This Python library offers various techniques to handle class imbalance, such as SMOTE and undersampling methods.

- Boosting in Machine Learning
  - Boosting Algorithms in Machine Learning by Towards Data Science: Towards Data Science: Boosting Algorithms. A comprehensive blog that explains AdaBoost, Gradient Boosting, and XGBoost.

- Synthetic Minority Over-sampling Technique (SMOTE)
  - Original SMOTE Paper: SMOTE: Synthetic Minority Over-sampling Technique. The paper that introduced SMOTE, a popular technique for creating synthetic samples to combat class imbalance.

- Evaluation Metrics for Imbalanced Datasets
  - Metrics for Imbalanced Datasets: Precision, Recall, and F1 Score Explained. A blog post explaining how metrics like Precision, Recall, and F1-score are essential when working with imbalanced datasets.