In reinforcement learning, Q-learning is a popular method used to train agents to make decisions that maximize cumulative rewards over time. A critical part of Q-learning—and, by extension, any reinforcement learning algorithm—is the balance between exploration and exploitation.

This article explores the mechanisms that help achieve this balance, focusing on adaptive exploration strategies that allow the Q-learning agent to learn efficiently and effectively.

Understanding Exploration vs. Exploitation in Q-Learning

What Is Exploration in Q-Learning?

In the context of Q-learning, exploration refers to an agent’s attempts to try new actions rather than always picking those that seem optimal at a given time. Exploration helps the agent discover previously unknown strategies or areas within its environment, which is especially crucial in the early stages of training when it has limited knowledge.

Why exploration matters:

- Prevents premature convergence to suboptimal solutions

- Encourages broader understanding of the environment

- Reduces the risk of overfitting to known actions

Exploitation: Leveraging Known Information

On the flip side, exploitation refers to choosing the best-known action based on the current Q-values, which estimate the expected cumulative reward of taking each action in each state. By exploiting, the agent cashes in on what it has already learned instead of gathering new information.

Exploitation benefits:

- Maximizes rewards in well-understood areas of the environment

- Enhances performance consistency

- Useful in later training stages when optimal paths are better understood
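To make the Q-values concrete, here is a minimal tabular sketch that assumes discrete, hashable states and a small list of actions. It stores $Q(s,a)$ in nested dictionaries, applies the standard Q-learning update $Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]$, and exploits by picking the highest-valued action. The function names and the default learning rate `alpha` and discount `gamma` are illustrative choices, not part of any library.

```python
from collections import defaultdict


def make_q_table():
    """Q-table mapping state -> {action: value}; unseen entries default to 0.0."""
    return defaultdict(lambda: defaultdict(float))


def greedy_action(q, state, actions):
    """Exploit: return the action with the highest current Q-value (ties go to the first listed)."""
    return max(actions, key=lambda a: q[state][a])


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.99, done=False):
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    if done:
        target = reward  # no future value after a terminal transition
    else:
        target = reward + gamma * max(q[next_state][a] for a in actions)
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# Usage: one terminal step rewards "right" in state 0, so exploitation now prefers it.
q = make_q_table()
actions = ["left", "right"]
q_update(q, 0, "right", reward=1.0, next_state=1, actions=actions, alpha=0.5, done=True)
print(greedy_action(q, 0, actions))  # right
```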

Balancing exploration and exploitation is fundamental for learning agents to perform optimally in their environment. Too much exploration leads to excessive trial-and-error, while too much exploitation can trap the agent in local optima.

The table below contrasts exploration and exploitation in Q-learning:

| Aspect | Exploration | Exploitation |
|---|---|---|
| Key characteristics | Encourages novelty by trying new actions; prioritizes discovering potentially valuable states and actions; helps build a complete knowledge of the environment | Focuses on known, high-reward actions; prioritizes maximizing immediate rewards; relies on existing knowledge to make decisions |
| Benefits | Helps avoid local optima by finding alternative actions; provides a broader understanding of the action space and environment | Increases efficiency by focusing on the best-known actions; directly contributes to maximizing cumulative rewards |
| Drawbacks | Can delay convergence, as many actions may lead to suboptimal outcomes; risks exploring unprofitable paths | Risks getting “stuck” on suboptimal actions that yield higher immediate rewards; misses potentially better actions not yet explored |
| Best suited to | Early stages of learning, to build a robust action-value map | Later stages, to capitalize on known actions for high cumulative rewards |

Classic Exploration Strategies in Q-Learning

Epsilon-Greedy Strategy

The epsilon-greedy strategy is one of the simplest approaches for balancing exploration and exploitation. With probability $\epsilon$ the agent explores by picking a random action, and with probability $1 - \epsilon$ it exploits the best-known action.

- Dynamic Epsilon: In many applications, the epsilon value is reduced over time (decay), allowing the agent to explore more at the beginning and exploit as it becomes more knowledgeable.
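A minimal sketch of epsilon-greedy selection with an exponentially decaying epsilon, assuming the Q-values for the current state arrive as a list indexed by action. The decay form and the constants `eps_start`, `eps_min`, and `decay` are illustrative assumptions; linear decay is an equally common choice.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a uniformly random action index, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore (may still land on the greedy action)
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit


def decayed_epsilon(episode, eps_start=1.0, eps_min=0.05, decay=0.995):
    """Exponential decay schedule that never goes below eps_min."""
    return max(eps_min, eps_start * decay ** episode)


# Usage: epsilon starts at 1.0 (pure exploration) and shrinks toward the 0.05 floor.
rng = random.Random(0)
schedule = [round(decayed_epsilon(ep), 3) for ep in (0, 100, 1000)]
action = epsilon_greedy([0.1, 0.9, 0.3], decayed_epsilon(100), rng)
```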

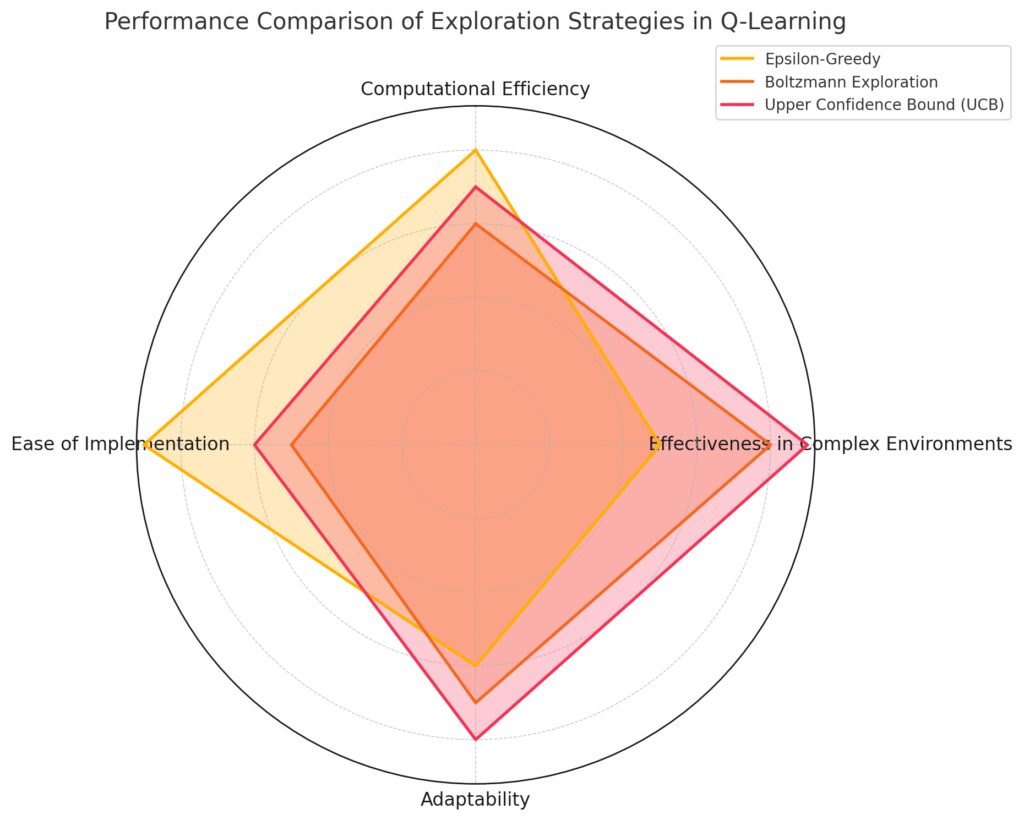

The chart compares epsilon-greedy, Boltzmann exploration, and Upper Confidence Bound (UCB) on four metrics: computational efficiency, effectiveness in complex environments, adaptability, and ease of implementation.

- Epsilon-greedy: high in ease of implementation and computational efficiency.

- Boltzmann exploration: excels in adaptability and effectiveness in complex environments.

- UCB: strong in adaptability and complex environments, with moderate efficiency.

Each method has distinct strengths, so the right choice depends on which of these properties matters most for the task.

Boltzmann Exploration

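Boltzmann exploration introduces temperature-based randomization: actions are sampled from a softmax distribution over their Q-values, $P(a \mid s) = \frac{\exp(Q(s,a)/\tau)}{\sum_{b} \exp(Q(s,b)/\tau)}$, where the temperature $\tau > 0$ controls how random the choice is. A high $\tau$ makes all actions nearly equally likely, while a low $\tau$ makes the choice nearly greedy. Actions with higher Q-values are therefore more likely to be chosen but never guaranteed, which keeps a degree of exploration in play.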

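Why Boltzmann? It lets the agent consider every action while favoring those with higher estimated values. Epsilon-greedy, when it explores, chooses uniformly among all actions, including clearly poor ones; Boltzmann exploration instead spends most of its exploratory choices on actions that look nearly as good as the best, offering a more nuanced balance.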
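A minimal sketch of Boltzmann (softmax) action selection using only the Python standard library. The `temperature` argument plays the role of $\tau$ above; how to set or anneal it is left open here and would be an assumption of any concrete implementation.

```python
import math
import random


def boltzmann_probs(q_values, temperature):
    """Softmax over Q-values: P(a) is proportional to exp(Q(a) / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    best = max(q_values)  # subtracting the max avoids overflow without changing the result
    weights = [math.exp((q - best) / temperature) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]


def boltzmann_action(q_values, temperature, rng=random):
    """Sample an action index from the Boltzmann (softmax) distribution."""
    probs = boltzmann_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


# Usage: the same Q-values give near-uniform choices when hot and near-greedy ones when cold.
hot = boltzmann_probs([1.0, 2.0, 3.0], temperature=100.0)
cold = boltzmann_probs([1.0, 2.0, 3.0], temperature=0.1)
```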

Upper Confidence Bound (UCB)

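The Upper Confidence Bound (UCB) method, commonly used in multi-armed bandits, can also be applied to Q-learning. It balances exploration and exploitation by adding an uncertainty bonus to each action's value estimate. A common adaptation of the bandit formula picks $\arg\max_a \left[ Q(s,a) + c \sqrt{\ln N(s) / N(s,a)} \right]$, where $N(s)$ counts visits to state $s$, $N(s,a)$ counts how often action $a$ was taken there, and $c > 0$ sets the strength of exploration. The bonus is large for rarely tried actions and shrinks as they are tried, so the agent is drawn toward actions whose value is still uncertain.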

Key advantages:

- Systematically encourages exploration of lesser-known actions

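- Well suited to problems with many discrete actions; because the standard bonus assumes rewards do not drift over time, rapidly changing environments usually call for variants that discount or window older observations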
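A minimal sketch of the UCB rule above for a single state, assuming the agent keeps per-state visit counts alongside its Q-table. The function name and the exploration constant `c=2.0` are illustrative; `c` would normally be tuned.

```python
import math


def ucb_action(q_values, counts, state_visits, c=2.0):
    """Return argmax_a Q(s,a) + c * sqrt(ln N(s) / N(s,a)); untried actions are picked first.

    q_values[a] is Q(s, a), counts[a] is N(s, a), and state_visits is N(s).
    """
    for action, n in enumerate(counts):
        if n == 0:
            return action  # an untried action has an unbounded bonus
    log_n = math.log(state_visits)
    scores = [q + c * math.sqrt(log_n / n) for q, n in zip(q_values, counts)]
    return max(range(len(scores)), key=scores.__getitem__)


# Usage: a slightly worse but rarely tried action wins on its exploration bonus.
print(ucb_action([1.0, 0.9], counts=[100, 1], state_visits=101))  # 1
```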

Adaptive Exploration Strategies for Dynamic Environments

Adaptive Epsilon Decay

In adaptive epsilon decay, the decay rate of epsilon is adjusted based on the agent’s performance and knowledge. If the agent’s performance is improving rapidly, epsilon can decay faster, leading to more exploitation. If the agent is struggling or frequently encountering new situations, epsilon decays more slowly to allow for additional exploration.

Benefits:

- Flexible: Adapts to the learning needs of the agent

- Efficient: Spends exploration where it is likely to pay off rather than on a fixed schedule
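The text does not prescribe a specific adaptation rule, so the following is a hypothetical sketch of one. It compares the mean episode return over the latest window with the window before it, decays epsilon quickly (`fast_decay`) when returns are improving, and decays it slowly (`slow_decay`) otherwise. The class name, window size, and decay factors are all assumptions made for illustration.

```python
from collections import deque


class AdaptiveEpsilon:
    """Hypothetical performance-driven epsilon schedule (one of many possible rules).

    If the mean return of the latest window beats the previous window's mean,
    epsilon shrinks by fast_decay; otherwise it shrinks by the gentler slow_decay.
    """

    def __init__(self, eps=1.0, eps_min=0.05, fast_decay=0.95, slow_decay=0.995, window=10):
        self.eps = eps
        self.eps_min = eps_min
        self.fast_decay = fast_decay
        self.slow_decay = slow_decay
        self.window = window
        self.returns = deque(maxlen=2 * window)  # the two most recent windows of returns

    def update(self, episode_return):
        """Record one episode's return and return the new epsilon."""
        self.returns.append(episode_return)
        decay = self.slow_decay
        if len(self.returns) == 2 * self.window:
            history = list(self.returns)
            previous = sum(history[: self.window]) / self.window
            recent = sum(history[self.window:]) / self.window
            if recent > previous:  # performance is improving: exploit sooner
                decay = self.fast_decay
        self.eps = max(self.eps_min, self.eps * decay)
        return self.eps


# Usage: an improving learner sheds exploration faster than a stagnant one.
improving, stagnant = AdaptiveEpsilon(), AdaptiveEpsilon()
for episode in range(50):
    improving.update(float(episode))  # returns keep rising
    stagnant.update(1.0)              # returns stay flat
```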

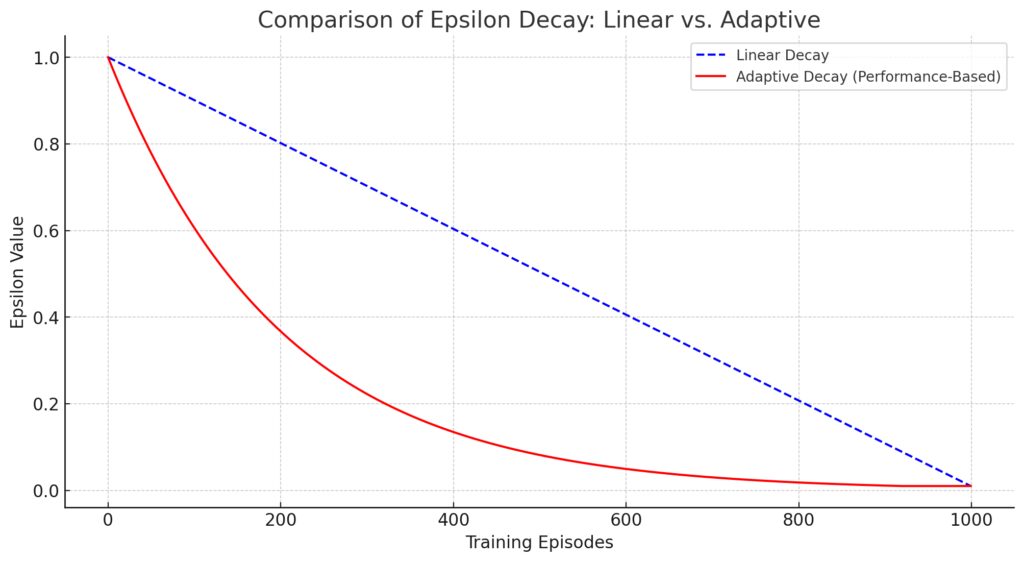

The figure compares two epsilon schedules:

- Linear decay: epsilon falls along a straight line, steadily lowering exploration intensity.

- Adaptive decay: epsilon falls faster in the early stages, driven by simulated performance improvements, then levels off as it approaches the minimum value.

The comparison illustrates how adaptive decay can speed up convergence by reducing exploration sooner when the agent is performing well, while leaving room for further adjustment.

Q-Value Uncertainty-Based Exploration

This method considers the uncertainty in Q-values to inform exploration. Actions with high Q-value uncertainty (often calculated by estimating a confidence interval) are explored more often. This approach is especially useful in environments where certain actions yield rewards inconsistently.

Advantages:

- Focused exploration: Targets areas where the agent lacks confidence

- Faster learning: Reduces redundant exploration of well-understood actions
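A minimal sketch of uncertainty-based selection, assuming the agent records the return it observes after each action in a given state. It keeps a running mean and variance per action (Welford's algorithm) and picks the action with the highest mean plus `beta` standard errors, a simple upper confidence interval. The class and function names and `beta` are illustrative. In full Q-learning the observed targets drift as the Q-table changes, which this sketch ignores.

```python
import math


class ActionStats:
    """Running mean and variance of observed returns for one action (Welford's algorithm)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std_error(self):
        if self.n < 2:
            return float("inf")  # too few samples to estimate spread
        variance = self.m2 / (self.n - 1)
        return math.sqrt(variance / self.n)


def uncertainty_action(stats, beta=1.0):
    """Sample under-observed actions first, then prefer the highest mean + beta * std error."""
    for action, s in enumerate(stats):
        if s.n < 2:
            return action
    scores = [s.mean + beta * s.std_error() for s in stats]
    return max(range(len(scores)), key=scores.__getitem__)


# Usage: equal average payoff, but the erratic action is still uncertain, so it is explored.
steady, erratic = ActionStats(), ActionStats()
for i in range(20):
    steady.add(1.0)
    erratic.add(0.0 if i % 2 else 2.0)
print(uncertainty_action([steady, erratic]))  # 1
```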

Exploration with Intrinsic Rewards

Incorporating intrinsic rewards encourages the agent to explore novel areas, regardless of external rewards. An agent might receive a small intrinsic reward for visiting a new state or trying a rarely used action. This approach is particularly valuable in sparse reward environments where external feedback is limited.

Pros:

- Novelty-seeking behavior: Drives the agent to explore uncharted territory

- Enhances long-term learning: Useful in complex tasks where immediate rewards are rare
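A minimal sketch of the simplest form of intrinsic reward described above: a fixed bonus the first time a state is visited, added to the external reward before the Q-learning update. The function name and the bonus size `bonus=0.1` are illustrative assumptions; count-based bonuses and curiosity-driven prediction errors, covered below, are graded alternatives to this one-off bonus.

```python
def with_novelty_bonus(extrinsic_reward, next_state, visited, bonus=0.1):
    """Return the external reward plus a one-off intrinsic bonus for a never-seen state.

    `visited` is a set of states seen so far; it is updated in place.
    """
    intrinsic = bonus if next_state not in visited else 0.0
    visited.add(next_state)
    return extrinsic_reward + intrinsic


# Usage: the first arrival in a state is rewarded even when the environment pays nothing.
visited = set()
print(with_novelty_bonus(0.0, "room_A", visited))  # 0.1
print(with_novelty_bonus(0.0, "room_A", visited))  # 0.0
```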

Count-Based Exploration

Count-based exploration maintains a count of how often each state-action pair has been visited. Actions or states that are visited less frequently receive a bonus, encouraging the agent to explore them more.

Advantages:

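- Systematic exploration: Promotes even, thorough coverage of the environment

- Effective in complex tasks: Useful in tasks with many states and actions, although in very large or continuous state spaces exact counts become impractical and are usually replaced by approximate counts (for example, by hashing similar states together)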
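A minimal sketch of a count-based bonus, assuming discrete, hashable states and actions. The bonus $\beta / \sqrt{N(s,a)}$ is one common choice of decay; the class name and the `beta` value are illustrative.

```python
import math
from collections import Counter


class CountBonus:
    """Count-based exploration bonus beta / sqrt(N(s, a)), added to the extrinsic reward."""

    def __init__(self, beta=0.1):
        self.beta = beta
        self.counts = Counter()  # N(s, a) for every visited state-action pair

    def reward(self, state, action, extrinsic_reward):
        """Count this visit and return the reward augmented by the shrinking bonus."""
        self.counts[(state, action)] += 1
        bonus = self.beta / math.sqrt(self.counts[(state, action)])
        return extrinsic_reward + bonus


# Usage: repeated visits to the same pair earn a smaller and smaller bonus.
counter = CountBonus(beta=1.0)
shaped = [counter.reward("s0", "up", 0.0) for _ in range(4)]
```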

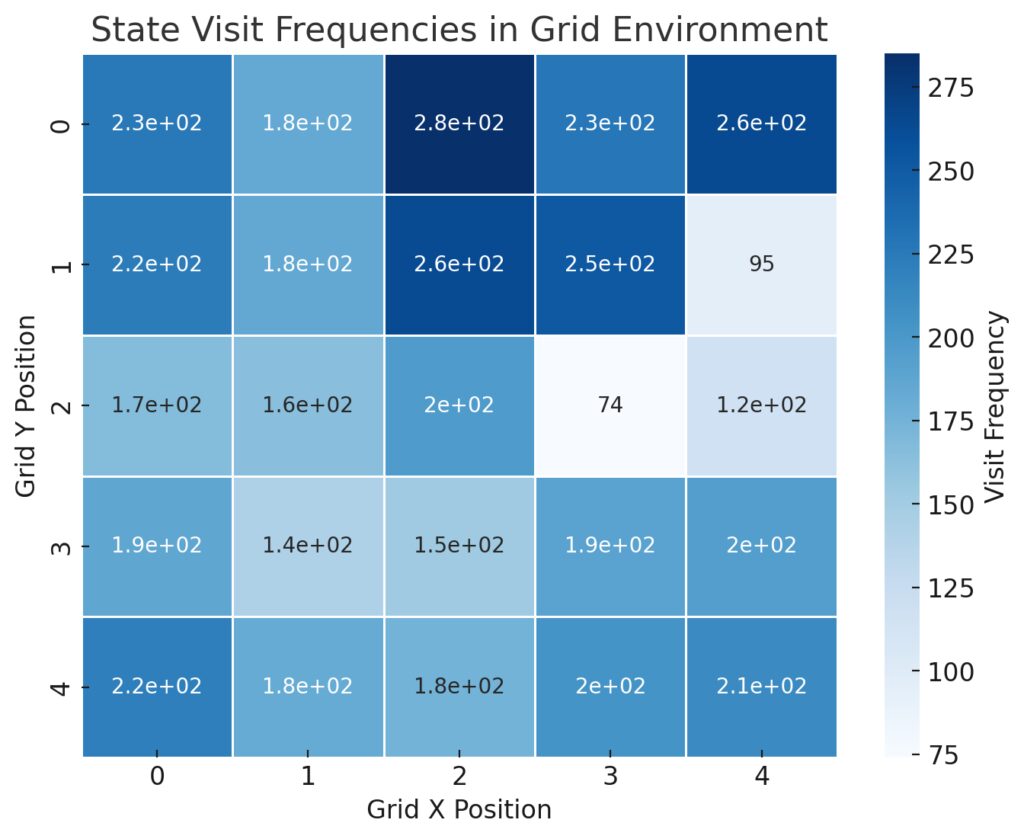

Color Gradient: Darker blues represent higher visit counts, while lighter shades indicate less-frequented states.

Annotations: Each cell shows the total visit frequency for that state, combining all actions.

This visualization highlights which states are most explored, assisting in identifying regions that may need more or less exploration for balanced state-action coverage.

Implementing Adaptive Exploration in Real-World Q-Learning Applications

Games and Simulations

In environments like games and simulations, adaptive exploration strategies can significantly enhance an agent’s performance. For example, using intrinsic rewards in exploration encourages the agent to discover efficient gameplay strategies. Additionally, Boltzmann exploration can help agents deal with dynamic game environments where different actions may yield variable results.

Robotics and Autonomous Navigation

In robotics, adaptive epsilon decay is valuable because exploration is often resource-intensive and can involve risks. By allowing the robot to adjust its exploration based on learning progress, this strategy saves both time and energy. UCB and count-based methods also fit well in robotics, helping the agent explore uncertain terrain in a structured manner.

Financial Markets

Q-learning agents in financial markets benefit from uncertainty-based exploration, as the environment is volatile and rewards are not always consistent. This approach helps agents navigate uncertainty by exploring actions with unclear outcomes, which can lead to a better understanding of complex market trends.

This blend of adaptive exploration strategies helps Q-learning agents learn efficiently in diverse, real-world scenarios. The right combination depends on the agent’s goals and the nature of the environment; no single strategy is best everywhere, but a well-chosen balance between exploration and exploitation makes the agent a far more reliable decision-maker.

Advanced Techniques for Balancing Exploration and Exploitation

Thompson Sampling for Probabilistic Exploration

Thompson sampling is a Bayesian approach that maintains a probability distribution (a posterior) over each action's expected reward, which makes it well suited to scenarios with uncertain reward structures. Instead of relying solely on average Q-values, at every decision it draws one sample from each action's distribution and plays the action with the highest sample. Each action is therefore chosen with the probability, under the current posterior, that it is the best one: uncertain actions still get tried, while actions that are likely to be beneficial are favored.

Advantages:

- Probabilistic approach: Reduces deterministic exploitation, leading to richer exploration patterns.

- Self-tapering: Exploration shrinks automatically as the distributions narrow, so it stays controlled without a hand-tuned schedule.

Thompson sampling can be advantageous in environments with fluctuating dynamics, like financial markets or adaptive user interactions, where predicting rewards is challenging.
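A minimal sketch of Thompson sampling in its classic bandit form, assuming success/failure (0 or 1) rewards so each action's expected reward can be tracked with a Beta posterior. Carrying the idea over to full Q-learning needs a distribution over Q-values (for example, an ensemble of Q-estimates), which this sketch does not attempt. The class name and the uniform `Beta(1, 1)` prior are illustrative choices.

```python
import random


class BetaBernoulliThompson:
    """Thompson sampling for actions whose rewards are successes (1) or failures (0).

    Each action keeps a Beta(successes + 1, failures + 1) posterior, i.e. a uniform
    prior updated by the observed outcomes.
    """

    def __init__(self, n_actions, rng=None):
        self.successes = [0] * n_actions
        self.failures = [0] * n_actions
        self.rng = rng or random.Random()

    def select(self):
        """Draw one plausible success rate per action and play the best draw."""
        samples = [self.rng.betavariate(s + 1, f + 1)
                   for s, f in zip(self.successes, self.failures)]
        return max(range(len(samples)), key=samples.__getitem__)

    def update(self, action, success):
        """Fold the observed outcome into that action's posterior."""
        if success:
            self.successes[action] += 1
        else:
            self.failures[action] += 1


# Usage: simulate two actions that succeed 20% and 80% of the time.
env_rng = random.Random(1)
agent = BetaBernoulliThompson(2, rng=random.Random(2))
true_probs = [0.2, 0.8]
picks = [0, 0]
for _ in range(2000):
    a = agent.select()
    agent.update(a, env_rng.random() < true_probs[a])
    picks[a] += 1
```

As the posteriors sharpen, the weaker action is sampled less and less often, with no explicit exploration parameter to tune.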

Noisy Networks for Exploration

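Noisy networks replace the ordinary linear layers of a Q-network with noisy layers whose weights are $w = \mu + \sigma \odot \varepsilon$, where $\mu$ and $\sigma$ are learned parameters and $\varepsilon$ is random noise resampled as the agent acts. Acting greedily on the resulting noisy Q-values adds randomness to action selection, so no separate epsilon schedule is needed. Because $\sigma$ is learned, the agent can adapt the noise level from experience, reducing it in predictable areas while keeping it where value estimates remain uncertain.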

Why use noisy networks?

- Controlled variability: The noise factor provides an adaptive balance.

- Efficient learning: Reduces unnecessary exploration by concentrating on less-known states.

Noisy networks can be particularly useful in large-scale environments or multi-agent settings where states and actions vary significantly, and a systematic exploration approach is essential.
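A minimal, forward-pass-only sketch of a noisy linear layer in plain Python, using independent Gaussian noise for every weight and bias. In an actual noisy network, `mu_*` and `sigma_*` are trained by gradient descent along with the rest of the Q-network, usually in a deep learning framework; training is omitted here, and the class name and initialization constants are illustrative assumptions.

```python
import random


class NoisyLinear:
    """Forward pass of a noisy linear layer: w = mu_w + sigma_w * eps_w, b = mu_b + sigma_b * eps_b.

    eps is fresh standard Gaussian noise drawn on every noisy forward pass.
    mu and sigma would be learned in a real noisy network; here they are only initialized.
    """

    def __init__(self, n_in, n_out, sigma_init=0.017, rng=None):
        self.rng = rng or random.Random()
        bound = 1.0 / n_in ** 0.5
        self.mu_w = [[self.rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
        self.sigma_w = [[sigma_init] * n_in for _ in range(n_out)]
        self.mu_b = [self.rng.uniform(-bound, bound) for _ in range(n_out)]
        self.sigma_b = [sigma_init] * n_out

    def forward(self, x, noisy=True):
        """Return one output per unit (here: one Q-value per action)."""
        outputs = []
        for j, mu_b in enumerate(self.mu_b):
            total = mu_b + (self.sigma_b[j] * self.rng.gauss(0.0, 1.0) if noisy else 0.0)
            for i, xi in enumerate(x):
                weight = self.mu_w[j][i]
                if noisy:
                    weight += self.sigma_w[j][i] * self.rng.gauss(0.0, 1.0)
                total += weight * xi
            outputs.append(total)
        return outputs


def noisy_greedy_action(layer, state_features):
    """Act greedily on the perturbed Q-values; the weight noise supplies the exploration."""
    q_values = layer.forward(state_features)
    return max(range(len(q_values)), key=q_values.__getitem__)


# Usage: a 3-feature state mapped to Q-values for 2 actions.
layer = NoisyLinear(n_in=3, n_out=2, sigma_init=0.5, rng=random.Random(0))
action = noisy_greedy_action(layer, [0.2, -0.1, 0.4])
```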

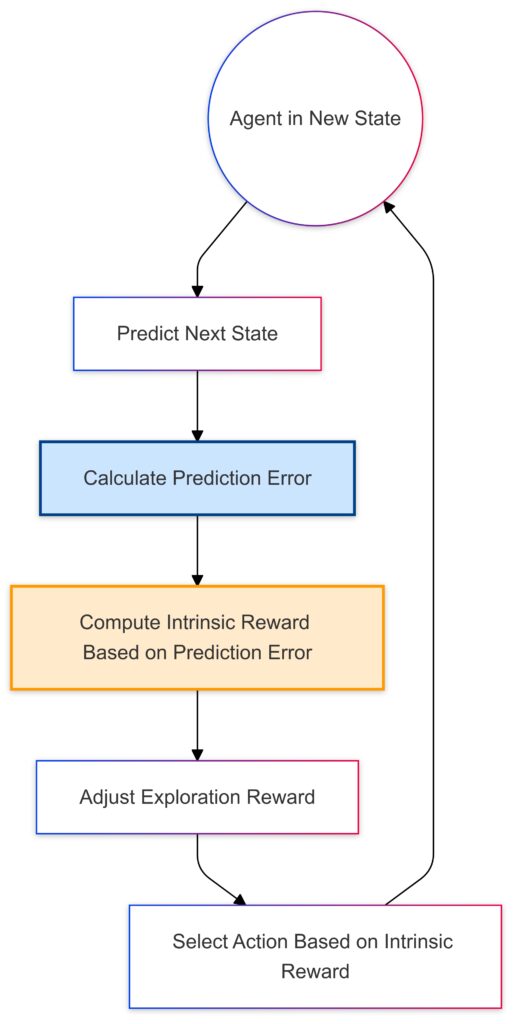

Curiosity-Driven Exploration

Curiosity-driven exploration introduces a secondary, internal reward based on how well the agent can predict the consequences of its actions. It is often implemented with an auxiliary forward model that predicts the next state from the current state and action. The model's prediction error becomes the intrinsic reward: where predictions are poor, the agent is rewarded for going there and learning more, and as the model improves, that region stops being rewarding.

Key benefits:

- Explores unknown dynamics: Drives exploration based on novelty, not external rewards.

- Works without dense feedback: Especially useful in sparse-reward environments.

Curiosity-based approaches are highly applicable in open-ended environments, such as game levels, robotic exploration, and discovery tasks, where agents need to learn without consistent external feedback.

The curiosity loop proceeds as follows:

- Agent in new state: The agent encounters a new state.

- Predict next state: A forward model predicts the next state from the current state and the chosen action.

- Calculate prediction error: The prediction is compared with the state actually observed.

- Compute intrinsic reward: The prediction error is converted into an intrinsic reward; larger errors earn larger rewards.

- Adjust exploration reward: The intrinsic reward is combined with any extrinsic reward, shifting exploration toward poorly predicted regions.

- Select action: The agent chooses its next action using Q-values learned from the combined reward, then loops back to explore new states.
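A toy sketch of this loop, assuming a one-dimensional continuous state and a linear forward model trained online by stochastic gradient descent. The squared prediction error serves as the intrinsic reward; in practice it would be scaled by a weighting factor and added to the extrinsic reward. Published curiosity methods use neural networks and often predict in a learned feature space rather than raw states, so treat this only as an illustration of the principle. The class name and learning rate are hypothetical.

```python
class LinearForwardModel:
    """Tiny forward model for a 1-D state: predicts s' ~ w_s * s + w_a * a + b.

    The squared prediction error is returned as the intrinsic (curiosity) reward,
    then the model takes one SGD step on the observed transition, so transitions
    it has learned to predict stop being rewarding.
    """

    def __init__(self, lr=0.05):
        self.w_s = 0.0
        self.w_a = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, s, a):
        return self.w_s * s + self.w_a * a + self.b

    def intrinsic_reward(self, s, a, s_next):
        error = self.predict(s, a) - s_next
        reward = error ** 2
        # Gradient step on 0.5 * error**2 with respect to each parameter.
        self.w_s -= self.lr * error * s
        self.w_a -= self.lr * error * a
        self.b -= self.lr * error
        return reward


# Usage: the dynamics are s' = s + a. Surprise is high at first and fades with experience.
model = LinearForwardModel()
first = model.intrinsic_reward(1.0, 1.0, 2.0)  # untrained model predicts 0, so error**2 == 4.0
transitions = [(s, a, s + a) for s in (-1.0, 0.0, 1.0) for a in (-1.0, 1.0)]
for _ in range(500):
    for s, a, s_next in transitions:
        model.intrinsic_reward(s, a, s_next)
later = model.intrinsic_reward(1.0, 1.0, 2.0)
```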

Multi-Armed Bandit Hybrid Models

In complex environments, a hybrid model combining Q-learning with multi-armed bandit strategies can allow the agent to choose exploration strategies dynamically. For instance, an agent could use a bandit framework to select between epsilon-greedy, Boltzmann exploration, and UCB at each step. The agent learns which strategy works best in each context, adapting its approach over time.

Advantages:

- Flexible exploration: Dynamically adjusts to environment changes.

- Optimized learning: Avoids rigid reliance on a single strategy, improving overall efficiency.

Such hybrid models work well in dynamic, multi-objective environments, like traffic control systems, where optimal actions vary based on real-time conditions.
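A hypothetical sketch of such a hybrid: each exploration strategy is a bandit arm, UCB1 picks which one to use for the next episode, and the episode return is fed back as that arm's reward. Choosing once per episode rather than at every step is an assumption made here so that each return can be credited to a single strategy; the class, the strategy names, and `c` are illustrative.

```python
import math


class StrategySelector:
    """Treat each exploration strategy as a bandit arm and pick one per episode with UCB1."""

    def __init__(self, strategies, c=1.0):
        self.strategies = list(strategies)
        self.counts = {name: 0 for name in self.strategies}
        self.means = {name: 0.0 for name in self.strategies}  # average episode return per strategy
        self.total = 0
        self.c = c

    def choose(self):
        """Try every strategy once, then pick the highest mean + exploration bonus."""
        for name in self.strategies:
            if self.counts[name] == 0:
                return name
        log_t = math.log(self.total)
        return max(self.strategies,
                   key=lambda n: self.means[n] + self.c * math.sqrt(log_t / self.counts[n]))

    def record(self, name, episode_return):
        """Update the running mean return of the strategy that was just used."""
        self.total += 1
        self.counts[name] += 1
        self.means[name] += (episode_return - self.means[name]) / self.counts[name]


# Usage: made-up episode returns stand in for running real episodes with each strategy.
selector = StrategySelector(["epsilon_greedy", "boltzmann", "ucb"])
simulated_return = {"epsilon_greedy": 0.2, "boltzmann": 0.5, "ucb": 0.9}
for episode in range(300):
    name = selector.choose()
    selector.record(name, simulated_return[name])
```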

Challenges and Considerations in Adaptive Exploration Strategies

Balancing Adaptability and Stability

One of the main challenges with adaptive exploration is maintaining stability in learning. Adaptive methods like noisy networks or dynamic epsilon can cause fluctuations in training if not tuned carefully. If the agent oscillates between exploration and exploitation too frequently, it can hinder convergence to optimal policies.

Solutions:

- Use smooth decay functions in epsilon or other exploration parameters to prevent sharp shifts.

- Monitor exploration performance metrics to adjust the level of adaptation dynamically.

Computational Complexity

Strategies like curiosity-driven exploration and hybrid models can be computationally intensive, especially in environments with high-dimensional state spaces. Agents may require additional neural networks for auxiliary tasks (e.g., predicting state changes), which can increase the demand for computational resources.

Solutions:

- Simplify the model architecture or use lower-dimensional embeddings to make auxiliary tasks less resource-intensive.

- Apply distributed learning techniques to scale training across multiple processors if feasible.

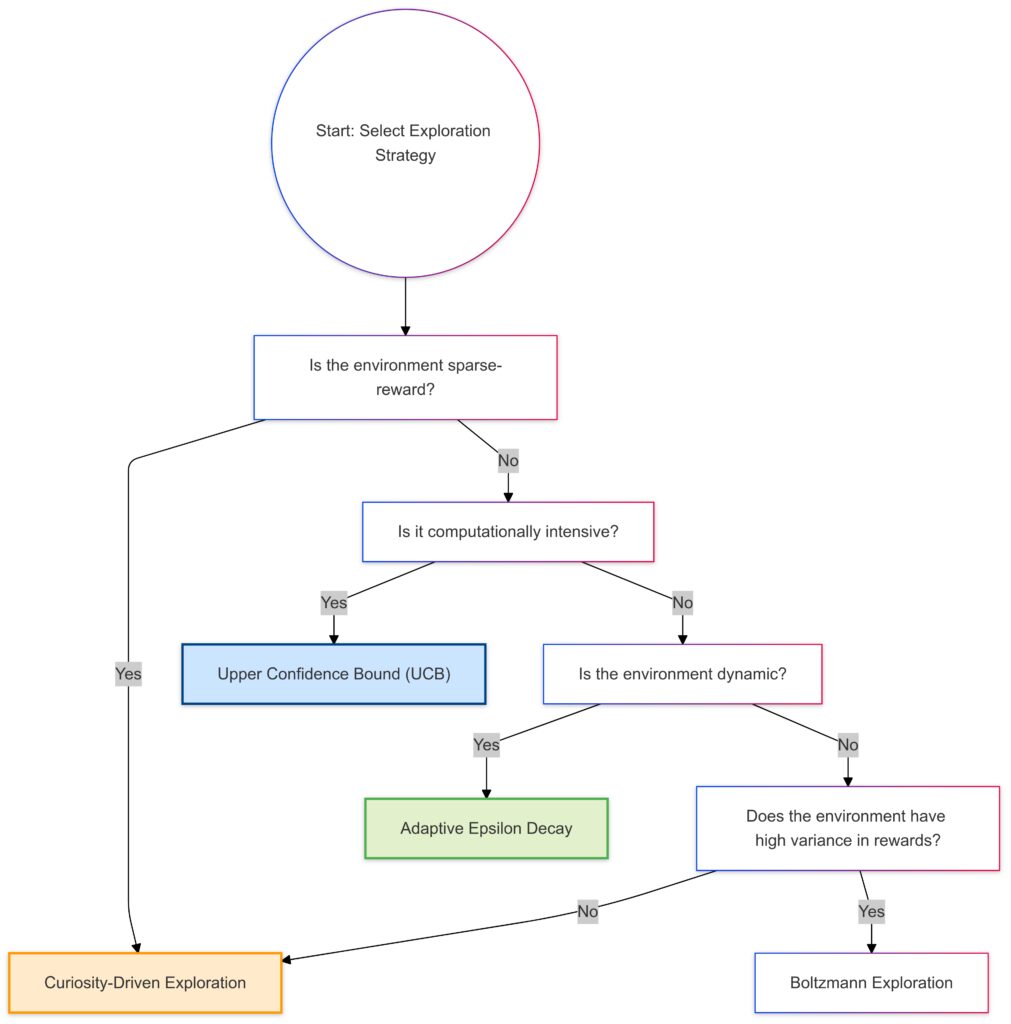

Choosing the Right Exploration Strategy for the Environment

Different environments may require specific exploration approaches based on factors like reward sparsity, state-action complexity, and time constraints. It’s essential to evaluate these factors before implementing adaptive strategies, as they affect both the agent’s performance and the efficiency of training.

For instance:

- Sparse reward environments: Curiosity-driven exploration or intrinsic rewards can help agents find meaningful actions.

- Dynamic environments: Hybrid models and probabilistic approaches like Thompson sampling can adapt quickly to changing conditions.

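- High-risk environments: Uncertainty-based methods such as UCB make exploration targeted rather than random, which avoids gratuitous risk-taking. UCB is optimistic by design, though, and will deliberately try uncertain actions, so safety-critical settings usually need explicit constraints on top.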

The decision flow works through four yes/no checks in order:

- Sparse-reward environment? Yes → curiosity-driven exploration. No → continue.

- Computationally intensive? Yes → Upper Confidence Bound (UCB). No → continue.

- Dynamic environment? Yes → adaptive epsilon decay. No → continue.

- High variance in rewards? Yes → Boltzmann exploration. No → curiosity-driven exploration, for novelty.
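The same decision flow can be written as a small function, purely to make the order of the checks explicit. The function and parameter names are hypothetical, and the returned labels simply mirror the flow described above; it is a rule of thumb, not a validated selection procedure.

```python
def pick_exploration_strategy(sparse_rewards, compute_intensive, dynamic, high_reward_variance):
    """Apply the yes/no checks in order; the first check that says yes decides."""
    if sparse_rewards:
        return "curiosity-driven exploration"
    if compute_intensive:
        return "upper confidence bound (UCB)"
    if dynamic:
        return "adaptive epsilon decay"
    if high_reward_variance:
        return "Boltzmann exploration"
    return "curiosity-driven exploration"  # fallback: seek novelty


# Usage: a stable, cheap-to-simulate task with noisy rewards.
print(pick_exploration_strategy(False, False, False, True))  # Boltzmann exploration
```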

Future Directions in Adaptive Exploration Research

Meta-Learning for Adaptive Exploration

Meta-learning, or “learning to learn,” enables agents to adapt their exploration strategies based on prior experience across tasks. This can be achieved by training an agent in multiple environments and then allowing it to refine exploration strategies based on the current task’s characteristics. Meta-learning-based exploration can streamline adaptation in new tasks, leading to faster convergence.

Potential applications:

- Autonomous vehicles that encounter diverse environments

- Multi-domain recommender systems

Exploration in Multi-Agent Systems

In multi-agent environments, agents must adapt their exploration strategies while accounting for other agents’ actions. This requires coordination strategies that promote collective learning while balancing exploration and exploitation. Cooperative exploration frameworks and multi-agent UCB approaches are emerging areas of research in adaptive exploration for multi-agent reinforcement learning.

Examples:

- Traffic management systems where vehicles coordinate route exploration

- Swarm robotics where robots collectively map unknown terrain

Transfer Learning with Adaptive Exploration

Transfer learning allows an agent to apply learned policies or exploration strategies from one environment to another. Using transfer learning, an agent could inherit exploration policies from previous tasks, adapting them to suit new conditions. This process can significantly shorten the time required to reach optimal policies in new but related tasks.

Key applications:

- Industrial robots trained in simulated environments and deployed in real-world settings

- Personalized recommendation systems using insights from similar users or environments

With the right adaptive exploration strategy, Q-learning agents can excel across a wide range of applications. These evolving techniques help agents make smarter, more informed decisions in even the most complex and unpredictable environments, paving the way for future advancements in autonomous systems.

Conclusion

Adaptive exploration strategies are crucial for training effective, adaptable Q-learning agents. Balancing exploration with exploitation allows agents to maximize learning while preventing premature convergence on suboptimal solutions. Through methods such as adaptive epsilon decay, curiosity-driven exploration, Thompson sampling, and hybrid multi-armed bandit models, agents can dynamically adjust their learning processes to suit various environments, from highly dynamic systems to sparse-reward tasks.

These strategies also address specific challenges in real-world scenarios:

- Flexibility in complex environments: Dynamic methods let agents adapt exploration tactics as they encounter changing conditions.

- Efficiency in large state spaces: Intrinsic rewards, noisy networks, and uncertainty-based approaches focus exploration, preventing resource waste.

- Reduced computational costs: Techniques like simplified auxiliary models and transfer learning allow agents to manage exploration without excessive computational overhead.

For future advancements, exploration strategies like meta-learning, multi-agent coordination, and transfer learning will be instrumental in expanding Q-learning applications in fields such as autonomous navigation, financial trading, and personalized recommendations.

As the landscape of reinforcement learning continues to evolve, so does the need for sophisticated exploration strategies, positioning Q-learning as a foundational method for training intelligent, autonomous agents in increasingly complex environments.

FAQs

How does adaptive epsilon decay improve exploration?

Adaptive epsilon decay adjusts the exploration rate based on the agent’s progress, allowing more exploration in early stages and more exploitation as it learns effective actions. This method helps an agent to shift gradually from broad exploration to focused exploitation, enhancing both learning efficiency and overall performance.

When should curiosity-driven exploration be used?

Curiosity-driven exploration is particularly effective in sparse-reward environments or tasks where external feedback is limited. By rewarding the agent for reaching situations its internal model cannot yet predict, it encourages exploration even when the environment doesn’t offer immediate rewards. This can lead to better long-term learning and a deeper understanding of complex tasks.

How does Thompson sampling differ from traditional epsilon-greedy methods?

Epsilon-greedy explores with a fixed probability and, when it does, picks uniformly among all actions. Thompson sampling instead samples from probability distributions over each action's potential reward and plays the action whose sample is highest, so exploration concentrates on actions that might plausibly be best and tapers off as uncertainty shrinks. This offers a more nuanced exploration-exploitation balance, which can be helpful in uncertain or variable environments.

What are noisy networks, and why are they used in exploration?

Noisy networks add learnable noise to the weights of the Q-network, which perturbs its Q-values and introduces controlled randomness into action selection. Because the noise scale is itself learned, exploration becomes adaptive: the agent can reduce noise for well-understood actions and retain it in unfamiliar areas. Noisy networks help agents handle large and dynamic environments by enabling varied exploration without a hand-tuned epsilon schedule.

How do adaptive exploration strategies apply to real-world use cases?

In real-world applications, adaptive exploration strategies enable agents to adjust their actions efficiently. For example:

- Robotics: Adaptive epsilon decay conserves energy by reducing exploration once the environment is learned.

- Financial markets: Uncertainty-based exploration helps agents manage risk and handle market fluctuations.

- Games and simulations: Intrinsic rewards drive agents to discover new gameplay tactics or strategies effectively.

What are the challenges of implementing adaptive exploration?

Implementing adaptive exploration can introduce complexity, such as ensuring computational efficiency, managing stability, and selecting the best strategy for a given environment. Some strategies may require additional models or data processing, which increases the training time and resources needed. Careful tuning and monitoring are necessary to prevent oscillations in exploration levels.

How can future techniques like meta-learning enhance adaptive exploration?

Meta-learning allows agents to adapt their exploration strategies based on previous tasks, essentially “learning to learn.” In adaptive exploration, this can significantly shorten training times in new environments. Meta-learning is especially promising for applications that require agents to quickly adjust to varying or unfamiliar tasks, such as autonomous vehicles in changing terrains or adaptive customer service bots.

What is the role of intrinsic rewards in adaptive exploration?

Intrinsic rewards are rewards generated from within the agent rather than from the external environment. In adaptive exploration, they motivate the agent to explore new states or perform actions it hasn’t tried before. This is especially useful in environments with sparse external rewards, where immediate feedback is limited. By assigning value to novel actions or experiences, intrinsic rewards drive the agent to discover and learn even in less informative environments.

How does count-based exploration enhance learning efficiency?

In count-based exploration, the agent tracks how often it has visited each state-action pair and gives less-visited pairs a reward bonus that shrinks as their count grows. This pushes the agent toward underexplored actions and areas, improving learning efficiency by encouraging a more thorough examination of the environment. In large or complex state spaces, where exact counts become impractical, approximate counts let the method keep reducing redundant actions and focusing on less-known areas.

How does Upper Confidence Bound (UCB) handle exploration in Q-learning?

Upper Confidence Bound (UCB) exploration adjusts action selection based on the agent’s confidence in each action’s value estimate. It favors actions whose estimated value is high, whose uncertainty is high, or both, encouraging the agent to explore uncertain but promising options. This reduces exploration in familiar areas and focuses learning on ambiguous regions where rewards are less predictable; in environments whose rewards change over time, variants that down-weight old observations are typically used.

Why is balancing exploration and exploitation challenging in multi-agent environments?

In multi-agent environments, the actions of one agent can impact the rewards or optimal strategies of others, making it challenging to balance exploration and exploitation. Agents need to anticipate or respond to the actions of others, which requires flexibility in exploration. Coordination strategies, such as multi-agent UCB or cooperative exploration frameworks, help agents balance exploration without interfering with others, promoting overall system stability.

What is the significance of using hybrid models in adaptive exploration?

Hybrid models combine different exploration techniques (e.g., epsilon-greedy with Boltzmann exploration or UCB) to dynamically adapt to environmental changes. By selecting between strategies based on the situation, hybrid models offer the flexibility to balance exploration in diverse or variable environments. This is useful in real-world tasks with varying conditions, such as autonomous driving or stock trading, where agents benefit from both systematic and random exploration methods.

Can transfer learning improve adaptive exploration in Q-learning?

Yes, transfer learning enables an agent to apply exploration strategies learned in one environment to another, reducing training time and improving adaptability. For example, an agent trained in a simulation environment could transfer its exploration patterns to a real-world setting, like robotic navigation. This can accelerate learning in complex tasks and reduce the need for excessive exploration, making it highly practical in real-world applications.

How do exploration strategies impact the convergence rate in Q-learning?

The choice of exploration strategy directly affects the convergence rate, or how quickly an agent learns an optimal policy. Strategies like adaptive epsilon decay and count-based exploration help the agent prioritize high-value learning, which can speed up convergence. However, overly aggressive exploration can delay convergence, especially if the agent keeps testing suboptimal actions. Striking the right balance, which is what adaptive methods aim to do, lets exploration support faster convergence without excessive trial-and-error.

How does curiosity-driven exploration improve performance in open-ended environments?

Curiosity-driven exploration provides the agent with intrinsic motivation to explore unfamiliar states, which is particularly beneficial in open-ended or complex environments where rewards are sparse. This type of exploration enables the agent to autonomously seek out novel situations, improving performance by fostering a more comprehensive understanding of the environment. It is especially useful in games, simulations, and research-oriented tasks where agents need to explore beyond simple reward-based incentives.

How can adaptive exploration strategies be optimized for computational efficiency?

Some adaptive exploration strategies, like noisy networks and Thompson sampling with deep networks (where posteriors are often approximated with ensembles), can be computationally intensive. Optimizing for computational efficiency may involve:

- Simplifying model architectures: Reducing the number of parameters in auxiliary networks.

- Batch processing: Using batch processing for data-intensive methods to handle exploration in larger environments.

- Distributed training: Leveraging multiple processors or GPUs to run complex tasks in parallel.

These optimizations help keep adaptive exploration feasible even in resource-constrained environments or real-time applications.

Resources

Tools and Libraries

- OpenAI Gym: A popular library for creating and experimenting with reinforcement learning environments, with various built-in simulations (now maintained as Gymnasium by the Farama Foundation).

- Stable Baselines3: A PyTorch-based library offering ready-made RL algorithms, allowing for easy adjustment of exploration parameters.

- RLlib (Ray): A scalable reinforcement learning library within Ray, suitable for distributed training and multi-agent environments.