How AI Bridges Cultural Gaps Through Gesture Recognition

Understanding the Importance of Gestures in Communication

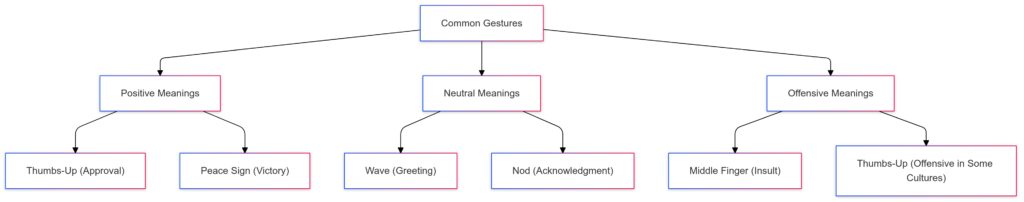

Gestures are an essential part of human interaction, often expressing more than words can. A nod, a wave, or even subtle hand movements carry meaning, but these interpretations aren’t universal.

In one culture, a thumbs-up may mean approval, while in another, it might be offensive. Misinterpreting gestures can lead to awkward or even harmful misunderstandings, especially in globalized environments. AI steps in here as a translator, enabling smoother cross-cultural exchanges.

By leveraging machine learning and data from various cultural contexts, AI can map gestures to their appropriate meanings, offering nuanced interpretations that reduce the risk of miscommunication.

Positive Meanings:

- Thumbs-Up (Approval)

- Peace Sign (Victory)

Neutral Meanings:

- Wave (Greeting)

- Nod (Acknowledgment)

Offensive Meanings:

- Middle Finger (Insult)

- Thumbs-Up (Offensive in Some Cultures)
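The idea of mapping one gesture to different meanings can be sketched as a simple lookup keyed by gesture and culture. This is a minimal illustration, not how any particular product works: the table name, the culture codes, and the entries are assumptions taken from the examples in this article.

```python
# Hypothetical lookup table: (gesture, culture) -> meaning.
# Entries mirror the examples in this article and are illustrative only.
GESTURE_MEANINGS = {
    ("thumbs_up", "US"): "approval",
    ("thumbs_up", "parts_of_middle_east"): "offensive",
    ("peace_sign", "US"): "victory",
    ("wave", "US"): "greeting",
    ("nod", "US"): "acknowledgment",
}


def interpret(gesture: str, culture: str) -> str:
    """Return the recorded meaning, or 'unknown' when the pair is not in the table."""
    return GESTURE_MEANINGS.get((gesture, culture), "unknown")


print(interpret("thumbs_up", "US"))                    # approval
print(interpret("thumbs_up", "parts_of_middle_east"))  # offensive
print(interpret("thumbs_up", "JP"))                    # unknown
```

Returning "unknown" for missing pairs, rather than falling back to another culture's meaning, keeps the sketch from guessing where it has no data.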

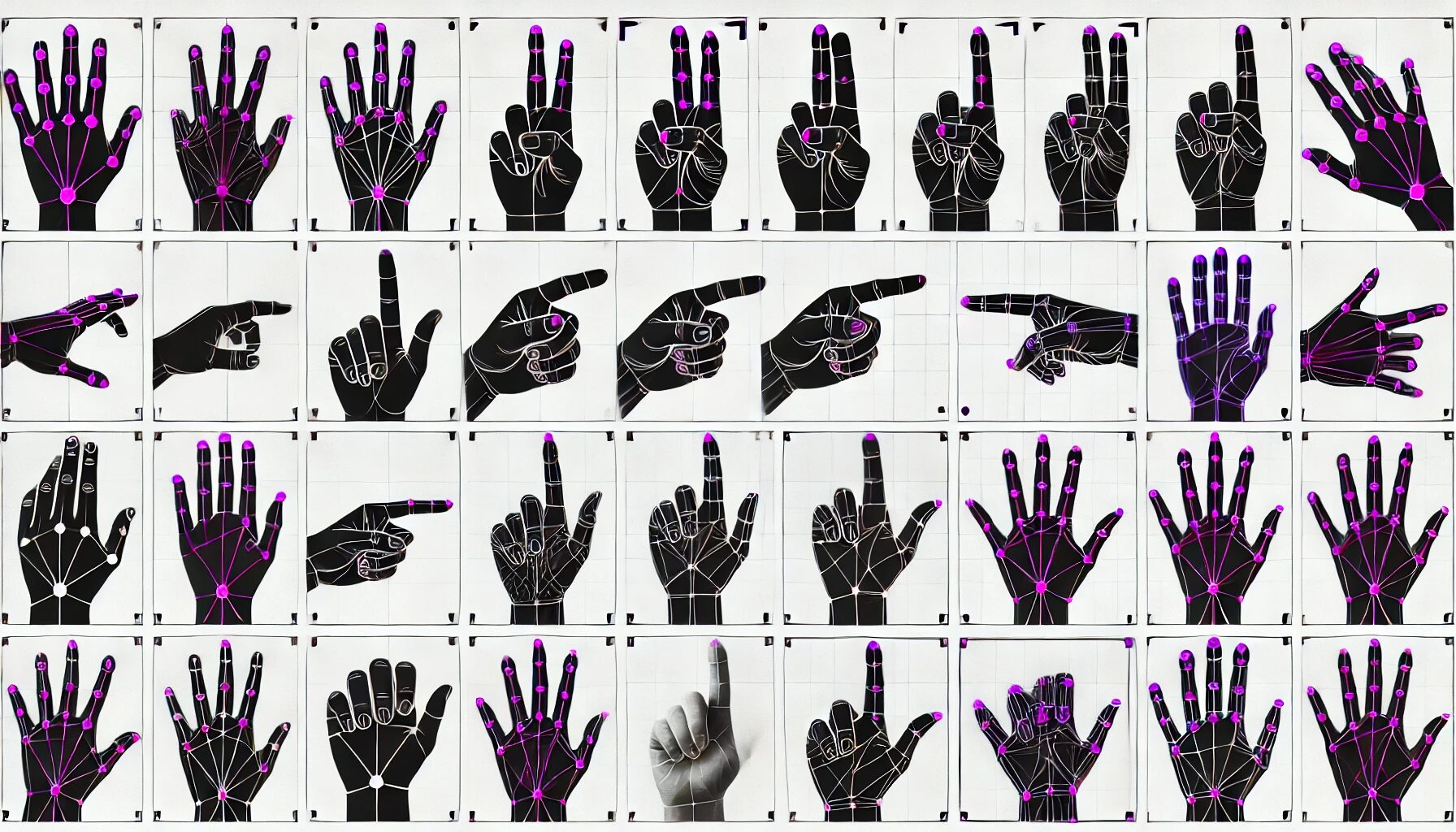

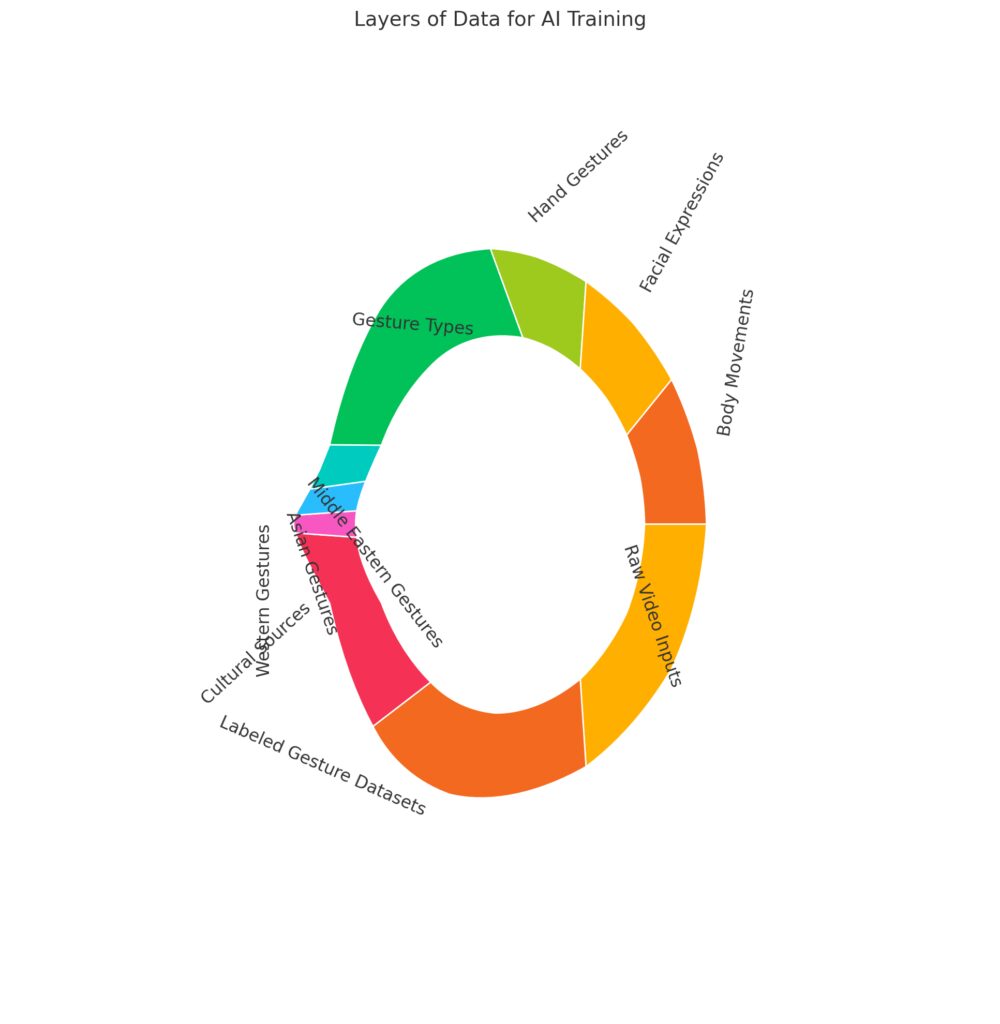

How Gesture Databases Fuel Accurate AI Models

For AI to interpret gestures accurately, it relies on vast datasets that catalog cultural variations. These databases compile gestures’ visual, contextual, and situational meanings.

AI systems analyze videos, images, and real-world interactions to identify patterns. For instance:

- Facial expressions paired with hand gestures refine meaning.

- Environmental context—like formal settings vs. casual ones—adds clarity.

By training on diverse datasets, AI models develop cultural sensitivity, enabling them to adapt to new contexts effectively.

Labeled Gesture Datasets: Organized datasets created from raw inputs.

Cultural Sources:

- Western Gestures

- Asian Gestures

- Middle Eastern Gestures

Gesture Types:

- Hand Gestures

- Facial Expressions

- Body Movements
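A labeled gesture dataset of the kind described above could be represented as records that carry the cultural source, gesture type, and setting alongside the label. The sketch below is one possible shape under stated assumptions: the class name, field names, and sample values are hypothetical and not drawn from any real dataset.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GestureSample:
    clip_id: str          # hypothetical identifier of the source video clip
    gesture_type: str     # "hand", "face", or "body"
    cultural_source: str  # e.g. "western", "asian", "middle_eastern"
    setting: str          # situational context, e.g. "formal" or "casual"
    label: str            # annotated meaning in that context


samples = [
    GestureSample("clip-001", "hand", "western", "casual", "approval"),
    GestureSample("clip-002", "hand", "middle_eastern", "casual", "offensive"),
    GestureSample("clip-003", "body", "asian", "formal", "greeting"),
]


def count_by_source(items):
    """Count how many samples each cultural source contributes."""
    return Counter(sample.cultural_source for sample in items)


print(count_by_source(samples))
```

Keeping the setting on every record is what would later let a model learn that the same movement is labeled differently in formal and casual contexts.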

Challenges AI Faces in Interpreting Gestures

Ambiguity in Multi-Cultural Contexts

Sometimes the same gesture carries different meanings across cultures, creating confusion. For instance, the “peace sign” can signify peace or victory, but made with the palm facing inward it is read as an insult in the UK and some other countries.

AI can struggle with such ambiguities, especially in environments where multiple cultures mix. The solution lies in continuous refinement, with systems trained to assess the gesture’s context and surrounding interactions.
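One way to picture context-based refinement is to start from prior probabilities for each candidate meaning and re-weight them by the cues observed around the gesture. The sketch below assumes made-up priors and cue weights; a real system would have to learn these from data.

```python
def rank_meanings(priors, context_weights, context):
    """Re-weight prior meaning probabilities by observed context cues, then normalize."""
    scores = {}
    for meaning, prior in priors.items():
        weight = 1.0
        for cue in context:
            # Cues without a recorded weight leave the score unchanged.
            weight *= context_weights.get((meaning, cue), 1.0)
        scores[meaning] = prior * weight
    total = sum(scores.values())  # assumes at least one positive score
    ranked = [(meaning, score / total) for meaning, score in scores.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical numbers for the peace sign, for illustration only.
priors = {"peace": 0.4, "victory": 0.4, "mockery": 0.2}
context_weights = {
    ("victory", "sports_event"): 3.0,
    ("mockery", "heated_argument"): 4.0,
}

print(rank_meanings(priors, context_weights, ["sports_event"])[0][0])     # victory
print(rank_meanings(priors, context_weights, ["heated_argument"])[0][0])  # mockery
```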

Bias in Gesture Recognition Algorithms

Like any machine-learning system, gesture recognition AI can inherit biases from its training data. If a dataset skews toward Western-centric gestures, interpretations of non-Western cues may falter.

Researchers are actively working to include data from underrepresented regions, ensuring algorithms become more inclusive and reliable for global users.
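A simple way to surface this kind of skew is to report, for each cultural source, its share of the evaluation data and the model's accuracy on it. The following is a minimal sketch with invented records; the function name and the tuple layout are assumptions.

```python
from collections import defaultdict


def per_group_report(records):
    """records: iterable of (cultural_source, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for source, truth, predicted in records:
        totals[source] += 1
        if truth == predicted:
            correct[source] += 1
    n = sum(totals.values())
    return {
        source: {
            "share": totals[source] / n,
            "accuracy": correct[source] / totals[source],
        }
        for source in totals
    }


# Invented evaluation records skewed toward one source.
records = [
    ("western", "approval", "approval"),
    ("western", "greeting", "greeting"),
    ("western", "approval", "approval"),
    ("middle_eastern", "offensive", "approval"),
]

report = per_group_report(records)
print(report["western"])         # {'share': 0.75, 'accuracy': 1.0}
print(report["middle_eastern"])  # {'share': 0.25, 'accuracy': 0.0}
```

A large gap in either column between groups is a signal to collect more data for the underrepresented source before trusting the model there.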

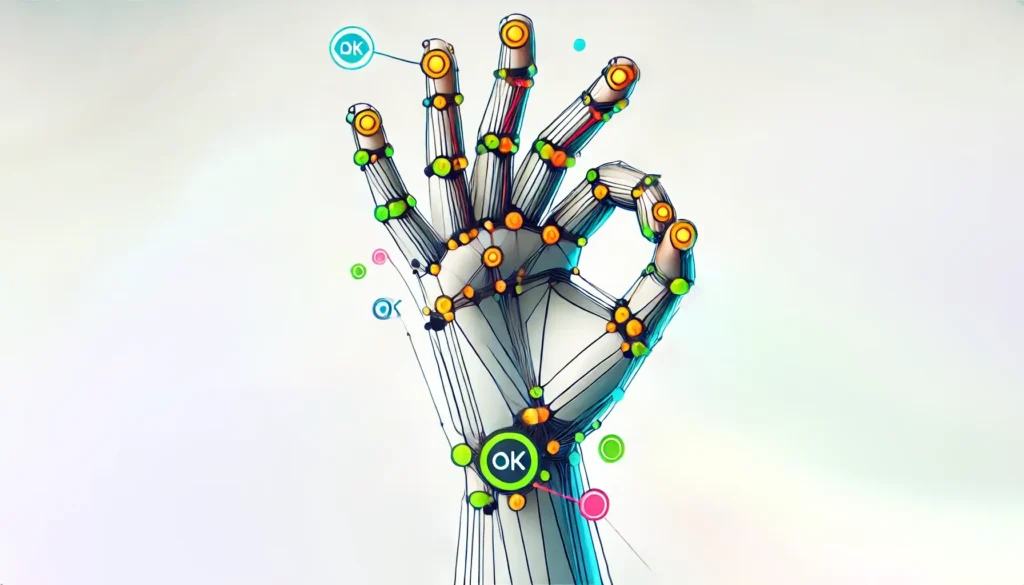

Real-World Applications of AI in Gesture Interpretation

Enhancing Communication for Travelers

Imagine traveling to a country where you don’t speak the language. With AI-powered tools like gesture-recognition apps, you could understand local non-verbal cues effortlessly.

Such tools can identify gestures through your phone camera and provide instant explanations. This not only enhances tourism but also fosters mutual respect and understanding.

Improving Cross-Cultural Business Interactions

In global business meetings, gestures can subtly impact negotiations. AI tools that monitor and interpret body language help bridge cultural divides, offering insights into participants’ emotions or intentions.

This tech equips professionals with better context, minimizing misunderstandings and strengthening partnerships.

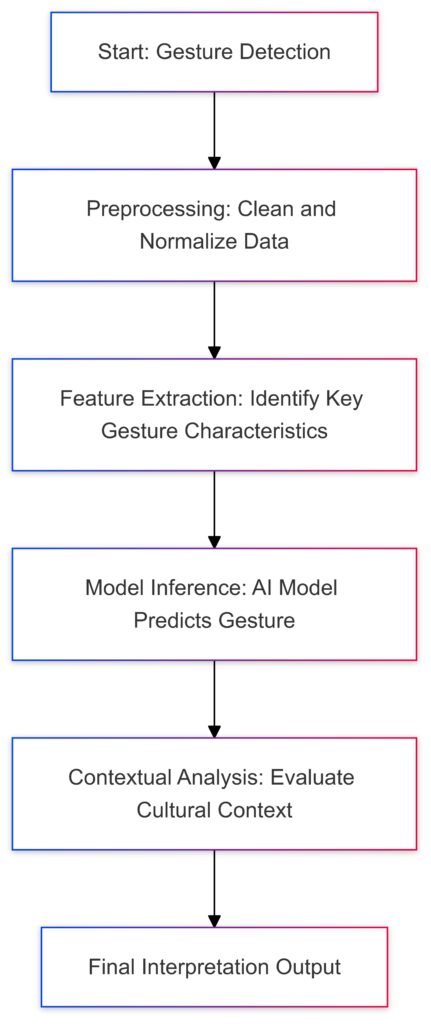

Gesture Detection: Initial identification of gestures in the visual input.

Preprocessing: Cleaning and normalizing data for consistent analysis.

Feature Extraction: Identifying key characteristics of the gesture.

Model Inference: AI model predicts the gesture based on learned patterns.

Contextual Analysis: Evaluating the cultural context to refine the interpretation.

Final Output: Producing the culturally informed gesture interpretation.
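The six stages above can be sketched as a chain of small functions. Everything in this example is a toy stand-in: the frame format, the assumption that the first keypoint is the wrist and the last is the thumb tip, the single hand-written feature, and the meanings table are all hypothetical, and a real system would use a trained model at the inference step.

```python
def detect(frame):
    """Gesture detection: pull hand keypoints out of a (hypothetical) frame dict."""
    return frame["hand_keypoints"]


def preprocess(points):
    """Preprocessing: shift coordinates so the first keypoint (assumed wrist) is the origin."""
    x0, y0 = points[0]
    return [(x - x0, y - y0) for x, y in points]


def extract_features(points):
    """Feature extraction: one toy feature, whether the last point (assumed thumb tip) is above the wrist."""
    # Image coordinates grow downward, so a negative y means "above".
    return {"thumb_above_wrist": points[-1][1] < 0}


def infer(features):
    """Model inference: a hand-written rule standing in for a trained classifier."""
    return "thumbs_up" if features["thumb_above_wrist"] else "unknown"


def contextualize(gesture, culture):
    """Contextual analysis: look up the meaning for this culture (illustrative table)."""
    meanings = {
        ("thumbs_up", "US"): "approval",
        ("thumbs_up", "parts_of_middle_east"): "offensive",
    }
    return meanings.get((gesture, culture), "unknown")


def run_pipeline(frame, culture):
    """Final output: the detected gesture plus its culturally informed meaning."""
    points = preprocess(detect(frame))
    gesture = infer(extract_features(points))
    return {"gesture": gesture, "meaning": contextualize(gesture, culture)}


frame = {"hand_keypoints": [(100, 200), (105, 180), (110, 150)]}
print(run_pipeline(frame, "US"))  # {'gesture': 'thumbs_up', 'meaning': 'approval'}
```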

AI’s Role in Cross-Cultural Education

Teaching Cultural Sensitivity Through Simulated Interactions

AI-powered tools are transforming the way individuals learn about cultural norms. Simulations using gesture-recognition technology allow learners to engage in virtual scenarios, interpreting and responding to gestures within a specific cultural context.

For example:

- Training modules for diplomats can simulate negotiations, emphasizing cultural nuances in body language.

- Healthcare professionals can use gesture-focused VR training to better connect with patients from diverse backgrounds.

These immersive experiences provide real-time feedback, reinforcing correct interpretations and highlighting potential misunderstandings.

Language Learning with Non-Verbal Cues

Most language learning platforms focus on verbal communication, but gestures are equally crucial. AI-integrated programs now include gesture training to complement spoken language skills.

Learners can use augmented reality (AR) glasses or apps to practice interpreting gestures alongside phrases, enabling them to grasp the full spectrum of communication.

Ethical Considerations in Gesture-Recognition AI

Protecting Privacy in Gesture Data Collection

To train AI effectively, systems require substantial data, often sourced from videos and real-world interactions. However, this raises concerns about consent and privacy.

For instance, recording gestures in public spaces without explicit permission can breach ethical boundaries. Developers must prioritize:

- Transparent consent processes for users.

- Anonymized datasets to prevent identity linkage.

By adhering to strict privacy protocols, AI can balance cultural sensitivity with ethical data practices.
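As a rough illustration of the two priorities above, the sketch below skips records without consent, drops directly identifying fields, and replaces the participant ID with a keyed hash. The field names are hypothetical, and a keyed hash is pseudonymization rather than full anonymization, so it is only one part of a privacy process.

```python
import hashlib
import hmac

# Hypothetical names of fields that directly identify a person.
IDENTIFYING_FIELDS = {"name", "face_image_path", "gps"}


def anonymize(record, secret_key: bytes):
    """Return a cleaned copy of the record, or None when consent was not given."""
    if not record.get("consent"):
        return None
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    # Keyed hash: the same participant maps to the same pseudonym,
    # but the original ID cannot be recomputed without the key.
    digest = hmac.new(
        secret_key, record["participant_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned["participant_id"] = digest[:16]
    return cleaned


record = {"participant_id": "p-17", "name": "Ada", "gesture": "wave", "consent": True}
print(anonymize(record, b"example-key"))
```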

Avoiding Cultural Stereotyping in AI Outputs

AI models run the risk of stereotyping, such as assigning rigid interpretations to gestures without accounting for individual or situational variation.

Developers must emphasize flexibility, training algorithms to recognize that gestures can hold different meanings even within the same culture. Ensuring AI respects nuance prevents reinforcing harmful generalizations.
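One way to avoid rigid outputs is to report several candidate meanings and decline to commit when no single reading is confident enough. The sketch below assumes a model that already produces a probability per meaning; the threshold and the example numbers are arbitrary.

```python
def summarize(probabilities, threshold=0.7, top_k=2):
    """Return a decision only when the top meaning is confident, plus ranked alternatives."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    best_label, best_p = ranked[0]
    return {
        "decision": best_label if best_p >= threshold else "uncertain",
        "alternatives": ranked[:top_k],
    }


# Invented probabilities for a shrug.
result = summarize({"indifference": 0.45, "confusion": 0.35, "frustration": 0.20})
print(result["decision"])      # uncertain
print(result["alternatives"])  # [('indifference', 0.45), ('confusion', 0.35)]
```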

Future Innovations in AI for Gesture Interpretation

Gesture-Aware Wearables

Imagine smart glasses or wristbands that interpret gestures in real time, providing instant feedback through discreet vibrations or visuals. These tools could revolutionize interactions for businesspeople, travelers, and even those navigating social events in unfamiliar cultural settings.

AI-Driven Emotional Intelligence Tools

Future AI systems may combine gesture recognition with emotional intelligence, analyzing facial expressions and tone of voice alongside gestures. This holistic approach would offer deeper insights into people’s emotions and intentions, fostering richer interactions.

Conclusion: AI’s Transformative Role in Gesture Interpretation

AI is reshaping how we navigate the complexities of non-verbal communication, providing a bridge across cultural divides. By analyzing and interpreting gestures with cultural sensitivity, it reduces misunderstandings that often stem from differing norms, fostering smoother interactions in both personal and professional contexts.

The real power of AI lies in its adaptability. By continuously learning from diverse datasets and refining its interpretations, it can cater to varied cultural nuances. From enabling travelers to connect with locals to enhancing global business negotiations, AI demonstrates the potential to redefine human connection.

However, challenges persist. Addressing bias in training data, maintaining ethical standards, and ensuring privacy are crucial for its continued success. Without these safeguards, the technology risks reinforcing stereotypes or alienating underrepresented cultures.

Looking forward, gesture-recognition AI holds promise for advancing education, accessibility, and emotional intelligence tools, offering new ways to understand and relate to one another. Its ultimate value will depend on how thoughtfully it is developed and applied—with respect for the rich diversity of human expression.

FAQs

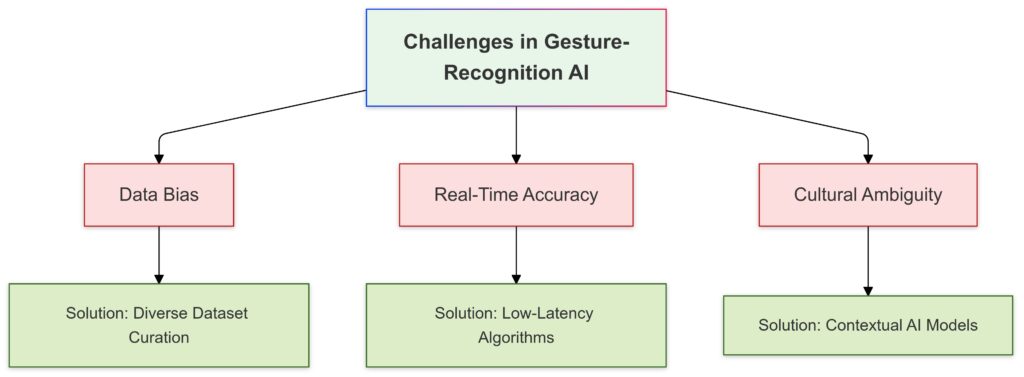

Challenges:

- Data Bias: Limitations due to non-representative datasets.

- Real-Time Accuracy: Challenges with maintaining precision in live scenarios.

- Cultural Ambiguity: Misinterpretation of gestures across cultural contexts.

Solutions:

- Data Bias: Addressed by curating diverse datasets.

- Real-Time Accuracy: Improved with low-latency algorithms.

- Cultural Ambiguity: Managed using contextual AI models.

Can AI recognize gestures in real-time situations?

Yes, AI can interpret gestures in real time using tools like computer vision and sensors embedded in cameras or wearables. Apps with gesture-recognition capabilities analyze visual cues and provide immediate feedback or translations.

For instance, a traveler using an AR headset could interpret a vendor’s hand signal as a negotiation gesture rather than an offensive one, avoiding potential misunderstandings.
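Live video tends to produce noisy frame-by-frame predictions, so a common stabilizing step is to vote over a short window of recent frames. The class below is a minimal sketch of that idea; the class name, window size, and label stream are assumptions.

```python
from collections import Counter, deque


class MajoritySmoother:
    """Report the most frequent label among the last `window` per-frame predictions."""

    def __init__(self, window=5):
        self.recent = deque(maxlen=window)  # old predictions drop off automatically

    def update(self, label):
        self.recent.append(label)
        return Counter(self.recent).most_common(1)[0][0]


smoother = MajoritySmoother(window=3)
stream = ["wave", "wave", "nod", "wave", "wave"]  # one spurious "nod" frame
print([smoother.update(label) for label in stream])
# ['wave', 'wave', 'wave', 'wave', 'wave']
```

A larger window suppresses more flicker but reacts more slowly when the gesture really changes, which is the latency trade-off in live use.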

What industries benefit most from gesture-recognition AI?

Gesture-recognition AI has a wide range of applications, including:

- Tourism: Helps travelers understand cultural norms through interactive guides.

- Business: Enhances cross-cultural communication in international meetings by decoding subtle gestures.

- Healthcare: Assists doctors in interpreting patient gestures, especially in multilingual environments.

For example, a doctor treating a non-verbal patient might use AI to recognize hand signals that indicate pain levels, improving care quality.

How does AI address cultural biases in gesture interpretation?

Developers mitigate bias by curating diverse datasets that include gestures from underrepresented regions. They also train AI to assess gestures within specific contexts, ensuring interpretations are flexible and not overly rigid.

For example, a wave might signify “hello” in one culture but mean “no” in another. AI models trained on both contexts learn to differentiate based on environmental cues, like accompanying facial expressions or spoken words.

Is gesture-recognition AI ethical and private?

Ethical use of AI requires transparency and consent in data collection. To protect privacy, researchers anonymize datasets and ensure compliance with global data protection laws.

For instance, systems deployed in public spaces, like gesture-interpreting kiosks, should provide clear notifications about data use, allowing individuals to opt out if they wish.

Can AI distinguish between similar gestures with different cultural meanings?

Yes, but it requires context and advanced training. AI uses contextual data like location, surrounding actions, or accompanying verbal cues to differentiate between gestures.

For example, the “thumbs-up” gesture signifies approval in the U.S. but is considered offensive in parts of the Middle East. By analyzing regional or situational contexts, AI can determine the intended meaning accurately.

How does AI handle gestures with emotional significance?

AI can combine gesture recognition with emotional intelligence models, analyzing facial expressions, tone of voice, and gestures together. This approach helps it capture the emotional undertone of a gesture.

For example, a shrug might indicate indifference, confusion, or frustration, depending on the speaker’s facial expression or voice. AI can identify these variations to provide a more nuanced interpretation.
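Combining modalities can be sketched as late fusion: each modality produces its own probabilities, and a weighted average merges them. The weights and scores below are invented for illustration, and the sketch assumes every modality scores the same set of labels.

```python
def fuse(modality_scores, weights):
    """Weighted average of per-modality probability dicts (late fusion)."""
    total_weight = sum(weights[m] for m in modality_scores)
    fused = {}
    for modality, scores in modality_scores.items():
        for label, p in scores.items():
            fused[label] = fused.get(label, 0.0) + weights[modality] * p / total_weight
    return fused


# Invented scores for a shrug: the gesture alone is ambiguous,
# while the face and voice lean toward frustration.
modality_scores = {
    "gesture": {"indifference": 0.5, "frustration": 0.5},
    "face": {"indifference": 0.2, "frustration": 0.8},
    "voice": {"indifference": 0.3, "frustration": 0.7},
}
weights = {"gesture": 0.4, "face": 0.4, "voice": 0.2}

fused = fuse(modality_scores, weights)
print(max(fused, key=fused.get))  # frustration
```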

Are there limitations to AI in interpreting gestures?

Despite advancements, AI struggles with:

- Ambiguity: Gestures that lack clear meaning without context.

- Overlapping cultural interpretations: Some gestures are so similar they’re hard to distinguish without additional cues.

- Real-time processing delays: Complex gestures in fast-paced environments may cause AI to lag or misinterpret.

For example, a peace sign shown during a heated debate could mean “let’s calm down” or mock surrender, which AI might misjudge without full context.

How does AI integrate gestures into language-learning tools?

Modern language apps include gesture modules powered by AI, helping learners associate gestures with spoken phrases. These tools teach cultural appropriateness alongside verbal skills.

For instance, an AI-driven language app teaching Italian might encourage users to pair “ma che vuoi?” (what do you want?) with the common hand gesture of fingers pinched together and moving up and down.

Could AI help people with disabilities interpret gestures?

Yes, AI has significant potential to assist individuals with disabilities, especially in bridging non-verbal communication gaps.

For example, people with visual impairments can use AI-powered devices to describe others’ gestures audibly. Similarly, those with autism could use wearable tech to decode subtle social cues, enhancing their social interactions.

How accurate is gesture-recognition AI compared to humans?

AI can often match or even surpass human accuracy in recognizing repeated or predictable gestures, especially in controlled settings. However, humans excel in understanding subtle or complex gestures that rely on emotional depth or situational nuances.

For instance, while AI can quickly identify a wave or nod, interpreting sarcasm in a dismissive hand gesture still challenges machines.

What happens when gestures evolve or change over time?

AI systems require regular updates to keep pace with evolving cultural norms. Developers must feed new data into the system, ensuring it stays relevant.

For example, the “heart hand” gesture popularized by social media influencers is relatively recent and might not exist in older datasets. AI must be retrained to recognize it as a sign of affection or love.

Can AI reduce cross-cultural misunderstandings in diplomacy?

Yes, AI-powered tools can act as mediators in diplomatic settings by interpreting gestures with cultural sensitivity. These tools analyze subtle non-verbal cues to prevent missteps.

For example, an AI system might detect discomfort if a delegate folds their arms tightly during negotiations, prompting the speaker to adjust their tone or approach.

How does AI improve cross-cultural training programs?

AI is revolutionizing cross-cultural training by offering interactive simulations and real-time feedback on gestures. This helps users understand cultural nuances without the risk of making mistakes in real-life situations.

For example, corporate teams preparing for global assignments can practice scenarios where AI interprets their gestures and suggests culturally appropriate alternatives. A trainee might learn to bow correctly in Japan or avoid using certain hand signals in Brazil.

Can AI adapt to regional dialects of gestures?

Absolutely. Just as spoken languages have dialects, gestures can vary between regions within the same culture. AI models can be trained to recognize these subtleties by incorporating region-specific datasets.

For instance, in India, a head nod might mean “yes” in one region but convey ambiguity in another. AI tools tailored for such nuances help prevent misunderstandings, especially for users working across multiple regions of the same country.

How does AI handle rapid or overlapping gestures?

AI uses advanced algorithms and motion analysis to track the speed, trajectory, and sequence of gestures, even when they’re fast or overlap. By breaking down each movement frame-by-frame, the system can interpret complex gestures accurately.

For example, in high-energy environments like sports events or public performances, AI can distinguish between celebratory gestures like fist-pumping and potentially aggressive ones like fist-raising.
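Frame-by-frame motion analysis can be illustrated with a single tracked point: compute its speed between consecutive frames, then mark the stretches where it moves fast enough to count as a gesture. The track, frame rate, and threshold below are made up, and real systems track many keypoints at once.

```python
import math


def speeds(track, fps):
    """track: one (x, y) position per frame. Returns pixels per second between frames."""
    return [math.dist(a, b) * fps for a, b in zip(track, track[1:])]


def segment(track, fps, min_speed):
    """Return (start, end) frame indices of stretches moving at least min_speed."""
    segments, start = [], None
    for i, v in enumerate(speeds(track, fps)):
        if v >= min_speed and start is None:
            start = i                      # movement begins at frame i
        elif v < min_speed and start is not None:
            segments.append((start, i))    # movement ended on reaching frame i
            start = None
    if start is not None:
        segments.append((start, len(track) - 1))
    return segments


track = [(0, 0), (0, 0), (3, 4), (6, 8), (6, 8), (6, 8)]
print(speeds(track, fps=10))                 # [0.0, 50.0, 50.0, 0.0, 0.0]
print(segment(track, fps=10, min_speed=20))  # [(1, 3)]
```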

Can AI help in resolving cross-cultural conflicts?

Yes, gesture-recognition AI can serve as a neutral interpreter in conflict resolution, identifying non-verbal cues that indicate tension or misunderstanding. This can help mediators de-escalate situations.

For instance, if a hand gesture perceived as offensive in one culture is used unintentionally during negotiations, AI could step in to explain its original intent, defusing the situation.

Are there applications for AI in virtual reality and gaming?

In virtual reality (VR) and gaming, AI enables culturally accurate and immersive experiences. Gesture recognition allows players to interact naturally with characters from diverse cultural backgrounds.

For example, in a VR game set in Egypt, an AI system might guide players to use the traditional hand-over-heart gesture as a polite greeting, enriching their cultural understanding within the gameplay.

How does AI assist with non-verbal cues in legal settings?

Gesture-recognition AI is increasingly used in legal and investigative contexts to analyze body language and gestures. It helps identify stress signals, deception, or cooperative behavior during depositions or interrogations.

For example, an AI system could detect subtle avoidance gestures, like a person frequently touching their face while answering questions, flagging potential discomfort or dishonesty.

What role does AI play in healthcare communication?

AI aids healthcare providers by interpreting patient gestures, especially in multilingual or non-verbal communication scenarios. It bridges gaps between caregivers and patients who rely on gestures to express needs.

For instance, a non-verbal stroke patient might point to parts of their body to indicate pain, which AI-powered devices can interpret and convey to the medical staff, enhancing care delivery.

Can AI learn new gestures autonomously?

Yes, some advanced AI systems use unsupervised learning to identify new gestures and their meanings without explicit instruction. By analyzing repetitive movements and their outcomes, AI can deduce likely meanings.

For example, in regions with emerging sign languages or evolving youth trends, AI can recognize new gestures like viral social media signals (e.g., finger hearts) and associate them with the appropriate context.
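A first step toward spotting new gestures without labels is to flag movements that sit far from every known gesture in feature space. The sketch below assumes gestures have already been reduced to small feature vectors and that each known gesture has a centroid; the vectors and the distance threshold are invented. It only finds candidates, and attaching a meaning to them would still need further evidence or human review.

```python
import math


def nearest_known(feature, centroids):
    """Return the closest known gesture and the distance to its centroid."""
    label = min(centroids, key=lambda name: math.dist(feature, centroids[name]))
    return label, math.dist(feature, centroids[label])


def flag_novel(features, centroids, max_distance):
    """Keep the feature vectors that are farther than max_distance from every centroid."""
    return [f for f in features if nearest_known(f, centroids)[1] > max_distance]


# Hypothetical 2-D feature vectors.
centroids = {"wave": (0.0, 0.0), "nod": (5.0, 5.0)}
observed = [(0.2, 0.1), (4.8, 5.1), (10.0, 0.0), (10.2, 0.1)]

print(flag_novel(observed, centroids, max_distance=1.0))
# [(10.0, 0.0), (10.2, 0.1)]
```

Two flagged vectors landing close to each other, as here, would suggest a recurring movement worth sending for annotation.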

How does AI support inclusivity in public services?

AI-powered gesture interpretation is becoming a vital tool for inclusivity in government and public service settings, ensuring equitable access to information and resources.

For example, in multilingual government offices, AI can interpret gestures from non-native speakers to facilitate clear communication. Similarly, AI at international airports can guide travelers using gestures rather than requiring fluency in local languages.

Resources

Academic Studies and Research Papers

- “Gesture Recognition with Machine Learning” by IEEE Xplore

A foundational paper detailing machine learning techniques for gesture recognition, including applications in cultural contexts.

- “Cultural Variations in Nonverbal Communication” by the Journal of Cross-Cultural Psychology

This study explores how gestures differ across cultures and their implications for AI.

- “Bridging the Gap: AI and Cross-Cultural Communication” by SpringerLink

Focused on AI’s role in addressing cultural nuances, with real-world applications.

AI Tools and Platforms

- OpenPose

An open-source tool for real-time multi-person detection of body movements, hand gestures, and facial expressions.

- Microsoft Azure Cognitive Services

Offers AI-powered APIs for gesture and body language recognition, with customizable features for cultural contexts.

- Google MediaPipe

A cross-platform framework for building gesture recognition solutions. Frequently used in education and training applications.

Organizations and Communities

- World Gesture Archive

A global database documenting gestures from various cultures, serving as a resource for AI training.

- Cultural AI Lab

A research initiative focusing on culturally aware AI solutions.

- AI4Good Initiative

A global community dedicated to using AI for ethical and inclusive purposes, including gesture recognition.