The Rise of AI in Border Security

A new frontier for artificial intelligence

AI is no longer confined to labs or sci-fi plots. It’s showing up at borders, screening travelers in real time. Governments are deploying AI lie detectors to assess whether someone’s telling the truth during entry interviews.

These systems read facial expressions, vocal stress, and micro-movements. The goal? Flag risky travelers without human bias or delay.

In theory, this means tighter, faster, smarter security. But the reality is far more layered—and controversial.

Meet the virtual border agents

One of the most widely reported examples is iBorderCtrl, a pilot project funded by the EU. It used AI avatars to quiz travelers before letting them proceed.

The system analyzed responses, eye movement, tone of voice, and subtle facial cues to detect deception. Based on this analysis, a traveler could be cleared—or flagged for further questioning.

Sounds futuristic, right? But did it actually work? And was it fair?

How AI Lie Detectors Actually Work

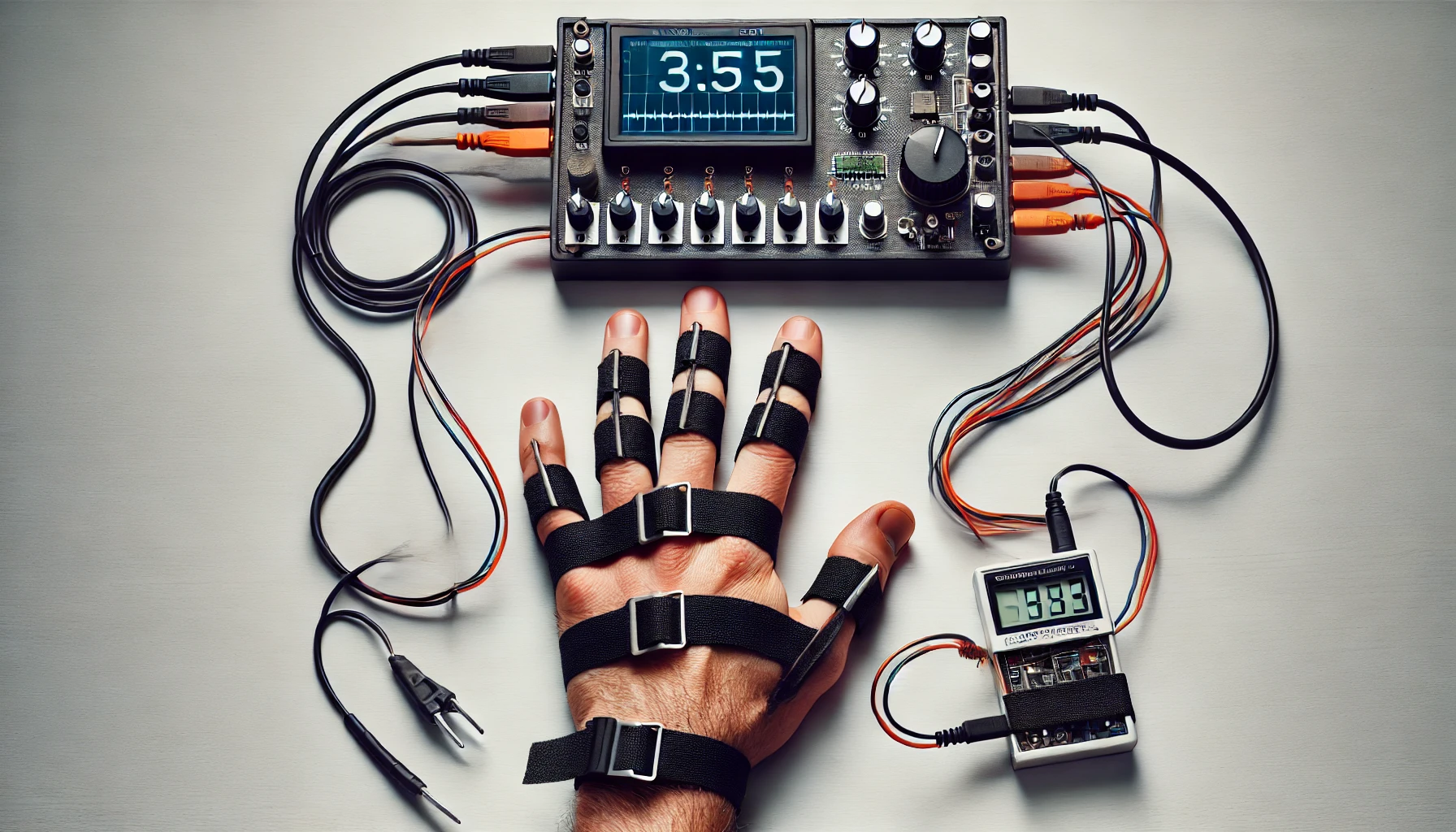

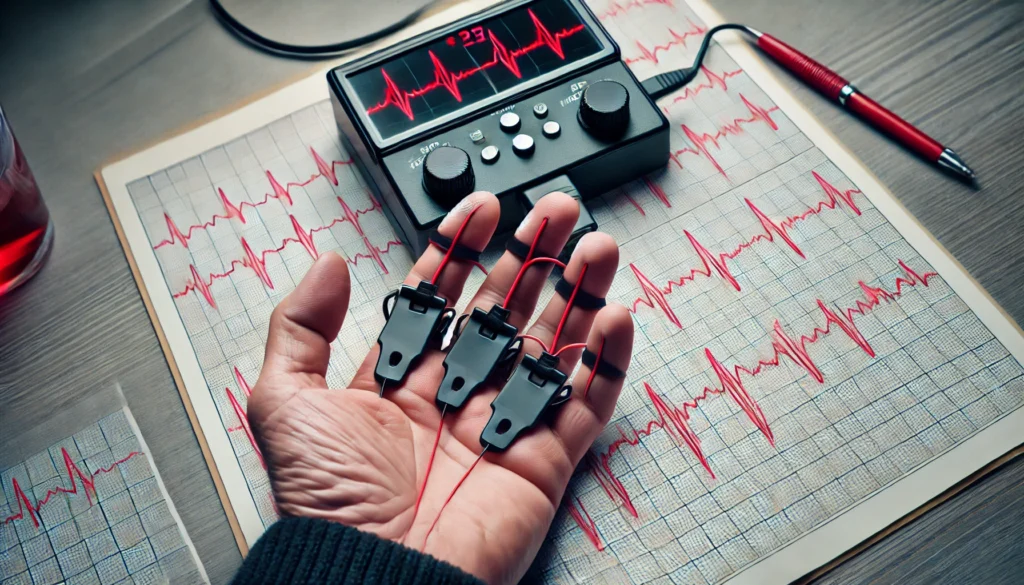

Micromovements and machine learning

These tools rely on machine learning models trained to spot “tells” of deception. They focus on:

- Microexpressions—those fleeting facial reactions we don’t consciously control.

- Voice pitch and stress patterns, which may shift when someone’s lying.

- Eye tracking, noting shifts in gaze or blink rate.

These systems gather data across multiple inputs and cross-check against trained patterns.
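To make the "multiple inputs" idea concrete, here is a minimal sketch of how per-channel scores could be fused into one flag. It is illustrative only: the channel names, weights, and threshold are assumptions made up for this example, not details of iBorderCtrl or any deployed system.

```python
from dataclasses import dataclass

# Hypothetical per-channel weights; a real system would learn these from data.
WEIGHTS = {"microexpression": 0.4, "voice_stress": 0.35, "gaze": 0.25}
FLAG_THRESHOLD = 0.6  # assumed cut-off, not taken from any real deployment


@dataclass
class ChannelScores:
    # Each score is assumed to be a model output in the range [0, 1].
    microexpression: float
    voice_stress: float
    gaze: float


def fused_score(scores: ChannelScores) -> float:
    """Combine the per-channel scores into one weighted average."""
    return sum(WEIGHTS[name] * getattr(scores, name) for name in WEIGHTS)


def is_flagged(scores: ChannelScores) -> bool:
    """Flag the traveler when the fused score reaches the threshold."""
    return fused_score(scores) >= FLAG_THRESHOLD


# A truthful but anxious traveler can still produce high channel scores.
nervous_but_truthful = ChannelScores(microexpression=0.7, voice_stress=0.8, gaze=0.5)
print(round(fused_score(nervous_but_truthful), 3), is_flagged(nervous_but_truthful))
```

Notice that nothing in the fused score knows why a channel fired. A nervous but honest traveler crosses the threshold just as easily as a deceptive one.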

Still, not all cues mean deception. Nervous travelers, cultural differences, or language barriers can all skew results.

Black box algorithms, real-world consequences

One major concern is transparency. Most of these AI models are “black boxes.” Even developers can’t fully explain why a system flagged someone as deceptive.

This lack of accountability makes it tricky to contest decisions—especially for someone already under suspicion.

Accuracy Concerns: Can AI Truly Detect Lies?

Let’s talk numbers (and flaws)

Despite high-tech hype, results have been mixed. Some AI lie detectors claim up to 85% accuracy. But independent tests often show lower performance, especially in uncontrolled settings.

In the case of iBorderCtrl, researchers admitted the system was not reliable enough for real-world deployment. False positives were common.
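Why are false positives so common even at a headline figure like 85%? A quick base-rate calculation shows the problem. The sketch below assumes the 85% applies both to catching liars and to clearing truthful travelers, and that 1 in 100 travelers is lying. Both figures are assumptions for illustration, not measured values.

```python
def flag_precision(sensitivity: float, specificity: float, base_rate: float) -> float:
    """Share of flagged travelers who are actually deceptive (Bayes' rule)."""
    true_pos = sensitivity * base_rate
    false_pos = (1.0 - specificity) * (1.0 - base_rate)
    return true_pos / (true_pos + false_pos)


# All numbers below are assumptions chosen for illustration.
TRAVELERS = 10_000
BASE_RATE = 0.01    # assume 1 in 100 travelers is actually lying
SENSITIVITY = 0.85  # share of liars the system flags
SPECIFICITY = 0.85  # share of truthful travelers the system clears

liars = TRAVELERS * BASE_RATE
true_flags = liars * SENSITIVITY
false_flags = (TRAVELERS - liars) * (1.0 - SPECIFICITY)
precision = flag_precision(SENSITIVITY, SPECIFICITY, BASE_RATE)

print(f"correct flags: {true_flags:.0f}, false flags: {false_flags:.0f}")
print(f"share of flags that are correct: {precision:.1%}")
```

Under these assumptions, roughly 85 flags are correct and about 1,485 are wrong, so only around 5% of flagged travelers are actually lying. The rarer deception is, the worse this ratio gets.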

Bias creeps into the code

AI isn’t immune to human flaws. If training data is biased—or if emotional cues vary across cultures—the system might unfairly target certain groups.

For example, someone from a high-stress region may appear “nervous” even when truthful. In this context, AI can unintentionally reinforce digital profiling.

Digital Profiling or Smart Screening?

Where’s the line?

Digital profiling refers to the use of data-driven systems to infer personal traits—often based on patterns that may or may not be accurate.

AI lie detectors walk a fine line. When used to pre-screen people based on physical and behavioral markers, they flirt with ethical grey zones.

It’s easy to imagine a future where travel becomes less about identity and more about biometric behavior.

Ethical and legal minefields

Privacy advocates warn that this tech violates core rights. The idea of being judged by an algorithm—without a human explanation—challenges legal norms.

Several watchdog groups argue that the lack of due process and explainability makes these tools incompatible with democratic values.

Real-World Trials and Public Backlash

Pilot programs under the microscope

From the EU to the U.S. and Canada, AI lie detection has been tested at airports and border crossings. But public feedback has been mixed at best.

Many trials were quietly shelved due to technical limitations, ethical concerns, or legal pushback.

Civil liberties groups have demanded greater oversight, and some lawmakers have called for outright bans on facial recognition and biometric AI at borders.

Governments are still intrigued

Despite the pushback, interest hasn’t died down. Border agencies still see potential in AI as a force multiplier—not a standalone solution. They’re investing in systems that combine AI with human review rather than full automation.

That’s a small but meaningful shift toward accountability.

What’s Next in Border AI?

The debate is far from over. Up next, we’ll explore how these AI tools may evolve, what rights travelers should know about, and where the legal system fits in.

Stay tuned for the next section—because the future of AI at the border is still being written.

The Legal Gray Zone: Rights vs. Tech

Are travelers protected?

When AI decides your fate at the border, do you have any legal recourse? That’s the big question. Most countries haven’t yet created clear laws around AI-based interrogation tools.

Right now, decisions made by these systems can often bypass the usual safeguards. There’s no clear protocol to challenge or appeal a machine-flagged decision.

Due process, meet digital ambiguity

Traditional legal systems rely on transparency and the right to confront your accuser. With AI, your “accuser” might be a neural network. That’s a game-changer.

This creates legal tension: Is it fair—or even constitutional—to let algorithms make these high-stakes calls without human oversight?

Who Watches the Algorithms?

Oversight is patchy at best

There’s a serious lack of regulatory frameworks for border AI. Some watchdogs exist, but enforcement is weak and inconsistent.

For instance, the EU’s GDPR restricts decisions based solely on automated processing and entitles people to meaningful information about the logic involved. But that standard is rarely met in practice, especially at borders, where security often trumps transparency.

Calls for algorithmic audits

Privacy advocates are demanding independent audits of these systems. They want to know:

- How the algorithms were trained

- What data was used

- How bias is mitigated

Until then, many argue these tools are operating in a legal black hole.
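What might one piece of such an audit look like? A common starting point is to compare false positive rates across groups: of the truthful travelers in each group, how many were flagged anyway? The sketch below uses a handful of made-up records and hypothetical group labels; a real audit would need verified ground truth and far more data.

```python
from collections import defaultdict

# Hypothetical audit records: (group label, flagged by system?, actually deceptive?)
records = [
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", True, True),
]


def false_positive_rates(rows):
    """Per group: flagged truthful travelers divided by all truthful travelers."""
    flagged_truthful = defaultdict(int)
    truthful = defaultdict(int)
    for group, was_flagged, was_deceptive in rows:
        if not was_deceptive:
            truthful[group] += 1
            if was_flagged:
                flagged_truthful[group] += 1
    return {group: flagged_truthful[group] / truthful[group] for group in truthful}


rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of truthful travelers flagged")
```

In this toy data, truthful travelers in group_b are flagged twice as often as those in group_a. A gap like that is the kind of signal an independent auditor would want explained.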

Public Perception: Suspicion or Support?

Travelers are uneasy

Surveys suggest a growing discomfort with automated border screenings. People worry about false positives, data misuse, and lack of appeal options.

Some feel it’s invasive—like being interrogated by a robot before you even talk to a person.

And let’s face it: even law-abiding folks get nervous when AI scans their face for “micro-lies.”

But safety sells

At the same time, support exists—especially among those who prioritize security. If AI promises faster lines and fewer threats, many are willing to trade a bit of privacy.

Governments lean on this mindset to justify further investment.

The Global Landscape: Who’s Using What?

A patchwork of adoption

Different countries are taking different approaches. Here’s a quick snapshot:

- EU: Focused on pilot programs like iBorderCtrl, now mostly paused.

- U.S.: Exploring voice analysis and behavior-tracking tools, with limited rollout.

- Canada & Australia: Testing AI-enhanced kiosks, but with tight oversight.

- Asia: Countries like China lead in biometric surveillance, integrating AI at multiple border points.

Each system comes with its own set of risks and public reactions.

Some are pumping the brakes

Not everyone’s jumping in. Countries like Germany and the Netherlands have expressed serious reservations. They’re urging caution until more ethical frameworks are in place.

Beyond Lie Detection: The Next Evolution of AI at Borders

Predictive profiling is coming

AI isn’t stopping at lie detection. Some systems are now shifting toward predictive behavior modeling—using travel history, online activity, and biometric data to forecast future risk.

That opens up a whole new can of worms. It’s like pre-crime, only less sci-fi and more policy.

Blurring the line between security and surveillance

This next wave could mean travelers are rated or ranked before they even arrive. Your social media, past itineraries, and visa patterns might feed into the algorithm.

It’s efficient. But it raises massive ethical questions: How much data is too much?

What You Should Know So Far

- AI lie detectors rely on voice, facial expressions, and behavior cues.

- Accuracy is inconsistent, with many false positives.

- Travelers often have no clear way to contest machine-made decisions.

- These systems face growing backlash over bias and legality.

- The future leans toward even more invasive predictive tech.

Future Outlook: What Lies Ahead?

Expect smarter AI, wider deployment, and fiercer debate. With predictive tools gaining traction, borders may soon act like pre-screening labs—judging travelers before they speak.

But don’t count out public pushback. As scrutiny grows, so does the demand for transparency, ethics, and legal reform.

The big question isn’t if this tech will expand. It’s whether it’ll do so responsibly.

Travelers’ Rights in the Age of Border AI

What you need to know before crossing

Most travelers don’t realize that their rights at borders are limited. Border agents already have broad authority to question, search, and detain. Adding AI lie detection complicates things further.

Currently, there’s no global standard outlining how these tools should be disclosed, challenged, or opted out of. In most cases, you may not even know you’ve been screened by AI.

Transparency varies by region

Some governments provide a degree of disclosure—for example, noting AI is in use at self-check kiosks. Others stay vague, citing national security.

Either way, travelers are often left in the dark. That imbalance needs attention as tech continues to evolve.

Ethical Tech or Digital Gatekeeping?

The danger of over-reliance

AI is a tool—not a truth machine. But in high-stress environments like borders, it’s tempting to treat its results as gospel. That’s risky.

Flagging someone based on subtle facial expressions or voice tone assumes too much about human behavior. When that misfires, it doesn’t just create inconvenience—it can lead to wrongful detention or denied entry.

Rethinking border ethics

If governments continue down this path, the focus must shift from surveillance to accountable AI. That means:

- Public reporting of error rates

- Independent audits

- Appeals processes for flagged travelers

Without these, AI at borders becomes a form of digital gatekeeping—with no real checks or balances.

Industry Response: Developers Under Pressure

Tech companies feel the heat

Developers building border AI tools are facing scrutiny too. Civil rights groups are pressuring them to be more transparent about data sources, testing environments, and biases in their models.

Some companies are now including “bias audits” and working with ethicists. But these moves are still the exception, not the rule.

If the industry doesn’t self-regulate, broader regulation is likely on the horizon.

Governments are taking notes

With AI under a global microscope, expect new international standards to emerge, likely influenced by frameworks like the EU AI Act, which sets binding requirements for high-risk AI systems.

The Act’s high-risk list already covers migration, asylum, and border control. So what happens here will set a global precedent.

Could AI Actually Improve Border Experiences?

When used with caution, maybe

Let’s not forget the upsides. AI has the potential to streamline border crossings—especially for low-risk, frequent travelers.

It can reduce human bias, minimize wait times, and improve threat detection if implemented with transparency and oversight.

Imagine a future where AI is used as a support system, not a judge. That balance could change everything.

Human-AI collaboration is the key

The most promising models don’t remove humans from the process. Instead, they use AI to assist officers—offering insights, not conclusions. That approach can preserve fairness while still enhancing efficiency.
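Here is a minimal sketch of what "insights, not conclusions" could mean in code. The design choice is that the AI can only suggest a route; there is deliberately no option for it to deny entry. The names and the threshold are hypothetical, not drawn from any real border system.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    # Deliberately no "deny entry" option: the AI may only suggest a route.
    STANDARD_PROCESSING = "standard processing"
    OFFICER_REVIEW = "officer review"


@dataclass
class Advisory:
    route: Route
    score: float
    note: str


REVIEW_THRESHOLD = 0.6  # assumed cut-off for suggesting a closer look


def advise(score: float) -> Advisory:
    """Turn a model score in [0, 1] into a non-binding suggestion for an officer."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REVIEW_THRESHOLD:
        return Advisory(Route.OFFICER_REVIEW, score,
                        "Advisory only: a human officer makes the decision.")
    return Advisory(Route.STANDARD_PROCESSING, score,
                    "No additional review suggested.")


print(advise(0.72))
print(advise(0.15))
```

Because the score travels with the advisory, the officer sees why the system suggested a review and can overrule it.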

Expert Opinions

Petra Molnar: Critique of Border Technologies

Petra Molnar, a lawyer and anthropologist, argues that many border technologies, including AI lie detectors, are both ineffective and harmful. She observes that these technologies often fail to function as intended and can exacerbate existing issues within immigration systems. Molnar emphasizes the need for a more humane approach to migration, suggesting that resources could be better allocated to support services rather than unproven technological solutions. (Source: Weizenbaum Institut)

Javier Sánchez-Monedero and Lina Dencik: Scientific Validity Concerns

Researchers Sánchez-Monedero and Dencik critically examined the iBorderCtrl project, which aimed to detect deception through facial recognition and micro-expression analysis. They highlighted significant scientific shortcomings, questioning the foundational assumptions and statistical validity of such systems. Their analysis suggests that deploying these technologies could lead to unjust outcomes and infringe on individual rights. (Source: arXiv)

Debates & Controversies

Effectiveness and Ethical Implications

The deployment of AI lie detectors at borders has sparked debates regarding their effectiveness and ethical ramifications. Critics argue that these systems lack robust scientific backing and may produce unreliable results, leading to potential human rights violations. The ethical dilemma centers on balancing national security interests with the protection of individual freedoms and the prevention of discriminatory practices.

Algorithmic Bias and Transparency

Concerns about algorithmic bias and the opacity of AI systems are central to the controversy. The potential for these technologies to disproportionately target specific groups without clear accountability mechanisms raises significant ethical questions. The lack of transparency in how these algorithms operate further complicates efforts to ensure fairness and justice in their application.

Journalistic Sources

Predictive Travel Surveillance

An investigation by Wired magazine delved into the use of predictive surveillance systems that analyze passenger data to assess risks. The article highlighted cases where individuals were flagged based on algorithmic profiling, raising concerns about privacy, data accuracy, and the potential for unjust treatment. (Source: WIRED)

Surveillance Technology in Immigration Enforcement

The Associated Press reported on the advancement of surveillance technologies, including AI-driven risk assessment tools like the “Hurricane Score,” used to monitor and evaluate immigrants. The report discussed the implications of such technologies on privacy and civil liberties, especially in the context of heightened immigration enforcement. (Source: AP News)

Case Studies

iBorderCtrl Pilot Project

The European Union’s iBorderCtrl project serves as a notable case study in the application of AI for border security. Designed to detect deception through facial recognition and micro-expression analysis, the project faced criticism for its scientific validity and ethical considerations. Researchers highlighted the system’s potential for high error rates and the risk of unjust outcomes, questioning the appropriateness of its deployment. (Source: arXiv)

Vibraimage Technology

Vibraimage, a system that assesses a person’s mental and emotional state by analyzing head movements, has been implemented in various security settings, including airports and major international events. Despite its widespread use, there is a lack of reliable evidence supporting its effectiveness, raising concerns about the legitimacy and potential misuse of such technologies. (Source: arXiv)

Statistical Data

Accuracy and Reliability Metrics

Studies have shown that AI-based lie detection systems often struggle with accuracy, particularly in diverse, real-world settings. Factors such as cultural differences, individual variability, and the complexity of human emotions contribute to high rates of false positives and negatives, undermining the reliability of these technologies.

Impact on False Accusations

Critics warn that AI lie detectors raise the risk of false accusations, because the technology may misinterpret nervousness or cultural communication styles as signs of deception. This raises significant concerns about the potential for unjust treatment of individuals based on flawed algorithmic assessments.

Policy Perspectives

Regulatory Oversight

The rapid deployment of AI in border security has outpaced the development of comprehensive regulatory frameworks. Policymakers face the challenge of crafting regulations that ensure the ethical use of AI while addressing national security concerns. The need for transparency, accountability, and public engagement in the policymaking process is critical to prevent potential abuses.

Human Rights Considerations

International human rights organizations advocate for policies that protect individuals from invasive and potentially discriminatory surveillance technologies. Emphasizing the importance of privacy, due process, and non-discrimination, these organizations call for a moratorium on the use of unproven AI lie detection systems until thorough ethical and scientific evaluations are conducted.

So… Should We Trust AI at the Border?

This question doesn’t have a simple answer. AI lie detectors may offer speed and security—but they also carry serious risks: misidentification, bias, and erosion of rights.

Whether they evolve into helpful tools or dystopian gatekeepers depends on how they’re built, deployed, and regulated.

We’re at a crossroads. And the next few years will shape not just how we travel—but how we define truth, trust, and fairness in the age of intelligent machines.

What’s Your Take?

Have you encountered AI at a border crossing—or would you be okay with it if it meant shorter lines?

Drop your thoughts below. Do you think AI lie detectors are smart security—or just digital profiling in disguise?

FAQs

Is there scientific consensus that this works?

No, and that’s a big red flag. Many behavioral scientists and ethicists argue there’s no reliable way to detect lies based solely on non-verbal cues. Human behavior is messy—affected by stress, culture, and personality.

For example, studies have shown that some people can lie with a completely straight face, while others appear visibly anxious even when truthful. This undermines the very foundation of AI deception analysis.

Are these systems biased against certain groups?

Yes, bias is a real and well-documented issue. If the AI was trained mostly on Western, white, male subjects, it might misinterpret expressions from women, non-native speakers, or people of color.

Imagine a Nigerian traveler speaking in accented English. The system might register pauses or tonal shifts as suspicious—even though they’re simply linguistic differences.

Bias isn’t always intentional. But if it’s built into the dataset, it gets baked into the algorithm.

Why are governments so interested in this tech?

Efficiency and control. Border security agencies are constantly under pressure to process more travelers with fewer staff. AI offers a tempting solution: faster screenings, fewer officers, and 24/7 vigilance.

In theory, AI could spot red flags earlier, allowing border agents to focus on high-risk individuals. But without proper safeguards, it can also result in profiling and wrongful detentions.

What’s the difference between lie detection and predictive profiling?

Lie detection reacts to answers. Predictive profiling anticipates risk based on pre-existing data—like your travel history, visa patterns, social media presence, or biometric scans.

For example, someone with frequent one-way trips to high-risk regions might be flagged before they speak a word. The system doesn’t need to catch a lie—it assumes risk from patterns.

This blurs the line between safety and surveillance, especially when travelers aren’t told what’s being used to judge them.

Resources: Learn More About AI Lie Detection & Border Surveillance

Here’s a curated list of credible sources and thought-provoking reads to dive deeper into the topic of AI lie detectors, digital profiling, and border technology.

🧠 Research & Technical Reports

- European Commission’s iBorderCtrl Final Report

Details the pilot project’s design, outcomes, and ethical concerns.

- AI Now Institute – Algorithmic Accountability Policy Toolkit

A breakdown of legal gaps in AI deployment, with border tech as a key case study.

- “Facial Recognition Technology: Ethical and Legal Considerations” – Brookings Institution

A comprehensive look at biometric tech in public spaces and borders.

📚 Articles & Investigative Journalism

- The Guardian – “Virtual border guard with lie detector to screen EU travelers”

Coverage of the iBorderCtrl pilot and its criticisms.

- Wired – “The Border Patrol Wants to Use AI to Spot People Who Might Be Lying”

Deep dive into U.S. experiments with AI-enhanced interviews.

- MIT Technology Review – “Why AI lie detectors don’t work”

Analysis from behavioral scientists and AI ethics researchers.

⚖️ Legal Frameworks & Human Rights Watchdogs

- Electronic Frontier Foundation (EFF) – Surveillance & Border Tech

Advocacy and legal insights on digital rights and AI systems.

- Access Now – “Algorithmic Injustice at the Border”

Reports and toolkits to fight surveillance-based profiling.

- European Union AI Act (adopted in 2024)

The legal roadmap for classifying and regulating high-risk AI like lie detectors.