AI model training is a fascinating and intricate process. For experts, understanding its nuances is critical to achieving accurate, reliable models. Whether you’re fine-tuning an existing model or building one from scratch, this guide breaks down the essential steps.

Understanding the Foundations of AI Training

What Is AI Model Training?

AI model training involves teaching an algorithm to recognize patterns and make predictions. It requires data, algorithms, and computational power.

- Algorithms process input data to find patterns.

- Training tunes these algorithms to make accurate predictions.

- The end result is a model capable of solving real-world problems, like image recognition or natural language understanding.

Why Is Training Iterative?

Training is rarely a one-and-done process. Models improve through:

- Multiple cycles of training, validation, and testing.

- Adjustments to hyperparameters (e.g., learning rate, number of layers).

Key Concepts to Know Before Training

Experts must grasp concepts like:

- Loss function: Measures how far the predictions are from the actual values.

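- Backpropagation: Computes the gradient of the loss with respect to every weight in the model, showing how much each weight contributed to the error.

- Optimization algorithms: Techniques like SGD or Adam use those gradients to update the weights and reduce the loss.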
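To make these three concepts concrete, here is a minimal NumPy sketch for a one-feature linear model with a mean-squared-error loss. The toy data, learning rate, and step count are illustrative assumptions. The gradient is derived by hand with the chain rule, which is what backpropagation automates layer by layer in a deep network. Plain gradient descent stands in for the optimizer.

```python
import numpy as np

def mse_loss(y_pred, y_true):
    """Loss function: mean squared error between predictions and targets."""
    return float(np.mean((y_pred - y_true) ** 2))

def gradients(w, b, x, y):
    """Gradient of the MSE loss for the model y_pred = w * x + b.

    Chain rule: dLoss/dy_pred = 2 * error / n, dy_pred/dw = x, dy_pred/db = 1.
    Backpropagation applies the same rule automatically in a deep network.
    """
    error = (w * x + b) - y
    n = len(x)
    grad_w = 2.0 * np.dot(error, x) / n
    grad_b = 2.0 * np.sum(error) / n
    return grad_w, grad_b

def sgd_step(w, b, grad_w, grad_b, lr):
    """Optimizer: move each parameter a small step against its gradient."""
    return w - lr * grad_w, b - lr * grad_b

# Toy data generated from y = 3x + 1 (an illustrative assumption).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 3.0 * x + 1.0

w, b = 0.0, 0.0
loss_before = mse_loss(w * x + b, y)
for _ in range(200):
    grad_w, grad_b = gradients(w, b, x, y)
    w, b = sgd_step(w, b, grad_w, grad_b, lr=0.05)
loss_after = mse_loss(w * x + b, y)
print(f"loss {loss_before:.2f} -> {loss_after:.6f}; w = {w:.3f}, b = {b:.3f}")
```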

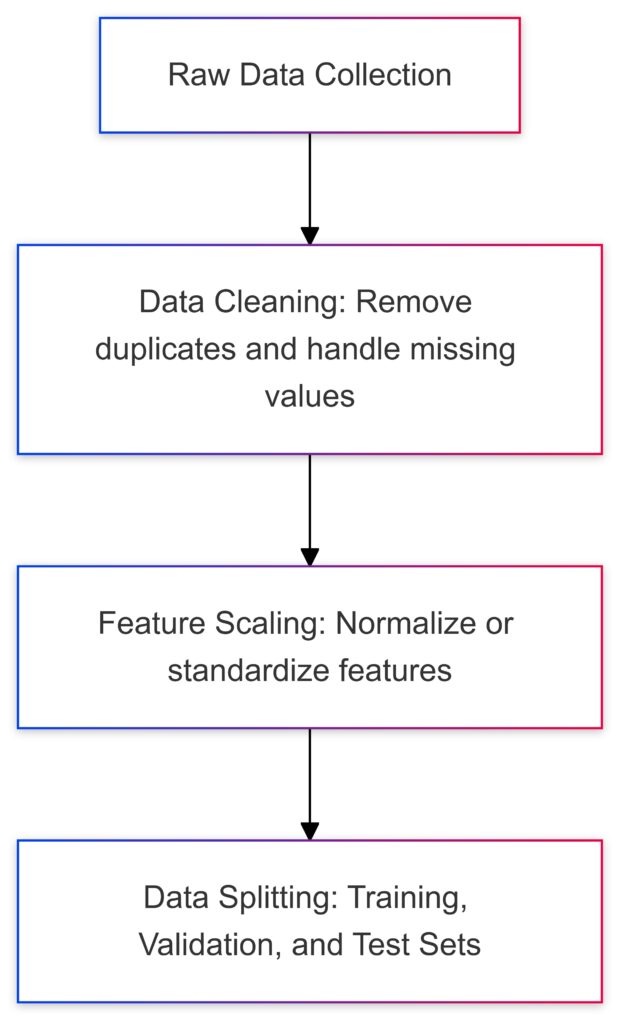

Preparing Data for Training

Data Collection and Sourcing

AI thrives on data. The better your data, the better your model. Consider:

- Diversity: Include varied data for robust performance.

- Volume: More data usually means better training.

- Labeling: Ensure accurate labels for supervised tasks.

Pro Tip: Use sources like Kaggle or build custom datasets.

Data Cleaning: The Hidden Hero of Success

Raw data often contains noise and inconsistencies. Clean it by:

- Removing duplicates and irrelevant data.

- Handling missing values using imputation techniques.

- Standardizing formats (e.g., date and numerical scales).
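The three cleaning steps above can be sketched in plain Python as follows. The records, the mean-imputation strategy, and the two accepted date formats are illustrative assumptions. In particular, reading `05/02/2024` as day/month/year is an assumption. Real pipelines often do the same work with pandas (`drop_duplicates`, `fillna`).

```python
from datetime import datetime
from statistics import mean, pstdev

# Hypothetical raw records: one exact duplicate, one missing value, mixed date formats.
raw = [
    {"id": 1, "signup": "2024-01-05", "income": 52000.0},
    {"id": 2, "signup": "05/02/2024", "income": None},
    {"id": 1, "signup": "2024-01-05", "income": 52000.0},
    {"id": 3, "signup": "2024-03-10", "income": 61000.0},
]

def parse_date(text):
    """Standardize dates to ISO format; the accepted formats are assumptions."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def clean(records):
    # 1. Remove exact duplicates while preserving order (copies leave `records` untouched).
    seen, unique = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(r))
    # 2. Impute missing incomes with the mean of the observed values.
    fill = mean(r["income"] for r in unique if r["income"] is not None)
    for r in unique:
        if r["income"] is None:
            r["income"] = fill
    # 3. Standardize formats: ISO dates and a z-scored income column.
    mu = mean(r["income"] for r in unique)
    sigma = pstdev(r["income"] for r in unique)
    for r in unique:
        r["signup"] = parse_date(r["signup"])
        r["income_z"] = (r["income"] - mu) / sigma if sigma else 0.0
    return unique

cleaned = clean(raw)
for row in cleaned:
    print(row)
```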

Splitting Data: Training, Validation, and Testing

A common starting split is:

- 70% for training the model.

- 20% for validation to tune hyperparameters.

- 10% for testing, to get a final, unbiased estimate of performance.

Never let the model see test data during training!
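A minimal sketch of a shuffled 70/20/10 split over sample indices, assuming NumPy and independent samples. The function name and seed are illustrative. Working with indices lets you apply the same split to features and labels.

```python
import numpy as np

def train_val_test_split(n_samples, fractions=(0.7, 0.2, 0.1), seed=0):
    """Return shuffled index arrays for the training, validation, and test sets."""
    assert abs(sum(fractions) - 1.0) < 1e-9, "fractions must sum to 1"
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)              # shuffle before splitting
    n_train = int(round(fractions[0] * n_samples))
    n_val = int(round(fractions[1] * n_samples))
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]                  # the remainder is held out for testing
    return train, val, test

train_idx, val_idx, test_idx = train_val_test_split(10_000)
print(len(train_idx), len(val_idx), len(test_idx))  # 7000 2000 1000
```

Shuffling assumes the samples are independent. For time-series data, split chronologically instead, so the test set lies in the future relative to the training set.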

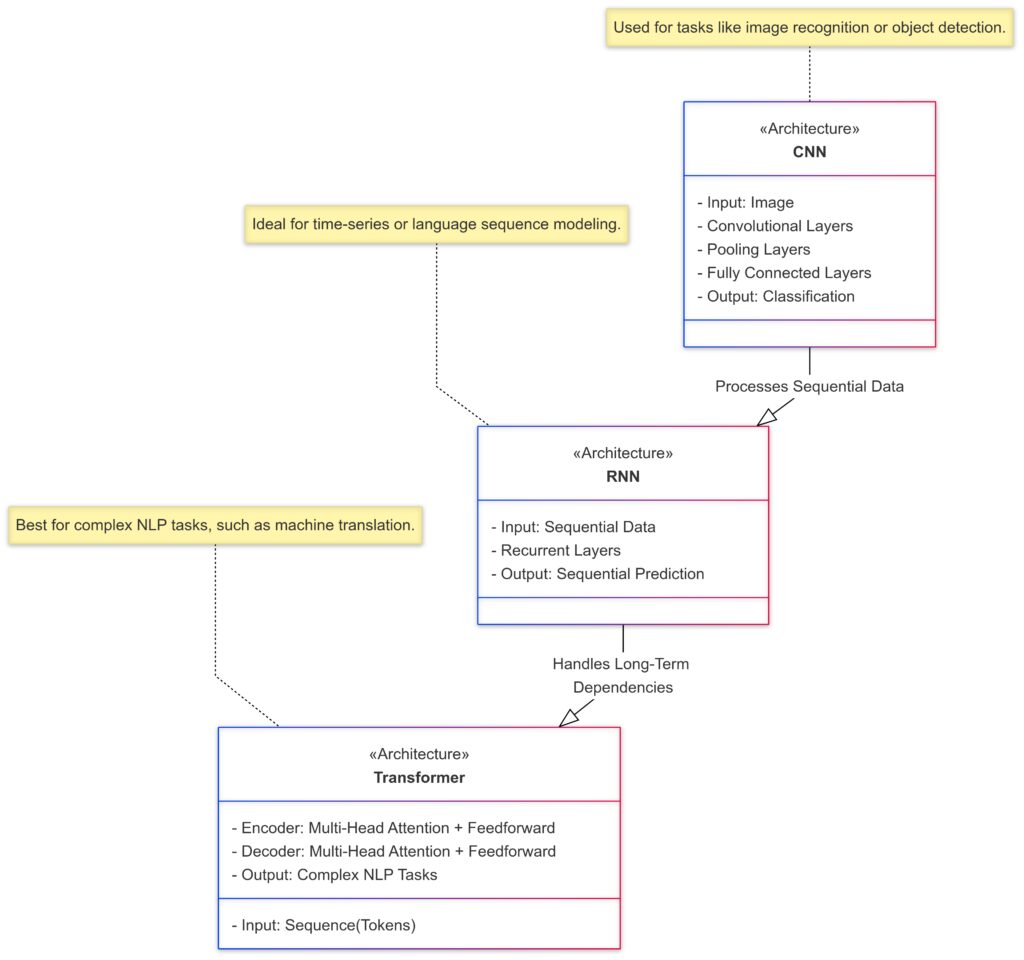

Building the Model Architecture

Choosing the Right Model Type

The architecture depends on your task:

- CNNs (Convolutional Neural Networks): Well suited to image-related tasks.

- RNNs (Recurrent Neural Networks): Great for sequential data like text or time-series.

- Transformers: Ideal for complex tasks like language understanding (e.g., GPT models).

Configuring Layers

Model layers define how data flows through the network:

- Input layer: Receives raw data.

- Hidden layers: Process data to learn features.

- Output layer: Produces the prediction.
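The flow from input to hidden to output layer can be sketched as a NumPy forward pass. The layer sizes (4 inputs, 8 hidden units, 3 classes), the ReLU hidden activation, and the random initialization are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)      # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# Illustrative sizes: 4 input features, 8 hidden units, 3 output classes.
n_in, n_hidden, n_out = 4, 8, 3
W1 = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_hidden))   # He-style init for ReLU
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, np.sqrt(1.0 / n_hidden), size=(n_hidden, n_out))
b2 = np.zeros(n_out)

def forward(x):
    """Input layer -> hidden layer -> output layer."""
    h = relu(x @ W1 + b1)          # hidden layer: learned intermediate features
    return softmax(h @ W2 + b2)    # output layer: class probabilities

batch = rng.normal(size=(5, n_in))   # the input layer is simply the raw feature vectors
probs = forward(batch)
print(probs.shape)                   # (5, 3)
```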

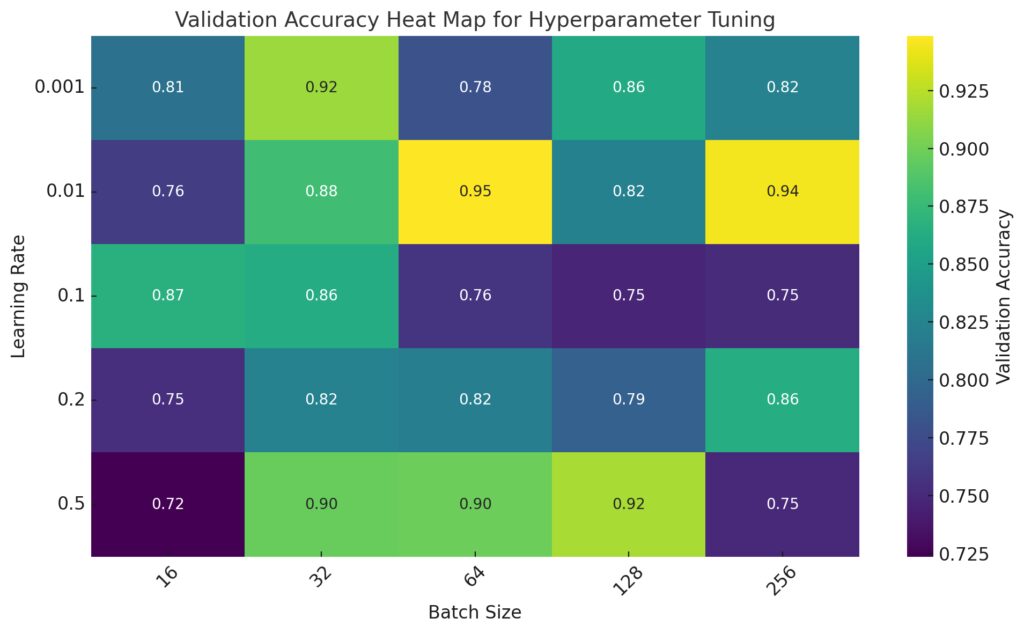

Hyperparameter Tuning

Tuning hyperparameters like batch size, learning rate, and dropout rate is critical. Search strategies like grid search, or libraries such as Optuna, can automate the process.
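A minimal grid-search sketch over learning rate and batch size. It assumes a synthetic linear-regression problem trained with minibatch SGD and scored by validation MSE. The grid values and data are illustrative. Libraries such as Optuna sample the search space adaptively instead of enumerating every combination.

```python
import itertools
import numpy as np

rng = np.random.default_rng(42)
# Synthetic data (an assumption for illustration): y = X @ true_w + small noise.
true_w = np.array([2.0, -1.0, 0.5])
X = rng.normal(size=(300, 3))
y = X @ true_w + 0.1 * rng.normal(size=300)
X_train, y_train, X_val, y_val = X[:200], y[:200], X[200:], y[200:]

def train_and_validate(lr, batch_size, epochs=20, seed=0):
    """Train a linear model with minibatch SGD and return its validation MSE."""
    local_rng = np.random.default_rng(seed)
    w = np.zeros(X_train.shape[1])
    for _ in range(epochs):
        order = local_rng.permutation(len(X_train))
        for start in range(0, len(order), batch_size):
            idx = order[start:start + batch_size]
            err = X_train[idx] @ w - y_train[idx]
            w -= lr * 2.0 * X_train[idx].T @ err / len(idx)
    return float(np.mean((X_val @ w - y_val) ** 2))

grid = {"lr": [0.001, 0.01, 0.1], "batch_size": [16, 64]}
results = {
    (lr, bs): train_and_validate(lr, bs)
    for lr, bs in itertools.product(grid["lr"], grid["batch_size"])
}
best = min(results, key=results.get)
print("best (lr, batch_size):", best, "validation MSE:", round(results[best], 4))
```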

Training the Model

The Training Loop Explained

Model training involves repeatedly passing data through the architecture. The steps include:

- Forward pass: Make a prediction.

- Calculate the loss: How wrong was the prediction?

- Backpropagation: Compute the gradient of the loss with respect to each weight.

- Update weights: Use optimization algorithms to improve predictions.
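The four steps above map directly onto a minibatch training loop. This sketch assumes a logistic-regression model on synthetic, linearly separable data, with binary cross-entropy loss and plain SGD. In a framework such as PyTorch, autograd would compute the gradient that is written out by hand here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(p, y, eps=1e-12):
    """Binary cross-entropy; eps keeps log() away from zero."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

rng = np.random.default_rng(0)
# Synthetic data (an assumption): the label depends linearly on two features.
X = rng.normal(size=(500, 2))
y = (X[:, 0] + 2 * X[:, 1] > 0).astype(float)

w, b, lr, batch_size = np.zeros(2), 0.0, 0.5, 32
history = []
for epoch in range(30):
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]
        p = sigmoid(xb @ w + b)             # 1. forward pass
        loss = bce_loss(p, yb)              # 2. calculate the loss (could be logged per batch)
        grad_z = (p - yb) / len(idx)        # 3. backpropagation: dLoss/dz for sigmoid + BCE
        grad_w, grad_b = xb.T @ grad_z, grad_z.sum()
        w -= lr * grad_w                    # 4. update weights (plain SGD)
        b -= lr * grad_b
    history.append(bce_loss(sigmoid(X @ w + b), y))

accuracy = float(np.mean((sigmoid(X @ w + b) > 0.5) == y))
print(f"epoch 1 loss {history[0]:.3f}, epoch 30 loss {history[-1]:.3f}, accuracy {accuracy:.3f}")
```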

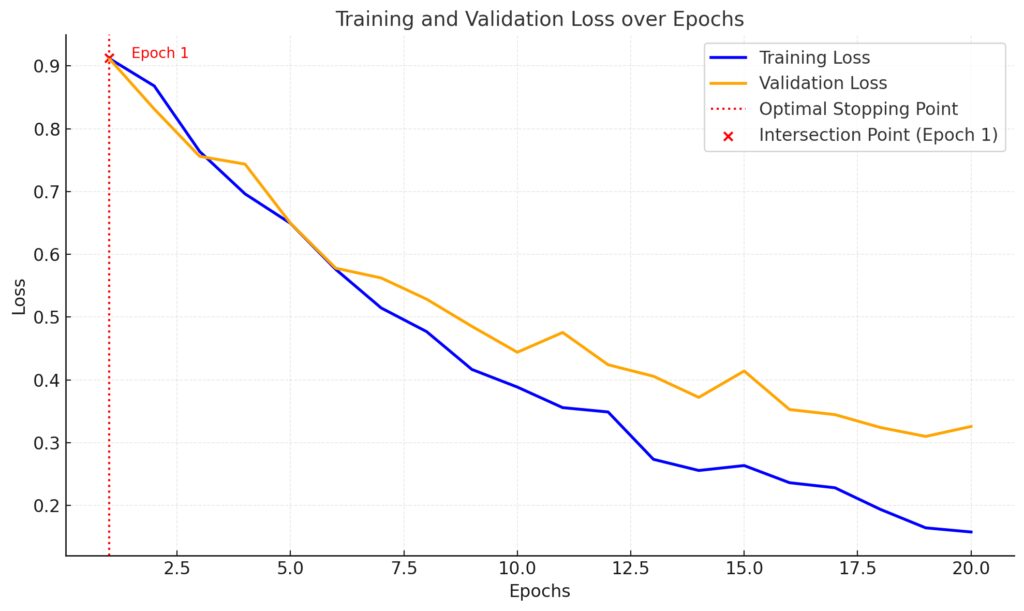

Handling Overfitting

Overfitting occurs when the model performs well on training data but poorly on unseen data. Avoid it by:

- Adding dropout layers.

- Using early stopping to halt training once validation performance stops improving.

- Applying regularization techniques like L2 penalties.

Figure: Training and validation loss per epoch. Training loss decreases steadily as the model learns. Validation loss decreases at first, then rises once the model starts to overfit. The optimal stopping point is the epoch where validation loss reaches its minimum and begins to diverge from training loss.
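Early stopping can be sketched as a small patience counter. The validation losses below are hypothetical values that fall and then rise, as in an overfitting run. Keras ships a comparable callback, `tf.keras.callbacks.EarlyStopping`, with `patience` and `min_delta` arguments. In practice you would also restore the weights saved at the best epoch.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs.

    `min_delta` is the smallest decrease that counts as an improvement.
    """

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Hypothetical validation losses: improvement, then overfitting.
val_losses = [0.90, 0.70, 0.55, 0.48, 0.47, 0.49, 0.53, 0.58, 0.64]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}; best epoch was {stopper.best_epoch}")
# stopped at epoch 7; best epoch was 4
```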

Optimizing Model Performance

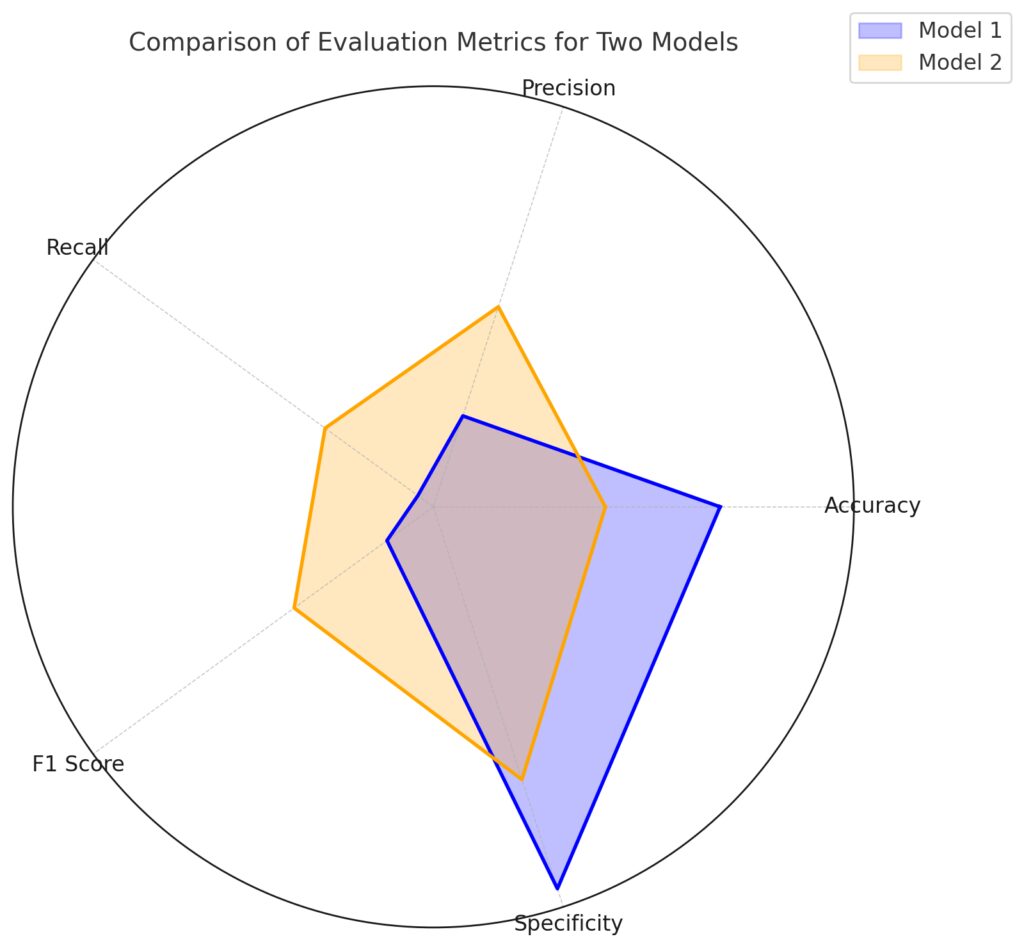

Evaluating Model Performance

After training, evaluate your model to determine how well it performs. Focus on key metrics tailored to your task:

- Accuracy: Useful for balanced datasets but may mislead for imbalanced classes.

- Precision and Recall: Key for tasks where false positives or false negatives are critical.

- F1 Score: The harmonic mean of precision and recall, useful when you need a single number that balances both.

For regression tasks, metrics like Mean Squared Error (MSE) or Mean Absolute Error (MAE) are more relevant.

Figure: Radar chart comparing two models (Model 1 in blue, Model 2 in orange) on accuracy, precision, recall, F1 score, and specificity, showing contrasting strengths and weaknesses.
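The classification metrics above all follow from four confusion-matrix counts. The sketch below computes them for binary 0/1 labels in plain Python. The example labels are hypothetical, chosen to show how accuracy can mislead on an imbalanced dataset.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, F1, and specificity for binary labels (0/1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
    }

# Imbalanced toy example: 2 positives out of 10, and a model that always predicts 0.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))
# Accuracy is 0.8, yet recall is 0.0: accuracy alone hides that every positive was missed.
```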

Techniques to Enhance Performance

Even well-trained models can improve with optimization:

- Learning Rate Schedules: Adjust the learning rate over time for smoother convergence.

- Data Augmentation: Expand your dataset with transformations like rotation or flipping (for images) or synonym replacement (for text).

- Transfer Learning: Start with a pre-trained model and fine-tune it for your specific problem.
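Two common learning-rate schedules, step decay and cosine annealing, fit in a few lines. The base rate, decay factor, and epoch counts below are illustrative assumptions. PyTorch provides built-in equivalents such as `torch.optim.lr_scheduler.StepLR` and `torch.optim.lr_scheduler.CosineAnnealingLR`.

```python
import math

def step_decay(epoch, base_lr=0.1, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return base_lr * drop ** (epoch // every)

def cosine_annealing(epoch, total_epochs, base_lr=0.1, min_lr=0.0):
    """Decay smoothly from base_lr (first epoch) to min_lr (last epoch) along half a cosine."""
    progress = epoch / max(1, total_epochs - 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

for epoch in (0, 10, 20, 29):
    print(epoch, round(step_decay(epoch), 4), round(cosine_annealing(epoch, 30), 4))
```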

Cross-Validation for Robust Results

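Use k-fold cross-validation: split the data into k folds, train on k - 1 of them, and validate on the remaining fold. Rotate until every fold has served as the validation set once. Averaging the k scores gives a more reliable estimate of how well the model generalizes to new data.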
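A minimal k-fold sketch in NumPy. To keep the example short, the "model" is a hypothetical baseline that predicts the training-fold mean, scored by MSE on the held-out fold. scikit-learn offers the same splitting logic as `sklearn.model_selection.KFold`.

```python
import numpy as np

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample lands in exactly one validation fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

rng = np.random.default_rng(1)
y = rng.normal(loc=5.0, scale=2.0, size=103)   # hypothetical targets

scores = []
for train_idx, val_idx in k_fold_indices(len(y), k=5):
    prediction = y[train_idx].mean()            # "train" the baseline on k - 1 folds
    scores.append(float(np.mean((y[val_idx] - prediction) ** 2)))
print(f"mean CV MSE: {np.mean(scores):.3f} +/- {np.std(scores):.3f}")
```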

Figure: Hyperparameter optimization results showing the impact of learning rate and batch size on validation accuracy.

Fine-Tuning the Model

Adjusting Hyperparameters

Refining hyperparameters can significantly boost model performance.

- Experiment with batch size, dropout rates, and number of epochs.

- Use automated methods like Hyperband, which gives more training budget to promising configurations and stops weak ones early.

Regularization for Stability

Prevent overfitting and improve generalization:

- L1 and L2 regularization: Add penalties to large weights.

- Dropout layers: Randomly disable neurons during training for robustness.
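Both regularizers are short to express directly. The sketch below assumes NumPy. It shows the L1 and L2 penalty terms you would add to the loss, and "inverted" dropout, one common formulation that rescales the surviving activations during training so nothing needs to change at inference. The weights and rates are illustrative.

```python
import numpy as np

def l2_penalty(weights, lam):
    """L2 term added to the loss: lam * sum of squared weights (discourages large weights)."""
    return lam * sum(np.sum(w ** 2) for w in weights)

def l1_penalty(weights, lam):
    """L1 term added to the loss: lam * sum of absolute weights (encourages sparsity)."""
    return lam * sum(np.sum(np.abs(w)) for w in weights)

def dropout(activations, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units and scale the rest by 1/(1 - rate).

    The rescaling keeps the expected activation unchanged, so inference uses the layer as-is.
    """
    if not training or rate == 0.0:
        return activations
    mask = rng.random(activations.shape) >= rate
    return activations * mask / (1.0 - rate)

rng = np.random.default_rng(0)
W = np.array([[0.5, -1.0], [2.0, 0.0]])
print(l2_penalty([W], lam=0.01))      # 0.01 * (0.25 + 1 + 4 + 0), about 0.0525
h = np.ones((1000, 100))
h_drop = dropout(h, rate=0.3, training=True, rng=rng)
print(float(h_drop.mean()))          # close to 1.0 in expectation
```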

Balancing Bias and Variance

- High bias means underfitting; the model is too simple.

- High variance means overfitting; the model is too complex.

Achieve balance by tweaking complexity or training duration.
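Model complexity can be varied directly to see bias and variance at work. This sketch fits polynomials of increasing degree to a small, noisy synthetic sample of a sine wave using NumPy's `Polynomial.fit`. The data, noise level, and degrees are assumptions. Degree 1 underfits (high bias). Degree 12 on only 15 points typically drives training error toward zero while validation error grows (high variance).

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of sin(2*pi*x) on [0, 1] (a synthetic assumption)."""
    x = rng.uniform(0.0, 1.0, n)
    return x, np.sin(2 * np.pi * x) + 0.2 * rng.normal(size=n)

x_train, y_train = make_data(15)
x_val, y_val = make_data(200)

results = {}
for degree in (1, 3, 12):
    model = Polynomial.fit(x_train, y_train, degree)   # least-squares polynomial fit
    train_mse = float(np.mean((model(x_train) - y_train) ** 2))
    val_mse = float(np.mean((model(x_val) - y_val) ** 2))
    results[degree] = (train_mse, val_mse)
    print(f"degree {degree:2d}: train MSE {train_mse:.3f}, val MSE {val_mse:.3f}")
```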

Deploying the AI Model

Preparing for Deployment

Before deploying, ensure your model is ready for real-world environments:

- Model Compression: Use techniques like pruning or quantization to reduce size with minimal loss of accuracy.

- Latency Optimization: Ensure the model runs efficiently within the target environment.

- Testing: Simulate deployment conditions to identify bottlenecks or errors.
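To show what quantization does under the hood, here is a minimal symmetric, per-tensor int8 scheme in NumPy. It is one simple variant, not what any particular toolchain does. Production tools such as TensorFlow Lite or PyTorch add refinements like per-channel scales and calibration data. The random weight matrix is a stand-in for a real layer.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor int8 quantization with a single scale factor."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0   # all-zero tensor: any scale works
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(256, 256)).astype(np.float32)   # stand-in layer
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = float(np.max(np.abs(weights - restored)))   # bounded by about scale / 2
print(f"{weights.nbytes} bytes -> {q.nbytes} bytes; max abs error {max_error:.6f}")
```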

Deployment Options

Choose a deployment platform based on your requirements:

- Cloud Services: AWS, Google Cloud AI, and Azure provide scalable infrastructure.

- On-Premise: For sensitive data, deploy locally.

- Edge Devices: Compress models for real-time processing on mobile or IoT devices.

Tools like TensorFlow Lite or PyTorch Mobile simplify deployment on resource-constrained devices.

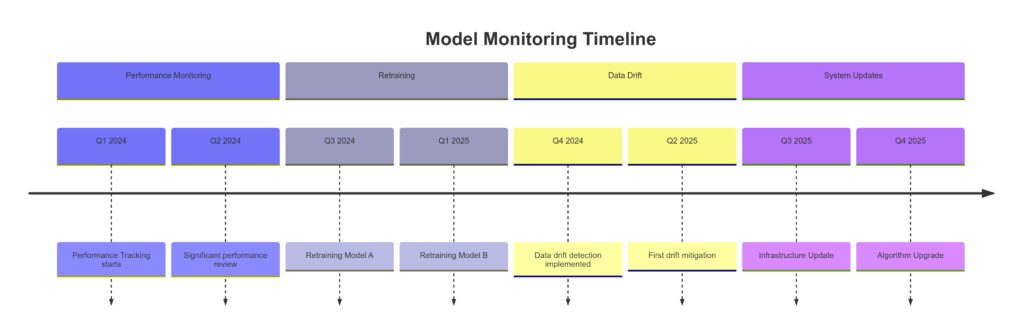

Monitoring Post-Deployment

Once deployed, monitor your model to ensure sustained performance. Key steps:

- Track drift: Monitor data to identify shifts in input patterns.

- Periodic retraining: Update the model with fresh data to maintain relevance.
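One widely used statistic for tracking drift in a single feature is the Population Stability Index (PSI). It compares the binned distribution of production values with the training distribution. The sketch below assumes NumPy, quantile bins, and synthetic data. A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per application.

```python
import numpy as np

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between training-time (reference) and production (current) values of one feature.

    Bins come from quantiles of the reference sample. PSI = sum((cur - ref) * ln(cur / ref)).
    """
    edges = np.quantile(reference, np.linspace(0.0, 1.0, bins + 1))
    # Clip production values into the training range so outliers fall in the end bins.
    current = np.clip(current, edges[0], edges[-1])
    ref_frac = np.histogram(reference, bins=edges)[0] / len(reference) + eps
    cur_frac = np.histogram(current, bins=edges)[0] / len(current) + eps   # eps avoids log(0)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))

rng = np.random.default_rng(0)
train_feature = rng.normal(0.0, 1.0, 10_000)
psi_same = population_stability_index(train_feature, rng.normal(0.0, 1.0, 10_000))
psi_shifted = population_stability_index(train_feature, rng.normal(0.5, 1.0, 10_000))
print(f"PSI without drift: {psi_same:.3f}; PSI after a mean shift: {psi_shifted:.3f}")
```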

Scaling AI Solutions

Automating Retraining Pipelines

Set up automation for retraining using frameworks like MLflow or Kubeflow. This keeps models updated with minimal manual intervention.

Building Scalable Infrastructure

Use tools like Kubernetes to manage deployments across clusters for high-availability systems. APIs can simplify interaction with your AI solutions.

Ethical AI Considerations

Always keep ethics in mind:

- Ensure fairness by auditing for biases in data and outputs.

- Protect privacy by adhering to regulations like GDPR.

Scaling responsibly ensures trust and longevity in your AI solutions.

Now you have a comprehensive roadmap for AI model training and deployment. Ready to take your models to the next level?

Troubleshooting Common Challenges in AI Training

Debugging Model Issues

AI training doesn’t always go smoothly. Common issues include:

- Vanishing or Exploding Gradients: Gradients shrink or grow exponentially as they propagate back through many layers, making learning unstable.

- Solution: Use activation functions like ReLU to counter vanishing gradients, and gradient clipping to cap exploding ones.

- Slow Convergence: Training takes too long to improve.

- Solution: Experiment with learning rates or use adaptive optimizers like Adam.

- Unstable Loss Curves: Sudden spikes or drops in loss indicate instability.

- Solution: Regularize the model or lower the learning rate.
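Gradient clipping by global norm is a common remedy for exploding gradients. It rescales all gradients together so their combined norm stays under a threshold, preserving the update direction. This NumPy sketch uses illustrative gradient values. PyTorch and TensorFlow provide built-ins, `torch.nn.utils.clip_grad_norm_` and `tf.clip_by_global_norm`.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    if total_norm <= max_norm:
        return grads, total_norm
    factor = max_norm / total_norm          # same factor for every array: direction preserved
    return [g * factor for g in grads], total_norm

# Illustrative "exploding" gradients with a global norm of 50.
grads = [np.array([30.0, 40.0]), np.array([0.0])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
print(norm_before, [g.tolist() for g in clipped])
```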

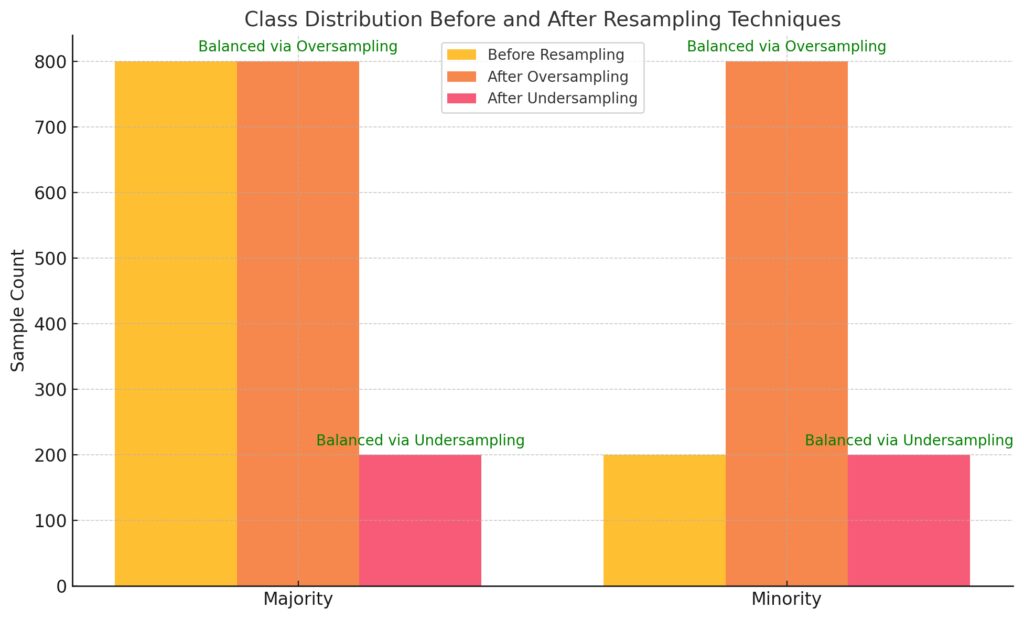

Handling Data-Related Problems

Data challenges can derail training:

- Imbalanced Datasets: Overrepresentation of certain classes skews results.

- Solution: Use oversampling, undersampling, or class weights in loss functions.

- Noisy Data: Outliers or irrelevant data reduce model accuracy.

- Solution: Clean the data rigorously or use loss functions that are less sensitive to outliers, such as the Huber loss.

Figure: Class distributions before resampling (the majority class far outnumbers the minority class), after oversampling (the minority class is increased to match the majority), and after undersampling (the majority class is reduced to match the minority, shrinking the total sample count).
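The three remedies for imbalanced data can be sketched in NumPy: random oversampling, random undersampling, and class weights for the loss. The 90:10 label split and random features are illustrative. The weighting formula, n_samples / (n_classes × class_count), is the same heuristic scikit-learn uses for `class_weight="balanced"`.

```python
import numpy as np

def random_oversample(X, y, rng):
    """Duplicate randomly chosen samples of smaller classes until all classes match the largest."""
    classes, counts = np.unique(y, return_counts=True)
    idx = []
    for c, n in zip(classes, counts):
        members = np.flatnonzero(y == c)
        idx.extend(members)
        idx.extend(rng.choice(members, size=counts.max() - n, replace=True))
    idx = np.array(idx)
    return X[idx], y[idx]

def random_undersample(X, y, rng):
    """Keep a random subset of each class, sized to match the smallest class."""
    classes, counts = np.unique(y, return_counts=True)
    idx = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=counts.min(), replace=False) for c in classes
    ])
    return X[idx], y[idx]

def balanced_class_weights(y):
    """Per-class loss weights: n_samples / (n_classes * class_count)."""
    classes, counts = np.unique(y, return_counts=True)
    return {int(c): len(y) / (len(classes) * n) for c, n in zip(classes, counts)}

rng = np.random.default_rng(0)
y = np.array([0] * 90 + [1] * 10)          # 90:10 imbalance
X = rng.normal(size=(100, 3))
_, y_over = random_oversample(X, y, rng)
_, y_under = random_undersample(X, y, rng)
print(np.bincount(y_over), np.bincount(y_under), balanced_class_weights(y))
```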

Dealing with Computational Constraints

Training deep learning models can demand significant computational resources:

- Insufficient Hardware: Training is slow or infeasible on limited systems.

- Solution: Use cloud-based GPUs/TPUs or optimize models for faster processing.

- Memory Overload: Large datasets and models exceed available memory.

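- Solution: Stream data in batches with data generators, reduce the batch size, or use gradient checkpointing, which recomputes intermediate activations during the backward pass instead of storing them.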
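A data generator keeps only one batch in memory at a time instead of loading the whole dataset. This sketch assumes a hypothetical headerless CSV file with numeric feature columns followed by one label column. The usage writes a tiny temporary file just to demonstrate the batching.

```python
import os
import tempfile
import numpy as np

def batch_generator(path, batch_size, n_features):
    """Stream (features, labels) batches from a CSV file, one batch in memory at a time.

    Assumes a headerless CSV: n_features numeric columns, then one label column.
    """
    batch = []
    with open(path) as f:
        for line in f:
            batch.append([float(v) for v in line.strip().split(",")])
            if len(batch) == batch_size:
                arr = np.array(batch)
                yield arr[:, :n_features], arr[:, n_features]
                batch = []
    if batch:                                   # final, possibly smaller, batch
        arr = np.array(batch)
        yield arr[:, :n_features], arr[:, n_features]

# Usage: write 10 hypothetical rows, then stream them in batches of 4.
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False) as tmp:
    for i in range(10):
        tmp.write(f"{i},{i * 2},{i % 2}\n")
try:
    sizes = [len(xb) for xb, yb in batch_generator(tmp.name, batch_size=4, n_features=2)]
finally:
    os.remove(tmp.name)
print(sizes)  # [4, 4, 2]
```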

Insider Tips for AI Model Training Experts

To elevate your skills, you need the kind of insights that come from real-world experience. Here are expert tips to refine your AI model training process and achieve superior results.

Use Pre-Trained Models Strategically

Why start from scratch when pre-trained models are available?

- Leverage models like BERT, ResNet, or GPT for tasks closely related to their original training.

- Fine-tune pre-trained models with your custom data to save time and resources.

Tip: For niche tasks, use domain-specific pre-trained models like BioBERT (biomedical) or CodeBERT (software).

Prioritize Data Efficiency Over Quantity

The saying “more data equals better performance” isn’t always true. Focus on quality and relevance:

- Use active learning to label only the most informative samples.

- Employ synthetic data for rare or hard-to-obtain scenarios.

- Clean and preprocess data meticulously—garbage in, garbage out!

Pro Tip: For imbalanced tabular data, generate synthetic minority-class samples with SMOTE (Synthetic Minority Over-sampling Technique).
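SMOTE's core idea is to interpolate between a minority sample and one of its nearest minority-class neighbors. The minimal NumPy sketch below uses brute-force distances and synthetic minority points. It omits refinements found in maintained implementations such as `imblearn.over_sampling.SMOTE` from the imbalanced-learn package.

```python
import numpy as np

def smote(X_minority, n_new, k=5, rng=None):
    """Create n_new synthetic samples by interpolating between minority-class neighbors.

    For each new sample: pick a random minority point, pick one of its k nearest minority
    neighbors, and place the new point at a random position on the segment between them.
    """
    rng = rng or np.random.default_rng()
    n = len(X_minority)
    k = min(k, n - 1)
    # Brute-force pairwise Euclidean distances within the minority class.
    d = np.linalg.norm(X_minority[:, None, :] - X_minority[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)                  # a point is not its own neighbor
    neighbors = np.argsort(d, axis=1)[:, :k]     # k nearest neighbors per point
    base = rng.integers(0, n, size=n_new)
    chosen = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                 # interpolation factor in [0, 1)
    return X_minority[base] + gap * (X_minority[chosen] - X_minority[base])

rng = np.random.default_rng(0)
minority = rng.normal(loc=[2.0, 2.0], scale=0.3, size=(12, 2))   # synthetic minority class
synthetic = smote(minority, n_new=30, k=5, rng=rng)
print(synthetic.shape)   # (30, 2)
```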

Master Advanced Regularization Techniques

To keep your model generalizable:

- Use Batch Normalization to stabilize training and speed up convergence.

- Implement DropConnect (a generalization of dropout) for more robust neural networks.

- Add noise to inputs or labels during training to improve resistance to real-world variability.
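Of the techniques above, batch normalization is the most mechanical, so here is a NumPy sketch of its forward pass. Training mode normalizes with batch statistics and updates running averages. Inference mode uses those running averages. The momentum, epsilon, and input scale are illustrative assumptions, and frameworks differ in details such as biased versus unbiased variance.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, running_mean, running_var, momentum=0.1, eps=1e-5):
    """Training mode: normalize each feature with the batch mean and variance,
    apply a learnable scale (gamma) and shift (beta), and update the running statistics."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    running_mean = (1 - momentum) * running_mean + momentum * mean
    running_var = (1 - momentum) * running_var + momentum * var
    return gamma * x_hat + beta, running_mean, running_var

def batch_norm_infer(x, gamma, beta, running_mean, running_var, eps=1e-5):
    """Inference mode: use the running statistics instead of the current batch's."""
    return gamma * (x - running_mean) / np.sqrt(running_var + eps) + beta

rng = np.random.default_rng(0)
x = rng.normal(loc=10.0, scale=4.0, size=(64, 3))   # badly scaled activations
out, rm, rv = batch_norm_train(x, np.ones(3), np.zeros(3), np.zeros(3), np.ones(3))
print(out.mean(axis=0).round(6), out.std(axis=0).round(3))   # per-feature mean ~0, std ~1
```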

Experiment with Cutting-Edge Optimizers

Sticking to classic optimizers like SGD or Adam is fine, but experimenting with newer methods can yield breakthroughs:

- RAdam (Rectified Adam): Addresses instability issues in early training.

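- AdaBelief: Scales each step by how far the observed gradient deviates from its running average (the “belief”). Its authors report results that match or beat Adam on several benchmarks.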

- Lookahead Optimizer: Enhances existing methods by reducing variance in weight updates.

Pro Tip: Start with Adam for quick iterations, then test alternatives for your final model.
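Since Adam is the baseline the alternatives above build on, here is a minimal NumPy sketch of its standard update: running first and second moments of the gradient with bias correction. The ill-conditioned quadratic and the learning rate are illustrative assumptions. PyTorch ships `torch.optim.Adam` and `torch.optim.RAdam` for real use.

```python
import numpy as np

class Adam:
    """Minimal Adam optimizer for a single parameter array."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, param, grad):
        if self.m is None:
            self.m, self.v = np.zeros_like(param), np.zeros_like(param)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad         # running mean of gradients
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2    # running mean of squared gradients
        m_hat = self.m / (1 - self.beta1 ** self.t)                     # bias correction for zero init
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Ill-conditioned quadratic f(p) = 0.5 * (100 * p0^2 + p1^2); its gradient is scales * p.
scales = np.array([100.0, 1.0])

def f(p):
    return 0.5 * float(np.sum(scales * p ** 2))

p = np.array([1.0, 1.0])
f_initial = f(p)
opt = Adam(lr=0.05)
for _ in range(500):
    p = opt.step(p, scales * p)
f_final = f(p)
print(f"f: {f_initial:.2f} -> {f_final:.6f}")
```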

Monitor Training with Visualization Tools

Real-time visualization can prevent training pitfalls. Use tools like:

- TensorBoard: Monitor loss curves, activation maps, and gradients.

- Weights & Biases: Track experiments and hyperparameters, and share results with collaborators.

Insider Insight: Visualize embedding spaces to ensure your model is learning meaningful representations.
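Visualizing embeddings usually starts by projecting them to two dimensions. This sketch uses PCA via NumPy's SVD on hypothetical 64-dimensional embeddings from two classes. The resulting coordinates can be scatter-plotted with Matplotlib. TensorBoard's Embedding Projector offers PCA and t-SNE interactively.

```python
import numpy as np

def pca_2d(embeddings):
    """Project embeddings onto their top two principal components.

    Returns the 2-D coordinates and the fraction of variance each component explains.
    """
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are principal directions, ordered by singular value (explained variance).
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return centered @ vt[:2].T, explained[:2]

rng = np.random.default_rng(0)
# Hypothetical embeddings: two classes whose centers differ.
class_a = rng.normal(0.0, 1.0, size=(100, 64))
class_b = rng.normal(0.0, 1.0, size=(100, 64)) + 3.0
coords, explained = pca_2d(np.vstack([class_a, class_b]))
print(coords.shape, explained.round(3))
```

Well-separated clusters along the first component suggest the embedding space encodes the class distinction. Heavily mixed clusters are a prompt to investigate.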

Utilize Model Ensembles

Ensemble techniques can combine multiple models for better performance:

- Stacking: Train a meta-model on the predictions of base models.

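- Bagging (Bootstrap Aggregating): Train several models on bootstrap samples (random subsets drawn with replacement) and aggregate their predictions.

- Blending: Combine model outputs with a weighted average, typically choosing the weights on a held-out validation set.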

Tip: Ensembles can be computationally expensive—use them wisely for critical tasks.
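Bagging and weighted averaging are simple to sketch in NumPy. This example assumes least-squares linear models on synthetic data to stay short. Bagging helps most with high-variance base models such as decision trees, so the error reduction here is small. The 0.7/0.3 blend weights are arbitrary placeholders, not tuned values.

```python
import numpy as np

def fit_linear(X, y):
    """Ordinary least squares with a bias column; returns the coefficient vector."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    coef, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    return coef

def predict_linear(coef, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ coef

def bagging(X, y, n_models, rng):
    """Train each base model on a bootstrap sample: rows drawn with replacement."""
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))
        models.append(fit_linear(X[idx], y[idx]))
    return models

def blend(predictions, weights):
    """Weighted average of model outputs; np.average normalizes the weights."""
    return np.average(np.asarray(predictions), axis=0, weights=weights)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = X @ np.array([1.5, -2.0]) + 0.5 + 0.3 * rng.normal(size=200)   # synthetic target

models = bagging(X, y, n_models=10, rng=rng)
bagged = np.mean([predict_linear(m, X) for m in models], axis=0)     # bagging: plain average
blended = blend([bagged, predict_linear(fit_linear(X, y), X)], weights=[0.7, 0.3])
print(f"bagged MSE {np.mean((bagged - y) ** 2):.4f}, blended MSE {np.mean((blended - y) ** 2):.4f}")
```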

Incorporate Explainability from the Start

Explainable AI (XAI) isn’t just a buzzword—it builds trust and ensures compliance:

- Use SHAP (SHapley Additive exPlanations) to interpret predictions.

- For computer vision, apply techniques like Grad-CAM to visualize what your model “sees.”

Pro Tip: Debug your model using explanations. If your model relies on the wrong features, fix it early.
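SHAP builds on Shapley values from game theory. For a handful of features, they can be computed exactly by enumerating every feature coalition. The sketch below uses one common convention: features outside a coalition are replaced by baseline values. The model and inputs are hypothetical. The `shap` library approximates the same quantity efficiently for real models.

```python
import itertools
import math
import numpy as np

def exact_shapley(f, x, baseline):
    """Exact Shapley values for one prediction by enumerating all feature coalitions.

    Features outside a coalition take their baseline values. Cost grows as 2^n,
    so this only suits a few features.
    """
    n = len(x)
    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                without_idx = np.array(subset, dtype=int)
                with_idx = np.array(subset + (i,), dtype=int)
                with_i, without_i = baseline.copy(), baseline.copy()
                with_i[with_idx] = x[with_idx]
                without_i[without_idx] = x[without_idx]
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi

# Hypothetical model with an interaction between features 0 and 1.
def model(v):
    return 2.0 * v[0] + 1.0 * v[1] + 3.0 * v[0] * v[1] - 0.5 * v[2]

x = np.array([1.0, 2.0, 4.0])
baseline = np.zeros(3)
phi = exact_shapley(model, x, baseline)
print(phi, phi.sum(), model(x) - model(baseline))   # contributions sum to the prediction gap
```

Here the interaction term's contribution (6.0) is split equally between features 0 and 1. A feature with a large attribution that should be irrelevant is exactly the kind of red flag the tip above describes.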

Build Robustness with Adversarial Training

Real-world data often includes noise and adversarial elements. Prepare your model by:

- Training with adversarial examples to improve resilience.

- Regularly testing the model against edge cases and adversarial attacks.

Insider Insight: Incorporate differential privacy techniques to add noise and protect sensitive data.
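Adversarial examples can be generated with the Fast Gradient Sign Method (FGSM), which nudges each input in the direction that most increases the loss. This sketch applies FGSM to a logistic-regression model on synthetic data, where the input gradient has a closed form. The `adv_eps` option in `train` shows how such examples can be mixed into training. On a linear toy like this, do not expect the robustness gains seen with deep networks.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(X, y, w, b, eps):
    """Fast Gradient Sign Method for logistic regression.

    The gradient of the cross-entropy loss with respect to the input is (p - y) * w;
    a step of size eps along its sign increases the loss within an L-infinity budget of eps.
    """
    p = sigmoid(X @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)

def train(X, y, epochs=200, lr=0.5, adv_eps=0.0):
    """Full-batch gradient descent; with adv_eps > 0, each step also trains on FGSM examples."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        Xt, yt = X, y
        if adv_eps > 0:
            Xt = np.vstack([X, fgsm(X, y, w, b, adv_eps)])
            yt = np.concatenate([y, y])
        p = sigmoid(Xt @ w + b)
        w -= lr * Xt.T @ (p - yt) / len(yt)
        b -= lr * float(np.mean(p - yt))
    return w, b

def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == y))

rng = np.random.default_rng(0)
X = rng.normal(size=(400, 2))
y = (X[:, 0] - X[:, 1] > 0).astype(float)       # synthetic labels
w, b = train(X, y)
clean_acc = accuracy(X, y, w, b)
attacked_acc = accuracy(fgsm(X, y, w, b, eps=0.3), y, w, b)
w_adv, b_adv = train(X, y, adv_eps=0.3)         # adversarial training variant
print(f"clean {clean_acc:.3f}, under FGSM attack {attacked_acc:.3f}")
```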

Optimize Inference Time for Deployment

Training isn’t the endgame—models must perform well in production.

- Quantization: Reduce numerical precision (e.g., from 32-bit floats to 8-bit integers) to shrink the model and speed up inference.

- Pruning: Remove less critical neurons or layers.

- Distillation: Train a smaller model to mimic a larger one’s predictions.

Tip: Use tools like TensorRT for deployment optimizations on NVIDIA hardware.
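For distillation, the student is usually trained on a blend of two losses. One matches the teacher's temperature-softened output distribution. The other is ordinary cross-entropy on the true labels. This NumPy sketch follows that common formulation, including the T² scaling of the soft-target term. The logits, temperature, and `alpha` weighting are illustrative assumptions.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)          # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher || student) on softened outputs + (1 - alpha) * cross-entropy.

    The T^2 factor keeps the soft-target gradients comparable in size as T changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    kl = np.mean(np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student_soft)), axis=-1))
    p_student = softmax(student_logits)
    hard_ce = -np.mean(np.log(p_student[np.arange(len(labels)), labels]))
    return float(alpha * temperature ** 2 * kl + (1 - alpha) * hard_ce)

teacher = np.array([[6.0, 2.0, -1.0], [0.5, 4.0, 0.0]])   # hypothetical teacher logits
labels = np.array([0, 1])
matched = distillation_loss(teacher, teacher, labels)               # student copies teacher: KL = 0
uninformed = distillation_loss(np.zeros_like(teacher), teacher, labels)
print(matched, uninformed)
```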

Document and Automate Your Workflow

As an expert, scaling your process is essential:

- Use version control for data (e.g., DVC) and code (Git).

- Automate repetitive tasks with pipelines using Airflow or MLflow.

Pro Insight: Document lessons from failed experiments—they often lead to breakthroughs later.

Future-Proofing Your AI Models

Staying Updated with Advances

AI evolves rapidly. To remain competitive:

- Follow reputable sources like arXiv for the latest research papers.

- Stay engaged with communities like KDnuggets or Towards Data Science.

Embracing Emerging Technologies

New technologies reshape AI landscapes:

- Federated Learning: Train models across decentralized data sources for privacy preservation.

- Self-Supervised Learning: Reduce dependency on labeled data.

- Explainable AI (XAI): Build models that clearly explain their decisions, fostering trust.

Planning for Model Lifecycles

AI models need maintenance over time. Build a lifecycle management strategy:

- Regularly evaluate performance metrics.

- Schedule retraining to adapt to new data patterns.

- Decommission models responsibly when no longer relevant.

Wrapping Up

Mastering AI model training involves more than just coding. It requires a deep understanding of data, architecture, and deployment strategies. As an expert, your ability to troubleshoot challenges, optimize performance, and adapt to advancements will set you apart.

Leverage this roadmap to refine your approach and achieve cutting-edge results. AI’s potential is boundless—what will you create next?

FAQs

How do I debug a model with poor performance?

Poor performance can stem from several factors. Debug by:

- Checking the data pipeline for errors like mislabeling or skewed distributions.

- Visualizing the training process using TensorBoard to spot issues like vanishing gradients.

- Evaluating activation maps to ensure the model focuses on meaningful features.

For example, in image recognition, if the model fixates on irrelevant background details, adjust the architecture or data preprocessing.

What are the best tools for tracking AI experiments?

Tracking experiments is crucial for reproducibility and optimization. Tools include:

- Weights & Biases (W&B): Logs hyperparameters, training metrics, and visualizations.

- MLflow: Manages experiment tracking, model versioning, and deployment pipelines.

- Comet.ml: Offers real-time tracking and team collaboration features.

Example: Use MLflow to compare models trained with different learning rates and select the best-performing one for deployment.

Can I use transfer learning for non-image or NLP tasks?

Yes! Transfer learning isn’t limited to images or text. It can apply to tasks like:

- Audio Analysis: Using models pre-trained on speech datasets to classify sound patterns.

- Time Series Prediction: Fine-tuning models trained on financial or weather data for your specific needs.

Example: Adapting a pre-trained time-series model to forecast energy consumption in a new region.

How often should I retrain my AI model?

Retraining depends on how quickly your data changes:

- Static environments: Retraining may not be necessary unless performance degrades.

- Dynamic environments: Update frequently to adapt to data drift.

For instance, a spam classifier might need weekly updates to catch new patterns in malicious emails.

What’s the difference between validation and testing datasets?

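Validation datasets are used during development to tune hyperparameters, select models, and detect overfitting (for example, to decide when to stop training). Testing datasets, on the other hand, evaluate final model performance on unseen data and should be used only once, after all tuning is done.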

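Example: If you’re training a model on 10,000 samples, split it as:

- Training: 70% (7,000 samples)

- Validation: 20% (2,000 samples)

- Testing: 10% (1,000 samples)

Because the test set plays no part in training or tuning, its metrics give an unbiased estimate of performance on new data, provided that data resembles what the model will see in production.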

How can I ensure my model generalizes well to unseen data?

Generalization is the model’s ability to perform well on unseen data. To enhance it:

- Use cross-validation to assess performance across multiple data splits.

- Regularize with techniques like L2 regularization or dropout layers.

- Train on diverse, representative datasets to avoid overfitting to specific patterns.

For example, in speech recognition, training on accents from multiple regions ensures the model performs well globally.

Are there specific datasets recommended for benchmarking AI models?

Yes, standardized datasets help compare model performance. Examples include:

- ImageNet for image classification tasks.

- COCO for object detection and segmentation.

- SQuAD for natural language question-answering tasks.

- MNIST for handwriting recognition, though it’s considered basic.

For example, researchers test computer vision models on ImageNet to validate their effectiveness across various image categories.

What’s the role of batch size in training?

Batch size determines how many samples are processed before updating model weights.

- Small batches: Provide more frequent updates but can introduce noise.

- Large batches: Offer smoother gradient estimates but require more memory and can generalize less well.

Tip: Start with a medium batch size (e.g., 32 or 64). Use smaller sizes for memory-limited environments or larger ones if stability is critical.

How do I measure the computational cost of a model?

Evaluate computational cost to balance accuracy and efficiency:

- FLOPs (Floating-Point Operations): Counts the arithmetic operations needed per inference.

- Latency: Time taken for a model to make predictions.

- Memory Usage: Tracks resource consumption during training and inference.

For example, reducing the number of layers in a CNN lowers latency and memory usage, ideal for edge devices.
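Parameter counts and FLOPs for dense layers can be estimated by hand, and latency can be measured with a wall-clock timer. The sketch below counts a multiply and an add per weight plus one add per bias. Profilers differ on conventions, and some report multiply-accumulates (MACs), roughly half the FLOPs. The layer sizes are hypothetical, and the timed function is a stand-in for a model call.

```python
import statistics
import time

def dense_flops(n_in, n_out):
    """FLOPs for one dense layer on one input: multiply + add per weight, plus bias adds."""
    return 2 * n_in * n_out + n_out

def mlp_stats(layer_sizes):
    """(parameter count, FLOPs per inference) for a stack of dense layers."""
    pairs = list(zip(layer_sizes, layer_sizes[1:]))
    params = sum(a * b + b for a, b in pairs)
    flops = sum(dense_flops(a, b) for a, b in pairs)
    return params, flops

def median_latency_ms(fn, x, repeats=50):
    """Median wall-clock latency of fn(x) in milliseconds, after one warm-up call."""
    fn(x)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)

# Hypothetical MLPs: removing one hidden layer cuts both parameters and FLOPs.
print(mlp_stats([784, 256, 256, 10]))
print(mlp_stats([784, 256, 10]))
print(f"{median_latency_ms(sum, list(range(10_000))):.3f} ms")   # stand-in workload
```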

How important is feature engineering in deep learning?

Deep learning models can learn features automatically, but feature engineering still adds value in some cases:

- For structured data, manual feature creation (e.g., combining existing fields) often improves performance.

- In unsupervised learning, feature scaling or dimensionality reduction aids clustering or visualization.

Example: In credit risk analysis, creating ratios like income-to-debt enhances model predictions.

What’s the difference between precision and recall?

Precision measures how many of your positive predictions were correct, while recall measures how many actual positives were identified.

- High precision: Few false positives.

- High recall: Few false negatives.

For example, in medical diagnosis, recall is crucial to avoid missing sick patients, even if some healthy individuals are flagged incorrectly.

Can I combine multiple loss functions in training?

Yes, combining loss functions can optimize models for multiple objectives. Examples include:

- Weighting classification loss with regularization loss to balance accuracy and simplicity.

- Merging reconstruction loss and adversarial loss in GAN training for better generative quality.

For instance, in a style-transfer model, combining content loss and style loss ensures images retain structure while adopting the target style.

How can I identify data drift in production?

Data drift occurs when the distribution of input data changes over time. Detect it by:

- Monitoring feature distributions against the training data.

- Comparing model predictions over time to historical benchmarks.

- Using tools like Evidently AI or Alibi Detect for automated drift detection.

Example: In a recommendation system, if user preferences shift seasonally, periodic retraining might be required.

How do I choose the right activation function?

Activation functions affect a model’s learning ability. Consider:

- ReLU (Rectified Linear Unit): Default for most deep networks due to simplicity and effectiveness.

- Sigmoid or Tanh: Sigmoid suits binary outputs. Both saturate for large inputs and are prone to vanishing gradients in deep hidden layers.

- Softmax: Ideal for multi-class classification tasks.

For example, in a binary classifier, using a sigmoid activation ensures outputs remain between 0 and 1, interpretable as probabilities.
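Comparing the derivatives of these activation functions shows why ReLU is the usual default in hidden layers. The sigmoid derivative never exceeds 0.25 and both sigmoid and tanh flatten out for large inputs, while ReLU passes gradients through unchanged for positive inputs. A numerically stable softmax is included for the multi-class case. The input values are arbitrary examples.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # peaks at 0.25 when z = 0

def tanh_grad(z):
    return 1.0 - np.tanh(z) ** 2    # peaks at 1.0 when z = 0, saturates for large |z|

def relu_grad(z):
    return (z > 0).astype(float)    # 1 for positive inputs, so the signal is not shrunk

def softmax(z):
    e = np.exp(z - np.max(z))       # shift by the max for numerical stability
    return e / e.sum()

z = np.array([0.0, 2.0, 5.0])
print("sigmoid'(z):", sigmoid_grad(z).round(4))
print("tanh'(z):   ", tanh_grad(z).round(4))
print("relu'(z):   ", relu_grad(z))
# Even at sigmoid's peak, 10 stacked sigmoid layers scale the gradient by 0.25 ** 10
# (ignoring the weight matrices).
print("0.25 ** 10 =", 0.25 ** 10)
print("softmax:", softmax(np.array([2.0, 1.0, 0.1])).round(3))
```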

How can I scale my models for real-time applications?

Scaling for real-time use requires balancing speed and accuracy:

- Compress models with pruning or quantization.

- Optimize inference with tools like TensorRT or ONNX Runtime.

- Use caching for frequently accessed predictions.

For instance, in a chatbot, caching answers to common queries speeds up response times without re-processing every request.
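Prediction caching can be sketched with Python's built-in `functools.lru_cache`. In this sketch, `expensive_model` is a hypothetical stand-in for a slow model call. Normalizing the query first lets trivially different phrasings share one cache entry. Caching like this is only safe when the model is deterministic for a given input. Call `cached_predict.cache_clear()` after deploying a retrained model.

```python
from functools import lru_cache

call_count = 0

def expensive_model(query: str) -> str:
    """Hypothetical stand-in for a slow model call."""
    global call_count
    call_count += 1
    return f"answer to: {query}"

@lru_cache(maxsize=10_000)
def cached_predict(normalized_query: str) -> str:
    return expensive_model(normalized_query)

def predict(query: str) -> str:
    # Lowercase and collapse whitespace so near-identical queries hit the same entry.
    return cached_predict(" ".join(query.lower().split()))

predict("What are your opening hours?")
predict("what are your  opening hours?")
print(call_count, cached_predict.cache_info().hits)   # 1 1
```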

Should I preprocess data differently for time-series tasks?

Yes, time-series tasks have unique preprocessing needs:

- Normalize time scales: Ensure consistent intervals between data points.

- Create lag features: Add past values as predictors for the current target.

- Handle trend and seasonality: Detrend or difference the series, and add Fourier terms (sine and cosine features) to capture periodicity.

For example, in stock market prediction, calculating moving averages and adding them as features can improve forecasting accuracy.
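Lag features, trailing moving averages, and Fourier (seasonal) terms can be built with a short NumPy function. The lags, window, weekly period, and synthetic series are assumptions for illustration. Every feature for time t uses only values before t, so the model never sees the value it is asked to predict.

```python
import numpy as np

def make_features(series, lags=(1, 2, 7), window=7, period=7):
    """Build lag, trailing moving-average, and Fourier features for each time step t.

    Rows without enough history are dropped; all features use only values before t.
    """
    series = np.asarray(series, dtype=float)
    start = max(max(lags), window)
    rows, targets = [], []
    for t in range(start, len(series)):
        lag_values = [series[t - k] for k in lags]
        moving_avg = series[t - window:t].mean()       # mean of the previous `window` values
        angle = 2 * np.pi * t / period                 # position within the seasonal cycle
        rows.append(lag_values + [moving_avg, np.sin(angle), np.cos(angle)])
        targets.append(series[t])
    return np.array(rows), np.array(targets)

# Hypothetical daily series with an upward trend and a weekly cycle.
days = np.arange(60)
series = 10 + 0.1 * days + 2 * np.sin(2 * np.pi * days / 7)
X, y = make_features(series)
print(X.shape, y.shape)    # (53, 6) (53,)
```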

Resources

Popular Frameworks and Libraries

- TensorFlow: Robust for production-grade deep learning models.

- PyTorch: Preferred for research and quick prototyping.

- Keras: High-level API for easy neural network development.

- Hugging Face Transformers: Simplifies working with pre-trained language models.

- Scikit-learn: For classical machine learning and preprocessing.

Pro Tip: Combine TensorFlow for deployment and PyTorch for experimentation to get the best of both worlds.

Datasets for Training and Benchmarking

General Datasets

- Kaggle: A massive repository of datasets across domains.

- UCI Machine Learning Repository: Classic datasets for ML tasks.

- Google Dataset Search: Aggregates datasets from multiple sources.

Domain-Specific Datasets

- ImageNet: Gold standard for image classification.

- SQuAD: Benchmark for natural language understanding.

- COCO: Dataset for image captioning, object detection, and segmentation.

- OpenML: Collaborative platform for machine learning datasets.

Pro Tip: Use synthetic data generation tools like DataGen for rare scenarios or when privacy is a concern.

Experiment Tracking and Collaboration

- Weights & Biases (W&B): Logs experiments, visualizes metrics, and enables team collaboration.

- MLflow: Tracks experiments, manages deployments, and version-controls models.

- Comet.ml: Offers real-time monitoring and experiment comparison.

Model Deployment Tools

- TensorFlow Serving: For serving TensorFlow models in production.

- ONNX Runtime: Optimizes and runs models across multiple platforms.

- Docker: Streamlines deployment with containerization.

Pro Tip: For edge AI, try TensorFlow Lite or PyTorch Mobile for lightweight deployment.

Visualization Tools

- TensorBoard: Built into TensorFlow for visualizing metrics, graphs, and embeddings.

- Plotly: Interactive visualizations for metrics and results.

- Seaborn and Matplotlib: For data analysis and exploratory visualization.

Pro Tip: Use SHAP for explainability and insights into model predictions.