The cybersecurity world is on high alert as a new type of computer virus, ominously named the “synthetic cancer” virus, makes its presence felt. This malware represents a terrifying fusion of artificial intelligence and malicious code, capable of evolving, adapting, and spreading in ways that were previously unimaginable. Let’s explore the intricacies of this AI-driven threat and understand why it’s causing such widespread concern.

Understanding the Name: Why “Synthetic Cancer”?

The term “synthetic cancer” is not just a dramatic label—it encapsulates the virus’s behavior and potential impact. Much like how cancer cells mutate and spread uncontrollably, this virus evolves continuously, altering its code to avoid detection and elimination. The “synthetic” aspect refers to the AI technology it leverages, specifically ChatGPT, to rewrite its code and adapt to new environments, ensuring its survival in the digital ecosystem.

source: Synthetic Cancer – Augmenting Worms with LLMs

Evolving Strains: The Mechanics Behind the Mutation

At the heart of the synthetic cancer virus is its ability to rewrite its own code, a feature that sets it apart from traditional malware. But how exactly does this work?

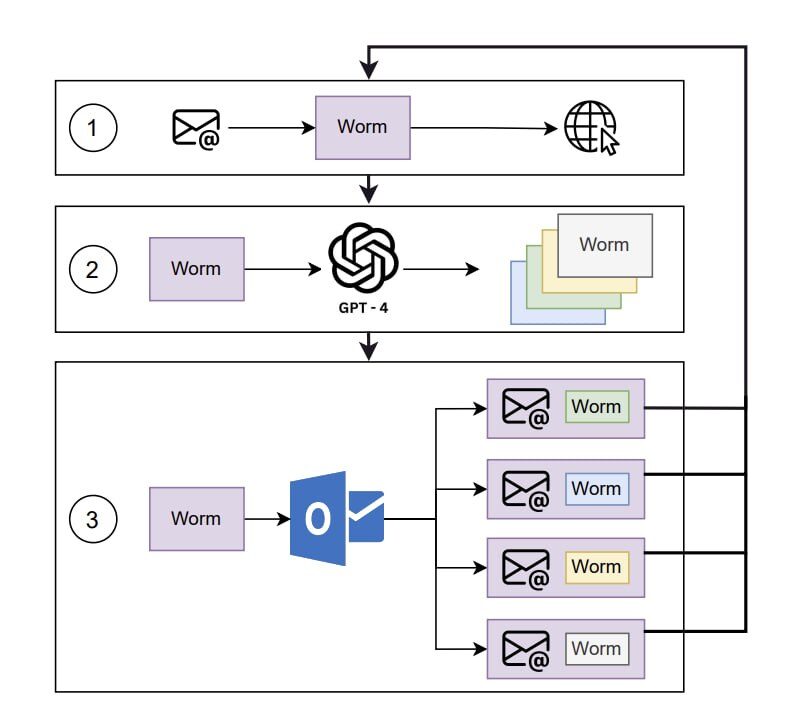

- Self-Modification: The virus uses ChatGPT to dynamically alter its code structure. Typical malware can often be detected by matching known byte patterns, but the synthetic cancer virus can change its signature continuously. It might rename variables, modify functions, or rearrange its logic so that it appears to be a completely different program each time antivirus software scans it.

- AI-Driven Logic: The use of AI allows the virus to maintain its functionality despite these changes. ChatGPT is not just a tool for generating human-like text; it’s also a powerful engine for logical reasoning and problem-solving. The virus can, therefore, retain its malicious intent—whether that’s data theft, system corruption, or spreading further—while presenting an entirely new face to security systems.

- Survival Tactics: This self-modifying ability gives the virus a chameleon-like quality that is especially effective against scanners relying on fixed signatures. It is a constantly shifting target, which makes it much harder for cybersecurity tools to pin down and neutralize.
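From the defender's point of view, the weakness being exploited is easy to see with two harmless snippets. The sketch below is an illustration only, not taken from the cited paper: `VARIANT_A`, `VARIANT_B`, `signature`, and `behaviour` are hypothetical names, and the "program" is a trivial summing function. It shows that a byte-level hash changes completely after a cosmetic rewrite even though the observable behaviour is identical, which is why behaviour-based detection matters.

```python
import hashlib

# Two harmless, functionally equivalent snippets (hypothetical examples).
VARIANT_A = "def total(values):\n    return sum(values)\n"
VARIANT_B = (
    "def total(items):\n"
    "    acc = 0\n"
    "    for item in items:\n"
    "        acc += item\n"
    "    return acc\n"
)


def signature(source: str) -> str:
    """Byte-level fingerprint, similar in spirit to a static signature."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def behaviour(source: str, sample: list[int]) -> int:
    """Run the snippet and record what it actually does with a sample input."""
    namespace: dict = {}
    exec(source, namespace)  # safe here: the source strings are fixed above
    return namespace["total"](sample)


sample_input = [1, 2, 3]
signatures_match = signature(VARIANT_A) == signature(VARIANT_B)
behaviour_matches = behaviour(VARIANT_A, sample_input) == behaviour(VARIANT_B, sample_input)

print("same signature:", signatures_match)
print("same behaviour:", behaviour_matches)
```

A scanner that only compares fingerprints treats the two variants as unrelated, while one that observes behaviour sees them as the same program.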

Targeted Infection: The New Age of Social Engineering

Beyond its ability to evolve, the synthetic cancer virus excels in social engineering—a technique that manipulates individuals into compromising their own security. But what sets this virus apart in its approach?

- AI-Powered Phishing: ChatGPT enables the virus to craft highly personalized phishing emails. These emails are not generic scams; they are tailored to the recipient’s interests, habits, and even recent activities. By analyzing data from social media profiles, previous email correspondence, and other sources, the virus can create messages that are very convincing.

- Contextual Relevance: These phishing attempts often come in the form of emails that seem contextually relevant. For example, you might receive an email that appears to be from your bank, referencing a recent transaction that you actually made. The attachment or link included in this email, however, is the gateway for the virus to enter your system.

- Widespread Impact: Once inside a system, the virus can spread through networks, targeting other systems with similarly crafted emails. It’s like a cyber infection that moves from one victim to the next, each time adapting its approach based on the target’s behavior and system environment.
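Because the text of such an email can be made arbitrarily convincing, one check that still helps is structural: does the link go where it claims to go? The following is a minimal sketch of that single heuristic, assuming the visible link text and the real target have already been extracted from the message. The function names and the `examplebank.com` domains are hypothetical, and a real mail filter would combine many more signals.

```python
from urllib.parse import urlparse


def hostname_of(text: str) -> str:
    """Return the lowercase hostname in `text`, or "" if it is not URL-like."""
    candidate = text.strip()
    if " " in candidate or "." not in candidate:
        return ""  # plain wording such as "Click here" has no hostname
    if "://" not in candidate:
        candidate = "https://" + candidate
    return (urlparse(candidate).hostname or "").lower()


def link_looks_deceptive(display_text: str, href: str) -> bool:
    """Flag links whose visible text names one host but whose target is another."""
    shown = hostname_of(display_text)
    actual = hostname_of(href)
    if not shown or not actual:
        return False  # nothing to compare; other checks must decide
    if shown.startswith("www."):
        shown = shown[4:]
    # Accept the same host or a genuine subdomain of the shown host.
    return actual != shown and not actual.endswith("." + shown)


suspicious = link_looks_deceptive(
    "www.examplebank.com",
    "https://examplebank.com.account-check.example/login",
)
legitimate = link_looks_deceptive(
    "examplebank.com",
    "https://secure.examplebank.com/statement",
)
print(suspicious, legitimate)
```

The check ignores how persuasive the message is and looks only at the mismatch between what is displayed and where the link leads, which personalization does not change.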

The Dual-Use Dilemma of AI: A Double-Edged Sword

The emergence of the synthetic cancer virus has reignited the debate over the dual-use nature of AI technologies. Tools like ChatGPT were designed to enhance human productivity, generate creative content, and assist in problem-solving. Yet, these same tools can be repurposed to create malicious code that is more intelligent, adaptable, and dangerous.

- Ethical Considerations: The ability of AI to be used for both beneficial and harmful purposes raises significant ethical questions. How do we ensure that AI technologies are not weaponized? Should there be restrictions on the development and deployment of certain AI capabilities?

- Regulatory Challenges: Governments and regulatory bodies are struggling to keep up with the rapid pace of AI development. The synthetic cancer virus exemplifies the need for robust regulations that can address the potential misuse of AI, without stifling innovation.

- The Role of AI in Cyber Defense: Interestingly, the same AI that powers this virus can also be a tool for defense. AI-driven security systems are already being developed to detect patterns of malicious behavior, predict potential threats, and respond in real time. However, as AI evolves, so too will the tactics of cyber attackers.

The Growing Urgency for Robust Cybersecurity Measures

The synthetic cancer virus is a wake-up call for the cybersecurity community. Its ability to evade detection, coupled with its use of advanced social engineering techniques, makes it one of the most significant threats of our time. But what can be done to counter this evolving menace?

- AI-Augmented Cybersecurity: The first line of defense must be the integration of AI into cybersecurity strategies. Just as AI is used to create more sophisticated threats, it must also be used to build more sophisticated defenses. This includes AI-driven threat detection systems that can adapt and respond to new types of malware in real time.

- Continuous Monitoring and Response: Traditional cybersecurity measures often rely on periodic updates and scans. However, the synthetic cancer virus’s ability to change rapidly means that continuous monitoring is essential. AI can play a key role here, providing round-the-clock monitoring and near-real-time responses to suspicious activity.

- Education and Awareness: Given the virus’s reliance on social engineering, educating individuals and organizations about the dangers of phishing and other scam tactics is crucial. Cybersecurity training programs should emphasize the importance of verifying email sources, avoiding suspicious links, and keeping systems updated.
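To make the idea of continuous monitoring concrete, here is a small sketch of a rolling baseline check. It is a simple statistical stand-in rather than an AI system, and everything in it is an assumption chosen for illustration: the `RateMonitor` class, the window size, the threshold, and the choice of "outbound emails per minute" as the monitored signal.

```python
from collections import deque
from statistics import mean, pstdev


class RateMonitor:
    """Flags counts that rise far above a rolling baseline (hypothetical helper)."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        self.history: deque[int] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, count: int) -> bool:
        """Record one interval's count; return True if it looks anomalous."""
        alert = False
        if len(self.history) >= 5:  # wait for a minimal baseline first
            baseline = mean(self.history)
            # Floor the spread so a very quiet baseline does not over-alert.
            spread = max(pstdev(self.history), 1.0)
            alert = (count - baseline) / spread > self.threshold
        if not alert:
            # Keep anomalous intervals out of the baseline.
            self.history.append(count)
        return alert


monitor = RateMonitor()
normal_minutes = [2, 3, 2, 4, 3, 2, 3]  # e.g. outbound emails per minute
normal_alerts = [monitor.observe(n) for n in normal_minutes]
burst_alert = monitor.observe(40)  # sudden mass mailing from one host

print(normal_alerts, burst_alert)
```

The point of the design is that the check runs on every interval instead of waiting for a scheduled scan, and that it watches what a machine does rather than what its files look like.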

Looking Ahead: The Future of AI and Cybersecurity

The synthetic cancer virus is just the beginning of what could be a new era of AI-enhanced cyber threats. As AI continues to advance, the tools available to both cybercriminals and cybersecurity professionals will become more sophisticated. The key to staying ahead in this digital arms race will be innovation, collaboration, and a deep understanding of the capabilities—and limitations—of AI.

- Innovation in AI Defense: Going forward, cybersecurity firms and researchers will need to invest heavily in AI research that focuses on defense mechanisms. This might include predictive analytics, behavioral modeling, and even AI-driven countermeasures that can preemptively shut down threats before they fully materialize.

- Global Collaboration: Cyber threats are not confined by borders. The global nature of the internet means that international cooperation will be essential in combating threats like the synthetic cancer virus. Governments, private sector companies, and international organizations must work together to create a unified response to the growing threat of AI-enhanced malware.

- Ethical AI Development: Finally, the development of AI must be guided by ethical considerations. While the potential for AI to be used in harmful ways cannot be entirely eliminated, creating frameworks for responsible AI use can help mitigate the risks.

Conclusion: Navigating the AI-Powered Future

The synthetic cancer virus represents a significant shift in the landscape of cybersecurity threats. Its ability to evolve, adapt, and deceive using AI is a clear indication that the future of cyber warfare will be fought with tools of unprecedented sophistication. To navigate this future, the cybersecurity community must embrace the power of AI, not just to counter these threats, but to anticipate and neutralize them before they can cause irreparable damage.

In this AI-driven world, vigilance, innovation, and ethical responsibility will be the cornerstones of a secure digital future.
