Understanding AI Workflow Automation

AI workflow automation involves using artificial intelligence to streamline tasks within a process. It’s about letting machines take over repetitive tasks so teams can focus on strategy.

From data collection to decision-making, AI-driven systems handle complex processes with efficiency. But the magic happens when these workflows come together as part of an orchestrated pipeline.

Why is Workflow Orchestration Important?

Orchestration coordinates the tools in your AI pipeline. It decides when each task runs, passes each step's output to the next, and keeps the sequence on track when something goes wrong.

Think of it as the conductor of an orchestra. Without it, your system can fall out of sync, causing delays or missteps. With orchestration, end-to-end pipelines operate like a well-tuned machine.

Real-World Applications of Automation

AI workflow automation is used across industries such as healthcare, finance, and retail. Examples include:

- Predictive analytics to anticipate trends.

- Automating customer interactions with chatbots and virtual agents.

- Streamlining supply chain management for faster deliveries.

Each use case highlights the value of orchestration in uniting diverse systems.

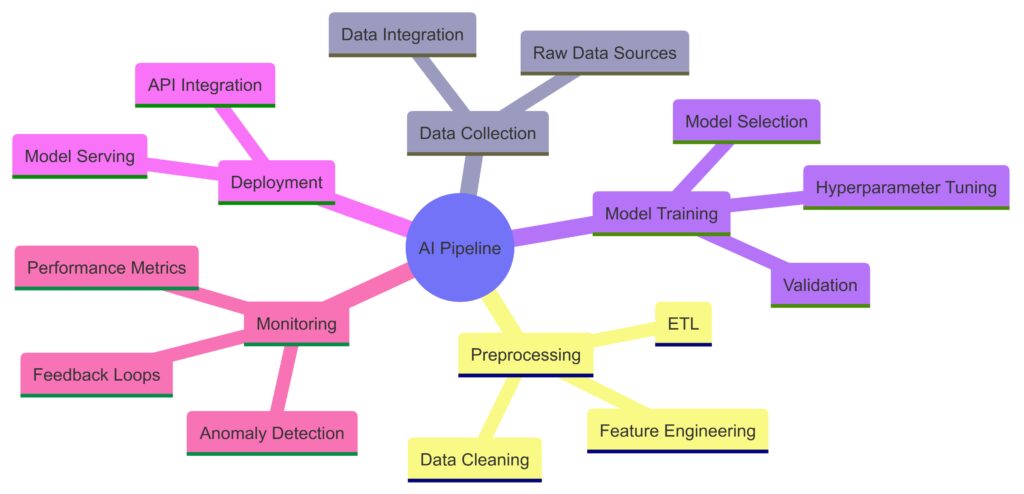

Components of an AI Pipeline

Data Collection and Preprocessing

At the heart of every AI pipeline is data. Systems collect, clean, and preprocess data to make it usable. Without proper preparation, your models won’t perform as expected.

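ETL (Extract, Transform, Load) frameworks play a significant role here: they pull data from source systems, reshape it, and write it to storage that models can use. Orchestration makes sure each stage starts only after its upstream stage has finished and produced output, so nothing runs on missing or stale data.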
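The ordering guarantee described above can be sketched in a few lines of plain Python. This is an illustration, not any ETL framework's API: the stage functions and sample rows are hypothetical, and Python's standard `graphlib` module (3.9+) stands in for an orchestrator's scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical stage functions; each receives the outputs of its upstream stages.
def extract(_inputs):
    # Stand-in for reading from a source system.
    return [{"id": 1, "amount": "10.5"}, {"id": 2, "amount": None}, {"id": 1, "amount": "10.5"}]

def transform(inputs):
    seen, clean = set(), []
    for row in inputs["extract"]:
        # Drop rows with missing values and rows with an already-seen id.
        if row["amount"] is None or row["id"] in seen:
            continue
        seen.add(row["id"])
        clean.append({"id": row["id"], "amount": float(row["amount"])})
    return clean

def load(inputs):
    # Stand-in for writing to a warehouse; returns the number of rows "loaded".
    return len(inputs["transform"])

STAGES = {"extract": extract, "transform": transform, "load": load}
# Each stage maps to the set of stages it depends on.
DEPENDENCIES = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

def run_pipeline(stages, dependencies):
    results = {}
    # static_order() yields a stage only after all of its dependencies.
    for name in TopologicalSorter(dependencies).static_order():
        upstream = {dep: results[dep] for dep in dependencies[name]}
        results[name] = stages[name](upstream)
    return results
```

With the sample rows, `run_pipeline(STAGES, DEPENDENCIES)` drops the duplicate and the row with a missing amount, so the `load` stage reports one row.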

Model Training and Deployment

Once the data is ready, it fuels model training. This stage involves creating and refining machine learning models.

Orchestration steps in again to schedule and monitor training jobs, ensuring smooth transitions into deployment phases.

Monitoring and Feedback Loops

After deployment, AI models need continuous monitoring to confirm they stay accurate as real-world data changes. Feedback loops supply fresh data to refine the system. Orchestration schedules and connects the jobs that aggregate logs, detect anomalies such as data drift, and retrain or redeploy the model.
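A minimal sketch of such a feedback loop, assuming a simple z-test on one numeric feature as the drift signal: the orchestrator would run `monitoring_step` on a schedule, and only trigger retraining when the recent data has shifted. The threshold of 3 standard errors and the `retrain` callback are illustrative assumptions; production systems often use richer drift statistics.

```python
from statistics import mean, stdev

def drift_detected(baseline, recent, threshold=3.0):
    """Return True when the mean of `recent` sits more than `threshold`
    standard errors away from the mean of `baseline` (a simple z-test)."""
    standard_error = stdev(baseline) / len(recent) ** 0.5
    gap = abs(mean(recent) - mean(baseline))
    if standard_error == 0:
        return gap > 0  # constant baseline: any shift counts as drift
    return gap / standard_error > threshold

def monitoring_step(baseline, recent, retrain):
    """Scheduled check: call the (hypothetical) retraining job only on drift."""
    if drift_detected(baseline, recent):
        return retrain()
    return "no action"
```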

Robust orchestration frameworks are what tie all of these components together.

The Role of Orchestration in Seamless AI Pipelines

Aligning Multiple Tools and Technologies

AI pipelines rely on various tools—each specializing in a specific task. Orchestration ensures that these tools work together in harmony.

It integrates platforms like:

- Cloud storage for data pipelines.

- Specialized libraries for AI training.

- Scalable compute resources.

Without orchestration, managing these diverse components can feel overwhelming.

Enhancing Scalability and Flexibility

Orchestration frameworks help businesses scale operations without a matching increase in manual work. For instance, as data volumes grow, pipelines can add capacity without significant manual intervention.

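Tools like Apache Airflow (which can distribute tasks across a pool of workers) and Kubernetes (which can add or remove replicas of a workload as load changes) make this scaling possible while keeping the system design flexible.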
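As a concrete example of automated scaling, the Kubernetes Horizontal Pod Autoscaler documents a proportional rule: desired replicas = ceil(current replicas * current metric / target metric). The function below is a simplified sketch of that rule. It leaves out parts of the real controller (stabilization windows, scaling policies, handling of unready pods); the min/max bounds are illustrative assumptions, and the 10% tolerance mirrors the controller's documented default.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified sketch of the HPA scaling rule:
    desired = ceil(current_replicas * current_metric / target_metric).
    Ratios within `tolerance` of 1.0 leave the replica count unchanged."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, as an autoscaler's min/max settings would.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas running at 90% CPU against a 60% target scale to ceil(4 * 1.5) = 6.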

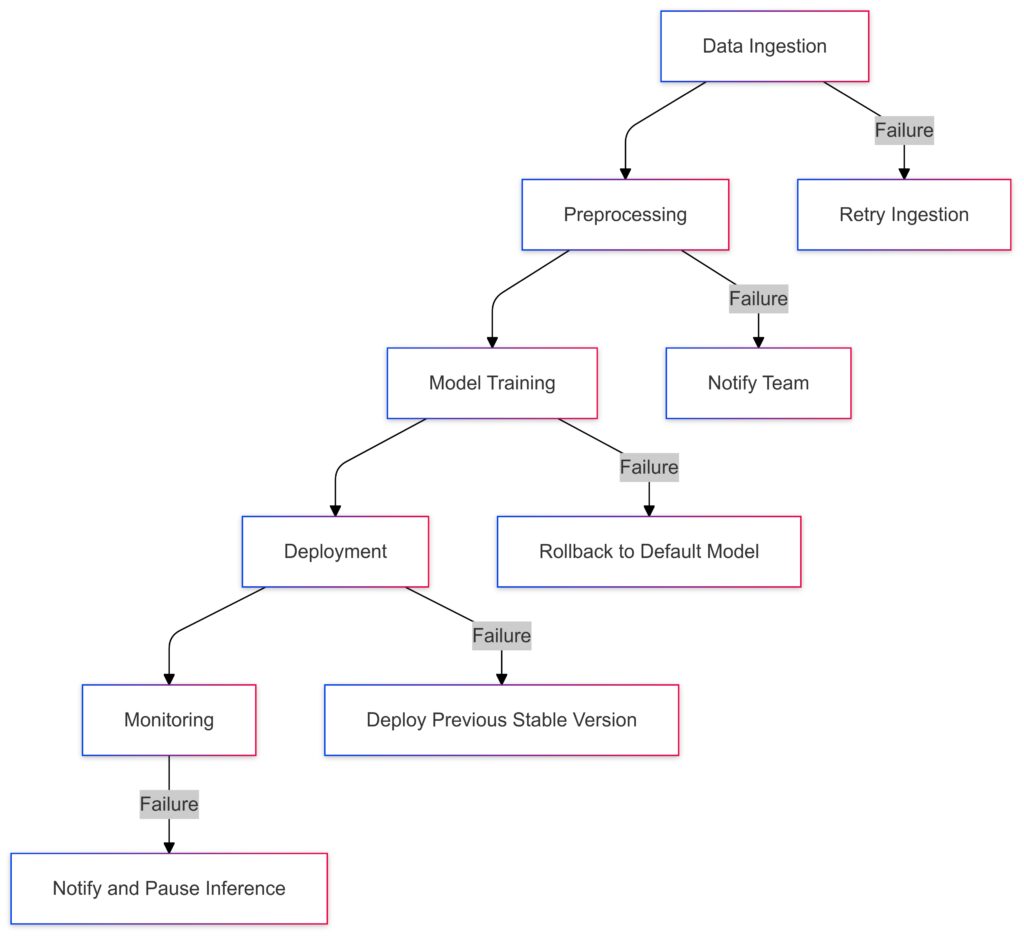

Handling Failures Gracefully

Failures happen, even in AI systems. Orchestration frameworks mitigate risks by retrying failed tasks, notifying teams, or switching to backup options.

This ensures that your pipeline remains resilient and operational under pressure.
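Orchestration frameworks usually expose retries, alerts, and fallbacks as configuration; the sketch below shows the underlying logic in plain Python. The function name, the exponential backoff schedule, and the `notify` and `fallback` callbacks are illustrative assumptions, not any particular framework's API.

```python
import time

def run_with_retries(task, retries=3, base_delay=0.5, fallback=None, notify=print):
    """Run `task`, retrying with exponential backoff. After the final failed
    attempt, notify the team and run `fallback` if one is provided."""
    for attempt in range(1, retries + 1):
        try:
            return task()
        except Exception as exc:  # broad on purpose: any task failure triggers a retry
            if attempt == retries:
                notify(f"Task failed after {retries} attempts: {exc}")
                if fallback is not None:
                    return fallback()  # switch to the backup option
                raise
            # Wait base_delay, then 2x, 4x, ... between attempts.
            time.sleep(base_delay * 2 ** (attempt - 1))
```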

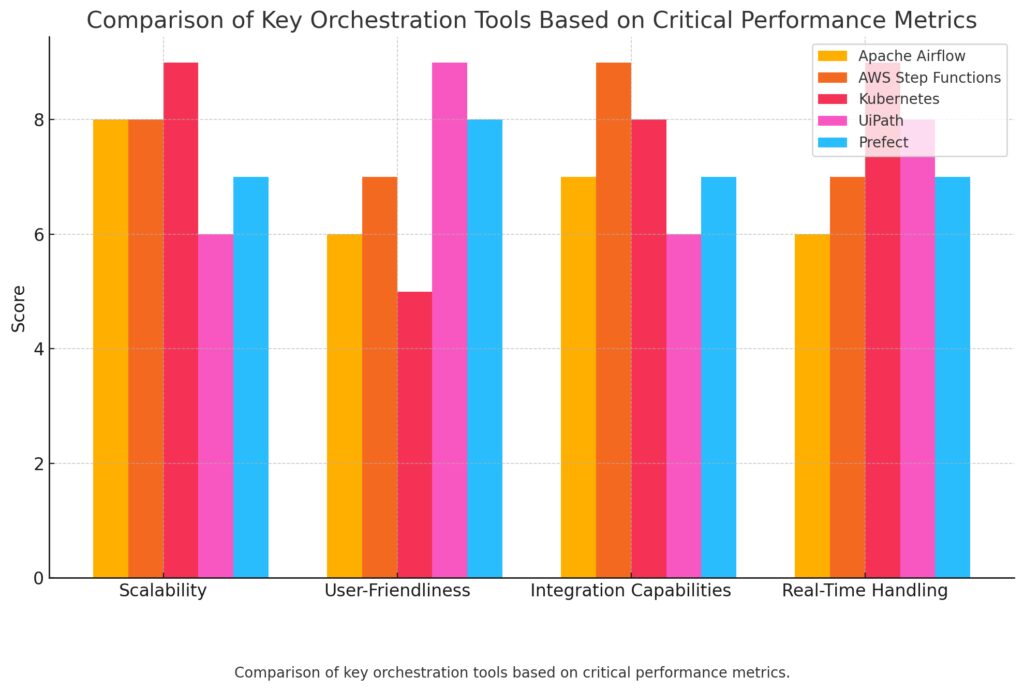

Popular Tools for AI Workflow Orchestration

Apache Airflow

Airflow is an open-source tool widely used for workflow scheduling and orchestration. Its DAG (Directed Acyclic Graph) structure makes it easy to visualize dependencies and manage workflows efficiently.
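To make the DAG idea concrete, here is a framework-free sketch using Python's standard `graphlib` module rather than Airflow's own API. It models a hypothetical nightly reporting pipeline as a dictionary mapping each task to its upstream tasks, checks that the graph really is acyclic, and groups tasks into "waves" whose members have no dependencies on each other and could therefore run in parallel.

```python
from graphlib import TopologicalSorter, CycleError

def is_acyclic(dag):
    """True if the task graph has no cycles (a requirement for a DAG)."""
    try:
        TopologicalSorter(dag).prepare()
        return True
    except CycleError:
        return False

def execution_waves(dag):
    """Group tasks so every task's upstream tasks sit in earlier waves."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished, unlocking downstream tasks
    return waves

# Hypothetical task names: task -> set of upstream tasks.
dag = {
    "pull_analytics": set(),
    "pull_crm": set(),
    "clean": {"pull_analytics", "pull_crm"},
    "report": {"clean"},
}
```

Here `execution_waves(dag)` returns `[["pull_analytics", "pull_crm"], ["clean"], ["report"]]`: the two pulls can run side by side, and cleaning waits for both.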

Kubernetes

Kubernetes is a container orchestration platform that is also widely used for AI workflows, especially resource-intensive tasks like model training. It offers automated scaling and deployment capabilities.

Prefect

Prefect specializes in managing dynamic workflows. It’s known for handling complex data workflows that require high adaptability.
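"Dynamic" here means the shape of the workflow is decided at run time: for example, one task per data partition, where the partitions are only known when the run starts. Prefect has its own task-mapping features for this; the sketch below shows the same fan-out and gather pattern using only the standard library, with hypothetical partition names and a stand-in processing step.

```python
from concurrent.futures import ThreadPoolExecutor

def discover_partitions(run_date):
    # Hypothetical: in practice this might list files or tables that exist
    # only at run time, so the number of downstream tasks is not known in advance.
    return [f"{run_date}/region={region}" for region in ("eu", "us", "apac")]

def process_partition(path):
    # Stand-in for real per-partition work.
    return path.upper()

def dynamic_flow(run_date, max_workers=4):
    partitions = discover_partitions(run_date)
    # Fan out one task per discovered partition, then gather results in order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(process_partition, partitions))
```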

Each of these tools brings unique benefits to pipeline orchestration, depending on your project needs.

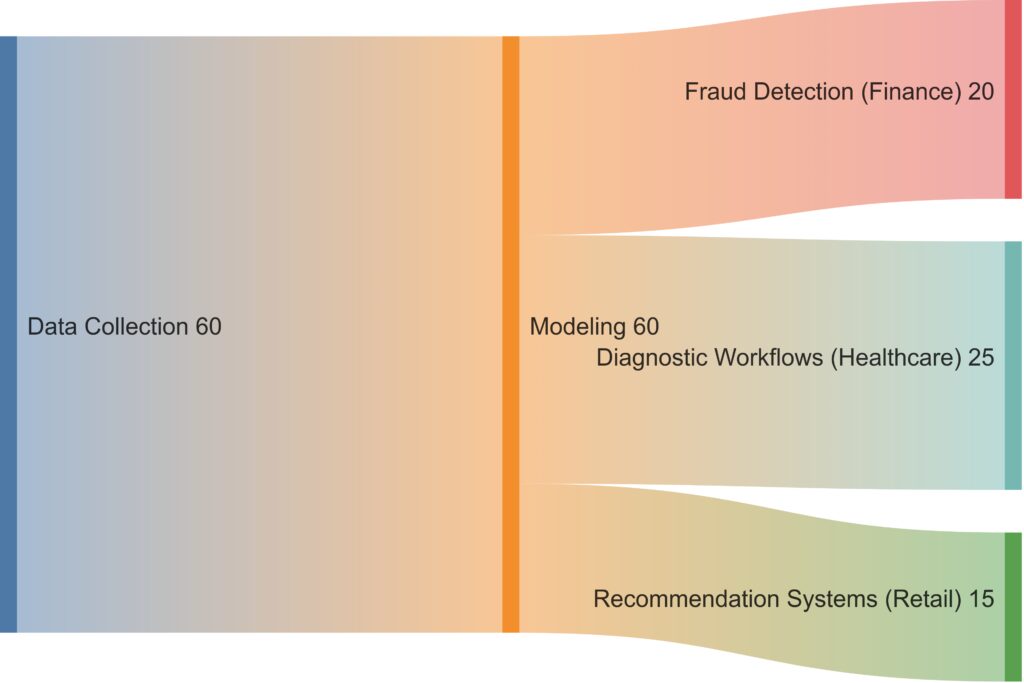

Use Cases of AI Workflow Orchestration

Automating Financial Fraud Detection

In finance, fraud detection systems rely on orchestrated workflows to analyze large volumes of transaction data in real time.

- Data Ingestion: Orchestration pipelines aggregate transactional data from multiple sources like banks, credit card networks, and user logs.

- Model Scoring: AI models trained on historical transactions detect anomalies, flagging potential fraud cases based on patterns.

- Alerts & Responses: The system triggers automated actions, such as notifying users or freezing accounts.

With orchestration, these stages run as one connected workflow, minimizing response times and reducing fraud risk.
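The ingestion, scoring, and response stages can be sketched as plain functions chained together. This is an illustration under simplifying assumptions: a per-customer z-score stands in for a trained fraud model, and the record fields (`id`, `customer`, `amount`), the threshold, and the alert text are hypothetical.

```python
from statistics import mean, pstdev

def ingest(*sources):
    # Merge transactions from several (hypothetical) sources into one list.
    return [txn for source in sources for txn in source]

def score(transactions, history, z_threshold=3.0):
    """Flag transactions whose amount is more than `z_threshold` standard
    deviations from the customer's historical mean."""
    flagged = []
    for txn in transactions:
        past = history.get(txn["customer"], [])
        if len(past) < 2:
            continue  # not enough history to score this customer
        mu, sigma = mean(past), pstdev(past)
        if sigma > 0 and abs(txn["amount"] - mu) / sigma > z_threshold:
            flagged.append(txn)
    return flagged

def respond(flagged):
    # Stand-in for notifying users or freezing accounts.
    return [f"alert: review transaction {txn['id']}" for txn in flagged]
```

An orchestrator would run these as separate tasks, so a slow source or a failed scoring run can be retried without re-running the whole chain.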

Streamlining Healthcare Diagnostics

Healthcare systems use AI to assist with diagnostics and treatment plans. Orchestration helps connect disparate stages:

- Image Processing: AI scans radiology images for anomalies.

- Data Integration: Patient histories and test results are combined for deeper analysis.

- Doctor Notifications: The system sends insights to physicians, enabling quicker diagnoses.

This ensures a holistic workflow, where each piece works in harmony to improve patient care.

Optimizing E-Commerce Operations

E-commerce giants like Amazon leverage AI workflow orchestration to streamline operations:

- Inventory Management: Predictive AI determines restocking needs.

- Personalized Recommendations: Orchestrated workflows analyze customer preferences to offer tailored product suggestions.

- Logistics Automation: End-to-end pipelines optimize shipping routes, improving delivery times and reducing costs.

These workflows are automated yet carefully orchestrated to avoid bottlenecks.

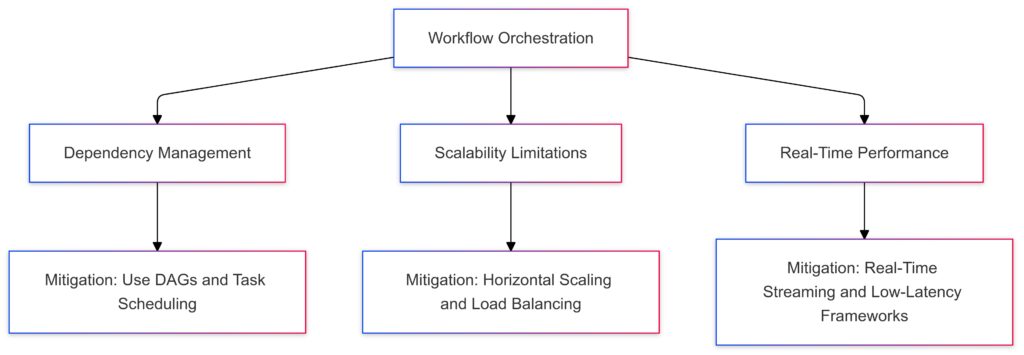

Challenges in Implementing Workflow Orchestration

Complex System Dependencies

AI pipelines often involve a web of interconnected systems. Managing dependencies between tools, APIs, and databases can become overwhelming without proper orchestration.

For example, a delay in data preprocessing might halt model training. Effective orchestration ensures these dependencies are handled gracefully.
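One common way to handle this kind of dependency is to have the downstream step wait for its input with a timeout, similar in spirit to Airflow's sensors. The sketch below is plain Python; the function names, the polling defaults, and the `preprocessed_ready` and `train` callbacks are hypothetical.

```python
import time

def wait_for(condition, timeout=10.0, poke_interval=1.0):
    """Poll `condition` until it returns True or `timeout` seconds pass.
    Returns True if the upstream dependency became available in time."""
    deadline = time.monotonic() + timeout
    while not condition():
        if time.monotonic() >= deadline:
            return False
        time.sleep(poke_interval)
    return True

def run_training_when_ready(preprocessed_ready, train, timeout=10.0, poke_interval=1.0):
    # Start training only once preprocessing output exists; otherwise fail
    # loudly instead of training on missing or partial data.
    if not wait_for(preprocessed_ready, timeout, poke_interval):
        raise TimeoutError("preprocessing output not available; training skipped")
    return train()
```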

Scalability Constraints

While orchestration tools can scale operations, not every system is ready for large-scale implementations.

Teams must ensure that their:

- Infrastructure supports growing data demands.

- Orchestration framework can handle more tasks, workers, and concurrent runs as pipelines grow.

Real-Time Performance Needs

In workflows requiring real-time actions, delays are unacceptable. For instance, in autonomous driving, split-second decisions are life-critical. Ensuring these workflows remain low-latency under varying conditions is a significant challenge.

Emerging Trends in Workflow Orchestration

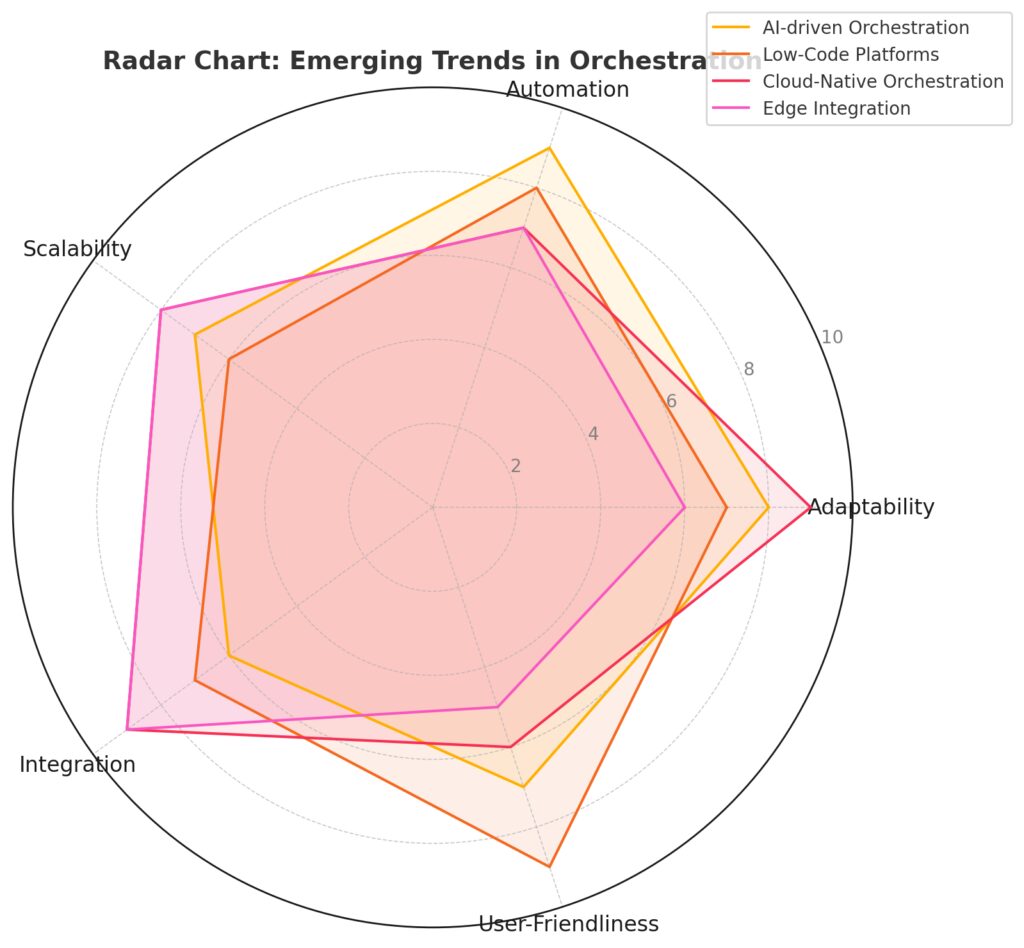

AI-Driven Orchestration

Next-generation orchestration tools leverage AI to:

- Predict bottlenecks in pipelines.

- Optimize resource allocation automatically.

- Suggest workflow adjustments based on historical performance.

This predictive, self-optimizing orchestration reduces manual intervention and improves efficiency.
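How individual tools apply AI here varies, and this sketch does not reproduce any of them. A common non-ML starting point for predicting bottlenecks is the critical path: using historical average task durations, find the longest chain of dependent tasks, since those tasks bound the pipeline's total runtime. The task names and durations are hypothetical.

```python
from graphlib import TopologicalSorter

def critical_path(dag, avg_duration):
    """Return (total_seconds, path) for the longest-duration chain in `dag`.
    `dag` maps task -> set of upstream tasks; `avg_duration` must cover every task."""
    finish, best_prev = {}, {}
    for task in TopologicalSorter(dag).static_order():
        upstream = dag.get(task, set())
        # A task can start only when its slowest upstream task has finished.
        prev = max(upstream, key=lambda t: finish[t], default=None)
        start = finish[prev] if prev is not None else 0.0
        finish[task] = start + avg_duration[task]
        best_prev[task] = prev
    # Walk back from the task that finishes last.
    end = max(finish, key=finish.get)
    path, node = [], end
    while node is not None:
        path.append(node)
        node = best_prev[node]
    return finish[end], path[::-1]

dag = {"extract": set(), "features": {"extract"}, "validate": {"extract"},
       "train": {"features", "validate"}}
durations = {"extract": 5, "features": 30, "validate": 10, "train": 60}
```

Here the critical path is extract, features, train (95 seconds); speeding up `validate` would not shorten the run, so `features` and `train` are the tasks to watch.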

How the four trends compare:

- AI-Driven Orchestration excels in automation and adaptability.

- Low-Code Platforms prioritize user-friendliness and integration.

- Cloud-Native Orchestration leads in scalability and integration.

- Edge Integration is strong in integration and scalability but lower in user-friendliness.

Low-Code Orchestration Platforms

Low-code platforms are making orchestration more accessible to non-technical teams. These tools use visual interfaces to design pipelines without extensive programming knowledge.

Examples include tools like Zapier for simple workflows or enterprise-grade solutions like UiPath for more complex systems.

Cloud-Native Orchestration

Cloud orchestration is increasingly the default choice for businesses. With managed services like AWS Step Functions and Azure Logic Apps, companies can build, monitor, and scale workflows entirely in the cloud.

These cloud-native solutions reduce setup times and improve scalability, aligning with modern business demands.

Best Practices for Successful AI Orchestration

Start Small and Scale

Begin by orchestrating a small, well-defined pipeline. This allows you to iron out potential issues before scaling the system.

For example, start with data preprocessing workflows before integrating full-scale AI model training.

Monitor and Iterate

Effective orchestration isn’t a “set it and forget it” task. Use monitoring dashboards to track pipeline performance, spot inefficiencies, and refine processes regularly.
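Dashboards are typically built from per-run records. As a sketch of the metrics behind one, the function below summarizes runs into a failure rate and a 95th-percentile duration per task. The record fields (`task`, `seconds`, `ok`) are hypothetical; most orchestrators store similar run metadata in their own formats.

```python
from collections import defaultdict
from statistics import quantiles

def task_metrics(runs):
    """Summarize run records into per-task run count, failure rate, and p95 duration."""
    by_task = defaultdict(list)
    for run in runs:
        by_task[run["task"]].append(run)
    summary = {}
    for task, records in by_task.items():
        durations = sorted(r["seconds"] for r in records)
        failures = sum(not r["ok"] for r in records)
        # "inclusive" keeps the estimate within the observed min/max.
        p95 = quantiles(durations, n=20, method="inclusive")[-1] if len(durations) >= 2 else durations[0]
        summary[task] = {
            "runs": len(records),
            "failure_rate": failures / len(records),
            "p95_seconds": p95,
        }
    return summary
```

A rising failure rate or p95 for one task is a concrete signal of where to iterate first.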

Invest in Team Training

Orchestration frameworks often require specialized knowledge. Investing in training your team ensures smooth adoption and long-term success.

Future Predictions for AI Workflow Orchestration

Fully Autonomous Pipelines

As AI advances, autonomous orchestration systems will emerge. These systems will:

- Adjust workflows dynamically based on real-time data.

- Automatically allocate resources for peak efficiency.

- Self-heal during failures without human intervention.

This could lead to zero-downtime AI pipelines, where systems are always learning, adapting, and improving.

Integration with Edge Computing

With the rise of edge computing, orchestration will extend beyond centralized systems.

- AI models will process data locally at the edge, such as on IoT devices or autonomous vehicles.

- Orchestration frameworks will handle synchronization between edge devices and central cloud systems.

This trend will unlock real-time processing for latency-critical applications, like healthcare monitoring and smart cities.

Growing Role of Explainability

AI orchestration tools will prioritize model transparency. Organizations will demand frameworks that:

- Log decisions made by AI models.

- Provide insights into pipeline actions.

Explainable orchestration will boost compliance, especially in industries like finance and healthcare where regulatory scrutiny is high.
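One simple way to log decisions made by AI models is to emit one structured record per decision, for example in JSON Lines format. The field names below are assumptions for illustration, not a standard schema.

```python
import json
from datetime import datetime, timezone

def decision_record(model_version, inputs, output, reason, now=None):
    """Build one JSON line describing a model decision, so auditors can later
    trace which model produced which output, from which inputs, and why."""
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "reason": reason,
    }
    return json.dumps(record, sort_keys=True)
```

In a pipeline, each returned line would be appended to a log file (for example, one opened with `open("decisions.jsonl", "a")`) or shipped to a log store.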

Fusion of Blockchain and AI Orchestration

Blockchain technology may complement orchestration by introducing secure, immutable records for workflows.

- This can enhance data integrity in multi-party collaborations.

- Smart contracts could trigger AI workflows automatically, ensuring trustworthy automation.
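The "immutable record" property comes largely from hash chaining, which can be illustrated without a blockchain. The sketch below is a tamper-evident log, not a blockchain: there is no distributed consensus, and the event contents are hypothetical. Each entry stores the hash of the previous one, so editing any earlier record breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(event, prev_hash):
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()

def append_entry(chain, event):
    """Append `event`, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    chain.append(entry)
    return entry

def verify(chain):
    """Recompute every hash; any edited entry breaks the chain from that point on."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```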

Actionable Steps for Adopting AI Workflow Orchestration

Assess Your Current Workflows

Start by mapping out your existing workflows. Identify areas where manual processes slow things down or where tools fail to integrate seamlessly.

Choose the Right Orchestration Tool

Select an orchestration framework tailored to your needs. For simple workflows, tools like Prefect work well. For large-scale operations, consider Kubernetes or Apache Airflow.

Leverage Cloud Infrastructure

Adopt cloud-native orchestration tools to enhance scalability. Providers like AWS and Google Cloud offer services that minimize setup complexities while enabling advanced functionality.

Prioritize Monitoring and Security

Invest in tools to monitor your pipelines. This ensures smooth operation and allows for quick interventions if failures occur. Security should also be a top concern, especially when handling sensitive data.

Commit to Continuous Optimization

Treat your pipelines as dynamic systems. Use feedback from performance metrics to continuously improve workflows, reduce latency, and optimize resource allocation.

With these strategies, businesses can unlock the full potential of AI workflow automation, creating systems that are not only efficient but future-ready.

FAQs

What is the difference between automation and orchestration?

Automation focuses on completing individual tasks without human intervention, like running a script to clean data.

Orchestration, however, manages how multiple automated tasks connect and work together. For example, in an AI pipeline:

- Automation: Cleans a dataset.

- Orchestration: Ensures the clean dataset is passed to the model training stage and, later, to deployment.

How do orchestration tools handle failures?

Orchestration tools have built-in mechanisms to detect and mitigate failures.

For instance:

- If a data extraction task fails in Apache Airflow, it can retry automatically or notify your team.

- Kubernetes can redirect workloads to healthy nodes if one becomes unresponsive.

This ensures minimal disruption to the pipeline.

Can orchestration tools work with legacy systems?

Yes, many orchestration frameworks support legacy systems through APIs or middleware. For example:

- Prefect tasks are ordinary Python functions, so they can reach older databases through existing Python database drivers or connectors.

- Kubernetes can run legacy applications inside containers, allowing them to participate in modern workflows.

This makes orchestration valuable for businesses transitioning to newer technologies.

Are orchestration frameworks suitable for small businesses?

Absolutely! Small businesses can benefit from lightweight orchestration tools like Zapier or Prefect, which offer user-friendly interfaces and manageable costs.

For example, a small e-commerce store could use Zapier to:

- Automatically sync order data with inventory systems.

- Trigger emails to customers when their orders ship.

Orchestration isn’t just for tech giants—it’s scalable to your needs.

How does orchestration support real-time applications?

In real-time applications, orchestration manages time-sensitive processes with precision.

For example:

- In autonomous vehicles, orchestration ensures that sensor data is quickly analyzed and decisions are made on the spot.

- In financial trading, it coordinates rapid data analysis and transaction execution within milliseconds.

These systems use event-driven orchestration to react immediately to triggers.
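The trigger-and-handler pattern behind event-driven orchestration can be sketched as a tiny in-process event bus. This is illustrative only: real-time systems typically use message brokers or managed event services and need latency guarantees this sketch does not provide. The event names and handlers are hypothetical.

```python
from collections import defaultdict

class EventBus:
    """Minimal in-process event-driven orchestrator: handlers subscribe to
    event types and run as soon as a matching event is published."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        """Run every handler for this event. A handler may return a follow-up
        (event_type, payload) pair, which is published immediately.
        Returns the event types processed, in order."""
        processed = [event_type]
        for handler in self._handlers[event_type]:
            follow_up = handler(payload)
            if follow_up is not None:
                processed.extend(self.publish(*follow_up))
        return processed
```

For example, a `price_tick` handler could emit a `signal` event, and a `signal` handler could place an order, with no scheduler polling in between.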

What skills are needed to implement orchestration frameworks?

Teams implementing orchestration need expertise in:

- Scripting and programming languages (e.g., Python for Apache Airflow).

- Cloud platforms (e.g., AWS, Azure).

- Workflow design and monitoring tools.

For beginners, low-code platforms like UiPath provide a gentler learning curve while still offering robust orchestration capabilities.

Which industries benefit the most from AI orchestration?

Orchestration transforms industries such as:

- Healthcare: Automating diagnostics and integrating patient data.

- Retail: Streamlining supply chains and personalizing marketing.

- Finance: Enhancing fraud detection and automating compliance checks.

For example, in healthcare, orchestration ensures that diagnostic AI tools communicate seamlessly with electronic medical records (EMRs).

How do I choose the right orchestration tool?

Consider factors like:

- The complexity of your workflows (simple vs. multi-stage).

- Your infrastructure—cloud, on-premises, or hybrid.

- Team expertise in tools like Airflow, Kubernetes, or Prefect.

For example:

- Use Kubernetes if you need scalable, resource-intensive workflows.

- Choose Prefect for dynamic workflows with user-friendly customization.

Is orchestration overkill for basic automation tasks?

If you’re automating isolated tasks, like sending a single report daily, orchestration may not be necessary. However, when you have:

- Multiple interconnected processes.

- Dependencies that require coordination.

Then orchestration becomes invaluable for ensuring smooth and efficient operations.

Comparison Guide: Popular AI Workflow Orchestration Tools

Here’s a side-by-side comparison of key orchestration frameworks to help you find the right fit for your needs.

Apache Airflow

Overview:

A widely used, open-source tool designed for scheduling and monitoring workflows. Its DAG-based (Directed Acyclic Graph) approach is ideal for visualizing dependencies.

Best For:

- Batch processing workflows (e.g., data pipelines running nightly).

- Teams with Python expertise.

Key Features:

- Workflow visualization via DAGs.

- Extensive library of prebuilt operators for tasks like SQL queries and API calls.

- Integration with cloud services like AWS and Google Cloud.

Use Case Example:

A marketing agency automates nightly data collection from multiple platforms (Google Analytics, CRM) and cleans the data for next-day reporting.

Limitations:

- Real-time applications: Airflow isn’t ideal for low-latency, event-driven workflows.

- Steep learning curve for non-developers.

Kubernetes

Overview:

A container orchestration platform ideal for managing resource-intensive workflows. Kubernetes automates deployment, scaling, and management of containerized applications.

Best For:

- AI model training at scale.

- Organizations using containerized systems like Docker.

Key Features:

- Automated scaling of compute resources.

- Fault-tolerant workload management (reschedules tasks if nodes fail).

- Supports hybrid cloud environments.

Use Case Example:

A biotech company uses Kubernetes to train deep learning models for genome sequencing. Tasks scale across hundreds of GPUs automatically based on data size.

Limitations:

- Complex setup; requires expertise in DevOps.

- Overkill for simpler workflows.

Prefect

Overview:

A modern orchestration tool focused on flexibility and developer-friendliness. Prefect offers a “code-first” approach, letting you define workflows in Python.

Best For:

- Dynamic workflows with rapidly changing requirements.

- Teams preferring Python-native frameworks.

Key Features:

- Handles failures gracefully with built-in retries and alerts.

- Hybrid mode allows sensitive data to remain on-premises.

- Visual dashboards for monitoring workflow execution.

Use Case Example:

An e-commerce platform uses Prefect to:

- Aggregate customer data daily.

- Trigger recommendation model updates.

- Deploy personalized offers to users.

Limitations:

- Not as robust for large-scale container orchestration compared to Kubernetes.

AWS Step Functions

Overview:

A cloud-native orchestration service provided by AWS. It integrates seamlessly with the entire AWS ecosystem, from Lambda functions to S3 storage.

Best For:

- Teams heavily invested in AWS infrastructure.

- Serverless workflows.

Key Features:

- Visual workflow builder with drag-and-drop interface.

- Event-driven triggers for real-time applications.

- Managed service—no need to handle servers.

Use Case Example:

A financial services company uses Step Functions to process loan applications:

- Extract customer data from an S3 bucket.

- Trigger ML models to assess creditworthiness.

- Notify the customer of results via SMS.
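Step Functions workflows are defined in the Amazon States Language, a JSON format. The sketch below builds a definition for the loan workflow above as a Python dict. The ARNs, region, account ID, function names, and retry settings are placeholders, and the SMS step is assumed to be handled by a Lambda function. A small check confirms every `Next` transition points to a defined state.

```python
import json

# Placeholder ARN template: region, account ID, and function names are hypothetical.
LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:{}"

definition = {
    "Comment": "Loan application workflow (illustrative sketch)",
    "StartAt": "ExtractCustomerData",
    "States": {
        "ExtractCustomerData": {
            "Type": "Task",
            "Resource": LAMBDA_ARN.format("extract-customer-data"),
            "Next": "AssessCreditworthiness",
        },
        "AssessCreditworthiness": {
            "Type": "Task",
            "Resource": LAMBDA_ARN.format("score-credit"),
            # Retry any error up to 3 times with exponential backoff (2s, 4s, 8s).
            "Retry": [{"ErrorEquals": ["States.ALL"], "IntervalSeconds": 2,
                       "MaxAttempts": 3, "BackoffRate": 2.0}],
            "Next": "NotifyCustomer",
        },
        "NotifyCustomer": {
            "Type": "Task",
            "Resource": LAMBDA_ARN.format("send-sms"),
            "End": True,
        },
    },
}

def dangling_transitions(defn):
    """Return any 'Next' targets that do not name a defined state."""
    states = defn["States"]
    return [s["Next"] for s in states.values() if "Next" in s and s["Next"] not in states]

asl_json = json.dumps(definition, indent=2)  # the JSON document a state machine is created from
```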

Limitations:

- Limited portability outside AWS.

- Costs can add up for high-volume workflows.

UiPath

Overview:

A leader in robotic process automation (RPA), UiPath is great for automating repetitive tasks and connecting systems without APIs.

Best For:

- Non-technical users who need low-code solutions.

- Back-office automation tasks like invoice processing.

Key Features:

- Drag-and-drop interface for workflow design.

- AI-driven automation suggestions.

- Prebuilt templates for common business processes.

Use Case Example:

A manufacturing company uses UiPath to automate:

- Extracting invoice details from PDFs.

- Logging data into accounting software.

- Sending payment reminders to vendors.

Limitations:

- Not ideal for complex AI model training or container orchestration.

Quick Comparison Table

| Tool | Best For | Key Strengths | Limitations |
|---|---|---|---|
| Apache Airflow | Batch processing, data pipelines | Extensive integrations, DAGs for visualization | Not suitable for real-time tasks |
| Kubernetes | Scalable AI model training | Resource management, fault tolerance | Complex for non-DevOps teams |
| Prefect | Flexible workflows | Python-native, hybrid data handling | Limited for container orchestration |
| AWS Step Functions | AWS-centric, serverless workflows | Cloud-native, event-driven triggers | AWS lock-in, cost scaling |
| UiPath | RPA, repetitive tasks | Low-code, business-friendly | Not for large-scale AI workflows |

Resources

Open-Source Projects

- Airflow GitHub Repository: Access the codebase, community contributions, and sample DAGs to get started with Airflow.

- Kubernetes GitHub Repository: Dive into the Kubernetes source code, tutorials, and deployment scripts to learn the platform inside out.

- Prefect GitHub Repository: Includes templates, example workflows, and advanced integrations.

Tools and Platforms

- Orchestration Frameworks

  - Apache Airflow: Best for scheduling and batch processing.

  - Prefect: Great for Python-friendly dynamic workflows.

  - Dagster: A modern orchestration tool with strong support for data pipelines.

- Cloud Platforms

  - AWS Step Functions: Ideal for serverless orchestration.

  - Google Cloud Workflows: Great for end-to-end workflows in Google's ecosystem.

  - Azure Logic Apps: Low-code orchestration for Microsoft Azure users.

Communities and Forums

- Airflow Slack Community: Join to ask questions, share workflows, and learn from experienced developers.

- Kubernetes Community Slack: A hub for Kubernetes users to discuss tips, troubleshoot issues, and explore new features.

- Reddit (r/devops and r/machinelearning): Active subreddits where professionals discuss orchestration tools, share tutorials, and provide feedback.

Conferences and Webinars

- KubeCon + CloudNativeCon: A premier conference focused on Kubernetes and cloud-native technologies, covering the latest in container orchestration.

- Data + AI Summit by Databricks: A global conference covering orchestration, data pipelines, and machine learning integration.

- Prefect Webinars: Regularly hosted by Prefect, these webinars cover new features, integrations, and best practices.