Introduction to Audit Trails in AI

The Role of Audit Trails in Modern AI Systems

Audit trails serve as a critical mechanism for tracking and documenting actions taken by systems, enabling oversight and accountability. In AI, they help ensure that decision-making processes are transparent and adhere to ethical standards.

From financial fraud detection to medical diagnostics, AI’s influence is growing. But how do we verify these systems are acting fairly and without bias? This is where audit trails become indispensable, offering a path to review past actions and ensure compliance.

What Defines a Black-Box AI?

The term black-box AI refers to systems whose decision-making processes are hidden or incomprehensible to humans. These are often deep learning models, which, despite their accuracy, lack transparency. Even experts sometimes struggle to interpret how specific decisions are made, complicating accountability efforts.

The “black-box” nature creates a paradox: we value AI for its efficiency and scalability but are concerned about its opacity and ethical risks.

Importance of Transparency and Accountability

Without transparency, black-box AI systems can erode trust, especially in high-stakes fields like healthcare or law enforcement. Audit trails ensure:

- Ethical adherence by making processes traceable.

- Error correction by pinpointing flawed decision points.

- Public trust by showing the system’s workings align with fairness and responsibility.

Challenges in Creating Audit Trails for Black-Box AI

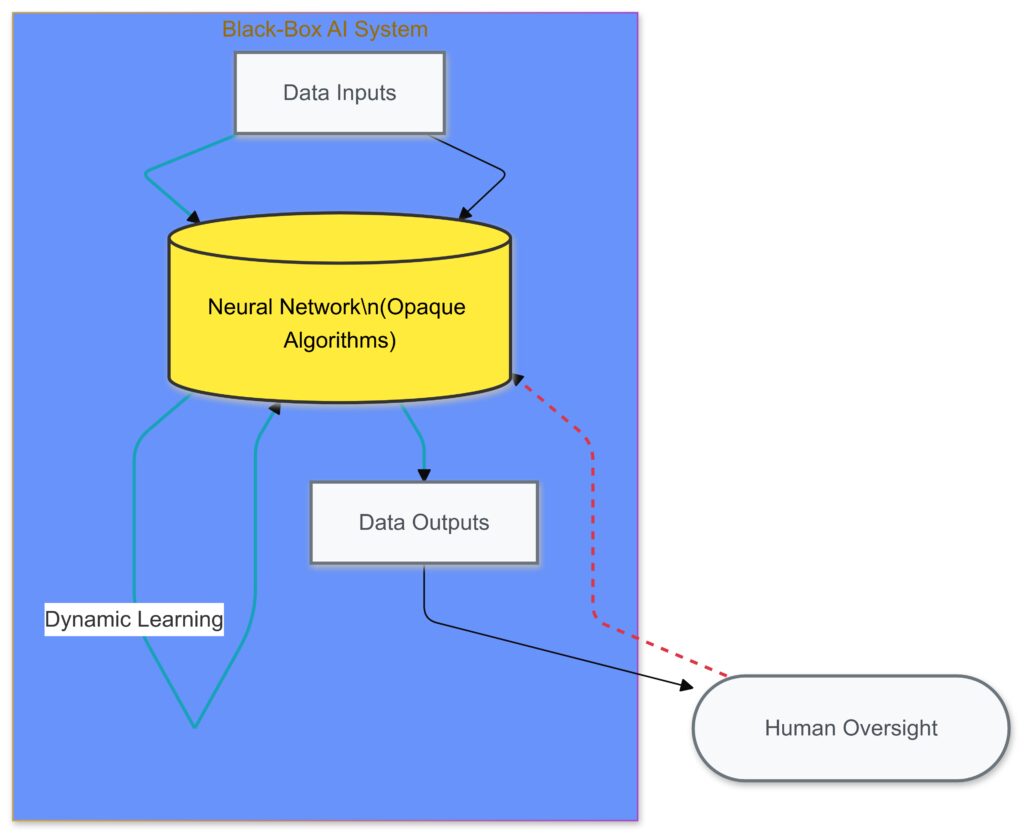

Visualizing the opacity of black-box AI systems and the challenges of creating transparent audit trails.

Opaque Algorithms and Their Complexities

Black-box systems, particularly neural networks, involve millions of parameters working in tandem. Their inner workings are often so intricate that even developers cannot explain how specific outputs arise. This makes traditional auditing techniques, like manual reviews, virtually impossible.

Moreover, their complexity hinders stakeholders from identifying bias or misuse, increasing risks in sensitive applications like criminal sentencing or credit scoring.

Dynamic Learning Systems and Evolving Behavior

Many AI systems are retrained or updated as new data arrives. This dynamic nature complicates the process of maintaining accurate audit trails: after an update, earlier logs may no longer reflect how the system currently behaves, creating gaps in accountability.

Imagine auditing a system today, only to realize tomorrow’s decisions stemmed from untraceable updates. This evolving nature demands real-time tracking mechanisms, which are still in their infancy.
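One way to make real-time tracking concrete is to watch whether live inputs still resemble the data the model was trained or last audited on. The sketch below computes the Population Stability Index (PSI), a drift metric often used in credit-risk monitoring, between two histograms of the same feature. The bin counts, the feature name, and the 0.25 alert level are illustrative assumptions, not values taken from any regulation or standard.

```python
import math
from typing import Sequence

def population_stability_index(baseline_counts: Sequence[int],
                               live_counts: Sequence[int],
                               eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live% - baseline%) * ln(live% / baseline%)."""
    if len(baseline_counts) != len(live_counts):
        raise ValueError("both histograms must use the same bins")
    baseline_total, live_total = sum(baseline_counts), sum(live_counts)
    psi = 0.0
    for base, live in zip(baseline_counts, live_counts):
        # Convert counts to proportions; clamp at eps so an empty bin never hits log(0).
        b_pct = max(base / baseline_total, eps)
        l_pct = max(live / live_total, eps)
        psi += (l_pct - b_pct) * math.log(l_pct / b_pct)
    return psi

# Hypothetical alert level; 0.1 and 0.25 are common rules of thumb, not standards.
DRIFT_THRESHOLD = 0.25

def drift_record(feature: str, baseline_counts, live_counts) -> dict:
    """Build an audit-log entry describing drift for one input feature."""
    score = population_stability_index(baseline_counts, live_counts)
    return {"feature": feature, "psi": round(score, 4), "drift_alert": score > DRIFT_THRESHOLD}

# Income histogram at the last audit vs. in this week's traffic (made-up counts).
print(drift_record("income", [250, 250, 250, 250], [100, 200, 300, 400]))
print(drift_record("income", [500, 500], [100, 900]))
```

The first shift scores about 0.23 and stays under the alert level; the second scores well above it, so the resulting record would tell auditors that decisions after that point were made on inputs unlike those the model was validated on.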

Data Privacy and Security Concerns

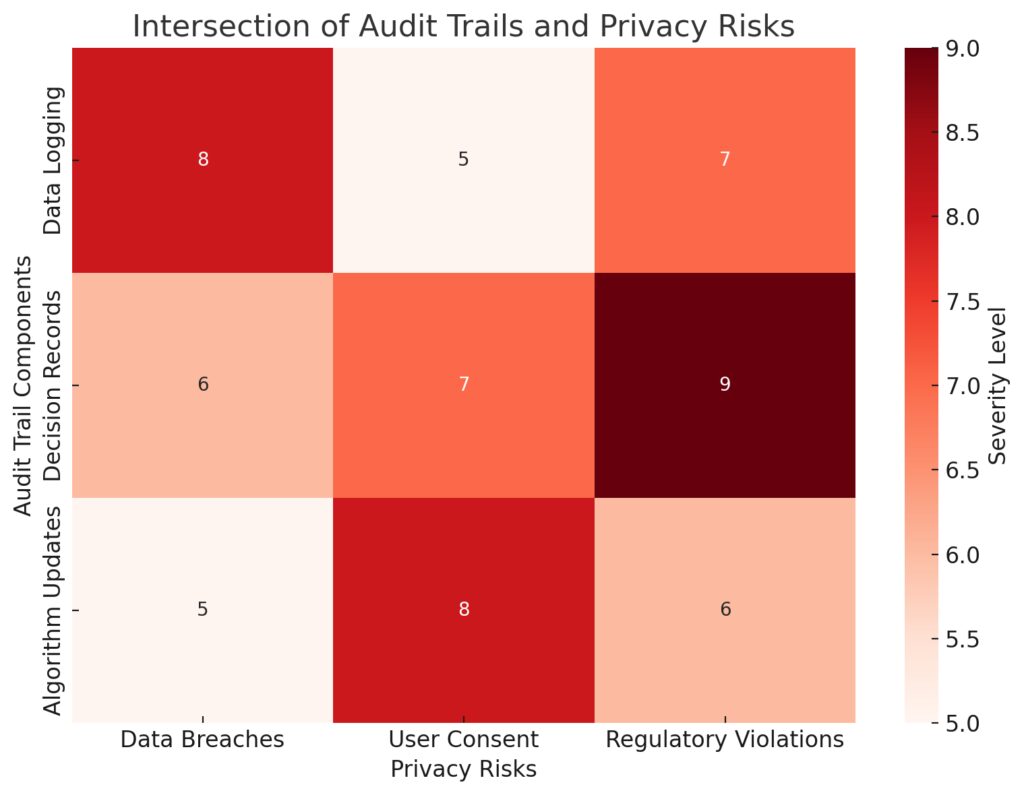

Highlighting the intersection of audit trails and privacy risks. The rows represent audit trail components like “Data Logging,” “Decision Records,” and “Algorithm Updates,” while the columns represent risks such as “Data Breaches,” “User Consent,” and “Regulatory Violations.” The gradient color scheme indicates the severity of each intersection, with darker shades representing higher severity.

Audit trails often require recording large volumes of data, including user interactions, system decisions, and input-output mappings. This raises significant privacy concerns, especially in regions with stringent data protection laws like the GDPR in Europe.

Balancing detailed logs with privacy-preserving mechanisms is a monumental challenge. A poorly designed audit trail could even expose sensitive information during an investigation, defeating its purpose.
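A minimal sketch of one privacy-preserving pattern for audit logs: drop fields the audit does not need, and replace direct identifiers with a keyed hash (HMAC) so the same customer can still be linked across entries without the log revealing who they are. The field lists and the hard-coded key are placeholders; in practice the key would live in a secrets manager, separate from the logs. Pseudonymized data generally still counts as personal data under the GDPR, so this reduces exposure rather than removing legal obligations.

```python
import hashlib
import hmac
import json

# Placeholder secret; a real key would come from a key vault, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-from-a-key-vault"

# Hypothetical field policy; a real list would come from a data-protection review.
DROP_FIELDS = {"name", "email", "address"}
PSEUDONYMIZE_FIELDS = {"customer_id"}

def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed SHA-256 hash: stable for the same input, not reversible without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def minimize_for_audit(record: dict) -> dict:
    """Return a copy of `record` that is safer to write to an audit log."""
    safe = {}
    for name, value in record.items():
        if name in DROP_FIELDS:
            continue  # data minimization: the audit does not need this field
        safe[name] = pseudonymize(str(value)) if name in PSEUDONYMIZE_FIELDS else value
    return safe

decision = {
    "customer_id": "C-10492",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "income_band": "40-60k",
    "decision": "declined",
    "model_version": "credit-risk-2.3.1",
}
print(json.dumps(minimize_for_audit(decision), indent=2))
```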

Solutions to Improve Auditability in AI

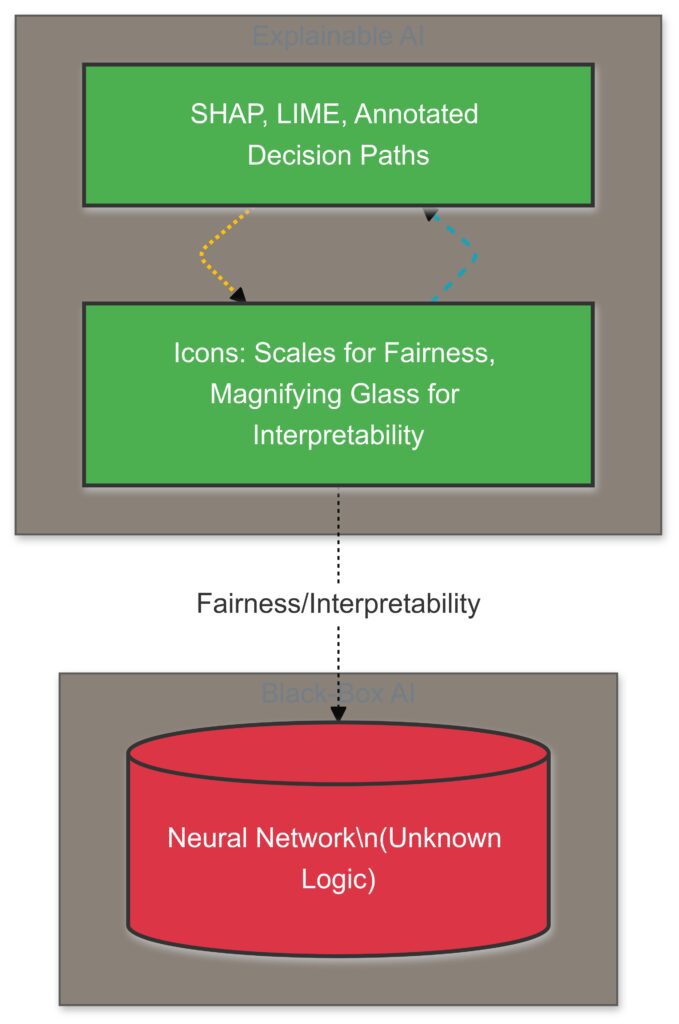

Contrasting traditional black-box AI with Explainable AI, highlighting tools and governance mechanisms.

Explainable AI (XAI) as a Framework

One of the most effective ways to address the black-box problem is through Explainable AI (XAI). This approach focuses on creating models that are inherently interpretable or supplemented with tools that clarify decision-making.

Key strategies include:

- Interpretable models: Using algorithms like decision trees instead of deep learning when possible.

- Post-hoc explainability tools: Implementing techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) to explain black-box outputs.

XAI not only aids in understanding AI decisions but also improves stakeholder trust and compliance.
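SHAP builds on Shapley values from cooperative game theory: each feature's attribution is its average marginal contribution across all coalitions of the other features. The sketch below computes exact Shapley values by brute force for a toy model, filling in features that are "absent" from a coalition with a baseline value. This is not the SHAP library's API (the library relies on faster estimators such as KernelSHAP and TreeSHAP), and the single-baseline convention, the toy model, and its weights are assumptions for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet

def shapley_values(predict: Callable[[Dict[str, float]], float],
                   instance: Dict[str, float],
                   baseline: Dict[str, float]) -> Dict[str, float]:
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features outside a coalition take their baseline value. Every coalition is
    enumerated, so cost grows as 2**n: fine for a handful of features only.
    """
    features = list(instance)
    n = len(features)

    def value(coalition: FrozenSet[str]) -> float:
        x = {f: instance[f] if f in coalition else baseline[f] for f in features}
        return predict(x)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

def toy_credit_model(x: Dict[str, float]) -> float:
    # Hypothetical "black box": linear terms plus a credit-score x income interaction.
    return (0.5 * x["credit_score"] + 0.3 * x["income"] - 0.4 * x["debt"]
            + 0.2 * x["credit_score"] * x["income"])

applicant = {"credit_score": 1.0, "income": 1.0, "debt": 1.0}  # standardized values
baseline = {"credit_score": 0.0, "income": 0.0, "debt": 0.0}    # e.g. a population average
phi = shapley_values(toy_credit_model, applicant, baseline)
print({f: round(v, 3) for f, v in phi.items()})
# {'credit_score': 0.6, 'income': 0.4, 'debt': -0.4}
```

The attributions add up to the gap between the applicant's score and the baseline score (0.6), and the interaction term is split evenly between credit score and income, which is the kind of per-decision breakdown an audit trail can store alongside each output.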

Advanced Logging and Monitoring Tools

Traditional logs are inadequate for tracking the intricate operations of modern AI systems. Instead, advanced logging tools that integrate AI-specific data can provide deeper insights.

These tools should:

- Capture input-output relationships for every decision.

- Include metadata on model updates, ensuring traceability for algorithm changes.

- Use real-time monitoring for systems with dynamic learning capabilities.

For example, platforms like TensorFlow Extended (TFX) help organizations implement end-to-end traceability in their AI pipelines.
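The checklist above can be sketched as a structured record written once per decision. This is a minimal illustration using only the Python standard library, not TFX's API (TFX tracks pipeline lineage through its own ML Metadata store). The field names, such as the hypothetical `training_data_ref`, and the JSON Lines file layout are assumptions chosen for readability.

```python
import hashlib
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class DecisionRecord:
    """One audit-trail entry per model decision. Field names are illustrative."""
    model_name: str
    model_version: str        # ties the decision to the exact model that produced it
    training_data_ref: str    # hypothetical ID or hash of the training-data snapshot
    inputs: dict
    output: str
    score: float
    record_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def input_digest(self) -> str:
        """Stable SHA-256 fingerprint of the inputs (key order does not matter)."""
        canonical = json.dumps(self.inputs, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def to_json_line(self) -> str:
        """Serialize the record as a single JSON line."""
        data = asdict(self)
        data["input_sha256"] = self.input_digest()
        return json.dumps(data, sort_keys=True)

def log_decision(path: str, record: DecisionRecord) -> None:
    """Open in append mode so this function never rewrites earlier entries."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(record.to_json_line() + "\n")

record = DecisionRecord(
    model_name="loan-approval",
    model_version="2.3.1",
    training_data_ref="snapshot-2024-05",
    inputs={"income": 52000, "credit_score": 710},
    output="approved",
    score=0.83,
)
print(record.to_json_line())
```

Recording the model version and training-data reference on every decision is what lets an auditor tell, after a retraining, which model produced which outcome.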

Regulatory Frameworks for AI Accountability

Government and industry standards can drive the adoption of better audit mechanisms. Frameworks like the EU Artificial Intelligence Act and initiatives by NIST (the U.S. National Institute of Standards and Technology) emphasize transparency, risk assessment, and auditability.

By mandating or recommending audit trails for high-risk AI systems, these frameworks encourage organizations to:

- Adopt robust documentation practices.

- Perform third-party audits to ensure unbiased oversight.

- Align their systems with ethical AI principles.

Collaborating with regulators early in the design process can minimize compliance risks while building trust with users.

Case Studies: Success Stories and Lessons Learned

Finance: Tracking Decisions in Automated Loan Approvals

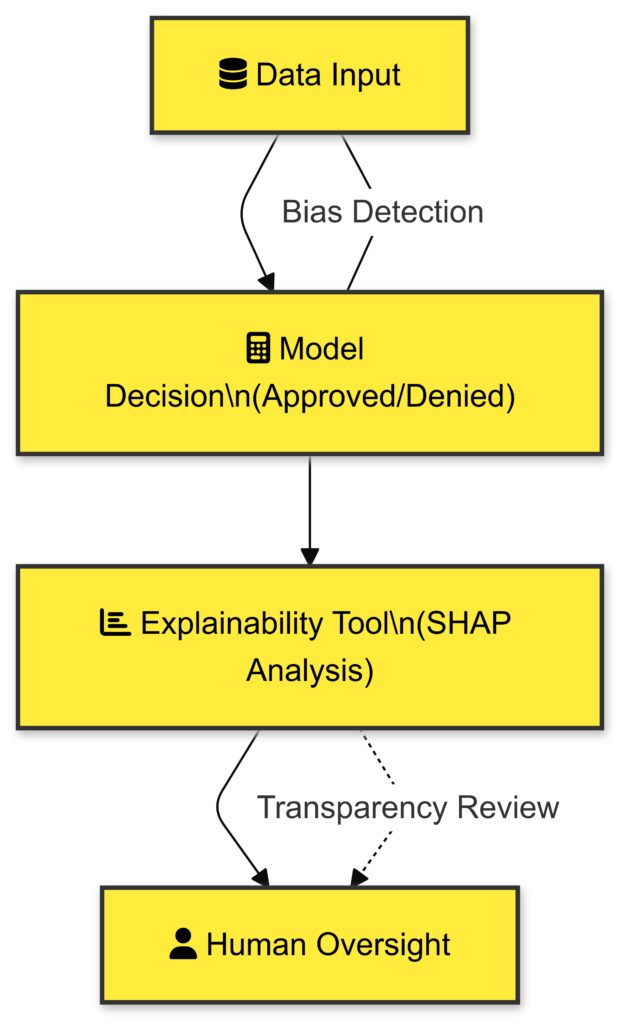

Steps in the audit trail for a loan approval AI system, ensuring fairness and compliance.

Financial institutions often rely on AI for credit scoring and loan approvals. These systems must comply with strict regulatory standards like the Fair Credit Reporting Act (FCRA) in the U.S.

One example is JPMorgan Chase’s use of XAI tools to:

- Explain why certain applications were approved or denied.

- Detect and mitigate potential biases in lending decisions.

This transparency helped the bank enhance its compliance and avoid legal challenges.

Healthcare: Ensuring Transparency in Diagnostic AI Tools

In healthcare, AI tools like IBM Watson for Oncology have faced criticism for their opaque decision-making. However, emerging solutions include:

- Integrating explainability tools into diagnostic workflows.

- Logging every recommendation and its underlying rationale, enabling doctors to challenge or validate AI outputs.

Such measures improve doctor-patient trust and ensure that AI augments, rather than replaces, human expertise.

Government Use Cases: Balancing Security and Ethics

Governments increasingly use AI for surveillance, predictive policing, and welfare distribution. However, these applications are fraught with ethical concerns.

One notable success is the UK government’s use of ethical AI frameworks to audit their welfare fraud detection systems. By ensuring transparency, they reduced public backlash and gained confidence in the system’s fairness.

However, lessons from other regions highlight pitfalls, such as the use of biased training data, which led to unintended discrimination.

The Future of Audit Trails in AI

Emerging Trends in AI Explainability

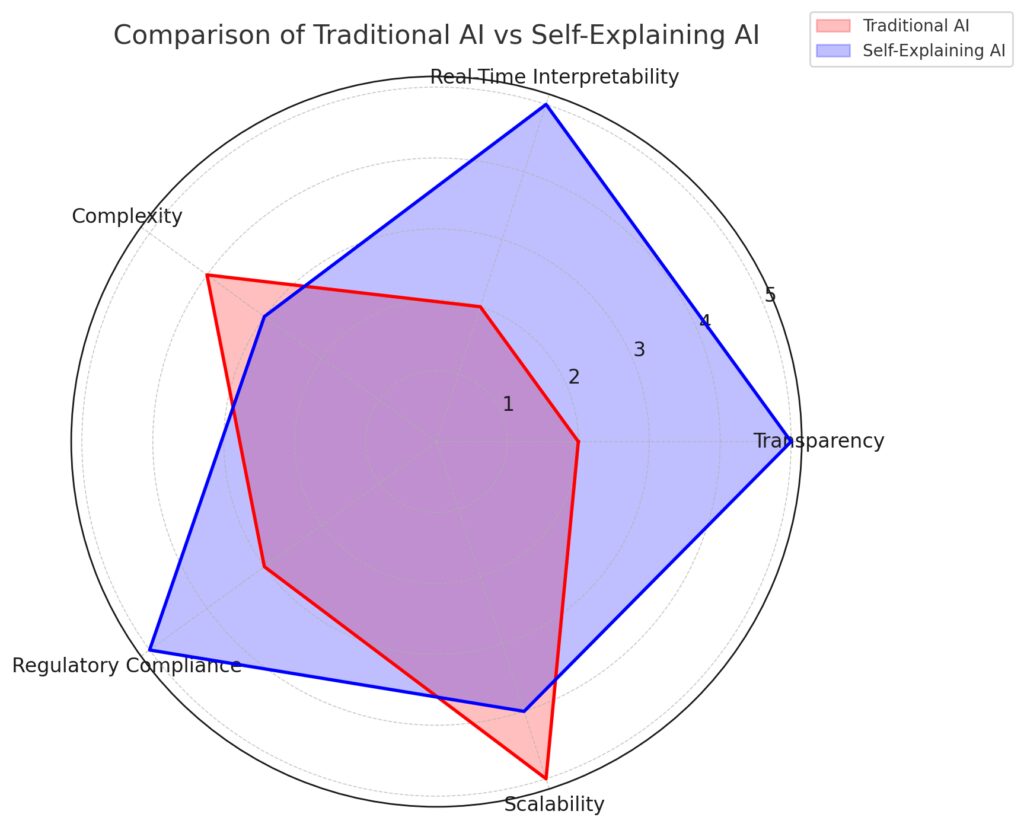

Comparing traditional AI and self-explaining AI across key attributes.

Hybrid Models Combining Accuracy and Interpretability

AI researchers are increasingly working on hybrid models that strike a balance between the accuracy of black-box systems and the interpretability of simpler algorithms.

- Neuro-symbolic AI combines neural networks with rule-based systems to enhance transparency.

- Layer-wise relevance propagation (LRP) traces a neural network's output back through its layers to pinpoint the input features that most influenced a decision.

These approaches aim to embed explainability into AI design, reducing reliance on external tools for interpretation.

Decentralized and Blockchain-Based Audit Trails

Blockchain technology is being explored as a means of creating tamper-evident audit trails. By storing logs in a decentralized ledger, organizations can ensure that any alteration of past records is detectable, so decision histories remain intact and traceable.

This method is particularly appealing for sectors requiring high levels of integrity, such as elections or supply chain management. It offers:

- Immutable records for decision-making histories.

- Increased trust through distributed verification.

However, scaling this approach to large AI systems remains a challenge, since writing every decision to a distributed ledger is costly in storage and throughput.
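The tamper evidence that blockchain-based logs offer rests on hash chaining: each entry stores the hash of the entry before it, so changing any past record invalidates every link after it. The sketch below shows only that mechanism on a single machine. It has no distributed ledger or consensus, which is what would stop a single operator from quietly rebuilding the whole chain, and the payload fields are hypothetical.

```python
import hashlib
import json
from typing import List, Optional

GENESIS = "0" * 64  # stand-in "previous hash" for the first entry

def entry_hash(prev_hash: str, payload: dict) -> str:
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

class HashChainedLog:
    """Append-only log in which every entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, payload: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        h = entry_hash(prev, payload)
        self.entries.append({"prev": prev, "payload": dict(payload), "hash": h})
        return h  # the new head of the chain

    def verify(self, expected_head: Optional[str] = None) -> bool:
        """Recompute every link. Editing, reordering, or deleting an earlier entry breaks
        the chain; truncating the tail is only caught via a separately stored head hash."""
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev or entry_hash(prev, e["payload"]) != e["hash"]:
                return False
            prev = e["hash"]
        return expected_head is None or prev == expected_head

log = HashChainedLog()
log.append({"decision_id": 1, "action": "route_optimized", "model_version": "1.4"})
head = log.append({"decision_id": 2, "action": "supplier_selected", "model_version": "1.4"})
print(log.verify(expected_head=head))  # True

log.entries[0]["payload"]["action"] = "route_rejected"  # simulate tampering
print(log.verify(expected_head=head))  # False
```

Publishing the head hash somewhere the log's operator cannot edit plays the role that distributed copies play in a blockchain: it makes silent truncation or wholesale rewriting detectable.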

AI Systems That Self-Explain

Another promising innovation is the development of self-explaining AI systems. These systems are designed to provide insights into their decision-making processes in real time, without external tools.

For example:

- AI models could generate textual justifications for their outputs, similar to how a doctor explains a diagnosis.

- They might also include confidence scores, giving users a sense of certainty behind recommendations.

These advancements aim to enhance user understanding while maintaining the system’s performance.
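The two ideas above, textual justifications and confidence scores, can be sketched with a transparent logistic-regression-style loan model. The weights, feature names, and intercept are made up and assume standardized (z-score) inputs. The justification is built from the features that pushed hardest toward the decision actually made, and the confidence is the model's own probability, which only reflects real certainty if the model is calibrated. This illustrates the idea; it is not how any particular product generates explanations.

```python
import math
from typing import Dict, Tuple

# Hypothetical transparent loan model: weights on standardized (z-score) features.
WEIGHTS = {"credit_score": 1.2, "income_stability": 0.8, "debt_to_income": -1.5}
INTERCEPT = -0.2
LABELS = {"credit_score": "credit score",
          "income_stability": "income stability",
          "debt_to_income": "debt-to-income ratio"}

def decide_and_explain(features: Dict[str, float], top_k: int = 2) -> Tuple[str, float, str]:
    """Return (decision, confidence, justification) for one applicant."""
    contributions = {f: w * features[f] for f, w in WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    p_approve = 1.0 / (1.0 + math.exp(-logit))  # logistic link: score -> probability
    decision = "approved" if p_approve >= 0.5 else "declined"
    confidence = p_approve if decision == "approved" else 1.0 - p_approve

    # Keep only features that pushed toward the decision actually made, strongest first.
    direction = 1 if decision == "approved" else -1
    ranked = sorted(contributions, key=lambda f: direction * contributions[f], reverse=True)
    drivers = [f for f in ranked if direction * contributions[f] > 0][:top_k]
    if not drivers:
        return decision, confidence, f"The loan was {decision}, driven by the model's intercept rather than any input."
    reasons = " and ".join(
        f"{'high' if features[f] > 0 else 'low'} {LABELS[f]}" for f in drivers)
    return decision, confidence, f"The loan was {decision} mainly due to {reasons}."

decision, confidence, why = decide_and_explain(
    {"credit_score": 1.1, "income_stability": 0.9, "debt_to_income": -0.3})
print(f"{decision} ({confidence:.0%} confidence): {why}")
```

Because the explanation is computed from the same contributions that produced the score, it cannot drift out of sync with the decision, which is the property that distinguishes self-explaining designs from explanations bolted on afterwards.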

AI Governance and Compliance Innovations

Standardizing Audit Frameworks

Currently, no universal standards exist for auditing AI. However, organizations like ISO and IEEE are working on global benchmarks.

- These standards will include audit protocols tailored to different AI applications.

- They’ll also establish guidelines for cross-border compliance, addressing the challenges of AI’s global reach.

Adopting such standards can foster interoperability and trust between systems and stakeholders.

Human-in-the-Loop Auditing

AI governance frameworks increasingly emphasize the role of human oversight in maintaining accountability.

- Auditors and domain experts can evaluate high-risk decisions flagged by AI systems.

- This approach ensures a blend of human intuition and machine precision, minimizing errors or ethical lapses.

Governance models incorporating human-in-the-loop processes are already being used in sectors like finance and healthcare.
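The flag-and-review pattern can be sketched as a small router: decisions marked high-risk or falling below a confidence threshold go to a human queue, and both the routing choice and the reviewer's final call are logged. The 0.85 threshold, the field names, and the in-memory queue are illustrative assumptions; a production system would persist these records.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Decision:
    case_id: str
    outcome: str
    confidence: float
    high_risk: bool  # hypothetical flag, e.g. set by a business rule such as a loan-size limit

@dataclass
class ReviewRouter:
    min_confidence: float = 0.85  # illustrative threshold; tune per application
    review_queue: List[Decision] = field(default_factory=list)
    audit_log: List[dict] = field(default_factory=list)

    def route(self, d: Decision) -> str:
        """Send high-risk or low-confidence decisions to a human; log the choice either way."""
        needs_human = d.high_risk or d.confidence < self.min_confidence
        status = "pending_human_review" if needs_human else "auto_finalized"
        if needs_human:
            self.review_queue.append(d)
        self.audit_log.append({"case_id": d.case_id, "model_outcome": d.outcome,
                               "confidence": d.confidence, "high_risk": d.high_risk,
                               "status": status})
        return status

    def record_review(self, case_id: str, reviewer: str, final_outcome: str) -> None:
        """Log the human's final call, including any override of the model."""
        self.audit_log.append({"case_id": case_id, "reviewer": reviewer,
                               "final_outcome": final_outcome, "status": "human_reviewed"})
        self.review_queue = [d for d in self.review_queue if d.case_id != case_id]

router = ReviewRouter()
print(router.route(Decision("A-1", "approved", 0.97, high_risk=False)))  # auto_finalized
print(router.route(Decision("A-2", "declined", 0.62, high_risk=False)))  # pending_human_review
print(router.route(Decision("A-3", "approved", 0.99, high_risk=True)))   # pending_human_review
router.record_review("A-2", reviewer="analyst-7", final_outcome="approved")
print([d.case_id for d in router.review_queue])  # ['A-3']
```

Logging the cases that skipped review matters as much as logging the reviewed ones: it lets auditors check later whether the threshold let risky decisions through unexamined.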

Proactive Risk Management Tools

Modern AI governance tools focus on detecting risks before they escalate. These tools analyze data flows, model behavior, and system interactions to flag potential issues.

Examples include:

- Bias detection algorithms that review datasets for imbalances.

- Continuous compliance platforms that monitor adherence to evolving regulations.

By integrating such tools, organizations can move beyond reactive audits to proactive management.
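A basic version of the dataset review described above fits in a few lines: report each group's share of the training data and its rate of positive labels, and flag groups that fall below a minimum share. The (group, label) row format, the group names, and the 10% threshold are assumptions for illustration; dedicated bias-detection toolkits go considerably further.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

def representation_report(rows: Iterable[Tuple[str, int]],
                          min_share: float = 0.10) -> Dict[str, dict]:
    """Per-group share of the training set and rate of positive labels.

    Each row is (group, label) with label 1 meaning the favorable outcome.
    Groups below `min_share` of all rows are flagged as under-represented.
    """
    data = list(rows)
    n = len(data)
    group_counts = Counter(g for g, _ in data)
    positive_counts = Counter(g for g, y in data if y == 1)
    report = {}
    for g, count in group_counts.items():
        share = count / n
        report[g] = {
            "share": round(share, 3),
            "positive_rate": round(positive_counts[g] / count, 3),
            "under_represented": share < min_share,
        }
    return report

# Hypothetical training set: 80 rows for group A, 5 for B, 15 for C.
training_rows = ([("A", 1)] * 60 + [("A", 0)] * 20 +
                 [("B", 1)] * 1 + [("B", 0)] * 4 +
                 [("C", 1)] * 6 + [("C", 0)] * 9)
for group, stats in representation_report(training_rows).items():
    print(group, stats)
```

Here group B is flagged as under-represented and also has a far lower positive rate than group A, two imbalances a reviewer would want explained before the data is used for training.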

The Role of Collaborative Efforts in Building Trust

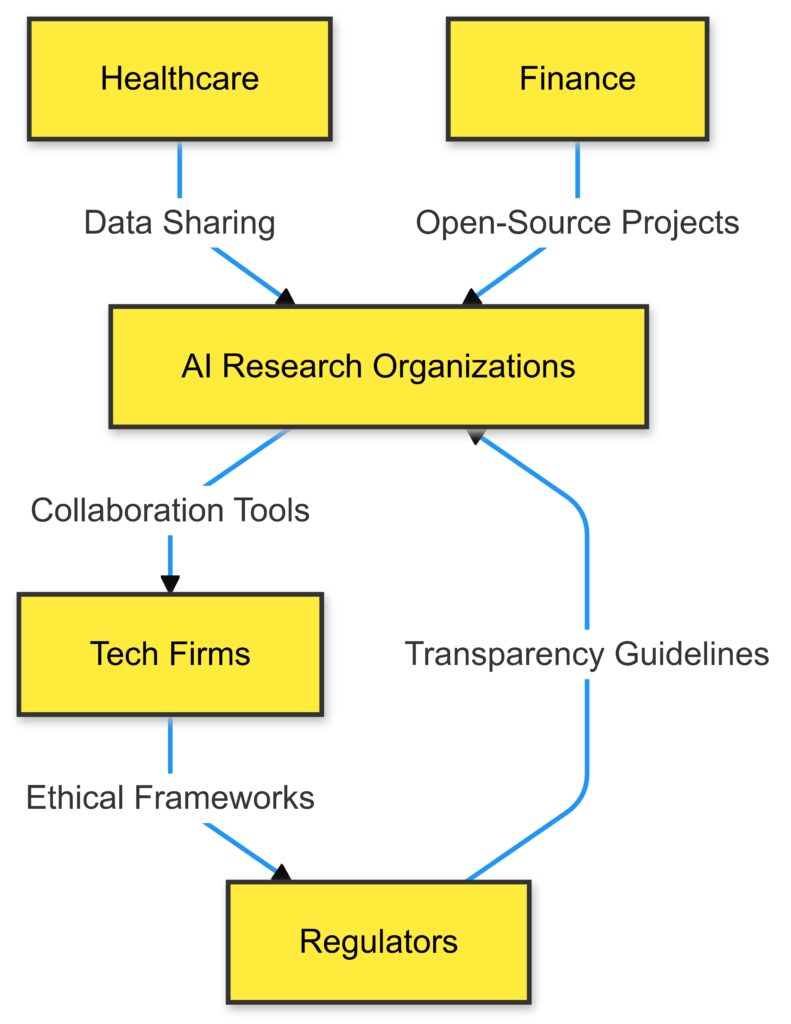

Collaborative efforts among industries and regulators to ensure trust and accountability in AI.

Cross-Industry Alliances

To tackle the challenges of black-box AI, industries must collaborate to share best practices and tools. Initiatives like the Partnership on AI bring together companies, academics, and governments to develop transparent and ethical systems.

Open-Source Platforms for Auditability

Open-source projects like Hugging Face Transformers and PyCaret allow organizations to audit AI models collaboratively. These platforms promote:

- Transparency by exposing code and workflows.

- Community-driven improvements in model explainability.

Such collaboration fosters innovation while holding developers accountable.

Public Engagement and Awareness

Lastly, building trust requires educating the public about AI’s capabilities and limitations. Transparent communication around audit trails and ethical practices can alleviate fears about AI misuse.

Efforts like Google’s AI Principles and IBM’s AI Ethics Board show how organizations can involve the public in shaping AI governance.

Conclusion: Building a Transparent Future for Black-Box AI

Audit trails are more than a technical necessity—they’re a cornerstone of trust and accountability in the age of AI. While black-box systems challenge traditional auditing methods with their opacity and evolving behavior, innovative solutions are paving the way for greater transparency.

From Explainable AI and blockchain-based logs to proactive risk management tools, we are witnessing the emergence of a robust ecosystem designed to demystify AI decisions. Collaborative efforts—spanning industries, regulators, and the public—are essential to ensure that AI systems serve humanity ethically and responsibly.

As these advancements unfold, the ultimate goal remains clear: creating AI systems that are both powerful and trustworthy, with decisions that can be explained and justified to all stakeholders. This balance will shape the sustainable integration of AI into critical domains for decades to come.

FAQs

Can blockchain technology be used for AI audit trails?

Yes, blockchain offers a promising solution for secure, tamper-evident audit trails. By storing logs in a decentralized ledger, it ensures:

- Records cannot be altered or deleted without detection.

- Stakeholders can independently verify the system’s actions.

For example, in supply chain AI, blockchain can log every decision made by the system, such as route optimizations or supplier selections, ensuring transparency across the network.

What role do regulations play in improving AI auditability?

Regulations like the EU Artificial Intelligence Act and GDPR set requirements that shape AI audit trails. They require organizations to:

- Document decision-making processes, especially for high-risk applications.

- Ensure compliance with privacy and ethical guidelines.

- Conduct regular audits to verify adherence.

For example, financial institutions using AI for credit scoring must comply with regulations like the Fair Credit Reporting Act, ensuring their systems are explainable and fair.

How can smaller organizations ensure effective AI auditability with limited resources?

Small organizations can adopt open-source tools and frameworks for auditing AI systems. Solutions like TensorFlow Extended (TFX) or PyCaret are cost-effective and support:

- Traceability in AI workflows.

- Model explainability with minimal manual intervention.

Additionally, partnering with external experts for third-party audits can provide insights without straining internal resources. For example, a small startup using AI for hiring can employ open-source bias detection tools to ensure their system treats candidates fairly.

What is the difference between black-box AI and explainable AI?

Black-box AI refers to systems whose decision-making processes are difficult or impossible to interpret, such as deep neural networks. In contrast, Explainable AI (XAI) focuses on creating models or tools that provide insights into how and why decisions are made.

For example:

- A black-box AI might approve a loan but give no clear reason.

- An XAI-enhanced model could explain, “The loan was approved due to a high credit score and stable income.”

How do audit trails help reduce bias in AI systems?

Audit trails help identify and address biases by documenting every decision and its contributing factors. Developers can analyze logs to detect whether:

- Certain groups are consistently disadvantaged.

- Training data contains imbalances that lead to unfair outcomes.

For instance, an audit trail might reveal that an AI hiring tool favors candidates from specific universities, prompting adjustments to ensure equal opportunities for all applicants.
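A check like this can be sketched against a decision log: compute each group's selection rate and compare it with the rate of the best-treated group. The 0.8 cutoff is the "four-fifths" rule of thumb from U.S. employment-selection guidance; it is a screening heuristic that prompts investigation, not proof of discrimination. The log format, outcome labels, and group names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping

def selection_rates(log: Iterable[Mapping[str, str]], favorable: str = "advance") -> Dict[str, float]:
    """Share of favorable outcomes per group, computed from decision-log entries."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for entry in log:
        totals[entry["group"]] += 1
        if entry["outcome"] == favorable:
            selected[entry["group"]] += 1
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_report(log: Iterable[Mapping[str, str]], threshold: float = 0.8) -> Dict[str, dict]:
    """Compare each group's selection rate with the highest group's rate."""
    rates = selection_rates(log)
    if not rates:
        return {}
    best = max(rates.values())
    report = {}
    for g, r in rates.items():
        ratio = r / best if best > 0 else None
        report[g] = {"selection_rate": round(r, 3),
                     "impact_ratio": None if ratio is None else round(ratio, 3),
                     "flag": ratio is not None and ratio < threshold}
    return report

# Hypothetical hiring log: group = applicant's university, outcome = screening result.
decision_log = ([{"group": "university_X", "outcome": "advance"}] * 30
                + [{"group": "university_X", "outcome": "reject"}] * 20
                + [{"group": "other", "outcome": "advance"}] * 15
                + [{"group": "other", "outcome": "reject"}] * 35)
for group, stats in adverse_impact_report(decision_log).items():
    print(group, stats)
```

In this made-up log, applicants from other universities advance at half the rate of university_X applicants, well under the four-fifths cutoff, so they are flagged for a closer look at the model and its training data.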

What industries benefit most from AI audit trails?

While all AI applications benefit from audit trails, certain industries rely on them heavily due to ethical and regulatory demands:

- Healthcare: Ensuring diagnostic accuracy and ethical treatment recommendations.

- Finance: Avoiding discriminatory lending practices and maintaining compliance.

- Government: Increasing transparency in public service algorithms, such as welfare distribution.

For example, in healthcare, an AI audit trail could track why an algorithm recommended surgery over alternative treatments, helping physicians explain the choice to patients.

Are audit trails required for compliance with AI regulations?

Yes, audit trails are often mandatory under AI-related regulations, particularly for systems classified as high-risk. These requirements aim to:

- Provide evidence of compliance with ethical guidelines.

- Ensure accountability in decision-making processes.

For instance, under the EU Artificial Intelligence Act, high-risk systems like biometric identification tools must maintain detailed logs to verify decisions are unbiased and lawful.

What tools are available to create and maintain AI audit trails?

Organizations can use a mix of open-source and commercial tools to implement audit trails, such as:

- TensorFlow Extended (TFX): Provides end-to-end traceability in AI workflows.

- Explainability tools: Tools like SHAP, LIME, or IBM’s AI Explainability 360 help interpret black-box decisions.

- Data lineage tools: Platforms like DataRobot track data inputs and outputs.

For example, a retail company using AI for customer personalization might deploy TFX to log how customer data influences product recommendations.

Can AI systems self-audit?

Yes, emerging AI technologies aim to incorporate self-auditing capabilities, allowing systems to generate their own audit trails. These systems:

- Log every decision made, along with the contributing factors.

- Flag potential ethical or compliance issues in real time.

For example, an AI chatbot might log its responses to customer queries and automatically highlight instances where sensitive topics were discussed, making human review easier.
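A deliberately simple sketch of that chatbot example: each exchange is appended to a log, and a keyword matcher flags exchanges that touch sensitive topics for human review. The topic list and regular expressions are hypothetical placeholders. Keyword matching misses paraphrases and produces false positives, which is why the flag routes the exchange to a person rather than acting on its own. In practice the logged text may itself need minimization before storage.

```python
import re
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical topic lexicon; real deployments would use a policy-defined taxonomy
# and usually a trained classifier rather than keywords.
SENSITIVE_TOPICS: Dict[str, List[str]] = {
    "health": [r"\bdiagnos\w*", r"\bmedication\b", r"\bsymptoms?\b"],
    "finance": [r"\bloan\b", r"\bdebt\b", r"\bbankrupt\w*"],
    "self_harm": [r"\bsuicid\w*", r"\bself[- ]harm\b"],
}
COMPILED = {topic: [re.compile(p, re.IGNORECASE) for p in patterns]
            for topic, patterns in SENSITIVE_TOPICS.items()}

def audit_turn(user_msg: str, bot_reply: str, log: List[dict]) -> dict:
    """Log one chatbot exchange and mark it for review if a sensitive topic appears."""
    text = f"{user_msg}\n{bot_reply}"
    topics = sorted(t for t, pats in COMPILED.items() if any(p.search(text) for p in pats))
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_msg": user_msg,
        "bot_reply": bot_reply,
        "flagged_topics": topics,
        "needs_review": bool(topics),
    }
    log.append(entry)
    return entry

chat_log: List[dict] = []
audit_turn("What are your opening hours?", "We're open 9 to 5 on weekdays.", chat_log)
audit_turn("Can I change my medication dose?", "Please ask your doctor before changing medication.", chat_log)
print([e["flagged_topics"] for e in chat_log])  # [[], ['health']]
```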

How do audit trails enhance trust in AI?

Audit trails enhance trust by making AI decisions transparent and verifiable. Users, regulators, and stakeholders can review logs to ensure the system adheres to:

- Ethical standards.

- Regulatory compliance.

- Fair treatment of all individuals.

For instance, public sector AI, such as welfare distribution algorithms, can maintain citizen trust by showing how decisions about benefits were calculated and verified.

What happens if an audit trail reveals an error or bias?

If an audit trail uncovers an error or bias, it allows organizations to take corrective actions such as:

- Adjusting the algorithm or retraining it with balanced data.

- Enhancing input controls to prevent future issues.

- Reporting the findings to regulators or stakeholders to maintain transparency.

For example, if a hiring AI consistently excludes women from leadership roles, the company can use audit logs to pinpoint the issue and retrain the model using diverse and balanced datasets.
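One concrete way to retrain on "balanced" data without collecting new records is to reweight the existing ones. The sketch below implements reweighing, a published preprocessing technique by Kamiran and Calders: each (group, label) combination gets the weight P(group) × P(label) / P(group, label), which makes group membership and outcome statistically independent in the weighted training set. It is one option among several (resampling, gathering more data, fairness constraints during training), and the group names and counts are made up.

```python
from collections import Counter
from typing import Dict, List, Tuple

Row = Tuple[str, int]  # (group, label), label 1 = favorable outcome

def reweighing_weights(rows: List[Row]) -> Dict[Row, float]:
    """Weight per (group, label) cell: w = P(group) * P(label) / P(group, label).

    Under these weights, group membership and label are statistically
    independent in the training set.
    """
    n = len(rows)
    group_counts = Counter(g for g, _ in rows)
    label_counts = Counter(y for _, y in rows)
    joint_counts = Counter(rows)
    return {(g, y): (group_counts[g] / n) * (label_counts[y] / n) / (c / n)
            for (g, y), c in joint_counts.items()}

def weighted_positive_rate(rows: List[Row], weights: Dict[Row, float], group: str) -> float:
    """Favorable-label rate for one group after weighting (a sanity check)."""
    total = sum(weights[r] for r in rows if r[0] == group)
    positive = sum(weights[r] for r in rows if r[0] == group and r[1] == 1)
    return positive / total

# Hypothetical historical data on promotions to leadership roles.
history = ([("men", 1)] * 30 + [("men", 0)] * 20 +
           [("women", 1)] * 10 + [("women", 0)] * 40)
weights = reweighing_weights(history)
print({cell: round(w, 3) for cell, w in weights.items()})
print(round(weighted_positive_rate(history, weights, "men"), 3),
      round(weighted_positive_rate(history, weights, "women"), 3))
```

Promoted women, the rarest combination relative to expectation, receive the largest weight (2.0), and after weighting both groups have the same positive rate. Each row's weight would then be passed to the learner during retraining; many scikit-learn estimators accept this through the `sample_weight` argument of `fit`.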

Resources

Research Papers and Articles

- “Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI)” by Adadi and Berrada (2018): Published in IEEE Access, this paper delves into the principles of explainable AI, a critical component of audit trails.

- “Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims” by Brundage et al. (2020): Explains strategies for designing AI systems with transparency and verifiability in mind.

- “AI Governance: Balancing Trust and Regulation” by the World Economic Forum (2021): Focuses on regulatory frameworks and the role of auditability in AI governance.

Tools and Frameworks

- SHAP (SHapley Additive exPlanations): A popular tool for interpreting predictions made by machine learning models.

- LIME (Local Interpretable Model-agnostic Explanations): Simplifies black-box AI decisions by explaining individual predictions.

- TensorFlow Extended (TFX): Provides workflow management and audit trail creation for machine learning pipelines.

- H2O.ai Explainability Suite: Offers tools to explain machine learning models, including visualizations and interpretability metrics.

Courses and Tutorials

- Explainable AI (XAI) by IBM (Coursera): Covers methods and tools for creating interpretable AI systems, ideal for those implementing audit trails.

- Ethics of AI by Harvard University (edX): Focuses on the ethical implications of AI, including transparency and accountability.

- Kaggle Tutorials on Model Interpretability: Practical guides to applying interpretability tools in real-world machine learning projects.

Organizations and Initiatives

- Partnership on AI: A global consortium advocating for transparency and ethical AI practices.

- AI Now Institute: Conducts research on the societal impact of AI, focusing on accountability and regulation.

- NIST (National Institute of Standards and Technology): Provides guidelines and frameworks for explainable and auditable AI systems.

Webinars and Conferences

- The AI Explainability 360 Webinar Series by IBM: Focuses on open-source tools and techniques for improving explainability.

- Global AI Summit (annual): Features sessions on auditing AI systems, governance, and emerging technologies in explainability.

- NeurIPS (Conference on Neural Information Processing Systems): Includes workshops on transparency and auditability in machine learning.