Simultaneous Localization and Mapping (SLAM) plays a critical role in robotics and autonomous systems, where real-time mapping and location tracking are paramount.

As SLAM algorithms evolve, it’s crucial to understand how to measure their performance accurately and consistently. Benchmarking SLAM with reliable metrics and best practices provides insights into an algorithm’s effectiveness and paves the way for continuous improvement.

Understanding SLAM: What Makes It So Critical?

Why SLAM Matters for Robotics and Autonomous Systems

SLAM is essential for enabling robots to map unknown environments while simultaneously tracking their position. This dual capability is fundamental for applications ranging from self-driving cars to robotic vacuum cleaners. SLAM algorithms are evaluated on their precision, efficiency, and robustness, especially in challenging or dynamic environments.

Core Components of SLAM Algorithms

SLAM consists of two core elements:

- Localization: Keeping track of the robot’s position within the mapped environment.

- Mapping: Building an accurate representation of the environment as the robot explores.

Without these, an autonomous system would struggle to navigate effectively. Hence, benchmarking SLAM is essential for assessing how well an algorithm performs these tasks, especially under varying conditions.

Key SLAM Metrics for Accurate Benchmarking

Absolute Trajectory Error (ATE)

Absolute Trajectory Error (ATE) is a primary metric that measures the global accuracy of a SLAM algorithm by comparing the estimated and ground truth trajectories. A low ATE score indicates high localization accuracy, essential for applications requiring precise navigation.

- Use Case: ATE is valuable in scenarios where a robot’s trajectory is critical, such as drone mapping or autonomous driving.

- Challenges: Although it’s an essential metric, ATE doesn’t capture local inconsistencies in the trajectory.
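A common way to compute ATE follows the definition popularized by the TUM RGB-D benchmark (Sturm et al.). First, rigidly align the estimated positions to the ground truth with the Horn/Umeyama method (rotation plus translation, no scale). Then take the root-mean-square error (RMSE) of the remaining position differences. The minimal sketch below assumes both trajectories are already time-associated N×3 NumPy arrays. The function names are illustrative.

```python
import numpy as np


def align_rigid(est: np.ndarray, gt: np.ndarray):
    """Rotation R and translation t minimising sum ||R @ est_i + t - gt_i||^2 (Umeyama, no scale)."""
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    cov = (gt - mu_gt).T @ (est - mu_est) / len(est)  # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0  # enforce a proper rotation (no reflection)
    R = U @ S @ Vt
    t = mu_gt - R @ mu_est
    return R, t


def ate_rmse(est: np.ndarray, gt: np.ndarray) -> float:
    """ATE: RMSE of position residuals after rigidly aligning est (N x 3) onto gt (N x 3).

    Sketch only: assumes the two arrays are already associated row by row.
    """
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    residuals = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(residuals ** 2)))
```

Monocular systems recover trajectories only up to scale. For them, a similarity alignment that also estimates scale is normally used instead of the rigid one shown here.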

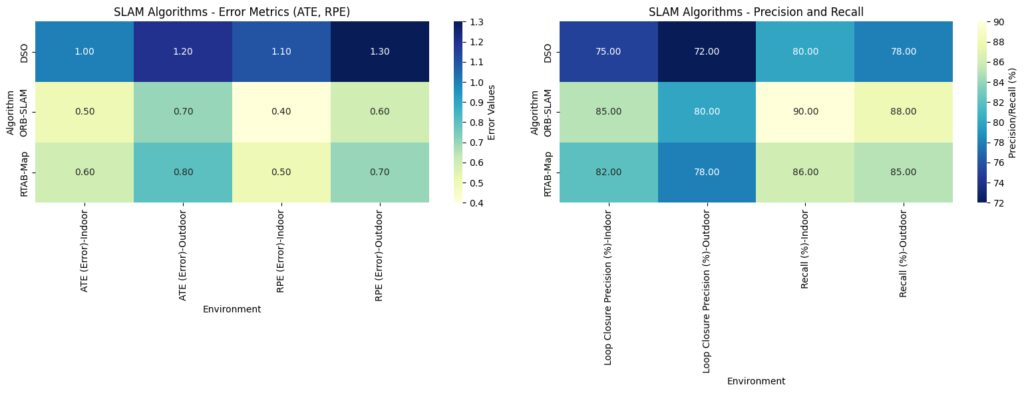

Figure: Indoor and outdoor performance of each SLAM algorithm on four metrics, shown as two heatmaps. Error metrics heatmap (ATE and RPE, lower is better): lighter colors represent lower errors, indicating better performance. Precision/recall heatmap (loop closure precision and recall, higher is better): darker colors represent lower precision or recall, highlighting variations across algorithms and environments.

Relative Pose Error (RPE)

Relative Pose Error (RPE) assesses the accuracy of short-term motion estimates. It compares the estimated change in pose (translation and rotation) over a fixed time or distance interval with the ground-truth change over the same interval. Because it measures local drift, it is useful in applications where smooth and predictable movement is essential.

- Use Case: This metric is particularly relevant for indoor robots where smaller, frequent movements need precise tracking.

- Limitations: RPE alone may not reflect the overall accuracy of the map, so it’s often used alongside ATE.
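RPE as defined by Sturm et al. compares relative motions rather than absolute positions. For each pose pair (i, i + Δ), the error is the residual between the true relative motion and the estimated relative motion over that interval. The minimal sketch below computes the translational RMSE of that residual using 4×4 homogeneous pose matrices. It assumes time-associated poses and an interval counted in frames; both are illustrative choices.

```python
import numpy as np


def relative_pose_errors(est, gt, delta=1):
    """Translational RPE for every pose pair (i, i + delta).

    est and gt are equal-length, time-associated lists of 4x4 homogeneous poses (assumed).
    """
    errors = []
    for i in range(len(gt) - delta):
        gt_motion = np.linalg.inv(gt[i]) @ gt[i + delta]     # true motion over the interval
        est_motion = np.linalg.inv(est[i]) @ est[i + delta]  # estimated motion over the interval
        error = np.linalg.inv(gt_motion) @ est_motion        # residual relative motion
        errors.append(np.linalg.norm(error[:3, 3]))          # keep the translational part only
    return np.array(errors)


def rpe_rmse(est, gt, delta=1):
    """RMSE of the translational relative pose errors."""
    errors = relative_pose_errors(est, gt, delta)
    return float(np.sqrt(np.mean(errors ** 2)))
```

Because only relative motion is compared, a constant offset between the estimated and ground-truth trajectories yields zero RPE. The same offset would inflate unaligned position errors everywhere, which is why RPE and ATE complement each other.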

Loop Closure Precision and Recall

Loop closure is essential in SLAM because it corrects trajectory drift that accumulates over time. Loop closure precision is the fraction of detected loop closures that are correct. Recall is the fraction of true loop closures (actual revisits) that the algorithm detects.

- Importance: High loop closure performance minimizes accumulated drift and improves map accuracy.

- Challenges: Balancing precision and recall is crucial. Low recall leaves accumulated drift uncorrected, while low precision introduces false loop closures that can distort the trajectory and map.
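The two definitions can be computed directly once detected and ground-truth loop closures are expressed as pairs of frame indices. The sketch below uses exact pair matching as a simplifying assumption. Practical evaluations usually accept a detection when it falls within some tolerance (in frames or metres) of a true revisit. The function name is hypothetical.

```python
def loop_closure_precision_recall(detected, ground_truth):
    """Precision and recall of loop closures given as (frame_a, frame_b) pairs.

    Sketch assumptions: exact pair matching, and an empty detected (or
    ground-truth) set scores 1.0 by the convention chosen here.
    """
    det = {tuple(sorted(pair)) for pair in detected}  # (a, b) and (b, a) are the same closure
    gt = {tuple(sorted(pair)) for pair in ground_truth}
    true_positives = len(det & gt)
    precision = true_positives / len(det) if det else 1.0  # share of detections that are real
    recall = true_positives / len(gt) if gt else 1.0       # share of real revisits detected
    return precision, recall
```

For example, three detections of which two are real revisits, evaluated against four true revisits, give a precision of 2/3 and a recall of 0.5.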

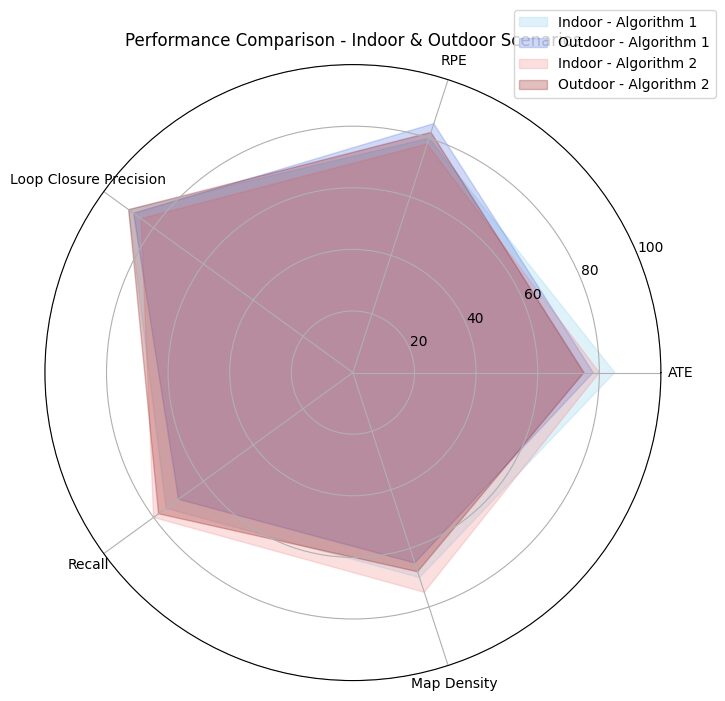

Figure legend: Algorithm 1 is shown in shades of blue (light blue for indoor, dark blue for outdoor performance), and Algorithm 2 in shades of red (light red for indoor, dark red for outdoor performance).

Mapping Quality Metrics

Map Consistency

Map consistency measures how accurately and reliably the SLAM algorithm maps the environment over time. An inconsistent map might show discrepancies that could confuse the robot during localization or navigation.

- Application: In applications where the robot revisits certain areas, map consistency helps in assessing the reliability of the SLAM system over time.

- Evaluation: Consistency is typically assessed by comparing what the system maps on different visits to the same area. Fewer discrepancies in revisited sections indicate a more reliable map.
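There is no single standard consistency metric. One simple, hypothetical way to quantify disagreement between two visits is to express the points mapped on each pass in the same map frame. Then average, for every point from the second pass, the distance to its nearest neighbour from the first pass. The brute-force sketch below is only practical for small point clouds. For large ones, a k-d tree (for example `scipy.spatial.cKDTree`) would replace the pairwise distance matrix.

```python
import numpy as np


def revisit_consistency(first_pass: np.ndarray, second_pass: np.ndarray) -> float:
    """Mean nearest-neighbour distance (map units) from second-pass points to first-pass points.

    Hypothetical metric: both clouds are (N x 3) arrays assumed to be in the same map frame.
    Lower values mean the two visits agree more closely.
    """
    # Pairwise distances, shape (len(second_pass), len(first_pass)); brute force for small clouds
    d = np.linalg.norm(second_pass[:, None, :] - first_pass[None, :, :], axis=2)
    return float(d.min(axis=1).mean())
```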

Map Density and Coverage

Map density refers to the level of detail captured in the map, while coverage measures the completeness of the map. Higher density and coverage mean the map is more informative and practical for navigation.

- Use Case: Both metrics are essential in SLAM systems for inspection or surveying, where detailed and complete maps are required.

- Challenges: Balancing density with processing efficiency is often a challenge, as denser maps can strain computational resources.
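Both quantities are often measured on a voxel grid. The sketch below assumes a set of reference voxels taken from a ground-truth map. Coverage is the fraction of those reference voxels that contain at least one mapped point. Density is defined here as the average number of points per occupied voxel, one of several reasonable definitions. The 0.5 m voxel size and the function names are illustrative.

```python
import math
from collections import Counter


def voxel_counts(points, cell=0.5):
    """Count points per voxel; points are (x, y, z) tuples in metres, cell size is illustrative."""
    return Counter(tuple(math.floor(c / cell) for c in p) for p in points)


def coverage_and_density(mapped_points, reference_cells, cell=0.5):
    """Coverage of reference voxels and mean points per occupied voxel (sketch definitions)."""
    counts = voxel_counts(mapped_points, cell)
    covered = sum(1 for v in reference_cells if v in counts)
    coverage = covered / len(reference_cells)  # fraction of the ground-truth map observed
    density = sum(counts.values()) / len(counts) if counts else 0.0  # points per occupied voxel
    return coverage, density
```

A smaller voxel size makes both numbers more sensitive to fine detail but also more expensive to compute, which mirrors the density-versus-efficiency trade-off described above.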

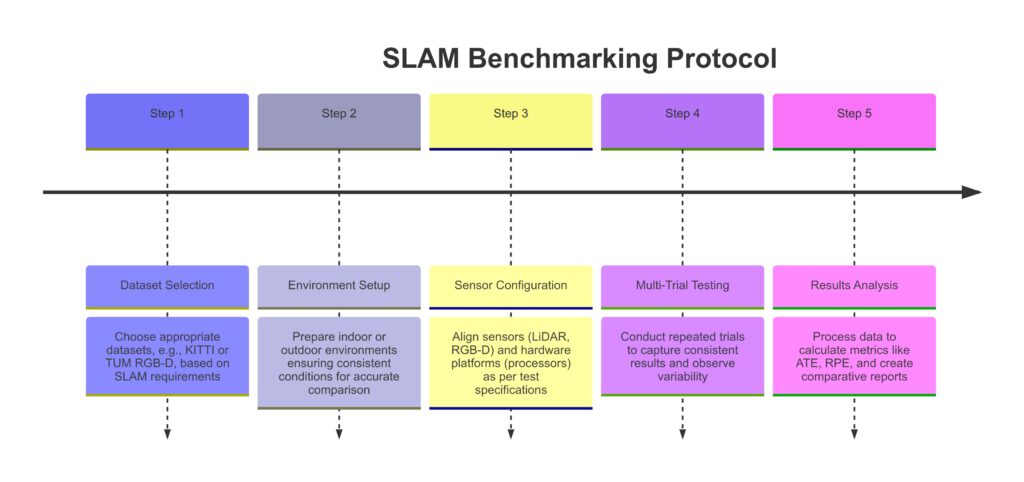

Practical Benchmarking Protocols for SLAM

Standardized Datasets and Benchmarks

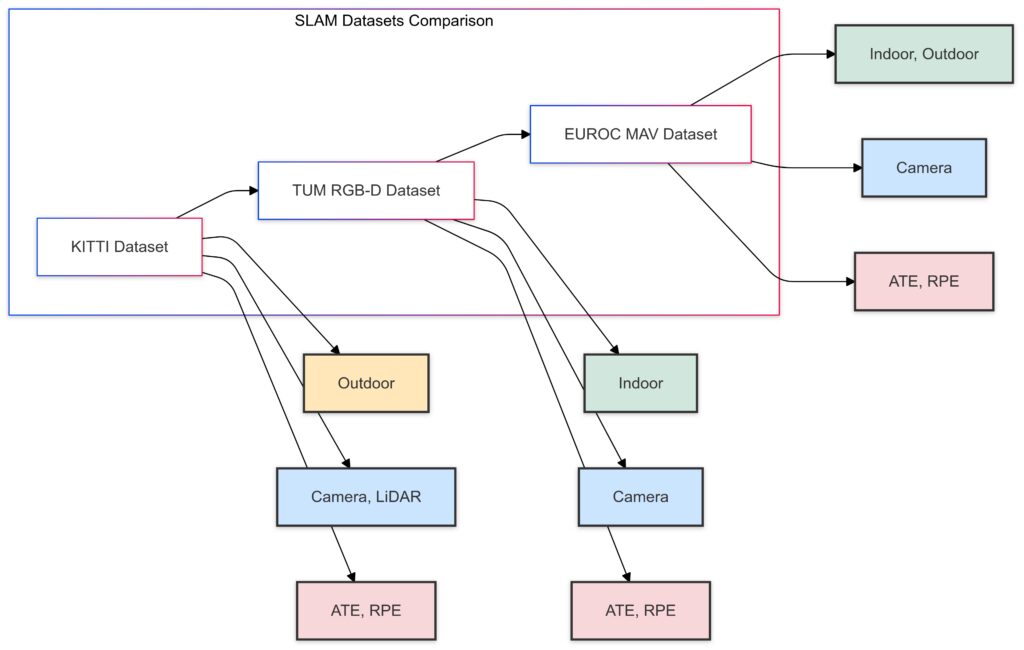

Using standardized datasets, like KITTI for outdoor environments or TUM RGB-D for indoor scenarios, ensures that SLAM algorithms are evaluated under controlled, repeatable conditions.

- Benefit: Standardized datasets help create a common ground for evaluating SLAM performance, aiding in fair comparisons across different algorithms.

- Examples: In addition to KITTI and TUM RGB-D, other datasets like EUROC and ETH Zurich MAV also provide diverse environments for robust testing.

Figure: Comparison of standardized datasets by dataset type (indoor/outdoor), sensor type (camera, LiDAR), and key metrics measured (ATE, RPE).
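TUM RGB-D ground truth, and many tools that follow it, store trajectories as plain text with one pose per line: `timestamp tx ty tz qx qy qz qw`, with `#` marking comments. Estimated and ground-truth poses are usually stamped at different rates, so they must be associated by timestamp before ATE or RPE can be computed. The minimal sketch below pairs each estimated timestamp with the nearest unused ground-truth timestamp within a 20 ms tolerance. That tolerance is an assumption, and the benchmark's own tools may pair timestamps differently in edge cases.

```python
import bisect


def read_tum_trajectory(lines):
    """Parse 'timestamp tx ty tz qx qy qz qw' lines into {timestamp: (tx, ty, tz)}."""
    traj = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        values = [float(v) for v in line.split()]
        traj[values[0]] = tuple(values[1:4])  # keep position only; quaternion ignored in this sketch
    return traj


def associate(est_stamps, gt_stamps, max_diff=0.02):
    """Pair each estimated timestamp with the nearest unused ground-truth one (max_diff is assumed)."""
    gt_sorted = sorted(gt_stamps)
    used, pairs = set(), []
    for t in sorted(est_stamps):
        k = bisect.bisect_left(gt_sorted, t)
        # Candidates: the ground-truth stamps on either side of the insertion point
        candidates = [gt_sorted[j] for j in (k - 1, k) if 0 <= j < len(gt_sorted)]
        best = min(candidates, key=lambda g: abs(g - t), default=None)
        if best is not None and abs(best - t) <= max_diff and best not in used:
            used.add(best)
            pairs.append((t, best))
    return pairs
```

An open file object can be passed directly as `lines`. The associated pairs then index both dictionaries to build the row-aligned position arrays that ATE and RPE need.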

Evaluation in Real-World Scenarios

Testing SLAM in real-world conditions is vital for understanding its performance under varying lighting, dynamic objects, and unexpected obstacles.

- Importance: Real-world testing reveals potential limitations and helps fine-tune the SLAM algorithm for practical applications.

- Best Practice: Use scenarios similar to the intended application environment; for example, a SLAM algorithm for autonomous drones should be tested in both open and obstacle-dense areas.

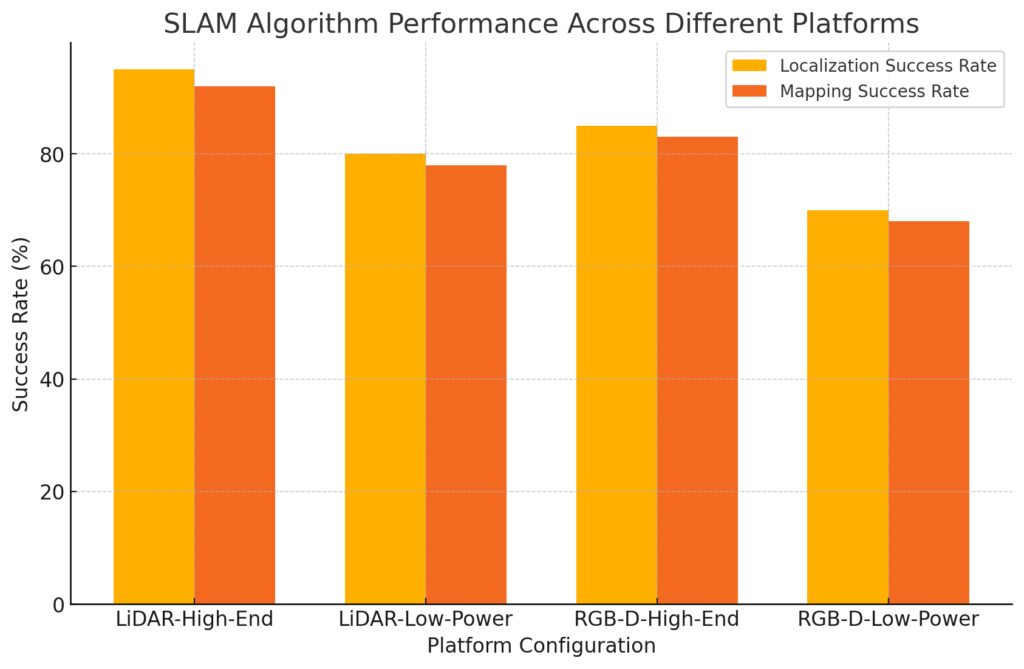

Cross-Platform Testing

Testing SLAM on different hardware configurations—such as various sensors and processing units—ensures compatibility and reliability across platforms.

- Example: A SLAM algorithm optimized for LiDAR may perform differently with visual sensors; testing on both types gives a more comprehensive assessment.

- Advantage: This type of testing is crucial for applications where flexibility in hardware is a benefit, such as autonomous vehicles that might use different sensor suites.

Figure legend (hardware configurations): LiDAR-Low-Power, lower-power devices with LiDAR sensors; RGB-D-High-End, high-performance processors with RGB-D sensors; RGB-D-Low-Power, lower-power devices with RGB-D sensors.
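Accuracy is only half of a cross-platform comparison. The same system can also be too slow on a low-power board. A small timing harness, run unchanged on every target, makes latency directly comparable. In the sketch below, `process_frame` is a hypothetical stand-in for whatever per-frame entry point the SLAM system under test exposes, and the 95th-percentile estimate is deliberately simple.

```python
import statistics
import time


def profile_frames(process_frame, frames):
    """Per-frame latency statistics; `process_frame` is a hypothetical callback for the system under test.

    Assumes `frames` is non-empty.
    """
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        latencies.append(time.perf_counter() - start)
    latencies.sort()
    p95 = latencies[min(len(latencies) - 1, int(0.95 * len(latencies)))]  # simple percentile estimate
    mean = statistics.mean(latencies)
    return {"mean_s": mean, "p95_s": p95, "fps": 1.0 / mean if mean > 0 else float("inf")}
```

Reporting these figures next to ATE and RPE for each hardware configuration shows whether accuracy holds up once the system has to keep pace with the sensor rate.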

Handling SLAM Challenges in Diverse Environments

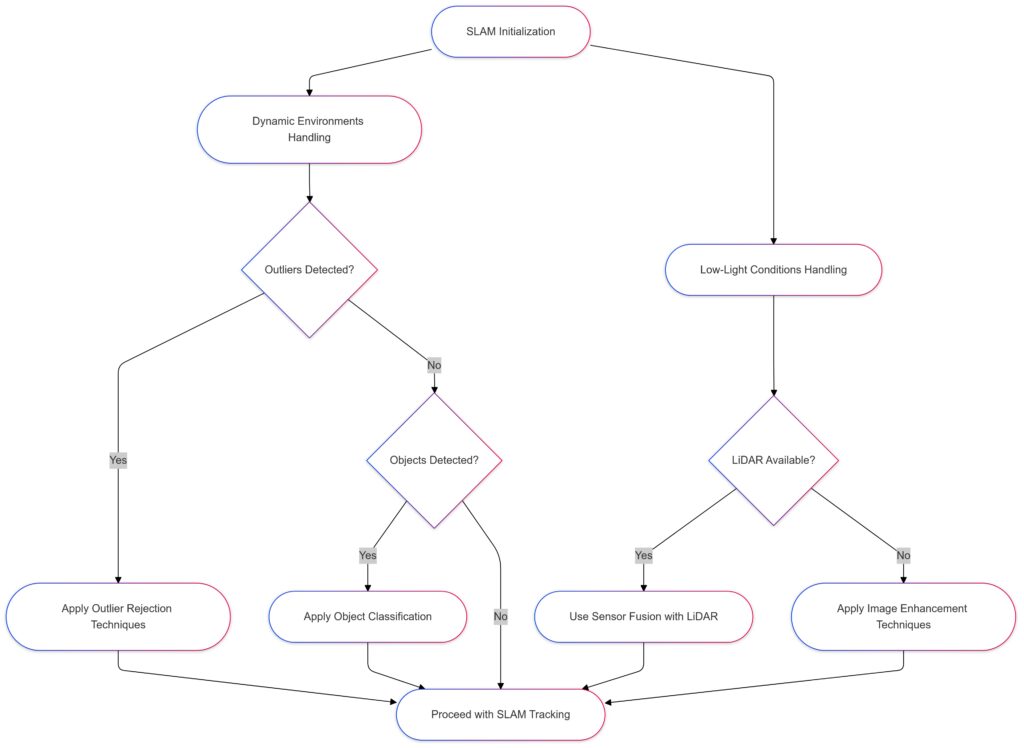

Dynamic Environments

One of the biggest challenges in SLAM is managing dynamic environments with moving objects, like people, vehicles, or animals. Standard SLAM systems often struggle to maintain accuracy in the presence of these variables.

- Solution: Algorithms designed for dynamic environments often rely on outlier rejection methods to ignore moving objects or classify static and dynamic components.

- Example: For autonomous cars, effective dynamic object handling is essential to avoid tracking errors caused by pedestrians or other vehicles.
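One common form of outlier rejection treats the dominant rigid motion between two frames as the sensor's own motion and flags correspondences that disagree with it as likely dynamic. The sketch below uses RANSAC over 3-point samples with a Kabsch/Umeyama rigid fit. It assumes known 3-D point correspondences and a 0.1 m inlier threshold, both illustrative. It also assumes static points are the majority, which breaks down when a moving object fills most of the view.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rigid transform (R, t) such that R @ src_i + t approximates dst_i."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((dst - mu_d).T @ (src - mu_s))
    S = np.eye(src.shape[1])
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[-1, -1] = -1.0  # avoid returning a reflection
    R = U @ S @ Vt
    return R, mu_d - R @ mu_s


def static_mask(prev_pts, curr_pts, threshold=0.1, trials=200, seed=0):
    """RANSAC sketch: True where a correspondence fits the dominant (ego) motion, False if likely dynamic."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(prev_pts), dtype=bool)
    for _ in range(trials):
        sample = rng.choice(len(prev_pts), size=3, replace=False)  # minimal sample for a 3-D rigid fit
        R, t = kabsch(prev_pts[sample], curr_pts[sample])
        inliers = np.linalg.norm(prev_pts @ R.T + t - curr_pts, axis=1) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on all inliers of the best hypothesis, then re-classify every correspondence
    R, t = kabsch(prev_pts[best], curr_pts[best])
    return np.linalg.norm(prev_pts @ R.T + t - curr_pts, axis=1) < threshold
```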

Low-Light and Low-Texture Environments

SLAM algorithms relying on vision-based sensors can struggle in low-light or low-texture areas (e.g., dark hallways or plain walls), leading to reduced localization accuracy.

- Solution: To address this, some SLAM systems fuse camera data with LiDAR, an active sensor that is largely unaffected by ambient lighting, or with IMU data to aid tracking.

- Use Case: Indoor drones or warehouse robots benefit from sensor fusion in low-light areas to maintain performance consistency.
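The core idea behind sensor fusion is to weight each source by how much it can be trusted. Real systems fuse full poses with an extended Kalman filter or a factor graph. The scalar sketch below shows only the underlying inverse-variance weighting, which is the measurement update of a one-dimensional Kalman filter for two independent Gaussian estimates. The variances are made-up illustrative values: a camera degraded by low light is given a large variance, so it contributes little.

```python
def fuse(x_a, var_a, x_b, var_b):
    """Inverse-variance weighted fusion of two independent scalar estimates (e.g. camera and LiDAR)."""
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    x = (w_a * x_a + w_b * x_b) / (w_a + w_b)  # trust each estimate in proportion to 1 / variance
    var = 1.0 / (w_a + w_b)                   # fused estimate is more certain than either input
    return x, var
```

For example, `fuse(10.4, 1.0, 10.0, 0.04)` yields a position of about 10.015 with variance 1/26. The result sits close to the low-variance LiDAR reading, and its variance is lower than that of either sensor alone.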

Large-Scale and Outdoor Mapping

For large-scale environments, maintaining consistent mapping accuracy over vast distances is a significant challenge due to the accumulation of error, known as drift.

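- Solution: Loop closures add constraints whenever the system recognizes a previously visited place. Global optimization techniques, such as pose-graph optimization or bundle adjustment, then use those constraints to redistribute the accumulated error across the whole trajectory.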

- Best Practice: Outdoor applications like agricultural drones often depend on these methods to avoid errors in large, open environments.
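The smallest possible pose graph shows how a loop closure corrects drift: positions on a line. Odometry edges state how far the robot moved between consecutive poses, and a loop-closure edge states that two poses are at the same place. Solving the resulting linear least-squares problem spreads the accumulated error across all edges instead of leaving it at the end of the trajectory. Real systems optimize 2-D or 3-D poses with nonlinear solvers such as g2o, GTSAM, or Ceres. The 1-D formulation and the loop weight of 10 below are illustrative assumptions.

```python
import numpy as np


def optimize_1d_pose_graph(odometry, loop_closures, loop_weight=10.0):
    """Weighted linear least squares over 1-D positions x[0..n], with x[0] anchored at 0.

    odometry[k] is the measured displacement x[k + 1] - x[k];
    loop_closures holds (i, j, d) constraints meaning x[j] - x[i] = d.
    """
    n = len(odometry) + 1
    A = np.zeros((1 + len(odometry) + len(loop_closures), n))
    b = np.zeros(A.shape[0])
    A[0, 0] = 1.0  # anchor the first pose to remove the global-offset ambiguity
    for k, d in enumerate(odometry):
        A[1 + k, k], A[1 + k, k + 1] = -1.0, 1.0
        b[1 + k] = d
    base = 1 + len(odometry)
    for m, (i, j, d) in enumerate(loop_closures):
        # Illustrative weight: a loop closure is trusted more than a single odometry step
        A[base + m, i], A[base + m, j] = -loop_weight, loop_weight
        b[base + m] = loop_weight * d
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x
```

With odometry `[1.1] * 5 + [-0.9] * 5` (true steps +1 then -1, returning to the start), dead reckoning ends about 1.0 away from the origin. Adding the loop closure `(0, 10, 0.0)` pulls the final position to roughly 0.001, with the correction spread evenly at about 0.1 per step.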

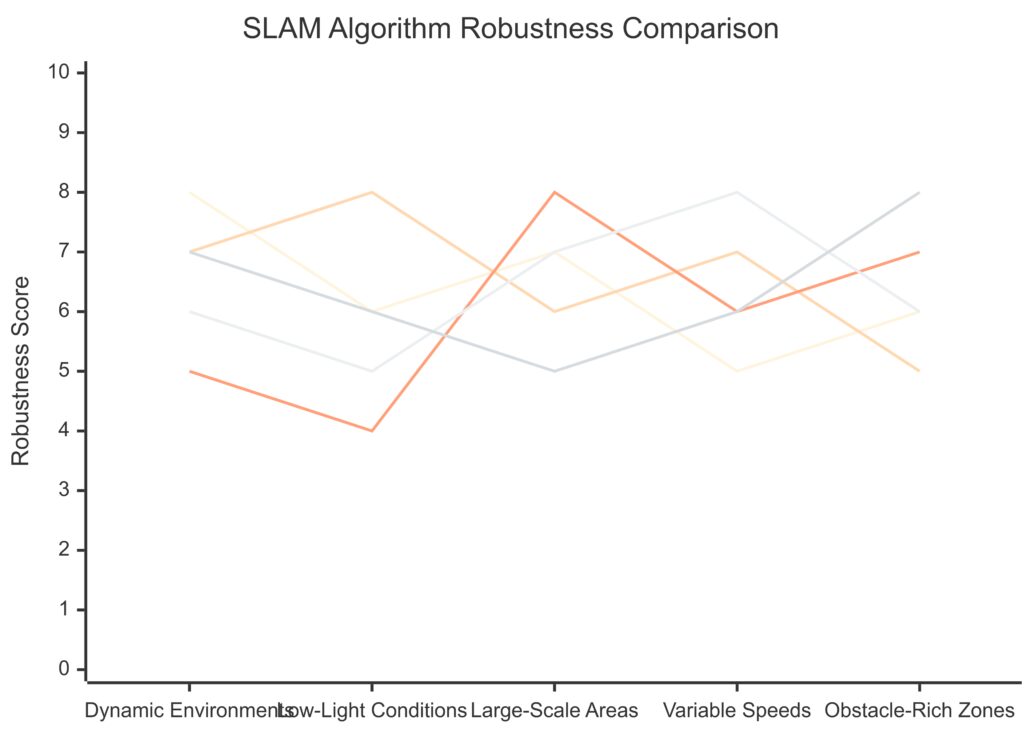

Evaluating Robustness: Stress Testing SLAM Algorithms

Extreme Weather Conditions

Testing SLAM under extreme weather (e.g., rain, fog, snow) is crucial for outdoor applications. Weather affects visual SLAM by obscuring camera input and can even impact LiDAR readings.

- Approach: Artificially creating these conditions in controlled environments or using weather-inclusive datasets can simulate real-world scenarios.

- Case Example: Autonomous cars benefit from this testing, as it prepares SLAM algorithms for adverse driving conditions.

Figure: Performance score (y-axis) of each SLAM algorithm under each test condition.

Variable Speeds and Accelerations

Different speeds impact SLAM performance, especially in high-speed applications where errors accumulate quickly. Rapid accelerations or abrupt changes in direction can disrupt SLAM’s tracking ability.

- Testing Approach: Evaluate the algorithm at various speeds and maneuvers, from slow and steady to fast and erratic movements.

- Benefit: Robots or drones in environments like search and rescue missions, where speed is critical, require SLAM algorithms tested in this way to avoid critical mapping errors.
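One way to make speed-dependent behaviour visible is to group per-pose errors by the ground-truth speed at which they occurred. The per-pose errors could be, for example, the aligned position residuals used for ATE. The sketch below assumes time-stamped ground-truth positions in metres and seconds. The bin edges are illustrative, and the function name is hypothetical.

```python
import math


def error_by_speed(timestamps, positions, errors, bins=((0.0, 1.0), (1.0, 3.0), (3.0, math.inf))):
    """Mean per-pose error for each ground-truth speed bin (m/s); bin edges are illustrative."""
    groups = {b: [] for b in bins}
    for k in range(1, len(timestamps)):
        # Speed over the interval ending at pose k, taken from ground truth
        speed = math.dist(positions[k], positions[k - 1]) / (timestamps[k] - timestamps[k - 1])
        for lo, hi in bins:
            if lo <= speed < hi:
                groups[(lo, hi)].append(errors[k])
                break
    return {b: sum(v) / len(v) for b, v in groups.items() if v}
```

If the mean error grows sharply in the fastest bins, the algorithm is likely to struggle in time-critical missions even when its overall ATE looks acceptable.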

Occlusions and Obstacles

Frequent occlusions—objects temporarily blocking the SLAM sensors—pose another significant challenge. These interruptions can hinder the algorithm’s ability to maintain an accurate trajectory.

- Solution: Some SLAM algorithms employ predictive tracking to anticipate the movement when visibility is temporarily lost.

- Use Case: Warehouse robots and indoor drones, where objects like shelves or people may often block sensors, benefit from robust handling of occlusions.
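A constant-velocity motion model is one of the simplest forms of predictive tracking. While the sensor is blocked, the last known velocity is extrapolated, and the estimate snaps back once observations return. Real systems typically use a Kalman filter or IMU propagation instead. The 2-D positions, fixed time step, and function name below are assumptions for illustration.

```python
def track_with_prediction(measurements, dt=0.1):
    """Fill occluded steps (None) in a stream of (x, y) position fixes with constant-velocity predictions."""
    track, velocity = [], (0.0, 0.0)
    for z in measurements:
        if z is not None:
            if track and track[-1] is not None:
                # Update the velocity estimate from the previous (measured or predicted) position
                velocity = ((z[0] - track[-1][0]) / dt, (z[1] - track[-1][1]) / dt)
            track.append(z)
        elif track and track[-1] is not None:
            last = track[-1]
            # Sensor occluded: extrapolate with the last known velocity
            track.append((last[0] + velocity[0] * dt, last[1] + velocity[1] * dt))
        else:
            track.append(None)  # nothing to predict from yet
    return track
```

With `dt=1.0`, the input `[(0, 0), (1, 0), None, None, (4, 0.2)]` fills the two occluded steps with `(2.0, 0.0)` and `(3.0, 0.0)`.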

Common Benchmarks and Standards for SLAM Performance

KITTI Dataset for Outdoor Environments

The KITTI dataset is a popular benchmark for evaluating SLAM in outdoor settings. Its real-world sequences include complex scenarios with varied lighting, moving objects, and road types, ideal for autonomous vehicle applications.

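- Key Metrics: The KITTI odometry benchmark ranks methods by average translational error (%) and rotational error (degrees per meter), computed over subsequences of 100 m to 800 m. This is a form of relative pose error, and many papers also report ATE on KITTI sequences.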

- Best Use Case: Autonomous cars and outdoor robots benefit significantly from testing with KITTI due to its real-world relevance.

TUM RGB-D Dataset for Indoor SLAM

For indoor SLAM, the TUM RGB-D dataset is widely used, providing RGB-D (color and depth) data sequences captured in indoor environments. This dataset is excellent for evaluating SLAM algorithms in household or indoor commercial applications.

- Core Metrics: The TUM RGB-D benchmark defines ATE and RPE, provides evaluation scripts for both, and supplies ground truth trajectories recorded with a motion-capture system for accuracy comparisons.

- Ideal Applications: Indoor robots, such as home assistants or warehouse bots, gain significant insights from testing with TUM RGB-D.

EUROC MAV Dataset for Aerial SLAM

The EUROC MAV dataset provides data specifically for micro aerial vehicles (MAVs), such as drones, recorded in an industrial machine hall and in a room equipped with a motion-capture system. It includes sequences with varying lighting and textures, testing a SLAM algorithm’s versatility in complex settings.

- Unique Aspects: EUROC includes challenging sequences, such as dimly lit rooms, narrow corridors, and areas with multiple obstacles.

- Perfect Fit: Aerial drones operating in inspection, surveillance, or mapping applications benefit from this dataset, as it closely resembles real-world conditions.

Best Practices for Benchmarking SLAM: Tips for Reliable Results

Consistent Evaluation Settings

To benchmark SLAM algorithms reliably, standardize your evaluation settings across tests. This includes setting up identical sensor configurations, map scales, and environmental conditions where possible.

- Why It Matters: Consistent settings allow for reliable comparisons between different SLAM algorithms, eliminating variability from extraneous factors.

- Example: When testing indoor robots, keep lighting levels and obstacle placement the same to accurately compare performance across SLAM models.

Repeated Testing in Varying Conditions

Perform multiple trials in varied conditions (e.g., different lighting, speeds, and obstacles) to understand an algorithm’s robustness and adaptability to different scenarios.

- Best Practice: SLAM systems with high repeatability in varying conditions demonstrate greater real-world applicability.

- Use Case: Repeated testing in complex indoor spaces helps ensure that the SLAM algorithm is ready for dynamic environments like warehouses or public areas.
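Repeated trials only help if the results are summarized carefully. The sketch below reports failed runs separately rather than silently dropping them, and it includes the median, which is less sensitive to one unusually bad run than the mean. Here `None` marks a run where tracking failed; that encoding and the function name are assumptions for illustration.

```python
import statistics


def summarize_trials(ate_per_trial):
    """Summarize ATE (metres) over repeated runs; None marks a run where tracking failed (assumed encoding)."""
    ok = [a for a in ate_per_trial if a is not None]
    summary = {"runs": len(ate_per_trial), "failures": len(ate_per_trial) - len(ok)}
    if ok:
        summary.update(
            median=statistics.median(ok),
            mean=statistics.mean(ok),
            stdev=statistics.stdev(ok) if len(ok) > 1 else 0.0,  # spread across runs
            worst=max(ok),
        )
    return summary
```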

Utilizing Ground Truth Comparison

Whenever possible, compare SLAM-generated maps with ground truth data—accurate reference maps that provide a baseline for comparison.

- Advantage: Ground truth comparison allows for precise accuracy measurements, making it easier to identify specific areas for improvement.

- Example: Benchmarking against ground truth maps and trajectories enables accurate measurement of trajectory and mapping performance, which is essential for applications requiring high localization precision.

By benchmarking SLAM algorithms with standardized metrics, robust testing protocols, and reliable evaluation datasets, you can gain a clear picture of their strengths and weaknesses. Understanding these factors empowers developers to make informed choices and optimize SLAM algorithms for specific real-world applications.

FAQs

How does SLAM handle dynamic environments?

SLAM algorithms handle dynamic environments using techniques like outlier rejection to ignore moving objects or classifying static and dynamic components to maintain tracking accuracy. Specialized algorithms are often designed to address the challenges of navigating through spaces with frequent changes.

What is the difference between ATE and RPE?

Absolute Trajectory Error (ATE) measures the global accuracy of the entire trajectory by comparing it to a ground truth trajectory, while Relative Pose Error (RPE) focuses on the short-term movement between successive poses, evaluating local accuracy and consistency.

Why is real-world testing important for SLAM?

Testing SLAM in real-world environments—such as dynamic, low-light, or obstacle-filled spaces—reveals limitations and practical challenges that may not appear in controlled settings. Real-world testing ensures SLAM algorithms can perform well in their intended operational conditions.

What does sensor fusion mean in SLAM?

Sensor fusion in SLAM involves combining data from multiple sensors, such as cameras, LiDAR, and IMU sensors, to improve mapping and localization accuracy. This approach enhances performance in challenging environments, like low-light areas, by compensating for the limitations of individual sensors.

How do SLAM algorithms minimize mapping errors over time?

To reduce accumulated error, or drift, SLAM algorithms use loop closure techniques, which detect previously visited areas and add constraints that correct the map. Global optimization techniques then refine the trajectory using those constraints, improving both mapping accuracy and consistency over extended distances.

What is the role of loop closure in SLAM?

Loop closure is a critical feature in SLAM that helps reduce trajectory drift by recognizing when the system revisits previously mapped areas. By re-aligning to these known points, loop closure corrects cumulative errors and improves the accuracy and consistency of the map, especially over long distances or repeated paths.

How does SLAM perform in low-texture or low-light environments?

In low-texture or low-light environments, visual-based SLAM algorithms can struggle to identify reliable features for localization and mapping. To address this, some SLAM systems use sensor fusion with LiDAR or depth cameras, which are less affected by lighting conditions, allowing the SLAM algorithm to maintain accuracy and stability.

What types of sensors are commonly used in SLAM?

SLAM systems often utilize a combination of cameras (for visual SLAM), LiDAR (for distance measurements), and Inertial Measurement Units (IMUs) for motion data. The choice of sensors depends on the environment and application; for instance, LiDAR is common in autonomous vehicles, while RGB-D cameras are popular in indoor environments.

Why is benchmarking across different platforms important for SLAM?

Cross-platform benchmarking evaluates SLAM algorithms on various hardware setups, like different processors and sensor types. This ensures the SLAM system is hardware-agnostic and can maintain its performance across multiple devices, from high-end servers to low-power embedded systems, making it versatile for various applications.

How do SLAM algorithms address obstacles and occlusions?

SLAM algorithms manage obstacles and occlusions through predictive tracking and outlier rejection techniques, which allow them to maintain accuracy even when objects temporarily block sensors. These capabilities are particularly useful in dynamic environments, like warehouses, where moving objects often create temporary occlusions.

What are Absolute Trajectory Error (ATE) and Relative Pose Error (RPE)?

ATE measures the overall trajectory accuracy of the SLAM system by comparing it to a ground truth path. RPE assesses the algorithm’s short-term accuracy by looking at successive poses, allowing for a more detailed view of how SLAM performs in smaller movements or over shorter distances.

How does SLAM adapt to high-speed movements?

SLAM systems designed for high-speed applications, like drones or autonomous vehicles, rely on fast processing and high-frequency sensor updates to maintain localization accuracy. Techniques like predictive modeling and sensor fusion help adapt SLAM to rapid changes in movement, minimizing errors and ensuring reliable tracking.

Can SLAM algorithms work outdoors as well as indoors?

Yes, but performance can vary depending on the algorithm and sensors used. For example, visual SLAM is sensitive to lighting changes, so it might struggle outdoors in extreme lighting conditions, while LiDAR-based SLAM can perform well both indoors and outdoors. Different SLAM algorithms may be optimized for specific environments, so cross-environment testing is essential for robust performance.

What are best practices for SLAM benchmarking?

To benchmark SLAM accurately, use standardized datasets, maintain consistent evaluation settings, and perform multiple trials in varied conditions. Including real-world testing with ground truth comparisons helps provide a comprehensive assessment, revealing the SLAM system’s adaptability to different scenarios and environmental challenges.

How does map consistency impact SLAM performance?

Map consistency is key to SLAM accuracy and reliability over time, especially when the system revisits certain areas. Consistent mapping reduces errors and ensures the robot or autonomous vehicle has an up-to-date and accurate representation of the environment, improving decision-making and navigation in dynamic spaces.

Resources

Key Datasets for SLAM Benchmarking

- KITTI Vision Benchmark Suite

Description: KITTI provides high-quality data captured from an autonomous vehicle platform, specifically designed for benchmarking outdoor SLAM and vision-based applications. Includes stereo images, LiDAR scans, and GPS information.

Link: KITTI Dataset

- TUM RGB-D Dataset

Description: A popular dataset for indoor SLAM, TUM offers RGB-D sequences (color and depth) captured from a handheld Kinect camera. It includes high-quality ground truth trajectory data, making it ideal for testing accuracy in confined spaces.

Link: TUM RGB-D Dataset

- EUROC MAV Dataset

Description: Designed for micro aerial vehicle (MAV) applications, EUROC provides sequences from MAV flights in industrial and indoor spaces, featuring complex scenes with varied lighting and obstacles.

Link: EUROC MAV Dataset

Academic Papers and Publications

- “ORB-SLAM: A Versatile and Accurate Monocular SLAM System” by Raul Mur-Artal et al.

Overview: This foundational paper presents ORB-SLAM, a well-known SLAM algorithm for monocular cameras. It covers the technical approach, from feature extraction to loop closure, and discusses its application in real-world scenarios.

Link: PDF on ResearchGate

- “A Benchmark for the Evaluation of RGB-D SLAM Systems” by J. Sturm et al.

Overview: This paper introduces the TUM RGB-D dataset and discusses benchmark metrics for evaluating SLAM accuracy. It’s valuable for understanding data requirements and common challenges in indoor SLAM.

Link: Link on IEEE Xplore

- “LDSO: Direct Sparse Odometry with Loop Closure” by Xiang Gao et al.

Overview: This paper presents LDSO, which extends Direct Sparse Odometry (DSO) with loop closure detection and pose-graph optimization. It’s useful for researchers interested in odometry-based SLAM and improved loop closure methods.

Link: Link on arXiv

Tools and Software for SLAM

- ROS (Robot Operating System)

Description: ROS is a powerful, flexible framework for developing robot software. It includes a wide range of SLAM packages and is widely used in robotics research and applications.

Link: ROS Wiki

- OpenVSLAM

Description: An open-source visual SLAM framework that supports monocular, stereo, and RGB-D cameras. It’s designed to be flexible and works with several SLAM datasets.

Link: OpenVSLAM GitHub

- SLAMBench

Description: SLAMBench is a benchmarking framework developed by the University of Edinburgh. It allows researchers to test and evaluate SLAM algorithms on different hardware architectures.

Link: SLAMBench GitHub

Online SLAM Communities and Forums

- SLAM Research on Reddit

Description: A community where SLAM researchers and enthusiasts discuss the latest developments, datasets, tools, and troubleshooting in SLAM.

Link: r/SLAM on Reddit

- ROS Discourse

Description: The official discussion forum for the ROS community. It’s an excellent place to connect with other SLAM developers, share insights, and seek advice on ROS-based SLAM projects.

Link: ROS Discourse Forum

- GitHub’s Robotics Repositories

Description: GitHub hosts numerous repositories for SLAM algorithms, research papers, and SLAM-related tools. Searching for SLAM projects on GitHub can provide practical insights and access to cutting-edge developments.

Link: GitHub Search for SLAM