Understanding the Problem You’re Solving

Define the business or research goal

Before diving into code, get crystal clear on the objective. Are you predicting customer churn? Detecting spam? Classifying images?

Write it out plainly—what outcome are you hoping machine learning will drive?

Identify your target variable

This is your predicted output—also called the “label” or “dependent variable.” Think: is it a yes/no? A number? A category?

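Knowing your target tells you which kind of algorithm fits: regression for a number, classification for a category. If you have no labeled target at all, you're looking at an unsupervised method such as clustering.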

Know your constraints

Time, computing power, budget, data availability—they all matter. Don’t shoot for a neural network if you only have 500 data points and a laptop from 2016.

Collecting and Preprocessing the Data

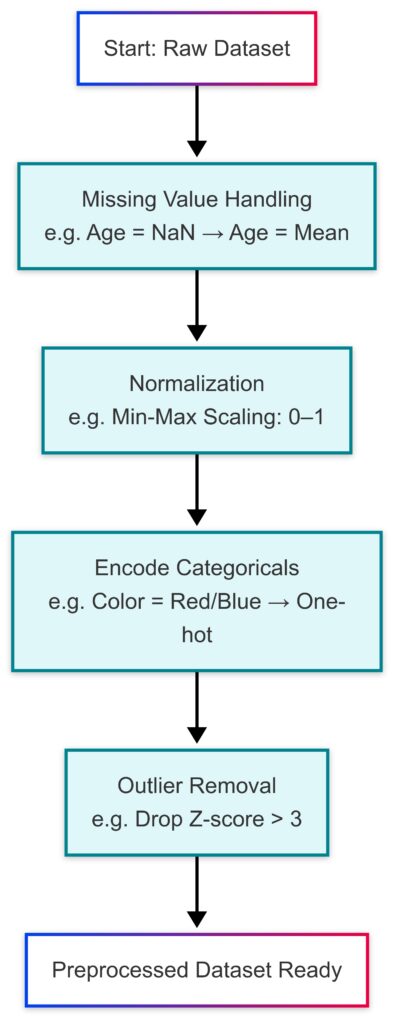

Standard data preprocessing pipeline from raw input to model-ready format, with real-world preprocessing techniques.

Get your hands on the right dataset

You can collect data internally, scrape it from the web, or use public datasets like those from Kaggle or UCI Machine Learning Repository. Quality trumps quantity.

If the data doesn’t align with your problem, it’s back to square one.

Clean it like you mean it

Raw data is messy. Expect missing values, inconsistent formats, and outliers. Use techniques like:

- Imputation

- Outlier removal

- Type conversion

This step is tedious—but it makes or breaks your model.
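Here's a minimal sketch of the three techniques above using pandas. The table and its column names (`age`, `income`) are made up for illustration, and the 1.5 × IQR rule is just one common way to flag outliers.

```python
import pandas as pd

# Hypothetical raw data: ages stored as strings, one missing value, one extreme income.
raw = pd.DataFrame({
    "age": ["34", "29", None, "41", "38"],
    "income": [52000.0, 48000.0, 51000.0, 5000000.0, 50000.0],
})

clean = raw.copy()

# Type conversion: turn string ages into numbers (missing entries become NaN).
clean["age"] = pd.to_numeric(clean["age"], errors="coerce")

# Imputation: fill the missing age with the median of the observed ages.
clean["age"] = clean["age"].fillna(clean["age"].median())

# Outlier removal: keep rows whose income lies within 1.5 * IQR of the quartiles.
q1, q3 = clean["income"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = clean["income"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
clean = clean[in_range].reset_index(drop=True)

print(clean)
```

Whether to drop, cap, or keep an outlier depends on the problem. In fraud detection, for example, the extreme rows may be exactly what you're looking for.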

Feature engineering is your secret weapon

Create meaningful variables from your raw data. This could be:

- Turning dates into weekday/weekend

- Splitting full names into first and last

- Extracting text sentiment

These tweaks often yield huge gains.
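As a rough illustration of the first two ideas, here's a pandas sketch. The `users` table and its columns are hypothetical, and sentiment extraction is left out because it needs a separate NLP model.

```python
import pandas as pd

# Hypothetical user table.
users = pd.DataFrame({
    "full_name": ["Ada Lovelace", "Alan Turing"],
    "signup_date": pd.to_datetime(["2024-03-02", "2024-03-04"]),
})

# Date feature: dayofweek is 0 for Monday through 6 for Sunday, so 5 and 6 mean weekend.
users["is_weekend"] = users["signup_date"].dt.dayofweek >= 5

# Name features: split on the first space only, so multi-word surnames stay together.
users[["first_name", "last_name"]] = users["full_name"].str.split(" ", n=1, expand=True)

print(users[["first_name", "last_name", "is_weekend"]])
```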

Choosing the Right Algorithm

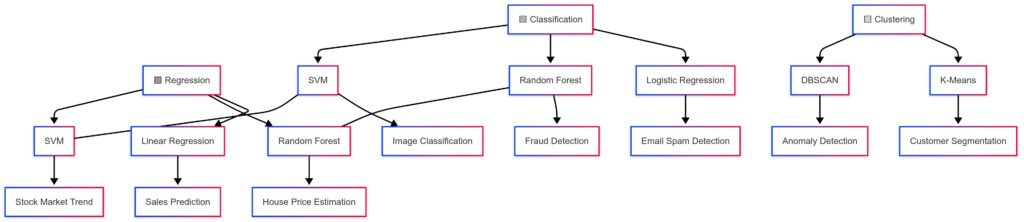

🔗 Structure Overview:

| Task Type | Algorithms | Example Use Cases |
|---|---|---|
| Classification | Logistic Regression, SVM, Random Forest | Email spam, Fraud detection, Image classification |
| Regression | Linear Regression, SVM, Random Forest | Sales prediction, Price estimation, Stock trends |
| Clustering | K-Means, DBSCAN | Customer segmentation, Anomaly detection |

📌 Random Forest and SVM appear under both classification and regression because each has a variant for either task.

Match the algorithm to the task

If you’re predicting categories, go with classification models like Decision Trees, Random Forests, or SVMs. For numerical outcomes? Try Linear Regression or XGBoost.

Want to find hidden patterns? Clustering tools like K-Means or DBSCAN work wonders.

Factor in interpretability vs. performance

A Logistic Regression model might underperform compared to a Neural Network—but it’s far easier to explain.

If stakeholders need transparency, lean towards simpler models.

Experiment, don’t assume

No single model is king. Always try 2–3 and compare them using metrics. Let performance—not bias—decide the winner.

Splitting the Data for Training and Testing

Why train/test splits matter

Training on all your data seems tempting—but then how will you know if your model generalizes? Always split your dataset.

Typical split? 80% training, 20% testing.
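With Scikit-learn, an 80/20 split might look like the sketch below. The built-in iris dataset stands in for your own data, and the `random_state` value is an arbitrary choice that just makes the split repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)  # 150 samples, used here as stand-in data

# Hold out 20% of the rows for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

print(len(X_train), len(X_test))
```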

Cross-validation for better reliability

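K-Fold Cross-Validation evaluates your model across multiple chunks of your dataset. It's slower, but the performance estimate is usually more reliable than one from a single split.

Go for it when you can afford the extra training time, especially if your dataset is too small for a single test set to be trustworthy.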
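A minimal 5-fold sketch with Scikit-learn, assuming the iris dataset and a logistic regression model as stand-ins for your own:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Shuffle before splitting because the iris rows are ordered by class.
cv = KFold(n_splits=5, shuffle=True, random_state=0)

# One accuracy score per fold; each fold serves as the held-out set exactly once.
scores = cross_val_score(model, X, y, cv=cv)

print(f"mean={scores.mean():.3f} std={scores.std():.3f}")
```

The spread across folds is as useful as the mean: a large standard deviation suggests the score depends heavily on which rows land in the test fold.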

Use stratification if necessary

Especially in imbalanced datasets (like fraud detection), ensure your train and test sets reflect the same class distribution.

This avoids skewed results.
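As a sketch, passing `stratify=y` to `train_test_split` keeps the class ratio the same on both sides of the split. The labels below are made up (5% fraud), and the feature column is only a placeholder.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 950 legitimate (0) and 50 fraudulent (1).
y = np.array([0] * 950 + [1] * 50)
X = np.arange(len(y)).reshape(-1, 1)  # placeholder feature

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Both sets keep the original 5% fraud rate.
print(y_train.mean(), y_test.mean())
```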

Training the Model

Fit the algorithm to your data

Time to feed your data to the model! With libraries like Scikit-learn, it’s usually just a .fit() call.

But don’t zone out—watch for training time, memory usage, and any warnings.

Track training performance

Keep an eye on:

- Accuracy or error rates

- Loss functions (for deep learning)

- Training duration

Log everything—you’ll thank yourself later.

Overfitting vs. underfitting

Your model might nail the training set but fail in real-world scenarios. That’s overfitting.

If it fails at both? Underfitting. Try different algorithms, tune hyperparameters, or engineer better features.
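One quick way to see both failure modes is to compare training and test scores as model complexity grows. This sketch assumes a decision tree on Scikit-learn's built-in breast cancer dataset. The depths tried are arbitrary.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

results = {}
# max_depth=None lets the tree grow until it fits the training data.
for depth in (1, 3, None):
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    train_acc = tree.score(X_train, y_train)
    test_acc = tree.score(X_test, y_test)
    results[depth] = (train_acc, test_acc)
    print(f"max_depth={depth}: train={train_acc:.3f} test={test_acc:.3f}")
```

A low score on both sets points toward underfitting. A near-perfect training score with a noticeably lower test score points toward overfitting.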

Evaluating Model Performance

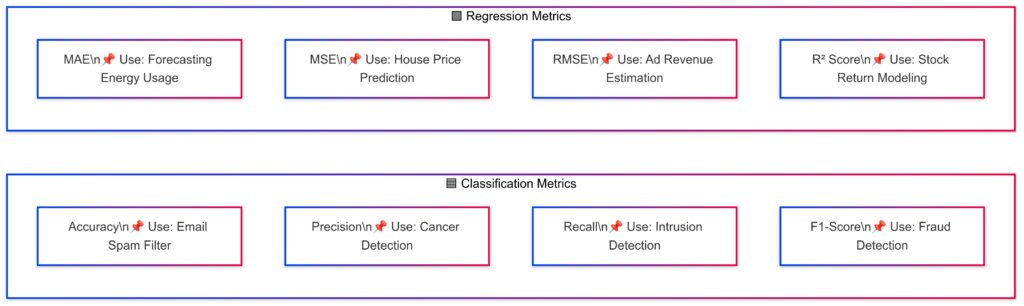

Choose the right metric

For classification, use accuracy, precision, recall, and F1-score. For regression, go for RMSE or MAE.

Don’t just pick one—interpret a few to get the full picture.
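To make these concrete, here's a small sketch with made-up labels and predictions, small enough to check by hand.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score,
    f1_score,
    mean_absolute_error,
    mean_squared_error,
    precision_score,
    recall_score,
)

# Made-up classification labels: 1 = spam, 0 = not spam.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

accuracy = accuracy_score(y_true, y_pred)    # 8 of 10 predictions are correct
precision = precision_score(y_true, y_pred)  # 3 of 4 predicted positives are right
recall = recall_score(y_true, y_pred)        # 3 of 4 actual positives are found
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall

# Made-up regression targets and predictions.
r_true = np.array([3.0, 5.0, 2.0, 7.0])
r_pred = np.array([2.0, 5.0, 4.0, 7.0])

mae = mean_absolute_error(r_true, r_pred)           # average absolute error
rmse = np.sqrt(mean_squared_error(r_true, r_pred))  # penalizes large errors more

print(accuracy, precision, recall, f1, mae, rmse)
```

Here RMSE comes out higher than MAE because squaring gives the single error of 2 more weight than the error of 1.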

Use a confusion matrix

This grid shows how many predictions were correct vs. incorrect by class. It’s a goldmine for understanding where your model messes up.
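A short sketch with Scikit-learn's `confusion_matrix`, again on made-up labels:

```python
from sklearn.metrics import confusion_matrix

# Made-up labels: 1 = spam, 0 = not spam.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

# Rows are actual classes, columns are predicted classes, ordered [0, 1].
matrix = confusion_matrix(y_true, y_pred)

# For a binary problem, ravel() yields the four cells in this order.
tn, fp, fn, tp = matrix.ravel()

print(matrix)
print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
```

The off-diagonal cells tell you which kind of mistake dominates: false positives (legitimate mail flagged as spam) or false negatives (spam that slipped through).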

Visualize performance

Charts like ROC curves, Precision-Recall curves, or residual plots can spotlight issues that numbers might hide.

Use them to explain and refine your model.

Key Takeaways

- Clear problem definitions prevent wasted effort.

- Preprocessing is often more impactful than the algorithm.

- No model is perfect—experiment, tune, and validate.

- The right metrics give the clearest picture of success.

Ready to deploy that model in the wild?

Up next, we’ll dive into hyperparameter tuning, real-world deployment strategies, and how to keep your model sharp over time.

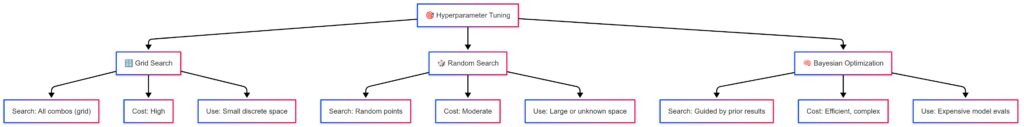

Hyperparameter Tuning for Optimal Performance

What are hyperparameters?

Unlike parameters learned during training, hyperparameters are set beforehand—things like learning rate, tree depth, or number of neighbors in KNN.

Think of them as the dials on your model’s dashboard.

Manual tuning vs. automated search

You can manually tweak settings and eyeball the results, but that’s time-consuming.

Instead, use:

- Grid Search: tries all combinations you define.

- Random Search: picks random combos (faster).

- Bayesian Optimization: smart and adaptive.

Scikit-learn’s GridSearchCV is a popular go-to.

Watch out for overfitting here too

Tuning for better scores on the validation set can accidentally make your model overly specific.

Always test the final tuned model on a separate test set to stay honest.
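Putting the two ideas together, a sketch might tune with `GridSearchCV` on the training portion only and touch the test set once at the end. The KNN model, the grid values, and the iris data are all illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Every combination is tried: 4 neighbor counts x 2 weighting schemes = 8 candidates.
param_grid = {"n_neighbors": [1, 3, 5, 7], "weights": ["uniform", "distance"]}

# Cross-validation happens inside the training data, so the test set stays unseen.
search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=5)
search.fit(X_train, y_train)

test_score = search.score(X_test, y_test)  # scored with the refit best model

print(search.best_params_, round(search.best_score_, 3), round(test_score, 3))
```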

Deploying the Model to Production

From notebook to real-world app

Models aren’t just academic toys. To bring them to life, you need to wrap them in software—often using Flask, FastAPI, or Django.

These let you expose your model as an API.

Keep performance and scale in mind

You may need to:

- Optimize for speed

- Use caching

- Package with Docker containers and scale with Kubernetes

If latency or load matters, your deployment method really counts.

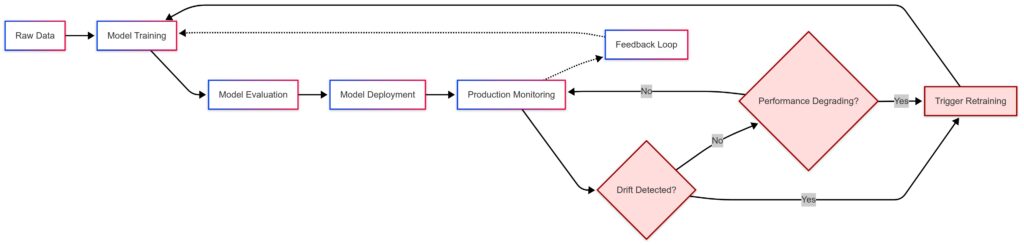

Monitor your model in production

It’s not “train and forget.” Set up logging and performance monitoring from day one.

Keep tabs on model drift, unexpected inputs, or API errors to stay ahead of issues.

Handling Model Drift and Retraining

What is model drift?

Over time, real-world data shifts. Your once-awesome model starts making weird predictions.

This is drift, and it’s super common in live systems.

Monitor input and prediction distribution

Compare live inputs and predictions to what your model saw during training. Tools like Evidently AI can alert you to drift.

Don’t wait until accuracy tanks.
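If you want a feel for what such a check does, here's a hand-rolled sketch using SciPy's two-sample Kolmogorov-Smirnov test on a single numeric feature. This is not how Evidently AI works internally. The synthetic data and the 0.01 threshold are assumptions.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(0)

# Hypothetical values of one numeric feature.
training = rng.normal(loc=0.0, scale=1.0, size=1000)      # seen at training time
live_stable = rng.normal(loc=0.0, scale=1.0, size=1000)   # same distribution
live_shifted = rng.normal(loc=1.0, scale=1.0, size=1000)  # mean has moved

ALPHA = 0.01  # assumed significance threshold for raising a drift alert

report = {}
for name, live in [("stable", live_stable), ("shifted", live_shifted)]:
    result = ks_2samp(training, live)
    report[name] = result
    drifted = result.pvalue < ALPHA
    print(f"{name}: statistic={result.statistic:.3f} drift={drifted}")
```

The same comparison can be run on the model's predictions. With very large samples, even tiny shifts become statistically significant, so it helps to look at the size of the statistic and not only the p-value.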

Retraining strategies

You can:

- Retrain on a schedule (weekly, monthly)

- Use triggered retraining (when performance drops)

- Blend new data with old to keep a historical context

Use pipelines to make retraining smooth and repeatable.

Scaling Your ML Pipeline

From prototype to pipeline

Your Jupyter notebook works fine for small-scale dev. But in production, you need:

- Version control

- Reproducibility

- Automation

Tools like MLflow, DVC, or Kedro are worth learning.

Automate with ML pipelines

Use frameworks like Kubeflow, Airflow, or ZenML to automate:

- Data ingestion

- Preprocessing

- Model training

- Deployment

This saves time and slashes errors.

Think modular and reusable

Break tasks into standalone components. You'll move faster and debug more easily.

Reusable pipelines are your shortcut to scalable, maintainable ML systems.

Leveraging Cloud Platforms for ML

Why the cloud makes sense

Need GPUs on demand? Want automated scaling? Cloud platforms like AWS SageMaker, Google Vertex AI, and Azure ML make this accessible.

No hardware hassle—just raw power.

Built-in tools for the full lifecycle

These platforms often include:

- Data storage and preprocessing tools

- Model training and tuning

- One-click deployment

- Monitoring dashboards

All under one roof.

Know the cost trade-offs

While cloud tools are powerful, they can get expensive. Set usage limits and monitor billing dashboards to stay within budget.

Ensuring Model Explainability and Ethics

Why explainability matters

In finance, healthcare, or law, you must explain why your model made a prediction.

Tools like SHAP or LIME offer local interpretations.

Avoid bias in your model

Machine learning models can reflect real-world discrimination if trained carelessly.

Always check for bias in:

- Input features (e.g., gender, race)

- Output disparities across groups

Use fairness auditing tools to stay accountable.
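A very simple first check for output disparities is to compare positive-prediction rates across groups. The sketch below uses a made-up audit table, and the gap it computes (often called the demographic parity difference) is only one of several fairness measures.

```python
import pandas as pd

# Hypothetical model outputs, with a sensitive attribute kept aside for auditing.
audit = pd.DataFrame({
    "group": ["A", "A", "A", "A", "B", "B", "B", "B"],
    "approved": [1, 1, 1, 0, 1, 0, 0, 0],
})

# Share of positive predictions within each group.
rates = audit.groupby("group")["approved"].mean()

# Gap between the most and least favored group.
parity_gap = rates.max() - rates.min()

print(rates.to_dict(), parity_gap)
```

A large gap doesn't prove the model is unfair on its own, but it's a signal that deserves a closer look.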

Transparency builds trust

The more transparent your model, the more buy-in you’ll get from users, stakeholders, and regulators.

Build explainability into your process—not as an afterthought.

Did You Know?

SHAP values don’t just show what features mattered—they show how much each one influenced a prediction. It’s like seeing inside your model’s brain.
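For a plain linear model you can see this idea without any extra library. If features are treated as independent, the SHAP value of a feature is its coefficient times how far the feature sits from its average. The sketch below computes that by hand on Scikit-learn's diabetes dataset. It doesn't use the SHAP package, and the shortcut doesn't carry over to non-linear models.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression

X, y = load_diabetes(return_X_y=True)
model = LinearRegression().fit(X, y)

x = X[0]                   # the single prediction we want to explain
baseline = X.mean(axis=0)  # average value of each feature in the data

# Per-feature contribution: coefficient * (value - average value).
contributions = model.coef_ * (x - baseline)

prediction = model.predict(x.reshape(1, -1))[0]
average_prediction = model.predict(X).mean()

# The contributions add up to the gap between this prediction and the average one.
gap = prediction - average_prediction
print(np.isclose(contributions.sum(), gap))
```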

What’s next after implementation?

Next up, we’ll explore model governance, staying updated with the latest tools, and the future of AutoML and GenAI in ML workflows.

Implementing Model Governance and Version Control

Keep track of every experiment

Without good tracking, you’re flying blind. Use tools like MLflow, Weights & Biases, or Neptune.ai to log:

- Datasets

- Parameters

- Metrics

- Model versions

This makes debugging and collaboration much easier.

Version your models like code

Every model should have:

- A unique ID

- Metadata (who, when, what data)

- Storage location (e.g., cloud bucket, registry)

This ensures reproducibility and auditability.
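As a sketch of what such a record could contain, the snippet below derives an ID from the serialized model and bundles the metadata into a dictionary. The field names, the owner, and the storage path are hypothetical. A model registry would normally handle this for you.

```python
import hashlib
import json
import pickle
from datetime import datetime, timezone

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Serialize the model and derive a short ID from a hash of its bytes.
artifact = pickle.dumps(model)
model_id = hashlib.sha256(artifact).hexdigest()[:12]

record = {
    "model_id": model_id,
    "trained_by": "example-user",              # hypothetical owner
    "trained_at": datetime.now(timezone.utc).isoformat(),
    "dataset": "iris (scikit-learn built-in)",
    "storage_uri": f"models/{model_id}.pkl",   # hypothetical location
}

print(json.dumps(record, indent=2))
```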

Document decisions and assumptions

Model governance isn’t just technical—it’s also about transparency. Keep a living document of:

- Why certain models were chosen

- How tuning decisions were made

- Ethical considerations

This protects you and your stakeholders.

Staying Up to Date with ML Best Practices

The field moves fast—stay sharp

Subscribe to trusted sources like:

- ArXiv Sanity Preserver

- KDnuggets

- Towards Data Science

- ML newsletters and YouTube channels

New papers and tools drop weekly—so stay in the loop.

Learn from open-source projects

Explore GitHub repos for production-ready code. Check out:

- Hugging Face transformers

- Fast.ai notebooks

- Scikit-learn examples

Reading code is a shortcut to mastery.

Attend meetups and conferences

Whether virtual or in-person, events like NeurIPS, PyData, or ODSC expose you to new trends and networking opportunities.

When to Use AutoML Tools

What is AutoML?

AutoML automates the process of:

- Selecting algorithms

- Tuning hyperparameters

- Building pipelines

Platforms like Google AutoML, H2O.ai, or Auto-sklearn do the heavy lifting.

Ideal for fast prototyping

Need a quick benchmark model? AutoML is perfect. It’s also great for:

- Non-experts

- Business analysts

- Small teams without data science bandwidth

But it won’t replace deep expertise (yet).

Know its limits

AutoML can’t:

- Replace domain knowledge

- Handle complex feature engineering well

- Guarantee optimal results

Use it as a launchpad—not a crutch.

Integrating Generative AI with ML Pipelines

Use GenAI to supercharge data tasks

Generative AI models like GPT, Claude, or Gemini can:

- Generate synthetic training data

- Write feature engineering code

- Auto-generate documentation

This dramatically cuts dev time.

GenAI helps with explainability too

Want to explain model behavior in plain English? GenAI tools can translate SHAP outputs into user-friendly insights.

Now your dashboards talk human.

Still needs supervision

GenAI is powerful—but error-prone. Always review outputs before use in production.

Treat GenAI as a co-pilot, not an autopilot.

Planning for Long-Term ML Maintenance

Build for sustainability

Your model isn’t done at deployment. Plan ahead with:

- Monitoring tools

- Retraining scripts

- Clear data refresh cycles

Think like an engineer, not just a data scientist.

Teamwork and ownership matter

Assign a model owner who’s responsible for:

- Updates

- Performance checks

- Retraining decisions

Clear ownership keeps things from falling through the cracks.

Build feedback loops

Feed real-world performance metrics back into your model development process. This helps future models learn from past mistakes.

💡 Expert Moves: Machine Learning Tips the Pros Actually Use

Practical importance of each major aspect of machine learning workflows based on real-world impact and effort.

Start with a Dumb Baseline

Always create a baseline model first.

Even a simple rule-based or dummy classifier gives you a performance floor.

Why it matters: You’ll know if your ML model is actually better—or just adding complexity.
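A quick sketch of the idea with Scikit-learn's `DummyClassifier`, which here always predicts the most common class. The dataset and the logistic regression model are stand-ins.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Baseline: ignore the features and always predict the majority class.
baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)

# Candidate model: scaled features fed into logistic regression.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

baseline_acc = baseline.score(X_test, y_test)
model_acc = model.score(X_test, y_test)

print(f"baseline={baseline_acc:.3f} model={model_acc:.3f}")
```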

Focus More on Data Than Algorithms

Most of your success comes from quality data.

A well-engineered feature can outperform a fancy algorithm.

Example: In customer churn prediction, a “days since last login” feature might be more valuable than switching from Random Forest to XGBoost.

Visualize Everything—Early and Often

Use libraries like Seaborn, Plotly, or Pandas Profiling (since renamed ydata-profiling) to:

- Spot outliers

- Understand distributions

- Detect data leaks

Tip: Visual anomalies often reveal deeper model problems.

Use Stratified Splits for Classification

Especially in imbalanced datasets, random splitting might skew your results.

Stratified sampling ensures class proportions are preserved in both train and test sets.

Cache Intermediate Results

Long preprocessing steps? Save the cleaned data using joblib, pickle, or feather.

This speeds up experiments and avoids reprocessing headaches.
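One possible pattern with joblib is sketched below. `expensive_cleaning` and `load_cleaned` are hypothetical helper names, and the temporary directory is only there so the example cleans up after itself.

```python
import tempfile
from pathlib import Path

import joblib
import pandas as pd


def expensive_cleaning() -> pd.DataFrame:
    """Stand-in for a slow preprocessing step."""
    return pd.DataFrame({"x": [1.0, 2.0, 3.0], "y": [0, 1, 0]})


def load_cleaned(path: Path) -> pd.DataFrame:
    """Return cached data if the file exists, otherwise compute and cache it."""
    if path.exists():
        return joblib.load(path)
    cleaned = expensive_cleaning()
    joblib.dump(cleaned, path)
    return cleaned


with tempfile.TemporaryDirectory() as tmp:
    cache_path = Path(tmp) / "cleaned.joblib"
    first = load_cleaned(cache_path)   # computes the data and writes the cache
    second = load_cleaned(cache_path)  # reads the cached file

print(first.equals(second))
```

Remember to delete or rename the cache whenever the preprocessing code changes, and only load joblib or pickle files from sources you trust.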

Automate Hyperparameter Tuning—But Set Smart Bounds

Use GridSearchCV or Optuna, but:

- Define meaningful ranges (don’t blindly test 0.001 to 10)

- Use domain knowledge to narrow the search

Insider Tip: Random Search is often faster and nearly as effective for small-scale tuning.
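Here's a sketch of a bounded random search using Scikit-learn's `RandomizedSearchCV` in place of GridSearchCV or Optuna. The bounds for `C` (0.01 to 100) and the choice of 10 trials are assumptions, not recommendations.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Sample the regularization strength on a log scale between assumed bounds,
# so small and large values are explored equally often.
param_distributions = {"logisticregression__C": loguniform(1e-2, 1e2)}

search = RandomizedSearchCV(
    pipe, param_distributions, n_iter=10, cv=5, random_state=0
)
search.fit(X, y)

best_c = search.best_params_["logisticregression__C"]
print(best_c, round(search.best_score_, 3))
```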

Use Pipelines to Prevent Data Leakage

Build end-to-end Scikit-learn Pipelines for preprocessing + modeling.

This ensures consistent transformations during cross-validation and deployment.

Leakage is a silent killer—pipelines keep it under control.
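A minimal sketch of the pattern, assuming a scaler plus logistic regression on a built-in dataset:

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("model", LogisticRegression(max_iter=1000)),
])

# In each fold, the scaler is fit on the training part only and then applied
# to the held-out part, so no test-fold statistics leak into training.
scores = cross_val_score(pipeline, X, y, cv=5)

print(f"mean accuracy: {scores.mean():.3f}")
```

The leaky alternative would be calling `StandardScaler().fit_transform(X)` on the full dataset before cross-validating, which lets every fold see statistics from its own test rows.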

Log Everything (Even the Fails)

Use MLflow, Weights & Biases, or even a simple spreadsheet.

Track:

- Parameters

- Metrics

- Notes on bugs or strange outputs

Why? What fails today might succeed tomorrow—with the right tweak.
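If you go the spreadsheet route, a few lines of standard-library Python are enough. In this sketch, `log_run`, the column names, and the logged values are all made up for illustration.

```python
import csv
import tempfile
from datetime import datetime, timezone
from pathlib import Path

FIELDS = ["timestamp", "params", "metric", "value", "notes"]


def log_run(path: Path, params: dict, metric: str, value: float, notes: str = "") -> None:
    """Append one experiment record, writing the header if the file is new."""
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "params": repr(params),
            "metric": metric,
            "value": value,
            "notes": notes,
        })


with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "experiments.csv"
    # Placeholder numbers, not real results.
    log_run(log_path, {"max_depth": 3}, "f1", 0.81)
    log_run(log_path, {"max_depth": 10}, "f1", 0.74, notes="overfit on training data")
    with log_path.open(newline="") as f:
        rows = list(csv.DictReader(f))

print(len(rows), rows[-1]["notes"])
```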

Use Domain-Specific Metrics

Accuracy isn’t always the answer.

- Fraud detection → Precision, Recall

- Ad CTR prediction → Log Loss, AUC

- Regression → RMSE, MAE

Pro Tip: Metrics should reflect real-world impact.

Build for Maintenance—Not Just the Demo

Structure your code and workflows so retraining is easy.

- Modular scripts

- Clear folder structures

- Config files for parameters

Insider Move: Use tools like hydra, cookiecutter, or kedro to standardize projects.

Final Thoughts: Your Machine Learning Journey

Start small, grow smart

Every seasoned ML pro started with toy datasets and off-the-shelf models. Master the basics, then build up.

One project at a time.

Balance experimentation with structure

Yes, play around. Try weird ideas. But also create repeatable workflows and document your process.

That’s the difference between hacking and engineering.

Stay curious—it’s a lifelong skill

Machine learning evolves fast. What you learn today might change tomorrow.

So stay curious, stay open, and keep building.

Future Outlook

Expect ML to get easier, faster, and more human-friendly.

With AutoML, LLMs, and no-code platforms, more people than ever can build smart systems. But domain knowledge, ethics, and creativity will always matter most.

Get ready for:

- Widespread GenAI integration

- ML democratization

- Smarter, self-healing pipelines

Tomorrow’s ML workflows will be as intuitive as dragging blocks.

Call-to-Action

Have you implemented your first ML model yet?

Or maybe you’re stuck at a specific stage? Drop your questions, project ideas, or tool recommendations in the comments!

Let’s build something brilliant—together. 🚀

FAQs

How important is feature engineering?

It’s everything. Great features often beat fancy models.

Feature engineering turns raw data into useful signals.

Example: Converting timestamps into “morning/evening” or user activity into “days since last login” can drastically boost model performance.

Should I deploy my model as a REST API?

Yes—if it needs to serve real-time predictions.

Use frameworks like:

- Flask or FastAPI (lightweight)

- Django (for full web apps)

Example: A loan eligibility model behind a web form is best deployed as an API that returns results instantly.

Is cloud deployment necessary for ML?

Not always, but it helps when:

- You need scale (large models or datasets)

- You want end-to-end automation

- You’re working with distributed teams

Example: AWS SageMaker can handle model training, tuning, and deployment—all in one platform.

Can I use machine learning on unstructured data like text or images?

Yes—there are specialized algorithms for that.

- Text: Use Natural Language Processing (NLP) techniques such as TF-IDF features, Word2Vec embeddings, or transformer-based models (e.g., BERT).

- Images: Use Convolutional Neural Networks (CNNs) or pretrained models like ResNet and VGG.

Example: You could build a sentiment analysis tool from customer reviews using Hugging Face transformers or train a model to recognize product defects from photos.
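For a sense of the simplest end of that spectrum, here's a toy sentiment classifier built from TF-IDF features and logistic regression, not a transformer. The four reviews are made up and far too few for a real model.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny made-up review set; 1 = positive, 0 = negative.
reviews = [
    "great product, works perfectly",
    "love it, excellent quality",
    "terrible, broke after a day",
    "awful quality, very disappointed",
]
labels = [1, 1, 0, 0]

# TF-IDF turns each review into a weighted word-count vector,
# which the classifier then learns from.
classifier = make_pipeline(TfidfVectorizer(), LogisticRegression())
classifier.fit(reviews, labels)

predictions = classifier.predict(["excellent, works great", "terrible and awful"])
print(predictions)
```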

What tools should I use for end-to-end ML projects?

Here’s a solid starter stack:

- Data prep: Pandas, NumPy, Dask

- Modeling: Scikit-learn, XGBoost, PyTorch

- Tracking & versioning: MLflow, DVC

- Deployment: Docker, FastAPI, Streamlit

- Monitoring: Prometheus, Grafana, Sentry

Example: You might use Jupyter for prototyping, MLflow to track experiments, and Docker + FastAPI to push your model live.

How do I pick the “best” model for my problem?

There’s no silver bullet.

Follow these steps:

- Try multiple algorithms

- Compare performance using metrics (accuracy, F1-score, RMSE, etc.)

- Consider speed, interpretability, and resource usage

Example: A random forest may slightly outperform logistic regression, but if you’re in healthcare, transparency might make logistic regression the better choice.

What if my data is imbalanced?

Imbalanced datasets can mislead your model.

Tactics that help:

- Use resampling (oversampling or undersampling)

- Apply class weighting

- Try anomaly detection algorithms if one class is rare

Example: In fraud detection (1 in 1000 transactions), accuracy isn’t helpful. You’ll want precision, recall, or F1-score instead.
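To see why accuracy misleads here, consider a sketch of a "model" that never flags fraud, evaluated on made-up labels with the same 1 in 1000 ratio:

```python
import numpy as np
from sklearn.metrics import accuracy_score, recall_score

# Hypothetical labels: 1 fraudulent transaction in every 1000.
y_true = np.zeros(10_000, dtype=int)
y_true[::1000] = 1  # marks 10 fraud cases

# A "model" that labels every transaction as legitimate.
y_pred = np.zeros_like(y_true)

accuracy = accuracy_score(y_true, y_pred)  # 9,990 of 10,000 are correct
recall = recall_score(y_true, y_pred)      # none of the 10 fraud cases is caught

print(accuracy, recall)
```

The accuracy looks excellent while the model is useless for its actual job, which is why recall, precision, or F1 is the better yardstick for rare classes.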

Do I need deep learning for most projects?

Nope. Classical ML often does the job better.

Deep learning shines when:

- You have massive datasets

- Data is unstructured (images, video, text)

- You need to detect complex, non-linear patterns

Example: Don’t train a neural net to predict house prices—use linear regression. But for face recognition? CNNs all the way.

Should I build my own ML model or use a prebuilt one?

Depends on your goals.

Use prebuilt if:

- You need speed and scale

- The task is common (e.g., image labeling, text classification)

- You lack data or expertise

Build your own if:

- You need control

- Your problem is niche

- You’re optimizing for competitive advantage

Example: An off-the-shelf chatbot may work for customer service, but a custom model trained on your unique support logs could deliver much better responses.

Resources

📚 Learning Platforms & Courses

Beginner to Intermediate:

- Google’s Machine Learning Crash Course

- Coursera: Andrew Ng’s Machine Learning

- DataCamp: Supervised & Unsupervised ML Tracks

Advanced & Specialized:

- fast.ai: Practical Deep Learning

- CS231n (Stanford): Convolutional Neural Networks for Visual Recognition

- DeepLearning.AI Specializations

📘 Documentation & Libraries

Core Libraries:

- Scikit-learn Documentation

- TensorFlow Guides

- PyTorch Tutorials

- XGBoost Docs

ML Pipelines & MLOps:

- MLflow Docs

- DVC (Data Version Control)

- Kedro (Data pipeline framework)

🛠️ Tools & Platforms

Experiment Tracking:

- MLflow

- Weights & Biases

- Neptune.ai

Model Deployment:

- FastAPI

- Streamlit

- Docker Docs

Monitoring & Drift Detection:

- Evidently AI

- Prometheus

- Seldon Core

🔍 Blogs, News & Community

Stay Current:

- KDnuggets

- Towards Data Science (Medium)

- The Batch by Andrew Ng

- ArXiv Sanity Preserver

Community & Forums:

- r/MachineLearning

- Stack Overflow: Machine Learning tag

- Hugging Face Forum

🧠 Datasets for Practice

- Kaggle Datasets

- UCI Machine Learning Repository

- Google Dataset Search

- Awesome Public Datasets (GitHub)