Dimensionality reduction plays a crucial role in simplifying data for analysis, especially when dealing with high-dimensional datasets. While Principal Component Analysis (PCA) has been a staple technique for decades, newer methods are emerging to address its limitations and cater to more complex data types. Let’s dive into these cutting-edge approaches.

Why Dimensionality Reduction Matters More Than Ever

Tackling the Curse of Dimensionality

As datasets grow in size and complexity, high-dimensional data can become unmanageable. Dimensionality reduction helps minimize redundancy while preserving critical information.

- In high dimensions, data become sparse and distances between points lose contrast, so models need far more samples to generalize and are prone to overfitting.

- Lower dimensions improve interpretability and reduce computational burden.
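One concrete face of the curse of dimensionality is distance concentration. As the number of dimensions grows, a point's nearest and farthest neighbors end up at almost the same distance, so distance-based reasoning loses its grip. The sketch below illustrates this under assumed settings: 200 points drawn uniformly from the unit hypercube, with distances computed by SciPy's `pdist`. It is an illustration, not a benchmark.

```python
import numpy as np
from scipy.spatial.distance import pdist

def distance_contrast(n_points=200, dims=(2, 10, 100, 1000), seed=0):
    """For uniform random points in the unit hypercube, return
    (max pairwise distance - min pairwise distance) / min pairwise distance
    for each dimension in `dims`."""
    rng = np.random.default_rng(seed)
    contrast = {}
    for d in dims:
        X = rng.random((n_points, d))
        dist = pdist(X)  # all pairwise Euclidean distances, each pair once
        contrast[d] = (dist.max() - dist.min()) / dist.min()
    return contrast

for d, c in distance_contrast().items():
    print(f"d = {d:4d}: (max - min) / min = {c:.2f}")
```

The printed ratio shrinks as `d` grows, which is one reason nearest-neighbor reasoning becomes less informative in raw high-dimensional spaces.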

Limitations of PCA in Modern Contexts

While PCA is powerful, it assumes linear relationships between variables, which doesn’t always hold true.

- It struggles with nonlinear structures, such as data lying along a curve or a rolled-up surface.

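- PCA is sensitive to feature scaling: variables measured in larger units dominate the components, so features are usually standardized first, which changes each variable's relative weight.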
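To see the scaling issue, the following sketch uses scikit-learn's `PCA` and `StandardScaler` on synthetic data: two correlated features on a unit scale and one unrelated feature recorded in units 100 times larger. The data and the factor of 100 are assumptions chosen to make the effect obvious.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500
z = rng.normal(size=n)  # shared hidden signal
X = np.column_stack([
    z + 0.1 * rng.normal(size=n),  # feature 0: shared signal, unit scale
    z + 0.1 * rng.normal(size=n),  # feature 1: shared signal, unit scale
    100 * rng.normal(size=n),      # feature 2: unrelated, but in much larger units
])

raw_pc1 = PCA(n_components=1).fit(X).components_[0]
std_pc1 = PCA(n_components=1).fit(StandardScaler().fit_transform(X)).components_[0]

print("PC1 loadings, raw:         ", np.round(raw_pc1, 2))
print("PC1 loadings, standardized:", np.round(std_pc1, 2))
```

On the raw data the first component essentially points at the large-scale noise feature; after standardization it picks up the shared signal in features 0 and 1.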

t-SNE: Unveiling Nonlinear Structures

What is t-SNE?

t-Distributed Stochastic Neighbor Embedding (t-SNE) is a nonlinear dimensionality reduction technique ideal for visualizing high-dimensional data.

- It maps data points into a lower-dimensional space while preserving local relationships.

- Best used for exploratory data analysis, such as spotting clusters or outliers visually, rather than as a general-purpose feature transform.

Applications of t-SNE

t-SNE excels in areas like image recognition, genomics, and natural language processing (NLP):

- Visualizing gene expression patterns in biology.

- Revealing hidden clusters in unstructured text or image datasets.

Limitations to Note

Although t-SNE is widely used, it has drawbacks:

- Computationally expensive for large datasets.

- Results can vary based on hyperparameter choices (e.g., perplexity).
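A minimal scikit-learn sketch, assuming the bundled digits dataset (subsampled to 500 images for speed), runs `TSNE` at two perplexity values so the sensitivity described above can be checked by plotting both embeddings side by side. The perplexities, `init="pca"`, and the fixed `random_state` are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_digits
from sklearn.manifold import TSNE

X, y = load_digits(return_X_y=True)
X, y = X[:500], y[:500]  # subsample to keep the demo fast

embeddings = {}
for perplexity in (5, 30):
    # Perplexity roughly sets how many neighbors each point "attends" to
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=0)
    embeddings[perplexity] = tsne.fit_transform(X)
    print(perplexity, embeddings[perplexity].shape, round(tsne.kl_divergence_, 3))
```

Fixing `random_state` makes a run reproducible, but comparing several seeds and perplexities is a safer way to judge whether a visible cluster is real.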

UMAP: A Versatile Successor to t-SNE

What Makes UMAP Stand Out?

Uniform Manifold Approximation and Projection (UMAP) combines speed and flexibility for dimensionality reduction. It focuses on preserving both local and global data structures.

- UMAP builds a weighted nearest-neighbor graph of the data, then optimizes a low-dimensional layout whose graph matches it as closely as possible.

- Typically faster than t-SNE, making it suitable for large datasets.
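The umap-learn library wraps everything in `umap.UMAP`, but the neighborhood-graph idea is easy to sketch. The hypothetical function below is a simplified re-implementation of UMAP's graph-construction step only, using NumPy and scikit-learn. Each point's distances to its `k` nearest neighbors are shifted by its nearest-neighbor distance, scaled by a per-point bandwidth found by binary search so the weights sum to log2(k), and the directed graph is then symmetrized with a fuzzy union. It omits the layout optimization and several details of the real library (approximate neighbor search, `local_connectivity`, sparse storage).

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neighbors import NearestNeighbors

def fuzzy_knn_graph(X, k=15, n_iter=64, tol=1e-5):
    """Simplified sketch of UMAP's fuzzy k-NN graph (not umap-learn's implementation)."""
    n = X.shape[0]
    # kneighbors() with no argument excludes each point from its own neighbor list
    dists, idx = NearestNeighbors(n_neighbors=k).fit(X).kneighbors()
    rho = dists[:, 0]      # distance to each point's nearest neighbor
    target = np.log2(k)    # desired sum of membership strengths per point
    W = np.zeros((n, n))
    for i in range(n):
        d = np.maximum(dists[i] - rho[i], 0.0)
        lo, hi, sigma = 0.0, np.inf, 1.0
        for _ in range(n_iter):  # binary search for this point's bandwidth sigma
            total = np.exp(-d / sigma).sum()
            if abs(total - target) < tol:
                break
            if total > target:   # weights too large: shrink sigma
                hi = sigma
                sigma = (lo + hi) / 2
            else:                # weights too small: grow sigma
                lo = sigma
                sigma = sigma * 2 if np.isinf(hi) else (lo + hi) / 2
        W[i, idx[i]] = np.exp(-d / sigma)
    # Fuzzy set union symmetrizes the directed graph: a + b - a*b
    return W + W.T - W * W.T

X = load_digits().data[:300]
G = fuzzy_knn_graph(X, k=15)
print(G.shape, int((G > 0).sum()))
```

In the full algorithm, this graph is the target that the low-dimensional layout is optimized to match.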

Real-World Uses of UMAP

UMAP is used in bioinformatics, image processing, and social network analysis:

- Identifying cell populations in single-cell RNA sequencing data.

- Exploring latent features in recommendation systems.

UMAP vs. t-SNE

While both are nonlinear, UMAP often retains more of the global arrangement of clusters than t-SNE, and it handles larger datasets with less computational overhead.

Autoencoders: Bridging Neural Networks and Dimensionality Reduction

What Are Autoencoders?

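Autoencoders are neural network models designed to learn compressed representations of data. An encoder maps each input to a low-dimensional code, and a decoder is trained to reconstruct the input from that code; the code then serves as the reduced representation.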

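- Trained without labels, which makes them a natural fit for unsupervised learning tasks.

- Unlike PCA, they can capture nonlinear relationships; a linear autoencoder trained with squared error learns the same subspace as PCA.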
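Autoencoders are usually built with TensorFlow/Keras or PyTorch, but to keep the example self-contained this sketch trains scikit-learn's `MLPRegressor` to reproduce its own input through an 8-unit bottleneck. The layer sizes, `tanh` activation, iteration count, and the hypothetical `encode` helper (which reruns the first two layers using the fitted `coefs_` and `intercepts_`) are all assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import MLPRegressor

X = load_digits().data / 16.0  # scale pixel intensities to [0, 1]

# Encoder 64 -> 32 -> 8 (bottleneck), decoder 8 -> 32 -> 64; the target is the input itself.
ae = MLPRegressor(hidden_layer_sizes=(32, 8, 32), activation="tanh",
                  max_iter=300, random_state=0)
ae.fit(X, X)

def encode(model, X, bottleneck_layer=2):
    """Hypothetical helper: run the first `bottleneck_layer` layers of the fitted network."""
    h = X
    for W, b in zip(model.coefs_[:bottleneck_layer], model.intercepts_[:bottleneck_layer]):
        h = np.tanh(h @ W + b)  # same activation the model used for hidden layers
    return h

codes = encode(ae, X)  # 8-dimensional representation of each image
recon_mse = np.mean((ae.predict(X) - X) ** 2)
baseline_mse = np.mean((X.mean(axis=0) - X) ** 2)  # always predicting the mean image
print(codes.shape, round(recon_mse, 4), round(baseline_mse, 4))
```

Comparing the reconstruction error with the error of always predicting the mean image gives a quick sanity check that the bottleneck has learned something.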

Key Variants of Autoencoders

Autoencoders come in multiple forms, including:

- Denoising autoencoders to extract clean signals from noisy data.

- Variational autoencoders (VAEs) for probabilistic latent space modeling.

When to Use Autoencoders

These models shine in fields like fraud detection, image reconstruction, and generative modeling:

- Generating realistic synthetic data in deep learning pipelines.

- Capturing low-dimensional latent features in massive datasets.

Factor Analysis: Simplifying Statistical Relationships

A Statistical Perspective

Factor Analysis is a classic yet often overlooked method that identifies latent variables influencing observed data.

- It assumes that observed correlations arise from a small number of hidden factors, plus noise specific to each variable.

- Useful in areas where statistical interpretation is key, such as psychology or finance.

Advantages Over PCA

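Unlike PCA, factor analysis is an explicit statistical model: each observed variable is a linear combination of latent factors plus its own noise term. This makes it a better choice for hypothesis-driven studies, although the factors are inferred from correlations and do not by themselves establish causal relationships.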

- Reveals the underlying drivers behind correlations.

- Enhances interpretability in social science research.
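The sketch below fits scikit-learn's `FactorAnalysis` to hypothetical survey data in which two latent traits each drive three observed items, with item-specific noise. The loadings, noise levels, and the varimax rotation are assumptions chosen so the recovered structure is easy to read.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis

rng = np.random.default_rng(0)
n = 1000
# Hypothetical survey: two latent traits, each driving three observed items.
traits = rng.normal(size=(n, 2))
true_loadings = np.array([
    [0.9, 0.8, 0.7, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.9, 0.8, 0.7],
])
noise_sd = np.array([0.3, 0.4, 0.5, 0.3, 0.4, 0.5])  # item-specific noise
X = traits @ true_loadings + rng.normal(size=(n, 6)) * noise_sd

fa = FactorAnalysis(n_components=2, rotation="varimax", random_state=0).fit(X)
print("Estimated loadings:\n", np.round(fa.components_, 2))
print("Estimated noise variances:", np.round(fa.noise_variance_, 2))
```

Each estimated factor should load mainly on one group of three items, and `noise_variance_` estimates the item-specific noise, a term PCA has no separate place for.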

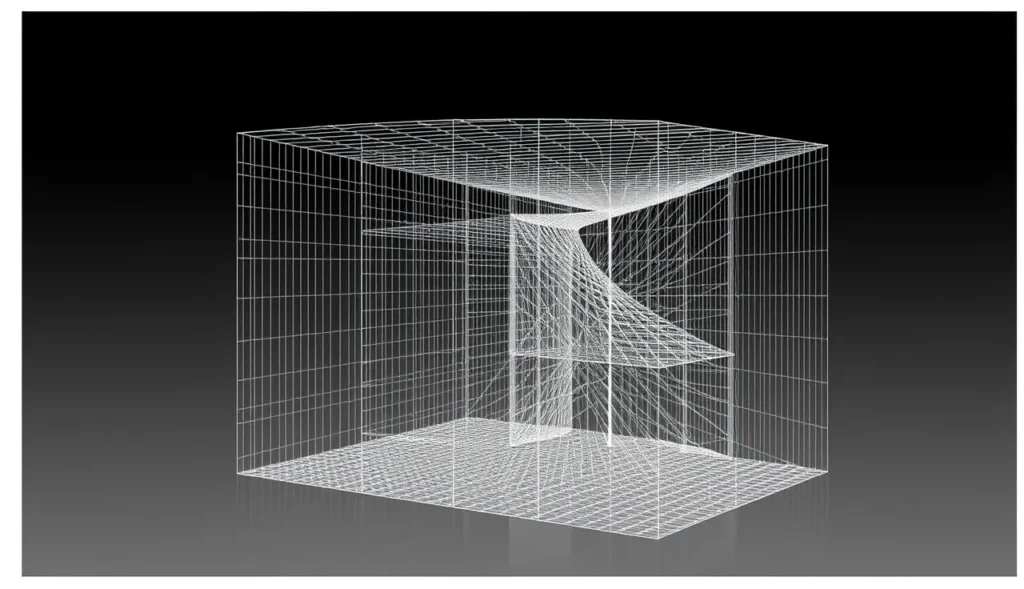

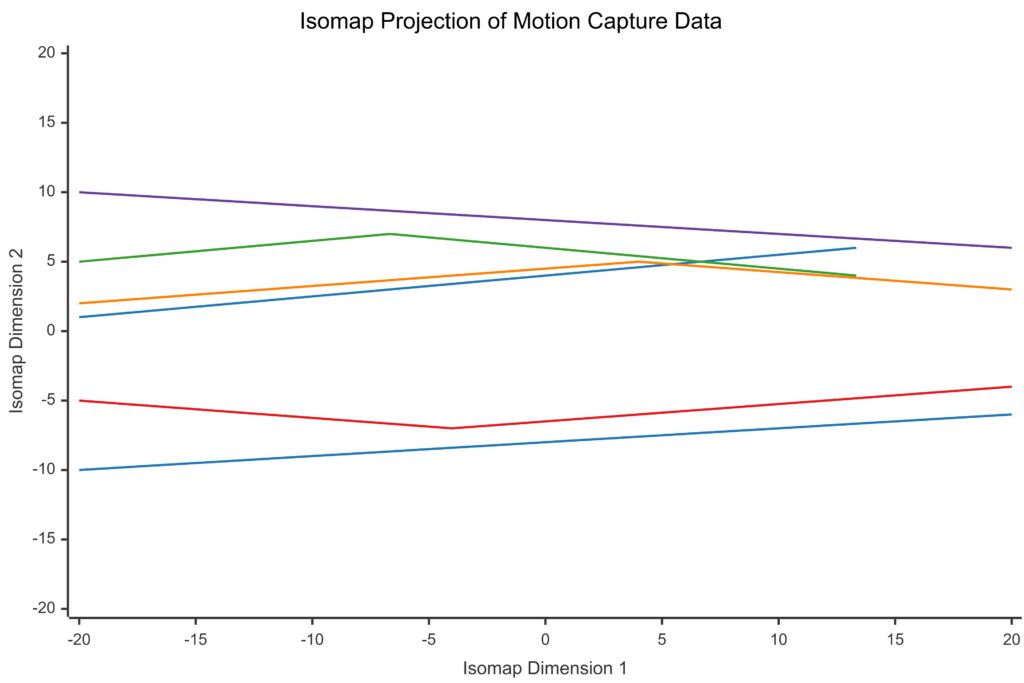

Isomap: Unlocking Geometric Insights

Geometry Meets Dimensionality Reduction

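Isomap extends classical Multidimensional Scaling (MDS) by replacing straight-line distances with geodesic distances, estimated as shortest paths through a nearest-neighbor graph. This lets it preserve the global geometry of data lying on a curved manifold, which makes it particularly effective for manifold learning.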

- Constructs a graph of nearest neighbors to compute low-dimensional embeddings.

- Excellent for discovering intrinsic patterns in nonlinear datasets.
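As an illustration, assuming scikit-learn's `Isomap` and the synthetic swiss-roll dataset (a 2-D sheet rolled up in 3-D, with `t` giving each point's position along the roll), the sketch below compares how well the first Isomap axis and the first PCA axis track `t`. `n_neighbors=10` is an assumed setting.

```python
import numpy as np
from sklearn.datasets import make_swiss_roll
from sklearn.decomposition import PCA
from sklearn.manifold import Isomap

# A 2-D sheet rolled up in 3-D; t is each point's position along the roll.
X, t = make_swiss_roll(n_samples=1000, random_state=0)

iso = Isomap(n_neighbors=10, n_components=2)
Y_iso = iso.fit_transform(X)                 # built from graph (geodesic) distances
Y_pca = PCA(n_components=2).fit_transform(X)  # built from straight-line variance

# Correlation of the first embedding axis with the unrolled coordinate t
for name, Y in [("Isomap", Y_iso), ("PCA", Y_pca)]:
    r = abs(np.corrcoef(Y[:, 0], t)[0, 1])
    print(f"{name}: |corr(axis 1, t)| = {r:.2f}")
```

One would typically expect a much higher correlation for Isomap than for PCA, but the exact values depend on `n_neighbors` and the sampling; the geodesic distances Isomap used are kept in `iso.dist_matrix_`.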

Where Isomap Shines

Isomap finds application in speech analysis, robotics, and 3D visualization:

- Capturing the latent space of human motion for robotics programming.

- Simplifying complex shape data in CAD models.

Drawbacks to Consider

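Isomap is sensitive to noise and to the choice of neighborhood size: a few spurious edges between distant parts of the manifold (so-called short circuits) can distort the geodesic distances. Sparsely sampled data causes similar problems, which may limit its utility in some domains.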

Kernel PCA: Enhancing PCA with Nonlinear Transformations

What is Kernel PCA?

Kernel PCA extends traditional PCA by incorporating kernel functions to handle nonlinear data relationships.

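- Conceptually, it maps input data into a higher-dimensional feature space and performs PCA there; the kernel trick lets it work only with pairwise kernel values, without computing that mapping explicitly.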

- Common kernel functions include Gaussian (RBF), polynomial, and sigmoid kernels.

Key Benefits of Kernel PCA

Kernel PCA is particularly effective for datasets with intricate nonlinear patterns:

- Separating clusters that are not linearly separable, such as concentric rings, in classification problems.

- Reducing dimensions in highly nonlinear systems like gene expression or speech data.
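Two concentric rings are a common way to illustrate this. The sketch below, assuming scikit-learn's `KernelPCA` with an RBF kernel, `gamma=10`, and 10 retained components (illustrative settings that usually need tuning), checks how well a linear classifier separates the rings using the kernel PCA features compared with ordinary PCA features.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import PCA, KernelPCA
from sklearn.linear_model import LogisticRegression

# Two concentric rings: no straight line separates them in the original 2-D space.
X, y = make_circles(n_samples=1000, factor=0.3, noise=0.05, random_state=0)

Z_lin = PCA(n_components=2).fit_transform(X)  # just a rotation of the original data
Z_rbf = KernelPCA(n_components=10, kernel="rbf", gamma=10).fit_transform(X)

# Training accuracy of a linear classifier on each set of features
accuracy = {}
for name, Z in [("PCA", Z_lin), ("Kernel PCA (RBF)", Z_rbf)]:
    accuracy[name] = LogisticRegression().fit(Z, y).score(Z, y)
    print(f"{name}: linear-classifier accuracy = {accuracy[name]:.2f}")
```

Ordinary PCA only rotates the 2-D data, so no linear boundary can do well on it; the RBF features typically let the same linear classifier separate the rings almost perfectly.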

Applications in the Real World

Kernel PCA is widely used in image denoising, pattern recognition, and NLP:

- Recognizing handwriting in optical character recognition (OCR) systems.

- Improving performance in sentiment analysis pipelines.

LLE: Locally Linear Embedding for Manifold Learning

Understanding LLE

Locally Linear Embedding (LLE) is a nonlinear technique that preserves the local structure of data.

- Focuses on reconstructing each data point using its nearest neighbors.

- Particularly suited for data lying on a low-dimensional manifold within a high-dimensional space.
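The reconstruction idea can be made concrete. The hypothetical helper `reconstruction_weights` below computes, for one point, the weights (summing to 1) that best rebuild it from its neighbors, adding a small regularization term as is common when there are more neighbors than input dimensions; scikit-learn's `LocallyLinearEmbedding` then runs the full method. The swiss-roll data, `k = 12`, and `reg=1e-3` are assumptions.

```python
import numpy as np
from sklearn.datasets import make_swiss_roll
from sklearn.manifold import LocallyLinearEmbedding
from sklearn.neighbors import NearestNeighbors

X, t = make_swiss_roll(n_samples=1000, random_state=0)
k = 12

def reconstruction_weights(X, i, neighbors, reg=1e-3):
    """Hypothetical helper: weights (summing to 1) that best rebuild X[i] from X[neighbors]."""
    Z = X[neighbors] - X[i]                           # neighbors relative to the point
    C = Z @ Z.T                                       # local Gram matrix, k x k
    C += reg * np.trace(C) * np.eye(len(neighbors))  # regularize: C is singular when k > dims
    w = np.linalg.solve(C, np.ones(len(neighbors)))
    return w / w.sum()                                # enforce the sum-to-one constraint

# Step 1, shown for a single point: local reconstruction weights
_, idx = NearestNeighbors(n_neighbors=k).fit(X).kneighbors()
w0 = reconstruction_weights(X, 0, idx[0])
print("weights sum to", round(w0.sum(), 6))
print("reconstruction error:", np.linalg.norm(X[0] - w0 @ X[idx[0]]))

# Full pipeline: scikit-learn computes all weights and the 2-D embedding
lle = LocallyLinearEmbedding(n_neighbors=k, n_components=2, eigen_solver="dense")
Y = lle.fit_transform(X)
print(Y.shape, lle.reconstruction_error_)
```

LLE's second step keeps these weights fixed and searches for low-dimensional coordinates from which each point can still be rebuilt with the same weights.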

Real-World Applications of LLE

LLE is an excellent choice for discovering relationships in scientific data:

- Analyzing astrophysical datasets to reveal hidden properties of galaxies.

- Modeling protein folding processes in bioinformatics.

Challenges with LLE

While effective, LLE struggles with datasets containing noise or sparse distributions. It can also be computationally intensive for large-scale problems.

Multidimensional Scaling (MDS): A Classic for Visualization

What is MDS?

Multidimensional Scaling (MDS) seeks to place objects in a low-dimensional space while preserving pairwise distances.

- Focuses on maintaining the relative similarities or dissimilarities between data points.

- Ideal for visualizing proximity-based relationships.
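Classical (Torgerson) MDS has a short closed form: square the distances, double-center them to obtain a Gram matrix, and take its top eigenvectors scaled by the square roots of the eigenvalues. The NumPy sketch below implements that variant; note that scikit-learn's `MDS` class uses a different, iterative stress-minimization algorithm (SMACOF). The five random points standing in for cities are hypothetical.

```python
import numpy as np

def classical_mds(D, n_components=2):
    """Hypothetical helper: classical MDS from a matrix of pairwise Euclidean distances D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n          # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                  # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)         # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    L = np.sqrt(np.maximum(eigvals[order], 0))   # clip tiny negative values from rounding
    return eigvecs[:, order] * L                 # coordinates, one row per object

# Hypothetical example: 5 "cities" with known 2-D positions; MDS sees only the distances.
rng = np.random.default_rng(0)
P = rng.uniform(0, 100, size=(5, 2))
D = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=-1)

Y = classical_mds(D)
D_hat = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
print("largest distance error:", np.abs(D - D_hat).max())
```

Because the points truly live in two dimensions, the recovered layout reproduces every pairwise distance up to rounding error, although it may be rotated or mirrored relative to the original positions.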

Applications of MDS

MDS has been a staple in domains like marketing and social sciences:

- Mapping customer preferences in market segmentation studies.

- Exploring relational data in network analysis.

Limitations to Address

MDS can be less effective with very large datasets due to computational intensity. It also requires careful preprocessing to ensure meaningful results.

t-TNE: A Faster Take on t-SNE

Introducing t-TNE

Triangular t-SNE (t-TNE) is a more computationally efficient version of traditional t-SNE. It adapts the embedding process for large datasets.

- Utilizes approximations to reduce time complexity.

- Retains t-SNE’s ability to uncover local structures in high-dimensional data.

How t-TNE Solves Problems

This method overcomes the time bottleneck of t-SNE, making it feasible for:

- Real-time analysis of massive data streams.

- Scaling visualization tasks in data exploration workflows.

When to Use t-TNE

t-TNE works well in domains that require rapid processing and can tolerate the approximation error its shortcuts introduce, such as anomaly detection in cybersecurity or real-time recommendation systems.

Spectral Embedding: Simplifying Graph-Like Data

What is Spectral Embedding?

Spectral Embedding leverages graph-based approaches to model the relationships between data points.

- It constructs a similarity graph to embed data into lower dimensions.

- Emphasizes local neighborhood structure; global relationships are preserved only to the extent that the similarity graph encodes them.
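Below is a from-scratch sketch of the Laplacian-eigenmaps form of spectral embedding, under assumed choices: a dense RBF similarity graph with `gamma=1.0` and two synthetic blobs. The function name `laplacian_eigenmap` is hypothetical; scikit-learn offers a production version as `sklearn.manifold.SpectralEmbedding`, which typically uses a sparse nearest-neighbor graph instead.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel

def laplacian_eigenmap(X, n_components=2, gamma=1.0):
    """Hypothetical helper: embed points using eigenvectors of a normalized graph Laplacian."""
    W = rbf_kernel(X, gamma=gamma)      # fully connected similarity graph
    np.fill_diagonal(W, 0.0)            # no self-loops
    d = W.sum(axis=1)                   # node degrees
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian: I - D^{-1/2} W D^{-1/2}
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(L)  # ascending; the first eigenvector is trivial
    U = eigvecs[:, 1:n_components + 1]
    return U * d_inv_sqrt[:, None]        # convert to random-walk Laplacian eigenvectors

X, y = make_blobs(n_samples=200, centers=[[-2, 0], [2, 0]], cluster_std=0.5, random_state=0)
emb = laplacian_eigenmap(X, n_components=2, gamma=1.0)
print(emb.shape)
```

The first nontrivial eigenvector (the Fiedler vector) typically changes sign between the two blobs, which is why spectral embedding is closely tied to spectral clustering.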

Key Use Cases

Spectral Embedding thrives in graph-related tasks, including:

- Social network analysis, where relationships matter as much as individual nodes.

- Clustering and segmentation tasks in image processing and bioinformatics.

Potential Drawbacks

While versatile, spectral embedding requires significant computational resources and preprocessing, especially for large-scale graphs.

ICA: Independent Component Analysis for Signal Separation

What is ICA?

Independent Component Analysis (ICA) is a statistical technique that identifies independent components within a dataset. Unlike PCA, which focuses on variance, ICA isolates underlying factors that are statistically independent.

- Particularly effective for separating mixed signals (e.g., audio or EEG data).

- Assumes that the observed data is a linear mixture of independent, non-Gaussian sources (at most one source may be Gaussian).
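The classic blind-source-separation demo can be sketched with scikit-learn's `FastICA`. Two non-Gaussian signals (a sine wave and a square wave standing in for two voices) are mixed by a hypothetical 2 x 2 matrix, and ICA tries to unmix them from the mixtures alone. The signals, noise level, and mixing matrix are assumptions for illustration.

```python
import numpy as np
from sklearn.decomposition import FastICA

rng = np.random.default_rng(0)
t = np.linspace(0, 8, 2000)
# Two non-Gaussian sources, standing in for two "voices"
S = np.column_stack([np.sin(2 * t), np.sign(np.sin(3 * t))])
S += 0.05 * rng.normal(size=S.shape)

A = np.array([[1.0, 0.5], [0.5, 1.0]])  # hypothetical mixing matrix ("two microphones")
X = S @ A.T                              # observed mixtures, one column per microphone

ica = FastICA(n_components=2, random_state=0)
S_est = ica.fit_transform(X)             # estimated sources

# Rows: true sources; columns: estimated sources
corr = np.abs(np.corrcoef(S.T, S_est.T)[:2, 2:])
print(np.round(corr, 2))
```

The comparison uses absolute correlations because the estimated sources come back in arbitrary order, sign, and scale.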

Real-World Applications of ICA

ICA has carved a niche in specialized areas, including:

- Blind source separation, such as isolating individual voices in a crowded room.

- Detecting brain activity patterns in neuroimaging (e.g., fMRI or EEG studies).

Limitations of ICA

The method assumes linear mixing, which may not hold true for all datasets, and it recovers sources only up to their order, sign, and scale. The number of components also needs careful choice to avoid overfitting.

Random Projection: Simplicity Meets Efficiency

A Lightweight Alternative

Random Projection (RP) is a straightforward, computationally efficient technique for reducing dimensionality.

- It works by projecting data onto a lower-dimensional space using random matrices.

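- Despite its simplicity, RP approximately preserves pairwise distances with high probability, as the Johnson-Lindenstrauss lemma guarantees, provided the target dimension is large enough (it grows with the logarithm of the number of points).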
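The guarantee can be checked numerically. This sketch, assuming random Gaussian data and a tolerance `eps=0.3`, asks scikit-learn's `johnson_lindenstrauss_min_dim` for a target dimension, projects with `GaussianRandomProjection`, and measures how much each pairwise distance shrinks or stretches.

```python
import numpy as np
from sklearn.metrics.pairwise import euclidean_distances
from sklearn.random_projection import GaussianRandomProjection, johnson_lindenstrauss_min_dim

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5000))  # 100 points in 5,000 dimensions

eps = 0.3
k = int(johnson_lindenstrauss_min_dim(n_samples=100, eps=eps))  # dimension suggested by the JL bound
X_rp = GaussianRandomProjection(n_components=k, random_state=0).fit_transform(X)

iu = np.triu_indices(100, k=1)  # each pair of points once
ratio = euclidean_distances(X_rp)[iu] / euclidean_distances(X)[iu]
print(k, round(ratio.min(), 3), round(ratio.max(), 3))
```

The bound depends only on the number of points and `eps`, not on the original dimension, which is what makes RP attractive for very wide data.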

Why Use Random Projection?

RP is particularly valuable when speed matters more than precision:

- Preprocessing high-dimensional data in large-scale machine learning pipelines.

- Quickly reducing dimensionality for sparse data like text (e.g., bag-of-words models).

Challenges with Random Projection

While fast, RP produces components with no interpretable meaning and preserves distances only approximately, making it unsuitable for detailed analysis.

Deep Feature Extraction: Leveraging Pretrained Neural Networks

What Does Deep Feature Extraction Mean?

This method uses layers from deep learning models, such as convolutional neural networks (CNNs), to extract high-level features.

- Dimensionality is reduced by focusing on learned, task-specific representations.

- Works well for image, audio, and text data.

Use Cases of Deep Feature Extraction

Deep feature extraction is widely applied in areas demanding advanced pattern recognition:

- Extracting embeddings for facial recognition or voice authentication.

- Generating compact feature sets for document similarity tasks in NLP.

Considerations for Deep Feature Extraction

This approach depends on the availability of pretrained models and requires significant computational resources for training when customization is needed.

Sparse PCA: Adding Sparsity Constraints

Figure: contributions of individual genes (x-axis) to PC1 (bar heights); GeneE, associated with a specific disease, is highlighted in red.

What is Sparse PCA?

Sparse PCA modifies traditional PCA by imposing sparsity constraints on the principal components.

- Ensures that resulting components involve fewer variables, enhancing interpretability.

- Balances dimensionality reduction with feature selection.
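To see the difference in loadings, the sketch below builds hypothetical data in which only variables 0 to 5 carry signal and compares scikit-learn's `PCA` with `SparsePCA`. The penalty `alpha=1.0` is an assumed setting; larger values give sparser components.

```python
import numpy as np
from sklearn.decomposition import PCA, SparsePCA

rng = np.random.default_rng(0)
n = 300
z = rng.normal(size=(n, 2))          # two hidden drivers
X = 0.1 * rng.normal(size=(n, 10))   # ten measured variables, mostly noise
X[:, 0:3] += z[:, [0]]               # variables 0-2 follow driver 1
X[:, 3:6] += z[:, [1]]               # variables 3-5 follow driver 2

pca = PCA(n_components=2).fit(X)
spca = SparsePCA(n_components=2, alpha=1.0, random_state=0).fit(X)

print("PCA nonzero loadings:       ", np.count_nonzero(pca.components_))
print("Sparse PCA nonzero loadings:", np.count_nonzero(spca.components_))
for comp in spca.components_:
    print("variables used:", np.flatnonzero(comp))
```

PCA spreads small nonzero weights over every variable, while sparse PCA typically sets the pure-noise variables to exactly zero.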

Applications of Sparse PCA

Sparse PCA is invaluable for high-dimensional datasets, particularly in fields like bioinformatics or finance:

- Identifying key genes or proteins in genomic studies.

- Analyzing financial trends by focusing on fewer, more relevant features.

Sparse PCA vs. Standard PCA

While standard PCA often produces dense components, sparse PCA ensures that only a subset of variables is involved, reducing noise and redundancy at the cost of explaining somewhat less variance.

LargeVis: Scaling Dimensionality Reduction for Big Data

An Overview of LargeVis

LargeVis is a modern approach designed for large-scale datasets, providing fast and efficient visualizations.

- Combines graph construction with edge sampling to create low-dimensional embeddings.

- Designed to handle millions of data points, a scale that is impractical for exact t-SNE.

Why LargeVis Matters

LargeVis shines in domains requiring large-scale data exploration and visualization:

- Social media analysis to understand vast interaction networks.

- Visualizing extensive datasets in recommendation systems or e-commerce platforms.

Key Benefits Over Similar Methods

Compared to t-SNE, LargeVis was designed to be substantially faster on very large datasets while producing embeddings of comparable quality. How it compares with UMAP depends on the dataset and implementation.

Conclusion: Choosing the Right Dimensionality Reduction Technique

As datasets grow more complex, the need for advanced dimensionality reduction methods has become paramount. While PCA remains a go-to tool for simplicity and effectiveness, techniques such as t-SNE, UMAP, and autoencoders offer solutions tailored to nonlinear, high-dimensional challenges. Additionally, specialized methods like ICA, Kernel PCA, and Sparse PCA address unique requirements in domains such as bioinformatics, neuroscience, and finance.

For scalability, tools like LargeVis and Random Projection bring computational efficiency to the forefront, while techniques like Deep Feature Extraction leverage the power of pretrained neural networks to create rich, compact embeddings.

Ultimately, the right choice depends on the specific dataset and use case. Whether your goal is to uncover hidden clusters, visualize relationships, or streamline feature selection, exploring these advanced methods can unlock new insights and drive better decision-making.

Dive into these techniques, experiment, and harness the power of modern dimensionality reduction to transform your data analysis workflows!

FAQs

Why is dimensionality reduction crucial for machine learning?

Dimensionality reduction minimizes redundancy, improves computational efficiency, and can enhance model interpretability. It also reduces the risk of overfitting by discarding irrelevant or redundant variation.

Example: When training a machine learning model on image data, dimensionality reduction can condense raw pixel values into a smaller set of features, speeding up computation and sometimes improving accuracy.

What datasets are ideal for ICA?

ICA is best suited for datasets where the observed data is a combination of independent sources. It’s particularly effective for signal separation tasks.

Example: In audio processing, ICA can separate overlapping voices in a recording. In neuroscience, it isolates brain activity patterns from EEG data.

When should I use Deep Feature Extraction instead of PCA?

Deep feature extraction is ideal when working with unstructured data like images, audio, or text, where deep learning models can capture intricate patterns. PCA, on the other hand, is better suited for structured numerical data.

Example: In facial recognition, features extracted with a pretrained CNN such as VGG16 typically yield more meaningful embeddings than PCA applied to raw pixels.

Is dimensionality reduction always necessary?

No, dimensionality reduction is only necessary when high-dimensional data poses challenges like overfitting, computational inefficiency, or interpretability issues. For smaller datasets or low-dimensional problems, it may not add value.

Example: If a dataset has only 10 features, reducing dimensions might result in information loss without significant performance gains.

What is LargeVis’s primary advantage over t-SNE or UMAP?

LargeVis is designed to handle extremely large datasets efficiently. It was built to scale better than t-SNE while producing embeddings of comparable quality; UMAP also scales to large datasets, so the difference there depends on the implementation and the data.

Example: LargeVis is designed for datasets with millions of samples, making it suited to tasks like social media interaction analysis or e-commerce recommendations.

How do autoencoders handle noisy data?

Autoencoders, particularly denoising autoencoders, learn to reconstruct clean representations of data by learning to ignore noise. During training, each input is deliberately corrupted, and the network is trained to minimize the difference between its output and the original, clean input.

Example: In image processing, a denoising autoencoder can remove noise or visual artifacts from corrupted images.
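A denoising autoencoder differs from a plain one only in its training pairs: the input is a corrupted example and the target is the clean original. The sketch below shows that idea with scikit-learn's `MLPRegressor` on the digits images. The Gaussian noise level (standard deviation 0.5), layer sizes, and iteration count are assumptions, and a real evaluation would use held-out images rather than the training set.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(0)
X_clean = load_digits().data / 16.0                          # pixels in [0, 1]
X_noisy = X_clean + 0.5 * rng.normal(size=X_clean.shape)     # corrupted copies

# Key difference from a plain autoencoder: input = corrupted image, target = clean image.
dae = MLPRegressor(hidden_layer_sizes=(64, 16, 64), activation="relu",
                   max_iter=300, random_state=0)
dae.fit(X_noisy, X_clean)

X_denoised = dae.predict(X_noisy)
noisy_mse = np.mean((X_noisy - X_clean) ** 2)
denoised_mse = np.mean((X_denoised - X_clean) ** 2)
print("MSE noisy vs clean:   ", round(noisy_mse, 4))
print("MSE denoised vs clean:", round(denoised_mse, 4))
```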

What is the relationship between dimensionality reduction and feature selection?

Dimensionality reduction transforms data into a new, lower-dimensional space, while feature selection identifies and retains the most important features without altering the data. They can complement each other in workflows.

Example: Sparse PCA combines both by selecting important features and reducing dimensions simultaneously, making it ideal for high-dimensional genomic datasets.

Can dimensionality reduction improve model performance?

Yes, by reducing the feature space, dimensionality reduction can improve model performance by:

- Reducing noise and irrelevant variables.

- Lowering computational demands.

- Mitigating the risk of overfitting.

Example: In text classification, reducing high-dimensional document vectors (for instance, word counts over thousands of terms) to a handful of components with UMAP can speed up training and, in some cases, improve model accuracy.

How does dimensionality reduction impact data interpretability?

Dimensionality reduction simplifies data, making patterns and relationships easier to interpret. However, methods like PCA or t-SNE create abstract components, which may lack direct interpretability compared to the original features.

Example: PCA’s first principal component might capture 70% of variance in a dataset but may not correspond to a meaningful, interpretable variable.

Resources

Books for In-Depth Understanding

- “Pattern Recognition and Machine Learning” by Christopher Bishop

Covers foundational concepts in machine learning, including PCA and its probabilistic extensions.

Ideal for: Students and professionals looking for a solid theoretical grounding.

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

Explores autoencoders and other neural-network-based approaches to dimensionality reduction.

Ideal for: Researchers diving into deep learning applications.

- “The Elements of Statistical Learning” by Hastie, Tibshirani, and Friedman

A comprehensive guide to PCA, Factor Analysis, and other statistical methods for dimensionality reduction.

Ideal for: Statisticians and data scientists seeking rigorous mathematical insights.

Online Courses and Tutorials

- Coursera: “Dimensionality Reduction Techniques” by Andrew Ng (in Machine Learning Specialization)

Learn the basics of PCA and t-SNE through practical examples and hands-on coding assignments.

Best for: Beginners looking for guided tutorials.

- edX: “Data Science Essentials” by Microsoft

Covers various data preprocessing methods, including dimensionality reduction techniques like PCA and t-SNE.

Best for: Practical learners.

- Kaggle Learn: “Data Visualization with Dimensionality Reduction”

Offers short, hands-on exercises using real-world datasets and tools like UMAP and t-SNE.

Best for: Practitioners who want fast, applied learning.

Libraries and Tools

- Scikit-learn:

A Python library offering implementations of PCA, Kernel PCA, Isomap, t-SNE, and more.

Best for: Quick and easy experimentation with dimensionality reduction methods.

- UMAP-learn:

A specialized library for UMAP in Python. Highly optimized for speed and accuracy.

Best for: UMAP-specific applications in machine learning.

- TensorFlow/Keras:

Useful for building autoencoders and performing deep feature extraction.

Best for: Neural network-based dimensionality reduction.

Research Papers and Articles

- “Visualizing Data using t-SNE” by Laurens van der Maaten and Geoffrey Hinton

A seminal paper introducing t-SNE and its effectiveness in visualizing high-dimensional data.

- “UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction” by McInnes, Healy, and Melville

The original UMAP paper detailing the algorithm’s construction and use cases.

- “Auto-Encoding Variational Bayes” by Kingma and Welling

Introduces Variational Autoencoders (VAEs), a key development in neural-network-based dimensionality reduction.

Blogs and Tutorials

- Distill.pub: “How to Use t-SNE Effectively”

A beautifully illustrated guide on using t-SNE for visualization, including common pitfalls and tips.

- Analytics Vidhya: “PCA vs. t-SNE vs. UMAP”

A comparative blog explaining when to use PCA, t-SNE, or UMAP with practical examples.

- Towards Data Science on Medium:

Features numerous articles on advanced dimensionality reduction methods, often with code examples.

Communities and Forums

- Reddit: r/MachineLearning

Discussions, news, and resources related to dimensionality reduction techniques.

- Kaggle Discussions

Engage with data science practitioners about dimensionality reduction workflows and challenges.

- Stack Overflow

Ask questions and get answers about implementing and troubleshooting dimensionality reduction methods.
