As AI systems rely more and more on synthetic data, the question of bias becomes harder to ignore. Are we perpetuating human errors, or using technology to create fairer systems? Let’s look at the nuances of this critical topic.

What Is Synthetic Data and Why Is It Crucial?

Defining Synthetic Data

Synthetic data refers to artificially generated information that mimics real-world data. It’s used in AI training when actual data is insufficient, inaccessible, or too sensitive for use. Think about creating thousands of images to train a facial recognition model without needing personal photos.

The Need for Synthetic Data

Synthetic data shines in scenarios like medical research or autonomous vehicles, where collecting real-world data can be costly or unethical. Moreover, controlled generation helps avoid privacy issues while enabling broader experimentation. But what about bias? If the data is artificial, shouldn’t it also be bias-free?

How Bias Creeps Into Synthetic Data

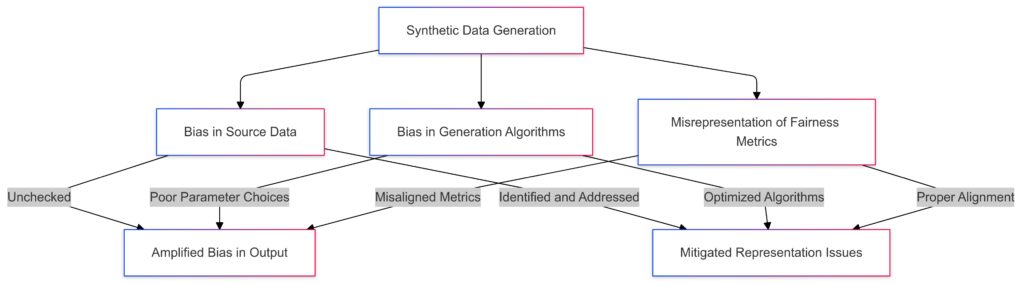

Replicating Bias from Source Data

Synthetic data often mirrors the patterns, including flaws, found in the original dataset. If the source data reflects societal biases—like gender stereotypes or racial inequalities—these biases can seamlessly transfer to the synthetic version.

For example, if hiring algorithms are trained on historical company data where leadership positions are predominantly held by men, synthetic data can replicate this disparity, perpetuating inequities.
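To make the replication effect concrete, here is a minimal sketch in plain Python. It assumes a deliberately naive generator that only learns the joint frequencies of two fields; the dataset, the field values, and the function names are all hypothetical. Real generators are far more sophisticated, but the mechanism is the same: whatever skew sits in the source ends up in the samples.

```python
import random
from collections import Counter

def fit_generator(records):
    """Learn the empirical joint frequency of (gender, role) pairs."""
    counts = Counter(records)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}

def sample_synthetic(model, n, seed=0):
    """Draw n synthetic records from the learned frequencies."""
    rng = random.Random(seed)
    pairs = list(model)
    weights = [model[p] for p in pairs]
    return rng.choices(pairs, weights=weights, k=n)

def share_of_men_among_leaders(records):
    """Fraction of leadership records whose gender field is 'M'."""
    leaders = [gender for gender, role in records if role == "leader"]
    return leaders.count("M") / len(leaders)

# Hypothetical historical data: 80 of 100 leaders are men.
historical = (
    [("M", "leader")] * 80 + [("F", "leader")] * 20
    + [("M", "staff")] * 450 + [("F", "staff")] * 450
)

model = fit_generator(historical)
synthetic = sample_synthetic(model, 10_000)

real_share = share_of_men_among_leaders(historical)
synthetic_share = share_of_men_among_leaders(synthetic)
print(f"men among leaders, real data:      {real_share:.2f}")
print(f"men among leaders, synthetic data: {synthetic_share:.2f}")
```

The synthetic share lands close to the historical one, because nothing in the generation step asks for anything different.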

Bias in Data Generation Processes

Even when starting from scratch, bias can emerge through the generation models themselves. For instance, AI algorithms tasked with creating synthetic datasets may prioritize statistical accuracy over fairness, unknowingly embedding discriminatory patterns.

Missteps in defining criteria, features, or representation during generation amplify these issues.

Can Synthetic Data Help Correct Bias?

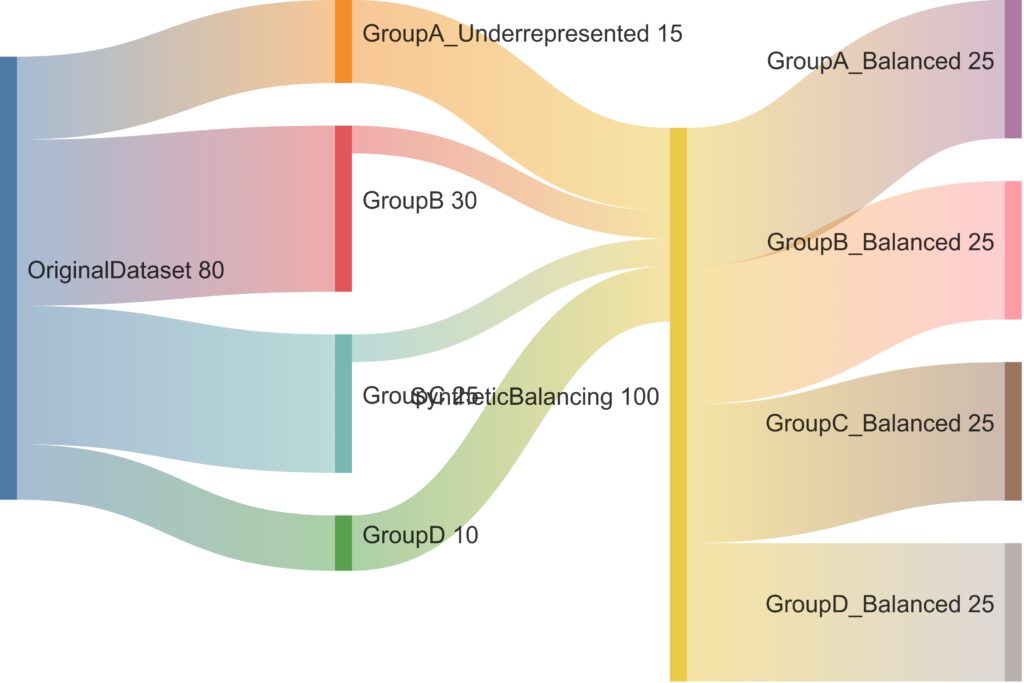

Adjusting Distribution for Fair Representation

One significant advantage of synthetic data is the ability to rebalance underrepresented groups. For example, if real-world data lacks sufficient representation of a minority group, synthetic data can be deliberately engineered to address this gap.

This technique is especially useful in healthcare, ensuring diagnostic models work equally well across different demographic groups.

Testing Models for Bias Detection

Synthetic data is also instrumental in stress-testing AI models. Engineers can simulate edge cases—such as rare but critical scenarios—using synthetic datasets. This helps reveal potential biases in a controlled environment before models are deployed.
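One simple form of such a stress test is a counterfactual check: generate synthetic test cases, change only the sensitive attribute, and count how often the decision changes. The sketch below assumes a toy scoring rule standing in for the model under test; `biased_model`, the field names, and the threshold are illustrative, not taken from any real system.

```python
def biased_model(applicant):
    """Hypothetical model under test: it wrongly penalizes one group."""
    score = applicant["years_experience"] * 10
    if applicant["gender"] == "F":
        score -= 15
    return score >= 50

def counterfactual_flip_rate(model, applicants, attribute, values):
    """Fraction of applicants whose decision changes when only
    `attribute` is swapped between the given values."""
    flipped = 0
    for applicant in applicants:
        decisions = {model({**applicant, attribute: v}) for v in values}
        if len(decisions) > 1:
            flipped += 1
    return flipped / len(applicants)

# Synthetic edge cases: a grid over experience levels, including the
# values right around the decision boundary.
test_cases = [{"years_experience": y, "gender": "M"} for y in range(0, 11)]

rate = counterfactual_flip_rate(biased_model, test_cases, "gender", ["M", "F"])
print(f"decisions that flip with gender alone: {rate:.0%}")
```

A flip rate above zero means the sensitive attribute by itself is changing outcomes. A rate of zero does not prove the model is fair, since bias can also enter through correlated features.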

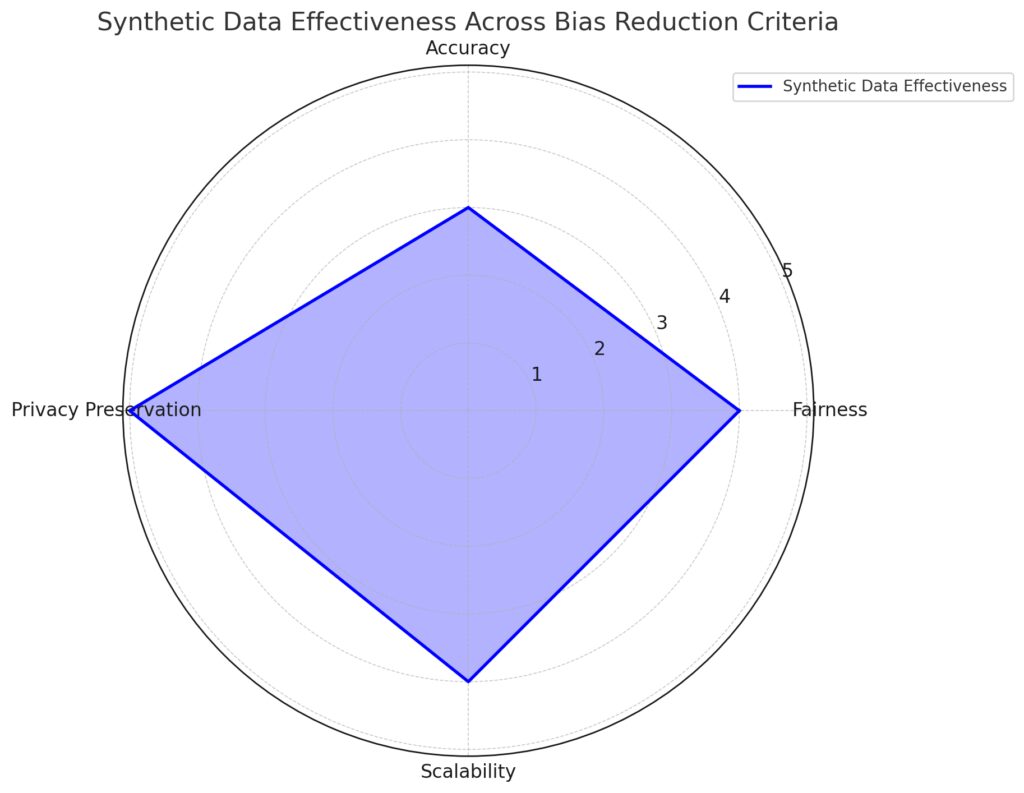

Chart criteria and scores:

- Fairness: 4 (strong effectiveness in promoting fairness).

- Accuracy: 3 (moderate impact on maintaining accuracy).

- Privacy Preservation: 5 (excellent privacy protection capabilities).

- Scalability: 4 (high scalability for large-scale applications).

The blue area represents the overall effectiveness, highlighting strengths and areas for improvement.

The Challenges of Measuring and Correcting Bias

Ambiguities in Defining Fairness

Correcting bias requires defining what “fairness” means, which is not always straightforward. Should fairness aim for proportional representation? Equal outcomes? Or equal opportunities? The lack of consensus often muddles synthetic data generation efforts.

Ethical Dilemmas in Bias Correction

Another challenge lies in deciding whose biases to correct and how. Overcompensation to address certain inequalities might introduce new biases. The subjectivity in selecting corrections raises questions about fairness and ethical boundaries.


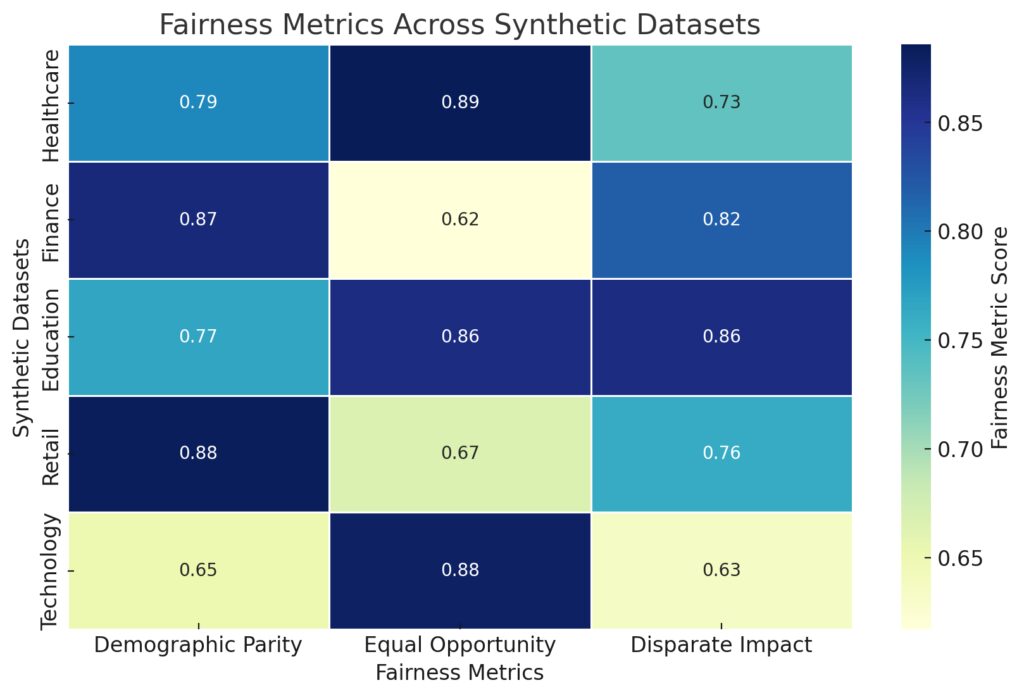

Columns represent three key fairness metrics:

- Demographic Parity: checks whether each group receives positive outcomes at the same rate.

- Equal Opportunity: checks whether qualified individuals in each group are correctly selected at the same rate (equal true positive rates).

- Disparate Impact: compares positive-outcome rates between groups to identify unintended adverse impacts on specific groups.

Color gradient:

- Dark blue: higher fairness scores (closer to 1.0, indicating better fairness).

- Light blue: lower fairness scores (closer to 0.6, indicating potential fairness issues).
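The three metrics can be computed by hand from predictions and group labels. The following is a minimal sketch using the commonly used definitions (differences in selection rate and true positive rate, and the ratio of selection rates); the toy labels, predictions, and group names are invented for illustration.

```python
def selection_rate(y_pred, groups, group):
    """Share of members of `group` that receive a positive prediction."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)

def true_positive_rate(y_true, y_pred, groups, group):
    """Share of truly positive members of `group` predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups)
             if g == group and t == 1]
    return sum(preds) / len(preds)

def fairness_report(y_true, y_pred, groups, reference, comparison):
    """Compare the `comparison` group against the `reference` group."""
    sr_ref = selection_rate(y_pred, groups, reference)
    sr_cmp = selection_rate(y_pred, groups, comparison)
    tpr_ref = true_positive_rate(y_true, y_pred, groups, reference)
    tpr_cmp = true_positive_rate(y_true, y_pred, groups, comparison)
    return {
        # 0.0 means both groups are selected at the same rate.
        "demographic_parity_difference": sr_cmp - sr_ref,
        # 0.0 means qualified members of both groups are selected equally often.
        "equal_opportunity_difference": tpr_cmp - tpr_ref,
        # 1.0 means parity; lower values mean the comparison group is selected less.
        "disparate_impact_ratio": sr_cmp / sr_ref,
    }

# Toy data: five people in each group, identical true labels per group.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

report = fairness_report(y_true, y_pred, groups, reference="A", comparison="B")
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

In this toy example group B is selected half as often as group A, which gives a disparate impact ratio of 0.5. A ratio below 0.8 is often treated as a warning sign (the "four-fifths" rule of thumb), though the right threshold depends on context.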

The Role of Algorithms in Amplifying Bias

Black-Box Problem in AI Models

A major challenge in synthetic data creation is the black-box nature of AI algorithms. These models often operate in ways that are difficult to interpret, which means biases may be introduced or amplified without developers realizing it. For instance, if an algorithm prioritizes one metric, like accuracy, it may overlook fairness considerations, embedding inequalities in the generated data.

Reinforcement of Historical Inequalities

AI systems trained on synthetic data risk solidifying historical inequities, especially if the generation process relies on historical trends. For example, a model trained on biased judicial data may continue to perpetuate unfair sentencing patterns, even if the data is artificially augmented.

Addressing this requires transparency in model design and ethical oversight during synthetic data creation.

Tools and Techniques to Combat Bias in Synthetic Data

Synthetic Oversampling for Balanced Representation

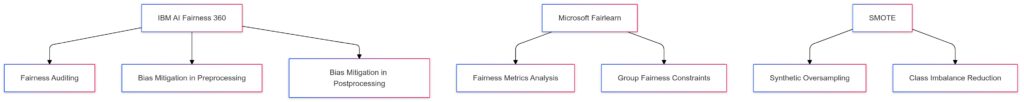

One effective technique to combat bias is synthetic oversampling, where underrepresented groups are deliberately oversampled in the generated data. Techniques like SMOTE (Synthetic Minority Over-sampling Technique) create new minority samples by interpolating between existing ones, which boosts representation without simply duplicating records.

For instance, in a dataset where women hold only 20% of leadership roles, synthetic records can be added to reach a 50-50 split and support fairer training outcomes.
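Here is a simplified, standard-library sketch of the interpolation idea behind SMOTE, applied to a hypothetical 80/20 dataset. It is not the reference implementation; in practice most teams would reach for the `SMOTE` class in the imbalanced-learn package. The feature names and value ranges are made up, and note that SMOTE was designed for class imbalance, so using it to rebalance a demographic group is an adaptation.

```python
import random

def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points, each placed on the line segment
    between a minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: dist2(base, p),
        )[:k]
        other = rng.choice(neighbours)
        t = rng.random()  # position along the segment base -> other
        synthetic.append(tuple(b + t * (o - b) for b, o in zip(base, other)))
    return synthetic

# Hypothetical leadership dataset: 80 majority rows and 20 minority rows,
# each row being (years_experience, performance_score).
data_rng = random.Random(42)
majority = [(data_rng.uniform(5, 25), data_rng.uniform(50, 100)) for _ in range(80)]
minority = [(data_rng.uniform(5, 25), data_rng.uniform(50, 100)) for _ in range(20)]

new_rows = smote_like_oversample(minority, n_new=len(majority) - len(minority))
balanced_minority = minority + new_rows

print(f"majority rows: {len(majority)}, minority rows after oversampling: {len(balanced_minority)}")
```

Because every new row is an interpolation between two real minority rows, the synthetic points stay inside the range the minority group already covers. That keeps them plausible, but it also means oversampling cannot invent diversity the original sample lacks.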

Bias Mitigation Algorithms

Some advanced tools, such as IBM AI Fairness 360 and Microsoft’s Fairlearn, allow developers to assess and mitigate bias during synthetic data creation. These platforms provide insights into how different variables influence predictions, enabling targeted corrections.
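To give a feel for what a mitigation step does under the hood, here is a plain-Python sketch of reweighing, a pre-processing technique of the kind toolkits such as AI Fairness 360 offer. This is not the toolkit’s API; the function names and the toy data are assumptions made for illustration. Each (group, label) combination gets a weight equal to its expected frequency, if group and label were independent, divided by its observed frequency.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight for each (group, label) cell: expected / observed frequency,
    where 'expected' assumes group and label are independent."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    cell_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * cell_counts[(g, y)])
        for (g, y) in cell_counts
    }

def weighted_positive_rate(groups, labels, weights, group):
    """Positive-label rate within `group` after applying the weights."""
    rows = [(g, y) for g, y in zip(groups, labels) if g == group]
    positive = sum(weights[row] for row in rows if row[1] == 1)
    total = sum(weights[row] for row in rows)
    return positive / total

# Toy data: 8 of 10 men have a positive label, but only 4 of 10 women.
groups = ["M"] * 10 + ["F"] * 10
labels = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 6

weights = reweighing_weights(groups, labels)
rate_m = weighted_positive_rate(groups, labels, weights, "M")
rate_f = weighted_positive_rate(groups, labels, weights, "F")
print(f"weighted positive rate, M: {rate_m:.2f}")
print(f"weighted positive rate, F: {rate_f:.2f}")
```

After weighting, both groups show the same positive rate, so a model trained with these sample weights no longer sees group membership as a predictor of the label. No record is added or removed; only its influence changes.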

Human-in-the-Loop Approaches

Relying solely on automated processes can exacerbate bias. Instead, incorporating human oversight ensures diverse perspectives guide the data generation process. Ethical review boards or domain experts can assess fairness metrics and refine the synthetic datasets accordingly.

Real-World Applications of Synthetic Data Bias Correction

Healthcare: Bridging Gaps in Diagnosis

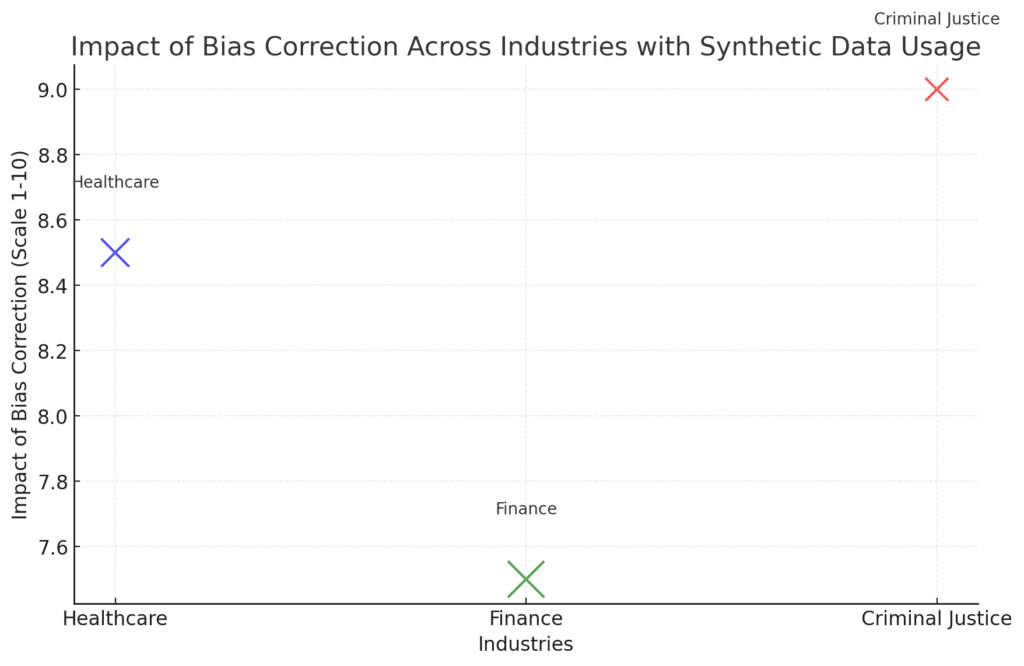

In the healthcare sector, biased data can lead to life-threatening consequences. For example, certain diagnostic tools may underperform for individuals from underrepresented groups. Synthetic data can fill these gaps, ensuring models are equally effective across all demographics.

Dermatology AI tools are a clear example: they often struggle with darker skin tones because of limited real-world data. Synthetic data has been used to improve detection rates by providing additional samples for these rare cases.

Chart legend:

- Y-axis: impact of bias correction (scale 1-10).

- Bubble size: frequency of synthetic data use in each industry.

- Bubble colors: blue for healthcare, green for finance, red for criminal justice.

Insights:

- Criminal justice shows the highest impact from bias correction but lower synthetic data use frequency.

- Finance has a moderate impact but the highest frequency of synthetic data use.

- Healthcare benefits significantly from bias correction and has moderate synthetic data adoption.

Financial Services: Tackling Discrimination

Bias in financial datasets often results in discriminatory practices, like denying loans based on race or gender. Synthetic data, with rebalanced demographic representation, allows for fairer creditworthiness evaluations. Companies like Zest AI are actively using synthetic techniques to reduce discriminatory biases in loan approval processes.

Ethical Considerations in Synthetic Data Usage

Balancing Privacy and Fairness

One of the main appeals of synthetic data is its ability to protect personal privacy. However, even anonymized synthetic datasets can inadvertently reflect the biases present in real-world systems. The ethical dilemma lies in ensuring that while privacy is preserved, fairness is not sacrificed.

Accountability in Bias Correction

When correcting bias in synthetic data, who decides what corrections are necessary? This question brings accountability into focus. Ethical frameworks and transparent decision-making processes must guide any adjustments to prevent arbitrary or politically motivated choices.

Potential for Overreach

While synthetic data holds promise for bias correction, there’s a risk of overreach. Manipulating data to fit predefined notions of fairness may lead to datasets that no longer reflect reality, undermining their utility for predictive models. Striking the right balance is key.
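One way to keep an eye on overreach is to quantify how far a rebalanced dataset has moved from the real one. The sketch below uses total variation distance between group shares as one possible measure; the shares and the `MAX_DRIFT` budget are hypothetical values chosen for illustration, not recommended settings.

```python
def total_variation_distance(p, q):
    """Half the sum of absolute differences between two discrete
    distributions given as {category: probability} dictionaries."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

# Hypothetical group shares before and after rebalancing.
real_shares = {"group_a": 0.80, "group_b": 0.20}
rebalanced_shares = {"group_a": 0.50, "group_b": 0.50}

MAX_DRIFT = 0.25  # hypothetical budget agreed on by the review team

drift = total_variation_distance(real_shares, rebalanced_shares)
needs_review = drift > MAX_DRIFT
print(f"drift from real distribution: {drift:.2f} (review needed: {needs_review})")
```

A number like this does not say whether a correction is right or wrong. It only makes the size of the intervention visible, so that the decision to accept it is made deliberately.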

Final Thoughts

Synthetic data has the potential to either amplify systemic inequalities or help correct them. The outcome depends on how responsibly it is designed, implemented, and monitored. Embracing ethical principles and leveraging advanced tools can steer synthetic data toward creating more equitable AI systems.

FAQs

Can synthetic data be entirely free from bias?

It’s unlikely that synthetic data can be completely bias-free. Bias can stem from both the source data and the algorithms used to generate synthetic datasets. However, developers can minimize bias by using fairness-focused techniques and regularly auditing their data creation processes.

Is synthetic data better than real-world data for avoiding bias?

Synthetic data has advantages in bias mitigation because it can be deliberately manipulated to rebalance representation or highlight underrepresented groups. However, poorly designed synthetic data systems may unintentionally replicate or even amplify real-world inequalities.

What industries are most affected by biased synthetic data?

Industries like healthcare, finance, and criminal justice face significant challenges with biased synthetic data. In healthcare, bias could result in misdiagnoses; in finance, it may lead to discriminatory loan approvals. Each sector requires tailored approaches to ensure fairness in synthetic data use.

How can synthetic data be used to detect bias in AI models?

Synthetic data enables developers to stress-test AI models by simulating diverse scenarios and edge cases. By analyzing model performance on these test cases, biases can be identified and addressed before deployment.

What are the ethical risks of manipulating synthetic data to correct bias?

While correcting bias is essential, over-adjustment can lead to datasets that no longer represent reality, causing misleading predictions. Ethical risks also arise when decisions about what constitutes “fairness” are subjective or politically influenced.

Are there tools available to address bias in synthetic data?

Yes, tools like IBM’s AI Fairness 360 and Microsoft Fairlearn offer frameworks to measure and reduce bias during data generation and model training. These tools help ensure that fairness metrics are prioritized in synthetic data workflows.

Can human oversight reduce bias in synthetic data creation?

Absolutely. Human-in-the-loop processes allow for diverse perspectives during data generation, helping identify and mitigate biases that automated systems might overlook. This collaborative approach is key to creating fair and effective synthetic datasets.

How can synthetic data help underrepresented groups?

By deliberately generating data that includes underrepresented groups, synthetic data can address gaps in real-world datasets. This ensures that AI models are more inclusive and perform equitably across different populations, particularly in fields like healthcare and education.

Can synthetic data amplify societal stereotypes?

Yes, synthetic data can unintentionally amplify stereotypes if the generation process relies on biased algorithms or datasets. For example, if an image generator associates certain professions with specific genders, it risks reinforcing harmful societal norms.

Is there a trade-off between fairness and accuracy in synthetic data?

Balancing fairness and accuracy is a common challenge. Adjusting synthetic data to improve fairness might slightly reduce model accuracy, especially when the original dataset is highly skewed. However, the trade-off is often worth it to achieve ethical outcomes.

How is bias in synthetic data measured?

Bias in synthetic data is measured using metrics like demographic parity, equal opportunity, or disparate impact. These metrics evaluate whether the data or model predictions treat different groups fairly based on predefined fairness criteria.

Are synthetic data techniques evolving to address bias?

Yes, advances in synthetic data generation techniques, such as fair representation learning and counterfactual data augmentation, are helping reduce bias. These methods aim to produce datasets that balance representation without sacrificing realism or utility.

Resources

Research Papers and Articles

- “Mitigating Bias in Artificial Intelligence”: Published by the National Institute of Standards and Technology (NIST), this paper explores techniques for addressing bias in AI systems, including synthetic data.

- “Fairness and Bias in Artificial Intelligence” by the European Commission: Offers insights into the ethics of bias in AI, with a section dedicated to synthetic data.

- “Synthetic Data for Machine Learning: Advantages and Pitfalls” (Springer): A detailed overview of synthetic data’s potential and limitations, including bias risks.

Tools and Frameworks

- IBM AI Fairness 360: A toolkit designed to detect and mitigate bias in datasets and AI models. Useful for auditing synthetic data workflows.

- Microsoft Fairlearn: An open-source Python package that focuses on measuring and improving fairness in machine learning systems.

- SMOTE (Synthetic Minority Over-sampling Technique): A popular technique for addressing class imbalance in datasets by creating synthetic samples.