What Is Latent Dirichlet Allocation (LDA)?

LDA, short for Latent Dirichlet Allocation, is a popular topic modeling algorithm that helps discover hidden topics in a large corpus of text. Essentially, it looks for patterns in how words co-occur in documents, assuming that each document is a mix of topics and each topic is a mix of words.

Imagine you’re analyzing a huge collection of news articles. Instead of manually reading each article to figure out what it’s about, LDA can automatically group words into topics, revealing the underlying structure of the content.

It’s a bit like working out what a conversation is about when you’ve only overheard bits of it. LDA surfaces the groups of words; giving each topic a name is still up to you.

Why LDA Struggles With Short Texts

LDA works well with long documents, but it often stumbles when applied to short texts. Think about a tweet or a brief product review: there simply aren’t enough words in these formats for LDA to confidently identify patterns.

Short text is sparse. That means the algorithm doesn’t get the volume of word co-occurrences it typically relies on to infer meaningful topics. You might end up with noisy topics or ones that are too broad.

This is a real pain point for users trying to analyze data like social media posts or customer feedback.

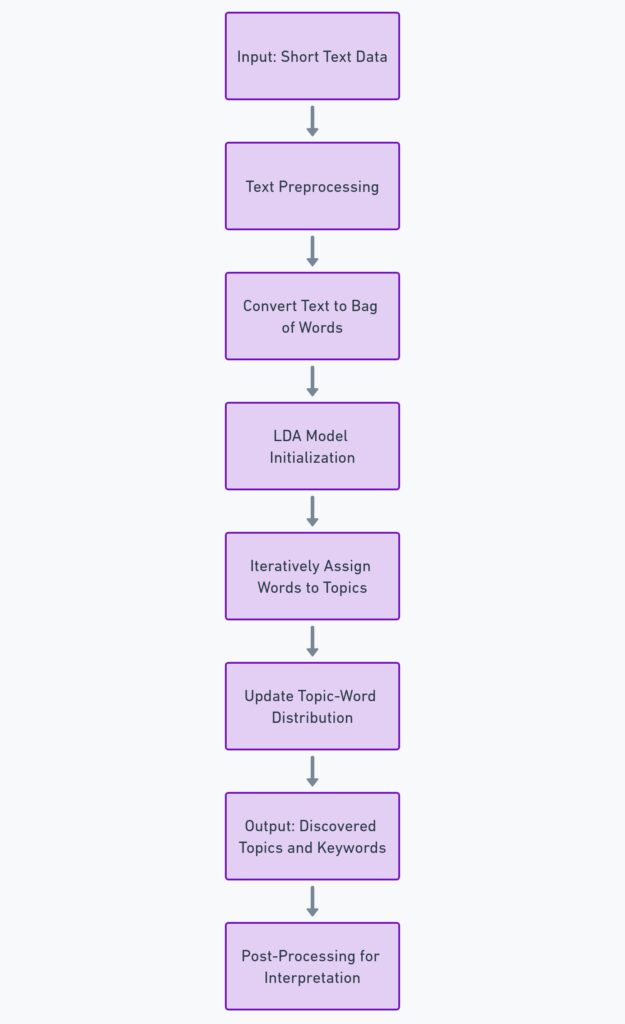

How Does LDA Actually Work?

LDA assumes that each document is a mixture of multiple topics, and that each topic is a probability distribution over the vocabulary. The algorithm then tries to figure out which topics are present in each document and how strongly they appear.
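
To make that assumption concrete, here is a minimal sketch of LDA’s generative story in plain Python (standard library only). It draws each topic as a distribution over a toy vocabulary, gives every document its own topic mixture, and then produces each word by first picking a topic and then picking a word from that topic. The function names, the toy vocabulary, and the default α and β values are illustrative assumptions, not part of any library.

```python
import random

def sample_dirichlet(alpha, size, rng):
    """Draw one sample from a symmetric Dirichlet(alpha) over `size` outcomes."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_corpus(vocab, num_topics, num_docs, doc_length, alpha=0.5, beta=0.1, seed=0):
    """Simulate LDA's generative process (hypothetical helper for illustration)."""
    rng = random.Random(seed)
    # Each topic is a probability distribution over the whole vocabulary.
    topics = [sample_dirichlet(beta, len(vocab), rng) for _ in range(num_topics)]
    corpus = []
    for _ in range(num_docs):
        # Each document gets its own mixture of topics.
        theta = sample_dirichlet(alpha, num_topics, rng)
        doc = []
        for _ in range(doc_length):
            z = rng.choices(range(num_topics), weights=theta)[0]  # pick a topic
            w = rng.choices(vocab, weights=topics[z])[0]          # pick a word from it
            doc.append(w)
        corpus.append(doc)
    return topics, corpus
```

LDA inference runs this story in reverse: given only the documents, it tries to recover plausible `topics` and per-document mixtures.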

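It does this by examining which words tend to co-occur across documents and inferring two sets of probabilities: how strongly each topic appears in each document, and how likely each word is under each topic. Exact inference is intractable, so implementations rely on approximations such as collapsed Gibbs sampling (used by MALLET) or variational Bayes (used by Gensim). If you’re analyzing blog posts about food, for example, one topic might be “desserts,” with high probabilities for words like “cake,” “chocolate,” and “recipe.”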
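
The estimation step can be sketched with collapsed Gibbs sampling. The version below is a minimal, unoptimized standard-library illustration with a hypothetical function name `lda_gibbs` and assumed hyperparameters (α = 0.1, β = 0.01); for real work you would use Gensim or MALLET. On each pass, every word is reassigned to a topic in proportion to how common that topic already is in the word’s document and how common the word already is in that topic.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Minimal collapsed Gibbs sampler for LDA. docs: list of token lists."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    # Count tables: document-topic, topic-word, and total words per topic.
    n_dk = [[0] * K for _ in docs]
    n_kw = [[0] * V for _ in range(K)]
    n_k = [0] * K
    # Start from a random topic assignment for every token.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(K)
            z_doc.append(k)
            n_dk[d][k] += 1
            n_kw[k][word_id[w]] += 1
            n_k[k] += 1
        assignments.append(z_doc)
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], assignments[d][i]
                # Remove this token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # Full conditional p(z = t | all other assignments), up to a constant.
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                assignments[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1
    # Smoothed point estimates of topic-word (phi) and document-topic (theta) distributions.
    phi = [[(n_kw[k][v] + beta) / (n_k[k] + V * beta) for v in range(V)] for k in range(K)]
    theta = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    return vocab, phi, theta
```

With only a handful of words per document, each `n_dk` count is tiny, which is exactly why the document-topic estimates become unstable on short texts.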

In short texts, however, there’s less data to draw these distributions from, making it harder to confidently assign topics to words.

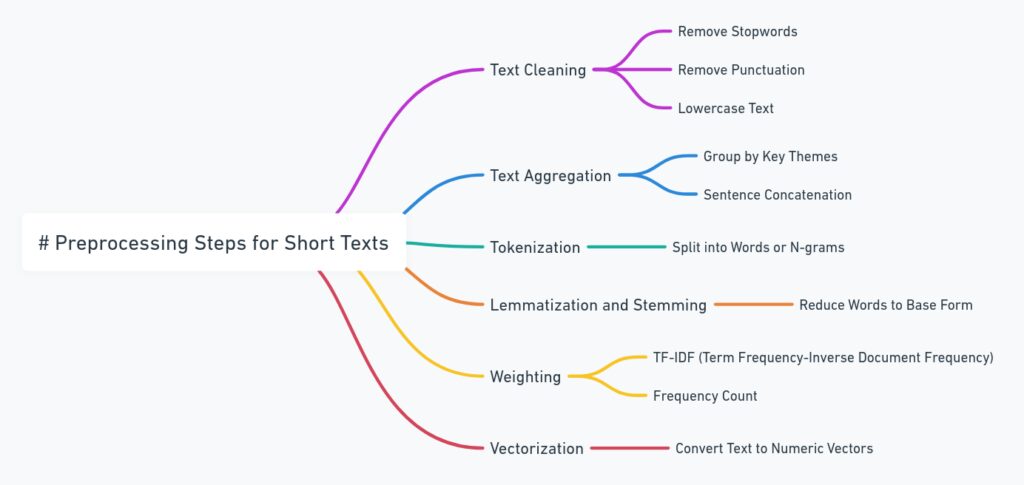

Preprocessing Short Text for Better LDA Results

One way to improve LDA’s performance on short text is to pay extra attention to the preprocessing steps. Start by cleaning the data—remove stopwords, punctuation, and irrelevant symbols.
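
A minimal cleaning step might look like the sketch below, written in plain Python with only the standard library. The stopword list is a deliberately tiny, illustrative assumption (NLTK, listed under Resources, ships a much fuller one), and the regular expressions for URLs, @mentions, and tokens are simplified assumptions you would adapt to your own data.

```python
import re

# Tiny illustrative stopword list (assumption); use a fuller list in practice.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "and", "or", "to", "of", "in",
             "on", "for", "it", "this", "that", "i", "my", "so", "just", "with"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
TOKEN_RE = re.compile(r"[a-z][a-z']*")

def preprocess(text, min_len=2):
    """Lowercase, strip URLs and @mentions, keep alphabetic tokens, drop stopwords."""
    text = text.lower()
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    tokens = TOKEN_RE.findall(text)  # punctuation and symbols are simply not matched
    return [t for t in tokens if t not in STOPWORDS and len(t) >= min_len]
```

For example, `preprocess("Just tried the new chocolate cake recipe!!! https://example.com @baker")` keeps only `tried`, `new`, `chocolate`, `cake`, and `recipe`.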

Another trick is to combine similar short texts into slightly larger documents. For example, you could group tweets by user or merge reviews by the same customer. This gives the algorithm more data to work with and helps LDA find clearer patterns.
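
Aggregation can be as simple as concatenating the cleaned tokens of every text that shares a key. The sketch below assumes each record is a dictionary with hypothetical `user` and `tokens` fields; swap in whatever grouping key your data provides.

```python
from collections import defaultdict

def aggregate_by_key(records, key_field, text_field="tokens"):
    """Merge short texts that share a key (e.g. the same user) into one pseudo-document."""
    pooled = defaultdict(list)
    for record in records:
        pooled[record[key_field]].extend(record[text_field])
    return dict(pooled)
```

LDA is then trained on the pooled documents; the topics it learns can still be used to infer topic mixtures for the individual short texts afterward.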

Finally, stemming and lemmatization can help by reducing words to their base forms. For instance, “running” and “runs” both become “run,” which shrinks the vocabulary and pools their counts under a single term, strengthening that term’s topic signal.

Choosing the Right Number of Topics in Short Text

Choosing the optimal number of topics for LDA is tricky, especially with short texts. Too few topics, and the results can be overly broad or irrelevant. Too many, and you risk overfitting, creating topics that don’t really capture meaningful distinctions.

For short text, you may need to experiment more than usual. Try starting with fewer topics and gradually increase the number, keeping an eye on coherence. Topic coherence measures how well the words in a topic fit together, and it’s a good guide for fine-tuning your model.
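
To make coherence concrete, here is a sketch of the UMass coherence measure, which scores a topic’s top words by how often they appear in the same documents. It is a plain-Python illustration with a hypothetical function name; Gensim’s `CoherenceModel` (with `coherence='u_mass'`) is what you would normally use. Higher (less negative) scores mean the top words co-occur more consistently.

```python
import math

def umass_coherence(top_words, docs):
    """UMass coherence of one topic; top_words are ordered from most to least probable."""
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words):
        # Number of documents containing all of the given words.
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            w_m, w_l = top_words[m], top_words[l]
            # +1 avoids log(0) when the pair never co-occurs. Assumes w_l occurs
            # in at least one document, which holds for words from a trained model.
            score += math.log((doc_freq(w_m, w_l) + 1) / doc_freq(w_l))
    return score
```

To compare topic counts, you would train one model per candidate value and compare the average coherence of their topics.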

Common Challenges in Topic Modeling Short Text

Sparsity is one of the biggest issues when applying LDA to short texts. Since short texts only contain a few words, the algorithm struggles to form well-defined topic distributions. This leads to topics that are too general or irrelevant, with little useful insight.

Another challenge is the limited context. In long documents, LDA can rely on a variety of word combinations to identify topics. But in short texts, there’s less context to determine the true meaning of a word. For example, a single tweet mentioning “apple” could refer to the fruit or the tech company, and without more information, LDA might misclassify it.

Short texts also tend to be informal, which adds noise and leads to less accurate models. Misspellings, slang, and abbreviations common on social media split one concept across many rare spellings, making it harder for LDA to distinguish meaningful patterns from noise.

How to Address the Sparsity Issue in Short Texts

One effective method to combat sparsity is text aggregation. By grouping multiple short texts that share common themes or contexts, you create longer documents that provide LDA with more word associations. For instance, clustering tweets by hashtags or product reviews by category could offer a richer dataset for analysis.
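
Hashtag pooling is one concrete form of this aggregation: every hashtag becomes a pseudo-document, a tweet with several hashtags contributes to several pools, and tweets without hashtags stay on their own. The sketch below is a simplified standard-library illustration with a hypothetical function name; it only lowercases and splits on whitespace, so in practice you would run your full preprocessing first.

```python
import re
from collections import defaultdict

HASHTAG_RE = re.compile(r"#(\w+)")

def pool_by_hashtag(tweets):
    """Return (pools keyed by hashtag, list of hashtag-free tweets as token lists)."""
    pools = defaultdict(list)
    singletons = []
    for tweet in tweets:
        tags = {t.lower() for t in HASHTAG_RE.findall(tweet)}
        words = HASHTAG_RE.sub(" ", tweet).lower().split()
        if tags:
            # A tweet joins the pool of every hashtag it carries.
            for tag in sorted(tags):
                pools[tag].extend(words)
        else:
            singletons.append(words)
    return dict(pools), singletons
```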

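Another approach is to use TF-IDF (Term Frequency-Inverse Document Frequency) before applying LDA. TF-IDF assigns more weight to words that are distinctive for particular documents and less to common words that appear almost everywhere and contribute little to the topic structure. Because standard LDA is defined over word counts, a common pattern is to use TF-IDF scores to decide which terms to keep in the vocabulary and then train LDA on the raw counts of the remaining words.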
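
One way to put TF-IDF to work without feeding fractional weights into a count-based model is to use it for vocabulary selection and then keep raw counts for LDA. The sketch below scores each term by its highest TF-IDF value in any document and keeps the top `keep_top` terms; this ranking rule and both function names are assumptions for illustration, not a standard API.

```python
import math
from collections import Counter

def tfidf_vocabulary(docs, keep_top=1000):
    """Rank terms by their highest TF-IDF score in any document; keep the top ones."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    best = {}
    for doc in docs:
        for term, count in Counter(doc).items():
            # A term found in every document gets idf = log(1) = 0.
            score = (count / len(doc)) * math.log(n_docs / df[term])
            best[term] = max(best.get(term, 0.0), score)
    ranked = sorted(best, key=lambda t: (-best[t], t))
    return set(ranked[:keep_top])

def filter_docs(docs, vocabulary):
    """Drop out-of-vocabulary tokens but keep raw token counts for LDA."""
    return [[t for t in doc if t in vocabulary] for doc in docs]
```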

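Lastly, pay attention to smoothing. LDA’s Dirichlet priors already act as additive smoothing: the document-topic prior α and the topic-word prior β (called `eta` in Gensim) add pseudo-counts to every topic and every word, so even words with low frequencies receive non-zero probability. On short texts, where the priors weigh heavily against the few observed words, tuning them helps balance the sparse nature of the collection; a small α is a common choice because it encourages each document to concentrate on only a few topics.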
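
In standard LDA, this kind of additive smoothing comes from the Dirichlet priors. Its effect is easy to see in the posterior-mean estimate of a document’s topic proportions, where the document-topic prior α is added as a pseudo-count to every topic. The sketch assumes a symmetric prior and uses a hypothetical helper name.

```python
def theta_estimate(topic_counts, alpha):
    """Posterior-mean topic proportions for one document under a symmetric Dirichlet(alpha).

    topic_counts[k] is the number of the document's words assigned to topic k;
    alpha is added to every count as a pseudo-count.
    """
    n_words = sum(topic_counts)
    k = len(topic_counts)
    return [(c + alpha) / (n_words + k * alpha) for c in topic_counts]
```

For a three-word document whose words were all assigned to the first of five topics, `theta_estimate([3, 0, 0, 0, 0], 1.0)` gives that topic only 0.5 of the mass (0.125 for each of the others), while α = 0.1 keeps about 0.89 on it. With so few words, the prior carries a lot of weight.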

Best Practices for Implementing LDA on Short Text

To make LDA more effective for short texts, start with extensive preprocessing. Filter out unnecessary words and characters that clutter your data. For example, in social media analysis, URLs and emojis are often non-essential to topic discovery. Hashtags deserve a second look, though, since they frequently carry topical signal and can be used to pool related posts.

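You should also test different topic numbers and configurations. Unlike long texts, where a specific number of topics might be obvious, short texts require more experimentation to find the sweet spot. Metrics like perplexity and coherence scores can help you evaluate the generated topics. Perplexity measures how well the model predicts held-out text, but it often correlates poorly with human judgments of topic quality, so coherence is usually the more useful guide.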

When building your model, remember to focus on interpretable results. It’s tempting to go for more complex models, but if the topics don’t make sense or can’t be used for actionable insights, it defeats the purpose. Keep simplicity in mind, especially if your data is already challenging.

Popular Modifications of LDA for Short Documents

Several modifications of LDA have emerged to better handle short text data, often grouped under the heading of short text topic modeling (STTM). Some of these models incorporate word embeddings or external knowledge bases, which lets them grasp semantic relationships between words that don’t co-occur frequently in short texts.

Another useful variant is the Biterm Topic Model (BTM). Instead of modeling how each document is generated, BTM models the generation of word pairs (“biterms”) that co-occur in the same short text, pooled across the whole corpus. This lets it capture word correlations directly from the short texts themselves, and it has been particularly effective for data like tweets or forum posts, where traditional LDA often struggles.
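
The first step of BTM, turning each short text into biterms, can be sketched in a few lines. This assumes, as in the basic model, that every unordered pair of words within the same short text forms a biterm; the function names are hypothetical, and fitting the topics themselves is left to a BTM implementation.

```python
from collections import Counter
from itertools import combinations

def extract_biterms(tokens):
    """All unordered word pairs in one short text (the whole text is one context)."""
    return [tuple(sorted(pair)) for pair in combinations(tokens, 2)]

def corpus_biterms(docs):
    """Count biterms across the whole corpus; BTM fits its topics to this pooled set."""
    counts = Counter()
    for doc in docs:
        counts.update(extract_biterms(doc))
    return counts
```

A three-word tweet yields three biterms, so even very short texts contribute co-occurrence evidence that document-level LDA would spread too thinly.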

There’s also the Dirichlet Multinomial Mixture (DMM), which simplifies topic modeling by assuming each document is generated by a single topic. That assumption suits short texts, which usually convey only one primary idea.
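
A compact way to fit DMM is collapsed Gibbs sampling in the style of GSDMM, where each step moves one whole document to a cluster chosen in proportion to the cluster’s size and how well its existing word counts match the document’s words. The sketch below is an unoptimized standard-library version; the function name and the defaults (α = β = 0.1, 30 iterations) are assumptions, and it works with log probabilities to avoid underflow.

```python
import math
import random
from collections import Counter

def gsdmm(docs, num_clusters, alpha=0.1, beta=0.1, iterations=30, seed=0):
    """Collapsed Gibbs sampling for DMM: returns one cluster label per document."""
    rng = random.Random(seed)
    V = len({w for doc in docs for w in doc})
    K = num_clusters
    m_k = [0] * K                         # documents per cluster
    n_k = [0] * K                         # words per cluster
    n_kw = [Counter() for _ in range(K)]  # word counts per cluster
    doc_counts = [Counter(doc) for doc in docs]
    z = [rng.randrange(K) for _ in docs]  # random initial labels

    def move(d, k, sign):
        # Add (sign=+1) or remove (sign=-1) document d from cluster k.
        m_k[k] += sign
        n_k[k] += sign * len(docs[d])
        for w, c in doc_counts[d].items():
            n_kw[k][w] += sign * c

    for d in range(len(docs)):
        move(d, z[d], +1)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            move(d, z[d], -1)
            log_p = []
            for k in range(K):
                lp = math.log(m_k[k] + alpha)
                for w, c in doc_counts[d].items():
                    for j in range(c):
                        lp += math.log(n_kw[k][w] + beta + j)
                for i in range(len(doc)):
                    lp -= math.log(n_k[k] + V * beta + i)
                log_p.append(lp)
            top = max(log_p)
            weights = [math.exp(lp - top) for lp in log_p]
            z[d] = rng.choices(range(K), weights=weights)[0]
            move(d, z[d], +1)
    return z
```

Because every document receives exactly one label, the result can be read directly as a clustering of the short texts.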

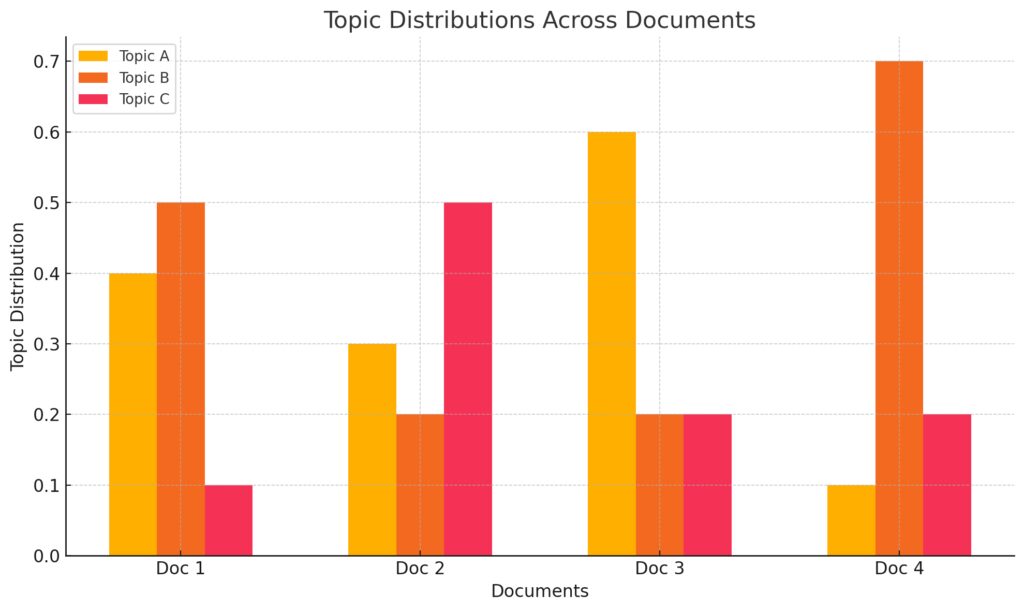

Visualizing Topic Distributions in Short Text

Once you’ve trained your model, it’s important to visualize the results to understand the discovered topics. Tools like pyLDAvis offer interactive visualizations that allow you to explore the relationships between topics and the most relevant words associated with them.

For short text, focusing on the saliency of words can help identify which words are truly representative. Saliency weights a word’s overall frequency by how much seeing that word tells you about which topic is being discussed, so frequent but generic words score low. Visualization also makes it easier to spot patterns or anomalies that may have been missed by simply reviewing the text output.
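
Saliency, as defined by Chuang et al. for the Termite visualization and shown in pyLDAvis’s default term list, multiplies a word’s overall probability by the KL divergence between the topic distribution given that word and the overall topic distribution. A minimal sketch, assuming you already have a topic-word matrix `phi` and topic weights from a trained model (the helper name is hypothetical):

```python
import math

def saliency(phi, topic_weights):
    """Saliency per word: P(w) * KL(P(topic | w) || P(topic)).

    phi[k][v] = P(word v | topic k); topic_weights[k] = P(topic k), summing to 1.
    Assumes every word has non-zero probability under at least one topic.
    """
    K, V = len(phi), len(phi[0])
    scores = []
    for v in range(V):
        p_w = sum(phi[k][v] * topic_weights[k] for k in range(K))
        distinctiveness = 0.0
        for k in range(K):
            p_k_given_w = phi[k][v] * topic_weights[k] / p_w
            if p_k_given_w > 0:
                distinctiveness += p_k_given_w * math.log(p_k_given_w / topic_weights[k])
        scores.append(p_w * distinctiveness)
    return scores
```

A word that is equally likely under every topic gets a saliency of zero, however frequent it is.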

Practical Applications of LDA for Short Text

LDA is widely used for analyzing social media posts, such as Twitter or Facebook updates. These platforms generate a massive amount of short, unstructured text, making LDA a useful tool for identifying trending topics or themes as they emerge. By uncovering patterns in hashtags, keywords, or phrases, businesses can track what customers are talking about, gauge public interest, or spot emerging issues.

Another common use is in customer reviews. Whether for products or services, reviews tend to be brief yet highly opinionated. LDA can help identify the most frequently discussed features or pain points, providing companies with insights into what their customers value or dislike.

Beyond business, LDA has found its way into academic research, helping scholars analyze short text collections such as abstracts, conference proceedings, or news summaries. In journalism, it’s often used to scan news headlines and articles, clustering them into topics for more efficient news aggregation.

Combining LDA with Other NLP Techniques

While LDA is powerful on its own, combining it with other Natural Language Processing (NLP) techniques can improve results, especially for short texts. For instance, word embeddings like Word2Vec or GloVe capture semantic meaning by representing each word as a dense vector learned from the contexts it appears in, so related words end up close together. Because standard LDA operates on discrete word counts, embeddings are usually brought in indirectly, for example by expanding short texts with semantically similar words before training, or by switching to an embedding-aware short-text model. This can help the algorithm identify more nuanced topics.

Another approach is to pair LDA with Named Entity Recognition (NER), which detects names of people, organizations, or locations. This can provide more concrete labels for your topics, making them easier to interpret and more relevant to specific use cases, like analyzing company mentions in news articles.

Additionally, sentiment analysis can be a useful complement to LDA. Once topics are extracted from a set of short texts, sentiment analysis can reveal whether people are discussing these topics in a positive, negative, or neutral way, adding another layer of insight.

Tools and Libraries for Running LDA on Short Text

Several libraries make it easy to implement LDA on short texts. One of the most popular is Gensim, a Python library for topic modeling that offers efficient algorithms and flexibility in handling large datasets. Gensim also allows you to use TF-IDF or Bag of Words representations of your text, making it more adaptable for short texts.

MALLET (MAchine Learning for LanguagE Toolkit) is another powerful tool, a Java package designed for text classification and topic modeling. Its LDA implementation uses fast Gibbs sampling and exposes settings such as the number of topics, the number of iterations, and periodic hyperparameter optimization, all of which you can tune for short-text datasets.

For visualizing the results, the pyLDAvis library stands out. It offers interactive visualizations that help users explore topic distributions and term relevance, which is particularly useful when working with noisy short texts.

Real-Life Use Cases of LDA on Tweets, Reviews, and More

One notable example of LDA being used effectively is in analyzing tweets during elections. By clustering tweets into topics, political analysts can identify which issues are most discussed and how they shift over time. Topic models have been applied this way to tweets about the 2016 U.S. presidential election, surfacing themes that ranged from economic issues to healthcare and immigration.

Another interesting use case comes from e-commerce. Online retailers can use LDA to sift through thousands of product reviews, summarizing the most discussed aspects, such as quality, shipping speed, or customer service. This helps inform business strategy and improve customer satisfaction.

In the field of healthcare, LDA has been applied to analyze patient feedback, grouping comments into topics like treatment quality, appointment scheduling, or facility cleanliness. This helps healthcare providers understand where improvements are needed and how patients feel about their care.

Understanding LDA Results for Actionable Insights

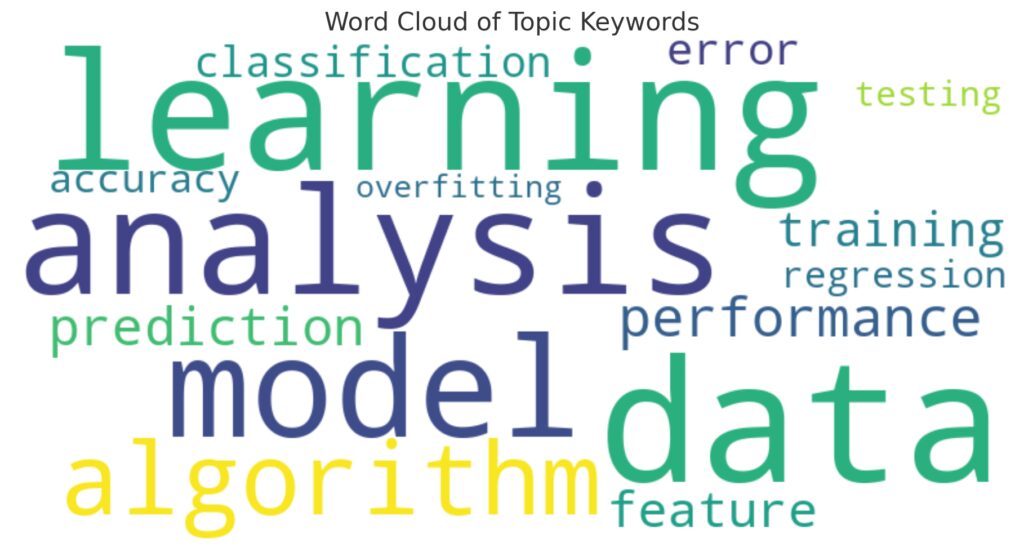

Once you’ve applied LDA, understanding the output is critical for deriving actionable insights. The algorithm gives you a set of topics, each with a list of words that are most associated with that topic. These keywords should give you a good sense of the main theme, but it’s important to dig deeper and interpret the results in context.

For example, if you’re analyzing customer reviews and one of the top topics includes words like “wait time,” “long,” and “hours,” this might indicate dissatisfaction with service delays. From here, you could prioritize changes to improve efficiency and customer experience.
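
Reading off those keywords amounts to sorting each row of the topic-word matrix. The sketch assumes `phi[k][v]` holds P(word v | topic k), as estimated by whatever LDA implementation you used; the helper name and the toy probabilities in the usage example are hypothetical.

```python
def top_words(phi, vocab, n=3):
    """Return the n most probable words for each topic."""
    result = []
    for row in phi:
        ranked = sorted(range(len(vocab)), key=lambda v: row[v], reverse=True)
        result.append([vocab[v] for v in ranked[:n]])
    return result
```

With `phi = [[0.6, 0.3, 0.1, 0.0], [0.0, 0.1, 0.2, 0.7]]` and `vocab = ["wait", "long", "hours", "price"]`, the first topic’s top two words are “wait” and “long,” the kind of pattern that points to service delays.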

Be sure to evaluate the coherence score of your topics. High coherence means the words in each topic make sense together, while low coherence may suggest the need for more preprocessing or a different model configuration.

Is LDA the Best Tool for Short Text Analysis?

While LDA is widely used, it’s not always the best tool for every short text analysis. In some cases, its reliance on word co-occurrence limits its effectiveness, especially with sparse data or slang-heavy texts, like those from social media.

In such cases, neural approaches built on contextual embeddings can outperform LDA. BERT (Bidirectional Encoder Representations from Transformers) is not a topic model by itself, but methods such as BERTopic cluster BERT-style document embeddings to form topics. Because these embeddings capture the context and semantics of text, such methods are often better suited to capturing the nuances in short texts like tweets or headlines.

That said, LDA remains an excellent starting point, especially when you need a simple and interpretable model to get a quick sense of the topics hidden in your data.

Alternatives to LDA for Short Texts

If LDA doesn’t quite fit your needs, several alternative models can work well for short text analysis. One of the most promising is Non-negative Matrix Factorization (NMF), which uses linear algebra to factor the document-term matrix (often TF-IDF weighted) into non-negative document-topic and topic-term matrices. NMF is frequently reported to produce coherent topics on short, noisy data.
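
NMF’s core idea fits in a short sketch using the classic multiplicative update rules, which keep both factors non-negative while reducing the squared reconstruction error. This plain-Python version with hypothetical helper names only shows the mechanics; for real data you would typically use an optimized implementation such as scikit-learn’s `NMF` class on a TF-IDF matrix.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def nmf(X, rank, iterations=200, seed=0, eps=1e-9):
    """Multiplicative updates for X ~ W H with W, H >= 0 (squared-error loss)."""
    rng = random.Random(seed)
    n, m = len(X), len(X[0])
    W = [[rng.random() for _ in range(rank)] for _ in range(n)]  # document-topic weights
    H = [[rng.random() for _ in range(m)] for _ in range(rank)]  # topic-term weights
    for _ in range(iterations):
        # H <- H * (W^T X) / (W^T W H)
        Wt = transpose(W)
        num, den = matmul(Wt, X), matmul(matmul(Wt, W), H)
        H = [[H[k][j] * num[k][j] / (den[k][j] + eps) for j in range(m)] for k in range(rank)]
        # W <- W * (X H^T) / (W H H^T)
        Ht = transpose(H)
        num, den = matmul(X, Ht), matmul(W, matmul(H, Ht))
        W = [[W[i][k] * num[i][k] / (den[i][k] + eps) for k in range(rank)] for i in range(n)]
    return W, H

def reconstruction_error(X, W, H):
    WH = matmul(W, H)
    return sum((X[i][j] - WH[i][j]) ** 2 for i in range(len(X)) for j in range(len(X[0])))
```

Each row of `H` plays the role of a topic: its largest entries mark the terms that define it.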

Another alternative is the Hierarchical Dirichlet Process (HDP), a non-parametric extension of LDA. HDP infers the number of topics from the data instead of requiring you to fix it in advance, which is especially useful when you’re unsure how many topics to aim for in your short texts.

Finally, if you’re analyzing very brief snippets like search queries or chat logs, Latent Semantic Analysis (LSA), which applies a truncated singular value decomposition to the term-document matrix, or Transformer-based deep learning models might offer more accurate topic modeling, especially when combined with word embeddings or transfer learning techniques.

FAQs

What is Latent Dirichlet Allocation (LDA)?

LDA is a machine learning algorithm used to discover hidden topics in a collection of text documents. It works by identifying patterns in word distributions across documents to group related words into topics.

Why does LDA struggle with short texts?

LDA struggles with short texts because they don’t provide enough word occurrences for the algorithm to confidently identify patterns. The lack of word co-occurrence in short texts leads to vague or irrelevant topics.

How can I improve LDA results on short texts?

You can improve LDA’s performance on short texts by preprocessing the data thoroughly, aggregating similar texts into longer documents, and using TF-IDF scores to filter out uninformative words before training.

What modifications of LDA are suitable for short texts?

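Modifications like the Biterm Topic Model (BTM) and the Dirichlet Multinomial Mixture (DMM) have been developed specifically to handle short texts, by modeling word pairs or assuming a single topic per document. Phrase-aware extensions such as Topical N-Grams, which model multi-word phrases, can also help, although they were not designed specifically for short texts.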

What is the best way to visualize LDA results?

Using tools like pyLDAvis is ideal for visualizing LDA results. It provides interactive visualizations where you can explore the relationships between topics and key terms, making it easier to interpret the discovered topics.

How do I choose the right number of topics in LDA for short text?

Experimentation is key. Start with a small number of topics and gradually increase, using metrics like perplexity or coherence scores to ensure your model produces meaningful, interpretable results.

Can I combine LDA with other NLP techniques?

Yes, combining LDA with techniques like word embeddings, Named Entity Recognition (NER), or sentiment analysis can provide richer, more insightful results, especially for short texts.

What are the alternatives to LDA for short texts?

Alternatives like Non-negative Matrix Factorization (NMF), Hierarchical Dirichlet Process (HDP), and embedding-based neural approaches (such as BERTopic, which builds on BERT) may provide better results depending on the nature of your short texts and desired outcome.

Resources

1. Gensim

A powerful Python library for topic modeling, document similarity analysis, and natural language processing. It’s widely used for running LDA on large and small text datasets, including short texts.

Learn more about Gensim

2. pyLDAvis

This library is ideal for visualizing topics generated by LDA. It provides interactive visualizations that help you understand the relationship between topics and the words associated with them.

Check out pyLDAvis on GitHub

3. Biterm Topic Model (BTM)

BTM is a modified LDA approach designed for short text data. It models word co-occurrence directly, making it better suited for tweets, reviews, and other short snippets.

Read about BTM

4. Natural Language Toolkit (NLTK)

An extensive suite of libraries for handling and preprocessing text. It’s commonly used alongside LDA to clean, tokenize, and preprocess text data before topic modeling.

Explore NLTK

5. Introduction to LDA for Topic Modeling

This tutorial provides a detailed explanation of how LDA works, including step-by-step examples and visual aids. It’s great for those new to LDA and looking to understand its fundamentals.

Read the tutorial