The Importance of Handling Categorical Data: Why CatBoost Excels Where Others Struggle

Categorical data poses unique challenges. This data, which includes labels like gender, product types, or countries, is often critical to building predictive models.

Yet many algorithms struggle to handle it efficiently. CatBoost, an open-source gradient boosting library developed by Yandex, stands out as one that excels where others falter. It encodes categorical features internally, which often improves accuracy and cuts down on preprocessing.

What Makes Categorical Data So Challenging?

Categorical data isn’t just numbers. It represents discrete labels that, unless they are ordinal (like “low,” “medium,” and “high”), have no natural order and can’t be added or averaged the way numerical data can. For example, how do you assign a number to “red,” “blue,” and “green” without implying an order or distance that doesn’t exist?

Most machine learning models, such as linear regression or the decision trees in common libraries, expect numerical inputs. Converting categorical data into numbers without losing valuable information therefore becomes a critical task. Common methods like one-hot encoding or label encoding often lead to inefficiencies or degraded model performance.

One-hot encoding, for instance, can blow up the feature space, especially when you have categories with a large number of unique values (like countries or ZIP codes). Label encoding, on the other hand, can introduce unintended ordinal relationships between categories.
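The two encodings are easy to compare on a toy column. The sketch below uses only the Python standard library; the color values and the 40,000 ZIP-code figure are made up for illustration.

```python
# Toy column of colors; the values here are illustrative only.
colors = ["red", "blue", "green", "blue", "red"]

# Label encoding: map each category to an arbitrary integer.
categories = sorted(set(colors))  # ['blue', 'green', 'red']
label_map = {cat: i for i, cat in enumerate(categories)}
label_encoded = [label_map[c] for c in colors]

# One-hot encoding: one binary column per distinct category.
one_hot = [[1 if c == cat else 0 for cat in categories] for c in colors]

print(label_encoded)  # [2, 0, 1, 0, 2]
print(one_hot[0])     # [0, 0, 1]

# The integer codes imply blue < green < red, an order the data never had.
# The one-hot width equals the number of distinct values, so a column with
# 40,000 distinct ZIP codes would become 40,000 binary columns.
zip_codes = [f"{z:05d}" for z in range(40_000)]
print(len(set(zip_codes)))  # 40000
```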

Traditional Methods and Their Struggles with Categorical Data

When working with traditional machine learning algorithms, handling categorical data requires careful preprocessing. Random Forest and XGBoost models are typically fed categories that have already been converted into numerical form by an encoding step.

- One-hot encoding creates binary columns for each category. While this can work, it leads to high-dimensional datasets, making the model complex and slower to train.

- Label encoding assigns a unique number to each category. This approach works well for ordinal data, but for non-ordinal data, it can introduce unintended biases. For instance, the model may treat the difference between “category 1” and “category 3” as more significant than between “category 1” and “category 2,” even though they may not have any numerical relationship.

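- Target encoding tries to resolve this by replacing each category with the mean target value observed for it. While it’s a clever technique, it comes with risks of overfitting and target leakage: if a row’s own label contributes to the value that encodes it, the feature partly reveals the answer, which is especially damaging for categories with only a few rows. Computing the encoding on data that includes the validation or test set leaks information in the same way.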

These limitations become evident in models that don’t handle categorical data natively. They either add complexity or risk introducing biases that skew results.
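The leakage risk of target encoding shows up clearly on a category that appears only once. The sketch below is a plain-Python illustration with hypothetical city data; the leave-one-out variant and its `prior` of 0.5 are one common mitigation chosen for the example, not the method any particular library uses.

```python
from collections import defaultdict

# Hypothetical toy data: (city, label). "Oslo" appears only once.
rows = [("Paris", 1), ("Paris", 0), ("Paris", 1),
        ("Rome", 0), ("Rome", 0), ("Oslo", 1)]

def category_totals(rows):
    """Sum of labels and row count per category."""
    sums, counts = defaultdict(float), defaultdict(int)
    for cat, y in rows:
        sums[cat] += y
        counts[cat] += 1
    return sums, counts

def naive_target_encode(rows):
    """Replace each category with the mean label over ALL rows of that category."""
    sums, counts = category_totals(rows)
    return [sums[cat] / counts[cat] for cat, _ in rows]

def leave_one_out_encode(rows, prior=0.5):
    """Mean label over the OTHER rows of the category; fall back to a prior."""
    sums, counts = category_totals(rows)
    encoded = []
    for cat, y in rows:
        n_other = counts[cat] - 1
        encoded.append((sums[cat] - y) / n_other if n_other else prior)
    return encoded

naive = naive_target_encode(rows)
loo = leave_one_out_encode(rows)

# For the single "Oslo" row the naive encoding is simply its own label.
print(naive[-1])  # 1.0
print(loo[-1])    # 0.5
```

With the naive version, a model can read the label of a rare category straight off the encoded feature, which is the overfitting pattern described above.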

Enter CatBoost: The Categorical Data Whisperer

CatBoost (short for Category Boosting) was designed with categorical data at its core. Instead of relying on external preprocessing methods like one-hot or label encoding, it handles categorical features internally, ensuring that the model captures relationships between categories effectively.

CatBoost’s approach is built on two primary advantages:

- Efficient Handling of Categorical Features:

CatBoost encodes categorical features with ordered target statistics: the rows are placed in a random order, and each row’s category is replaced by a target statistic computed only from the rows that precede it. Because a row’s own label never contributes to its encoding, the risk of target leakage and overfitting is reduced. A related technique, ordered boosting, applies the same idea to the boosting steps themselves. By learning from previously observed data and encoding categories within the model, CatBoost avoids the common pitfalls of one-hot and label encoding.

- Handling High Cardinality Features:

One of CatBoost’s strengths lies in its ability to manage high-cardinality categorical features—those with many unique values, like ZIP codes or product IDs. Traditional methods would expand these features into a massive number of columns, but CatBoost efficiently encodes them, reducing dimensionality while preserving the informative power of the data.
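The idea of encoding each row only from previously observed rows can be sketched in a few lines. This is a simplified standard-library illustration, not CatBoost’s implementation: the `prior` and `prior_weight` values are assumptions chosen for the example, and the real library uses several permutations and further refinements.

```python
import random
from collections import defaultdict

def ordered_target_stats(categories, targets, prior=0.5, prior_weight=1.0, seed=0):
    """Encode each row using only rows that precede it in a random permutation.

    encoding = (sum of earlier targets in the category + prior_weight * prior)
               / (count of earlier rows in the category + prior_weight)
    """
    order = list(range(len(categories)))
    random.Random(seed).shuffle(order)

    sums, counts = defaultdict(float), defaultdict(int)
    encoded = [0.0] * len(categories)
    for i in order:
        cat = categories[i]
        encoded[i] = (sums[cat] + prior_weight * prior) / (counts[cat] + prior_weight)
        # Only now does row i's own target become visible to later rows.
        sums[cat] += targets[i]
        counts[cat] += 1
    return encoded

cities = ["Paris", "Paris", "Rome", "Paris", "Rome", "Oslo"]
labels = [1, 0, 0, 1, 0, 1]
encoded = ordered_target_stats(cities, labels)
print(encoded)
```

Each category collapses into a single numeric column no matter how many distinct values it has, and a category seen for the first time simply receives the prior.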

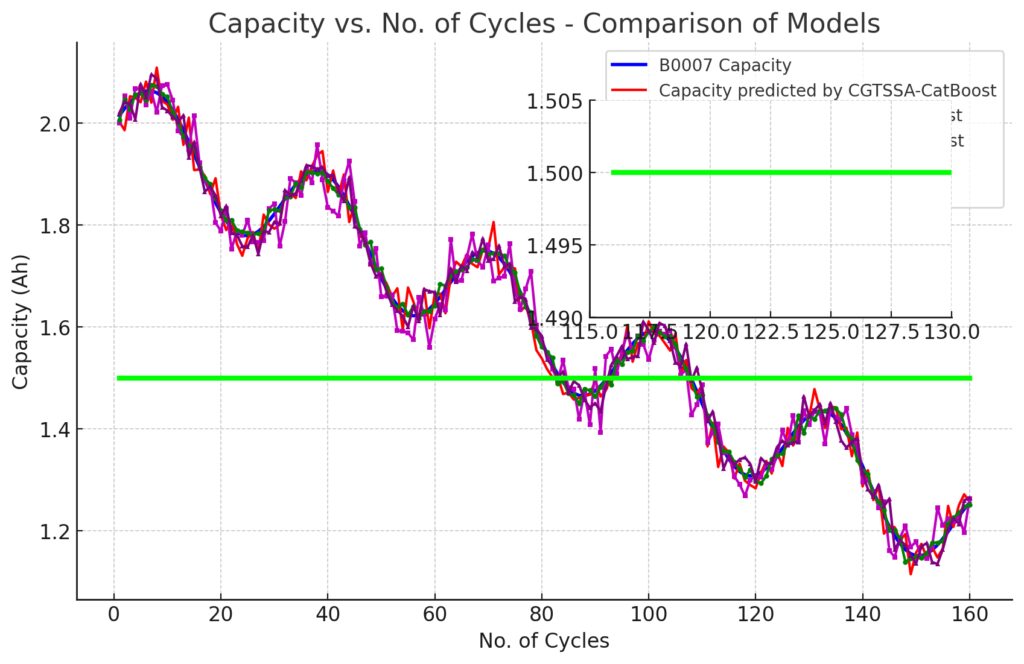

The Benefits of CatBoost for Categorical Data

Accuracy Without Overfitting

One of CatBoost’s core innovations is reducing overfitting when handling categorical data. Models that rely on plain target encoding are prone to overfitting, especially when categories have few examples. CatBoost mitigates this by computing each row’s encoding only from previously seen examples, so the row’s own label does not leak into its features and the model generalizes better.

No Need for Extensive Preprocessing

Unlike many other algorithms, CatBoost removes most of the need for manually encoding categorical features. This reduces the time spent on converting categorical data and prevents the common pitfalls of manually encoding categories. With CatBoost, you declare which columns are categorical and pass them in as raw values, and the algorithm does the rest, reducing the chance for human error in the preprocessing phase.
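In the Python package, this usually comes down to the `cat_features` argument of `fit`. The sketch below assumes the `catboost` package is installed; the rows, the column meanings, and the helper name `fit_with_raw_categories` are hypothetical, and `iterations=50` is an arbitrary small value for the example.

```python
# Hypothetical toy rows: [city, plan, monthly_spend]. Strings are left as-is.
rows = [
    ["Paris", "premium", 34.0],
    ["Rome", "basic", 12.5],
    ["Paris", "basic", 18.0],
    ["Oslo", "premium", 40.0],
    ["Rome", "basic", 9.0],
    ["Oslo", "basic", 15.5],
]
labels = [1, 0, 0, 1, 0, 0]

# Treat every string-valued column as categorical.
cat_feature_indices = [j for j, value in enumerate(rows[0]) if isinstance(value, str)]
print(cat_feature_indices)  # [0, 1]

def fit_with_raw_categories(rows, labels, cat_feature_indices):
    """Train a small CatBoost classifier on rows that still contain raw strings."""
    # Imported lazily so the data preparation above runs without CatBoost installed.
    from catboost import CatBoostClassifier

    model = CatBoostClassifier(iterations=50, verbose=0)
    model.fit(rows, labels, cat_features=cat_feature_indices)
    return model
```

Calling `fit_with_raw_categories(rows, labels, cat_feature_indices)` returns a fitted model whose `predict` method accepts rows in the same raw format, with no separate encoding step in between.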

Speed and Efficiency

Another reason why CatBoost excels is that it avoids the cost of wide, sparse inputs. One-hot encoded data can bog down training, and manual encoding pipelines take time to build and run. Because CatBoost handles categorical features internally, the end-to-end workflow is often faster, even with large datasets, which matters in real-world applications where time is a critical factor.

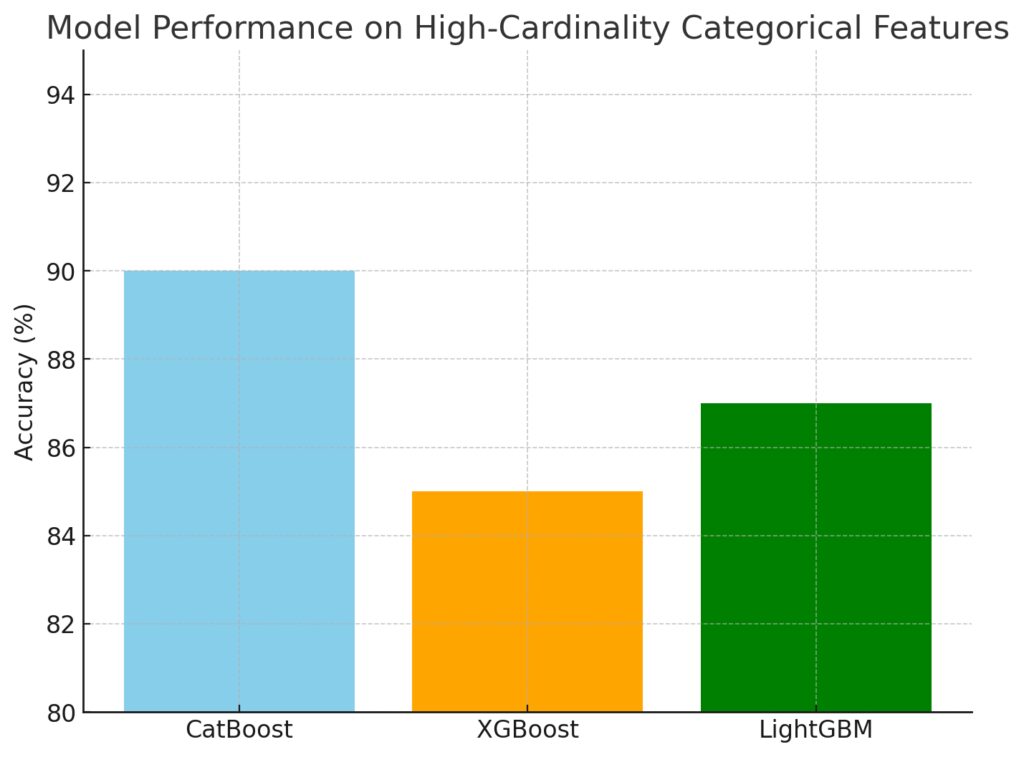

Comparing CatBoost to Other Algorithms

CatBoost has an advantage over models like XGBoost or LightGBM when it comes to categorical data. Both of these libraries are highly popular, but categorical variables have traditionally required additional work. With XGBoost, which long required purely numerical input and added native categorical support only in more recent releases, you might rely on target encoding or one-hot encoding, which can slow down model training and increase the risk of overfitting.

LightGBM handles categorical features natively by searching for splits over groups of categories, but it generally expects them to be integer-coded first, and it can overfit on high-cardinality features, for which its own documentation suggests falling back to a numeric treatment. In contrast, CatBoost accepts raw category values and encodes them with ordered target statistics, which tends to reduce the need for external tuning.

Real-World Applications of CatBoost

Several industries have started adopting CatBoost because of its superior ability to handle categorical variables. In finance, where categorical data such as transaction types, customer categories, and risk ratings are prevalent, CatBoost helps build predictive models that are more accurate without extensive preprocessing. In retail, where customer demographics and product categories are key data points, CatBoost shines in recommendation systems, pricing strategies, and inventory management models.

Moreover, in fields like healthcare and insurance, where categorical data like medical conditions or policy types are abundant, CatBoost enables practitioners to build models that better predict outcomes or assess risks with higher confidence.

Conclusion: Why CatBoost Stands Out

Handling categorical data effectively is crucial for building accurate, reliable machine learning models. Where algorithms like XGBoost or Random Forest have traditionally relied on external encoding and can struggle with high-cardinality features, CatBoost excels by natively managing categorical data without sacrificing performance or accuracy.

With its efficient encoding methods, built-in handling of high-cardinality categories, and ability to avoid common pitfalls like overfitting and data leakage, CatBoost offers a seamless solution to one of the biggest challenges in machine learning. As businesses and industries continue to deal with increasingly complex datasets, CatBoost is likely to become the go-to algorithm for categorical data, outperforming competitors where it matters most.

FAQs

Why do other algorithms struggle with categorical data?

Many implementations, such as Random Forest in common libraries and, historically, XGBoost, require categorical data to be converted into numerical form before it can be used. Techniques like one-hot encoding can lead to bloated feature spaces and slower training, while label encoding can introduce a spurious order between categories. This creates inefficiencies, especially with high-cardinality features.

How does CatBoost avoid overfitting when handling categorical data?

CatBoost combines two related techniques. Ordered target statistics encode each row’s category using only the labels of rows that precede it in a random permutation, and ordered boosting computes each row’s gradient with a model that was not trained on that row. In both cases a row’s own label is kept out of the values used to fit it, which prevents the algorithm from learning patterns that don’t generalize well. This is particularly important for categorical data, where overfitting can be a big problem.

What makes CatBoost better than other algorithms for high-cardinality categorical features?

CatBoost handles high-cardinality features more efficiently by encoding them internally in a way that preserves their informative value without drastically increasing the feature space. With libraries that expect pre-encoded input, such features call for extensive preprocessing, which can introduce noise or lead to model inefficiencies.

Can CatBoost work without much preprocessing?

Yes, this is one of CatBoost’s standout features. While other models require manual preprocessing like one-hot encoding or target encoding, CatBoost is designed to work directly with raw categorical data. This saves time and reduces the chances of errors during the data preparation phase.

How does CatBoost handle real-world categorical data applications?

CatBoost excels in industries like finance, healthcare, and e-commerce, where categorical data such as customer demographics, product categories, or transaction types play a key role. Its ability to handle these features without bloating the feature space makes it ideal for real-time decision-making systems like recommendation engines or fraud detection models.

Does CatBoost only work well with categorical data?

While CatBoost is optimized for categorical data, it is a highly capable gradient boosting algorithm that performs well on both numerical and categorical datasets. It’s often used in a variety of applications, not just those that require categorical data handling.

What are the performance advantages of CatBoost compared to XGBoost or LightGBM?

CatBoost often provides better accuracy when handling categorical data, particularly for datasets with high-cardinality features, although accuracy and training time vary from one dataset to another. Compared to XGBoost or LightGBM, CatBoost requires less preprocessing, making it more user-friendly while also reducing the risk of introducing bias through manual encoding.

How does CatBoost’s ordered boosting differ from traditional boosting methods?

Traditional boosting algorithms compute each row’s residual with a model that was trained on that same row, so the residuals are biased toward the training data, which can lead to overfitting. In CatBoost’s ordered boosting, the rows are arranged in a random permutation and the residual for each row is computed with a model trained only on the rows that precede it, making the procedure more robust and less likely to fit to noise in the data.
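The ordering principle can be shown with a deliberately tiny stand-in for a model. In the sketch below the “model” is just the mean of the targets it has seen, and the `prior` of 0.0 used before any row has been seen is an assumption; it illustrates the ordering idea only and is far simpler than what CatBoost actually does with trees.

```python
import random

def residuals_full(targets):
    """Residuals against a model (here: the mean) fitted on ALL rows, own row included."""
    mean_all = sum(targets) / len(targets)
    return [y - mean_all for y in targets]

def residuals_ordered(targets, prior=0.0, seed=0):
    """Residuals against a model fitted only on rows earlier in a random permutation."""
    order = list(range(len(targets)))
    random.Random(seed).shuffle(order)
    residuals = [0.0] * len(targets)
    running_sum, seen = 0.0, 0
    for i in order:
        prediction = running_sum / seen if seen else prior
        residuals[i] = targets[i] - prediction  # prediction never saw targets[i]
        running_sum += targets[i]
        seen += 1
    return residuals

targets = [3.0, 1.0, 4.0, 1.0, 5.0]
full = residuals_full(targets)
ordered = residuals_ordered(targets)
print(full)
print(ordered)
```

In `residuals_full` every target influences its own prediction, while in `residuals_ordered` each prediction is made before the row’s target is added to the running statistics.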

Why is handling high-dimensional data a big issue for machine learning models?

High-dimensional data, especially from one-hot encoding, can drastically increase the number of features in a dataset. This not only increases computational complexity but also makes models more prone to overfitting. CatBoost avoids these issues by managing categorical data internally without needing to expand the feature space unnecessarily.

What kind of datasets benefit the most from using CatBoost?

Datasets with a large number of categorical variables, especially those with high-cardinality features, benefit the most from using CatBoost. These types of datasets are common in industries like retail, finance, and healthcare, where categorical features play an important role in model predictions.

Can CatBoost handle missing values in categorical data?

Partly. For numerical features, CatBoost processes missing values internally, by default treating them as smaller than any observed value, so users don’t have to impute them manually. Categorical features get no special missing-value treatment: a missing category should be passed as its own string value (for example “missing”), after which CatBoost handles it like any other category.
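As a precaution, and assuming a version of the Python package that expects categorical values to be strings or integers, missing entries in categorical columns can be turned into an explicit category before training. The helper name and the `"missing"` token below are hypothetical; numerical columns are left untouched.

```python
MISSING_TOKEN = "missing"  # hypothetical placeholder category

def fill_missing_categories(rows, cat_feature_indices, token=MISSING_TOKEN):
    """Return a copy of rows with None/NaN in categorical columns replaced by a token."""
    cleaned = []
    for row in rows:
        new_row = list(row)
        for j in cat_feature_indices:
            value = new_row[j]
            is_nan = isinstance(value, float) and value != value  # NaN != NaN
            if value is None or is_nan:
                new_row[j] = token
        cleaned.append(new_row)
    return cleaned

rows = [["Paris", 34.0], [None, 12.5], [float("nan"), 18.0]]
cleaned = fill_missing_categories(rows, cat_feature_indices=[0])
print(cleaned)  # [['Paris', 34.0], ['missing', 12.5], ['missing', 18.0]]
```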

Is CatBoost open-source?

Yes, CatBoost is an open-source project developed by Yandex. It’s freely available for public use and has a growing community of users. The algorithm is supported across multiple platforms and can be easily integrated into popular machine learning environments like Python and R.

How does CatBoost handle imbalanced data?

CatBoost does not rebalance data automatically, but it provides parameters for weighting classes, such as class_weights, auto_class_weights, and scale_pos_weight for binary problems, so that errors on the rare class count for more during training. This makes it a strong contender for applications where imbalanced data is a concern, such as fraud detection or rare event prediction.
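Class weighting is simple to reason about by hand. The sketch below computes weights as the size of the largest class divided by the size of each class, which is, to my understanding, close to what the `auto_class_weights="Balanced"` option does; the helper name and the 95/5 split are made up for the example.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by (size of the largest class) / (size of this class)."""
    counts = Counter(labels)
    largest = max(counts.values())
    return {cls: largest / n for cls, n in counts.items()}

# Hypothetical fraud-style labels: 95 negatives, 5 positives.
labels = [0] * 95 + [1] * 5
weights = balanced_class_weights(labels)
print(weights)  # {0: 1.0, 1: 19.0}
```

A dictionary like this can be passed to a classifier through the `class_weights` parameter, so that each rare-class row counts as much as 19 majority-class rows in the loss.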

What are the disadvantages of using CatBoost?

While CatBoost excels with categorical data, it can sometimes be slower to train than algorithms like LightGBM, particularly on purely numerical datasets. Additionally, CatBoost’s default hyperparameters are chosen to work well out of the box, but they won’t suit every problem, and some users may find it less flexible to tune than other algorithms.

These FAQs cover the most common questions around CatBoost and its superior handling of categorical data, providing a clearer understanding of its advantages and limitations compared to other algorithms.

Resources for Learning More About CatBoost and Categorical Data Handling

If you’re eager to dive deeper into CatBoost and how it handles categorical data, here are some valuable resources that can enhance your understanding of this powerful algorithm:

Official CatBoost Documentation

- The official documentation offers a comprehensive guide on how to use CatBoost, including installation, parameter tuning, and categorical data handling.

- CatBoost Documentation

CatBoost GitHub Repository

- The open-source repository for CatBoost contains the source code, example notebooks, and a community forum for users to collaborate and troubleshoot.

- CatBoost GitHub

Kaggle: Handling Categorical Data in CatBoost

- Kaggle provides a rich library of tutorials and notebooks that demonstrate how to use CatBoost with real-world datasets. This is a great place to see CatBoost in action with categorical data.

- Kaggle CatBoost Notebooks

Research Paper: CatBoost – Machine Learning Algorithm for Categorical Data

- This paper, published by the creators of CatBoost, delves into the technical details of how the algorithm processes categorical data, as well as its advantages over traditional methods.

- Read the Research Paper