Convolutional Neural Networks (CNNs) have come a long way since their introduction, revolutionizing the field of computer vision and branching out to solve more complex tasks in different domains.

What started as a solution to image recognition has grown into a powerful tool for processing a wide variety of data types. Let’s dive into the fascinating evolution of CNNs and how they’ve expanded beyond their original use cases.

The Early Days of CNNs: Focus on Image Recognition

The Birth of CNNs: A Breakthrough in Visual Processing

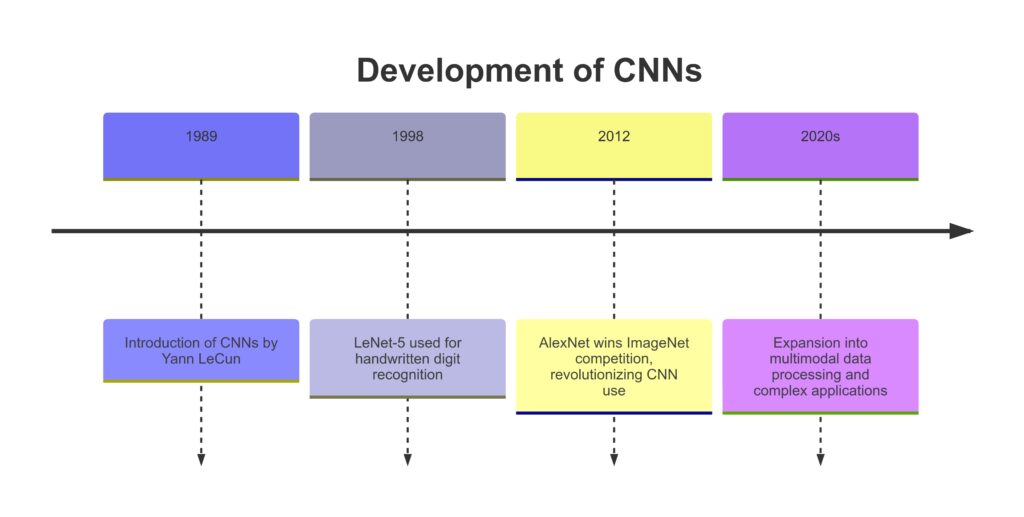

CNNs were introduced by Yann LeCun and colleagues in the late 1980s as a way to automate image classification, starting with handwritten digits. Their ability to learn to detect patterns and features directly from images later made them well suited to applications like facial recognition and object detection. Early CNNs also found practical use in postal services, where they helped automate the reading of handwritten digits such as zip codes on mail.

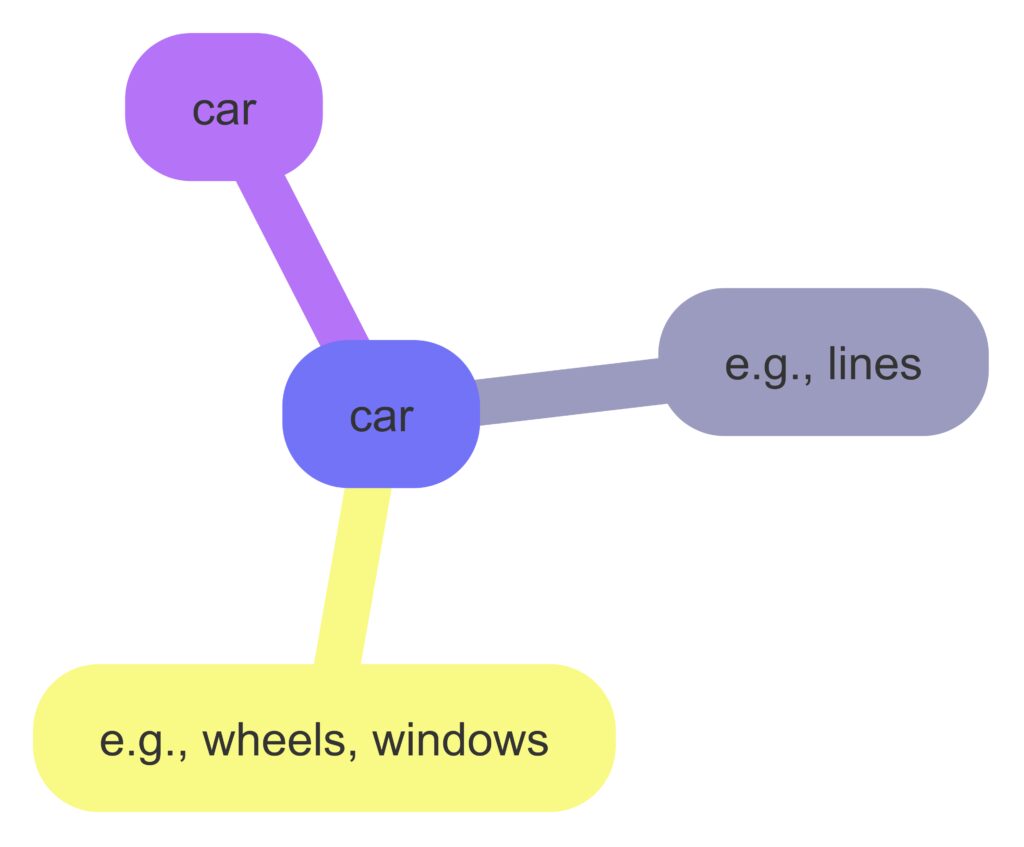

How CNNs “See” Images

CNNs function through a series of convolutional layers, each specializing in recognizing specific features. For example:

- Early layers might detect basic edges.

- Intermediate layers focus on shapes and textures.

- Deeper layers identify complex patterns, like faces or objects.

This hierarchical structure allows CNNs to process images efficiently, making them especially effective for tasks where identifying specific visual patterns is key.
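
To make the idea of a feature-detecting layer concrete, here is a minimal plain-Python sketch of the core operation: sliding a small filter over an image and summing element-wise products. The 3x3 vertical-edge filter is hand-set for illustration; in a real CNN, filter values are learned during training, although first-layer filters often end up resembling edge detectors like this one.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation, as most deep learning libraries compute it):
    slide the kernel over every position where it fits and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hand-set filter that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 4x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(4)]

for row in conv2d(image, vertical_edge):
    print(row)
# [3, 3, 0]
# [3, 3, 0]
```

The filter produces large values only in windows that straddle the dark-to-bright boundary and zero over the uniformly bright region. Deeper layers apply the same operation to these feature maps rather than to raw pixels, which is how the hierarchy of edges, shapes, and objects builds up.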

LeNet-5: The First CNN to Gain Popularity

In 1998, LeCun and his colleagues introduced LeNet-5, a CNN architecture designed for handwritten character recognition. It became one of the first successful implementations of CNNs and was widely adopted in optical character recognition (OCR) systems. LeNet-5’s accuracy on handwritten digit recognition, competitive with the best methods of its time, demonstrated the enormous potential of CNNs in visual processing.
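
The layer sizes of LeNet-5 follow from a simple formula: a layer with kernel size K, stride S, and padding P maps a W-wide input to floor((W - K + 2P) / S) + 1 outputs. The sketch below traces a 32x32 input through LeNet-5's first four layers. It treats the subsampling layers as plain 2x2, stride-2 pooling, which is a simplification of the original design.

```python
def output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1

# (name, kernel size, stride, output channels) for LeNet-5's convolution/subsampling stages.
LENET5_FEATURE_LAYERS = [
    ("C1 convolution 5x5", 5, 1, 6),
    ("S2 subsampling 2x2", 2, 2, 6),
    ("C3 convolution 5x5", 5, 1, 16),
    ("S4 subsampling 2x2", 2, 2, 16),
]

def trace_shapes(input_size=32):
    """Return (name, height, width, channels) after each layer, starting from a square input."""
    size, shapes = input_size, []
    for name, kernel, stride, channels in LENET5_FEATURE_LAYERS:
        size = output_size(size, kernel, stride)
        shapes.append((name, size, size, channels))
    return shapes

shapes = trace_shapes()
for name, h, w, c in shapes:
    print(f"{name}: {h}x{w}x{c}")
# C1 convolution 5x5: 28x28x6
# S2 subsampling 2x2: 14x14x6
# C3 convolution 5x5: 10x10x16
# S4 subsampling 2x2: 5x5x16

_, h, w, c = shapes[-1]
print("values passed to the final layers:", h * w * c)  # 400
```

Those 400 values then feed LeNet-5's final layers of 120, 84, and 10 units, the last producing one score per digit class.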

Expansion Beyond Images: CNNs Meet Complex Data

CNNs in Natural Language Processing (NLP)

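Although CNNs were originally built for image data, their ability to extract local patterns also carries over to natural language processing. Text is first converted into a sequence of word embeddings (one numeric vector per word), and filters slide over windows of adjacent words, much as 2D filters slide over neighboring pixels. This lets CNNs detect short phrases and local relationships between words, making them effective for: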

- Text classification

- Sentiment analysis

- Named entity recognition

CNNs can process text quickly because the filter computations at different positions are independent and can run in parallel, unlike Recurrent Neural Networks (RNNs), which process tokens one after another. As a result, CNNs have been widely used for tasks like identifying key phrases in a document or determining the sentiment of customer reviews.
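
A minimal sketch of the idea, assuming toy 3-dimensional word embeddings and a single hand-set filter that spans two consecutive words (real models learn both the embeddings and the filters, and use many filters of several widths). Each window's score is computed independently, which is what makes the computation easy to parallelize, and max-over-time pooling keeps the strongest match wherever it occurs in the sentence.

```python
# Toy word embeddings (hypothetical values chosen for illustration).
EMBEDDINGS = {
    "not":   [0.0, 1.0, 0.0],
    "good":  [1.0, 0.0, 0.0],
    "bad":   [-1.0, 0.0, 0.0],
    "movie": [0.0, 0.0, 1.0],
    "a":     [0.0, 0.0, 0.0],
}

# Hand-set filter of width 2 (one weight row per word in the window) plus a bias,
# tuned so that it fires only on the phrase "not good".
NOT_GOOD_FILTER = [
    [0.0, 1.0, 0.0],  # weights for the first word of the window
    [1.0, 0.0, 0.0],  # weights for the second word of the window
]
NOT_GOOD_BIAS = -1.0

def conv1d_text(tokens, filt, bias):
    """Slide the filter over every window of consecutive words; apply ReLU to each score."""
    vectors = [EMBEDDINGS[t] for t in tokens]
    width = len(filt)
    scores = []
    for start in range(len(vectors) - width + 1):
        window = vectors[start:start + width]
        dot = sum(w * x for weights, vec in zip(filt, window) for w, x in zip(weights, vec))
        scores.append(max(0.0, dot + bias))
    return scores

def max_over_time(scores):
    """Keep the strongest response anywhere in the sentence (a position-independent feature)."""
    return max(scores)

for sentence in ["a good movie", "not good movie", "movie not good", "not bad movie"]:
    feature = max_over_time(conv1d_text(sentence.split(), NOT_GOOD_FILTER, NOT_GOOD_BIAS))
    print(sentence, "->", feature)
# a good movie -> 0.0
# not good movie -> 1.0
# movie not good -> 1.0
# not bad movie -> 0.0
```

The filter responds to "not good" at the start or the end of the sentence alike, which is the property that makes this kind of feature useful for sentiment classification.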

Audio and Speech Recognition: CNNs Listen to Sound

CNNs have also been applied to speech recognition by analyzing spectrograms, which are time-frequency representations of audio signals that can be treated like images. Much as they identify patterns in images, CNNs can pick up features in a spectrogram that correspond to different speech sounds or other audio signals.
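
The sketch below shows how a raw waveform becomes the 2D array a CNN consumes: split the signal into overlapping frames, apply a window, and take the magnitude of each frame's discrete Fourier transform. It uses a naive DFT for clarity; practical pipelines use an FFT and often a mel-frequency scale, and the sample rate, frame size, and test tone here are arbitrary choices.

```python
import cmath
import math

def spectrogram(signal, frame_size, hop):
    """Magnitude spectrogram: rows are time frames, columns are frequency bins 0 .. frame_size // 2."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1)) for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        # Hann window tapers the frame edges to reduce spectral leakage.
        chunk = [x * w for x, w in zip(signal[start:start + frame_size], window)]
        # Naive DFT, keeping only the non-negative frequencies.
        frames.append([
            abs(sum(x * cmath.exp(-2j * math.pi * k * n / frame_size) for n, x in enumerate(chunk)))
            for k in range(frame_size // 2 + 1)
        ])
    return frames

sample_rate, frame_size, hop = 8000, 256, 128
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(2048)]  # 1 kHz test tone

spec = spectrogram(tone, frame_size, hop)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), "frames x", len(spec[0]), "bins; peak at", peak_bin * sample_rate / frame_size, "Hz")
# 15 frames x 129 bins; peak at 1000.0 Hz
```

A CNN then treats `spec` like a single-channel image, with time along one axis and frequency along the other.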

In real-world applications, CNNs play a vital role in:

- Speech-to-text systems

- Voice assistants like Alexa and Google Assistant

- Speaker identification and noise cancellation systems

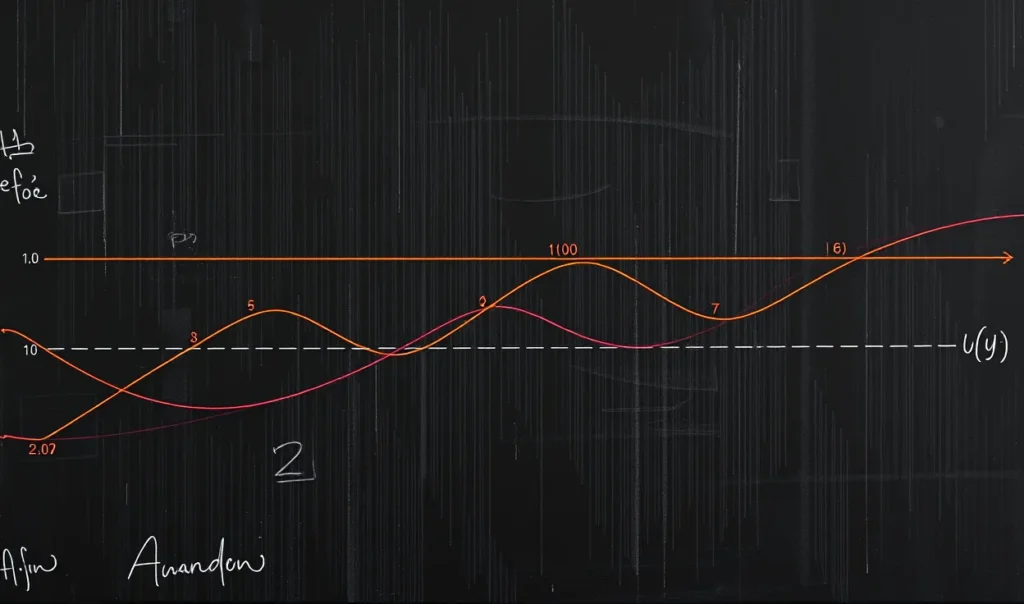

Time Series Data: Forecasting Trends

CNNs have also been adapted to process time series data, making them effective for predicting trends in sequential datasets such as financial markets, weather data, or IoT sensor readings. By learning patterns in historical data, CNNs can forecast future outcomes, which has proven especially useful for:

- Stock market predictions

- Anomaly detection in transactions

- Energy consumption forecasting

This ability to model complex, non-linear relationships in time series data makes CNNs valuable in industries like finance and energy, where accurate forecasting can lead to better decision-making.
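
One common way to apply convolutions to forecasting is a causal 1D convolution, where the output at time t only sees the current and earlier values (the building block of temporal convolutional networks). The minimal sketch below hand-sets a two-tap kernel that performs linear extrapolation; in a trained model the kernel weights would be learned from historical data, and many such filters would be stacked with nonlinearities.

```python
def causal_conv1d(series, kernel, dilation=1):
    """Causal 1D convolution: kernel[j] weights the value j * dilation steps in the past.

    Positions before the start of the series count as zero (implicit left padding),
    so output[t] never depends on future values.
    """
    out = []
    for t in range(len(series)):
        total = 0.0
        for j, w in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:
                total += w * series[idx]
        out.append(total)
    return out

# Hand-set kernel for linear extrapolation, x[t+1] ~ 2*x[t] - x[t-1];
# a trained model would learn its kernel weights from historical data.
extrapolate = [2.0, -1.0]

history = [10.0, 12.0, 14.0, 16.0, 18.0]
forecasts = causal_conv1d(history, extrapolate)  # forecasts[t] predicts history[t + 1]
print("forecast for the next step:", forecasts[-1])  # 20.0
```

The first output is distorted by the zero padding, but every later one matches the next value in the series. Stacking layers with growing dilation (1, 2, 4, ...) lets the receptive field cover long histories with only a few layers.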

Medical Imaging: Transforming Healthcare Diagnostics

One of the most impactful applications of CNNs today is medical imaging. CNNs can analyze medical images such as X-rays, MRIs, or CT scans, and on some narrowly defined tasks they have reached accuracy comparable to that of human specialists, making them valuable for:

- Detecting tumors or abnormalities

- Classifying diseases based on radiological data

- Automating diagnostic workflows

By quickly and accurately analyzing medical data, CNNs assist doctors in diagnosing conditions more efficiently, reducing the likelihood of human error, and speeding up treatment planning.

Recent Advancements: CNNs in Multimodal Data

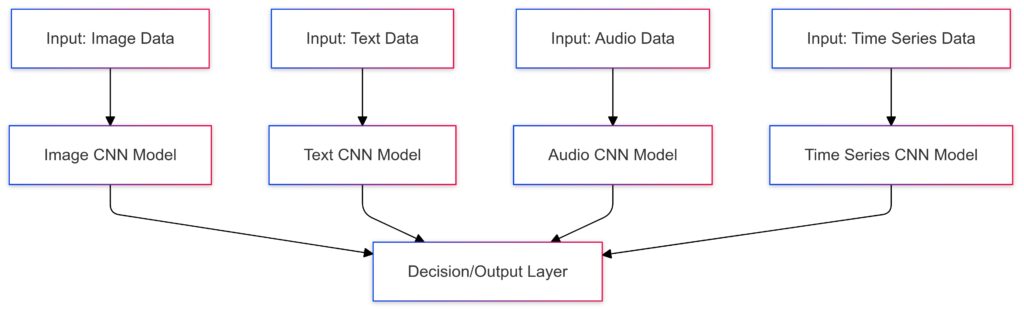

CNNs and Multimodal Learning: Integrating Various Data Types

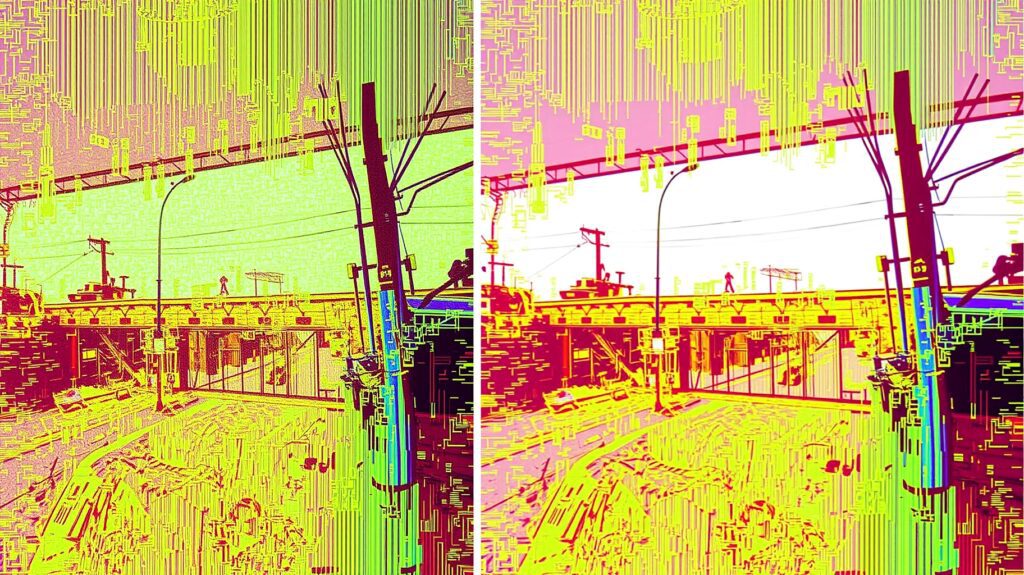

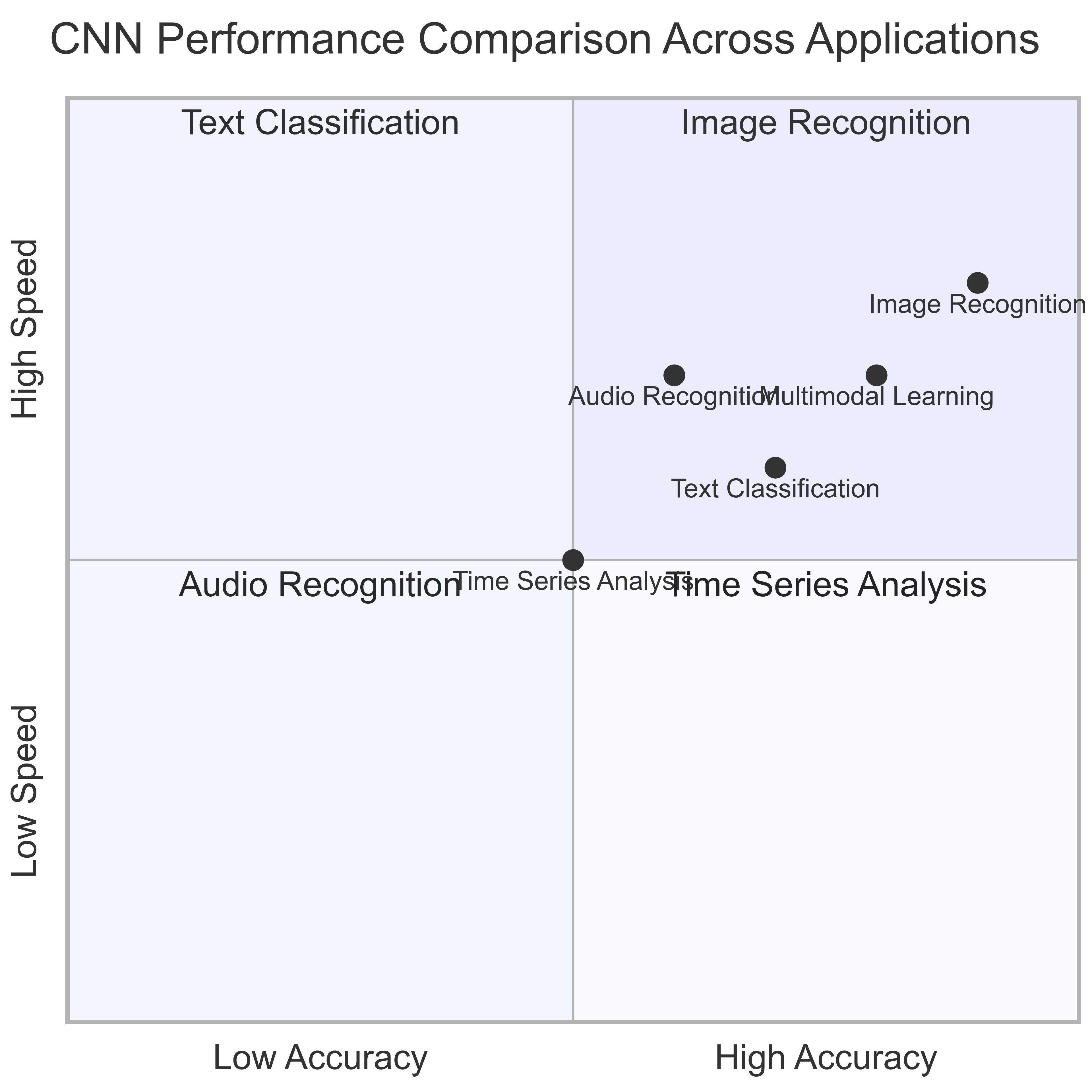

Performance comparison of CNNs across different domains, highlighting their adaptability to various data types.

One recent development is the use of CNNs in systems that integrate multiple types of data, such as text, images, and audio, into a single model. Known as multimodal learning, this approach leverages CNNs’ versatility to process different forms of input data simultaneously, typically by extracting features from each input and combining them.

For example, self-driving cars use CNNs to process both visual data from cameras and sensor data from radar systems. Similarly, multimodal sentiment analysis can combine video, audio, and text data to understand emotional states more accurately.
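
A minimal sketch of feature-level fusion, assuming one toy 1D encoder per modality: each encoder applies its own filters, ReLU, and global max pooling to turn its input into a fixed-size feature vector, and the vectors are concatenated for a downstream classifier. The camera input is reduced to a single row of pixel intensities and all filters are hand-set, both purely for illustration.

```python
def conv1d_valid(x, kernel):
    """Valid 1D convolution (cross-correlation): one output per position where the kernel fits."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]

def encode(x, kernels):
    """Toy CNN encoder: ReLU feature map per kernel, then global max pooling -> one value per kernel."""
    return [max(max(0.0, v) for v in conv1d_valid(x, kernel)) for kernel in kernels]

# Hypothetical hand-set filters; a real system learns separate filters for each modality.
CAMERA_KERNELS = [[-1.0, 1.0], [1.0, -1.0]]  # brightness rising / falling along an image row
RADAR_KERNELS = [[1.0, 1.0, 1.0]]            # strong return sustained over three range bins

def fuse(camera_row, radar_returns):
    """Encode each modality separately, then concatenate into one joint feature vector."""
    return encode(camera_row, CAMERA_KERNELS) + encode(radar_returns, RADAR_KERNELS)

features = fuse([0.0, 0.0, 1.0, 1.0, 0.0], [0.0, 1.0, 1.0, 1.0, 0.0])
print(features)  # [1.0, 1.0, 3.0]
```

Because each encoder ends in pooling, the joint vector has a fixed length regardless of the input sizes, so a single classifier can be trained on top of it.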

Hybrid Architectures: CNNs Meet Transformers

As CNNs continue to evolve, hybrid architectures that combine CNNs with other types of neural networks, such as Transformers, are emerging. These hybrids take advantage of CNNs’ strengths in feature extraction and Transformers’ capabilities in handling long-range dependencies, making them powerful tools for tasks like video analysis or multilingual translation.
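
A minimal sketch of the hybrid pattern, assuming a 1D input: a convolutional stage turns each local window into a feature vector (a "token"), and a single self-attention step then lets every token draw on every other token, however far apart. The filters are hand-set and the attention uses identity query/key/value projections, a simplification; real hybrids learn both stages.

```python
import math

def conv1d_relu(x, kernel):
    """Valid 1D convolution followed by ReLU."""
    k = len(kernel)
    return [max(0.0, sum(kernel[j] * x[i + j] for j in range(k))) for i in range(len(x) - k + 1)]

def cnn_tokens(signal, kernels):
    """CNN stage: one feature map per filter, transposed so each position becomes a token vector."""
    maps = [conv1d_relu(signal, kernel) for kernel in kernels]
    return [list(position) for position in zip(*maps)]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V projections (a simplification)."""
    d = len(tokens[0])
    outputs, weights_per_query = [], []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(d)])
        weights_per_query.append(weights)
    return outputs, weights_per_query

signal = [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
edge_filters = [[1.0, -1.0], [-1.0, 1.0]]  # hand-set: falling edge, rising edge
tokens = cnn_tokens(signal, edge_filters)
mixed, attn = self_attention(tokens)
print([round(w, 3) for w in attn[0]])
```

In the printed weights, the first rising edge (token 0) gives its largest, equal weights to itself and to the second rising edge at token 5, even though a two-wide convolution window could never relate them directly.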

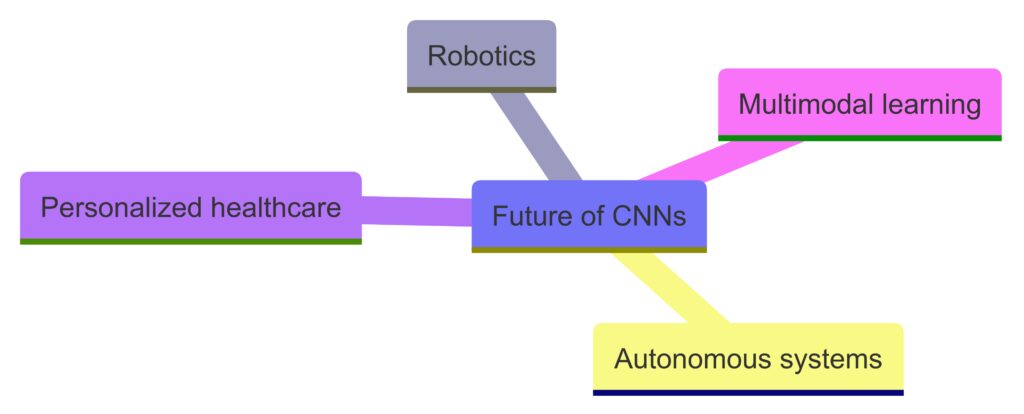

The Future of CNNs: Expanding Horizons

As CNNs continue to evolve, their applications are becoming even more diverse. Researchers are now exploring the potential of CNNs in fields like:

- Robotics for real-time visual and sensory processing.

- Autonomous systems where decision-making based on image and sensor data is critical.

- Personalized healthcare where CNNs could analyze individual patient data to recommend tailored treatments.

The future of CNNs is full of promise as they become more integrated with other technologies and continue to expand into new, complex data domains.

Conclusion: From Pixels to Patterns in Every Domain

What started as a powerful tool for recognizing handwritten digits has evolved into a versatile neural network architecture that can process not only images but also text, audio, time series, and even multimodal data. The journey of CNNs showcases the adaptability of deep learning technologies and their potential to reshape industries far beyond their original scope in image recognition.

FAQs About the Evolution of CNNs

What are CNNs, and why are they important?

CNNs, or Convolutional Neural Networks, are a type of deep learning model primarily used for analyzing visual data. They have transformed image recognition by learning to detect patterns like edges, shapes, and textures automatically, rather than relying on hand-engineered features. Their ability to generalize and learn hierarchies of features makes them powerful for tasks beyond image classification, such as natural language processing, speech recognition, and medical diagnostics.

How do CNNs work?

CNNs use multiple layers to process data, with each layer identifying increasingly complex patterns. Early layers detect basic features like edges, while deeper layers recognize more sophisticated objects or structures. A typical CNN applies convolutions that filter the input (each followed by a nonlinear activation such as ReLU), uses pooling layers to downsample the resulting feature maps, and ends with fully connected layers that make predictions from the learned features.
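
A minimal end-to-end sketch of that pipeline in plain Python, assuming a 6x6 grayscale image, two hand-set 3x3 filters, and a hypothetical two-class fully connected layer (real networks learn all of these weights and stack many more layers).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling (stride 2); a trailing odd row or column is dropped."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

def dense(x, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]

def forward(image, kernels, fc_weights, fc_biases):
    pooled = [max_pool_2x2(relu(conv2d(image, k))) for k in kernels]  # conv -> ReLU -> pool, per filter
    flat = [v for fmap in pooled for row in fmap for v in row]        # flatten all feature maps
    return dense(flat, fc_weights, fc_biases)                         # one score per class

VERTICAL = [[-1, 0, 1]] * 3
HORIZONTAL = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
# Hypothetical classifier: class 0 sums the vertical-edge features, class 1 the horizontal ones.
FC_WEIGHTS = [[1] * 4 + [0] * 4, [0] * 4 + [1] * 4]
FC_BIASES = [0.0, 0.0]

image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]  # a vertical edge down the middle
print(forward(image, [VERTICAL, HORIZONTAL], FC_WEIGHTS, FC_BIASES))  # [12.0, 0.0]
```

Each stage shrinks the data: the 6x6 image becomes two 4x4 feature maps, pooling reduces them to 2x2, and the eight flattened values become two class scores.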

What was LeNet, and how did it influence the development of CNNs?

LeNet-5, developed by Yann LeCun and colleagues in 1998, was one of the earliest CNN models designed for handwritten digit recognition. It had a significant impact on the development of CNNs and demonstrated the potential of neural networks in solving practical problems like optical character recognition (OCR).

Can CNNs be used for text and speech data?

Yes, CNNs have been adapted for text and speech processing. In natural language processing (NLP), CNNs can capture important phrases or relationships between words, making them useful for sentiment analysis and text classification. In speech recognition, CNNs analyze spectrograms (time-frequency representations of audio, often displayed as images) to detect patterns in audio data.

What are some real-world applications of CNNs?

CNNs are widely used in various domains, including:

- Image recognition for tasks like facial detection and object recognition.

- Medical imaging, where CNNs help detect tumors and other abnormalities.

- Speech recognition in virtual assistants like Siri and Google Assistant.

- Time series forecasting, such as stock market predictions or weather trends.

- Autonomous vehicles, where they process visual and sensor data for navigation.

How are CNNs different from traditional neural networks?

While traditional (fully connected) neural networks connect every input to every unit in the next layer, CNNs use convolutional layers designed for spatial data such as images. Each filter looks only at a small local region, and the same filter weights are reused at every position in the input. This weight sharing drastically reduces the number of parameters, allowing CNNs to handle large inputs efficiently while focusing on local features like patterns and textures.
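
A quick parameter count makes the savings concrete. The sketch assumes a 224x224 RGB input (a common image size, used here only as an example) and compares a fully connected layer with 1,000 units against a convolutional layer with 64 filters of size 3x3.

```python
def dense_params(in_features, out_features):
    """Every input connects to every output, plus one bias per output."""
    return in_features * out_features + out_features

def conv_params(in_channels, out_channels, kernel_size):
    """One kernel_size x kernel_size kernel per (input, output) channel pair, plus one bias per
    output channel; the same weights are shared across all spatial positions."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

height, width, channels = 224, 224, 3
fc = dense_params(height * width * channels, 1000)
conv = conv_params(channels, 64, 3)
print(f"fully connected: {fc:,}")  # fully connected: 150,529,000
print(f"conv 3x3, 64:    {conv:,}")  # conv 3x3, 64:    1,792
```

The convolutional count does not depend on the image size at all, because the same 3x3x3 kernels are reused at every position.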

What is multimodal learning with CNNs?

Multimodal learning combines different types of data, such as text, images, and audio, into a single model. CNNs are often used in multimodal architectures because they can process both visual and sequential data. This approach is used in self-driving cars, which combine camera images with LiDAR and radar data, and in video sentiment analysis, where visual and audio inputs are analyzed together.

Are CNNs only useful for image data?

No, CNNs have extended their use beyond images. They can process time series data, such as stock prices or sensor data, and are also employed in speech recognition, text processing, and even multimodal learning. CNNs’ ability to generalize across data with local structure, such as grids and sequences, has made them versatile across industries.

What are the current limitations of CNNs?

Despite their versatility, CNNs still have limitations:

- Data dependency: CNNs trained from scratch typically require large labeled datasets, which can be resource-intensive to collect and train on.

- Lack of interpretability: CNNs are often seen as “black boxes,” making it hard to explain their decision-making process.

- Computational cost: CNNs, especially deep models, require significant computational power and can be slow to train.

How do CNNs contribute to the future of AI?

CNNs are a core part of many AI advancements, particularly in computer vision and robotics. Their role in systems like self-driving cars, healthcare diagnostics, and smart cities is likely to expand as they continue to evolve, becoming more efficient and capable of handling complex tasks.

What role do CNNs play in medical imaging?

CNNs are transforming medical imaging by providing accurate diagnostic support tools. They analyze medical scans like X-rays, CT scans, and MRIs to detect abnormalities such as tumors, fractures, or lesions. By learning from large datasets of medical images, CNNs can automate parts of the diagnostic workflow, assist radiologists, and improve the speed and accuracy of identifying diseases, which can support earlier detection and better treatment outcomes.

How are CNNs used in autonomous vehicles?

CNNs play a critical role in autonomous vehicles by helping the system understand and interpret visual input from the environment. They process data from cameras, LiDAR, and radar sensors to:

- Identify objects such as pedestrians, traffic signs, and other vehicles.

- Interpret road conditions and traffic patterns.

- Make real-time decisions for navigation and safety.

CNNs enable the vehicle to “see” the road, making it possible for the car to operate with minimal human intervention.

How do CNNs handle time series data?

CNNs can be applied to time series data (e.g., stock prices, weather trends, sensor readings) by sliding one-dimensional filters along the time axis, the 1D counterpart of sliding 2D filters across an image. By detecting patterns over time, CNNs are effective for:

- Predicting future events based on historical data, such as stock market trends.

- Anomaly detection, identifying irregularities in transactional data or IoT sensor outputs.

- Forecasting demand in industries like energy or retail, where accurate predictions are essential for resource management.
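
A minimal sketch of the sliding-window mechanics behind anomaly detection, assuming hypothetical sensor readings and a fixed averaging filter in place of learned CNN filters: each point is compared with the average of the k readings before it (a causal convolution with a uniform kernel), and points that deviate by more than a threshold are flagged.

```python
def expected_values(series, k):
    """Causal uniform filter shifted by one step: expected[t] = mean(series[t-k:t]) for t >= k."""
    return [sum(series[t - k:t]) / k for t in range(k, len(series))]

def detect_anomalies(series, k=3, threshold=10.0):
    """Return the indices whose value deviates from the recent average by more than `threshold`."""
    return [
        t
        for t, expected in zip(range(k, len(series)), expected_values(series, k))
        if abs(series[t] - expected) > threshold
    ]

readings = [20.0, 21.0, 20.0, 21.0, 20.0, 45.0, 21.0, 20.0, 21.0]  # hypothetical sensor values
print(detect_anomalies(readings))  # [5]
```

The spike also inflates the average for the next k readings, which is why the threshold has to be chosen with care.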

What is the relationship between CNNs and Transfer Learning?

Transfer learning is a technique where a CNN trained on one task (usually with a large dataset) is reused as the starting point for another task, often with a smaller dataset. For example, a CNN trained on millions of general images (like in ImageNet) can be fine-tuned for specific tasks like medical imaging or facial recognition with minimal additional training. This method accelerates model development and improves accuracy, especially when limited data is available.
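
The core mechanic of transfer learning (keep the pretrained feature extractor frozen, train only a small new head on the target task) can be sketched without any framework. Here the "pretrained" backbone is a stand-in: two hand-set edge filters with ReLU and global max pooling, assumed for illustration to play the role of weights learned on a large dataset. Only the perceptron head is trained, on just two labeled examples.

```python
# Frozen "pretrained" filters (hypothetical stand-ins for weights learned on a large dataset).
VERTICAL = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
HORIZONTAL = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]

def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]

def backbone(image):
    """Frozen feature extractor: conv -> ReLU -> global max pool. Never updated below."""
    return [max(max(0, v) for row in conv2d(image, k) for v in row) for k in (VERTICAL, HORIZONTAL)]

def train_head(images, labels, epochs=10, lr=0.1):
    """Train only a new linear (perceptron) head on top of the frozen features."""
    feats = [backbone(img) for img in images]
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            pred = 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0
            err = y - pred  # perceptron rule: weights change only on mistakes
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

def predict(image, w, b):
    x = backbone(image)
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def bar(col=None, row=None, n=5):
    """n x n image with a bright vertical bar at `col` or a horizontal bar at `row`."""
    return [[1 if (c == col or r == row) else 0 for c in range(n)] for r in range(n)]

w, b = train_head([bar(col=2), bar(row=2)], labels=[1, 0])  # 1 = vertical, 0 = horizontal
print(predict(bar(col=3), w, b), predict(bar(row=3), w, b))  # 1 0
```

With a real framework, the same idea means loading pretrained weights, freezing the backbone's parameters, and replacing only the final classification layer before fine-tuning.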

Can CNNs be combined with other neural networks?

Yes, CNNs are often combined with other types of neural networks for enhanced performance. For example:

- CNNs + RNNs: In sequence-based tasks like video processing or speech analysis, CNNs extract spatial features while RNNs (or LSTMs) capture temporal dependencies, leading to better context understanding.

- CNNs + Transformers: In modern NLP and multimodal tasks, combining CNNs’ feature extraction power with Transformer models (which handle long-range dependencies) can improve performance in complex tasks like machine translation or video sentiment analysis.
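
A minimal sketch of the CNN + RNN pattern for video, assuming tiny 5x5 grayscale frames: a toy CNN stage reduces each frame to a single feature (vertical-edge strength), and a one-unit Elman RNN carries information across frames. The RNN weights are hypothetical fixed values rather than trained ones.

```python
import math

def frame_feature(frame):
    """Toy CNN stage: 3x3 vertical-edge filter, then ReLU and global max pooling (best starts at 0)."""
    best = 0.0
    for i in range(len(frame) - 2):
        for j in range(len(frame[0]) - 2):
            response = sum(frame[i + a][j + 2] - frame[i + a][j] for a in range(3))
            best = max(best, response)
    return best

def rnn(features, w_x=0.5, w_h=0.8, b=0.0):
    """One-unit Elman RNN: h_t = tanh(w_x * f_t + w_h * h_{t-1} + b)."""
    h, states = 0.0, []
    for f in features:
        h = math.tanh(w_x * f + w_h * h + b)
        states.append(h)
    return states

blank = [[0.0] * 5 for _ in range(5)]
with_bar = [[1.0 if c == 3 else 0.0 for c in range(5)] for _ in range(5)]  # an object appears
video = [blank, blank, with_bar, blank]

features = [frame_feature(f) for f in video]  # spatial features, frame by frame
states = rnn(features)                         # temporal context across frames
print(features)                                # [0.0, 0.0, 3.0, 0.0]
print([round(s, 3) for s in states])
```

The hidden state stays positive in the final blank frame: the RNN "remembers" that an object was seen, which a per-frame CNN alone cannot do.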

What is the difference between CNNs and RNNs?

The main difference between CNNs and Recurrent Neural Networks (RNNs) lies in the type of data they process and how they handle it:

- CNNs: Best suited for spatial data like images or spectrograms, where the relationships between neighboring pixels or data points are important.

- RNNs: Specialized for sequential data like text or time series, where understanding the order and context over time is crucial. Although CNNs have been extended to work with text and time-series data, RNNs (especially LSTMs and GRUs) remain a common choice for tasks requiring memory and context across long sequences.

How do CNNs contribute to video analysis?

CNNs are central to video analysis tasks such as video classification, object detection, and activity recognition. By processing individual frames as images, CNNs detect objects or patterns that can then be analyzed over time. Combined with temporal models such as RNNs, or extended into 3D CNNs that convolve across both space and time, they enable video processing, often in real time, in applications such as:

- Surveillance for detecting suspicious activity.

- Video content recommendation systems, which categorize and suggest content based on video features.

- Sports analytics, where they track player movements and analyze game strategies.

How are CNNs improving content moderation on social media platforms?

CNNs are instrumental in content moderation by automatically detecting inappropriate or harmful content, such as:

- Explicit images or videos.

- Violent or abusive content.

- Misinformation or harmful symbols.

By analyzing images and video clips, CNNs help platforms like Facebook, Instagram, and YouTube flag or remove content that violates community guidelines, contributing to a safer environment for users.

What is pooling in CNNs, and why is it important?

Pooling layers in CNNs reduce the spatial dimensions of feature maps, downsampling them and lowering the computation required by later layers. The two main types of pooling are:

- Max pooling: Selects the maximum value within each region (for example, each 2x2 window), preserving the most prominent features.

- Average pooling: Takes the average of the values within each region.

Pooling makes CNNs more computationally efficient, adds some tolerance to small shifts in the input, and can reduce the risk of overfitting by summarizing local regions and discarding less relevant detail.
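
Both pooling types can be sketched in a few lines, assuming the common 2x2 window with stride 2 (window size and stride are adjustable parameters in practice). The 4x4 feature map below shrinks to 2x2.

```python
def pool2d(fmap, size=2, stride=2, mode="max"):
    """Slide a size x size window with the given stride; reduce each window by max or average."""
    if mode not in ("max", "average"):
        raise ValueError(f"unknown pooling mode: {mode!r}")
    pooled = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            window = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        pooled.append(row)
    return pooled

feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 2],
]
print(pool2d(feature_map, mode="max"))      # [[6, 2], [2, 8]]
print(pool2d(feature_map, mode="average"))  # [[3.5, 1.0], [1.0, 5.5]]
```

Max pooling keeps the strongest response in each window, while average pooling smooths it. Either way, the next layer sees a quarter as many values.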

What future advancements are expected for CNNs?

The future of CNNs looks promising, with research focused on making them more:

- Efficient: Developing lighter models that require less computational power and can run on mobile devices or edge computing environments.

- Interpretable: Improving transparency and interpretability of CNN decisions, especially in critical applications like healthcare and autonomous driving.

- Multimodal: Advancing their ability to process and combine data from multiple sources (e.g., text, video, and audio) for more complex applications like AI-driven assistants or smart cities.

As CNNs integrate with other machine learning models and AI systems, they will continue to evolve, making them even more integral to technological advancements across industries.