Composable AI and edge computing are changing how we process and use data. Together, they make it possible to build modular intelligence systems that run close to where data is generated, with gains in flexibility and efficiency across many industries.

Here’s how these dynamic technologies work together and why they matter.

What is Composable AI? The Basics Explained

Breaking Down Composable AI

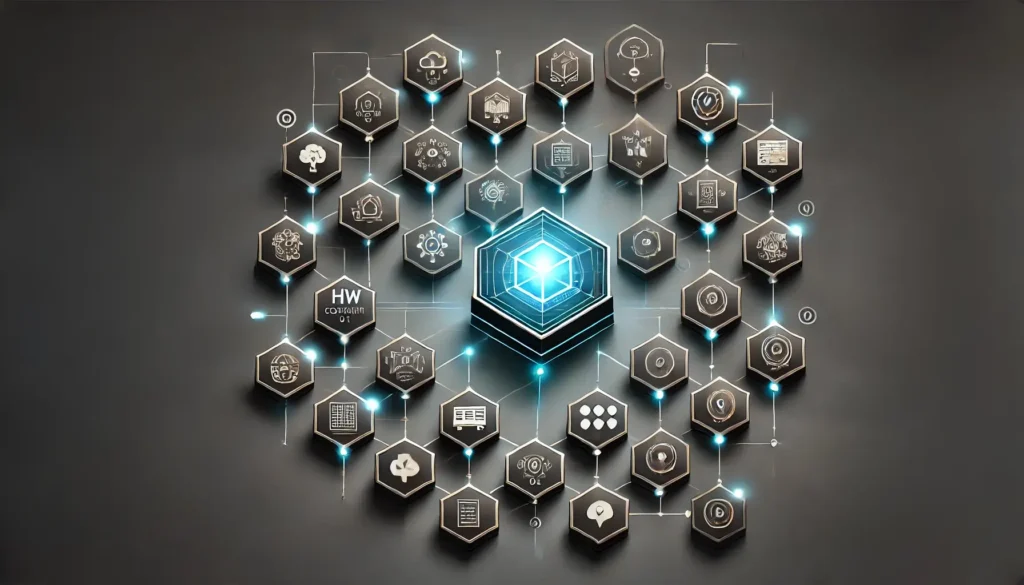

Composable AI refers to an approach where AI capabilities are built as modular components. These pieces can be mixed and matched, like building blocks, to form customized solutions. Instead of one monolithic AI, you get reusable modules for specific tasks, such as natural language processing, computer vision, or predictive analytics.

This modularity fosters adaptability, making it easier to upgrade or integrate new features without starting from scratch.
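To make the building-block idea concrete, here is a minimal Python sketch. It assumes a hypothetical convention in which every module is a function that takes a context dictionary and returns an updated one. The `Pipeline` class and the toy `tokenize` and `sentiment` modules are illustrative stand-ins, not part of any real composable AI framework.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical module contract: take a context dict, return an updated context dict.
Module = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class Pipeline:
    """Runs independent modules in order; any module can be added, replaced, or removed."""
    modules: Dict[str, Module] = field(default_factory=dict)
    order: List[str] = field(default_factory=list)

    def add(self, name: str, module: Module) -> None:
        if name not in self.modules:
            self.order.append(name)
        self.modules[name] = module  # re-adding an existing name upgrades it in place

    def remove(self, name: str) -> None:
        del self.modules[name]
        self.order.remove(name)

    def run(self, context: Dict[str, Any]) -> Dict[str, Any]:
        for name in self.order:
            context = self.modules[name](context)
        return context


# Toy modules standing in for real NLP or analytics components.
def tokenize(ctx: Dict[str, Any]) -> Dict[str, Any]:
    return {**ctx, "tokens": ctx["text"].lower().split()}


def sentiment(ctx: Dict[str, Any]) -> Dict[str, Any]:
    positive = {"good", "great", "fast"}
    return {**ctx, "sentiment": sum(t in positive for t in ctx["tokens"])}


p = Pipeline()
p.add("tokenize", tokenize)
p.add("sentiment", sentiment)
result = p.run({"text": "Great service and fast delivery"})
print(result["sentiment"])  # 2
```

Because modules only agree on the shape of the context dictionary, swapping `sentiment` for a real model, or adding a new module, doesn't require touching the others.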

The Value of Modularity in AI Development

The main advantage of composable AI is speed: organizations can deploy systems faster by reusing existing modules. It also reduces costs, because the same component does not have to be rebuilt for every project.

Additionally, modularity enhances collaboration across teams. Data scientists, engineers, and business leaders can align efforts, plugging in relevant components for specific projects.

How It Complements Edge Computing

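Because modules can be deployed selectively, composable AI suits environments where workloads and data vary widely from one location to the next, which makes it a natural partner for edge computing. Together, they bring intelligence closer to where data is created, reducing latency and the amount of data that has to travel over the network.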

Edge Computing: Redefining Data Processing

What Sets Edge Computing Apart

Edge computing refers to processing data at the network’s edge, closer to the data source. Unlike cloud computing, which centralizes data processing, edge computing minimizes delays by handling tasks locally.

This setup is crucial for real-time applications, such as autonomous vehicles, smart cities, or industrial automation.

Why the Edge Needs AI

Edge computing on its own can collect, filter, and forward data, but embedding AI at the edge lets devices make decisions on real-time data themselves. Think of a smart thermostat that adjusts as soon as it detects that a room is occupied: that’s edge AI in action.

Edge Computing’s Role in Decentralized AI Systems

Edge computing allows composable AI modules to function independently. For instance, an edge device can run a facial recognition module without needing continuous cloud access. This autonomy enhances reliability, even in low-connectivity areas.

The Benefits of Combining Composable AI and Edge Computing

Real-Time Decision Making

When composable AI modules operate at the edge, they enable lightning-fast decision-making. This capability is invaluable in sectors like healthcare, where seconds can save lives.

Enhanced Scalability and Flexibility

Need to add a new feature or tweak an existing one? With modular AI and edge computing, you can deploy updates seamlessly without disrupting the entire system.

For example, a smart camera can receive a new module for identifying vehicle types while retaining its existing functionality.

Improved Data Privacy

By processing sensitive information locally, edge computing reduces the risks associated with sending data to centralized servers. This can make it easier to comply with privacy regulations like GDPR, although local processing alone does not guarantee compliance.

Composable AI plays its part by letting you integrate privacy-focused modules, such as data anonymization.
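As an illustration of such a privacy module, the sketch below replaces identifiers with keyed hashes (HMAC-SHA256 from Python's standard library) and drops fields that should never be uploaded. The field names and the key are hypothetical; in a real deployment, deciding which fields count as identifying and how keys are managed are policy decisions. Note that keyed hashing is pseudonymization, which is weaker than full anonymization.

```python
import hashlib
import hmac
from typing import Any, Callable, Dict, Iterable


def make_pseudonymizer(
    secret_key: bytes, id_fields: Iterable[str], drop_fields: Iterable[str]
) -> Callable[[Dict[str, Any]], Dict[str, Any]]:
    """Build a privacy module that hashes identifiers and strips sensitive fields.

    Keyed hashing is pseudonymization, not full anonymization: anyone holding
    secret_key can recompute tokens for known IDs, so the key stays on the device.
    """
    ids, drops = set(id_fields), set(drop_fields)

    def module(record: Dict[str, Any]) -> Dict[str, Any]:
        out: Dict[str, Any] = {}
        for key, value in record.items():
            if key in drops:
                continue  # this field never leaves the device
            if key in ids:
                digest = hmac.new(secret_key, str(value).encode(), hashlib.sha256)
                out[key] = digest.hexdigest()[:16]  # stable, truncated token
            else:
                out[key] = value
        return out

    return module


# Hypothetical field names and key, for illustration only.
anonymize = make_pseudonymizer(b"device-local-secret", ["patient_id"], ["name"])
print(anonymize({"patient_id": 1234, "name": "A. Smith", "heart_rate": 72}))
```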

Applications Across Industries: Real-World Use Cases

Retail and Customer Experience

In retail, composable AI modules can analyze shopper behavior directly on edge devices. Shelf-mounted cameras might run visual analytics to monitor stock levels while offering real-time data to store managers.

Healthcare Innovations

Hospitals can deploy edge devices equipped with AI modules for tasks like monitoring patient vitals. This setup lets critical alerts be raised immediately, without waiting for a response from the cloud.

Challenges to Address: What’s Holding Back Adoption?

Integration Complexities

Pairing composable AI with edge computing requires careful coordination. Ensuring that different modules work seamlessly across diverse hardware is no small feat.

Resource Constraints at the Edge

Edge devices often have limited processing power compared to cloud systems. Optimizing composable AI for these constraints is critical to unlocking their full potential.

Challenges in Implementing Composable AI at the Edge

Overcoming Compatibility Issues

While Composable AI promotes flexibility, integrating it with edge computing isn’t always seamless. Different hardware, software, and protocols often clash. For example, an AI module designed for a specific chip might not work on another.

To address this, developers are turning to shared standards, such as the ONNX format for exchanging trained models between frameworks and runtimes, and to middleware that acts as a translator between components. Still, achieving full compatibility can be time-consuming.
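The translator role of middleware often comes down to the adapter pattern: each vendor-specific payload is converted into one common schema that every AI module understands. The sketch below assumes two hypothetical vendor formats, one reporting Celsius and one Fahrenheit under a different key; real devices and protocols will differ.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Reading:
    """Hypothetical common schema consumed by every downstream AI module."""
    sensor_id: str
    celsius: float


def from_vendor_a(payload: Dict[str, Any]) -> Reading:
    return Reading(sensor_id=payload["id"], celsius=float(payload["temp_c"]))


def from_vendor_b(payload: Dict[str, Any]) -> Reading:
    # Vendor B reports Fahrenheit under a different key name.
    return Reading(sensor_id=payload["device"], celsius=(float(payload["tempF"]) - 32) * 5 / 9)


ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Reading]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalize(source: str, payload: Dict[str, Any]) -> Reading:
    """Translate a raw payload into the common schema, or fail loudly."""
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for {source!r}") from None
    return adapter(payload)


print(normalize("vendor_b", {"device": "b1", "tempF": 212}))
```

Supporting new hardware then means registering one more adapter rather than changing the AI modules themselves.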

Resource Constraints at the Edge

Edge devices like IoT sensors or small servers are resource-limited. They have less processing power, storage, and energy compared to cloud systems. Running modular AI on these devices requires lightweight models optimized for efficiency.

Techniques like model compression and low-power AI chips help, but the trade-offs can impact performance. Striking a balance between power and efficiency remains a key challenge.
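Model compression covers several techniques, including pruning, distillation, and quantization. As one example, the sketch below shows symmetric int8 quantization of a single list of weights in plain Python: each float is stored as an 8-bit integer plus one shared scale factor, cutting storage roughly fourfold compared with 32-bit floats at the cost of a small rounding error. This is only an illustration of the arithmetic; toolchains such as TensorFlow Lite provide post-training quantization with many more refinements.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() uses round-half-to-even; clamping guards against float overshoot
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.01]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 1]
```

Each restored weight is within half a quantization step (`scale / 2`) of the original, which is the accuracy cost traded for the smaller model.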

Security Concerns

Edge computing decentralizes data, which is great for latency but complicates security. With multiple entry points, the risk of cyberattacks increases. AI modules running at the edge must be designed with robust security measures.

Encryption, authentication protocols, and regular updates can mitigate risks. Yet, safeguarding hundreds or thousands of edge devices is no small task.
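Message authentication can be as simple as each device tagging its messages with an HMAC computed from a per-device secret, so the receiver can reject forged or altered data. The sketch below also includes a timestamp to narrow (not eliminate) the window for replayed messages. The 30-second window and the message layout are assumptions. HMAC provides integrity and authenticity but not confidentiality, so encryption in transit (for example, TLS) is still needed.

```python
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional

MAX_SKEW_SECONDS = 30.0  # hypothetical acceptance window for message age


def _tag(key: bytes, ts: float, payload: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so sender and receiver hash identical bytes.
    body = json.dumps({"ts": ts, "payload": payload}, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def sign_message(key: bytes, payload: Dict[str, Any], now: Optional[float] = None) -> Dict[str, Any]:
    ts = time.time() if now is None else now
    return {"ts": ts, "payload": payload, "tag": _tag(key, ts, payload)}


def verify_message(key: bytes, message: Dict[str, Any], now: Optional[float] = None) -> bool:
    current = time.time() if now is None else now
    if abs(current - message["ts"]) > MAX_SKEW_SECONDS:
        return False  # stale or far-future timestamp: possible replay
    expected = _tag(key, message["ts"], message["payload"])
    return hmac.compare_digest(expected, message["tag"])  # constant-time comparison


device_key = b"per-device-secret"  # hypothetical; provisioned per device in practice
msg = sign_message(device_key, {"camera": "cam-7", "event": "motion"})
print(verify_message(device_key, msg))  # True
```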

Future Trends: How This Tech Will Evolve

AI at the Edge: Smarter and Lighter

As technology advances, AI models are becoming smaller and more efficient. Edge devices are evolving to handle increasingly complex AI tasks. Companies like NVIDIA and Google are developing edge-specific AI hardware, paving the way for faster adoption.

In the future, we may see self-optimizing AI modules that adapt to their environments and refine their models with little or no human intervention.

Rise of Federated Learning

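Federated learning is especially promising for AI at the edge. Instead of collecting raw data in a central location, it trains a shared model across many edge devices: each device trains on its own data and sends only model updates to a coordinating server, which combines them. Keeping raw data on the devices improves privacy and reduces the need for massive central storage.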

For instance, a fleet of autonomous cars can train collectively, sharing model updates rather than raw sensor recordings from individual vehicles.
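The server-side step of federated learning can be illustrated with federated averaging (FedAvg), a widely used aggregation rule: the server averages the model weights sent by each device, weighting each device by how many local training samples it used. This is a minimal sketch with models represented as flat lists of floats; production systems typically add client sampling, many training rounds, and often secure aggregation.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """FedAvg aggregation: sample-weighted mean of client model weights.

    Each client contributes (weights, num_local_samples); raw data stays on-device.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("clients reported no training samples")
    dim = len(client_updates[0][0])
    merged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            merged[i] += w * n / total
    return merged


# Two hypothetical vehicles: one trained on 100 samples, the other on 300.
print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```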

Integration with 5G

The rollout of 5G networks is transforming edge computing. Faster, lower-latency connectivity lets devices hand heavier AI workloads to nearby edge servers without noticeable delay. This synergy enables new use cases, like real-time augmented reality and smart grids that optimize energy consumption.

Real-World Applications Driving Innovation

Healthcare: Smarter Patient Monitoring

Hospitals are embracing edge-powered AI for real-time monitoring of patient vitals. Devices process data locally, alerting staff instantly if anomalies occur. Composable AI ensures that modules can be updated as medical protocols evolve.

This reduces reliance on centralized servers, improving both response times and patient privacy.
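One simple way for a bedside device to flag anomalies locally is a rolling z-score: each new reading is compared with the mean and standard deviation of recent readings. The window size, the threshold of three standard deviations, and the five-reading warm-up below are illustrative assumptions, not clinical guidance.

```python
import math
from collections import deque
from typing import Deque, Optional


class RollingAnomalyDetector:
    """Flags readings more than `threshold` standard deviations from the recent mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0, warmup: int = 5):
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup

    def update(self, value: float) -> Optional[str]:
        alert = None
        if len(self.values) >= self.warmup:
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            if std > 0 and abs(value - mean) / std > self.threshold:
                alert = f"anomaly: {value} vs recent mean {mean:.1f}"
        self.values.append(value)  # the new reading joins the baseline either way
        return alert


monitor = RollingAnomalyDetector(window=10)
for heart_rate in [72, 74, 71, 73, 72, 74, 73, 140]:
    alert = monitor.update(heart_rate)
    if alert:
        print(alert)  # raised locally, with no cloud round trip
```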

Retail: Hyper-Personalized Shopping

Retailers are deploying AI at the edge to analyze customer behavior in real time. Edge devices in stores track foot traffic, detect purchasing patterns, and adjust displays dynamically. With composable AI, these systems can keep pace with changing consumer trends by swapping or updating individual modules.

Transportation: Safer Roads with Smarter Systems

Autonomous vehicles and smart traffic systems rely on edge computing to process data on the go. With composable AI, each module, whether for navigation, obstacle detection, or route optimization, can be developed, tested, and validated independently.

This modular approach enables quick updates as roads, regulations, or technology evolve.

Building the Infrastructure for the Future

Scaling the Ecosystem

For Composable AI and edge computing to thrive, a robust infrastructure is essential. Hybrid cloud solutions are emerging as a bridge, enabling edge devices to offload heavy tasks when needed.

Additionally, partnerships between tech companies, governments, and industries are accelerating the deployment of edge-friendly networks.
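The hybrid offloading described above can be framed as a latency comparison: send a task to the cloud only when network round trip plus upload time plus cloud inference beats running it on the device, and still meets the task's deadline. All fields and numbers below are hypothetical estimates that a real system would have to measure.

```python
from dataclasses import dataclass


@dataclass
class TaskEstimate:
    local_ms: float        # estimated on-device inference time
    cloud_ms: float        # estimated cloud inference time
    network_rtt_ms: float  # current round-trip time to the cloud
    payload_kb: float      # data that would be uploaded, in kilobytes
    uplink_kbps: float     # measured uplink bandwidth, in kilobits per second


def should_offload(t: TaskEstimate, deadline_ms: float, connected: bool) -> bool:
    """Offload only if the cloud path is faster than local and meets the deadline."""
    if not connected:
        return False  # no link: always run locally
    transfer_ms = t.payload_kb * 8 / t.uplink_kbps * 1000  # KB -> kilobits -> s -> ms
    cloud_total = t.network_rtt_ms + transfer_ms + t.cloud_ms
    return cloud_total < t.local_ms and cloud_total <= deadline_ms


heavy = TaskEstimate(local_ms=900, cloud_ms=50, network_rtt_ms=40, payload_kb=100, uplink_kbps=8000)
print(should_offload(heavy, deadline_ms=500, connected=True))  # True: 40 + 100 + 50 = 190 ms
```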

Democratizing the Technology

Lowering costs and improving accessibility will drive widespread adoption. Open-source AI frameworks and edge computing platforms are already making this tech available to smaller businesses.

As more companies embrace these innovations, expect a surge in tailored applications across industries.

Final Thoughts: The Road Ahead

Composable AI and edge computing are transforming how we approach intelligence. Together, they offer adaptability, efficiency, and real-time decision-making. While challenges remain, the potential for innovation is considerable.

FAQs

What is the main difference between Composable AI and traditional AI?

Composable AI is modular, meaning it consists of independent components that work together. In contrast, traditional AI is often monolithic and hard to adapt. For instance, a bank using composable AI can integrate a fraud detection module today and add a customer sentiment analysis module tomorrow without rewriting the entire system.

How does edge computing reduce latency?

Edge computing processes data closer to its source rather than sending it to a centralized cloud. Imagine a smart home thermostat that adjusts temperature based on real-time occupancy. By processing this data locally, the thermostat responds instantly, avoiding delays caused by cloud-based systems.

Are there security risks with edge computing?

Yes, because edge computing operates through decentralized devices, it creates multiple points of vulnerability. For example, a network of connected cameras in a smart city could be hacked if security measures aren’t strong enough. Regular software updates, encryption, and strong authentication can mitigate these risks.

How are Composable AI and edge computing used together?

Composable AI’s modular nature complements edge computing’s localized processing. Take self-driving cars: one AI module detects objects, another manages navigation, and a third ensures passenger safety. These modules operate locally on the car’s edge systems, reducing dependence on cloud connectivity and enabling faster decisions.

What industries benefit most from this combination?

Industries requiring real-time insights or adaptable systems thrive with Composable AI and edge computing. For example, in healthcare, wearable devices analyze patient vitals locally and send only critical alerts to doctors. Similarly, in retail, edge devices optimize in-store inventory while AI modules personalize customer recommendations.

How does federated learning fit into this framework?

Federated learning trains AI models across multiple edge devices without sharing raw data. For example, a group of smartphones can collectively improve voice recognition software by learning from user interactions, all while keeping personal data secure on each device.

Is edge computing replacing the cloud?

No, edge computing complements the cloud. While edge handles real-time tasks locally, the cloud manages long-term storage and heavy processing. For instance, a delivery drone processes navigation and obstacle detection on the edge but uploads delivery logs to the cloud for analysis.

Can small businesses afford this technology?

Yes, the rise of open-source tools and cost-effective hardware is making these technologies more accessible. For example, a small retailer could use a basic edge device and modular AI to track foot traffic and optimize product placement, improving sales without a massive upfront investment.

What is the role of 5G in edge computing?

5G enhances edge computing by providing faster, more reliable connectivity. This is crucial for use cases like augmented reality shopping apps, where low latency is critical for real-time, interactive experiences.

Are there any environmental benefits?

Yes, edge computing can reduce energy consumption by minimizing data transfer to centralized locations. For instance, a solar farm using edge AI can optimize energy distribution locally, reducing waste and cutting down on the need for cloud-based processing.

How does Composable AI improve system scalability?

Composable AI allows businesses to add, remove, or update modules without disrupting the overall system. For example, a logistics company could start with an AI module for route optimization and later integrate a predictive maintenance module to monitor vehicle performance as the fleet grows.

What are lightweight AI models, and why are they important at the edge?

Lightweight AI models are simplified versions of traditional models, designed to run efficiently on devices with limited resources. Consider a drone used for farming: it uses a lightweight model to analyze crop health in real-time, ensuring efficient battery use while processing critical data locally.

How is data privacy enhanced with edge computing?

By processing sensitive data locally, edge computing minimizes exposure to external threats. For instance, a health tracking wearable processes user health metrics directly on the device and shares only aggregate insights, keeping personal information secure.

What are some challenges in managing updates for edge-based AI systems?

Keeping edge devices updated can be tricky, especially when they’re deployed in remote or hard-to-reach locations. For example, wind turbines equipped with AI modules require seamless over-the-air updates to enhance their performance without manual intervention, which demands robust and secure update protocols.
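One common safeguard for remote over-the-air updates is an A/B (dual-slot) scheme: the new module is written to the inactive slot, and the device switches over only if a health check passes; otherwise it keeps running the known-good version. The class below is a simplified in-memory sketch. The version strings and the health-check callback are hypothetical, and a real updater would also verify a cryptographic signature on the update before installing it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ABUpdater:
    """Installs updates into the standby slot and switches only after a passing health check."""
    slots: Dict[str, str] = field(default_factory=lambda: {"A": "v1", "B": ""})
    active: str = "A"

    def apply(self, new_version: str, health_check: Callable[[str], bool]) -> bool:
        standby = "B" if self.active == "A" else "A"
        self.slots[standby] = new_version  # never overwrite the running slot
        if health_check(new_version):
            self.active = standby          # switch over to the new module
            return True
        return False                       # keep running the known-good slot

    @property
    def running(self) -> str:
        return self.slots[self.active]


updater = ABUpdater()
updater.apply("v2", health_check=lambda v: True)
updater.apply("v3-broken", health_check=lambda v: False)
print(updater.running)  # v2
```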

How does Composable AI enable faster innovation?

Since Composable AI uses reusable modules, developers can quickly assemble and deploy new solutions. For instance, a telecom provider could integrate AI modules to optimize 5G network traffic, then swiftly replace them with updated modules when new technology emerges.

What role does IoT play in edge computing?

IoT devices are often the primary beneficiaries of edge computing, as they rely on fast, local processing. For example, smart sensors in a manufacturing plant detect anomalies in equipment and alert operators in real time, avoiding production delays.

Are there cost-saving benefits to adopting these technologies?

Absolutely. By processing data locally, businesses save on bandwidth and cloud storage costs. For instance, a retail chain using edge devices for in-store analytics reduces expenses by only uploading key data summaries to the cloud.
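As a sketch of uploading only key summaries, the function below collapses raw foot-traffic events into hourly counts on the device. The event format and the synthetic data are hypothetical; the point is that the uploaded payload grows with the number of hours, not the number of visitors.

```python
import json
from collections import Counter
from datetime import datetime
from typing import Dict, List


def summarize_foot_traffic(events: List[Dict[str, str]]) -> Dict[str, int]:
    """Collapse raw visitor events into per-hour counts, the only data uploaded."""
    counts = Counter(
        datetime.fromisoformat(e["timestamp"]).strftime("%Y-%m-%dT%H:00") for e in events
    )
    return dict(sorted(counts.items()))


# 1,000 synthetic events spread over three hours (illustrative data only).
events = [
    {"timestamp": f"2024-05-01T{9 + i % 3:02d}:{i % 60:02d}:00", "zone": "entrance"}
    for i in range(1000)
]
summary = summarize_foot_traffic(events)
print(summary)
print(len(json.dumps(events)), "bytes raw vs", len(json.dumps(summary)), "bytes uploaded")
```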

Can Composable AI work offline?

Yes, modular AI systems at the edge can operate offline. For example, autonomous farming equipment can function in remote areas by relying on edge-based AI for tasks like planting, harvesting, and monitoring soil conditions.
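Offline operation usually pairs local decision-making with a store-and-forward buffer: results are queued on the device and uploaded once connectivity returns. The sketch below assumes a hypothetical `send` callback that returns True on successful delivery; the bounded queue drops the oldest entries if the device stays offline for too long.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict


class StoreAndForward:
    """Buffers results while offline and flushes them, oldest first, once a link is back."""

    def __init__(self, send: Callable[[Dict[str, Any]], bool], max_items: int = 10_000):
        self.send = send  # hypothetical uplink; returns True on successful delivery
        self.queue: Deque[Dict[str, Any]] = deque(maxlen=max_items)  # oldest dropped when full

    def record(self, item: Dict[str, Any]) -> None:
        self.queue.append(item)

    def flush(self) -> int:
        sent = 0
        while self.queue:
            if not self.send(self.queue[0]):
                break  # still offline: keep the item and retry later
            self.queue.popleft()
            sent += 1
        return sent


delivered = []
link = {"up": False}


def send(item: Dict[str, Any]) -> bool:
    if link["up"]:
        delivered.append(item)
        return True
    return False


buffer = StoreAndForward(send)
buffer.record({"soil_moisture": 0.31})
buffer.record({"soil_moisture": 0.29})
print(buffer.flush())  # 0: still offline
link["up"] = True
print(buffer.flush())  # 2
```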

How do these technologies support sustainability initiatives?

Edge computing and Composable AI reduce energy waste by processing data locally and optimizing resource use. Consider a smart grid that uses these technologies to analyze energy consumption patterns and dynamically balance loads, lowering environmental impact.

Are there any notable examples of successful implementation?

Yes, companies like Tesla use edge computing and modular AI in their autonomous vehicles. AI modules for navigation, safety, and system diagnostics operate locally, ensuring real-time responsiveness. Similarly, Amazon deploys edge computing in smart warehouses to track inventory and improve delivery efficiency.
