Stable Diffusion has revolutionized AI-generated art, enabling highly detailed and realistic outputs. But generic models don’t always meet unique creative needs. By fine-tuning Stable Diffusion, you can tailor its outputs to your vision. Here’s how to make it happen effectively.

Understanding Fine-Tuning: What and Why?

What is Fine-Tuning in AI Models?

Fine-tuning is the process of adapting a pre-trained model to specific tasks or domains. In the context of Stable Diffusion, this means customizing the model to improve its output for a defined purpose, such as generating unique styles, consistent character designs, or niche artistic aesthetics.

Why Fine-Tune Stable Diffusion?

The default Stable Diffusion model is versatile but may lack specificity. Fine-tuning unlocks:

- Unique styles: Develop art aligned with a specific aesthetic.

- Efficiency: Save time by reducing the need for extensive prompt engineering.

- Brand consistency: Tailor outputs to fit a personal or corporate identity.

By adapting the model, you gain creative control over the outputs.

Prerequisites for Fine-Tuning Stable Diffusion

Hardware Requirements

Fine-tuning demands computational power. The typical setup includes:

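- GPU: At least 8GB VRAM for lightweight methods such as LoRA or Textual Inversion (usually with memory-saving options enabled); 16GB+ for more demanding methods such as DreamBooth.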

- RAM: 16GB or more is recommended for smooth operation.

- Storage: SSDs with at least 50GB of free space ensure fast data handling.

For heavy workloads, consider cloud-based solutions like AWS, Google Colab, or Lambda Labs.

Software and Frameworks

To get started, install the following tools:

- Stable Diffusion: Download the official model weights from Hugging Face.

- Python: Ensure version 3.8 or newer.

- Dependencies: Use libraries like PyTorch, Transformers, and Diffusers. Install them via pip.

- Training Data: Curate a dataset that reflects your specific artistic goals.

Having these tools ready simplifies the workflow.

Curating and Preparing Training Data

Why Data Quality Matters

Fine-tuning results depend heavily on the quality of your training data. Poorly curated images introduce noise and lead to subpar outputs. Aim for images that are relevant to your goal yet varied in composition, lighting, and background.

Steps for Data Collection

- Define the Aesthetic: Identify the style or subject matter you want the model to master.

- Gather Images: Use public domain sources, royalty-free platforms like Unsplash, or generate your own.

- Ensure Licensing Compliance: Avoid copyrighted material unless permitted for use.

Annotating and Preprocessing

Images need to be properly formatted and labeled:

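- Resize Images: Use a uniform resolution that matches your base model, such as 512×512 pixels for Stable Diffusion v1.x (SDXL was trained at 1024×1024).

- Labeling: Give each image a descriptive caption, through its file name or a metadata file, so the model can link the visual concept to text.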

- Image Cleaning: Remove duplicates and irrelevant images to optimize learning.
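The cleaning and labeling steps above can be scripted. The sketch below is one hypothetical way to do it with only the Python standard library. It flags byte-identical duplicates using a SHA-256 hash; near-duplicates, such as resized copies, would need a perceptual hash instead. It also writes a metadata.jsonl file that pairs each image with a caption derived from its file name. The file_name column follows the layout that Hugging Face's ImageFolder loader reads. The caption column name text, the function name, and the file-name-to-caption rule are assumptions made for illustration. Resizing is left to the training transform.

```python
import hashlib
import json
from pathlib import Path

# Hypothetical choice of accepted image extensions
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def build_metadata(image_dir):
    """Write metadata.jsonl into image_dir and return (rows, duplicates).

    rows: one {"file_name", "text"} dict per unique image.
    duplicates: file names whose bytes match an earlier image (not deleted).
    """
    folder = Path(image_dir)
    seen_hashes = set()
    rows, duplicates = [], []
    for path in sorted(folder.iterdir()):
        if path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip notes, caption files, and metadata.jsonl itself
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        if digest in seen_hashes:
            duplicates.append(path.name)  # exact copy of an image already kept
            continue
        seen_hashes.add(digest)
        # Turn a descriptive file name like "red_fox-in_snow.jpg" into a caption
        caption = path.stem.replace("_", " ").replace("-", " ")
        rows.append({"file_name": path.name, "text": caption})
    with open(folder / "metadata.jsonl", "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")
    return rows, duplicates
```

Review the returned duplicates and delete them, or move them out of the folder, so they do not end up in the training set.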

Tools and Techniques for Fine-Tuning

Leveraging Pre-trained Models

Pre-trained weights form the foundation of your fine-tuned model. Instead of starting from scratch, fine-tuning adjusts these weights for your specific use case, saving time and resources.

Techniques to Customize the Model

- DreamBooth: Ideal for creating a unique artistic identity or specific character designs. Learn more at DreamBooth’s GitHub page.

- Textual Inversion: Focuses on teaching the model new word associations, like linking “zebra unicorn” to a specific look.

- LoRA (Low-Rank Adaptation): Trains small add-on matrices instead of the full weights, which cuts memory use substantially; suitable for lighter hardware setups.

Each technique caters to different creative needs. Choose based on your goals and hardware capabilities.

Setting Up the Fine-Tuning Environment

Installing the Necessary Frameworks

Once you’ve prepared your data, it’s time to configure your fine-tuning environment. The Diffusers library by Hugging Face is a powerful option for this.

- Install Dependencies: These libraries handle Stable Diffusion’s core operations. Accelerate is also needed to launch Diffusers’ training scripts.

```shell
pip install torch torchvision transformers diffusers accelerate
```

- Verify GPU Availability: Ensure PyTorch recognizes your GPU. If the check prints True, you’re good to go.

```python
import torch

print(torch.cuda.is_available())
```

- Load the Pre-trained Model: Use Hugging Face’s tools to download and load the base model:

```python
from diffusers import StableDiffusionPipeline

pipeline = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4")
pipeline.to("cuda")
```

Setting Up Your Dataset

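Training requires that your dataset be loaded into a format the model can process. Use PyTorch’s Dataset and DataLoader classes:

```python
import glob

from PIL import Image
from torch.utils.data import Dataset, DataLoader
from torchvision.transforms import Compose, Resize, CenterCrop, ToTensor, Normalize


class CustomDataset(Dataset):
    """Loads images from a list of file paths and applies an optional transform."""

    def __init__(self, image_paths, transform=None):
        self.image_paths = image_paths
        self.transform = transform

    def __len__(self):
        return len(self.image_paths)

    def __getitem__(self, idx):
        image = Image.open(self.image_paths[idx]).convert("RGB")
        if self.transform:
            image = self.transform(image)
        return image


# Resize the shorter side to 512, crop the central 512x512 region, convert to a
# tensor, and rescale pixels from [0, 1] to [-1, 1], the range the VAE expects.
transform = Compose([Resize(512), CenterCrop(512), ToTensor(), Normalize([0.5], [0.5])])

image_paths = sorted(glob.glob("data/*.jpg"))  # hypothetical folder of training images
dataset = CustomDataset(image_paths, transform)
dataloader = DataLoader(dataset, batch_size=8, shuffle=True)
```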

Training the Model

Configuring Hyperparameters

Fine-tuning involves tweaking hyperparameters for optimal results:

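- Batch Size: Start with 8 images per batch, and lower it if you run out of GPU memory.

- Learning Rate: A low rate such as 5e-5 helps prevent overfitting. For reference, Diffusers’ example scripts use lower values for DreamBooth (5e-6) and higher ones for LoRA (1e-4).

- Epochs: Fine-tune for 10–20 epochs for small datasets.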

These values depend on your hardware and dataset size. Monitor performance to adjust as needed.
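Some training scripts take a step count rather than an epoch count, so it helps to convert between the two. This small helper is a sketch of the usual conversion. It assumes the last partial batch is kept, and that gradients may be accumulated over several batches before each optimizer update. The function name is hypothetical.

```python
import math


def total_optimizer_steps(num_images, batch_size, epochs, grad_accum_steps=1):
    """Optimizer updates for a run, counting a final partial batch as a batch."""
    batches_per_epoch = math.ceil(num_images / batch_size)
    # With gradient accumulation, several batches feed one optimizer update
    updates_per_epoch = math.ceil(batches_per_epoch / grad_accum_steps)
    return updates_per_epoch * epochs


print(total_optimizer_steps(40, 8, 10))   # 50: 5 batches per epoch x 10 epochs
print(total_optimizer_steps(100, 8, 20))  # 260: 13 batches per epoch x 20 epochs
```

The effective batch size is batch_size × grad_accum_steps. Accumulation lets a GPU with less memory mimic a larger batch at the cost of fewer updates per epoch.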

Training with DreamBooth

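DreamBooth teaches the model a specific subject or style from only a handful of images (the original paper uses 3–5). Each image is paired with a prompt built around a rare identifier token. An optional prior-preservation loss helps the model retain its general knowledge of the subject’s class. Diffusers provides DreamBooth as an example training script, train_dreambooth.py in the repository’s examples/dreambooth folder, rather than as an importable trainer class, and it is launched with Accelerate. In the command below, the image folder and the instance prompt are placeholders for your own data:

```shell
accelerate launch train_dreambooth.py \
  --pretrained_model_name_or_path="CompVis/stable-diffusion-v1-4" \
  --instance_data_dir="./instance_images" \
  --instance_prompt="a photo of sks dog" \
  --output_dir="./fine_tuned_model" \
  --resolution=512 \
  --train_batch_size=1 \
  --learning_rate=5e-6 \
  --max_train_steps=400 \
  --checkpointing_steps=100
```

The --checkpointing_steps flag saves a checkpoint every 100 steps, so you don’t lose progress if a run is interrupted. When training finishes, the script saves the full fine-tuned pipeline to the output directory.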

Evaluating Outputs

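After training, load the fine-tuned pipeline from the output directory and test it by generating new images:

```python
from diffusers import StableDiffusionPipeline
pipeline = StableDiffusionPipeline.from_pretrained("./fine_tuned_model").to("cuda")  # fine-tuned weights, not the base model
prompt = "a surreal landscape with crystal mountains"
generated_image = pipeline(prompt).images[0]
generated_image.save("output.png")
```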

Optimizing and Sharing Your Model

Performance Tuning

- FP16 Precision: Use half-precision floating point for faster generation and lower memory use.

- Prune Weights: Remove redundant weights to reduce the model size.
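A quick calculation shows why FP16 helps. Each parameter takes 4 bytes as a 32-bit float and 2 bytes in half precision, so the weights alone shrink by half. The arithmetic below assumes roughly 860 million parameters for the Stable Diffusion v1 UNet, a commonly quoted approximate figure. It ignores activations, the text encoder, and the VAE. In Diffusers, half precision is typically requested at load time, e.g. StableDiffusionPipeline.from_pretrained(..., torch_dtype=torch.float16).

```python
def weights_size_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


UNET_PARAMS = 860_000_000  # approximate parameter count of the SD v1 UNet

fp32_gb = weights_size_gb(UNET_PARAMS, 4)  # 32-bit floats: 4 bytes each
fp16_gb = weights_size_gb(UNET_PARAMS, 2)  # half precision: 2 bytes each
print(f"fp32: {fp32_gb:.2f} GB, fp16: {fp16_gb:.2f} GB")  # fp32: 3.44 GB, fp16: 1.72 GB
```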

Exporting and Sharing

Save and share your model with others via Hugging Face’s Model Hub. Log in first:

```shell
huggingface-cli login
```

Upload your model with detailed documentation for reproducibility.
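The Hub renders a repository’s README.md as its model card. YAML front matter at the top of that file supplies metadata such as the license, base model, and tags. The helper below is a hypothetical sketch that writes such a card, and its fields and section layout are one reasonable choice. The default license, creativeml-openrail-m, is the license of the original Stable Diffusion v1 weights, so adjust it if your base model differs.

```python
from pathlib import Path


def write_model_card(output_dir, base_model, method, instance_prompt, hyperparams,
                     license_id="creativeml-openrail-m"):
    """Write README.md (the Hub's model card) into output_dir and return its text."""
    lines = [
        "---",
        f"license: {license_id}",
        f"base_model: {base_model}",
        "tags:",
        "- stable-diffusion",
        "- text-to-image",
        f"- {method}",
        "---",
        "",
        f"# {method} fine-tune of {base_model}",
        "",
        f"Prompt used during training: `{instance_prompt}`",
        "",
        "## Training hyperparameters",
        "",
    ]
    # One bullet per hyperparameter so others can reproduce the run
    lines += [f"- {name}: {value}" for name, value in hyperparams.items()]
    text = "\n".join(lines) + "\n"
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    (Path(output_dir) / "README.md").write_text(text, encoding="utf-8")
    return text
```

For example, you might call write_model_card("./fine_tuned_model", "CompVis/stable-diffusion-v1-4", "dreambooth", "a photo of sks dog", {"learning_rate": 5e-6}). You could then upload the folder, for instance with the huggingface_hub library’s HfApi().upload_folder.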

Best Practices for Fine-Tuning Stable Diffusion

Balancing Creativity and Control

Fine-tuning Stable Diffusion is a balance between overfitting the model to a niche and retaining its versatility. Here’s how to achieve that balance:

- Avoid Overfitting: Use diverse examples that represent your target style or task but still vary enough to maintain generalizability.

- Monitor Loss During Training: A steady decline indicates progress, while spikes or stagnation suggest issues.

- Test Frequently: Generate outputs at regular intervals during training to ensure the model improves as intended.
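One way to automate the loss checks above is to smooth the per-step loss with an exponential moving average (EMA). You can then flag two patterns: a single step far above the average (a spike), and a smoothed loss that has barely moved over a window of steps (stagnation). This is a hypothetical helper, and its thresholds are illustrative rather than tuned. Diffusion training loss is naturally noisy because each step samples a random noise level, so treat flags as prompts to inspect sample images rather than as verdicts.

```python
def check_loss_curve(losses, beta=0.9, spike_factor=2.0, window=50, min_improvement=0.01):
    """Return a list of (step, "spike" | "stagnation") warnings.

    Thresholds are illustrative defaults, not tuned values.
    """
    warnings = []
    ema = None
    ema_history = []
    for step, loss in enumerate(losses):
        # Spike: this step's loss is far above the smoothed loss so far
        if ema is not None and loss > spike_factor * ema:
            warnings.append((step, "spike"))
        ema = loss if ema is None else beta * ema + (1 - beta) * loss
        ema_history.append(ema)
        # Stagnation: smoothed loss improved by less than min_improvement
        # (relative) compared with `window` steps ago
        if len(ema_history) > window:
            old = ema_history[-window - 1]
            if old > 0 and (old - ema) / old < min_improvement:
                warnings.append((step, "stagnation"))
    return warnings
```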

Incremental Adjustments

Instead of drastic updates, tweak parameters in small steps:

- Gradually increase epochs to avoid unnecessary computation.

- Adjust prompts to test different aspects of the model’s training.

Consistency is key to meaningful fine-tuning.

Deploying Fine-Tuned Models for Real-World Use

Practical Applications

Customizing Stable Diffusion opens doors to exciting possibilities:

- Creative Design: Artists and studios can create unique art styles for branding or entertainment.

- Marketing Content: Brands can generate visuals aligned with their campaigns or values.

- Gaming: Develop consistent character designs or thematic environments for storytelling.

These use cases showcase how fine-tuning adapts Stable Diffusion to specific industry demands.

Deploying in Production

To deploy your fine-tuned model, integrate it into user-friendly interfaces:

- APIs: Use tools like FastAPI or Flask to create a web service for your model.

- Cloud Deployment: Platforms like AWS or Azure simplify scaling for larger workloads.

- Integration with Apps: Embed the model into creative tools like Photoshop via plugins.

Ensure accessibility and scalability for seamless adoption.

Challenges and How to Overcome Them

Ethical Considerations

Fine-tuning generates realistic outputs, raising ethical concerns:

- Misinformation Risk: Avoid training models to mimic real people or propagate false information.

- Content Moderation: Implement safeguards to filter harmful outputs.

Establish ethical guidelines and monitor usage actively.

Computational Costs

Training models can strain resources. To reduce costs:

- Use Pre-trained Models: Begin with solid foundations instead of training from scratch.

- Optimize Hardware Usage: Leverage tools like gradient checkpointing to minimize GPU memory usage.

- Share Workloads: Utilize distributed training on multiple GPUs or cloud setups.

Balancing efficiency with creativity ensures a sustainable workflow.

Advanced Fine-Tuning Techniques for Stable Diffusion

Stable Diffusion’s flexibility shines when advanced fine-tuning techniques are applied. Each method caters to specific needs, offering unique strengths for customization. Let’s break down LoRA, Textual Inversion, and Custom Token Training, including their workflows and use cases.

LoRA (Low-Rank Adaptation)

What is LoRA?

LoRA introduces a lightweight way to adapt Stable Diffusion without retraining the entire model. Instead of updating all parameters, it focuses on learning a smaller subset (low-rank updates) to modify the model effectively. This minimizes computational demands.
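Concretely, take a frozen weight matrix W₀ of size d×k. Following the original LoRA formulation, LoRA learns two small matrices, B and A, whose product forms the update, scaled by a constant α over the rank r. The second and third lines count the trainable parameters, using a 768×768 projection at rank 8 as an example:

$$
\begin{aligned}
W &= W_0 + \frac{\alpha}{r}\, B A, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \\
\text{trainable parameters} &= r(d + k) \quad \text{vs.} \quad dk \ \text{for full fine-tuning} \\
d = k = 768,\ r = 8: &\quad 8 \times 1536 = 12{,}288 \quad \text{vs.} \quad 768^2 = 589{,}824 \ (\approx 2.1\%)
\end{aligned}
$$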

How LoRA Works

LoRA inserts trainable layers (rank matrices) into the model’s architecture. During training:

- The base model remains frozen.

- Only the additional layers are trained to adapt the output to specific needs.

This method reduces training time and GPU memory requirements.
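The mechanics above can be sketched in plain Python. This toy layer illustrates the technique and is not any library’s implementation. It keeps a frozen base matrix and adds a scaled low-rank update B·A. B is initialized to zero and A to small random values, the initialization described in the LoRA paper, so the layer starts out exactly equal to the base model. The class name and the default alpha are assumptions. Real LoRA training would use PyTorch modules, typically via a library such as PEFT.

```python
import random


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank=8, alpha=8, seed=0):
        self.weight = weight  # frozen base weights: never updated in training
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A: small random values; B: zeros, so B @ A = 0 at initialization
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def trainable_params(self):
        # Only A and B are trained: rank * (d_in + d_out) values
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def forward(self, x):
        base = [sum(w * xi for w, xi in zip(row, x)) for row in self.weight]
        ax = [sum(a * xi for a, xi in zip(row, x)) for row in self.A]  # down-project to rank r
        bax = [sum(b * v for b, v in zip(row, ax)) for row in self.B]   # up-project to d_out
        return [y + self.scale * u for y, u in zip(base, bax)]


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
layer = LoRALinear(identity, rank=2)
print(layer.forward([1.0, 2.0, 3.0, 4.0]))  # [1.0, 2.0, 3.0, 4.0]: B = 0, so no change yet
```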

Use Cases

- Training on specific styles or textures without overwriting the original knowledge.

- Adapting models for low-resource devices with constrained computational power.

Example Workflow

- Set Up LoRA Layers: Use a library such as Hugging Face’s PEFT, which Diffusers uses for its LoRA support, to define and inject LoRA layers.

- Train with Custom Data: Load your curated dataset and specify hyperparameters like the rank (e.g., rank=8).

- Save and Apply: Load the trained LoRA weights into the pipeline for inference (in Diffusers, load_lora_weights), or merge them into the base model’s weights (fuse_lora).

Textual Inversion

What is Textual Inversion?

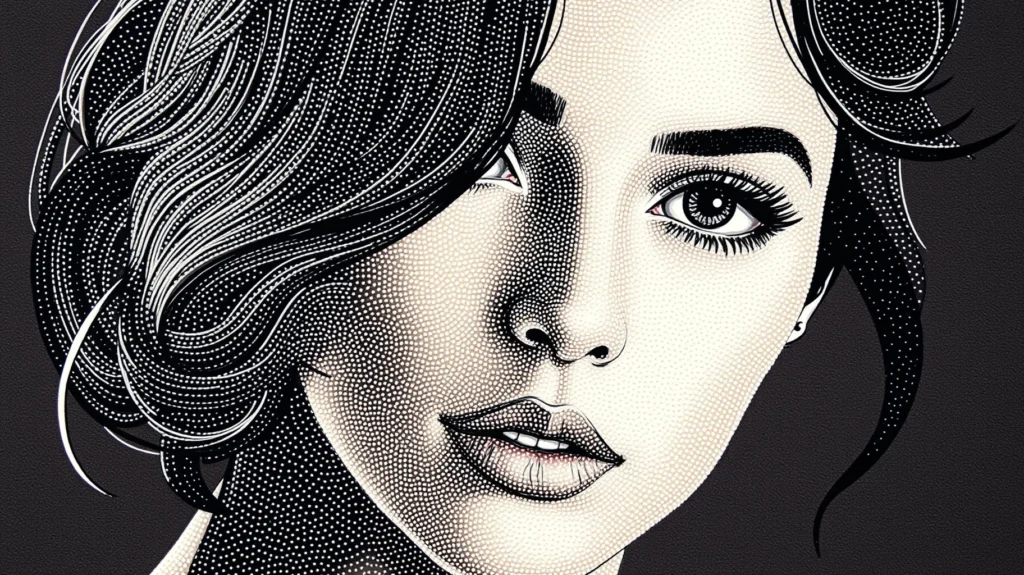

Textual Inversion teaches Stable Diffusion new word associations, for example linking “zebra unicorn” to a specific look. Unlike LoRA, it leaves the model’s weights untouched and learns only a new text embedding for the concept.

How It Works

Textual Inversion trains a new token (word or phrase) in the model’s vocabulary to represent custom concepts. This token becomes a shorthand for generating specific images.
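The core idea can be shown with a toy embedding table in plain Python. It mirrors what a typical textual inversion script does, which in Transformers terms is tokenizer.add_tokens(...) followed by text_encoder.resize_token_embeddings(...). First, add a placeholder token and initialize its vector from an existing word. Then, during training, update only that vector while every other embedding stays frozen. The class, the two-dimensional vectors, and the gradient values are made up for illustration.

```python
class ToyEmbeddingTable:
    """Maps tokens to embedding vectors; only newly added tokens are trainable."""

    def __init__(self, vocab):
        self.vectors = {tok: list(vec) for tok, vec in vocab.items()}
        self.trainable = set()

    def add_token(self, token, init_from):
        # The new pseudo-word starts as a copy of a related existing word
        self.vectors[token] = list(self.vectors[init_from])
        self.trainable.add(token)

    def sgd_step(self, grads, lr):
        # Gradient descent on trainable tokens only; gradients for the
        # original vocabulary are ignored, so it stays frozen
        for tok, grad in grads.items():
            if tok in self.trainable:
                self.vectors[tok] = [v - lr * g for v, g in zip(self.vectors[tok], grad)]


table = ToyEmbeddingTable({"cat": [1.0, 2.0], "dog": [0.0, 1.0]})
table.add_token("<my_concept>", init_from="cat")
table.sgd_step({"<my_concept>": [1.0, 1.0], "cat": [1.0, 1.0]}, lr=0.5)
print(table.vectors["<my_concept>"])  # [0.5, 1.5]
print(table.vectors["cat"])           # [1.0, 2.0], unchanged
```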

Use Cases

- Branding and Personalization: Embedding logos or brand-specific styles into image generation.

- Character Design: Teaching the model consistent visual cues for unique characters.

Example Workflow

- Curate and Annotate Data: Gather images that represent the new concept and annotate them with simple descriptions.

- Train a Token: Use tools like Hugging Face’s Diffusers to train embeddings for a token (e.g., <my_concept>).

- Generate Outputs: Incorporate the new token into prompts like:

```text
<my_concept> in a futuristic cityscape
```

Advantages

- Rapid adaptation to specific themes or styles.

- Retains the general versatility of the model.

Custom Token Training

What is Custom Token Training?

Custom token training blends LoRA and Textual Inversion, teaching Stable Diffusion both structural adjustments and specific word associations. It involves more detailed fine-tuning but yields the most tailored results.

How It Works

- New tokens are defined and trained with embeddings.

- Model weights are adjusted alongside the vocabulary, allowing both learned words and structural updates.

Use Cases

- Creating highly specialized models for film production, game design, or scientific visualization.

- Combining unique styles (from abstract art to realism) within a single model.

Workflow Steps

- Prepare Training Data: Label images with the concepts they represent (e.g., mountain_sunset, alien_landscape).

- Train Combined Tokens: Use frameworks that support dual training (e.g., custom PyTorch implementations or enhanced DreamBooth workflows).

- Evaluate Output Diversity: Test how well the tokens integrate into broader prompt combinations.

Comparison of Techniques

| Technique | Focus | Training Time | Best For | Limitations |
|---|---|---|---|---|
| LoRA | Lightweight structural updates | Low | Efficient style training for lightweight models | May lack deep contextual changes |
| Textual Inversion | Embedding new tokens | Medium | Teaching specific concepts via new prompts | Limited to textual additions |
| Custom Token Training | Combined vocab and model tuning | High | Specialized or professional use cases | Demands high-quality datasets |

Choosing the Right Technique

- Use LoRA for quick, memory-efficient tweaks.

- Pick Textual Inversion for custom token or style embeddings.

- Go with Custom Token Training for comprehensive, fine-grained model adaptations.

Optimizing Outputs: Strategies for Improving Image Quality and Coherence

Fine-tuning Stable Diffusion is only half the journey—optimizing the outputs for sharpness, realism, and thematic consistency is just as crucial. Let’s explore strategies that will help you refine your generated images for quality and coherence.

Prompt Engineering: Crafting the Perfect Input

Why Prompts Matter

The prompt is the cornerstone of image generation. A vague or overly complex prompt can result in poor-quality outputs. A well-crafted prompt guides the model effectively.

Tips for Better Prompts

- Be Specific: Include details about style, lighting, mood, and subject. For example: “A vibrant sunset over a snowy mountain, hyper-realistic, golden hour lighting, cinematic style.”

- Use Modifiers Wisely: Words like “hyper-detailed,” “4K,” “photorealistic,” or “surreal” clarify artistic intent.

- Iterate: Experiment with phrasing and structure. Slight changes can yield drastically different results.

Avoid Overloading

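Too many details can confuse the model. Length also has a hard limit: in the standard Diffusers pipeline, the CLIP text encoder reads at most 77 tokens and truncates anything beyond that. Strike a balance between clarity and brevity.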
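A small helper can keep prompts structured: the subject first, then a capped list of de-duplicated modifiers. This is a hypothetical sketch. The cap of six modifiers is an arbitrary illustrative default, not a rule from any library.

```python
def build_prompt(subject, modifiers, max_modifiers=6):
    """Join a subject with unique modifiers, keeping at most max_modifiers."""
    seen = set()
    kept = []
    for mod in modifiers:
        key = mod.strip().lower()
        if key and key not in seen:  # drop blanks and case-insensitive repeats
            seen.add(key)
            kept.append(mod.strip())
    return ", ".join([subject.strip()] + kept[:max_modifiers])


print(build_prompt(
    "A vibrant sunset over a snowy mountain",
    ["hyper-realistic", "golden hour lighting", "cinematic style", "Hyper-realistic"],
))
# A vibrant sunset over a snowy mountain, hyper-realistic, golden hour lighting, cinematic style
```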

Adjusting Sampling Parameters

What Are Sampling Parameters?

Sampling parameters control how the model generates an image from noise. They play a vital role in quality and coherence. The key parameters include:

- Steps: Number of iterations to refine the image.

- Guidance Scale (CFG, short for classifier-free guidance): How closely the model follows your prompt.

- Sampling Algorithm: The method used to denoise and generate the image.
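The guidance scale has a precise meaning. With classifier-free guidance, the denoising network predicts noise twice at each step. The first prediction uses no prompt, or the negative prompt if one is given; the second uses your prompt. The sampler then extrapolates from the first prediction toward the second. Diffusers’ Stable Diffusion pipeline combines them as noise_uncond + guidance_scale * (noise_text - noise_uncond). The plain-Python version below shows just that combination on toy vectors.

```python
def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Combine unconditional and prompt-conditioned noise predictions.

    guidance_scale = 1 returns the conditional prediction unchanged;
    larger values push further in the direction the prompt suggests.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


print(classifier_free_guidance([0.0, 1.0], [1.0, 1.0], 7.5))  # [7.5, 1.0]
```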

How to Optimize Parameters

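- Adjust Steps for Complexity: Start around 50 steps (the default in Diffusers’ Stable Diffusion pipeline) and go up to 100 if needed. Higher counts (e.g., 150) may improve intricate details, but gains diminish while processing time keeps growing.

- Tweak CFG Scale:
  - A lower CFG (5–8) allows more creative interpretation; Diffusers defaults to 7.5.
  - A higher CFG (12–15) makes the model follow your prompt more closely, though very high values can produce oversaturated, harsh images.

- Experiment with Samplers. Common options include:
  - DDIM: Balanced for quality and speed.
  - Euler A (Euler Ancestral): Often produces smooth, natural-looking results. Because it adds fresh noise at every step, images keep changing as you add steps.
  - DPM++ 2M Karras: A popular choice for photorealism that reaches good quality in relatively few steps.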

Example Workflow

Test multiple combinations of steps, CFG scale, and samplers. Use tools like Automatic1111’s web UI for convenient tweaking.
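A systematic sweep keeps those comparisons fair. The sketch below enumerates every combination of settings and gives all of them the same seed, so only the parameters differ between images. num_inference_steps and guidance_scale match the argument names of Diffusers’ pipeline call. The "sampler" entry is only a label you would map yourself to a scheduler class, such as DDIMScheduler, EulerAncestralDiscreteScheduler, or DPMSolverMultistepScheduler (with use_karras_sigmas=True for the Karras variant). The function name is hypothetical.

```python
from itertools import product


def parameter_grid(steps, cfg_scales, samplers, seed=42):
    """Every combination of settings, all sharing one seed so only the
    parameters differ between the resulting images."""
    return [
        {"num_inference_steps": s, "guidance_scale": g, "sampler": name, "seed": seed}
        for s, g, name in product(steps, cfg_scales, samplers)
    ]


grid = parameter_grid([30, 50], [7.5, 12.0], ["DDIM", "Euler A", "DPM++ 2M Karras"])
print(len(grid))  # 12
print(grid[0])    # {'num_inference_steps': 30, 'guidance_scale': 7.5, 'sampler': 'DDIM', 'seed': 42}
```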

Enhancing Composition and Coherence

Prompt Chaining

Break complex concepts into multiple prompts and combine them. For instance:

- Generate a background image: “A dense forest with sunlight filtering through the leaves, photorealistic.”

- Overlay a foreground element: “A majestic white stag standing in the forest, ethereal glow.”

- Merge these layers using external tools like Photoshop or GIMP.

Negative Prompts

Use negative prompts to exclude unwanted elements. For example:

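Prompt: “A serene lake under the moonlight.” Negative prompt: “low resolution, blurry, noisy.” The negative prompt is a separate input, not text appended to the main prompt. Diffusers takes it through the negative_prompt argument, and most web UIs provide a dedicated field for it.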

Aspect Ratios

Vary aspect ratios to suit your subject. Use wide frames (16:9) for landscapes or portrait ratios (3:4) for characters.
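When choosing a ratio, keep the total pixel count close to what the model was trained on, such as 512×512 for Stable Diffusion v1.x. Also make both sides divisible by 8, which Diffusers’ Stable Diffusion pipeline requires. This hypothetical helper computes such dimensions. Many users round to multiples of 64 instead, which the multiple parameter allows.

```python
import math


def dims_for_aspect(ratio_w, ratio_h, pixel_budget=512 * 512, multiple=8):
    """(width, height) close to pixel_budget for the given aspect ratio,
    each side rounded to the nearest multiple of `multiple`."""
    height = math.sqrt(pixel_budget * ratio_h / ratio_w)
    width = height * ratio_w / ratio_h
    return (round(width / multiple) * multiple, round(height / multiple) * multiple)


print(dims_for_aspect(16, 9))  # (680, 384): wide frame for landscapes
print(dims_for_aspect(3, 4))   # (440, 592): portrait frame for characters
```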

Post-Processing for Image Refinement

Upscaling for Better Resolution

Stable Diffusion v1.x generates images at a native resolution of 512×512 pixels. Upscaling improves clarity and detail:

- ESRGAN: An open-source AI-based upscaler for realism.

- Topaz Gigapixel AI: Offers professional-grade results for larger resolutions.

Image Editing Tools

Refine outputs further with image editors:

- Photoshop or GIMP for detailed adjustments.

- Affinity Photo for budget-friendly, professional editing.

AI-Based Enhancements

Use AI tools for specific fixes:

- Remini for enhancing facial details.

- Luminar AI for color grading and style matching.

Ensuring Consistency Across Outputs

Reproducibility with Seed Values

Set seed values to ensure consistent outputs for the same prompt. This is crucial for projects requiring visual uniformity, such as branding or sequential illustrations.
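In Diffusers, you supply the seed through a seeded PyTorch generator, e.g. pipeline(prompt, generator=torch.Generator("cuda").manual_seed(42)). The standard library alone can show why this works. A seed fixes the random starting noise. With the same prompt, model, sampler, and settings, the rest of generation follows from that noise, up to small numerical differences across hardware. The function below is a stand-in for a sampler’s initial latent noise, not part of any library.

```python
import random


def initial_noise(seed, size=8):
    """Stand-in for the random latent noise a diffusion sampler starts from."""
    rng = random.Random(seed)  # independent generator, fully determined by the seed
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = initial_noise(1234)
same_b = initial_noise(1234)
different = initial_noise(1235)
print(same_a == same_b)     # True: same seed, same starting noise
print(same_a == different)  # False: a new seed gives a new starting point
```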

Batch Generation

Generate multiple variations of an image by slightly altering the prompt. Then, select the best result or combine elements from different versions.

Fine-Tune for Style

Train or fine-tune the model to produce consistent outputs aligned with your preferred style or aesthetic. Techniques like LoRA and Textual Inversion (explored earlier) are invaluable here.

Debugging Subpar Outputs

Common Issues and Fixes

- Blurriness: Increase sampling steps or use a higher-resolution base model.

- Lack of Detail: Adjust the CFG scale or add detail-rich descriptors like “highly intricate.”

- Artifacts or Noise: Use negative prompts or switch to a different sampler.

Testing the Model

Iteratively test outputs and keep notes on the best-performing parameter combinations for different styles or use cases.

Conclusion: Unlock Your Creative Potential

Fine-tuning Stable Diffusion transforms generic models into tailored artistic tools. By curating quality data, leveraging advanced techniques, and deploying responsibly, you can push the boundaries of AI creativity. Whether you’re an artist, designer, or developer, fine-tuning empowers you to turn unique visions into reality.