Data labeling is the foundation of successful AI model training. Whether you’re building a natural language processing (NLP) application, a computer vision system, or a recommendation engine, high-quality labeled data is essential for accurate and reliable performance.

This article explores the best practices for data labeling to help you optimize your AI projects.

Why Data Labeling is Critical for AI

The Role of Data Labeling in Machine Learning

AI models learn from labeled datasets by identifying patterns that correspond to the labels provided. For instance:

- In computer vision, labeled images (e.g., “cat” or “dog”) teach the model to recognize objects.

- In NLP, labeled text (e.g., sentiment: “positive” or “negative”) helps the model understand language nuances.

Without accurate labels, AI models struggle to generalize, resulting in poor real-world performance.

Challenges of Poor Data Labeling

Inadequate labeling leads to:

- Bias in predictions: Flawed or unbalanced labels skew the model’s learning.

- Decreased accuracy: Noisy or inconsistent data confuses the training process.

- Wasted resources: Training on poorly labeled data results in additional costs for retraining and error correction.

Best Practices for Effective Data Labeling

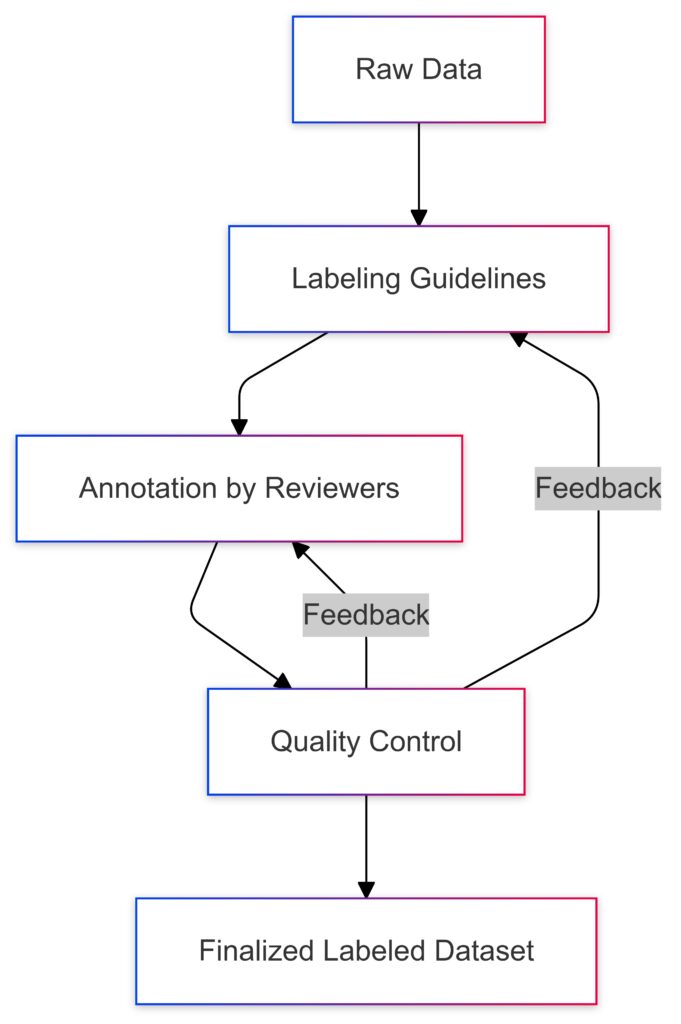

Define Clear Labeling Guidelines

Establish unambiguous labeling instructions to ensure consistency. Include:

- Label definitions: Describe each category clearly. For example, “positive sentiment” includes phrases like “I loved it,” but not “It’s okay.”

- Edge cases: Define how to handle ambiguous situations. For instance, decide up front how sarcastic comments should be labeled in a sentiment analysis task.

Clear guidelines reduce confusion among labelers and improve data quality.

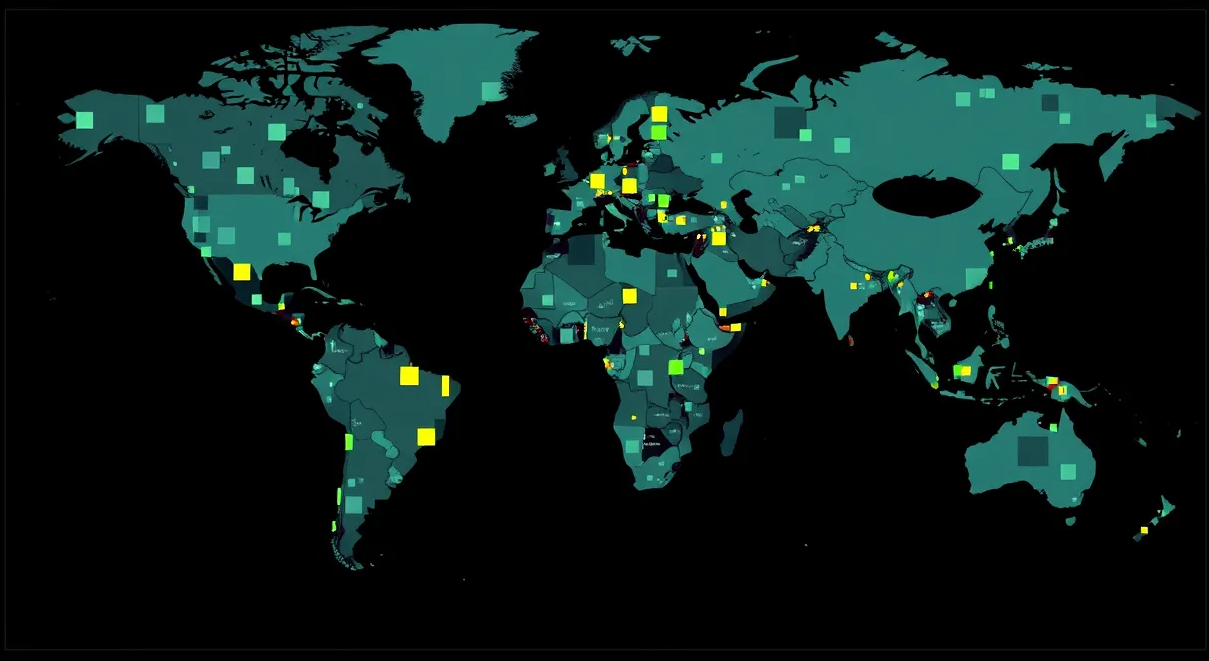

Visualizing a systematic data labeling process to ensure consistency and accuracy.

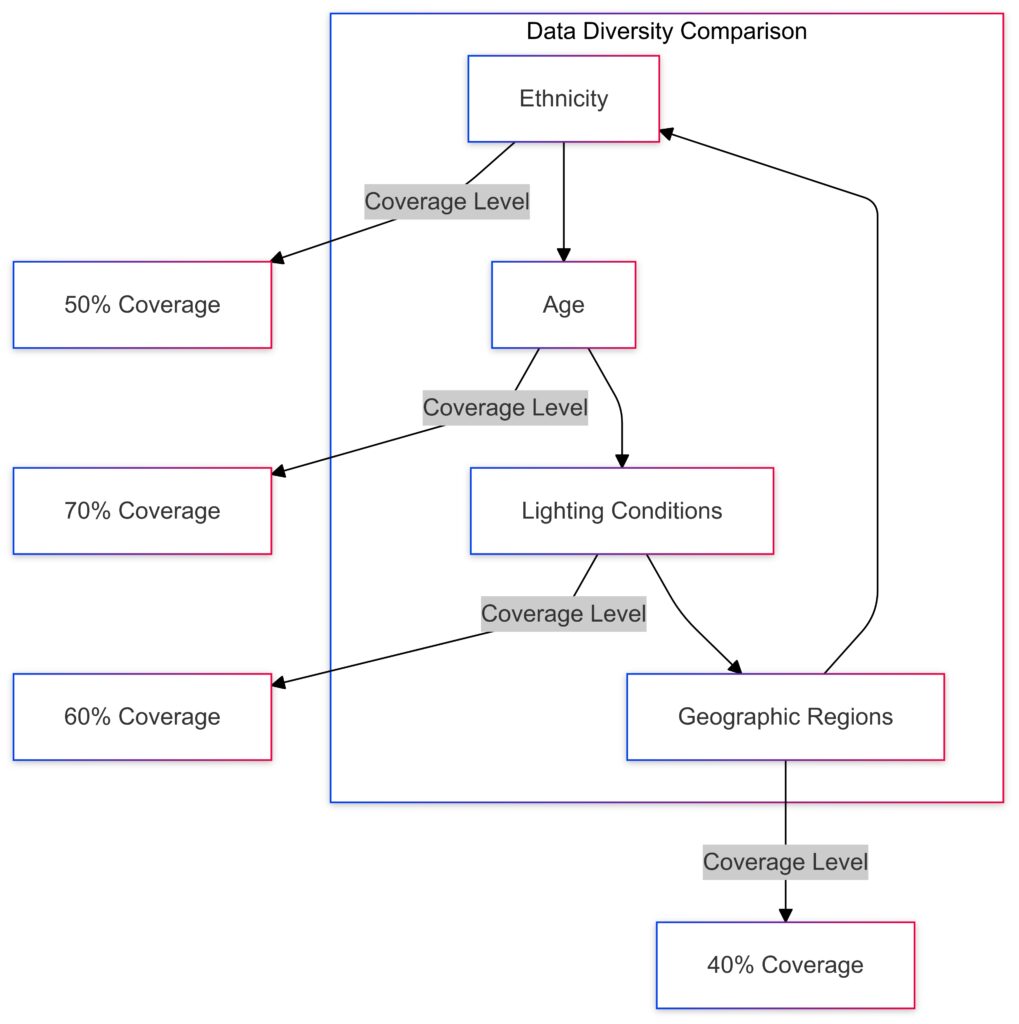

Use Diverse and Representative Data

Ensure your dataset reflects the real-world diversity of the task. For example:

- In a facial recognition project, include images across different ethnicities, ages, and lighting conditions.

- For speech recognition, gather audio samples from speakers with varying accents and dialects.

This reduces bias and improves the model’s performance across diverse scenarios.

Examining data diversity to identify coverage gaps for balanced training datasets.
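A coverage check like this can be sketched in a few lines of Python. This is a minimal illustration, assuming each sample carries a metadata field (the hypothetical `accent` key here) and that you can list the values you expect to see. The function name `coverage_report` and the default 10% threshold are illustrative assumptions, not an established standard.

```python
from collections import Counter

def coverage_report(records, attribute, expected_values, min_share=0.10):
    """Report each expected value's share of the dataset and flag coverage gaps.

    `min_share` is an illustrative threshold (an assumption); pick one that
    fits your task.
    """
    counts = Counter(record[attribute] for record in records)
    total = len(records)
    report = {}
    for value in expected_values:
        # Counter returns 0 for values that never appear in the data.
        share = counts[value] / total if total else 0.0
        report[value] = {
            "count": counts[value],
            "share": share,
            "gap": share < min_share,
        }
    return report

if __name__ == "__main__":
    # Hypothetical speech samples tagged with the speaker's accent.
    samples = (
        [{"accent": "us"}] * 7
        + [{"accent": "uk"}] * 2
        + [{"accent": "indian"}] * 1
    )
    expected = ["us", "uk", "indian", "australian"]
    for value, stats in coverage_report(samples, "accent", expected, 0.15).items():
        print(value, stats)
```

Listing the expected values explicitly matters: a group that is entirely missing from the dataset would otherwise never show up in the counts.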

Invest in Quality Assurance

Implement a robust quality control process to catch errors early:

- Consensus labeling: Have multiple labelers annotate the same data and choose the majority label.

- Spot-checking: Randomly review samples to identify inconsistencies.

- Audit trails: Maintain logs of who labeled what and how disputes were resolved.
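As a rough illustration of consensus labeling, the following Python sketch picks the majority label and sends ties or low-agreement items back for review. The function name `consensus_label` and the 0.6 agreement threshold are assumptions made for this example.

```python
from collections import Counter

def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement) for one item.

    The label is None when the vote is tied or agreement falls below
    `min_agreement`, signalling that the item needs a reviewer.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    ranked = Counter(votes).most_common()
    top_label, top_count = ranked[0]
    agreement = top_count / len(votes)
    tied = len(ranked) > 1 and ranked[1][1] == top_count
    if tied or agreement < min_agreement:
        return None, agreement
    return top_label, agreement

if __name__ == "__main__":
    print(consensus_label(["cat", "cat", "dog"]))  # clear majority
    print(consensus_label(["cat", "dog"]))         # tie, goes to review
```

Items that come back with `None` are natural candidates for spot-checking, and recording the votes alongside the final decision gives you the start of an audit trail.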

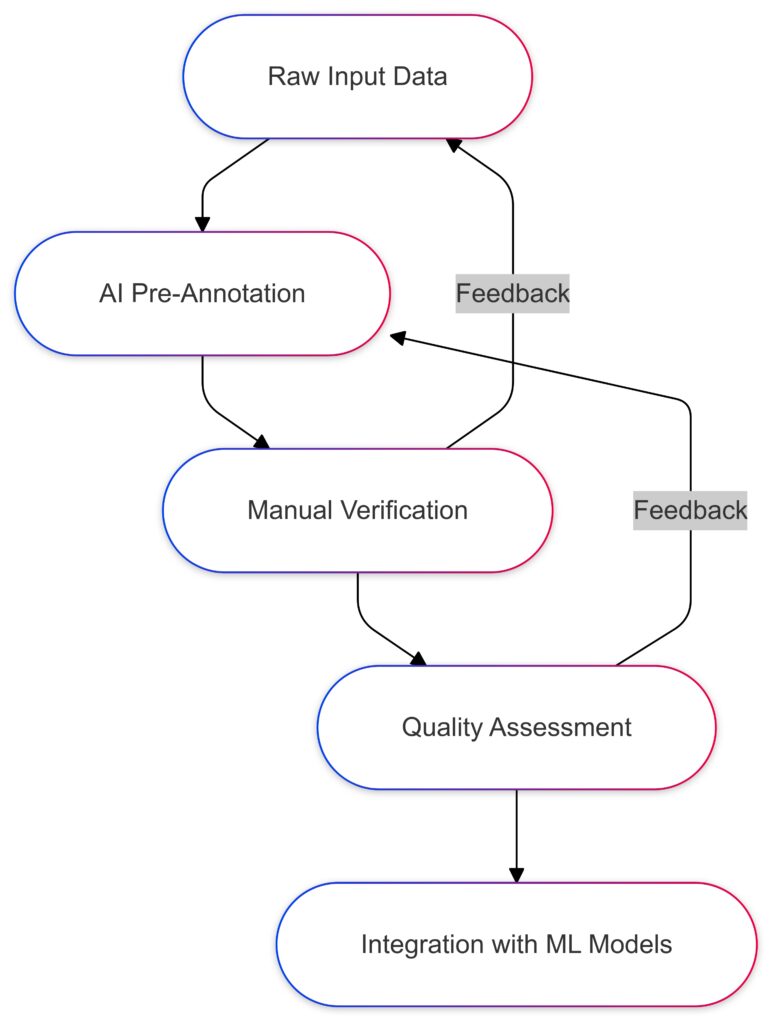

Leverage Labeling Tools and Automation

Adopt modern tools to streamline the process:

- Annotation platforms like Labelbox, Prodigy, or Amazon SageMaker Ground Truth provide intuitive interfaces for labeling tasks.

- Semi-automated tools use pre-trained models to provide initial labels, which human labelers refine. For example, bounding boxes in object detection can be pre-drawn by AI.

Depicting how semi-automated tools streamline the data annotation process.
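The pre-label-then-refine loop can be sketched as follows. This is a simplified illustration rather than how any particular platform works: `prelabels` stands in for a model’s initial labels, `corrections` for the changes human labelers made, and the function name and the `source` values are hypothetical.

```python
def apply_human_review(prelabels, corrections):
    """Merge model pre-labels with human corrections and track provenance.

    prelabels:   {item_id: label proposed by the model}
    corrections: {item_id: label chosen by a human reviewer}
    Returns the final labels and the fraction of pre-labels that were changed.
    """
    final = {}
    changed = 0
    for item_id, model_label in prelabels.items():
        human_label = corrections.get(item_id, model_label)
        if human_label != model_label:
            changed += 1
            final[item_id] = {"label": human_label, "source": "human_corrected"}
        else:
            final[item_id] = {"label": model_label, "source": "model_confirmed"}
    correction_rate = changed / len(final) if final else 0.0
    return final, correction_rate

if __name__ == "__main__":
    prelabels = {"img1": "car", "img2": "truck", "img3": "car"}
    corrections = {"img2": "bus"}
    final, rate = apply_human_review(prelabels, corrections)
    print(final)
    print(f"correction rate: {rate:.2f}")
```

Tracking the correction rate over time gives a rough signal of whether the pre-labeling model is good enough to keep saving labelers’ time.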

Train and Monitor Labelers

Provide detailed onboarding for human labelers, emphasizing:

- Task objectives and dataset importance.

- How to use annotation tools effectively.

Continuously monitor their work for trends in errors or inconsistencies. Feedback loops can enhance labeler accuracy over time.

Balancing Accuracy and Efficiency

Prioritize Critical Data

Not all data requires equal attention. Focus on edge cases or frequently misclassified instances to maximize labeling impact.

Example: For an autonomous vehicle project, prioritize labeling images with pedestrians in unusual scenarios, such as jaywalking.

Use Active Learning

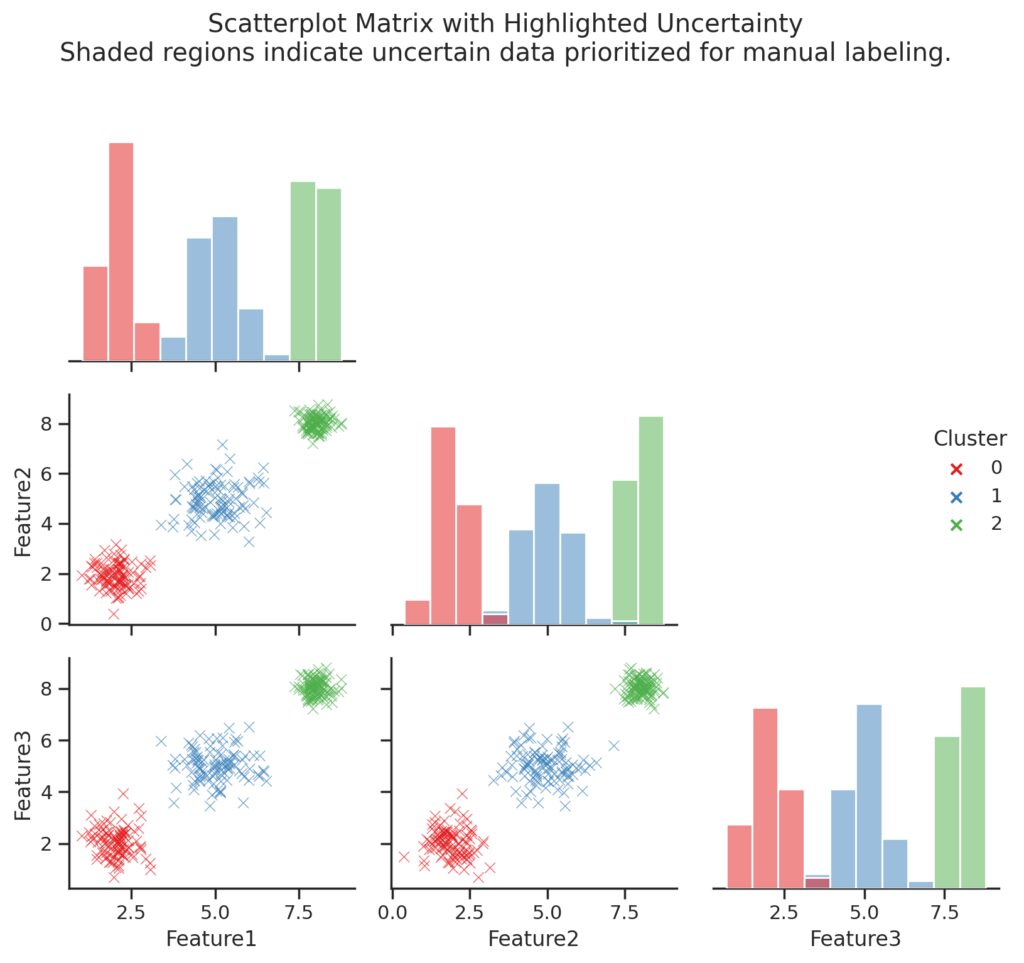

Active learning involves training a model on a small labeled dataset, then using its predictions on unlabeled data to identify the samples it is least certain about. Labeling these samples first usually improves the model with fewer labels than labeling at random.

For instance, an image classification model might struggle to distinguish wolves from huskies. Focus on labeling the ambiguous images where the model shows low confidence.

In the figure, the shaded regions mark areas of uncertainty, where active learning prioritizes manual labeling. Focusing labeling effort on this ambiguous data is what lets the model improve efficiently.
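One common way to pick uncertain samples is entropy-based uncertainty sampling, sketched here in Python. The example assumes you already have the model’s predicted class probabilities for each unlabeled sample. The function names and the wolf/husky probabilities are made up for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy of a probability distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, k):
    """Return the ids of the k samples whose predictions are most uncertain.

    predictions: {sample_id: [p_class_0, p_class_1, ...]}
    """
    ranked = sorted(
        predictions.items(),
        key=lambda item: entropy(item[1]),
        reverse=True,
    )
    return [sample_id for sample_id, _ in ranked[:k]]

if __name__ == "__main__":
    # Hypothetical [p(wolf), p(husky)] outputs for three unlabeled images.
    predictions = {
        "img1": [0.98, 0.02],
        "img2": [0.55, 0.45],
        "img3": [0.70, 0.30],
    }
    print(select_for_labeling(predictions, 2))
```

Entropy is only one possible uncertainty measure; least-confidence or margin-based scores would slot into the same `key` function.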

Scale Incrementally

Start small, test results, and expand the labeled dataset iteratively. This ensures early mistakes don’t scale across the entire dataset.

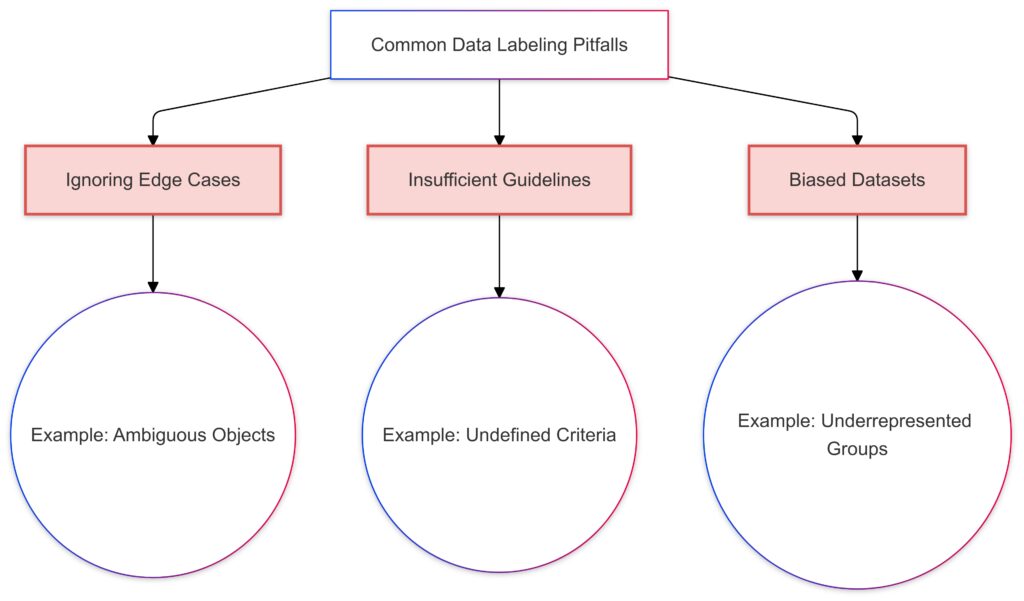

Common Pitfalls in Data Labeling

Ignoring Context

Labelers need sufficient context to make informed decisions. For example, in sentiment analysis, the sentence “Thanks a lot” can be positive or sarcastic, depending on the surrounding text.

Overlooking Bias

Bias in labeled data perpetuates bias in AI models. For instance, a facial recognition system trained mostly on light-skinned individuals may underperform on darker-skinned individuals.

To address this, review datasets for underrepresented groups and label additional data as needed.

Inadequate Tooling

Manual labeling without appropriate tools leads to inefficiency and inconsistency. Use tools designed for your specific task, such as video annotation platforms for object tracking or audio labeling software for speech projects.

Measuring Data Labeling Success

Evaluate Label Quality

Assess the quality of your labeled data using metrics like:

- Inter-annotator agreement (IAA): Measures consistency between labelers, commonly with statistics such as Cohen’s kappa or Krippendorff’s alpha. A higher IAA generally indicates clearer guidelines and better-trained labelers.

- Model performance: If the model underperforms, revisit the labeled dataset to check for issues.
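For two labelers, one widely used IAA statistic is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement. The following Python sketch computes it from two label lists. The function name and sample labels are illustrative.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used a single identical label for everything.
        return 1.0
    return (observed - expected) / (1 - expected)

if __name__ == "__main__":
    annotator_a = ["pos", "pos", "neg", "neg"]
    annotator_b = ["pos", "neg", "neg", "neg"]
    print(cohens_kappa(annotator_a, annotator_b))
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. For more than two labelers, measures such as Fleiss’ kappa or Krippendorff’s alpha are the usual choices.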

Continuous Improvement

Regularly update labeling guidelines and tools based on project feedback. As your dataset grows, refine your processes to maintain quality and adapt to evolving model requirements.

Advanced Techniques in Data Labeling: Beyond Traditional Methods

While traditional data labeling remains essential for training accurate AI models, advanced techniques like zero-shot learning, self-supervised learning, and synthetic data generation are transforming how datasets are created and utilized. These methods reduce dependency on manual labeling and improve scalability.

Zero-Shot Learning: Training Without Task-Specific Labels

What is Zero-Shot Learning?

Zero-shot learning (ZSL) enables AI models to perform tasks they were not explicitly trained for by leveraging pre-existing knowledge. For instance, a model trained on known animal species might correctly classify a species it has never seen labeled examples of, based on that species’ semantic relationships to the known classes.

How It Works

ZSL uses semantic embeddings, like word vectors, to connect known and unknown classes. By interpreting relationships between concepts, the model generalizes its understanding to new, unseen data.
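The idea can be illustrated with a toy Python sketch. It assumes every class, including one with no training examples, is described by a small hand-made attribute vector, and that a sample has already been mapped into the same space. The vectors, attribute names, and function names are invented for this example; a real system would use learned embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def zero_shot_classify(sample_embedding, class_embeddings):
    """Return the class whose semantic embedding is closest to the sample."""
    return max(
        class_embeddings,
        key=lambda name: cosine(sample_embedding, class_embeddings[name]),
    )

if __name__ == "__main__":
    # Made-up attribute space: [has_stripes, has_hooves, is_feline].
    class_embeddings = {
        "horse": [0.0, 1.0, 0.0],  # seen during training
        "tiger": [1.0, 0.0, 1.0],  # seen during training
        "zebra": [1.0, 1.0, 0.0],  # unseen: described only by attributes
    }
    sample = [0.9, 0.8, 0.1]  # hypothetical attributes predicted for an image
    print(zero_shot_classify(sample, class_embeddings))
```

The unseen class can be predicted only because its description lives in the same space as the known classes, which is why the quality of those semantic relationships matters so much.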

Applications

- Text Classification: Automatically labeling emails as “urgent” or “spam” without predefined examples.

- Image Recognition: Identifying rare diseases in medical images using a general understanding of symptoms.

- Recommendation Systems: Suggesting new products to users based on preferences without specific labels.

Benefits and Challenges

- Pros: Reduces the need for extensive labeled datasets; highly adaptable to dynamic environments.

- Cons: Relies on the quality of semantic relationships, which may not always be accurate or intuitive.

Self-Supervised Learning: Letting Data Label Itself

What is Self-Supervised Learning?

Self-supervised learning (SSL) is a form of unsupervised learning in which the training labels are derived from the data itself. The model is trained on pretext tasks, defined so that solving them requires learning meaningful representations, without any external labels.

How It Works

For example, in computer vision, an SSL model might predict the orientation of an image (e.g., rotated by 90°) as its pretext task. By solving such tasks, the model learns features like edges, textures, or shapes that are useful for downstream applications.
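To make the rotation pretext task concrete, the following Python sketch turns one unlabeled image into four labeled training examples. It represents an image as a plain nested list to stay dependency-free, and the function names are illustrative. A real pipeline would operate on image tensors and feed the pairs to a classifier.

```python
def rotate90(image):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def rotation_pretext_examples(image):
    """Create (rotated_image, label) pairs from one unlabeled image.

    The label is the number of clockwise quarter-turns (0 to 3), so it is
    derived from the data itself and needs no human annotation.
    """
    examples = []
    current = [list(row) for row in image]
    for quarter_turns in range(4):
        examples.append((current, quarter_turns))
        current = rotate90(current)
    return examples

if __name__ == "__main__":
    tiny_image = [[1, 2],
                  [3, 4]]
    for rotated, label in rotation_pretext_examples(tiny_image):
        print(label, rotated)
```

A model trained to predict `label` from `rotated` has to pick up on the image’s structure, which is where the reusable features come from.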

Applications

- Language Models: Pretrained models like GPT use SSL, predicting the next word in a sentence to learn language structure.

- Vision Tasks: Models like SimCLR use contrastive learning, training the model to produce similar representations for two augmented views of the same image and dissimilar ones for different images.

- Audio Processing: Speech models infer missing segments in audio files to learn linguistic features.

Benefits and Challenges

- Pros: Reduces the need for labeled data; achieves state-of-the-art performance in many applications.

- Cons: Computationally expensive and requires substantial fine-tuning for specific tasks.

Synthetic Data Generation: Creating Custom Datasets

What is Synthetic Data?

Synthetic data refers to artificially generated data that mimics real-world data. It’s particularly useful for tasks where gathering labeled data is expensive, time-consuming, or infeasible.

How It Works

Synthetic data is created using algorithms or simulations. For example:

- 3D Rendering: Generating labeled images for autonomous vehicle training with varied lighting, weather, and traffic conditions.

- Text Augmentation: Creating new textual data by paraphrasing or modifying existing sentences.

- Generative Models: Tools like GANs (Generative Adversarial Networks) create realistic images or audio for training.
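The simplest algorithmic form of synthetic data is template filling, sketched here in Python for a sentiment task. The templates, item list, and function name are invented for this example. It is far cruder than 3D rendering or a generative model, but it shows the key property that every generated example arrives with its label already attached.

```python
import random

# Hypothetical templates; the label is known because we choose the template.
TEMPLATES = {
    "positive": ["I loved the {item}.", "The {item} was excellent."],
    "negative": ["I hated the {item}.", "The {item} was terrible."],
}
ITEMS = ["movie", "service", "food"]

def generate_synthetic_reviews(n, seed=0):
    """Generate n labeled synthetic reviews, reproducibly for a given seed."""
    rng = random.Random(seed)
    labels = sorted(TEMPLATES)
    examples = []
    for _ in range(n):
        label = rng.choice(labels)
        template = rng.choice(TEMPLATES[label])
        examples.append({"text": template.format(item=rng.choice(ITEMS)), "label": label})
    return examples

if __name__ == "__main__":
    for example in generate_synthetic_reviews(5):
        print(example)
```

Data produced this way is very regular, so it illustrates the overfitting risk as well: a model trained only on these sentences would learn the templates rather than the language.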

Applications

- Healthcare: Synthetic medical images help train models without exposing patient data.

- Autonomous Vehicles: Simulated driving environments teach cars to recognize rare scenarios like jaywalking or accidents.

- Retail: Virtual customer data helps optimize recommendation systems without using real-world purchase histories.

Benefits and Challenges

- Pros: Scales easily, addresses data privacy concerns, and generates data for rare edge cases.

- Cons: May lack the variability and noise of real-world data, risking overfitting to synthetic patterns.

Combining Techniques for Optimal Results

The real power of these advanced methods lies in combining them with one another and with traditional labeling. For example:

- ZSL + Synthetic Data: Use synthetic examples to improve zero-shot learning performance by offering a bridge between known and unknown classes.

- SSL + Traditional Labels: Fine-tune a self-supervised model with a small set of high-quality labeled data for maximum accuracy.

- Synthetic + Real Data: Blend synthetic data with real-world examples to ensure robustness and generalizability.

Choosing the Right Approach

When to Use Advanced Techniques

- Limited Data: Use ZSL or SSL when labeled data is scarce but related knowledge exists.

- Cost Constraints: Opt for synthetic data when labeling costs are prohibitive.

- Edge Cases: Generate synthetic data for rare or dangerous scenarios that are hard to capture in real life.

When Traditional Labeling Is Better

- Simple Tasks: For straightforward tasks like binary classification, traditional labeling may still be the most efficient option.

- Critical Accuracy Needs: In high-stakes scenarios, like legal or medical decisions, labels produced and reviewed by human experts remain the more reliable choice.

Incorporating advanced techniques into your data labeling strategy can significantly enhance your AI models’ performance and efficiency. Whether you’re looking to reduce costs, improve scalability, or tackle rare edge cases, these approaches offer powerful alternatives to traditional methods.

Tool Comparison: Popular Data Labeling Tools and Platforms

Choosing the right data labeling tool is critical for training accurate and efficient AI models. The ideal platform depends on your project’s complexity, scale, and team requirements. Here’s a detailed comparison of some of the most popular data labeling tools across various parameters.

Labelbox

Overview

Labelbox is a versatile platform offering annotation tools, workflow automation, and collaboration features for image, text, and video labeling. It’s widely used for computer vision and NLP tasks.

Features

- Pre-Built Tools: Supports bounding boxes, polygons, keypoints, segmentation, and text annotations.

- Quality Assurance: Built-in consensus scoring and review features for quality control.

- Data Management: Centralized data storage with version tracking.

- Integration: Compatible with AWS, GCP, and Azure for seamless workflows.

Pros

- User-friendly interface for both beginner and advanced users.

- Customizable workflows for specific project needs.

- Strong quality assurance tools.

Cons

- Pricing can escalate for large-scale projects.

- Limited offline capabilities for sensitive data.

Best For

Teams focused on image and video labeling that need strong quality control.

Amazon SageMaker Ground Truth

Overview

Amazon SageMaker Ground Truth offers managed data labeling with automation features. It’s tightly integrated into the AWS ecosystem, making it an excellent choice for projects already using AWS services.

Features

- Semi-Automated Labeling: Leverages pre-trained models to assist human labelers.

- Scalable Infrastructure: Supports large datasets with dynamic scaling.

- Built-In QA: Automated data quality checks and worker consensus mechanisms.

- Variety of Tasks: Handles images, text, and videos.

Pros

- Seamless integration with AWS tools and services.

- Cost-effective for high-volume projects using automation.

- High scalability and reliability.

Cons

- Requires familiarity with the AWS ecosystem.

- Limited customization compared to standalone platforms.

Best For

Organizations deeply invested in AWS infrastructure needing scalable and cost-efficient labeling solutions.

Scale AI

Overview

Scale AI specializes in high-quality labeling for enterprise-level projects. It provides human-in-the-loop annotations combined with automation to streamline workflows.

Features

- End-to-End Support: Offers a combination of tooling, workforce, and model integration.

- Custom Workflows: Tailored workflows for diverse use cases like LIDAR point clouds, sentiment analysis, and medical imaging.

- Managed Workforce: Access to a network of trained annotators.

Pros

- High-quality annotations with minimal oversight.

- Extensive support for 3D and geospatial data labeling.

- Excellent for projects requiring highly specialized labeling.

Cons

- Premium pricing targeted at large enterprises.

- Limited flexibility for small-scale projects or startups.

Best For

Enterprises handling complex projects like autonomous vehicles or large-scale NLP applications.

SuperAnnotate

Overview

SuperAnnotate is a collaborative annotation platform designed for teams. It supports a wide range of data types, including images, videos, and point clouds.

Features

- Collaboration Tools: Team workflows, task assignments, and comments for efficient teamwork.

- Advanced Analytics: Provides insights into annotation progress and quality metrics.

- Custom Plugins: Supports integration with other ML pipelines.

Pros

- Excellent for team-based annotation tasks.

- Real-time performance tracking and analytics.

- Strong focus on UI and usability.

Cons

- Limited automation compared to platforms like SageMaker.

- Relatively small user community.

Best For

Teams requiring collaboration and project management features in their data labeling process.

Prodigy

Overview

Prodigy is a developer-centric annotation tool ideal for small teams and technical users. It’s known for its efficiency in handling NLP tasks like text classification, named entity recognition, and sentiment analysis.

Features

- Active Learning: Suggests high-value samples for labeling to reduce workload.

- Custom Scripts: Programmable workflows for maximum flexibility.

- Integration: Works seamlessly with Python and ML frameworks like TensorFlow and PyTorch.

Pros

- Highly customizable for technical users.

- Ideal for NLP and small-scale projects.

- Affordable for small teams or startups.

Cons

- Steeper learning curve for non-developers.

- Less extensive support for non-text annotations (e.g., images, video) than dedicated computer vision platforms.

Best For

Developers working on NLP-focused projects or experiments.

Diffgram

Overview

Diffgram is an open-source data labeling and management tool that offers flexibility and control for customized workflows.

Features

- Annotation Types: Supports images, text, and videos.

- Open-Source: Fully customizable for teams with technical expertise.

- Audit Logs: Tracks labeling activity for accountability.

Pros

- Cost-effective for small teams due to open-source availability.

- Full control over workflows and data storage.

- Suitable for industries with strict data privacy requirements.

Cons

- Requires technical expertise to set up and manage.

- Fewer pre-built automation features compared to commercial tools.

Best For

Teams needing high customization and handling sensitive data in secure environments.

Hive Data

Overview

Hive Data provides AI-powered data labeling services for large-scale projects. It’s designed for high-speed annotation with automation features.

Features

- Speed Optimization: AI-assisted workflows significantly reduce turnaround time.

- Specialized Tasks: Strong focus on industries like media, advertising, and e-commerce.

- Managed Workforce: Access to Hive’s team of professional annotators.

Pros

- Extremely fast labeling for high-volume projects.

- Strong industry-specific support.

- Affordable for large datasets.

Cons

- Limited flexibility for smaller-scale or niche projects.

- Reliance on Hive’s workforce may not suit teams preferring in-house annotation.

Best For

Organizations handling large datasets requiring quick turnaround times.

Summary Comparison Table

| Tool | Best For | Key Features | Strengths | Limitations |
|---|---|---|---|---|
| Labelbox | Image/video labeling | Pre-built tools, QA workflows | Easy to use, customizable workflows | Pricing for large projects |
| SageMaker Ground Truth | AWS users, scalable projects | Semi-automated labeling | Seamless AWS integration, cost-efficient | AWS familiarity required |
| Scale AI | Enterprise, autonomous vehicles | End-to-end support | High-quality annotations, LIDAR support | Premium pricing |
| SuperAnnotate | Collaborative team labeling | Team workflows, analytics | Collaboration-friendly, real-time tracking | Limited automation |
| Prodigy | NLP, developer-centric tasks | Active learning, custom scripts | Programmable, affordable | Steep learning curve |
| Diffgram | Open-source, privacy-sensitive data | Custom workflows, audit logs | Fully customizable, cost-effective | Technical expertise required |
| Hive Data | Large datasets, fast labeling | AI-powered, managed workforce | High-speed annotation, industry-specific | Limited flexibility |

Choosing the right data labeling tool depends on your project scale, budget, and requirements. Evaluate these tools based on their compatibility with your workflows and their ability to handle your specific data types and annotation needs.