Introduction to Data Science

Data Science is a game-changing field that blends programming, statistics, and domain expertise to extract insights from data. From healthcare to marketing, industries rely on data-driven decision-making to stay competitive.

With demand for data-savvy professionals skyrocketing, building the right skills is more important than ever. Let’s walk through a roadmap to help you master this field step by step.

Why Python Is Your Best Friend

A Beginner-Friendly Language

Python is loved for its simplicity. Its clean, readable syntax lowers the learning curve, making it a favorite among beginners and experts alike.

Extensive Libraries for Data Science

Popular libraries like Pandas (data manipulation), NumPy (numerical operations), and Matplotlib (visualization) make Python indispensable. These tools streamline workflows and supercharge productivity.

Versatility and Community Support

Python’s flexibility allows you to build prototypes quickly. Plus, its vast community offers tutorials, forums, and open-source projects that are just a click away.

The Power of Statistics & Probability

Core Statistical Concepts

Understanding statistics is like unlocking the language of data. Key concepts such as mean, median, mode, and variance form the backbone of analysis.
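To make these four measures concrete, here is a minimal sketch using Python's built-in `statistics` module. The `orders` list is made-up sample data, chosen so that one outlier shows how the mean and median can disagree.

```python
import statistics

# Hypothetical sample: daily order counts for one week (95 is an outlier).
orders = [12, 15, 15, 18, 20, 22, 95]

mean = statistics.mean(orders)            # pulled upward by the outlier
median = statistics.median(orders)        # middle value, robust to the outlier
mode = statistics.mode(orders)            # most frequent value
variance = statistics.pvariance(orders)   # population variance (spread around the mean)

print(f"mean={mean:.2f} median={median} mode={mode} variance={variance:.2f}")
```

Notice that the mean lands well above the median here, which is a quick hint that the data is skewed.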

Advanced Techniques

Dig deeper into probability theory, such as Bayes’ theorem, and into statistical inference, such as hypothesis testing, to judge whether a trend in the data is real and to make predictions.
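Bayes' theorem is easier to trust once you run the numbers. The sketch below is an illustration only: the function name `posterior` and the prevalence, sensitivity, and false-positive figures are assumptions invented for the example.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test), computed with Bayes' theorem."""
    # P(positive) = P(pos | cond) * P(cond) + P(pos | no cond) * P(no cond)
    evidence = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / evidence

# Hypothetical test: 1% prevalence, 95% sensitivity, 5% false-positive rate.
p = posterior(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(f"{p:.3f}")
```

Even with a fairly accurate test, the probability of actually having the condition after a positive result stays low when the condition is rare.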

Real-World Applications

Statistics empowers you to assess risk, optimize processes, and draw valid conclusions—skills that every Data Scientist needs in their toolkit.

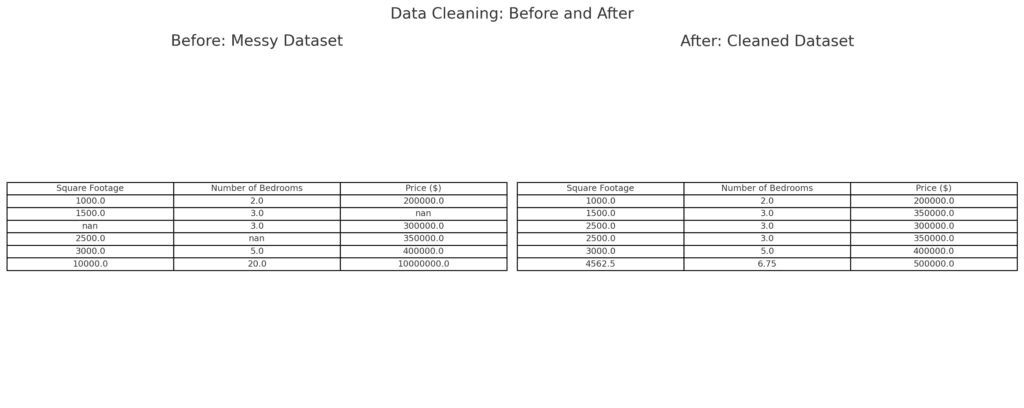

Exploring Data Cleaning & Feature Engineering

Data Cleaning Essentials

Real-world datasets are messy. Learn to handle missing values, outliers, and inconsistent formatting to prepare your data for analysis.

Transforming Raw Data

Feature engineering transforms raw datasets into insightful inputs. Techniques like scaling, encoding, and dimensionality reduction can make or break model performance.
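As a rough illustration of scaling, the sketch below implements two common variants in plain Python: min-max scaling and standardization. The helper names and the `square_feet` values are assumptions for the example, and both helpers assume the input is not constant (otherwise they would divide by zero).

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to mean 0 and scale to (population) standard deviation 1."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]

square_feet = [800, 1200, 1500, 2500]  # hypothetical feature column
scaled = min_max_scale(square_feet)
z_scores = standardize(square_feet)

print(scaled)
print(z_scores)
```

Min-max scaling keeps the original shape of the distribution inside a fixed range, while standardization is usually the safer default when features have very different spreads.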

Tools and Techniques

Tools like Python’s Pandas simplify data cleaning, while feature engineering techniques like one-hot encoding turn categorical data into numeric columns that a model can use.
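Here is a small sketch of what that can look like with Pandas, assuming the library is installed. The DataFrame and its `age` and `city` columns are invented for the example. It fills a missing value, tidies inconsistent text, and then one-hot encodes the cleaned column.

```python
import pandas as pd

# Hypothetical messy data: one missing age, inconsistent city spelling.
df = pd.DataFrame({
    "age": [34, None, 29, 41],
    "city": [" paris", "Lyon ", "PARIS", "lyon"],
})

# Fill the missing age with the median of the known ages.
df["age"] = df["age"].fillna(df["age"].median())

# Normalize stray whitespace and capitalization.
df["city"] = df["city"].str.strip().str.title()

# One-hot encode: one indicator column per distinct city.
encoded = pd.get_dummies(df, columns=["city"])
print(encoded.columns.tolist())
```

Cleaning before encoding matters: without the normalization step, " paris" and "PARIS" would each get their own column.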

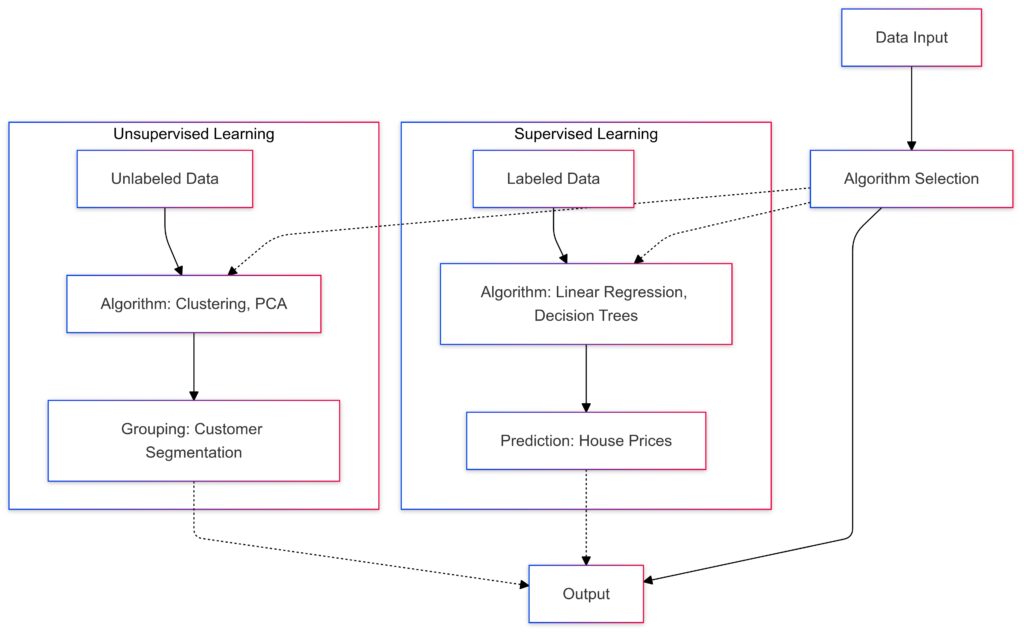

Diving into Machine Learning Basics

Core Concepts

Machine Learning is the engine driving Data Science. Start by exploring supervised (e.g., Linear Regression) and unsupervised learning (e.g., clustering).
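To see supervised learning at its simplest, the sketch below fits a one-feature linear regression with the closed-form least-squares formulas. The function `fit_line` and the house data are assumptions made up for this example. In practice you would usually reach for a library such as scikit-learn.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical labeled data: square footage -> price in thousands.
square_feet = [1000, 1500, 2000, 2500]
price_k = [200, 290, 410, 500]

slope, intercept = fit_line(square_feet, price_k)
predicted = slope * 1800 + intercept  # prediction for an unseen 1800 sq ft house
print(round(slope, 3), round(intercept, 2), round(predicted, 1))
```

The labels (`price_k`) are what make this supervised: the model learns the mapping from inputs to known answers.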

Key Algorithms

Get hands-on with algorithms such as Decision Trees, Random Forests, and Support Vector Machines to understand how machines learn patterns.

Model Evaluation

As you experiment, evaluate your models with metrics like accuracy, precision, and recall to gauge their effectiveness.

Learning Model Evaluation & Optimization

Importance of Evaluation

Building a model is just the beginning. To ensure its reliability, you must test it rigorously. Use cross-validation to check performance across different data splits; it gives a more reliable estimate of how the model generalizes and helps you detect overfitting.
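The splitting logic behind k-fold cross-validation can be sketched in a few lines. The helper `k_fold_indices` is a hypothetical name, and this version uses contiguous folds without shuffling. Real projects typically shuffle first or use a library splitter.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous, non-overlapping folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if not start <= i < start + size]
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, test_idx in folds:
    print(len(train_idx), test_idx)
```

Each sample lands in the test set exactly once, so averaging the per-fold scores uses every row for evaluation without ever scoring a model on data it was trained on.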

Fine-Tuning Models

Hyperparameter tuning, such as adjusting learning rates or tree depths, can significantly improve accuracy. Techniques like Grid Search or Random Search can help identify the best configuration.
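Grid Search is simply "try every combination and keep the best." In the sketch below, `validation_score` is a made-up stand-in for training a model and scoring it on held-out data, and the grid values are arbitrary assumptions.

```python
from itertools import product

def validation_score(learning_rate, max_depth):
    # Hypothetical stand-in for "train a model, then score it on validation data".
    # It peaks at learning_rate=0.1 and max_depth=5 purely for illustration.
    return 1.0 - abs(learning_rate - 0.1) - 0.02 * abs(max_depth - 5)

grid = {
    "learning_rate": [0.01, 0.1, 0.5],
    "max_depth": [3, 5, 8],
}

best_params, best_score = None, float("-inf")
for values in product(*grid.values()):      # every combination in the grid
    params = dict(zip(grid.keys(), values))
    score = validation_score(**params)
    if score > best_score:
        best_params, best_score = params, score

print(best_params, best_score)
```

Random Search follows the same loop but samples a fixed number of combinations instead of enumerating all of them, which scales better when the grid is large.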

Key Metrics

Metrics like F1 score, ROC-AUC, and confusion matrices provide insights into how well your model handles classification tasks or imbalanced data.
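To show how these metrics relate, here is a plain-Python sketch that builds the four confusion-matrix counts for a binary classifier and derives accuracy, precision, recall, and F1 from them. The labels and predictions are invented, with 1 as the positive class.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

# Hypothetical ground truth and model predictions.
y_true = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
precision = tp / (tp + fp)   # of the predicted positives, how many were right
recall = tp / (tp + fn)      # of the actual positives, how many were found
f1 = 2 * precision * recall / (precision + recall)

print(tp, fp, fn, tn, accuracy, round(precision, 3), round(recall, 3), round(f1, 3))
```

Accuracy looks respectable here mostly because negatives dominate. Precision and recall tell you how the model does on the class you actually care about.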

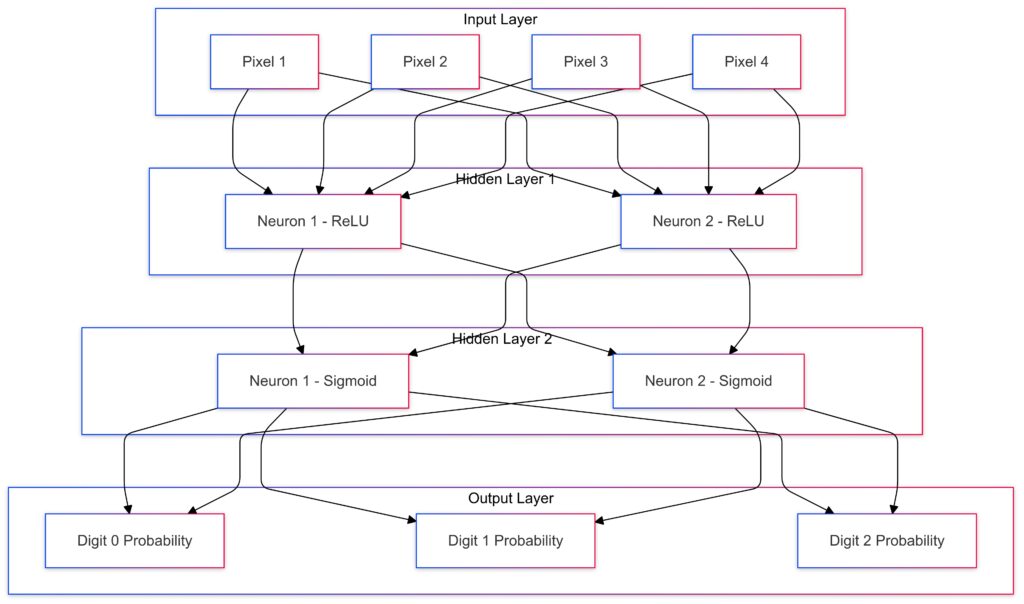

Stepping into Deep Learning

Input Layer:

Represents pixels from an image of handwritten digits. Each input node corresponds to a pixel value.

Hidden Layers:

Layer 1: Uses ReLU (Rectified Linear Unit) activation functions.

Layer 2: Uses Sigmoid activation functions.

Output Layer:

Outputs probabilities for each digit (e.g., 0, 1, 2).

Data Flow:

Data flows from the input layer, through the hidden layers, and finally to the output layer. Arrows depict the connections and computations across layers.

Fundamentals of Neural Networks

Deep Learning goes beyond traditional algorithms. Start with understanding layers, weights, and activation functions to grasp how these networks learn.
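Layers, weights, and activation functions are easier to picture with a tiny forward pass. The sketch below is a toy: the three-pixel input, the weights, and the layer sizes are all made-up assumptions, and nothing is being trained. It runs one ReLU hidden layer and a softmax output layer in plain Python.

```python
import math

def relu(x):
    return max(0.0, x)

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] are the incoming weights of unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

pixels = [0.0, 0.5, 1.0]                              # hypothetical 3-pixel input
hidden_w = [[0.2, -0.4, 0.6], [-0.5, 0.3, 0.1]]       # 2 hidden units
hidden_b = [0.1, -0.2]
out_w = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]        # 3 output classes
out_b = [0.0, 0.0, 0.0]

hidden = [relu(v) for v in dense(pixels, hidden_w, hidden_b)]
probs = softmax(dense(hidden, out_w, out_b))
print(hidden, probs)
```

Training is the process of adjusting `hidden_w`, `out_w`, and the biases so that the highest probability lands on the correct class. Frameworks automate that part.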

Frameworks to Explore

Libraries like TensorFlow, Keras, and PyTorch simplify building complex models. These tools are pivotal for tasks like image recognition or natural language processing.

Applications in the Real World

From self-driving cars to speech recognition, deep learning powers groundbreaking innovations. Mastering this skill opens doors to cutting-edge projects.

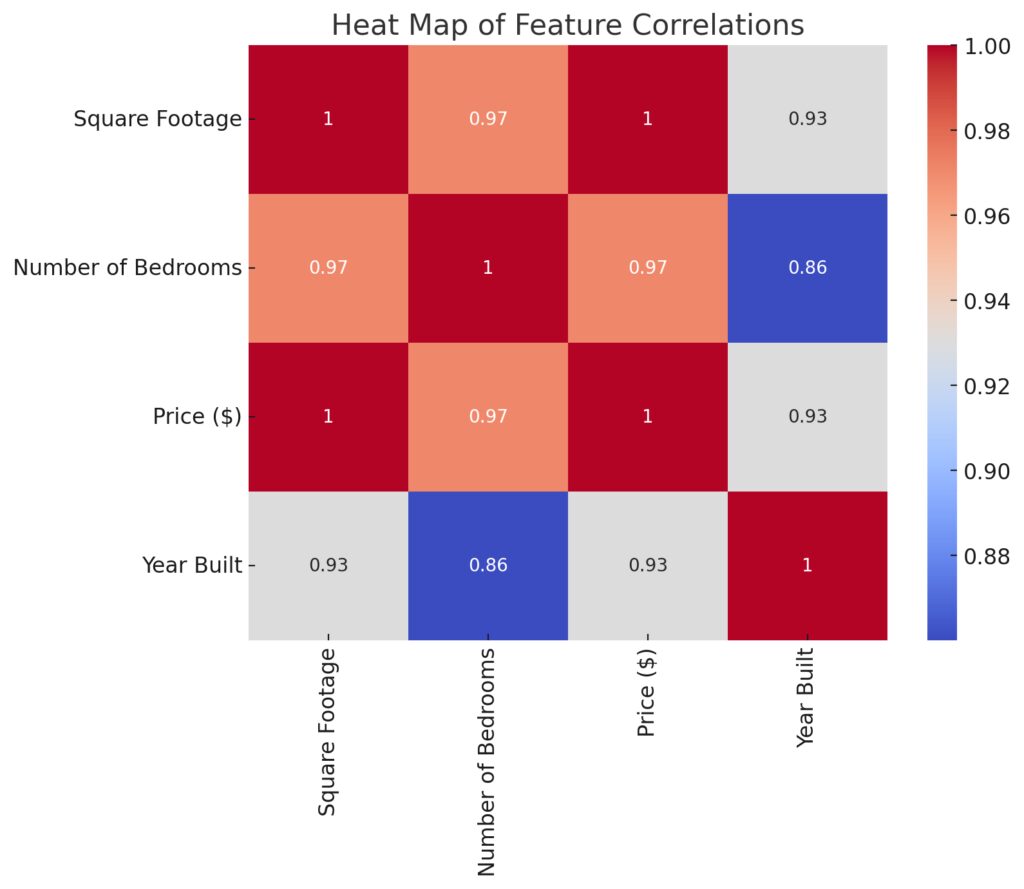

Getting Comfortable with Data Visualization

Correlation heatmap. Features included: Square Footage, Number of Bedrooms, Price, and Year Built. Red represents strong positive correlations, blue indicates strong negative correlations, and lighter shades signify weaker relationships.

Why Visualization Matters

A picture is worth a thousand words. Data visualization transforms raw numbers into compelling narratives, making insights easier to grasp.

Tools of the Trade

Learn to create visuals using Matplotlib, Seaborn, or Plotly. These tools let you build everything from basic line charts to interactive dashboards.

Best Practices

Focus on clarity. Use color effectively, avoid clutter, and always design with your audience in mind—whether it’s a stakeholder or a peer.

Harnessing the Potential of NLP

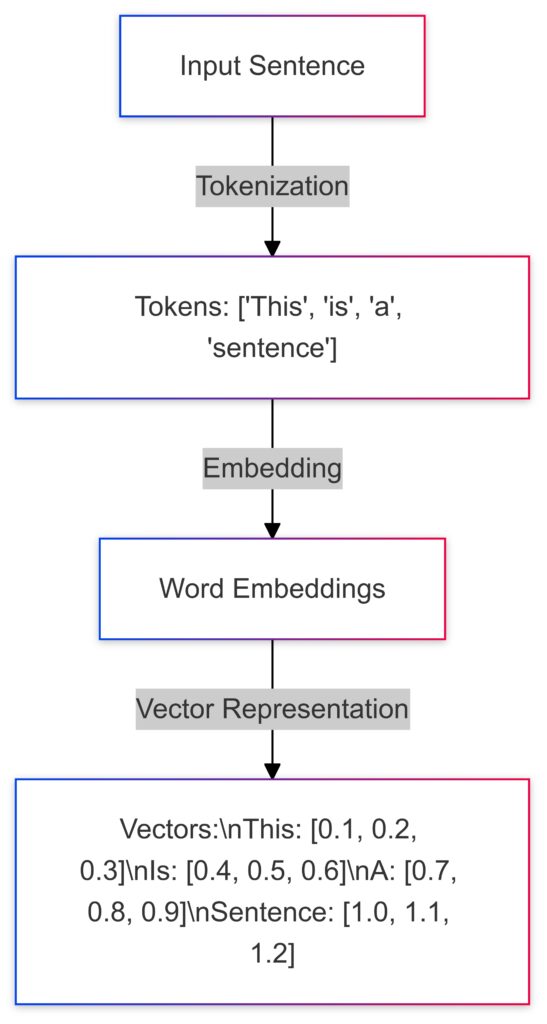

Steps Illustrated:

Input Sentence: The process starts with a text sentence. Example: "This is a sentence"

Tokenization: The sentence is broken into individual tokens: ['This', 'is', 'a', 'sentence'].

Word Embeddings: Each token is transformed into numerical embeddings.

Vector Representation: Example embeddings for tokens:

This: [0.1, 0.2, 0.3]

Is: [0.4, 0.5, 0.6]

A: [0.7, 0.8, 0.9]

Sentence: [1.0, 1.1, 1.2]

Understanding Text Data

Natural Language Processing enables machines to understand human text. Start with basics like tokenization, stemming, and lemmatization to process text effectively.
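Tokenization is the easiest of these steps to try yourself. The sketch below is a deliberately simple regex tokenizer. The `tokenize` helper and its pattern are assumptions for illustration. Stemming and lemmatization generally need a dedicated NLP library, so they are left out.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens, keeping apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())

tokens = tokenize("This is a sentence. Isn't it?")
print(tokens)  # ['this', 'is', 'a', 'sentence', "isn't", 'it']
```

Real tokenizers handle far more edge cases (hyphens, URLs, emoji, other languages), but the core idea is the same: turn a string into a list of units a model can count or embed.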

Building Blocks of NLP

Techniques like TF-IDF and Bag-of-Words models help in text classification or sentiment analysis. For deeper insights, explore word embeddings like Word2Vec.
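Both ideas fit in a few lines of plain Python. The sketch below builds Bag-of-Words counts and then a basic, unsmoothed TF-IDF score. The three review snippets are invented, and libraries often use smoothed variants of this formula, so expect their numbers to differ.

```python
import math
from collections import Counter

# Hypothetical mini-corpus of airline reviews.
docs = [
    "the flight was late",
    "the crew was friendly",
    "the late flight got a late refund",
]
tokenized = [doc.split() for doc in docs]

# Bag-of-Words: raw term counts per document, word order ignored.
bags = [Counter(tokens) for tokens in tokenized]

def idf(term):
    """Inverse document frequency; assumes the term occurs in at least one document."""
    doc_freq = sum(1 for tokens in tokenized if term in tokens)
    return math.log(len(tokenized) / doc_freq)

def tf_idf(term, doc_index):
    """Term frequency in one document, weighted by how rare the term is overall."""
    tf = bags[doc_index][term] / len(tokenized[doc_index])
    return tf * idf(term)

print(bags[2]["late"], tf_idf("the", 0), tf_idf("refund", 2), tf_idf("late", 2))
```

A word like "the" appears in every document, so its score drops to zero, while "refund" stands out because it is specific to one review.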

Advanced NLP Techniques

Move beyond basics with transformers, including pre-trained models like BERT and GPT. These models excel in tasks such as summarization and translation.

Working with Big Data & the Cloud

Geographic visualization of cloud data storage costs and capacities across leading providers like AWS, GCP, and Azure.

The Need for Scalability

Modern datasets can be massive. Learn to handle Big Data efficiently with tools like Apache Spark, which distributes processing across a cluster of machines.

Leveraging Cloud Platforms

Platforms like AWS, GCP, or Azure offer scalable solutions for data storage, analysis, and machine learning deployments.

Bridging the Gap

Integrate Python with cloud-based tools for seamless workflows. This combination underpins many real-world data science applications.

Strengthening SQL & Database Skills

Mastering SQL Basics

SQL remains a cornerstone for managing structured data. Start with foundational operations like SELECT, WHERE, and JOIN to extract meaningful subsets of data.
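You can practice these three operations without installing a database, because Python ships with SQLite. The sketch below is illustrative only: the `customers` and `orders` tables and their rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ana', 'EU'), (2, 'Ben', 'US'), (3, 'Chloe', 'EU');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 200.0), (4, 3, 15.0);
""")

# SELECT picks columns, JOIN links the two tables, WHERE filters the rows.
rows = conn.execute("""
    SELECT c.name, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE c.region = 'EU' AND o.amount >= 50
    ORDER BY o.amount DESC
""").fetchall()

print(rows)  # [('Ana', 120.0), ('Ana', 80.0)]
conn.close()
```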

Advanced Querying

Level up with window functions, subqueries, and aggregations. These techniques enable complex analysis directly within the database.
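As a small taste of in-database analysis, the sketch below combines an aggregation with a subquery to find regions whose average sale beats the overall average. The `sales` table is invented. Window functions are left out here because they need SQLite 3.25 or newer, which not every Python build bundles.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, amount REAL);
    INSERT INTO sales VALUES
        ('EU', 100), ('EU', 300), ('US', 50), ('US', 70), ('APAC', 400);
""")

# GROUP BY aggregates per region; the subquery supplies the overall average.
rows = conn.execute("""
    SELECT region, AVG(amount) AS avg_amount
    FROM sales
    GROUP BY region
    HAVING AVG(amount) > (SELECT AVG(amount) FROM sales)
    ORDER BY avg_amount DESC
""").fetchall()

print(rows)  # [('APAC', 400.0), ('EU', 200.0)]
conn.close()
```

Doing this in SQL means only the two summary rows leave the database, rather than every raw sale.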

Integrating SQL with Python

Combine SQL’s power with Python using libraries like SQLAlchemy or Pandas. This integration allows seamless querying and manipulation of datasets for further analysis.

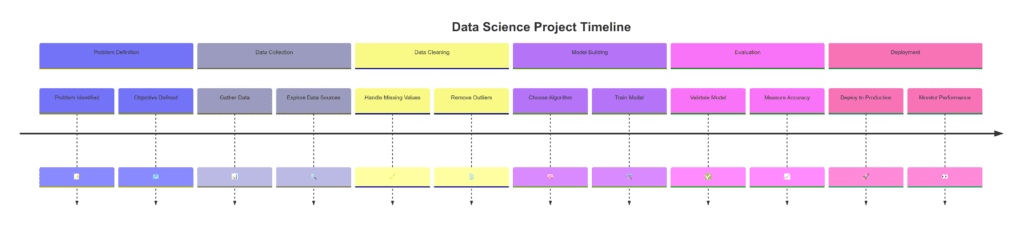

Building Real-World Projects

Problem Definition: 📝 Problem Identified, 🗺️ Objective Defined

Data Collection: 📊 Gather Data, 🔍 Explore Data Sources

Data Cleaning: 🧹 Handle Missing Values, 🗑️ Remove Outliers

Model Building: 🧠 Choose Algorithm, 🛠️ Train Model

Evaluation: ✅ Validate Model, 📈 Measure Accuracy

Deployment: 🚀 Deploy to Production, 👀 Monitor Performance

Practical Learning by Doing

Theories and tutorials only take you so far. Apply what you’ve learned by working on real-world projects, like predicting stock prices or classifying emails.

Tools for End-to-End Projects

Leverage web scraping tools like Beautiful Soup to gather data, and platforms like Kaggle to test and showcase your models.

Portfolio Building

Document each project. Create a portfolio to display your process, solutions, and results, giving potential employers tangible proof of your expertise.

Polishing Communication & Storytelling

Crafting Clear Narratives

Data insights are only as impactful as their delivery. Develop the ability to explain what the data reveals in simple terms.

Tools for Storytelling

Use presentation tools like PowerPoint or interactive dashboards like Tableau to communicate findings effectively to diverse audiences.

Tailoring to Your Audience

Adapt your storytelling. Focus on methods and technical detail for technical audiences and on actionable recommendations for non-technical stakeholders.

Emphasizing Ethics & Privacy

The Role of Ethics in Data Science

Data Science isn’t just about models and numbers—it affects people. Always consider the ethical implications of your work.

Handling Sensitive Data

Follow established privacy frameworks like GDPR and anonymize data when possible. Mishandling sensitive information can have severe repercussions.

Mitigating Bias

Bias in datasets can lead to harmful predictions. Regularly audit your data and models to ensure fairness and minimize discrimination.
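One simple audit you can run is to compare how often each group receives a positive outcome. The sketch below computes per-group selection rates and the gap between them (sometimes called the demographic parity difference). The groups, the decisions, and the helper name `selection_rates` are all assumptions for illustration, and this is only one of several fairness checks.

```python
def selection_rates(groups, approved):
    """Share of positive decisions (1 = approved) within each group."""
    totals, positives = {}, {}
    for group, decision in zip(groups, approved):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {group: positives[group] / totals[group] for group in totals}

# Hypothetical loan decisions for two applicant groups.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
approved = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, approved)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)  # {'A': 0.75, 'B': 0.25} 0.5
```

A large gap does not prove discrimination by itself, but it is a clear signal to look closer at the data and the model.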

Continuous Learning & Networking

Staying Updated

The Data Science landscape evolves rapidly. Follow industry blogs, take online courses, and participate in competitions to keep your skills sharp.

Joining Communities

Engage with like-minded individuals in forums like Reddit or Data Science Meetups. Networking opens doors to collaborations and job opportunities.

Contributing to Open Source

Collaborate on open-source projects to gain exposure and feedback. Contributions not only enhance your skills but also build your reputation in the community.

Conclusion

Data Science offers a world of opportunities, but the journey requires dedication. By following this roadmap—from Python and Machine Learning to ethics and storytelling—you’ll build a robust skill set that opens doors to a promising career. Now’s the time to ignite your future in Data Science!

FAQs

Is Python the only programming language for Data Science?

No, but it’s the most popular choice. Alternatives like R are excellent for statistical analysis, while SQL is crucial for database queries. Julia is gaining traction for its performance in numerical computations. However, Python’s versatility and vast ecosystem make it the top recommendation for beginners and professionals alike.

Do I need a strong math background for Data Science?

A solid understanding of mathematics, especially linear algebra, calculus, and probability, is helpful. For instance, concepts like gradient descent (a calculus application) are essential for Machine Learning. However, many tools and libraries abstract these complexities, allowing you to learn as you go.
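Gradient descent sounds intimidating, but the core loop is short. The sketch below minimizes the toy function f(w) = (w - 3)^2, whose gradient is 2(w - 3). The function name, learning rate, and step count are arbitrary choices for the example.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to move toward a minimum."""
    w = start
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w

# Toy objective f(w) = (w - 3)^2 has its minimum at w = 3.
w_min = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
print(round(w_min, 6))
```

Machine Learning libraries run the same idea over millions of weights at once, with the gradient computed automatically.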

How do I practice Data Cleaning effectively?

Start by working on real-world datasets available on platforms like Kaggle or UCI Machine Learning Repository. Practice handling missing values, removing duplicates, and standardizing formats. For example, clean a messy dataset by replacing missing customer ages with the median and converting dates to a standard format.

Which Machine Learning algorithm should I learn first?

Start with Linear Regression, as it introduces foundational concepts like features, coefficients, and predictions. Then progress to Decision Trees and k-Nearest Neighbors. Each algorithm provides unique insights into how models learn patterns in data.

What are the best tools for Deep Learning?

Popular frameworks include TensorFlow, Keras, and PyTorch. For example, TensorFlow offers flexibility for advanced research, while Keras provides a user-friendly interface for beginners. Choose the tool that aligns with your goals and project requirements.

How do I showcase my Data Science skills to employers?

Build a portfolio showcasing your projects. Include examples like a customer churn prediction model, a data visualization dashboard, or an NLP sentiment analysis tool. Host your work on GitHub and create a polished LinkedIn profile to highlight achievements.

Can Data Science be self-taught?

Absolutely. Many professionals start their journey through online courses, tutorials, and projects. Websites like Coursera, edX, and YouTube offer excellent resources. For instance, a self-paced Python course coupled with hands-on projects can provide a strong foundation.

How do I stay updated in this rapidly evolving field?

Subscribe to newsletters like Towards Data Science or KDnuggets, and follow thought leaders on platforms like LinkedIn. Participate in forums such as Reddit’s r/datascience or Kaggle discussions to stay in the loop. For example, attending a webinar on the latest in Deep Learning can provide fresh insights.

Why is ethical consideration crucial in Data Science?

Data Science impacts real people. For instance, an algorithm used for loan approvals might inadvertently discriminate against certain groups. By emphasizing ethics, such as avoiding biased training data or respecting user privacy, you ensure fair and responsible outcomes.

What are some real-world examples of Data Science applications?

Data Science is everywhere! Netflix uses it to recommend shows by analyzing your viewing history. Amazon predicts what you might buy next based on past purchases. In healthcare, predictive models help forecast disease outbreaks. In sports, teams use analytics to improve performance and game strategies.

What is the difference between supervised and unsupervised learning?

In supervised learning, the model is trained on labeled data (e.g., predicting house prices based on square footage and location). In unsupervised learning, the model identifies patterns in unlabeled data, like grouping customers with similar purchasing habits. For example, clustering algorithms can identify different customer segments for targeted marketing.
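To see clustering find groups without any labels, here is a minimal one-dimensional k-means sketch. The spending values, the starting centroids, and the helper `k_means_1d` are assumptions for illustration. Real data usually has many features and calls for a library implementation.

```python
def k_means_1d(values, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-averaging."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical monthly spend per customer; no labels are provided.
spend = [10, 12, 14, 95, 100, 105]
centroids, clusters = k_means_1d(spend, centroids=[0.0, 50.0])
print(centroids, clusters)  # [12.0, 100.0] [[10, 12, 14], [95, 100, 105]]
```

The algorithm separates low and high spenders on its own, which is exactly the kind of segment a marketing team could then target.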

How do I choose the right evaluation metric for my model?

It depends on your problem. For example:

- Use accuracy for balanced datasets.

- Apply precision and recall for imbalanced datasets like fraud detection.

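- Use the ROC-AUC score when you care about how well the model ranks positive cases above negative ones across all thresholds.

For instance, in spam detection, prioritizing recall ensures most spam is caught, at the cost of occasionally flagging a legitimate email. If losing legitimate mail is the bigger problem, favor precision instead.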

What role does SQL play in Data Science?

SQL is essential for working with structured data stored in databases. For instance, you might use SQL to filter customers who made purchases last month or calculate the average sales per region. It’s often the first step in preparing data before analysis or machine learning.

Can I use Excel for Data Science tasks?

Yes, but it has limitations. Excel works well for small datasets and initial explorations. However, for larger datasets or complex analyses, Python and tools like Pandas are more efficient. For instance, Excel handles a 10,000-row dataset comfortably, but a worksheet is capped at 1,048,576 rows, so datasets beyond about a million rows need more robust tools.

What is the importance of data visualization in Data Science?

Data visualization helps uncover trends, outliers, and patterns that raw data alone might not reveal. For example, a scatter plot could highlight a correlation between marketing spend and sales growth, while a heatmap might reveal areas with higher customer density.

How can I get started with Big Data tools?

Start by learning Apache Spark, which processes large datasets quickly by distributing computations across multiple machines. For example, Spark can analyze terabytes of web traffic logs to identify peak activity times. Cloud platforms like AWS and Google Cloud also offer user-friendly interfaces for Big Data processing.

What is the role of NLP in Data Science?

NLP (Natural Language Processing) analyzes and derives meaning from text data. For instance, an airline might analyze customer reviews to detect frequent complaints. Tools like NLTK and spaCy are popular for tasks like sentiment analysis, topic modeling, and text classification.

What resources can I use to practice Data Science?

- Kaggle: Participate in competitions and practice on datasets like Titanic survivors or house prices.

- Google Colab: A free platform for coding and experimenting with Python notebooks.

- UCI Machine Learning Repository: A treasure trove of datasets for learning and experimentation.

What are the job prospects in Data Science?

Data Science roles are booming across industries like tech, finance, healthcare, and marketing. Positions range from Data Analyst to Machine Learning Engineer. For instance, Glassdoor has repeatedly ranked Data Scientist among its top jobs, citing demand, salary, and job satisfaction.

How important is domain knowledge in Data Science?

Domain expertise adds context to your analyses. For example, understanding finance helps when analyzing stock trends, while healthcare knowledge is crucial for building predictive models for patient care. It bridges the gap between technical skills and meaningful business solutions.

What’s the difference between Data Science and Data Analytics?

Data Analytics focuses on analyzing historical data to uncover trends (e.g., monthly sales growth). Data Science goes further, building predictive models to forecast future outcomes (e.g., predicting next month’s sales). For example, an analyst might report a decline in website traffic, while a Data Scientist identifies factors causing it and suggests solutions.

How can I stand out in a Data Science interview?

- Showcase real projects, like a customer segmentation model or a recommendation engine.

- Explain your thought process clearly—how you approached data cleaning, model selection, and evaluation.

- Be prepared to solve coding problems or SQL queries on the spot.

For example, sharing a GitHub repository with a polished project demonstrates both technical skills and initiative.

What are the most common challenges in Data Science projects?

- Dirty Data: Real-world datasets often have missing values, duplicates, or inconsistencies. For instance, cleaning a customer database might involve filling missing ages or removing invalid entries.

- Overfitting Models: A model might perform well on training data but fail on new data. Cross-validation helps detect this issue, and regularization helps mitigate it.

- Interpreting Results: Communicating complex insights to non-technical stakeholders is often tricky. Effective data visualization and storytelling bridge this gap.

What is feature engineering, and why is it crucial?

Feature engineering involves transforming raw data into useful inputs for a model. For instance, instead of using a raw date column, you might extract the day of the week or month to improve a time-based model’s accuracy. Thoughtful feature engineering can significantly enhance model performance.
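The date example can be sketched with nothing but the standard library. The helper `date_features` and the chosen feature names are assumptions for illustration.

```python
from datetime import date

def date_features(d):
    """Derive simple model inputs from a raw date."""
    return {
        "day_of_week": d.weekday(),      # Monday = 0 ... Sunday = 6
        "month": d.month,
        "is_weekend": d.weekday() >= 5,
    }

features = date_features(date(2024, 3, 16))  # a Saturday
print(features)  # {'day_of_week': 5, 'month': 3, 'is_weekend': True}
```

A model cannot do much with a raw timestamp, but "is it a weekend?" is a pattern it can learn from directly.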

What tools should I use for time-series analysis?

Tools like statsmodels, Prophet, and Python’s pandas excel at time-series analysis. For example, a retailer might use these tools to predict future sales based on historical trends. Visualization libraries like Matplotlib can help plot seasonal patterns or anomalies.

What is the difference between Data Engineering and Data Science?

Data Engineers focus on building infrastructure and pipelines for collecting, storing, and processing data. For example, they might design a system that processes terabytes of streaming data from IoT devices. Data Scientists use this data to build predictive models and derive insights. Both roles are interdependent but distinct.

How do I handle imbalanced datasets?

Imbalanced datasets can skew results. For example, in fraud detection, there may be far fewer fraud cases than legitimate transactions. Techniques like resampling, SMOTE (Synthetic Minority Oversampling Technique), or weighting model classes help address this. Algorithms like XGBoost also have built-in options for handling imbalance.
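Class weighting is the easiest of these techniques to illustrate. The sketch below computes "balanced" weights as n_samples / (n_classes * class_count), a common heuristic. The label counts and the helper name are made up for the example.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency so rare classes count more."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical transactions: 95 legitimate, 5 fraudulent.
labels = ["legit"] * 95 + ["fraud"] * 5
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, misclassifying one fraud case costs the model about as much as misclassifying nineteen legitimate ones, which pushes it to take the rare class seriously.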

Can I transition to Data Science from a non-technical background?

Yes, many Data Scientists come from fields like economics, business, or biology. Start with the basics:

- Learn Python or R.

- Understand statistics and probability.

- Practice on beginner-friendly projects, like predicting house prices.

For example, someone with a marketing background could analyze campaign data to identify key drivers of ROI.

How long does it take to become proficient in Data Science?

It depends on your starting point and dedication. For full-time learners, gaining foundational skills can take 6-12 months, including Python, SQL, statistics, and basic Machine Learning. Building expertise through projects and advanced techniques like Deep Learning might require an additional year or more.

What are the career paths in Data Science?

- Data Analyst: Focuses on interpreting data and creating reports.

- Data Scientist: Develops predictive models and deeper insights.

- Machine Learning Engineer: Specializes in deploying and optimizing models at scale.

- Business Intelligence Analyst: Translates data into actionable business strategies.

For example, a Data Scientist might predict customer churn, while a Machine Learning Engineer ensures the model runs efficiently in production.

How do I choose the right dataset for practice?

Start with clean, well-documented datasets for beginners, like those on Kaggle or Google Dataset Search. As you gain confidence, move to messier, real-world datasets with missing values or anomalies. For example, try analyzing Titanic survivor data as a beginner, then move to a dataset on housing prices with mixed types of data.

What’s the difference between batch and real-time data processing?

- Batch Processing: Data is collected, processed, and analyzed in chunks (e.g., daily sales reports). Tools like Hadoop or Apache Spark are common here.

- Real-Time Processing: Data is processed as it’s generated (e.g., fraud detection during transactions). Tools like Apache Kafka or Flink are ideal for such tasks.

What industries benefit most from Data Science?

Almost every industry benefits from Data Science.

- Healthcare: Predicting disease outbreaks or analyzing patient data.

- Finance: Fraud detection, credit risk modeling, and portfolio optimization.

- Retail: Customer segmentation, demand forecasting, and supply chain optimization.

For instance, airlines use Data Science to optimize flight schedules and pricing dynamically.

How can I find a mentor in Data Science?

Join communities like LinkedIn Groups, Kaggle, or local meetups to connect with professionals. Attend webinars, hackathons, or conferences like PyData or ODSC. Reach out respectfully, expressing your goals and interest in learning from their experience. For instance, asking for feedback on a project can initiate a meaningful conversation.

What is the role of APIs in Data Science?

APIs (Application Programming Interfaces) allow you to access external data and functionalities. For example:

- Use the Twitter API to analyze tweet sentiment.

- Leverage the Google Maps API to visualize geographic data.

APIs make it easy to integrate external tools and datasets into your workflows.

What’s the future of Data Science?

The field is evolving toward automation (AutoML), integration with IoT, and ethical AI. Skills in cloud computing, NLP, and advanced neural networks are becoming increasingly valuable. For example, the rise of generative AI models like ChatGPT showcases the growing capabilities and opportunities in this space.

Resources

Online Learning Platforms

- Coursera: Offers courses from top universities, such as Andrew Ng’s famous Machine Learning course from Stanford.

- edX: Provides programs like Professional Certificate in Data Science from Harvard University.

- Udemy: Features budget-friendly courses on Python, SQL, and Machine Learning, often with lifetime access.

- DataCamp: Specializes in interactive learning for Python, R, and data visualization skills.

Practice Platforms

- Kaggle: Participate in competitions, explore datasets, and learn with tutorials on popular Data Science techniques.

- DrivenData: Tackle real-world challenges with social impact, like predicting water pump functionality.

- HackerRank: Focuses on algorithmic and technical interview prep, including SQL and Python challenges.

- LeetCode: Ideal for brushing up on programming and algorithm skills used in Data Science interviews.

Books

- Python for Data Analysis by Wes McKinney: A deep dive into Pandas and NumPy, perfect for hands-on learners.

- An Introduction to Statistical Learning (ISLR) by Gareth James, Daniela Witten, Trevor Hastie, and Robert Tibshirani: A beginner-friendly guide to statistical models and Machine Learning.

- Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: A comprehensive resource for mastering neural networks and advanced techniques.

- Data Science for Business by Foster Provost and Tom Fawcett: Explains data-driven decision-making for professionals.

Tools and Libraries Documentation

- Python: Official Python Documentation provides thorough explanations and tutorials.

- Pandas: Pandas Documentation for mastering data manipulation.

- Scikit-Learn: Scikit-Learn Guide for Machine Learning algorithms.

- TensorFlow: TensorFlow Tutorials to get started with Deep Learning.

Communities and Forums

- Reddit: Subreddits like r/datascience and r/MachineLearning are active hubs for discussions.

- Stack Overflow: Perfect for troubleshooting code and learning from experienced developers.

- LinkedIn: Join groups like Data Science Central to connect with professionals and stay updated on industry trends.

- KDnuggets: A blog and community offering tutorials, opinions, and news about Data Science.

Free Resources

- Google Colab: A free platform to run Python notebooks in the cloud without setup hassles.

- UCI Machine Learning Repository: A repository of free datasets for practice.

- Fast.ai: Offers a free course on practical Deep Learning and AI applications.

- YouTube: Channels like StatQuest, Sentdex, and Corey Schafer are excellent for step-by-step guides.

Certifications

- Google Data Analytics Certificate: An affordable and beginner-friendly program for data skills.

- Microsoft Certified: Azure Data Scientist Associate: Focuses on using cloud tools for Machine Learning.

- AWS Certified Machine Learning – Specialty: Highlights skills in deploying scalable ML models in the cloud.

- IBM Data Science Professional Certificate: Covers Python, SQL, and real-world project applications.

Advanced Resources

- ArXiv: Explore the latest research papers on Machine Learning and AI.

- Distill.pub: Beautiful, interactive explanations of complex Machine Learning concepts.

- Papers with Code: Combines cutting-edge research with code implementations for hands-on learning.