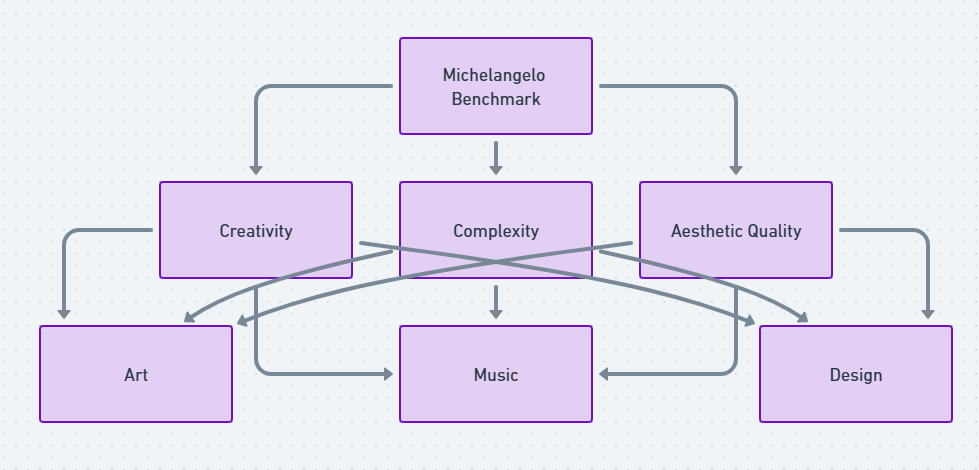

What Is the Michelangelo Benchmark?

Developed by DeepMind, the Michelangelo Benchmark is designed to assess how well artificial intelligence (AI) can replicate human creativity. It focuses on creative fields such as art, music, and design.

Named after the Renaissance genius Michelangelo, the benchmark is meant to gauge whether an AI can not only generate creative content but also show depth, complexity, and a near-human understanding of artistic principles.

DeepMind, already known for its groundbreaking developments in AI research, has chosen this name as a tribute to Michelangelo’s mastery, symbolizing the pursuit of excellence in creative tasks. Just as Michelangelo set a benchmark for artistic skill centuries ago, DeepMind aims to set the standard for AI’s capabilities in creative domains.

Why Does Creativity Matter in AI?

Traditionally, AI has been seen as a tool for performing logical or repetitive tasks—things like pattern recognition or data analysis. But creativity? That’s always been seen as a uniquely human trait, something too elusive or intuitive for a machine to grasp.

However, the rise of creative AI models has challenged that assumption. The Michelangelo Benchmark plays a key role here, focusing on how well AI can mimic human artistry, compose music, or even develop new ideas across various disciplines. Creativity matters because it’s the frontier of AI evolution, where machines move beyond mechanical tasks into the realm of imagination and originality.

This is why DeepMind’s Michelangelo Benchmark represents a significant leap. It measures AI’s performance not just by accuracy or speed, but by its ability to generate something new and beautiful—a true benchmark of intelligence.

The Role of Neural Networks in Creative AI

To meet the Michelangelo Benchmark, AI needs more than raw computational power; it needs the sophisticated learning models that neural networks provide. Neural networks, loosely inspired by the way the human brain learns from experience, are central to DeepMind’s advancements in creative AI.

In recent years, neural networks have been refined to the point where they can generate original paintings, compose music, and even write literature. These models learn from vast datasets of human-created works, extracting patterns, styles, and techniques that they then use to create new content. The Michelangelo Benchmark evaluates how well these AI-generated works compare to the creative output of humans, testing whether machines can evoke emotion or create meaning the way we do.

Just as Michelangelo’s masterpieces are defined by their depth and precision, AI-generated work needs to show a similar balance of technical skill and artistic flair.

How the Michelangelo Benchmark Is Evaluated

So, what exactly does the Michelangelo Benchmark measure? The evaluation process is comprehensive and involves several key components:

- Creativity: How original is the AI’s output? Does it merely replicate existing works, or does it create something new and inspiring?

- Complexity: Does the AI demonstrate an understanding of intricate artistic or musical structures? Can it handle the subtleties of form, light, and emotion in visual arts, or the layers of harmony and rhythm in music?

- Aesthetic Quality: This is where the human touch comes in. Evaluators judge the AI’s creations not just on technical accuracy but on their beauty and emotional impact—just as we judge human art.

- Consistency: Can the AI produce high-quality, creative work repeatedly, across different contexts and challenges?

Each of these areas ensures that the AI is tested against the full range of human creativity, pushing the boundaries of what we thought possible for machine learning.

Benchmark Evaluation Comparison Table

| Metric | Definition | Scoring Method |
|---|---|---|
| Creativity | Measures originality, uniqueness, and innovation in the output. | Rated on a scale of 1 to 10 based on uniqueness and innovation. |
| Complexity | Evaluates the level of sophistication and detail in the task. | Rated on a scale of 1 to 10 based on complexity and depth of detail. |
| Aesthetic Quality | Assesses the visual appeal and artistic quality of the result. | Rated on a scale of 1 to 10 based on visual and artistic standards. |
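The table gives each metric a 1 to 10 rating but does not say how ratings from several evaluators, or several outputs, are combined. The sketch below shows one plausible way to do it, assuming unweighted averaging. Everything in it (`score_output`, `summarize`, the metric keys, and using the spread of overall scores as a stand-in for Consistency) is hypothetical and is not DeepMind’s published method.

```python
from statistics import mean, pstdev

# Hypothetical metric keys mirroring the three rows of the table above.
METRICS = ("creativity", "complexity", "aesthetic_quality")


def validate(rating: int) -> int:
    """Reject ratings outside the 1-10 scale used in the table."""
    if not 1 <= rating <= 10:
        raise ValueError(f"rating {rating} is outside the 1-10 scale")
    return rating


def score_output(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average the evaluators' ratings for each metric of a single output."""
    return {m: mean(validate(r) for r in ratings[m]) for m in METRICS}


def summarize(outputs: list[dict[str, list[int]]]) -> dict[str, float]:
    """Aggregate several outputs: per-metric means, an overall mean, and a spread."""
    per_output = [score_output(o) for o in outputs]
    # Assumption: an output's overall score is the unweighted mean of its metrics.
    overall = [mean(s.values()) for s in per_output]
    summary = {m: mean(s[m] for s in per_output) for m in METRICS}
    summary["overall"] = mean(overall)
    # Assumed stand-in for "Consistency": a lower spread means steadier quality.
    summary["spread"] = pstdev(overall)
    return summary


if __name__ == "__main__":
    # Two hypothetical outputs, each rated by two evaluators per metric.
    outputs = [
        {"creativity": [8, 6], "complexity": [6, 6], "aesthetic_quality": [9, 7]},
        {"creativity": [5, 7], "complexity": [7, 5], "aesthetic_quality": [6, 6]},
    ]
    print(summarize(outputs))
```

Unweighted averaging is simply the easiest choice to read; a real evaluation might weight metrics differently or normalize each evaluator’s ratings, and the article does not say which approach, if any, the benchmark uses.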

Applications Beyond Art: The Michelangelo Benchmark in Other Fields

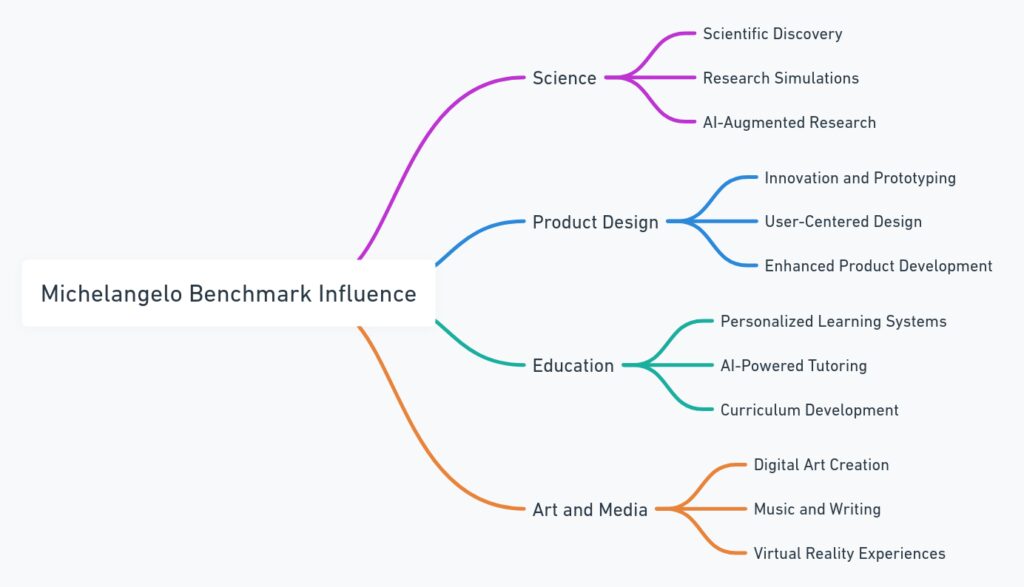

While the Michelangelo Benchmark emphasizes artistic creativity, its implications extend far beyond the arts. In fact, DeepMind envisions this benchmark influencing everything from scientific research to product design and even education. Here’s how:

- Science: AI that meets the Michelangelo Benchmark can aid in discovering new solutions, whether in medicine or material science. Imagine an AI capable of creatively formulating hypotheses or designing new drugs based on its understanding of biology and chemistry.

- Design: In architecture or product design, creative AI could generate innovative structures or objects that are both functional and beautiful, much like how Michelangelo’s sculptures were technically brilliant and aesthetically powerful.

- Education: By integrating the Michelangelo Benchmark into educational tools, students could engage with AI that helps them explore their own creativity—whether in music, art, or storytelling.

The benchmark is pushing AI’s potential into areas that require both rational and creative thinking.

The Future of AI Creativity: What Comes Next?

The Michelangelo Benchmark is not just about how well AI can imitate human creativity today—it’s about where AI is heading. DeepMind and other AI research institutions are working towards models that don’t just replicate what’s been done, but innovate in ways humans never imagined.

Future iterations of this benchmark might explore how AI can develop entirely new artistic genres or contribute to collaborative creativity, where machines and humans work together to produce something neither could achieve alone.

In essence, DeepMind’s Michelangelo Benchmark could mark the beginning of a new era in artificial intelligence, one where machines become not just tools but partners in the creative process. It’s not just about copying Michelangelo anymore; it’s about creating the next Michelangelo.

FAQs

What does the Michelangelo Benchmark measure?

The benchmark evaluates AI in several key areas:

- Creativity: Can the AI generate original, innovative content?

- Complexity: How well does the AI handle intricate structures and forms in art or music?

- Aesthetic Quality: Does the AI produce visually or emotionally impactful works?

- Consistency: Can the AI consistently create high-quality outputs across different creative tasks?

How is AI creativity different from human creativity?

AI creativity, as evaluated by the Michelangelo Benchmark, stems from neural networks that learn patterns and techniques from large datasets of human works. While AI can generate new and compelling content, it often lacks the intuitive emotional depth and personal experience that define human creativity.

Can AI surpass humans in creative tasks?

While AI can produce impressive and innovative results, the Michelangelo Benchmark suggests that AI still mimics human creativity rather than surpassing it. AI can be extremely efficient at generating art or music, but human nuance and emotional connection remain unmatched, at least for now.

How does DeepMind train AI to meet the Michelangelo Benchmark?

DeepMind uses neural networks and machine learning models trained on vast datasets, including artwork, music, and designs. These models learn the underlying patterns, styles, and techniques and apply them to generate new creative outputs.

In what fields can the Michelangelo Benchmark be applied?

While initially focused on art, music, and design, the Michelangelo Benchmark has applications in:

- Science: Assisting in drug discovery or new scientific hypotheses.

- Product Design: Innovating functional yet aesthetically pleasing products.

- Education: Helping students explore creativity through AI-driven tools.

Is it publicly available?

Currently, the Michelangelo Benchmark is part of DeepMind’s internal AI research framework. Public access is limited, but you can follow DeepMind’s publications for updates.

How does it differ from other AI benchmarks?

Most AI benchmarks evaluate models based on accuracy, speed, or task completion. The Michelangelo Benchmark specifically focuses on AI’s ability to perform creative tasks, making it unique in its assessment of aesthetic and emotional qualities, not just technical performance.

Can AI and humans collaborate creatively?

Yes! One exciting possibility raised by the Michelangelo Benchmark is collaborative creativity between humans and AI. AI can act as a tool that enhances or inspires human creativity, offering new ideas and possibilities that artists and designers can refine and personalize.

Resources

DeepMind’s Official Website

DeepMind regularly publishes research papers, blog posts, and updates on their breakthroughs in artificial intelligence. This is a great place to dive deep into their various projects, including the Michelangelo Benchmark.

AI and Creativity Research Papers

Academic papers on AI’s role in creative industries often provide valuable insights. You can search through platforms like arXiv and Google Scholar for the latest publications on AI’s capabilities in art, music, and design.