As the world moves deeper into the age of the Internet of Things (IoT) and ubiquitous computing, the ability to deploy AI models on microcontrollers is transforming how we interact with everyday devices. From your smartwatch analyzing your sleep patterns to industrial sensors predicting equipment failures, AI is finding its way into devices that are smaller and more resource-constrained than ever before. But how do you effectively deploy AI on such tiny platforms? This guide will walk you through the essentials—from understanding the importance and challenges to practical steps and strategies.

Why Deploy AI on Microcontrollers?

The Growing Need for Edge AI

In an increasingly connected world, edge AI—the processing of data on the device where it is generated, rather than sending it to the cloud—offers significant benefits. By deploying AI models on microcontrollers, we can create intelligent systems that make decisions in real-time, with minimal latency and enhanced privacy. This is crucial for applications in areas like wearable technology, home automation, healthcare, and industrial monitoring.

For example, consider a wearable fitness tracker that analyzes heart rate and motion data to provide immediate feedback on your workout. By processing this data locally on a microcontroller, the device can respond in real-time without the delays associated with cloud processing. Furthermore, because data never leaves the device, user privacy is better protected.

Cost-Effectiveness and Scalability

Deploying AI on microcontrollers also presents a cost-effective solution. Microcontrollers are inexpensive, readily available, and consume far less power than larger processors. This makes them ideal for mass deployment in large-scale IoT networks, where cost and power efficiency are critical. Whether it’s in agriculture, smart cities, or environmental monitoring, the scalability of microcontroller-based AI solutions offers enormous potential.

Real-World Applications

Smart home devices are a prime example of microcontroller-based AI in action. A smart thermostat that learns your heating preferences and adjusts automatically based on time of day or occupancy would be inefficient if it had to rely on cloud-based AI. By embedding the AI directly into the thermostat’s microcontroller, it can respond faster and operate even when offline.

In industrial settings, AI-powered microcontrollers are used for predictive maintenance. Sensors embedded in machinery can analyze vibrations and other parameters in real-time, predicting failures before they occur, thus minimizing downtime and maintenance costs.

Challenges of Deploying AI on Microcontrollers

Limited Computational Resources

One of the most significant challenges in deploying AI on microcontrollers is dealing with limited computational resources. Microcontrollers typically have very modest CPU power, memory, and storage compared to the processors used in smartphones or computers. A microcontroller might have anywhere from a few kilobytes to a few hundred kilobytes of RAM and a clock speed measured in megahertz, compared to the gigabytes of RAM and gigahertz speeds in more powerful devices.

This limitation requires AI models to be highly optimized, both in terms of size and computational efficiency. Standard AI models, particularly deep neural networks, can be massive, with millions of parameters requiring substantial computational resources. To run these on a microcontroller, the models must be drastically reduced in size.
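To make the size constraint concrete, here is a minimal sketch that estimates how much storage a model's weights need and checks that against a flash budget. The 250,000-parameter model and the 512 KiB budget are made-up numbers for illustration, not figures for any particular device, and the estimate covers weights only (not activations or program code).

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_weight + 7) // 8


def fits(num_params: int, bits_per_weight: int, flash_budget_bytes: int) -> bool:
    """True if the weights fit in the given flash budget."""
    return weight_bytes(num_params, bits_per_weight) <= flash_budget_bytes


# Hypothetical numbers, chosen only for illustration.
FLASH_BUDGET = 512 * 1024  # bytes of flash set aside for the model
PARAMS = 250_000           # parameters in the model

float32_size = weight_bytes(PARAMS, 32)  # 32-bit floating-point weights
int8_size = weight_bytes(PARAMS, 8)      # 8-bit integer weights

print(float32_size, fits(PARAMS, 32, FLASH_BUDGET))
print(int8_size, fits(PARAMS, 8, FLASH_BUDGET))
```

With these assumed numbers the 32-bit version exceeds the budget while the 8-bit version fits, which is the kind of gap the optimization techniques later in this guide are meant to close.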

Power Consumption

Another critical challenge is power consumption. Many microcontroller-based devices, such as wearables or remote sensors, rely on battery power. Running complex AI algorithms can quickly drain these batteries if not carefully managed. This necessitates the use of energy-efficient algorithms and low-power microcontroller units (MCUs).

For instance, a fitness tracker with AI capabilities needs to balance processing power with battery life. The AI must be efficient enough to run on the limited power available while still providing meaningful insights. Power optimization techniques are crucial to ensuring that the device can function for days or even weeks without needing a recharge.
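A back-of-the-envelope estimate can show how the share of time spent running inference affects battery life. The sketch below assumes a simple two-state model (active or asleep) and uses hypothetical current and capacity figures. It ignores regulator losses, battery self-discharge, and radio activity, so treat the result as a rough upper bound rather than a prediction.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Average current draw when the device is active for `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Idealized runtime: capacity divided by average current."""
    return capacity_mah / avg_ma


# Hypothetical wearable: all values are assumptions for illustration.
avg = average_current_ma(active_ma=10.0, sleep_ma=0.01, duty_cycle=0.05)
life = battery_life_hours(capacity_mah=200.0, avg_ma=avg)
print(f"average current: {avg:.4f} mA, runtime: {life / 24:.1f} days")
```

Because the active current dominates the average, shortening each inference or running it less often is usually the most direct way to extend runtime in this model.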

Real-Time Processing Requirements

Many AI applications on microcontrollers require real-time processing. Whether it’s a gesture recognition system in a smart TV remote or an anomaly detection system in a factory, the AI model needs to process data and produce a decision within a fixed, usually short, deadline. The challenge is to design AI models and algorithms that can operate within the stringent timing constraints imposed by these real-time applications.
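One way to reason about a timing constraint before touching hardware is to estimate inference time from the number of multiply-accumulate operations (MACs) in the model. The sketch below is a first-order estimate only: the MAC count, clock speed, throughput, and deadline are all hypothetical, and it ignores memory stalls and framework overhead, so measurements on the target device should have the final word.

```python
def inference_time_ms(macs: int, clock_hz: float, macs_per_cycle: float) -> float:
    """First-order latency estimate: cycles needed divided by clock rate."""
    cycles = macs / macs_per_cycle
    return cycles / clock_hz * 1000.0


def meets_deadline(macs: int, clock_hz: float, macs_per_cycle: float,
                   deadline_ms: float) -> bool:
    """True if the estimated latency is within the deadline."""
    return inference_time_ms(macs, clock_hz, macs_per_cycle) <= deadline_ms


# Hypothetical model and device, for illustration only.
MACS = 2_000_000       # multiply-accumulates per inference
CLOCK_HZ = 80_000_000  # 80 MHz core clock
DEADLINE_MS = 50.0     # required response time

estimate = inference_time_ms(MACS, CLOCK_HZ, macs_per_cycle=1.0)
print(f"estimated latency: {estimate:.1f} ms")
print(meets_deadline(MACS, CLOCK_HZ, 1.0, DEADLINE_MS))
print(meets_deadline(MACS, CLOCK_HZ, 0.25, DEADLINE_MS))
```

The second check uses a lower assumed throughput to show how quickly the same model can miss its deadline when the kernels are less efficient.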

Compatibility and Integration

Finally, there’s the challenge of compatibility and integration. Not all microcontrollers are suitable for running AI models, and not all AI models are designed to run on microcontrollers. Furthermore, integrating the AI model with the rest of the application—whether it’s controlling hardware, communicating over networks, or interfacing with sensors—can be complex and requires careful design and testing.

Strategies for Deploying AI on Microcontrollers

Model Optimization Techniques

To overcome the resource limitations of microcontrollers, model optimization is essential. Several techniques can be employed to reduce the size and complexity of AI models, making them more suitable for deployment on microcontrollers.

Quantization

Quantization is a technique that reduces the precision of the model’s parameters from floating-point to lower-bit representations, such as 8-bit integers. This usually costs a small amount of accuracy, but it drastically reduces the model’s size and computational requirements. For instance, converting a model’s weights from 32-bit floating point to 8-bit integers reduces the memory they require by a factor of four, which can allow the model to fit within the constrained memory of a microcontroller.

Quantization can be applied at different stages of the model development process. Post-training quantization is the most common approach, where the model is first trained using full precision and then quantized. Some frameworks also support quantization-aware training, where the model is trained with quantization in mind, often resulting in better accuracy after quantization.
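The arithmetic behind 8-bit quantization can be sketched in a few lines. The example below uses a common affine scheme (a scale and a zero point per tensor) and plain Python lists. It illustrates the idea only and is not the implementation used by any particular framework; the function names and sample weights are made up.

```python
def quant_params(values, qmin=-128, qmax=127):
    """Pick a scale and zero point that map the value range onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # include 0.0 so that zero is exactly representable
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping to the representable range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map integers back to approximate floats."""
    return [(q - zero_point) * scale for q in qvalues]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # sample values for illustration
scale, zero_point = quant_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each quantized weight fits in one byte instead of four, and the round-trip error stays within about one quantization step, which is where the small accuracy loss comes from.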

Pruning

Pruning involves removing less important connections or neurons in a neural network, effectively “thinning” the model without significantly impacting its performance. This technique reduces both the size of the model and its computational load, making it more feasible to run on microcontrollers.

Pruning can be done in various ways, such as removing weights below a certain threshold or eliminating entire layers that contribute little to the model’s output. After pruning, the model often undergoes a fine-tuning process to regain some of the accuracy lost during pruning.
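Threshold-style pruning can be sketched as follows: given a target sparsity, zero out that fraction of weights with the smallest magnitude. This is a minimal illustration on a flat list of made-up weights. A real workflow would apply it per layer and follow it with fine-tuning.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # sample values for illustration
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

Note that zeroed weights only save memory if the model is then stored in a sparse or compressed format, or if whole channels are removed so the tensors actually shrink.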

Knowledge Distillation

Knowledge distillation is another optimization technique where a smaller “student” model is trained to mimic the behavior of a larger “teacher” model. The student model learns to approximate the teacher model’s output, effectively compressing the model while retaining much of the original performance. This smaller model can then be deployed on a microcontroller.
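A common way to train the student is to combine the usual loss on the true label with a term that pulls the student's softened output distribution toward the teacher's. The sketch below computes such a loss for a single example in plain Python. The temperature, the weighting factor `alpha`, and the sample logits are assumptions for illustration, and in practice the loss would be computed inside a training framework.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of the hard-label loss and the teacher-matching loss."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft term's gradients on a comparable scale.
    soft_term = (temperature ** 2) * kl_divergence(soft_teacher, soft_student)
    hard_term = -math.log(softmax(student_logits)[label])
    return alpha * hard_term + (1.0 - alpha) * soft_term


teacher = [2.0, 1.0, 0.1]  # sample logits for illustration
student = [0.1, 1.0, 2.0]
print(distillation_loss(student, teacher, label=0))
```

When the student's logits match the teacher's, the teacher-matching term is zero and only the hard-label term remains.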


Choosing the Right AI Framework

Selecting the appropriate AI framework is crucial for deploying models on microcontrollers. Several frameworks have been developed specifically for this purpose, each offering tools and resources to streamline the deployment process.

TensorFlow Lite for Microcontrollers

TensorFlow Lite for Microcontrollers is an optimized version of TensorFlow Lite, designed specifically for running machine learning models on microcontrollers and other resource-constrained devices. It supports a wide range of microcontrollers and includes tools for quantizing and converting models.

TensorFlow Lite for Microcontrollers provides pre-built kernels for common neural network operations, such as convolution and matrix multiplication. These kernels are written to run efficiently even on low-power devices. The framework can also use vendor-optimized kernel implementations, such as CMSIS-NN on Arm Cortex-M processors, allowing developers to take advantage of specialized hardware available on some microcontrollers.

MicroTensor

MicroTensor is another lightweight framework that focuses on deploying AI models on microcontrollers. It is designed to be highly modular and configurable, making it a good choice for custom applications where specific optimization is required.

MicroTensor supports various optimization techniques, such as quantization and pruning, and is compatible with a wide range of microcontroller platforms. It also provides tools for testing and validating the AI model on the target hardware, ensuring that the model performs as expected in real-world scenarios.

Arm CMSIS-NN

CMSIS-NN is a neural network library optimized for Arm Cortex-M processors. It provides a collection of highly optimized functions for running neural networks on Arm microcontrollers, leveraging the architecture’s capabilities to improve performance and reduce power consumption.

CMSIS-NN is particularly well-suited for developers using Arm Cortex-M microcontrollers, as it integrates seamlessly with the broader CMSIS ecosystem. It supports a wide range of neural network layers and operations, making it a flexible choice for deploying AI models on microcontrollers.

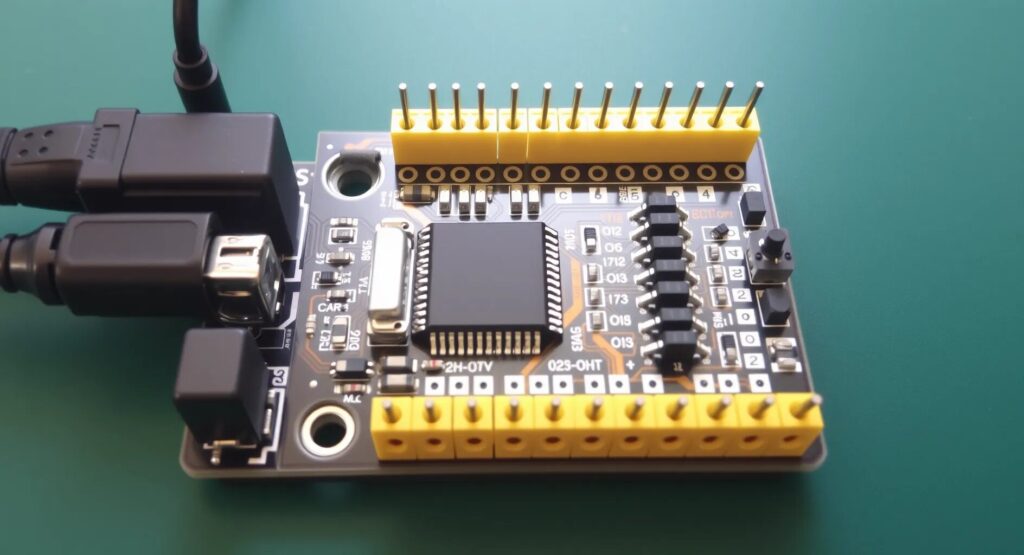

Hardware Selection and Considerations

Selecting the right microcontroller is as important as optimizing the model. Not all microcontrollers are created equal, and the choice of hardware can significantly impact the performance of your AI application.

Processing Power and Memory

When selecting a microcontroller for AI deployment, consider the processing power and memory available. Microcontrollers with higher clock speeds and more RAM can handle more complex AI models, but they also consume more power and may be more expensive.

For instance, the Arm Cortex-M4 and Cortex-M7 series offer a good balance of processing power and efficiency, making them popular choices for AI applications. These microcontrollers often include DSP (Digital Signal Processing) instructions that can accelerate certain AI operations.

Power Efficiency

Power efficiency is a critical consideration, especially for battery-operated devices. Look for microcontrollers that support low-power modes and dynamic voltage scaling. These features allow the microcontroller to reduce power consumption when the full processing power is not required.

Some microcontrollers also include specialized hardware accelerators for AI operations, such as the Arm Ethos-U series. These accelerators can perform AI computations more efficiently than the general-purpose CPU, reducing both power consumption and execution time.

Peripheral Support

Consider the peripherals required by your AI application. For instance, if your AI model processes sensor data, ensure that the microcontroller has sufficient ADCs (analog-to-digital converters), I2C, SPI, or other interfaces to connect to the sensors. Additionally, some microcontrollers offer hardware security modules that can be useful for protecting the AI model and data from tampering.

Efficient Code Implementation

Optimizing the AI model is only part of the challenge. Efficiently implementing the model on the microcontroller is equally important. This involves writing code that is optimized for the specific architecture of the microcontroller, minimizing memory usage, and avoiding unnecessary operations.

Memory Management

Memory management is a critical aspect of deploying AI on microcontrollers. Given the limited RAM, careful allocation and management of memory are essential. This includes using memory pools, avoiding dynamic memory allocation where possible, and minimizing the stack and heap usage.

For instance, if your AI model uses large arrays or buffers, consider placing them in static memory to avoid fragmentation. Additionally, you can use memory-mapped I/O to directly access peripheral data without copying it to RAM, reducing memory overhead.
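The idea of a memory pool that avoids dynamic allocation can be sketched as a fixed-size arena: one buffer is reserved up front and handed out in aligned slices. The example below uses Python for readability. On a real device the same pattern would typically be a statically allocated array in C or C++. The class name, sizes, and alignment are illustrative assumptions.

```python
class Arena:
    """Fixed-size buffer handed out in aligned slices; slices are never freed one by one."""

    def __init__(self, size_bytes: int, alignment: int = 4):
        self._buf = bytearray(size_bytes)  # reserved once, up front
        self._view = memoryview(self._buf)
        self._offset = 0
        self._alignment = alignment

    def alloc(self, nbytes: int) -> memoryview:
        """Return a writable slice of `nbytes`, or raise if the arena is full."""
        # Round the current offset up to the next multiple of the alignment.
        start = -(-self._offset // self._alignment) * self._alignment
        end = start + nbytes
        if end > len(self._buf):
            raise MemoryError("arena exhausted")
        self._offset = end
        return self._view[start:end]

    def reset(self) -> None:
        """Release everything at once, for example between inferences."""
        self._offset = 0

    @property
    def used(self) -> int:
        return self._offset


arena = Arena(64)
input_buf = arena.alloc(10)  # occupies bytes 0..9
scratch = arena.alloc(6)     # starts at byte 12 because of 4-byte alignment
input_buf[0] = 255
print(arena.used)
```

Because the total size is fixed and allocations only move forward, the worst-case memory use is known in advance and fragmentation cannot occur.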

Leveraging Hardware Acceleration

If your microcontroller includes hardware accelerators, such as DSP instructions or AI-specific cores, make sure to leverage them. These accelerators can perform certain operations, such as matrix multiplication or convolution, much faster than the general-purpose CPU, freeing up the CPU for other tasks.

Parallel Processing

Where possible, use parallel processing techniques to speed up the AI computations. For example, if your microcontroller has multiple cores or supports SIMD (Single Instruction, Multiple Data) operations, you can parallelize parts of the computation, such as the multiply-accumulate operations inside a layer or independent branches of a network. Consecutive layers usually depend on each other’s outputs, so they generally cannot run at the same time on a single input.

Practical Deployment Example: Building a Smart Doorbell

To bring all these concepts together, let’s walk through a practical example: deploying an AI model on a microcontroller to build a smart doorbell that can recognize visitors using facial recognition.

Step 1: Selecting the AI Model

For this application, we need a lightweight convolutional neural network (CNN) capable of recognizing faces. A model like MobileNet, which is designed for mobile and embedded applications, can be a good starting point. The model should be trained on a dataset of faces, such as the LFW (Labeled Faces in the Wild) dataset, and fine-tuned to recognize the specific faces you want to identify.

Step 2: Optimizing the Model

Once the model is trained, we need to optimize it for deployment on a microcontroller. First, apply quantization to reduce the model size. Next, prune any unnecessary layers or neurons that do not significantly contribute to the model’s performance. Finally, consider using knowledge distillation to create a smaller student model if the original model is still too large.

Step 3: Choosing the Microcontroller and Framework

For this application, an Arm Cortex-M7 microcontroller with sufficient processing power and memory would be suitable. We can use the TensorFlow Lite converter to turn the optimized model into a compact model file, and TensorFlow Lite for Microcontrollers to run that file on the microcontroller.

Step 4: Implementing the AI Model

The optimized model is then implemented on the microcontroller. This involves loading the model into the microcontroller’s flash memory and writing code to process the camera input in real-time. The camera data is passed through the AI model, which outputs a prediction of the visitor’s identity.
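The last step of that pipeline, turning the model's output into a decision, might look like the sketch below. It assumes the model emits one probability per enrolled person, which the description above does not specify. The names, scores, and confidence threshold are hypothetical.

```python
def identify(scores, names, threshold=0.8):
    """Return the top-scoring name, or "unknown" if the model is not confident enough."""
    if len(scores) != len(names):
        raise ValueError("scores and names must have the same length")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return names[best] if scores[best] >= threshold else "unknown"


ENROLLED = ["alice", "bob", "carol"]  # hypothetical enrolled visitors

confident = identify([0.05, 0.90, 0.05], ENROLLED)
uncertain = identify([0.40, 0.35, 0.25], ENROLLED)
print(confident, uncertain)
```

Rejecting low-confidence predictions matters for a doorbell, since most visitors will not be in the enrolled set and should not be matched to the nearest known face.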

Step 5: Testing and Iteration

After implementation, extensive testing is required to ensure that the smart doorbell works reliably in various lighting conditions and with different visitors. Monitoring the power consumption during operation is also important, especially if the doorbell is battery-powered. Based on the test results, further optimizations may be needed to balance accuracy, speed, and power efficiency.

Future Trends in AI on Microcontrollers

The deployment of AI on microcontrollers is still in its early stages, but several trends suggest that this field will continue to grow rapidly in the coming years.

Advanced Model Compression Techniques

As AI models become more sophisticated, so too will the techniques for compressing and optimizing them for microcontrollers. Research into new compression algorithms and hardware-software co-design is likely to yield even more efficient models, capable of running on the smallest and most power-constrained devices.

Integration with 5G and Edge Computing

The rollout of 5G and the growth of edge computing will further enhance the capabilities of microcontroller-based AI. With faster, low-latency connections, microcontrollers will be able to offload some tasks to nearby edge servers, enabling more complex AI models to be used without compromising real-time performance.

AI-Specific Microcontrollers

We can also expect to see the development of AI-specific microcontrollers, equipped with built-in AI accelerators and optimized for running machine learning models. These microcontrollers will offer greater performance and efficiency, making AI deployment on microcontrollers more accessible and widespread.

Conclusion

Deploying AI models on microcontrollers is a challenging yet highly rewarding endeavor. By overcoming the limitations of computational resources, power consumption, and real-time processing, we can unlock a new era of intelligent devices that are smaller, more efficient, and more responsive than ever before.

Whether you’re developing a smart doorbell, a wearable health monitor, or an industrial sensor, the ability to deploy AI on microcontrollers opens up a world of possibilities. With careful planning, optimization, and implementation, you can create devices that not only enhance our daily lives but also drive the future of technology forward.

The journey from model development to deployment on a microcontroller is complex, but with the right tools and techniques, it’s entirely achievable. As AI technology continues to advance, the possibilities for microcontroller-based AI are virtually limitless, and the future looks incredibly promising.

Resources for Deploying AI Models on Microcontrollers

To help you on your journey of deploying AI models on microcontrollers, I’ve compiled a list of resources that cover various aspects of the process—from model optimization to selecting the right hardware and frameworks. These resources include documentation, tutorials, research papers, and tools that will provide you with the knowledge and support you need.

1. TensorFlow Lite for Microcontrollers

- Website: TensorFlow Lite for Microcontrollers

- Description: This is the official documentation and resource hub for TensorFlow Lite for Microcontrollers. It includes setup guides, model conversion tools, and examples that show you how to deploy machine learning models on microcontrollers.

- Key Features: Step-by-step guides, pre-trained models, and community support.

2. Edge Impulse

- Website: Edge Impulse

- Description: Edge Impulse provides a platform for developing and deploying machine learning models on edge devices, including microcontrollers. They offer a cloud-based environment where you can collect data, build models, and deploy them directly to your devices.

- Key Features: No-code environment, support for various microcontroller platforms, and real-time testing.

3. Arm CMSIS-NN

- Website: Arm CMSIS-NN

- Description: CMSIS-NN is a collection of optimized neural network kernels for Arm Cortex-M processors. The official documentation provides details on how to integrate these kernels into your AI applications.

- Key Features: Optimized performance on Arm microcontrollers, example implementations.

4. MicroTensor

- Website: MicroTensor

- Description: MicroTensor is a lightweight machine learning framework tailored for microcontrollers. This GitHub repository contains code and examples for running TensorFlow models on various microcontroller platforms.

- Key Features: Flexibility, lightweight design, open-source.

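5. TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers

- Book: TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers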

- Authors: Pete Warden and Daniel Situnayake

- Description: This book provides an in-depth introduction to TinyML, focusing on deploying machine learning models on resource-constrained devices. It covers the entire process, from data collection and model training to deployment on microcontrollers.

- Key Features: Practical examples, case studies, and in-depth explanations.

6. Edge AI and Vision Alliance

- Website: Edge AI and Vision Alliance

- Description: This website is a resource hub for professionals working in the field of edge AI and computer vision. It includes tutorials, webinars, and articles on deploying AI at the edge, including on microcontrollers.

- Key Features: Industry news, technical resources, and community networking.

7. Arduino AI Frameworks

- Website: Arduino Nano 33 BLE Sense with TensorFlow Lite

- Description: Arduino’s official guide to using TensorFlow Lite on the Nano 33 BLE Sense microcontroller. The site provides detailed instructions on how to train and deploy models using the Arduino environment.

- Key Features: Step-by-step tutorials, compatibility with Arduino hardware.

8. Google Colaboratory (Colab)

- Website: Google Colab

- Description: Google Colab provides a cloud-based environment where you can train machine learning models for free. While not directly a microcontroller resource, Colab is invaluable for the model development and training phase before optimization and deployment.

- Key Features: Free cloud GPU/TPU, seamless TensorFlow integration.

9. Quantization and Model Optimization Techniques

- Website: Model Optimization Toolkit

- Description: TensorFlow’s Model Optimization Toolkit provides tools and techniques for quantization, pruning, and other optimizations that are essential for deploying AI on microcontrollers.

- Key Features: Tutorials, API documentation, and best practices for optimizing models.

10. Research Papers and Articles

- Paper: “TinyML: Enabling Mobile and Embedded Machine Learning at the Internet of Things”

- Link: TinyML Research Paper

- Description: This research paper provides an overview of the TinyML field, discussing challenges, current solutions, and future directions. It’s a great resource for understanding the broader context of deploying AI on microcontrollers.

- Article: “Deploying Deep Learning Models on Microcontrollers”

- Link: Deep Learning on Microcontrollers

- Description: This article by Microsoft Research discusses the deployment of deep learning models on microcontrollers, focusing on the challenges and techniques involved.

11. Development Boards and Kits

- STM32 AI

- Website: STM32 AI

- Description: STM32 AI is a toolchain for optimizing and deploying AI models on STM32 microcontrollers. It provides resources for converting pre-trained models and running them efficiently on STM32 hardware.

- Key Features: Integration with STM32CubeMX, model optimization tools.

- Nordic Thingy:91

- Website: Nordic Thingy:91

- Description: The Nordic Thingy:91 is a prototyping platform for cellular IoT applications. It supports machine learning at the edge, making it a suitable choice for deploying AI models on microcontrollers.

- Key Features: LTE-M and NB-IoT connectivity, built-in sensors.

These resources should provide you with a strong foundation to start or advance your journey in deploying AI models on microcontrollers. Whether you’re a beginner looking to understand the basics or an experienced developer seeking advanced optimization techniques, these tools and materials cover a broad spectrum of needs.