The field of machine learning (ML) has witnessed extraordinary advancements in recent years, particularly in how models are trained to behave the way people want. Among the most compelling recent developments is Direct Preference Optimization (DPO), introduced in 2023 as a simpler way to align large language models with human preferences. Rather than fitting a model only to fixed targets, DPO trains it on judgments of which outputs people prefer, producing outputs that are not only accurate but also more helpful and relevant to users. This deep dive explores its mechanisms, applications, challenges, and future implications, and why this approach could be pivotal in the next era of AI development.

Understanding the Basics of Direct Preference Optimization

At its core, Direct Preference Optimization (DPO) is an approach that incorporates human preferences directly into the model optimization process. Conventional training optimizes predefined objectives, such as minimizing prediction error against labeled targets; DPO instead learns from explicit human preferences, guiding the model toward outcomes that better satisfy user needs.

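DPO is best understood as a simpler alternative to reinforcement learning from human feedback (RLHF). In RLHF, a separate reward model is first trained on human preference data, and the main model is then optimized against that reward with a reinforcement learning algorithm such as PPO. DPO skips both stages: it trains the model directly on examples of preferred and dispreferred outputs using a classification-style loss, so no explicit reward model or RL loop is required. The feedback usually takes the form of pairwise comparisons; rankings and ratings can be converted into pairs. Over time, this yields a model optimized not only for technical performance but also for agreement with human judgments.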

Theoretical Foundations of DPO

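The theoretical underpinnings of Direct Preference Optimization lie in reinforcement learning (RL), statistical models of pairwise preference, and interactive machine learning. In traditional RL, an agent learns to make decisions by receiving rewards or penalties from its environment. These rewards are typically predefined and tied to specific outcomes, such as maximizing profit or minimizing error. RLHF, the approach DPO was designed to simplify, instead learns a reward from human comparisons, usually under the Bradley-Terry model, in which the probability that output y_i is preferred over y_j is σ(r(y_i) - r(y_j)) for a reward function r. DPO’s key insight is that, for the standard RLHF objective (maximize reward while staying close to a reference model), the reward can be re-expressed in terms of the model’s own probabilities relative to that reference. The preference model can therefore be fitted directly to the model’s parameters: the reward becomes implicit, still informed by human input, and no separate reward-modeling stage is needed.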

Preference data also links DPO to multi-objective optimization, where multiple criteria must be balanced simultaneously. A single human judgment often weighs several qualities at once (for example accuracy, helpfulness, and tone), and different users may hold conflicting preferences. DPO does not ask for these trade-offs as explicit weights; they are encoded in the comparisons themselves, which is convenient but means the balance the model learns is only as good as the data.

Mathematical Formulation of DPO

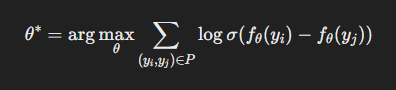

Formally, Direct Preference Optimization can be framed as maximum-likelihood estimation over pairwise preferences. Let’s denote the model’s parameters as θ, the space of possible outputs as Y, and the set of observed preference pairs as P. The goal is to find the parameters θ* under which the observed preferences P are most likely, so that the model ranks outputs the way people did.

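The optimization problem can be expressed as:

$$\theta^{*} = \arg\max_{\theta} \sum_{(y_i,\, y_j) \in P} \log \sigma\big(f_\theta(y_i) - f_\theta(y_j)\big)$$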

Where:

- σ(·) is the logistic sigmoid function.

- f_θ(·) is the model’s scoring function, parameterized by θ.

- (y_i, y_j) are pairs of outputs where y_i is preferred over y_j.

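This formulation captures the essence of DPO: the model is trained so that preferred outputs score higher than dispreferred ones, and θ is adjusted iteratively, typically by gradient-based optimization, to increase the likelihood that its rankings agree with the observed preferences. In the original DPO formulation for language models, f_θ is not a separate scoring head but the implicit reward f_θ(y) = β · log(π_θ(y | x) / π_ref(y | x)), where x is the prompt, π_θ is the model being trained, π_ref is a frozen reference model (usually the starting model), and β controls how far π_θ may move away from π_ref.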
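A minimal Python sketch of the per-pair loss, assuming the original DPO score f_θ(y) = β · log(π_θ(y | x) / π_ref(y | x)) and assuming the summed log-probabilities log π(y | x) of each complete output have already been computed by the trained and reference models. Minimizing the negative log-sigmoid is the same as maximizing the pairwise objective above. The function names and the default β = 0.1 are illustrative choices, not part of the article.

```python
import math
from typing import Iterable, Tuple


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigma(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_pair_loss(policy_chosen_logp: float,
                  policy_rejected_logp: float,
                  ref_chosen_logp: float,
                  ref_rejected_logp: float,
                  beta: float = 0.1) -> float:
    """Negative log-likelihood of one preference pair under the DPO objective.

    Each argument is log pi(y | x), the summed token log-probabilities of a
    complete output y given prompt x, under the trained or the reference model.
    """
    # Implicit scores f_theta(y) = beta * (log pi_theta(y|x) - log pi_ref(y|x)).
    chosen_score = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_score = beta * (policy_rejected_logp - ref_rejected_logp)
    # -log sigma(f(y_i) - f(y_j)) for preferred y_i and dispreferred y_j.
    return -log_sigmoid(chosen_score - rejected_score)


def dpo_loss(pairs: Iterable[Tuple[float, float, float, float]],
             beta: float = 0.1) -> float:
    """Mean loss over (policy_chosen, policy_rejected, ref_chosen, ref_rejected) tuples."""
    losses = [dpo_pair_loss(*p, beta=beta) for p in pairs]
    return sum(losses) / len(losses)
```

If the trained model still matches the reference, both scores are zero and the loss equals log 2 (about 0.693); raising the preferred output’s probability relative to the reference lowers it, and raising the dispreferred one’s raises it.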

Mechanisms of Preference Collection

A critical aspect of Direct Preference Optimization is the method by which preferences are collected. Effective DPO relies on capturing authentic, unbiased, and representative preferences from users. There are several approaches to collecting this feedback, each with its own strengths and challenges:

- Pairwise Comparisons: Users are presented with two options and asked to choose the one they prefer. This method is simple and intuitive, making it easy for users to provide feedback. However, it may not capture the full complexity of user preferences.

- Ranking: Users rank a set of options from most to least preferred. This method provides more detailed information than pairwise comparisons but can be more cognitively demanding for users.

- Rating Scales: Users rate options on a numerical scale. This approach can capture the intensity of preferences but may introduce bias due to differences in how individuals use scales.

- Implicit Feedback: Preferences are inferred from user behavior, such as clicks, time spent on a page, or purchase history. While less intrusive, this method can be noisy and may not always accurately reflect true preferences.
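Because the DPO objective consumes pairs, rankings and ratings are usually converted into pairwise comparisons before training. The helper names below are hypothetical; the sketch assumes a ranking is listed best-first and treats rating differences at or below `min_gap` as ties, one simple way to soften the scale-usage bias mentioned for rating scales.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[str, str]  # (preferred, dispreferred)


def pairs_from_ranking(ranking: Sequence[str]) -> List[Pair]:
    """A best-first ranking implies every earlier item beats every later one."""
    # combinations() preserves input order, so each tuple is (better, worse).
    return list(combinations(ranking, 2))


def pairs_from_ratings(ratings: Dict[str, float], min_gap: float = 0.0) -> List[Pair]:
    """Turn numerical ratings into pairs, dropping ties and gaps <= min_gap."""
    pairs: List[Pair] = []
    for a, b in combinations(ratings, 2):
        diff = ratings[a] - ratings[b]
        if diff > min_gap:
            pairs.append((a, b))
        elif -diff > min_gap:
            pairs.append((b, a))
    return pairs
```

For example, a ranking A > B > C yields (A, B), (A, C), (B, C), while ratings {A: 5, B: 3, C: 5} yield (A, B) and (C, B), with the A/C tie dropped. A ranking of n items produces n(n - 1)/2 pairs, so long rankings can dominate a dataset unless they are subsampled.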

How Does Direct Preference Optimization Differ from Other Optimization Techniques?

To fully appreciate the uniqueness of Direct Preference Optimization, it’s essential to compare it with other popular optimization techniques used in machine learning. Below are some key distinctions:

1. Objective Functions vs. Preference-Based Optimization

In traditional training setups, whether the optimizer is gradient descent, stochastic gradient descent (SGD), or a genetic algorithm, the objective function is predefined and static. These techniques optimize models toward clear mathematical goals such as minimizing a loss function, maximizing likelihood, or improving specific metrics like accuracy, precision, or recall.

DPO diverges from this approach in what it optimizes rather than how. It is typically trained with the same gradient-based optimizers, but its objective is defined by preference comparisons instead of fixed targets, in effect maximizing a utility informed by user preferences. This allows the model to prioritize outcomes that are most valuable to users, even when those outcomes do not score best on conventional metrics. In essence, DPO makes the training objective user-centered.

2. Supervised Learning vs. Preference-Driven Learning

In supervised learning, models are trained on labeled data where the correct output is known in advance. The model’s performance is measured by its ability to produce the correct labels during training and testing. The optimization goal here is to reduce the difference between the predicted outputs and the actual labels.

DPO, however, shifts the focus from labeled data to preference data. Rather than training a model to predict the correct label, DPO models learn to predict outputs that users prefer. This shift is crucial in scenarios where the “correct” answer is subjective and varies between individuals. DPO therefore enables models to learn directly from user preferences, making the learning process more flexible and context-aware.

3. Static vs. Dynamic Optimization Goals

Traditional optimization techniques often assume that the goal remains consistent throughout the training process. For example, an optimization algorithm might aim to minimize a fixed loss function across the entire dataset. This static approach works well when the objectives are clear and unchanging.

In contrast, preference-based optimization can track objectives that change. A single DPO run minimizes a fixed loss over a fixed set of comparisons, but because user preferences shift over time, new comparisons can be collected and the model retrained, or updated in repeated rounds, as those preferences evolve. This makes it possible to build models that adapt alongside user needs rather than being locked into a fixed goal.

4. Handling Trade-offs

In traditional optimization techniques, trade-offs between different objectives are often handled through multi-objective optimization or by combining multiple objectives into a single weighted sum. These approaches work well when the trade-offs are clearly defined and understood.

DPO, however, deals with subjective trade-offs between different user preferences. For instance, in a recommendation system, a user might prefer a movie that is less popular but aligns more closely with their taste. DPO learns such trade-offs from the choices users actually make, prioritizing the aspects of the outcome that matter most to the user, even if it means compromising on other metrics that traditional models would optimize.

Training DPO Models: A Step-by-Step Guide

Training a model using Direct Preference Optimization involves several key steps:

Step 1: Define the Problem and Environment

The first step in training a DPO model is to clearly define the problem you want to solve and the setting in which the model will operate: what inputs it receives, what outputs it can produce, and what “better” means to the people judging those outputs. For a language model, the input is a prompt and the output is a generated response. The state spaces and transition dynamics of a classic reinforcement learning environment are not needed, because DPO learns from recorded comparisons rather than from interaction with an environment.

Step 2: Choose a Starting Model

Next, you’ll need a model to start from. DPO is usually applied to a model that has already been pre-trained and then supervised fine-tuned on examples of the target task. A frozen copy of this starting model serves as the reference model: the DPO loss measures how the trained model’s probabilities change relative to it, which keeps the model from drifting too far from sensible behavior.

Step 3: Collect Preference Pairs

With a starting model in place, the next step is to gather preference data. A common recipe is to generate two or more responses to each prompt, often with the starting model itself, and ask annotators which one is better, yielding (prompt, preferred response, dispreferred response) triples. Any of the collection mechanisms described earlier can be used, as long as the result can be expressed as pairs.

Step 4: Set Up the Objective

In RL-based approaches, a crucial part of training is defining or learning a reward function. DPO removes this as a separate stage: the reward is implicit, equal to β times the log-ratio between the trained model’s probability of an output and the reference model’s probability, and the loss encourages the preferred output in each pair to receive a higher implicit reward than the dispreferred one. The main design choice left is β, which controls how strongly the model is held close to the reference: larger values keep it closer, smaller values allow larger departures.

Step 5: Training the Model

Once all components are in place, you can begin training the model. Each update takes a batch of preference pairs, computes the log-probabilities of the preferred and dispreferred responses under both the trained model and the frozen reference, and adjusts the parameters by gradient descent on the DPO loss. Unlike reinforcement learning, this involves no simulations, episodes, or sampling from the model during training; it is closer to supervised fine-tuning on a classification-style objective.
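In the DPO setting, the feedback used in each update comes from a fixed set of preference pairs rather than from interacting with an environment. As a minimal sketch, assume a toy “policy” that is just a softmax over a handful of fixed candidate outputs for a single prompt; the function names, learning rate, β, and epoch count are hypothetical choices for illustration, and the gradient is derived by hand instead of with automatic differentiation.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Log-probabilities of a categorical distribution given its logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def train_dpo_toy(ref_logits: Sequence[float],
                  pairs: Sequence[Tuple[int, int]],
                  beta: float = 0.5,
                  lr: float = 0.5,
                  epochs: int = 200) -> List[float]:
    """Full-batch gradient descent on the DPO loss for a toy categorical policy.

    Each pair is (index_of_preferred, index_of_dispreferred). The reference
    policy is the frozen starting distribution given by ref_logits.
    """
    ref_logp = log_softmax(ref_logits)
    theta = list(ref_logits)  # the trained policy starts as a copy of the reference
    for _ in range(epochs):
        logp = log_softmax(theta)
        grad = [0.0] * len(theta)
        for win, lose in pairs:
            # Margin between implicit rewards beta * (log pi - log pi_ref).
            h = beta * ((logp[win] - ref_logp[win]) - (logp[lose] - ref_logp[lose]))
            # Gradient of -log(sigmoid(h)); the softmax normaliser cancels in h,
            # so only the two logits in the pair receive a gradient.
            g = -beta * (1.0 - sigmoid(h))
            grad[win] += g
            grad[lose] -= g
        theta = [t - lr * gi / len(pairs) for t, gi in zip(theta, grad)]
    return theta
```

For three candidates with preferences 0 > 1, 0 > 2, and 1 > 2, the trained probabilities end up ordered the same way. A real implementation would replace the softmax table with a language model and compute gradients with a deep learning framework, but the loss being minimized has the same form.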

Step 6: Monitor and Evaluate Performance

As the model trains, it’s essential to continually monitor and evaluate its performance. Useful signals include the training and validation loss, the fraction of held-out preference pairs the model ranks correctly, and how far the model has drifted from the reference model. Monitoring these metrics will help you identify issues in the training process, such as overfitting or slow convergence. Additionally, it’s important to validate the model on unseen data to ensure that it generalizes well to new situations.
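For a DPO model, a natural “accuracy” is the fraction of held-out pairs in which the preferred output receives the higher implicit reward, reported alongside the average reward margin; common DPO training libraries report similar statistics. A minimal sketch, assuming the four log-probabilities per pair have already been computed; the tuple layout and function name are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

# (policy_chosen_logp, policy_rejected_logp, ref_chosen_logp, ref_rejected_logp)
LogProbs = Tuple[float, float, float, float]


def preference_metrics(held_out: Sequence[LogProbs], beta: float = 0.1) -> Dict[str, float]:
    """Preference accuracy and mean implicit-reward margin on held-out pairs."""
    margins = []
    for pc, pr, rc, rr in held_out:
        chosen_reward = beta * (pc - rc)    # implicit reward of the preferred output
        rejected_reward = beta * (pr - rr)  # implicit reward of the dispreferred output
        margins.append(chosen_reward - rejected_reward)
    return {
        # Fraction of pairs the model ranks the same way the annotators did.
        "accuracy": sum(m > 0 for m in margins) / len(margins),
        "mean_margin": mean(margins),
    }
```

Accuracy that keeps rising on training pairs while stalling or falling on held-out pairs is one sign of the overfitting mentioned above.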

Step 7: Fine-Tuning and Optimization

After the initial training, you may need to fine-tune the model further to enhance its performance. This might involve adjusting hyperparameters such as the learning rate and β, improving or rebalancing the preference data, or experimenting with a different starting model. The goal is to optimize the model so that it performs consistently well across a variety of scenarios. DPO itself builds on transfer learning in this sense: the model is almost always pre-trained on a related task before being fine-tuned on preferences.

Step 8: Deployment

With a well-trained and optimized model, the final step is deployment. Depending on the application, this could involve integrating the model into a larger system, deploying it to edge devices, or running it on cloud platforms. During deployment, it’s crucial to ensure that the model operates efficiently in real-time and continues to perform as expected.

Common Challenges in Training DPO Models

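Training DPO models is not without its challenges. Computational cost is one hurdle: for large language models, each update requires passes through both the trained model and the reference model, which takes significant processing power and memory. Ensuring that the model generalizes from the preference data to real-world inputs is another common challenge, especially when the responses in the dataset differ from those the model produces at deployment. Finally, because standard DPO learns from a fixed dataset rather than by exploring, it only learns about the kinds of responses that appear in its data; iterative variants that collect fresh comparisons on the model’s own outputs are one way to address this.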

Best Practices for Success

To succeed in training DPO models, follow these best practices:

- Start simple: Begin with a basic model and gradually increase complexity.

- Iterative testing: Continuously test and refine the model during the training process.

- Leverage pre-trained models: Utilize existing models as a starting point to reduce training time.

- Collaborate and share: Engage with the community to share insights and learn from others’ experiences.

Real-World Applications of DPO

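Direct Preference Optimization is most widely used to fine-tune large language models, such as chat assistants, so that they follow instructions and match human judgments of helpfulness and style. The same idea of learning from comparisons could apply more broadly; here are some areas where preference-based optimization is a natural fit: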

1. Recommendation Systems

DPO is well-suited in principle to recommendation systems, where the goal is to provide personalized suggestions that align with user preferences. Traditional recommendation algorithms often rely on collaborative filtering or content-based methods, which can sometimes miss the mark in terms of personalization. DPO could enhance these systems by learning directly from user feedback, potentially leading to more relevant and satisfying recommendations.

For example, in streaming services like Netflix or Spotify, a DPO-style approach could help fine-tune recommendations based on explicit user ratings or the relative preference of different content items. The aim is recommendations more closely aligned with the user’s tastes and current mood, which could in turn raise user satisfaction and engagement.
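As a hypothetical illustration, the pairwise logistic objective (the sum of log σ(f_θ(y_i) - f_θ(y_j)) over preferred pairs) can be fitted with the simplest possible scorer: one learned score per title, which is a Bradley-Terry model. This sketch deliberately omits the reference model used in language-model DPO, and the titles, learning rate, and L2 penalty are invented for the example.

```python
import math
from typing import Dict, Sequence, Tuple


def fit_item_scores(items: Sequence[str],
                    prefs: Sequence[Tuple[str, str]],
                    lr: float = 0.1,
                    steps: int = 500,
                    l2: float = 0.01) -> Dict[str, float]:
    """Fit one score per item by gradient ascent on sum log sigma(s_i - s_j).

    Each pref is (preferred_item, other_item). A small L2 penalty keeps the
    scores finite when every comparison agrees.
    """
    s = {item: 0.0 for item in items}
    for _ in range(steps):
        grad = {item: -l2 * s[item] for item in items}
        for i, j in prefs:
            p = 1.0 / (1.0 + math.exp(-(s[i] - s[j])))  # modeled P(i preferred over j)
            grad[i] += 1.0 - p  # derivative of log sigma(s_i - s_j) w.r.t. s_i
            grad[j] -= 1.0 - p
        for item in items:
            s[item] += lr * grad[item]
    return s
```

Sorting titles by their fitted scores gives a recommendation order consistent with the user’s comparisons. The L2 penalty is a design choice: with perfectly consistent preferences, unpenalized maximum likelihood would push the scores apart without bound.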

2. Personalized Healthcare

In the healthcare sector, DPO can be used to tailor treatment plans to individual patient preferences. Traditional clinical decision support systems often prioritize medical efficacy, sometimes at the expense of patient comfort or preference. DPO allows for the creation of treatment plans that balance medical outcomes with the patient’s personal values and preferences.

For instance, in managing chronic conditions like diabetes, a DPO-driven system could help design a treatment regimen that considers not only the most effective medication but also the patient’s lifestyle, dietary preferences, and comfort with certain procedures. Plans that fit a patient’s life in this way could improve adherence and, in turn, overall outcomes.

3. Finance and Investment

Preference-based optimization could also be applied in finance, particularly to personalized investment strategies. Traditional algorithmic trading models typically optimize for financial metrics such as return on investment or risk-adjusted returns. However, these models may not always align with the individual preferences of investors, such as ethical considerations or risk tolerance.

By incorporating investor preferences directly into the optimization process, DPO can help create investment portfolios that are not only financially sound but also closely aligned with the investor’s personal goals and values. This can lead to greater investor satisfaction and potentially better long-term financial outcomes.

Challenges in Implementing Direct Preference Optimization

While Direct Preference Optimization offers numerous benefits, its implementation is not without challenges. Some of the most significant hurdles include:

1. Quality of Preference Data

The effectiveness of DPO heavily depends on the quality of the preference data collected. Poorly designed feedback mechanisms can result in biased or inaccurate preferences, leading to suboptimal model performance. Ensuring that the feedback is representative, unbiased, and truly reflective of user preferences is critical.

2. Computational Complexity

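Compared with RLHF, DPO is relatively lightweight: it needs no separately trained reward model and no sampling from the model during training. It can still demand significant computational resources at scale, because each training step evaluates both the model being trained and the frozen reference model on every preferred and dispreferred output, which becomes costly with large datasets or highly complex models. Efficient algorithms and high-performance computing infrastructure help manage this cost.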
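In the language-model form of DPO, one avoidable cost is re-running the frozen reference model on the same data every epoch. A minimal sketch of computing those reference log-probabilities once up front, assuming a hypothetical `ref_logprob(prompt, output)` function that returns log π_ref(output | prompt):

```python
from functools import lru_cache
from typing import Callable, List, Sequence, Tuple

# (prompt, preferred_output, dispreferred_output)
Triple = Tuple[str, str, str]


def precompute_reference_logps(
    pairs: Sequence[Triple],
    ref_logprob: Callable[[str, str], float],
) -> List[Tuple[float, float]]:
    """Score every pair with the frozen reference model exactly once.

    Because the reference never changes during DPO training, the results can
    be stored with the dataset and reused in every epoch. The cache also skips
    repeated (prompt, output) combinations.
    """
    cached = lru_cache(maxsize=None)(ref_logprob)
    return [(cached(x, chosen), cached(x, rejected)) for x, chosen, rejected in pairs]
```

The training loop then only needs to run the model being trained; the trade-off is storing two numbers per pair, and the values must be recomputed if the reference model is ever changed.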

3. Interpretability

As with many advanced machine learning models, DPO models can sometimes be seen as “black boxes,” where it is difficult to understand how they arrive at their decisions. Ensuring that these models are interpretable and transparent is crucial, especially in sensitive applications such as healthcare and finance.

4. Ethical and Privacy Concerns

DPO relies on personal preferences, which can include sensitive data. Ensuring that this data is handled securely and ethically is paramount. Privacy-preserving techniques, such as differential privacy or secure multi-party computation, may be necessary to protect user data while still enabling effective optimization.
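Differential privacy can be applied even before preferences reach the training pipeline. A minimal sketch of one classic option, randomized response on each binary preference label; this is a general technique rather than anything DPO-specific, and the function names and ε values are illustrative assumptions.

```python
import math
import random
from typing import List, Tuple

Pair = Tuple[str, str]  # (preferred, dispreferred)


def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true label under epsilon-randomized response."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_pairs(pairs: List[Pair], epsilon: float, rng: random.Random) -> List[Pair]:
    """Report each preference truthfully with probability keep, else flip it.

    The ratio keep / (1 - keep) equals e^epsilon, which gives epsilon-local
    differential privacy for the one bit "which option was preferred".
    """
    keep = keep_probability(epsilon)
    return [(a, b) if rng.random() < keep else (b, a) for a, b in pairs]


def debias_rate(observed_rate: float, epsilon: float) -> float:
    """Unbiased estimate of the true preference rate from randomized reports.

    Requires epsilon > 0; at epsilon = 0 the reports carry no information.
    """
    keep = keep_probability(epsilon)
    return (observed_rate - (1.0 - keep)) / (2.0 * keep - 1.0)
```

Smaller ε means more flipped labels and stronger privacy. Aggregate statistics can be corrected with `debias_rate`, but a model trained on the randomized pairs still sees noisier labels, so the privacy budget trades directly against data quality.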

Ethical Implications of Direct Preference Optimization

The ethical implications of Direct Preference Optimization are profound, particularly as AI systems become more deeply integrated into daily life. One of the key ethical considerations is ensuring that DPO models are used to enhance user autonomy rather than manipulate it. This involves designing systems that respect user preferences and make it easy for users to understand and control how their preferences are used.

Moreover, DPO models must be designed to avoid reinforcing harmful biases. For example, if a DPO system in a hiring platform learns from biased preferences, it could perpetuate discrimination. Ensuring that these models are fair and inclusive is a critical ethical challenge.

Future Directions in Direct Preference Optimization

The future of Direct Preference Optimization is bright, with several exciting avenues for research and development. Some of the key areas of focus include:

1. Advancing Preference Elicitation Techniques

As the success of DPO depends on accurately capturing user preferences, there is ongoing research into more sophisticated preference elicitation techniques. This includes methods that can capture more complex and nuanced preferences, as well as techniques that minimize the cognitive load on users.

2. Scalability

Improving the scalability of DPO methods is another important area of focus. This involves developing more efficient algorithms and making better use of hardware, such as GPUs and other specialized accelerators, to handle the computational demands of large-scale DPO.

3. Integrating DPO with Other AI Techniques

There is significant potential in combining Direct Preference Optimization with other AI techniques, such as transfer learning, meta-learning, or explainable AI. These integrations could lead to even more powerful and versatile models that can learn more effectively from fewer preferences and provide more transparent and understandable outputs.

Conclusion

Direct Preference Optimization represents a significant evolution in the field of machine learning. By focusing on optimizing models based on human preferences, DPO offers a way to create AI systems that are not only technically proficient but also closely aligned with the needs and desires of users. As AI continues to play an increasingly central role in our lives, the ability to create systems that respect and reflect human preferences will be critical.

While there are challenges to be addressed, particularly around the quality of preference data, computational complexity, and ethical considerations, the potential benefits of Direct Preference Optimization are immense. From more personalized recommendation systems to better-tailored healthcare and investment strategies, DPO has the potential to revolutionize how AI interacts with and supports humans.

As research and development in this area continue, Direct Preference Optimization is likely to become an essential tool in the AI toolkit, enabling the creation of more personalized, ethical, and effective AI systems. The future of AI may well be defined by its ability to learn and optimize directly from our preferences, making Direct Preference Optimization a truly transformative approach in the world of machine learning.

For further reading and to delve deeper into the concepts discussed, explore these resources: