Unlocking the Power of AI: Key Tools and Techniques for Visual Data Analysis

Convolutional Neural Networks (CNNs): A Deep Dive into Image Recognition

When it comes to visual data analysis, few tools match the power and versatility of Convolutional Neural Networks (CNNs). These deep learning models have reshaped image processing, advancing fields such as object detection, face recognition, and image classification.

The Magic Behind CNNs

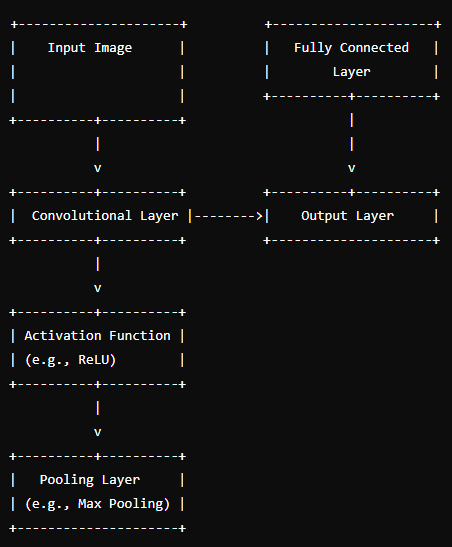

CNNs are designed for the grid-like structure of images, using a stack of layers that each pick out different features. Here’s how their architecture works:

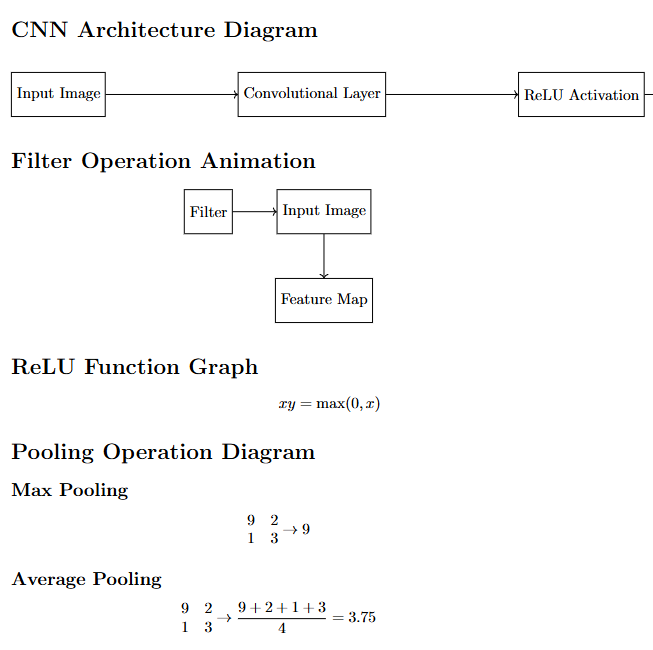

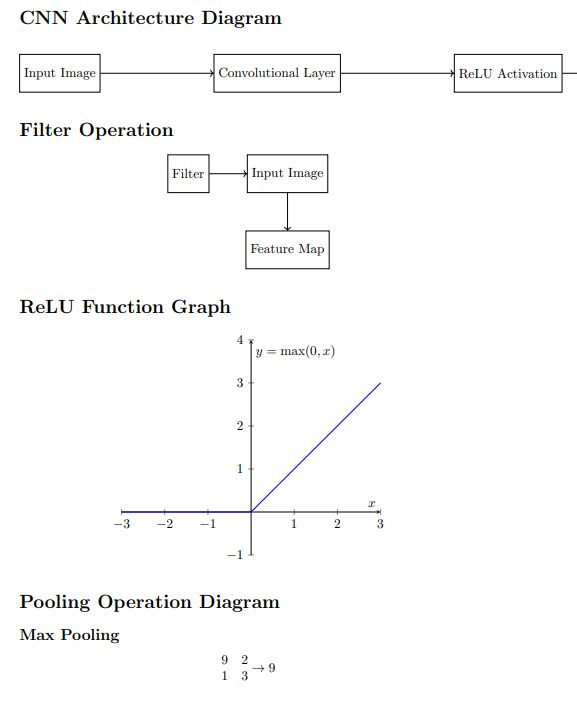

Convolutional Layers: Filters slide over the input image, producing feature maps that highlight different aspects such as edges, textures, and patterns.
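To make the sliding-filter idea concrete, here is a minimal sketch in plain Python, assuming stride 1 and no padding. The toy image and the hand-written `vertical_edge` filter are illustrative assumptions; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the patch under the filter element-wise and sum the result.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, which is where the filter straddles the boundary between the dark and bright halves.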

Activation Functions: Functions like ReLU (Rectified Linear Unit) introduce non-linearity, allowing the network to learn complex patterns.
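ReLU itself is a one-line function: it keeps positive values and replaces negative ones with zero. The sketch below applies it to a small made-up feature map; the values are assumptions chosen only for illustration.

```python
def relu(x):
    """Rectified Linear Unit: pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


# Hypothetical feature map containing both positive and negative responses.
feature_map = [
    [-2.0, 0.5],
    [3.0, -0.1],
]

activated = [[relu(value) for value in row] for row in feature_map]
print(activated)  # [[0.0, 0.5], [3.0, 0.0]]
```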

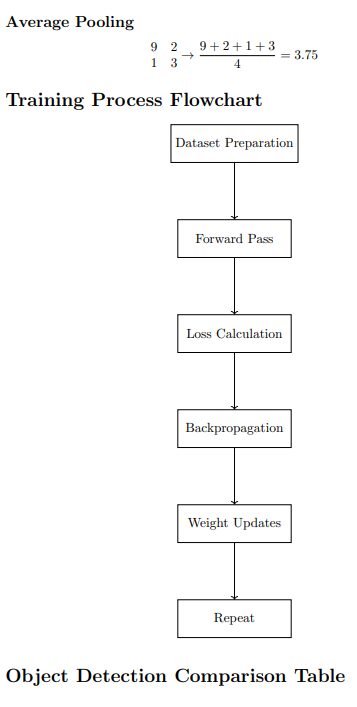

Pooling Layers: These reduce the spatial dimensions of the feature maps, which lowers the computational load and can help reduce overfitting.
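As a rough illustration, the sketch below implements max pooling with a 2×2 window and a stride equal to the window size, which is one common configuration. The input values are made up for the example.

```python
def max_pool2d(feature_map, size=2):
    """Max pooling with a square window; the stride equals the window size."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            # Collect the values inside the current window and keep the largest.
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

Each 2×2 block collapses to its maximum, so the 4×4 map becomes 2×2 while the strongest responses survive.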

Fully Connected Layers: These layers integrate the extracted features to make a final prediction, such as class probabilities in image classification tasks.
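The following sketch shows one way a fully connected layer followed by softmax could turn a flattened feature vector into class probabilities. The feature values, weights, biases, and the choice of three classes are all hypothetical; a trained network would learn the weights and biases.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(weight_row, inputs)) + bias
        for weight_row, bias in zip(weights, biases)
    ]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)
    # Subtracting the largest logit keeps exp() numerically stable.
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features and made-up parameters for three classes.
features = [4.0, 2.0, 2.0, 8.0]
weights = [
    [0.1, 0.0, 0.0, 0.2],
    [0.0, 0.3, 0.1, 0.0],
    [-0.1, 0.0, 0.0, 0.05],
]
biases = [0.0, 0.0, 0.0]

logits = dense(features, weights, biases)
probabilities = softmax(logits)
predicted_class = probabilities.index(max(probabilities))
print(predicted_class, probabilities)
```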

Training CNNs

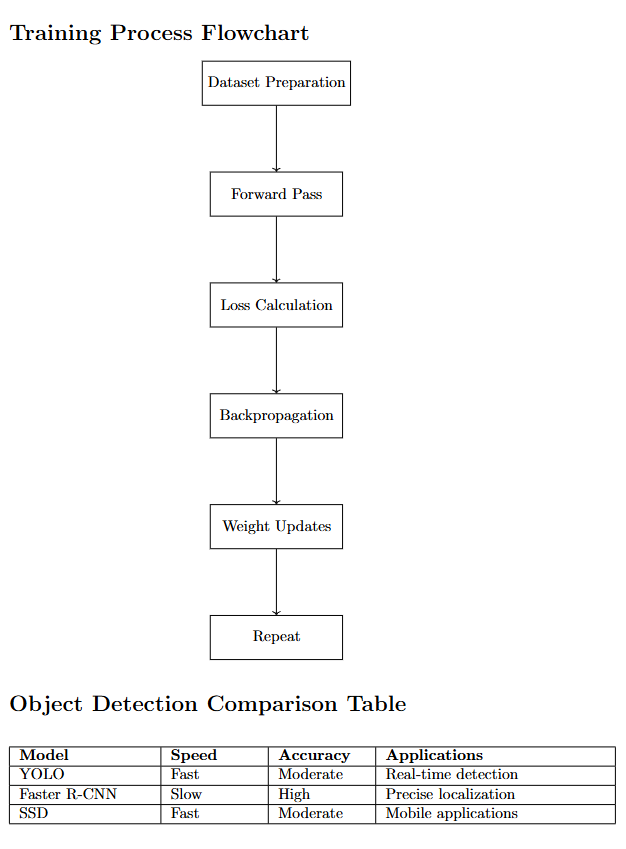

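Training a CNN typically involves a large dataset of labeled images. The network learns by adjusting its weights to minimize the error between predicted and actual labels: backpropagation computes the gradient of that error with respect to each weight, and an optimizer such as gradient descent uses those gradients to update the weights. Repeating this over many iterations refines the network’s ability to recognize patterns in new data.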
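The weight-update loop can be sketched on a deliberately tiny model with a single weight. This is not a CNN; it only illustrates the idea of computing a gradient of the error and stepping the weight against it. The data, learning rate, and step count are assumptions chosen for the example.

```python
# Toy dataset that follows y = 2 * x, so the ideal weight is 2.0.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

weight = 0.0          # starting guess
learning_rate = 0.05  # hypothetical step size


def mean_squared_error(w):
    """Average squared difference between predictions w * x and labels y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


initial_loss = mean_squared_error(weight)

for step in range(100):
    # Gradient of the mean squared error with respect to the weight (chain rule).
    gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
    # Move the weight a small step in the direction that reduces the error.
    weight -= learning_rate * gradient

final_loss = mean_squared_error(weight)
print(weight, initial_loss, final_loss)
```

In a real CNN the same loop runs over millions of weights, with backpropagation supplying the gradient for each one.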

Applications of CNNs

Object Detection: Models like YOLO (You Only Look Once), Faster R-CNN, and SSD (Single Shot MultiBox Detector) are widely used. YOLO divides the image into a grid and predicts bounding boxes and class probabilities for each cell in a single pass, which makes it very fast. Faster R-CNN combines a region proposal network with a detector, favoring accuracy at some cost in speed, while SSD strikes a balance between speed and precision.
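The grid idea can be illustrated with a small helper that maps an object's center to the grid cell containing it. In the original YOLO formulation, that cell is the one responsible for predicting the object. The function name, the 7×7 grid, and the 448×448 image size are assumptions used for illustration, not any library's API.

```python
def responsible_cell(center_x, center_y, image_width, image_height, grid_size=7):
    """Return the (row, col) of the grid cell that contains the object's center."""
    # Scale pixel coordinates to grid units, then clamp to the last valid cell.
    col = min(int(center_x / image_width * grid_size), grid_size - 1)
    row = min(int(center_y / image_height * grid_size), grid_size - 1)
    return row, col


# Hypothetical object centered at pixel (300, 100) in a 448x448 image.
cell = responsible_cell(center_x=300, center_y=100, image_width=448, image_height=448)
print(cell)  # (1, 4)
```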

Face Recognition: FaceNet uses a CNN to map face images to a compact Euclidean space in which distance reflects face similarity, and DeepFace likewise learns a face representation with a deep network. Models of this kind are used in security systems and social media tagging.
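Once faces are mapped to points in a Euclidean space, recognition reduces to comparing distances. The sketch below assumes the embeddings have already been produced by some model; the three-dimensional vectors and the threshold are made up for illustration, and real embeddings have many more dimensions.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def same_person(embedding_a, embedding_b, threshold=0.6):
    """Treat two faces as a match when their embeddings are close enough.

    The threshold is a hypothetical value; in practice it is tuned on validation data.
    """
    return euclidean_distance(embedding_a, embedding_b) < threshold


# Hypothetical embeddings: two photos of one person, one photo of someone else.
anchor = [0.10, 0.90, 0.20]
same_face = [0.15, 0.85, 0.25]
other_face = [0.90, 0.10, 0.70]

print(same_person(anchor, same_face))   # True
print(same_person(anchor, other_face))  # False
```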

Image Classification: Networks like VGG (Visual Geometry Group) and ResNet (Residual Networks) are well-known examples. VGG models stack many layers of small convolutional filters, while ResNet introduces skip connections that make very deep networks practical to train.
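A skip connection adds a block's input back onto its output, so the block only has to learn a correction to the input rather than a whole new representation. The sketch below is a simplified stand-in for a residual block: `transform` represents the block's convolutional layers and is a hypothetical placeholder.

```python
def relu(vector):
    """Element-wise ReLU over a list of numbers."""
    return [max(0.0, value) for value in vector]


def residual_block(x, transform):
    """Compute ReLU(F(x) + x), where F is the block's learned transformation."""
    fx = transform(x)
    # The skip connection: add the unchanged input to the transformed output.
    return relu([f + xi for f, xi in zip(fx, x)])


def zero_transform(x):
    """Placeholder for F that outputs zeros, as if the block had learned nothing."""
    return [0.0 for _ in x]


x = [1.0, 2.0, 3.0]
output = residual_block(x, zero_transform)
print(output)  # [1.0, 2.0, 3.0]
```

Even when the transformation contributes nothing, the input passes through unchanged, which is part of why stacking many such blocks does not degrade the signal.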

Detailed Example: CNN in Action

Step-by-Step Process:

- Input Image: The image is resized to a fixed dimension, typically 224×224 pixels.

- Convolutional Layer: Filters slide over the image to produce feature maps.

- Activation Layer: ReLU activation is applied.

- Pooling Layer: This step down-samples the feature maps.

- Fully Connected Layer: The feature maps are flattened into a vector and passed through fully connected layers.

- Output: Softmax function generates a probability distribution over classes.
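To see how the data changes size as it moves through the steps above, the sketch below tracks the spatial dimensions of a 224×224 input. The 3×3 filter, the 2×2 pooling window, and the count of 8 filters are assumptions picked for the example; real architectures vary.

```python
def output_size(input_size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling step along one dimension."""
    return (input_size + 2 * padding - kernel) // stride + 1


input_size = 224   # resized input image is 224x224
num_filters = 8    # hypothetical number of convolutional filters

# Convolution with a 3x3 filter, stride 1, no padding.
after_conv = output_size(input_size, kernel=3)

# ReLU is applied element-wise, so it does not change the size.
after_relu = after_conv

# Max pooling with a 2x2 window and stride 2.
after_pool = output_size(after_relu, kernel=2, stride=2)

# Flattening turns all feature maps into a single vector.
flattened_length = after_pool * after_pool * num_filters

print(after_conv, after_pool, flattened_length)  # 222 111 98568
```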

CNN Architecture Diagram:

Expanding Horizons: CNNs Beyond Traditional Images

CNNs are not limited to everyday photographs. The same ideas have been adapted to other kinds of data, such as graphs, text, and audio, and to specialized domains such as medical imaging:

Text Processing: In tasks like sentiment analysis, topic categorization, and language translation, CNNs slide filters over sequences of word or character embeddings to capture local patterns such as short phrases, which deeper layers can combine into larger ones.
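For text, the filter slides along one dimension (the token sequence) instead of two. The sketch below is a heavily simplified illustration: each token is represented by a single made-up score rather than a full embedding vector, and the filter weights are hypothetical.

```python
def conv1d(sequence, kernel):
    """Slide `kernel` along `sequence` (stride 1, no padding)."""
    k = len(kernel)
    return [
        sum(sequence[i + j] * kernel[j] for j in range(k))
        for i in range(len(sequence) - k + 1)
    ]


tokens = ["the", "movie", "was", "really", "great"]
# Hypothetical one-number "embeddings": higher means more positive sentiment.
scores = [0.0, 0.0, 0.0, 0.5, 1.0]

# Hypothetical filter spanning two adjacent tokens (a bigram detector).
bigram_filter = [1.0, 1.0]

features = conv1d(scores, bigram_filter)
print(features)  # [0.0, 0.0, 0.5, 1.5]

# Max-over-time pooling keeps the strongest response across the sentence.
strongest = max(features)
position = features.index(strongest)
print(tokens[position:position + 2])  # ['really', 'great']
```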

Audio Processing: For speech recognition, sound classification, and music composition, CNNs process time-series data to extract intricate patterns in sound.

Medical Imaging: CNNs excel in tasks like X-ray image analysis, cancer detection, and 3D medical image segmentation, aiding in the early diagnosis and treatment of diseases.

Fully Connected Layer Example: This diagram shows how fully connected layers connect every neuron in one layer to every neuron in the next layer, providing an example with input, hidden, and output layers.

Pooling Layer Example: A simple representation of a pooling layer, showing how a 2×2 matrix is reduced to a single value using max pooling.

Real-World Impact of CNNs

A recent study on Human Activity Recognition (HAR) showcased a CNN-based technique for interpreting sensor sequence data, achieving an impressive accuracy rate of 97.20%. This application exemplifies how CNNs can transform various fields, from healthcare to security.

Conclusion

Convolutional Neural Networks are at the forefront of visual data analysis, driving innovations across numerous fields. Their ability to learn and recognize patterns in visual and non-visual data makes them indispensable tools in today’s AI landscape.

For more insights into the latest developments and applications of CNNs, explore the detailed resources available at Viso.ai and Vitalflux.