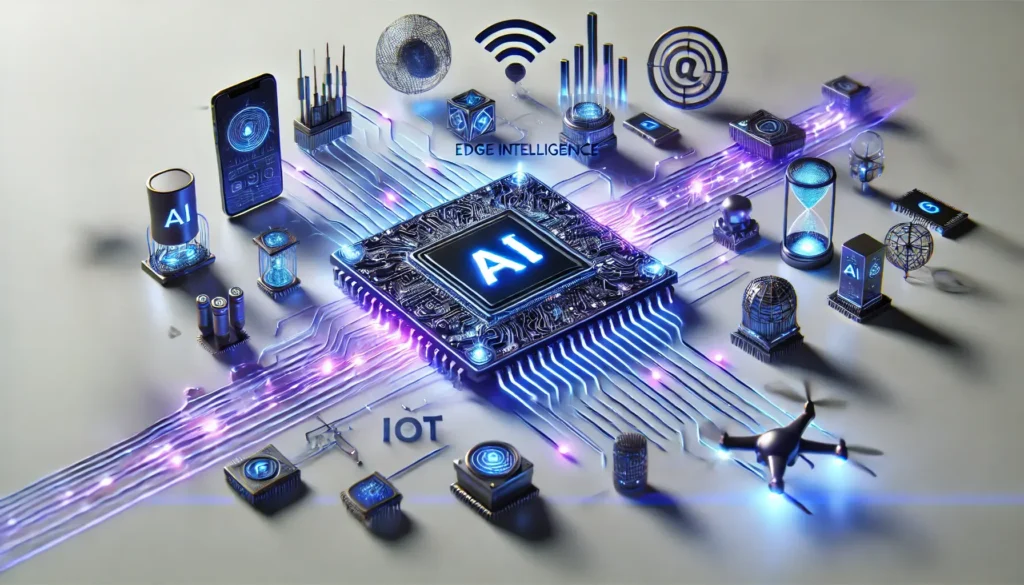

What Is Edge Intelligence?

Edge intelligence refers to the ability of devices at the “edge” of a network—like smartphones, IoT gadgets, and industrial machines—to process data locally rather than relying solely on the cloud. This paradigm enables faster decision-making and reduces latency, making real-time operations possible.

At its core, edge intelligence is about efficiency. Think of autonomous cars reacting instantly to obstacles or wearable devices analyzing health metrics on the fly. Without edge processing, many of these technologies wouldn’t function effectively.

Why Does Edge Intelligence Matter?

As the world becomes more connected, traditional cloud-based systems struggle with delays and bandwidth limitations. By moving computation closer to the data source, edge computing minimizes latency, enhances privacy, and reduces reliance on centralized servers.

For industries like healthcare, finance, and retail, this localized approach means quicker insights, smoother operations, and less dependency on internet connectivity.

The Challenges of Edge Computing

However, edge intelligence isn’t without its hurdles. Traditional processors often can’t keep up with the demand for real-time analytics and AI workloads. Power consumption, thermal management, and hardware scalability remain ongoing concerns.

This is where specialized AI chips step in, bridging the gap between capability and practicality.

What Are Specialized AI Chips?

Designed for AI-Driven Workloads

Unlike general-purpose CPUs, AI chips are custom-built to handle complex algorithms like machine learning and neural networks. These chips excel at repetitive tasks, matrix operations, and parallel processing, which are essential for AI models.

Popular examples include Google’s Tensor Processing Units (TPUs) and NVIDIA’s GPUs. These chips enable devices to process large datasets efficiently while conserving energy.

Compact and Efficient by Design

For edge devices, size and energy efficiency are crucial. Specialized AI accelerators, like the Edge TPU or Apple’s Neural Engine, are designed with these constraints in mind. They pack advanced capabilities into small, low-power modules suitable for edge environments.

This balance ensures that devices can process AI tasks on-site without draining batteries or requiring extensive cooling systems.

Key Technologies Powering AI Chips

Specialized AI chips rely on:

- ASICs (Application-Specific Integrated Circuits): Tailored for specific tasks, offering maximum efficiency.

- FPGAs (Field-Programmable Gate Arrays): Adaptable chips that can be reprogrammed as needed.

- Neuromorphic Computing: Mimicking the human brain’s neural architecture for ultra-efficient processing.

How AI Chips Revolutionize Edge Devices

Faster Processing, Real-Time Decisions

One of the standout benefits of AI chips is their ability to process data in real-time. For applications like robotics or smart surveillance, the difference between milliseconds and seconds can be critical.

By performing computations locally, edge devices powered by AI chips eliminate the need to send data back to the cloud, ensuring split-second decision-making.

Enhanced Privacy and Data Security

With data processed locally on the device, the risks associated with transmitting sensitive information to remote servers are significantly reduced. This advantage is particularly valuable in healthcare, where protecting patient data is paramount, or in smart cities, where surveillance data needs tight security.

Reduced Costs and Network Strain

AI chips also help reduce the dependency on high-speed internet. This lowers operational costs for businesses by minimizing bandwidth usage and scaling down expensive cloud infrastructure.

For environments with unreliable internet, such as remote industrial sites, edge devices with AI chips ensure uninterrupted functionality.

Industries Driving Adoption of AI Chips

Healthcare and Wearables

From heart rate monitors to predictive analytics in hospitals, edge AI devices powered by specialized chips are transforming patient care. They offer instant diagnostics, improving response times and outcomes.

Autonomous Vehicles

Self-driving cars are arguably the flagship use case for edge AI chips. These vehicles rely on split-second computations to interpret road conditions, navigate traffic, and avoid collisions—all without depending on the cloud.

Retail and Smart Sensors

Retailers use edge devices to analyze foot traffic, personalize customer experiences, and optimize inventory in real time. AI chips ensure these processes happen smoothly, even during peak times.

Comparing Specialized AI Chips to Traditional Architectures

General-Purpose CPUs vs. AI Chips

General-purpose CPUs (Central Processing Units) handle a variety of tasks but aren’t optimized for high-performance AI workloads. Their versatility comes at the expense of speed and energy efficiency, making them less ideal for edge computing scenarios.

In contrast, specialized AI chips focus on parallel processing and matrix multiplication—key components of machine learning. This specialization allows them to deliver greater efficiency and processing power in a smaller footprint.

For example:

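- A general-purpose CPU core works through instructions largely in sequence, typically completing only a handful of arithmetic operations per clock cycle.

- A specialized chip, like a TPU, performs thousands of multiply-accumulate operations in parallel each cycle, which adds up to trillions of operations per second.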
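
To make the parallelism point concrete, the sketch below counts the multiply-accumulate (MAC) operations in a single dense layer and estimates an idealized runtime at a given throughput. The layer size and the two throughput figures are illustrative assumptions, not measurements of any particular chip.

```python
def dense_layer_macs(batch: int, in_features: int, out_features: int) -> int:
    """MAC count for a (batch x in_features) @ (in_features x out_features) product."""
    return batch * in_features * out_features


def seconds_at_throughput(macs: int, ops_per_second: float) -> float:
    """Idealized runtime, counting each MAC as two operations (multiply and add)."""
    return (2 * macs) / ops_per_second


# Hypothetical layer: one input vector, 1024 inputs, 1024 outputs.
macs = dense_layer_macs(1, 1024, 1024)
print(macs)  # 1048576

# Assumed throughputs, chosen only to show the scale of the gap.
for label, ops in [("10 GOPS", 10e9), ("4 TOPS", 4e12)]:
    print(label, seconds_at_throughput(macs, ops), "seconds")
```

Real hardware rarely sustains its peak rate, so the numbers are best read as a lower bound on runtime rather than a prediction.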

GPUs and Their Limitations

While GPUs are often the go-to for training and running AI models, they have limitations in edge environments. GPUs consume more power and generate significant heat, requiring advanced cooling systems.

Specialized AI chips, on the other hand, offer a middle ground. They’re more energy-efficient than GPUs but still provide the computational muscle needed for real-time analytics.

The Role of ASICs and FPGAs

- ASICs (Application-Specific Integrated Circuits): Built for specific AI tasks, they are highly efficient but lack flexibility. Once fabricated, their logic is fixed and cannot be reconfigured.

- FPGAs (Field-Programmable Gate Arrays): Offer adaptability, letting developers reconfigure the chip as AI models evolve. While not as efficient as ASICs, FPGAs shine in dynamic, edge environments.

AI Chips and Sustainability: A Perfect Match

Lower Energy Consumption

Energy efficiency is a critical advantage of AI chips. Because they are built around the operations AI models actually use, specialized chips typically deliver more inference work per watt than general-purpose hardware.

For edge devices like drones, mobile phones, and IoT gadgets, this efficiency translates to longer battery life and reduced environmental impact.

Reducing E-Waste with Longevity

AI chips designed for edge use are built to last. Their robust construction and ability to handle evolving algorithms (in the case of FPGAs) reduce the need for frequent replacements. This longevity curtails electronic waste, a growing environmental concern.

Sustainable Innovation

As industries prioritize sustainability, AI chips enable smarter energy grids, resource-efficient manufacturing, and precise monitoring in agriculture. By enabling intelligent edge solutions, these chips actively contribute to greener operations.

Future Trends in AI Chips for Edge Intelligence

Miniaturization and Integration

As demand for smaller, more powerful devices grows, AI chips are becoming even more compact. Chiplet architectures, which combine several smaller dies with different functions in a single package, are gaining popularity. This integration raises performance without increasing device size.

Edge AI and 5G: A Symbiotic Relationship

The rollout of 5G networks will amplify the potential of edge devices. High-speed connectivity complements on-device AI processing, allowing seamless communication between edge devices and central servers when necessary.

AI chips play a vital role here, managing workloads locally while leveraging 5G for occasional data sharing or updates.

Democratization of Edge AI

Thanks to advancements in chip manufacturing and open-source AI frameworks, edge AI is becoming more accessible. Affordable AI chips are empowering smaller businesses to adopt edge computing solutions, leveling the playing field in tech innovation.

Exploring Specific Edge Applications

Smart Manufacturing and Industry 4.0

Enhancing Predictive Maintenance

Edge devices equipped with AI chips monitor industrial machinery, analyzing data like vibration, temperature, and pressure in real time. This enables predictive maintenance, where issues are detected and resolved before costly breakdowns occur.

For example, factories using AI-driven edge sensors can reduce unplanned downtime by up to 50%, ensuring seamless operations.
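
As a rough illustration of on-device anomaly detection, the sketch below flags sensor readings that deviate sharply from a trailing window of recent values. The window size, the threshold, and the sample vibration data are assumptions made for the example; a production system would more likely use a trained model.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip the check when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical vibration readings in mm/s with one spike at index 6.
vibration_mm_s = [1.0, 1.1, 0.9, 1.0, 1.05, 1.0, 4.8, 1.0, 0.95]
print(detect_anomalies(vibration_mm_s))  # [6]
```

Note that the spike itself enters the window afterwards and inflates the standard deviation for the next few readings, which is one reason real deployments often exclude flagged values from the baseline.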

Real-Time Quality Control

In production lines, computer vision powered by AI chips ensures immediate defect detection. Cameras and sensors process images on-site, flagging inconsistencies without relying on the cloud.

This localized processing saves time, reduces waste, and maintains high-quality standards.

Optimizing Workflow Automation

Robotic arms and automated conveyor systems benefit from low-latency AI decision-making at the edge. AI chips allow these systems to adapt dynamically, streamlining operations and increasing throughput.

Agriculture and Smart Farming

Precision Farming with Edge AI

AI chips in drones and IoT devices help farmers monitor soil health, crop growth, and weather conditions. These devices analyze data instantly, enabling precise irrigation, fertilization, and pest control.

This approach increases yields while conserving resources like water and chemicals.

Livestock Monitoring

Edge-enabled wearables track livestock health, movement, and feeding patterns. By processing this data locally, farmers receive real-time alerts for issues like illness or stress, ensuring better animal welfare.

Autonomous Agricultural Machines

Self-driving tractors and harvesters powered by AI accelerators make farming more efficient. They can navigate fields, avoid obstacles, and perform tasks like plowing or planting with minimal human intervention.

Smart Retail and Customer Experience

Real-Time Inventory Management

AI chips in edge-enabled smart shelves monitor stock levels and send alerts for replenishment. They also analyze purchasing patterns to optimize inventory management, reducing waste and increasing sales.

Personalized Shopping Experiences

Cameras and sensors in stores analyze customer behavior and demographics on-site. This enables tailored advertising and promotions without compromising customer privacy, as all processing occurs locally.

Checkout Automation

AI chips power cashier-less checkout systems, recognizing products and processing payments instantly. By eliminating queues, retailers enhance customer satisfaction and streamline store operations.

Healthcare and Wearable Devices

On-Device Health Analysis

Wearable devices, like fitness trackers and medical monitors, use AI chips to process health data instantly. They can analyze heart rate, blood oxygen levels, and ECGs, providing real-time insights without requiring cloud connectivity.

Remote Patient Monitoring

Edge AI in home medical devices enables continuous monitoring of patients with chronic conditions. Alerts are generated locally for anomalies, such as irregular heartbeats or low oxygen levels, ensuring timely interventions.

Smart Imaging in Diagnostics

Portable diagnostic tools equipped with AI accelerators perform tasks like X-ray analysis and ultrasound imaging directly on the device. This reduces delays, especially in remote areas with limited internet connectivity.

Exploring a Specific Edge Device: NVIDIA Jetson Orin Series

Overview of the Jetson Orin Platform

The NVIDIA Jetson Orin series is a powerful suite of edge AI devices designed for robotics, autonomous machines, and industrial applications. These systems integrate high-performance computing capabilities with energy efficiency, making them ideal for edge intelligence tasks.

Key Specifications:

- AI Performance: Up to 275 TOPS (Tera Operations Per Second) for AI workloads.

- Processor: ARM Cortex-A78AE CPU combined with NVIDIA Ampere GPU architecture.

- Memory: Up to 64GB of LPDDR5 memory for multitasking and model execution.

- Low Power Consumption: Operates efficiently, consuming less than 15 watts in some configurations.

This combination allows Jetson Orin devices to handle advanced tasks like computer vision, speech recognition, and autonomous navigation in real time.

Use Cases for Jetson Orin

Autonomous Robotics

Jetson Orin powers robots in warehouses, factories, and agriculture. With its edge AI capabilities, robots can:

- Identify and sort objects dynamically.

- Avoid obstacles in complex environments.

- Collaborate with humans in shared workspaces.

For example, robots equipped with Jetson Orin NX modules are used for automating tasks like picking and packaging items in e-commerce warehouses.

Smart Surveillance

Jetson Orin devices enable smart security systems that analyze video feeds locally, reducing latency and enhancing privacy.

- Cameras equipped with Orin modules can detect suspicious activity or recognize faces instantly.

- The localized processing eliminates the need for constant data uploads to the cloud, saving bandwidth and ensuring compliance with privacy regulations.

Healthcare Applications

In medical imaging, Jetson Orin modules process diagnostic scans like X-rays and MRIs at the point of care.

- These devices enable faster diagnostics in remote clinics where cloud connectivity may be limited.

- They also support real-time analysis for wearable devices like health monitors.

Focusing on Implementation Strategies for Edge AI Technologies

Key Steps to Deploy Edge AI

1. Choose the Right Hardware

Select AI chips or edge devices based on the specific requirements of your application:

- Low-power devices for wearables or IoT sensors.

- High-performance processors like NVIDIA Jetson or Google Edge TPU for complex tasks like video processing or deep learning.

2. Optimize AI Models for Edge

AI models need to be lightweight for edge devices to handle them efficiently.

- Use techniques like quantization, pruning, or knowledge distillation to reduce model size with minimal loss of accuracy.

- Tools like TensorFlow Lite or PyTorch Mobile can help adapt models for edge deployment.
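
To show what quantization does to a model's weights, here is a minimal pure-Python sketch of 8-bit affine quantization. It is not how TensorFlow Lite or PyTorch Mobile implement it internally; the function names and the sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Map floats to int8 with an affine scheme: real = scale * (q - zero_point)."""
    lo = min(min(values), 0.0)  # the range must include 0 so it stays exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if every value is 0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(x - zero_point) * scale for x in q]


# Hypothetical layer weights.
weights = [-0.8, -0.1, 0.0, 0.35, 1.2]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

print(q)
print([round(r, 4) for r in restored])
```

Each weight now fits in one byte instead of four, and the restored values differ from the originals by at most about half of `scale`. That small rounding error is where the accuracy cost of quantization comes from.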

3. Leverage Hybrid Architectures

Implement a hybrid approach, where critical, latency-sensitive tasks are processed locally, while non-critical tasks are offloaded to the cloud.

- For instance, an autonomous drone processes flight navigation locally while uploading mapping data to the cloud for future analysis.
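
One simple way to express this split is a routing rule based on each task's latency budget. The sketch below is a hypothetical policy, not a standard API: the `Task` type, the `route` function, and the 150 ms round-trip figure are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    max_latency_ms: float  # how long the caller can wait for a result


def route(task: Task, cloud_round_trip_ms: float, online: bool = True) -> str:
    """Run on the edge when the cloud is unreachable or too slow for the deadline."""
    if not online or task.max_latency_ms < cloud_round_trip_ms:
        return "edge"
    return "cloud"


tasks = [Task("flight_navigation", 20), Task("map_upload", 60_000)]
for t in tasks:
    print(t.name, "->", route(t, cloud_round_trip_ms=150))
# flight_navigation -> edge
# map_upload -> cloud
```

A real system would probably queue non-critical work while offline instead of running it locally, but the deadline comparison is the core of the idea.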

4. Prioritize Security

Since edge devices process sensitive data locally, securing them is crucial.

- Use hardware-based encryption provided by modern AI chips.

- Implement software updates and patch vulnerabilities regularly to protect against attacks.
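
As one small piece of a secure update path, a device can refuse any update whose authentication tag does not match. The sketch below uses an HMAC from Python's standard library; the key and firmware bytes are placeholders, and real deployments more commonly use asymmetric signatures with keys held in hardware.

```python
import hashlib
import hmac


def sign_update(firmware: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the firmware image."""
    return hmac.new(key, firmware, hashlib.sha256).hexdigest()


def verify_update(firmware: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign_update(firmware, key), expected_tag)


# Placeholder values for illustration only.
key = b"device-provisioned-secret"
firmware = b"\x7fELF...model-and-runtime-v2"
tag = sign_update(firmware, key)

print(verify_update(firmware, key, tag))                # True
print(verify_update(firmware + b"tampered", key, tag))  # False
```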

5. Monitor and Maintain Devices

Deploying edge devices at scale requires robust monitoring tools to track performance, detect anomalies, and manage updates remotely.

- Use platforms like NVIDIA Fleet Command for Jetson devices to orchestrate operations efficiently.
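
Whatever platform is used, the basic monitoring idea is a heartbeat check: each device reports in periodically, and anything silent for too long gets flagged. The sketch below is a generic illustration and does not use the Fleet Command API; the device IDs, timestamps, and timeout are invented.

```python
def stale_devices(last_seen: dict, now: float, timeout_s: float = 300.0) -> list:
    """Return IDs of devices whose last heartbeat is older than `timeout_s` seconds."""
    return sorted(dev for dev, ts in last_seen.items() if now - ts > timeout_s)


# Hypothetical heartbeat timestamps, in seconds.
last_seen = {"cam-01": 1000.0, "cam-02": 1290.0, "arm-07": 400.0}
print(stale_devices(last_seen, now=1400.0))  # ['arm-07', 'cam-01']
```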

Exploring More Edge Devices: Google’s Edge TPU and Intel’s Movidius VPU

Google’s Edge TPU

Overview

The Google Edge TPU (Tensor Processing Unit) is a purpose-built chip designed to accelerate machine learning inference directly on edge devices. It is part of Google’s Coral platform, which offers hardware and software for edge AI applications.

Key Features:

- Optimized for TensorFlow Lite: The Edge TPU is designed to run TensorFlow Lite models efficiently, making it ideal for edge deployments.

- Performance: Up to 4 TOPS (Tera Operations Per Second) with a power efficiency of 2 TOPS per watt.

- Low Power: Edge TPUs consume minimal energy, making them suitable for IoT devices, smart cameras, and drones.

- Compact Design: Available as USB accelerators, PCIe cards, and modules for integration into custom hardware.

Applications:

- Smart Home Devices: AI-powered security cameras and voice assistants that process data locally for real-time responses.

- Retail Analytics: Edge TPUs power smart shelves and checkout systems to analyze customer behavior and inventory without cloud dependence.

- Healthcare: Portable diagnostic devices use Edge TPUs for instant analysis of medical images or patient data.

Intel’s Movidius VPU

Overview

The Movidius Vision Processing Unit (VPU) is Intel’s offering for vision-centric AI applications at the edge. It is known for its energy-efficient, high-performance capabilities, particularly in computer vision tasks.

Key Features:

- High-Efficiency Vision Processing: Specifically designed for tasks like object detection, facial recognition, and image segmentation.

- Low Power Consumption: VPUs are optimized for embedded applications, consuming less than 2 watts on average.

- Neural Compute Stick: A plug-and-play USB device based on the Movidius VPU, designed for rapid prototyping of AI solutions.

- Versatility: Runs models from multiple AI frameworks, such as TensorFlow and Caffe, through Intel’s OpenVINO toolkit, for flexibility in deployment.

Applications:

- Drones and Robotics: Movidius VPUs help drones navigate obstacles and robots identify objects in dynamic environments.

- Smart Cameras: Used in industrial settings for quality control and security monitoring, with real-time analytics on-site.

- Augmented Reality (AR) Devices: Powering AR glasses to recognize objects and overlay contextual information in real time.

Comparison: Edge TPU vs. Movidius VPU

| Feature | Google Edge TPU | Intel Movidius VPU |
|---|---|---|
| Primary Use Case | General AI inference at the edge | Vision-focused AI applications |
| Power Efficiency | 2 TOPS per watt | < 2 watts for vision tasks |
| Supported Frameworks | TensorFlow Lite | OpenVINO, TensorFlow, Caffe |
| Form Factors | USB, PCIe, system-on-modules | USB stick, embedded modules |
| Specialization | TensorFlow-specific workloads | Advanced computer vision |

Use Cases and Industry Adoption

Industrial IoT

- Edge TPU: Ideal for low-power IoT sensors that run machine learning models on-site.

- Movidius VPU: Perfect for industrial cameras that need real-time image analysis for defect detection.

Healthcare

- Edge TPU: Powers portable medical devices that process TensorFlow Lite models for diagnostic insights.

- Movidius VPU: Enhances medical imaging devices by providing advanced image segmentation capabilities.

Autonomous Systems

- Edge TPU: Drones and robots that perform general AI inference tasks benefit from its versatility.

- Movidius VPU: Excels in vision-based tasks like obstacle detection and path planning in autonomous vehicles.

Deep Technical Comparison: Google Edge TPU vs. Intel Movidius VPU

Architecture and Design

Google Edge TPU

- Core Design: The Edge TPU is a custom-built ASIC (Application-Specific Integrated Circuit) tailored for running TensorFlow Lite models.

- Specialization: Optimized for matrix multiplications and tensor operations, making it highly efficient for inference workloads.

- Memory: Utilizes dedicated SRAM (Static RAM) for rapid data access, minimizing latency during operations.

- Performance Efficiency: Up to 4 TOPS, with a power efficiency of 2 TOPS per watt, making it one of the most energy-efficient chips for general AI tasks.

Intel Movidius VPU

- Core Design: The Movidius VPU is designed as a hybrid processing unit combining traditional CPU-like logic and specialized parallel compute units for vision tasks.

- Specialization: Excels in computer vision, with dedicated units for image processing, optical flow analysis, and 3D depth sensing.

- Memory: Features a large internal memory pool and support for external DRAM, which provides flexibility for complex workloads.

- Performance Efficiency: Optimized for vision tasks, consuming under 2 watts of power for workloads like object detection and image segmentation.

Supported Workloads

Google Edge TPU

- Machine Learning Models: Designed to execute TensorFlow Lite models, particularly those optimized with 8-bit quantization.

- Inference Focus: Supports classification, detection, and natural language processing at the edge.

- Use Cases: Versatile for IoT, smart home devices, and lightweight edge AI applications.

Intel Movidius VPU

- Computer Vision: Specializes in advanced image processing, including depth estimation, optical flow, and facial recognition.

- AI Models: Runs models from multiple frameworks, such as TensorFlow and Caffe, through Intel’s OpenVINO toolkit, allowing for diverse deployment scenarios.

- Use Cases: Tailored for applications requiring sophisticated visual analysis, such as robotics, drones, and surveillance.

Framework and Software Compatibility

| Feature | Google Edge TPU | Intel Movidius VPU |
|---|---|---|
| Framework Focus | TensorFlow Lite | OpenVINO, TensorFlow, Caffe |
| Optimization Tools | Edge TPU Compiler for TensorFlow Lite | OpenVINO toolkit for model optimization |
| Programming Interface | Python API, Coral ML Library | OpenVINO Runtime, Neural Compute SDK |

Key Difference:

The Edge TPU’s tight integration with TensorFlow Lite simplifies deployment for TensorFlow-based AI applications. Meanwhile, the Movidius VPU’s compatibility with multiple frameworks makes it more flexible for developers using diverse AI ecosystems.

Power Efficiency and Thermal Management

Google Edge TPU

- Optimized for low-power IoT devices, making it suitable for battery-powered applications like smart cameras and sensors.

- Minimal heat output, eliminating the need for active cooling in most use cases.

Intel Movidius VPU

- Designed for vision-heavy workloads at the edge, balancing performance and energy consumption.

- Efficient thermal design allows passive cooling for embedded applications, such as drones and robotics.

Performance Benchmarks

| Task | Google Edge TPU | Intel Movidius VPU |
|---|---|---|
| Image Classification | Processes models like MobileNet at 100+ FPS | Optimized for visual tasks, handles MobileNet at 60 FPS |
| Object Detection | Runs SSD MobileNet models at near real-time speeds | Handles YOLO models with advanced visual optimization |
| Latency | Sub-10 ms for inference tasks | Sub-20 ms for computer vision tasks |
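
The frame-rate and latency rows are two views of the same quantity when frames are processed one at a time: the time per frame is 1000 ms divided by the frame rate. The short sketch below does that conversion for the two frame rates quoted in the table; it assumes no batching or pipelining.

```python
def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


for fps in (100, 60):
    print(fps, "FPS ->", round(frame_time_ms(fps), 1), "ms per frame")
# 100 FPS -> 10.0 ms per frame
# 60 FPS -> 16.7 ms per frame
```

So 100+ FPS corresponds to 10 ms or less per frame, and 60 FPS to roughly 16.7 ms, which lines up with the sub-10 ms and sub-20 ms latency figures.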

Deployment Scenarios

| Feature | Google Edge TPU | Intel Movidius VPU |
|---|---|---|
| Best For | General-purpose AI inference tasks at the edge | Vision-centric applications with visual complexity |
| Form Factor | USB accelerators, PCIe cards, system-on-modules | USB sticks, embedded chips for integration |
| Scalability | Suitable for low-power IoT deployments | Adaptable for both consumer and industrial robotics |

Strengths and Weaknesses

Google Edge TPU

- Strengths: Energy efficiency, seamless TensorFlow Lite support, and low-cost deployment for IoT devices.

- Weaknesses: Limited flexibility for frameworks outside TensorFlow Lite, and less suitable for vision-specific workloads.

Intel Movidius VPU

- Strengths: Advanced vision processing capabilities and compatibility with multiple frameworks.

- Weaknesses: Lower general AI inference performance compared to Edge TPU and slightly higher power consumption for comparable workloads.

Choosing the Right Device

- Edge TPU: Best for developers working in TensorFlow Lite who need an energy-efficient, cost-effective solution for IoT or general AI tasks.

- Movidius VPU: Ideal for industries requiring advanced vision capabilities, such as robotics, autonomous vehicles, and industrial cameras.

Comparing Leading Edge AI Processors: Google Edge TPU, Intel Movidius VPU, NVIDIA Jetson Nano, and Hailo-8

Google Edge TPU

Architecture and Performance

- Design: Custom ASIC optimized for TensorFlow Lite models.

- Performance: Capable of 4 trillion operations per second (TOPS) with a power efficiency of 2 TOPS per watt.

- Memory: Utilizes on-chip memory for low-latency data access.

Applications

- IoT Devices: Ideal for low-power applications like smart home devices and sensors.

- Real-Time Inference: Suitable for tasks requiring immediate processing, such as image recognition.

Strengths

- Energy Efficiency: Low power consumption makes it suitable for battery-operated devices.

- Integration: Seamless compatibility with TensorFlow Lite simplifies deployment.

Limitations

- Flexibility: Primarily optimized for TensorFlow Lite, limiting versatility with other frameworks.

Intel Movidius VPU

Architecture and Performance

- Design: Hybrid processing unit combining CPU-like logic with specialized parallel compute units for vision tasks.

- Performance: Optimized for computer vision workloads with low power consumption, typically under 2 watts.

- Memory: Features a large internal memory pool and supports external DRAM.

Applications

- Computer Vision: Excels in tasks like object detection, facial recognition, and image segmentation.

- Embedded Systems: Used in drones, robotics, and smart cameras.

Strengths

- Versatility: Runs models from multiple AI frameworks, such as TensorFlow and Caffe, through Intel’s OpenVINO toolkit.

- Low Power: Efficient thermal design allows passive cooling in embedded applications.

Limitations

- General AI Tasks: May not perform as well in non-vision AI workloads compared to other processors.

NVIDIA Jetson Nano

Architecture and Performance

- Design: Features a 128-core NVIDIA Maxwell GPU and a quad-core ARM Cortex-A57 CPU.

- Performance: Delivers 472 GFLOPS of compute performance.

- Memory: Equipped with 4GB of LPDDR4 memory.

Applications

- Robotics: Powers autonomous robots with real-time AI processing.

- Edge Computing: Suitable for applications requiring substantial computational power at the edge.

Strengths

- Developer Support: Extensive resources and a large community facilitate development.

- Flexibility: Supports various AI frameworks, including TensorFlow, PyTorch, and Caffe.

Limitations

- Power Consumption: Higher power requirements may not be ideal for battery-powered devices.

Hailo-8

Architecture and Performance

- Design: Specialized AI processor with a unique architecture tailored for deep learning tasks.

- Performance: Offers up to 26 TOPS with an efficiency of 3 TOPS per watt.

- Memory: Includes on-chip memory optimized for AI workloads.

Applications

- Automotive: Used in advanced driver-assistance systems (ADAS) for real-time processing.

- Industrial IoT: Powers smart cameras and sensors in industrial settings.

Strengths

- High Efficiency: Combines high performance with low power consumption.

- Scalability: Suitable for a range of applications, from consumer electronics to industrial automation.

Limitations

- Ecosystem: Smaller developer community compared to more established platforms.

Comparative Summary

| Feature | Google Edge TPU | Intel Movidius VPU | NVIDIA Jetson Nano | Hailo-8 |
|---|---|---|---|---|
| Performance | 4 TOPS | Optimized for vision tasks | 472 GFLOPS | 26 TOPS |
| Power Efficiency | 2 TOPS per watt | <2 watts | Higher power consumption | 3 TOPS per watt |
| Primary Use Case | General AI inference | Computer vision | Robotics and edge computing | Deep learning tasks |
| Framework Support | TensorFlow Lite | OpenVINO, TensorFlow, Caffe | TensorFlow, PyTorch, Caffe | Various AI frameworks |
| Form Factor | USB, PCIe, modules | USB stick, embedded modules | Developer kit | PCIe card, modules |
| Developer Ecosystem | Moderate | Moderate | Extensive | Growing |
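
For the two chips that list both peak throughput and efficiency, dividing one by the other gives the power draw implied at peak load. The sketch below does that arithmetic using only the figures from the table; it is a back-of-the-envelope estimate, and typical power under real workloads may be considerably lower.

```python
def implied_power_watts(tops: float, tops_per_watt: float) -> float:
    """Power implied by a peak throughput and an efficiency figure."""
    return tops / tops_per_watt


# (peak TOPS, TOPS per watt) as listed in the comparison table.
chips = {"Google Edge TPU": (4, 2), "Hailo-8": (26, 3)}
for name, (tops, efficiency) in chips.items():
    print(name, round(implied_power_watts(tops, efficiency), 1), "W")
# Google Edge TPU 2.0 W
# Hailo-8 8.7 W
```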

Choosing the Right Processor

Selecting the appropriate edge AI processor depends on specific application requirements:

- Low-Power IoT Devices: Google Edge TPU offers energy efficiency for simple AI tasks.

- Advanced Computer Vision: Intel Movidius VPU excels in vision-centric applications.

- Robotics and High-Performance Edge Computing: NVIDIA Jetson Nano provides substantial computational power.

- Deep Learning Applications with Efficiency: Hailo-8 balances high performance with low power consumption.

Understanding the unique strengths and limitations of each processor ensures optimal performance and efficiency in edge AI deployments.

Key Takeaway

Edge AI processors like the Google Edge TPU, Intel Movidius VPU, NVIDIA Jetson Nano, and Hailo-8 are redefining the way devices handle artificial intelligence tasks by enabling faster, more efficient, and localized computations.

Choosing the Right Processor:

- Google Edge TPU shines in energy-efficient IoT applications and TensorFlow Lite integration.

- Intel Movidius VPU is the go-to for computer vision tasks, offering versatility across multiple frameworks.

- NVIDIA Jetson Nano delivers robust performance for robotics and high-demand edge applications, with an extensive developer ecosystem.

- Hailo-8 combines exceptional deep learning capabilities with power efficiency, making it ideal for demanding industrial and automotive applications.

The Future of Edge AI

The diverse strengths of these processors make edge AI more accessible across industries like healthcare, robotics, IoT, and autonomous systems. Selecting the right processor depends on your application’s specific needs, from low-power IoT sensors to high-performance autonomous machines.

Investing in edge AI technology today positions businesses to harness faster decision-making, enhanced privacy, and reduced reliance on cloud infrastructure—essential advantages in the connected world of tomorrow.
