Imagine a world where your smartphone could sense your frustration and offer a calming suggestion, or where customer service bots could detect and adapt to your mood, ensuring a smoother, more human-like interaction. This isn’t the plot of a sci-fi movie; it’s the emerging reality of Emotion AI. As technology evolves, so does its ability to understand us—not just our words, but our emotions. Welcome to the fascinating journey of how machines are learning to decode human feelings.

The Early Days: From Sentiment Analysis to Emotion Detection

In the beginning, computers were as emotionally intelligent as a brick wall. They could process data, but understanding human feelings was a distant prospect. The first step towards Emotion AI was sentiment analysis, a technique that lets machines assess whether a piece of text is positive, negative, or neutral. It was a real advance, but it barely scratched the surface of emotional understanding.

Early sentiment analysis relied heavily on keyword detection. If you tweeted, “I love this new phone!” the system would pick up on the word “love” and tag the tweet as positive. Simple, right? But what if you said, “I love this phone, but the battery life is awful”? Suddenly, the analysis becomes more complicated: one sentence carries both praise and a complaint, and a single label cannot express both. Handling that kind of mixed feeling was beyond the reach of early systems.
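To make the limitation concrete, here is a minimal sketch of a keyword-based classifier. The word lists and the `keyword_sentiment` function are hypothetical and invented for illustration; real systems of the era used much larger lexicons, but the counting idea is similar.

```python
import re

# Hypothetical mini-lexicons, assumed for illustration only.
POSITIVE = {"love", "great", "good", "amazing"}
NEGATIVE = {"awful", "hate", "bad", "terrible"}


def keyword_sentiment(text: str) -> str:
    """Label text by counting positive and negative keywords."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    # Ties, including mixed sentences, fall through to "neutral".
    return "neutral"


print(keyword_sentiment("I love this new phone!"))
print(keyword_sentiment("I love this phone, but the battery life is awful"))
```

The first tweet is tagged positive. In the second, “love” and “awful” cancel each other out, so the sketch reports neutral, even though the writer is clearly not neutral about either the phone or its battery.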

The Evolution: Recognizing Complex Emotions

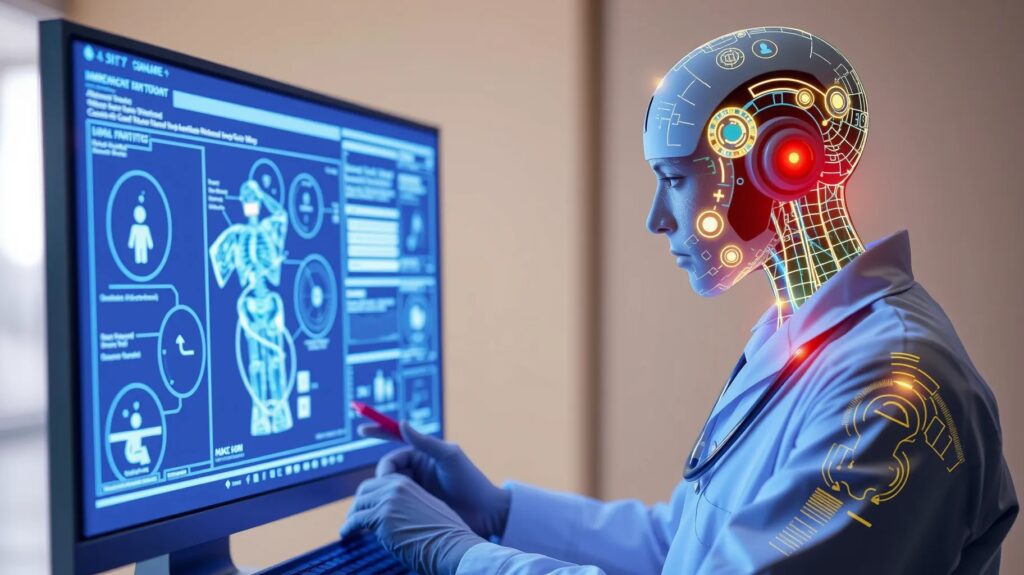

As demand for more nuanced interactions grew, so did the sophistication of Emotion AI. Facial expression analysis, a close relative of facial recognition that classifies expressions rather than identities, made it possible for machines to read smiles, frowns, and everything in between. This was a significant leap forward. Imagine a customer service bot that can see you’re frustrated and adjust its tone accordingly. That would be more than a convenience; it would change what people expect from automated service.

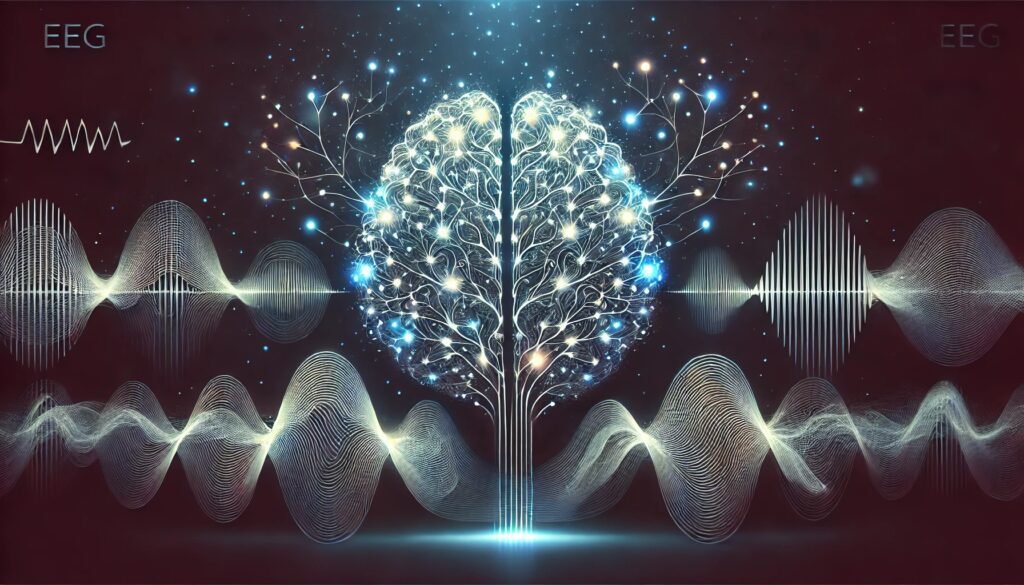

Around the same time, voice analysis began to gain traction. It’s not just what we say, but how we say it. Subtle changes in pitch, tone, and rhythm can reveal a lot about our emotions. With voice analysis, machines could begin to understand the difference between a happy “hello” and a sarcastic one. These advancements brought us closer to creating systems that could genuinely understand and react to our emotional states.

Breakthroughs in Multimodal Emotion Recognition

The real game-changer, however, has been the development of multimodal emotion recognition. This approach combines data from multiple sources (text, voice, facial expressions, and even physiological signals like heart rate) to form a more complete picture of a person’s emotional state. Think of it as a detective piecing together clues from different places to solve a case. In general, the more independent signals the AI has, the better it can estimate how someone feels, because one channel can confirm or contradict another.
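One simple way to combine channels is a weighted average of per-channel scores, often called late fusion. The sketch below assumes each channel has already produced a probability for each emotion. The emotion labels, the scores, the equal weights, and the `fuse` function are all made up for illustration; production systems typically learn the combination rather than hard-coding it.

```python
EMOTIONS = ("happy", "frustrated", "neutral")


def fuse(modality_scores, weights):
    """Weighted average of per-modality emotion probabilities (late fusion)."""
    total_weight = sum(weights[name] for name in modality_scores)
    fused = {emotion: 0.0 for emotion in EMOTIONS}
    for name, scores in modality_scores.items():
        for emotion in EMOTIONS:
            fused[emotion] += weights[name] * scores.get(emotion, 0.0) / total_weight
    return fused


# Hypothetical outputs from three separate models for the same moment.
scores = {
    "text": {"happy": 0.7, "frustrated": 0.1, "neutral": 0.2},
    "voice": {"happy": 0.2, "frustrated": 0.6, "neutral": 0.2},
    "face": {"happy": 0.1, "frustrated": 0.7, "neutral": 0.2},
}
weights = {"text": 1.0, "voice": 1.0, "face": 1.0}  # assumed equal trust

fused = fuse(scores, weights)
print(max(fused, key=fused.get))
```

Here the words alone look happy, but the voice and face disagree, so the fused estimate leans towards frustrated. This is the kind of polite-but-annoyed case that a text-only system would miss.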

One of the key milestones in this field was the introduction of deep learning algorithms. These algorithms, built on neural networks loosely inspired by the brain, can learn patterns from vast amounts of data. They have enabled Emotion AI to move beyond basic detection towards recognizing complex emotions, such as ambivalence or conflicted feelings. For instance, such systems can attempt to distinguish between a nervous smile and a genuinely happy one, a subtlety that earlier rule-based approaches could not capture.

Current Applications and Future Possibilities

Today, Emotion AI is not just a novelty—it’s becoming a crucial component in various industries. Healthcare providers are using it to monitor patients’ mental health by analyzing their tone of voice and facial expressions. In education, AI can gauge a student’s frustration or confusion, allowing teachers to intervene more effectively. Even in marketing, understanding consumer emotions helps brands tailor their messages more precisely, creating ads that resonate on a deeper emotional level.

But what does the future hold? As Emotion AI continues to advance, we could see its integration into everyday devices. Imagine smart home systems that adjust the lighting based on your mood or virtual assistants that offer emotional support during stressful times. The possibilities are endless, but they also raise important ethical questions. How much should machines know about our feelings? And how do we ensure this technology is used responsibly?

Ethical Challenges in Emotion AI: Privacy, Consent, and the Manipulation of Human Feelings

At the heart of Emotion AI lies the collection of vast amounts of personal data. To accurately gauge emotions, AI systems must analyze facial expressions, vocal tones, physiological signals, and even our text-based communications. This data is intimate—often revealing our innermost thoughts and feelings. But who controls this data? And how is it protected?

Privacy is a significant concern when it comes to Emotion AI. Unlike traditional forms of data collection, where users might knowingly share information, the emotions detected by AI can be inferred without explicit consent. For example, an AI-driven customer service bot might analyze your frustration during a call, but did you agree to have your emotions monitored in the first place? This blurs the line between what’s ethical and what’s invasive.

Moreover, there’s the risk of this emotional data being misused. In the wrong hands, such information could be exploited for nefarious purposes, from targeted advertising that plays on your vulnerabilities to more sinister forms of surveillance. The question then arises: how do we ensure that our emotions remain private in a world where AI can so easily read them?

The Importance of Consent: Should Machines Ask Before They Feel?

Linked closely to privacy is the issue of consent. Informed consent is a cornerstone of ethical practice in many fields, from medicine to marketing. But how does this translate to Emotion AI? Should users be explicitly asked for permission before their emotions are analyzed? And if so, how should this consent be obtained?

The problem is that most people are not fully aware of how Emotion AI works, let alone the extent to which their emotions are being monitored. This lack of understanding makes true informed consent challenging. It’s one thing to agree to share your location with an app, but quite another to consent to having your emotional state tracked in real-time.

One possible solution is the development of clear and transparent guidelines that outline how Emotion AI systems collect and use emotional data. These guidelines should be easy to understand and accessible to all users, ensuring that consent is truly informed. However, this raises another ethical dilemma: if users refuse to consent, does that limit their access to services? And if they do consent, are they fully aware of the potential implications?

The Dark Side: Manipulation and Emotional Influence

Perhaps the most controversial aspect of Emotion AI is its potential to manipulate human emotions. When machines can detect how we feel, they can also be programmed to influence those feelings. This opens up a Pandora’s box of ethical issues, particularly when it comes to marketing, politics, and social media.

Imagine a political campaign that uses Emotion AI to tailor messages based on voters’ emotional states. By exploiting fears or anxieties, these messages could sway public opinion in ways that feel eerily manipulative. Similarly, in the world of marketing, ads could be designed to trigger emotional responses, making consumers more likely to buy products they don’t need. This kind of emotional manipulation raises serious ethical questions about autonomy and free will.

While some argue that emotional influence is nothing new (after all, marketers and politicians have been playing on our emotions for decades), the precision and scale at which Emotion AI can operate are unprecedented. The ability to manipulate emotions with such accuracy could lead to a society where our feelings are constantly being nudged in one direction or another, often without our conscious awareness.

Ethical Safeguards: Where Do We Draw the Line?

So, where do we draw the line? Should there be strict regulations governing the use of Emotion AI, particularly when it comes to emotional manipulation? And if so, who should be responsible for enforcing these regulations?

Many experts argue that there needs to be a robust ethical framework in place to guide the development and deployment of Emotion AI. This framework should prioritize transparency, consent, and accountability. For instance, companies that use Emotion AI should be required to disclose how they collect and use emotional data, and users should have the ability to opt out if they choose.

There’s also a growing call for the creation of independent bodies that can oversee the ethical use of Emotion AI. These bodies could be responsible for auditing AI systems to ensure they comply with ethical standards and for investigating any potential misuse. Additionally, they could help develop guidelines for when and how Emotion AI may be used to influence emotions, so that such influence is limited to purposes that benefit the person being influenced.

Conclusion: Navigating the Ethical Minefield

The rise of Emotion AI is a double-edged sword. On one hand, it has the potential to revolutionize how we interact with technology, making our experiences more personalized and human-like. On the other hand, it poses significant ethical challenges that we cannot afford to ignore.

As we move forward, it’s crucial that we strike a balance between innovation and ethical responsibility. We must ensure that the power of Emotion AI is used to enhance human well-being, not to exploit or manipulate it. This means developing clear guidelines, enforcing strict privacy protections, and prioritizing informed consent. Only then can we harness the true potential of Emotion AI—while safeguarding our most personal asset: our emotions.
