Basics of Ensemble Learning

What is Ensemble Learning?

Ensemble learning combines multiple models to improve overall performance. Instead of relying on a single predictor, it aggregates results from various models, leveraging their strengths and reducing weaknesses.

Think of it as consulting multiple experts before making a decision: combining their opinions tends to give a more reliable outcome than trusting any one of them.

Common Ensemble Methods

Ensemble methods are broadly categorized into:

- Bagging: Focuses on reducing variance. For example, Random Forest trains multiple decision trees on bootstrap samples of the data and averages (or votes on) their predictions.

- Boosting: Aims to reduce bias. Algorithms like AdaBoost train weak learners sequentially, correcting previous errors.

- Stacking: Combines predictions from different model types (e.g., SVM, neural networks) using a meta-model for final output.

Each method addresses overfitting differently, making ensembles highly versatile.

Overfitting: The Achilles’ Heel of Machine Learning

Overfitting occurs when a model learns the noise and specific patterns of training data rather than generalizable trends. This leads to poor performance on unseen data.

For example, a single decision tree trained without constraints may fit training data perfectly but fail miserably on test data.

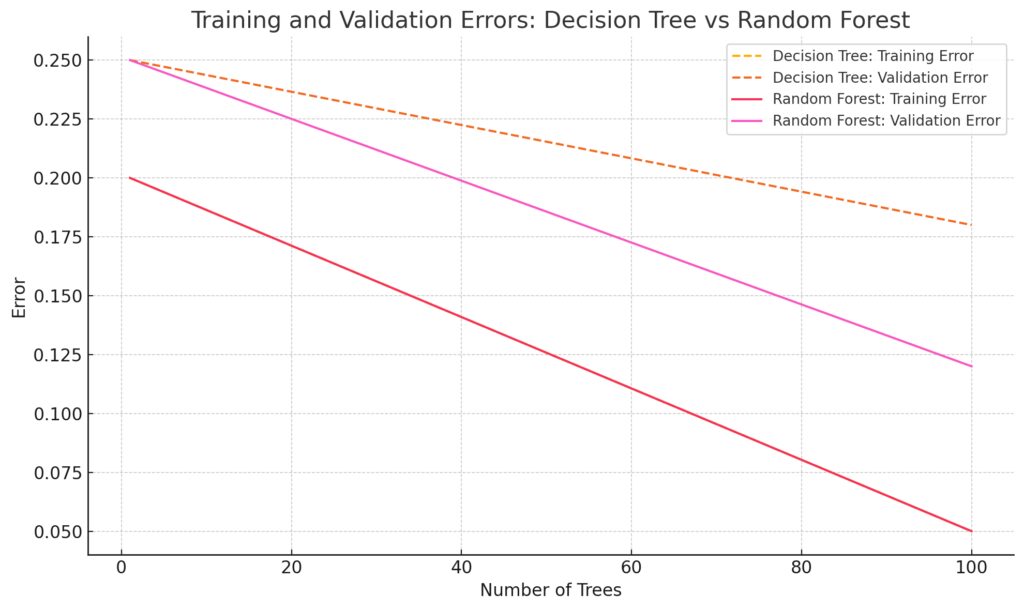

- Decision Tree: Training error decreases steadily as the tree grows more complex. Validation error decreases slightly but eventually plateaus, showing overfitting.

- Random Forest: Training error decreases similarly to the decision tree. Validation error decreases significantly and stabilizes, showing reduced overfitting due to ensemble learning.
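To try this contrast yourself, here is a minimal sketch using scikit-learn. The synthetic dataset from `make_classification`, the 10% label noise (`flip_y=0.1`), and the choice of 200 trees are assumptions made for illustration, not the settings behind the figure.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy data (an assumption for illustration; flip_y adds label noise).
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=5, flip_y=0.1, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# An unconstrained tree keeps splitting until it fits the training set.
tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
# A forest averages many such trees, each grown on a bootstrap sample.
forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

tree_train_acc = tree.score(X_train, y_train)
tree_test_acc = tree.score(X_test, y_test)
forest_train_acc = forest.score(X_train, y_train)
forest_test_acc = forest.score(X_test, y_test)

print(f"tree:   train={tree_train_acc:.3f}  test={tree_test_acc:.3f}")
print(f"forest: train={forest_train_acc:.3f}  test={forest_test_acc:.3f}")
```

On noisy data like this, the unconstrained tree typically fits the training set perfectly while scoring noticeably lower on the test set. The forest usually narrows that gap, though the exact numbers depend on the data and the seed.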

How Ensembles Mitigate Overfitting

Ensembles reduce overfitting by introducing diversity:

- Bagging averages predictions, reducing the risk of any one model’s overfitting impacting the ensemble.

- Boosting minimizes training errors iteratively; regularized variants (shrinkage, early stopping, or explicit penalties as in XGBoost) limit complexity to avoid overfitting.

- Stacking uses a meta-model to combine diverse base models, balancing their strengths.

In short, ensembles smooth out individual errors, leading to more robust generalization.

Real-World Applications of Ensembles

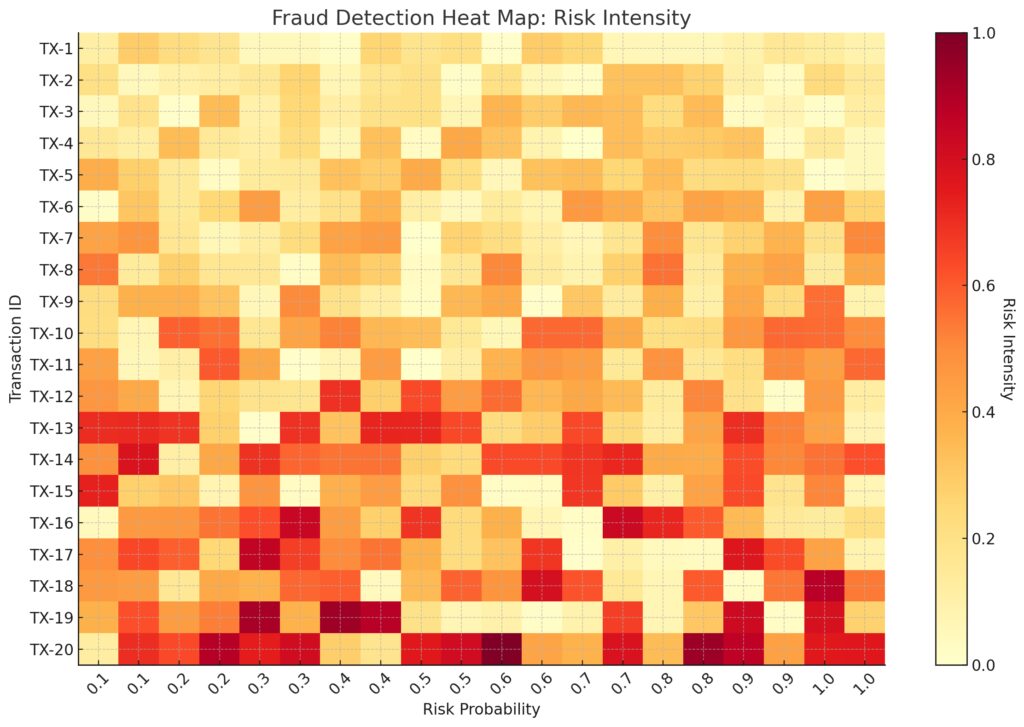

Figure: heatmap of fraud risk probabilities (x-axis, 0.1 to 1.0) by transaction ID (y-axis, e.g., TX-1, TX-2). Color intensity runs from light yellow (low risk) through orange (medium risk) to deep red (high risk).

- Healthcare: Ensembles help predict patient outcomes using multiple algorithms, improving diagnostic accuracy.

- Finance: Fraud detection systems use ensembles to identify patterns in transaction data, reducing false positives.

- Marketing: Predicting customer churn with boosted models ensures better retention strategies.

Stage 2: Advanced Techniques in Ensemble Learning

Bagging in Depth

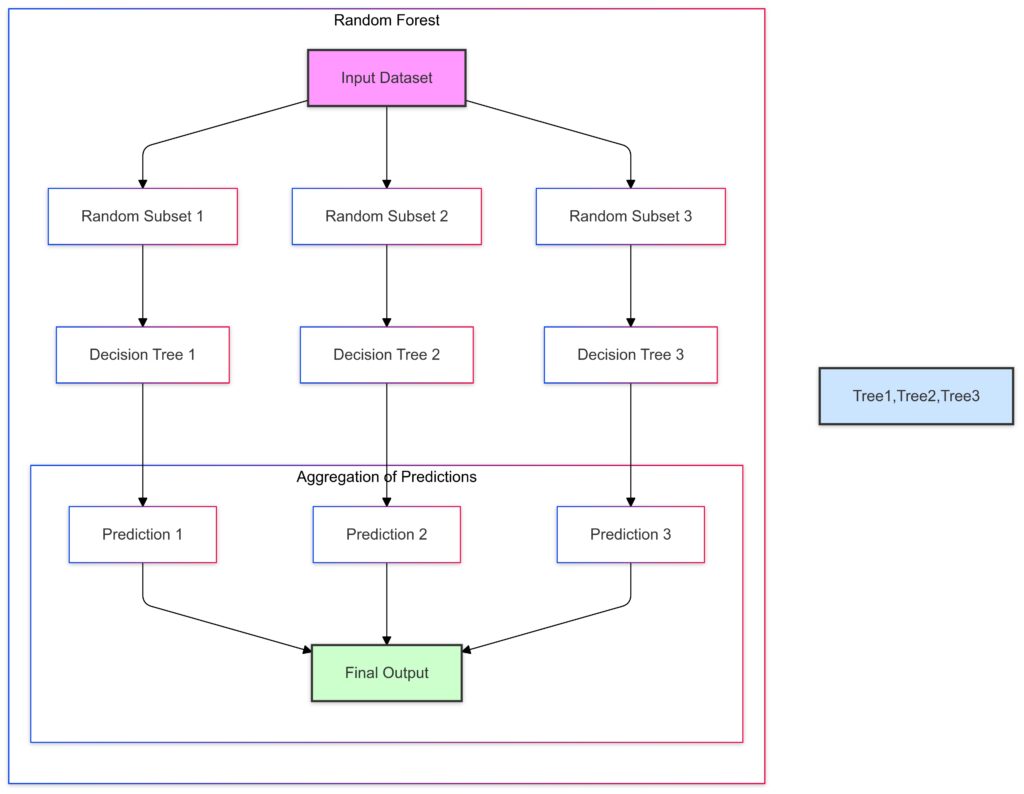

- Decision Trees: Each subset is used to train an individual decision tree.

- Predictions: Each tree produces predictions for the input data.

- Aggregation: The predictions are combined by majority voting (for classification) or averaging (for regression).

Bagging, or Bootstrap Aggregating, reduces variance by training multiple models on random subsets of the data. Each model, often a decision tree, learns different patterns. Their predictions are then averaged (for regression) or voted on (for classification).
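As a rough sketch of that process, the snippet below builds a small bagged ensemble by hand with NumPy and scikit-learn trees. The synthetic data, the 25 models, and the binary 0/1 labels are assumptions chosen to keep the example short; it predicts on the training data only to show how the votes are aggregated.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (an assumption for illustration).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
rng = np.random.default_rng(0)

n_models = 25  # odd, so a binary majority vote can never tie
trees = []
for _ in range(n_models):
    # Bootstrap sample: draw n row indices with replacement.
    idx = rng.integers(0, len(X), size=len(X))
    trees.append(DecisionTreeClassifier(random_state=0).fit(X[idx], y[idx]))

# Each tree votes; the majority label wins (labels are 0/1 here).
votes = np.stack([t.predict(X) for t in trees])  # shape (n_models, n_samples)
bagged_pred = (votes.mean(axis=0) > 0.5).astype(int)

print("votes shape:", votes.shape)
print("first ten bagged predictions:", bagged_pred[:10])
```

For regression, the majority vote would be replaced by the mean of the trees' predictions. In practice, scikit-learn's `BaggingClassifier` and `RandomForestClassifier` package the same idea.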

Random Forest, the most popular bagging algorithm, improves on decision trees by:

- Training multiple trees with different subsets of features and samples.

- Averaging predictions to reduce overfitting, especially in noisy datasets.

Key hyperparameters to optimize:

- Number of trees: More trees improve stability but increase computation time.

- Max features: Limits the number of features each tree uses, boosting diversity.

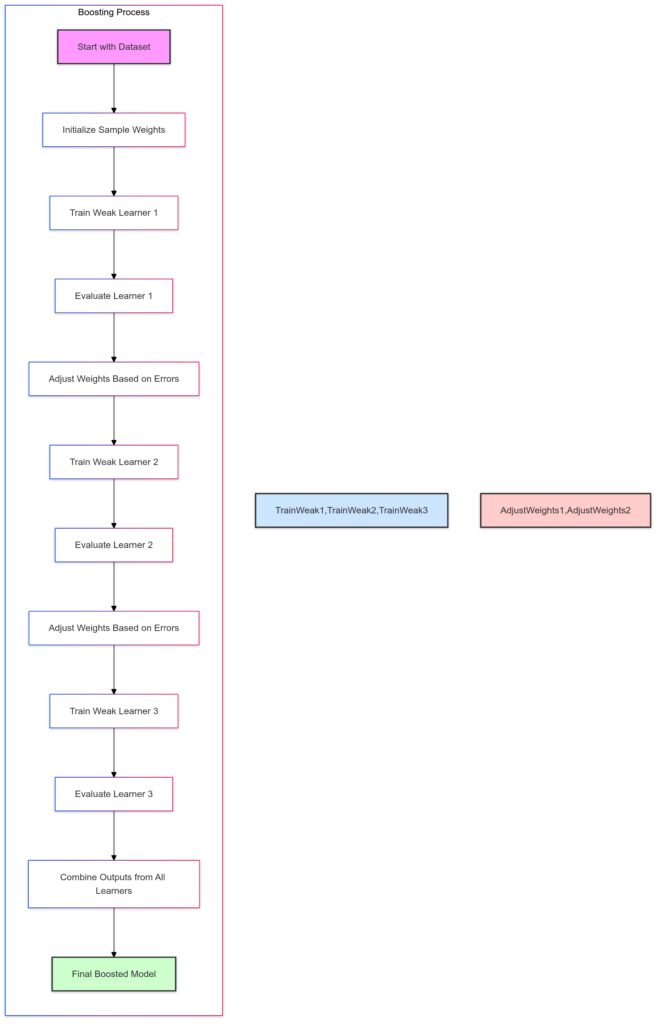

Boosting in Depth

- Train Weak Learner: Train the first weak learner on the weighted dataset.

- Evaluate Learner: Assess the weak learner’s performance.

- Adjust Weights: Increase the weights of misclassified samples to focus on them in the next round.

- Iterative Training: Train subsequent weak learners, iteratively improving the model.

- Combine Outputs: Aggregate predictions from all learners.

- Final Boosted Model: The ensemble model, which leverages the strengths of all weak learners.

Boosting builds models sequentially, where each model focuses on correcting errors made by its predecessor. This process reduces bias and improves accuracy.
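To make the reweighting concrete, here is a small sketch of a single round of the classic (discrete) AdaBoost update in plain Python. The five labels and predictions are made-up values, and real implementations repeat this step for many learners.

```python
import math

# Toy labels and one weak learner's predictions, both in {-1, +1} (made-up values).
y_true = [1, 1, -1, -1, 1]
y_pred = [1, -1, -1, -1, 1]  # the second sample is misclassified

n = len(y_true)
weights = [1.0 / n] * n  # start with uniform sample weights

# Weighted error of the weak learner.
error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
# Learner weight: larger when the error is smaller.
alpha = 0.5 * math.log((1 - error) / error)

# Raise the weight of misclassified samples, lower the rest, then renormalize.
updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
total = sum(updated)
weights = [w / total for w in updated]

print(f"error={error:.2f}, alpha={alpha:.3f}")
print("new weights:", [round(w, 3) for w in weights])
```

After this round the one misclassified sample carries half of the total weight (0.5), while each correctly classified sample drops to 0.125, so the next weak learner is pushed to get that sample right.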

XGBoost, a leading boosting algorithm, uses:

- Gradient boosting to minimize loss functions like mean squared error.

- Regularization (L1 and L2) to avoid overfitting, even on complex datasets.

Other examples:

- AdaBoost assigns weights to misclassified samples, making them the focus of subsequent learners.

- LightGBM is faster and optimized for large datasets by using histogram-based learning.

Boosting shines in tasks like financial forecasting and medical diagnostics, where high precision is critical.

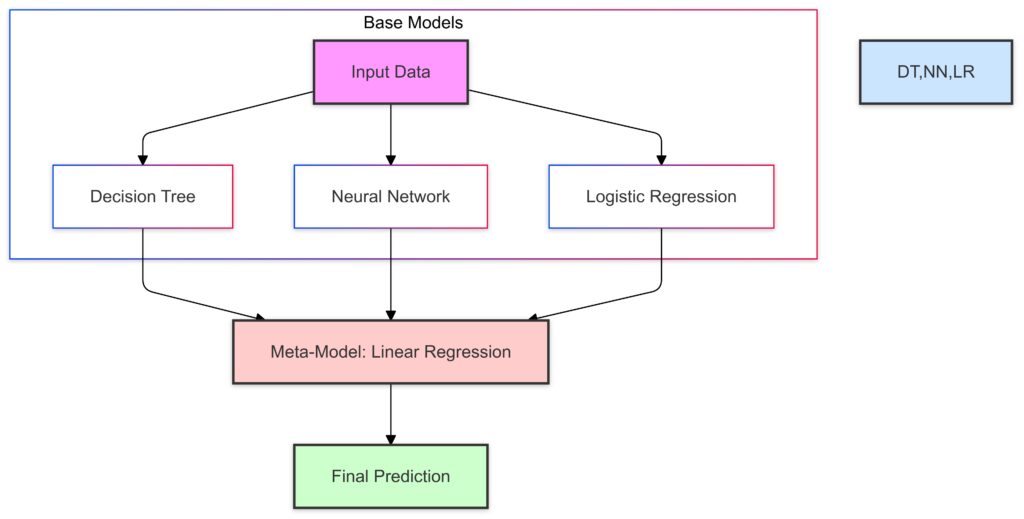

Stacking and Blending

Meta-Model: Outputs from the base models are passed to a meta-model (Linear Regression).

Final Prediction: The meta-model combines the outputs of the base models to produce the final prediction.

Stacking combines predictions from multiple models using a meta-model for the final output. It’s more flexible than bagging or boosting because it allows diverse base models (e.g., SVMs, neural networks, and decision trees).

Steps in stacking:

- Train several base models on the training data.

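- Use their predictions (ideally out-of-fold predictions, so the meta-model never sees outputs for rows a base model was trained on) as inputs for a meta-model.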

- Train the meta-model to combine base predictions optimally.
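The steps above can be sketched with scikit-learn's `StackingClassifier`. The particular base models (a Random Forest and an SVM), the logistic regression meta-model, and the synthetic dataset are assumptions for illustration; any mix of diverse models could take their place.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic data (an assumption for illustration).
X, y = make_classification(n_samples=600, n_features=15, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Step 1: several diverse base models.
base_models = [
    ("forest", RandomForestClassifier(n_estimators=50, random_state=0)),
    ("svm", SVC(random_state=0)),
]

# Steps 2 and 3: with cv=5 the meta-model is trained on out-of-fold base
# predictions, which avoids leaking training labels into its inputs.
stack = StackingClassifier(
    estimators=base_models,
    final_estimator=LogisticRegression(),
    cv=5,
)
stack.fit(X_train, y_train)
stack_acc = stack.score(X_test, y_test)

print(f"stacked test accuracy: {stack_acc:.3f}")
```

Whether the stack beats its best base model depends on how different the base models' errors are, so it is worth comparing against each base model on the same split.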

For example, in Kaggle competitions, stacking often outperforms single models, blending CNNs, tree-based models, and logistic regressions for maximum accuracy.

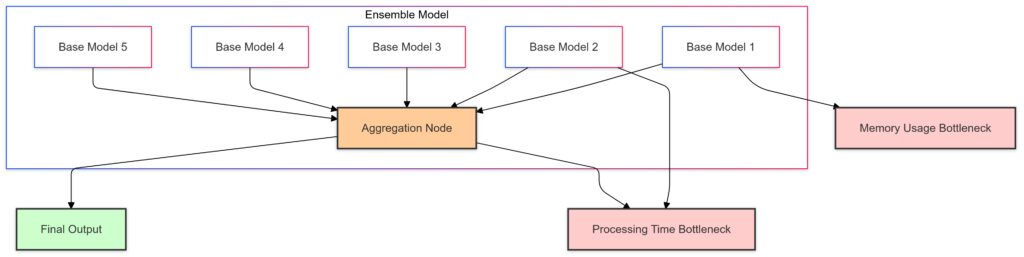

Challenges in Ensemble Learning

- Base Model Nodes: Nodes for individual base models (e.g., Decision Trees, Logistic Regression) represent computations done independently.

- Aggregation Node: A central node aggregates predictions from all base models, for example by weighted voting or averaging.

- Bottlenecks: Memory usage (potential issues in handling large datasets for multiple base models) and processing time (increased computational time as ensemble size grows).

- Final Output: Aggregated predictions produce the final model output.

While ensembles are powerful, they come with challenges:

- Computational cost: Training multiple models is resource-intensive. For instance, a Random Forest with 500 trees requires significant memory and processing power.

- Correlation among base models: Highly similar models provide limited diversity, reducing ensemble benefits.
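The correlation point can be made concrete with a standard result: if each of M models has variance σ² and every pair has correlation ρ, the variance of their average is ρσ² + (1 − ρ)σ²/M. The sketch below simply evaluates that formula; the function name and the chosen values are illustrative assumptions.

```python
def ensemble_variance(sigma2: float, rho: float, n_models: int) -> float:
    """Variance of the mean of n_models predictors, each with variance sigma2
    and pairwise correlation rho: rho*sigma2 + (1 - rho)*sigma2/n_models."""
    return rho * sigma2 + (1.0 - rho) * sigma2 / n_models


# Same ensemble size (100 models), increasing correlation between models.
for rho in (0.0, 0.5, 0.9):
    print(f"rho={rho}: variance of the average = {ensemble_variance(1.0, rho, 100):.3f}")
```

With 100 uncorrelated models the variance falls to 0.010, but at a correlation of 0.9 it stays at 0.901 no matter how many models are added. Under these assumptions, adding more similar models buys very little.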

Tools and Libraries for Ensembles

- Scikit-learn: Offers implementations of Random Forest, AdaBoost, Gradient Boosting, and Voting classifiers.

- XGBoost and LightGBM: Industry favorites for gradient boosting, known for speed and efficiency.

- H2O.ai: A platform for scalable, distributed ensemble models with AutoML capabilities.

Insights, Practical Tips, and Future Trends

Tips for Building Effective Ensembles

- Ensure Model Diversity

The success of an ensemble relies on combining diverse models. Use models with different architectures, feature subsets, or training techniques. For example, blending a Random Forest with a Neural Network can leverage their unique strengths.

- Avoid Over-Complexity

While ensembles aim to improve accuracy, overly complex models can lead to diminishing returns. Optimize the number of base learners and their configurations for balanced performance and efficiency.

- Balance Between Bagging and Boosting

Choose bagging for high-variance data (e.g., noisy datasets) and boosting for high-bias scenarios (e.g., underperforming base models).

- Use Cross-Validation for Robustness

Validate ensemble performance with k-fold cross-validation to ensure generalization and minimize overfitting risks.

Use Cases That Shine with Ensembles

- Climate Forecasting: Combining multiple weather models to predict rainfall or temperature trends with higher precision.

- Image Recognition: Blending CNNs trained on different data subsets to improve object classification in diverse environments.

- Natural Language Processing: Stacking transformers and traditional models for tasks like sentiment analysis or text summarization.

Future Trends in Ensemble Learning

- Automated Machine Learning (AutoML)

Tools like Google’s AutoML and H2O AutoML are integrating ensembles by default, offering pre-built solutions that optimize model diversity and performance automatically.

- Hybrid Models with Deep Learning

Ensembles combining traditional machine learning and deep learning frameworks are emerging. For instance, using tree-based models for structured data and CNNs for images within the same pipeline.

- Dynamic Ensembles

Ensembles that adapt to incoming data streams or changing distributions are gaining popularity in real-time systems like fraud detection and stock trading.

- Eco-Friendly Ensembles

Efforts to reduce computational costs are driving innovations in lightweight and resource-efficient ensemble methods, making them more accessible for edge devices and smaller organizations.

When Not to Use Ensembles

- Small Datasets: Ensembles require sufficient data to train multiple models effectively. On limited datasets, a single well-optimized model often performs better.

- Resource Constraints: If computational resources are limited, simpler models like logistic regression or a single decision tree may suffice.

Ensemble learning remains a cornerstone of modern machine learning, offering flexibility, robustness, and enhanced accuracy. By combining models thoughtfully and leveraging cutting-edge tools, practitioners can unlock its full potential.

FAQs

What is the main advantage of ensemble learning?

The main advantage of ensemble learning is its ability to improve accuracy and robustness by combining predictions from multiple models. Each model compensates for the weaknesses of others, resulting in better generalization.

For example, in weather forecasting, an ensemble of different models might combine predictions from satellite imagery, historical weather patterns, and real-time sensor data to provide a more accurate prediction than any single model.

How does ensemble learning reduce overfitting?

Ensemble learning reduces overfitting by aggregating results from diverse models. This approach ensures that individual errors or biases don’t dominate the final prediction. Techniques like bagging and boosting play key roles:

- Bagging (e.g., Random Forest) reduces variance by training models on random subsets of data and averaging their outputs.

- Boosting (e.g., AdaBoost) sequentially corrects errors; regularization such as a small learning rate or early stopping helps keep it from overfitting.

For instance, in fraud detection, combining models trained on different features ensures the ensemble doesn’t overfit specific patterns unique to training data.

When should you use boosting over bagging?

Use boosting when the base model has high bias (underfitting), as boosting focuses on improving weak learners iteratively. Bagging, on the other hand, is more effective for high-variance models prone to overfitting.

For example:

- Boosting works well in tasks like predicting customer churn, where initial models might struggle with accuracy.

- Bagging is better suited for noisy data, such as sensor readings in IoT applications.

How does stacking differ from bagging and boosting?

Stacking combines predictions from multiple diverse models (e.g., Random Forest, SVM, Neural Networks) using a meta-model, while bagging and boosting primarily refine or aggregate similar models.

For example, in image classification, a stacking ensemble might combine the strengths of a CNN (good at detecting shapes) and a tree-based model (effective with structured data). The meta-model optimizes how these predictions are weighted.

What are the computational challenges of ensembles?

Ensembles often require significant computational resources because multiple models must be trained and combined. This can lead to longer training times and increased memory usage.

For instance, training a Random Forest with 500 trees takes far more time and memory than a single decision tree. Similarly, boosting algorithms like XGBoost, though efficient, may struggle with massive datasets on limited hardware.

Can ensembles work with small datasets?

Ensembles are typically most effective with large datasets, as they rely on diverse training data to produce complementary models. However, techniques like cross-validation can help make ensembles viable for smaller datasets.

For example, in a medical study with limited patient data, cross-validation can ensure that all data is used efficiently during training, making ensemble methods more applicable.

What industries benefit most from ensemble learning?

Ensemble learning is valuable across industries:

- Healthcare: Predicting disease risks using data from genetic, clinical, and lifestyle sources.

- Finance: Detecting fraud and forecasting stock prices with combined signals.

- Retail: Personalizing recommendations by blending models trained on user preferences and browsing history.

For example, an e-commerce site might use a stacked ensemble to combine collaborative filtering and content-based recommendation models, improving user experience.

How do you ensure model diversity in ensembles?

Model diversity can be achieved by:

- Using different algorithms (e.g., Random Forest, Neural Networks).

- Training models on different subsets of data or features.

- Varying hyperparameters to create unique decision boundaries.

For example, in a housing price prediction task, you could train one model on location features, another on historical price trends, and a third on economic indicators. Their combined predictions would yield a robust final result.

Are ensembles always better than single models?

Not always. Ensembles shine when individual models have complementary strengths or when the problem requires high accuracy. However, for simple tasks or small datasets, a single well-tuned model may perform just as well with less computational cost.

For example, a binary classification problem whose classes are cleanly separated by a linear boundary doesn’t require an ensemble; a simple logistic regression would suffice.

Can ensembles handle imbalanced datasets effectively?

Yes, ensembles can be effective for imbalanced datasets. Techniques like boosting, particularly AdaBoost or XGBoost, focus on misclassified samples, which are often minority class examples.

For instance, in a medical dataset with rare disease cases, boosting ensures that the model gives more attention to correctly predicting these minority cases. Combining ensemble methods with sampling techniques (e.g., SMOTE) further enhances performance.

How do voting ensembles work?

Voting ensembles combine predictions from multiple models to make a final decision. Two main types are:

- Hard voting: Chooses the majority class label from all models.

- Soft voting: Averages the predicted probabilities, selecting the class with the highest average.

For example, in spam detection, if three models classify an email as spam with probabilities of 0.8, 0.9, and 0.7, soft voting would result in a confident classification as spam.
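Here is a small sketch of both voting rules applied to those three probabilities, in plain Python. The 0.5 decision threshold and the "ham" label for non-spam are assumptions added for the example.

```python
from collections import Counter

# Spam probabilities from three models (the values used in the example above).
spam_probs = [0.8, 0.9, 0.7]

# Soft voting: average the probabilities, then apply the threshold.
avg_prob = sum(spam_probs) / len(spam_probs)
soft_label = "spam" if avg_prob >= 0.5 else "ham"

# Hard voting: each model casts one vote for its own predicted label.
hard_votes = ["spam" if p >= 0.5 else "ham" for p in spam_probs]
hard_label = Counter(hard_votes).most_common(1)[0][0]

print(f"soft voting: average probability {avg_prob:.2f} -> {soft_label}")
print(f"hard voting: votes {hard_votes} -> {hard_label}")
```

The average probability is 0.80, so both rules agree on "spam" here. The two can disagree when one model is very confident and the others are only marginally on the other side of the threshold. scikit-learn exposes the same choice through the `voting="hard"` or `voting="soft"` argument of `VotingClassifier`.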

What is the trade-off between ensemble size and performance?

While increasing the number of models in an ensemble can improve accuracy, the gains diminish beyond a certain point. Large ensembles may also suffer from higher computational costs and reduced interpretability.

For example, a Random Forest with 100 trees might perform almost as well as one with 500 trees, but the latter would take significantly longer to train. Finding the optimal size through validation is key.
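One way to look for that point of diminishing returns is to sweep the ensemble size and compare cross-validated scores. The sketch below assumes a synthetic dataset and a short list of sizes (kept small so it runs quickly); the sizes worth testing on a real problem will differ.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic data (an assumption for illustration).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

scores = {}
for n_trees in (10, 50, 100, 200):
    model = RandomForestClassifier(n_estimators=n_trees, random_state=0)
    # Mean accuracy over 5 folds for this ensemble size.
    scores[n_trees] = cross_val_score(model, X, y, cv=5).mean()

for n_trees, acc in scores.items():
    print(f"{n_trees:>4} trees: mean CV accuracy = {acc:.3f}")
```

If the score stops improving between two sizes, the smaller ensemble is usually the better trade-off, since training and prediction cost keep growing with the number of trees.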

Can ensemble methods be used for unsupervised learning?

While ensembles are more common in supervised learning, they can be adapted for unsupervised tasks like clustering. Techniques like consensus clustering combine results from multiple clustering algorithms to improve robustness.

For example, in customer segmentation, combining k-means and hierarchical clustering results might yield more stable and meaningful groupings.

How does feature importance work in ensembles?

Ensemble methods like Random Forest and Gradient Boosting provide feature importance scores, typically by measuring how much each feature’s splits reduce impurity (or loss) across all trees in the model.

For instance, in predicting house prices, a Random Forest might show that “location” and “square footage” are more important than “year built,” guiding better feature selection and domain insights.
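As a hedged illustration, the snippet below reads scikit-learn's impurity-based `feature_importances_` from a Random Forest. The data is synthetic and the feature names are hypothetical labels attached for readability; with `shuffle=False`, `make_regression` places the two informative columns first, so the third column is pure noise.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Hypothetical feature names; the data is synthetic, not real housing data.
feature_names = ["location_score", "square_footage", "year_built"]
X, y = make_regression(
    n_samples=300, n_features=3, n_informative=2, shuffle=False, random_state=0
)

forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances: one non-negative score per feature, summing to 1.
importances = dict(zip(feature_names, forest.feature_importances_))
for name, score in sorted(importances.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

Impurity-based scores can favor features with many distinct values, so it is common to cross-check them with `sklearn.inspection.permutation_importance` on held-out data.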

Are ensembles prone to overfitting?

While ensembles reduce overfitting compared to single models, they’re not immune. Boosting methods, in particular, can overfit if too many iterations or overly complex base models are used.

For example, an overly deep decision tree in Gradient Boosting might memorize the training data, reducing the generalization of the ensemble. Regularization techniques like shrinkage or early stopping help mitigate this risk.
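A minimal sketch of those two safeguards with scikit-learn's `GradientBoostingClassifier` follows. The synthetic noisy dataset and the specific values (learning rate 0.1, patience of 10 rounds, 20% validation split) are assumptions for illustration rather than recommended settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier

# Synthetic data with 10% label noise (an assumption for illustration).
X, y = make_classification(n_samples=1000, n_features=20, flip_y=0.1, random_state=0)

model = GradientBoostingClassifier(
    n_estimators=500,         # upper bound on boosting rounds
    learning_rate=0.1,        # shrinkage: scales each tree's contribution
    max_depth=3,              # keep the base trees shallow
    validation_fraction=0.2,  # held out internally to monitor the loss
    n_iter_no_change=10,      # stop after 10 rounds without improvement
    random_state=0,
)
model.fit(X, y)

# Number of boosting rounds actually kept after early stopping.
rounds_used = model.n_estimators_
print(f"boosting rounds used: {rounds_used} of 500")
```

When early stopping triggers, `n_estimators_` ends up below the 500-round cap, which is the model declining to keep fitting noise.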

How does bagging ensure diversity in base models?

Bagging achieves diversity by training each base model on a random subset of data. These subsets are drawn with replacement (bootstrap sampling), so each model sees slightly different data.

For instance, in a Random Forest, one tree might learn patterns in urban housing prices, while another focuses on rural areas, making the ensemble more robust overall.

What are weighted ensembles?

Weighted ensembles assign different importance to individual models based on their performance: a highly accurate model has more influence on the final prediction than weaker models.

For example, in a loan approval system, a neural network might handle complex patterns better and receive higher weight, while simpler models like logistic regression contribute less to the final decision.
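A weighted average can be sketched in a few lines of plain Python. The model names, validation accuracies, and predicted probabilities below are hypothetical, and weighting by normalized accuracy is only one simple choice among many.

```python
# Hypothetical validation accuracies and predicted approval probabilities.
val_accuracy = {"neural_net": 0.90, "logistic_regression": 0.80, "decision_tree": 0.70}
approval_prob = {"neural_net": 0.65, "logistic_regression": 0.40, "decision_tree": 0.55}

# Normalize accuracies into weights that sum to 1.
total = sum(val_accuracy.values())
weights = {name: acc / total for name, acc in val_accuracy.items()}

# Weighted average of the models' probabilities.
weighted_prob = sum(weights[name] * approval_prob[name] for name in weights)

print({name: round(w, 3) for name, w in weights.items()})
print(f"weighted approval probability: {weighted_prob:.4f}")
```

The result, 0.5375, sits slightly above the unweighted mean of about 0.533 because the neural network, which gave the highest probability, also carries the largest weight.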

What are out-of-bag errors, and how do they relate to bagging?

Out-of-bag (OOB) errors are a measure of model performance calculated on data points not included in a specific model’s training subset during bagging. This provides a built-in validation metric without needing a separate test set.

For instance, in a Random Forest, about 37% of data points (roughly one-third) are left out of each tree’s bootstrap sample and can be used to evaluate its accuracy.
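The size of that left-out share follows from sampling with replacement: the chance that a given point is never drawn in n draws is (1 − 1/n)ⁿ, which approaches 1/e ≈ 0.368 as n grows. The short simulation below checks this in plain Python; the sample size of 1000 and the seed are arbitrary assumptions.

```python
import random

n = 1000
random.seed(0)

# One bootstrap sample: n draws with replacement from indices 0..n-1.
in_bag = {random.randrange(n) for _ in range(n)}
oob_fraction = 1 - len(in_bag) / n

# Probability that a given point is never drawn: (1 - 1/n)^n, which tends to 1/e.
expected = (1 - 1 / n) ** n

print(f"simulated OOB fraction: {oob_fraction:.3f}")
print(f"theoretical value:      {expected:.3f}")
```

In scikit-learn, passing `oob_score=True` to `RandomForestClassifier` computes an accuracy estimate from these left-out points and stores it in the `oob_score_` attribute after fitting.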

How do you debug an underperforming ensemble?

To debug an underperforming ensemble:

- Analyze base model performance to ensure each is trained effectively.

- Check for overfitting or underfitting by comparing training and validation errors.

- Optimize hyperparameters like the number of trees or learning rate.

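For example, if a stacking ensemble underperforms, the meta-model might need retraining, or the base models may lack sufficient diversity. Explanation tools like SHAP (or Grad-CAM for CNN base models) can show what each model relies on, which helps reveal when base models are making the same mistakes.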

Resources

Foundational Articles and Tutorials

- “An Introduction to Ensemble Learning” by Towards Data Science

- A beginner-friendly article explaining key ensemble techniques like bagging, boosting, and stacking, with real-world applications.

- “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron

- A must-read book with practical guides to implementing ensemble methods like Random Forest, Gradient Boosting, and Voting Classifiers.

- “Understanding Ensemble Learning Algorithms” on Analytics Vidhya

- Explores the differences between bagging, boosting, and stacking, with Python code snippets.

Hands-On Tools and Frameworks

- Scikit-learn

- Provides easy-to-use implementations for bagging (e.g., Random Forest), boosting (e.g., AdaBoost), and voting ensembles.

- XGBoost

- A high-performance gradient boosting library widely used in competitions and production environments.

- LightGBM

- Known for its speed and efficiency, especially on large datasets, LightGBM is excellent for boosting tasks.

- H2O.ai

- Offers advanced tools for automated machine learning (AutoML) and ensemble modeling, including stacking and bagging options.

Video Tutorials and Online Courses

- Coursera: Machine Learning Specialization by Andrew Ng

- Covers bagging and boosting in detail, with practical examples. Ideal for those new to ensemble learning.

- Kaggle Learn: Ensemble Learning

- Short, practical lessons on building ensemble models with Python. Includes exercises on boosting and Random Forests.

- YouTube: StatQuest with Josh Starmer

- Simplifies complex topics like Random Forests and Gradient Boosting using animations and easy-to-understand explanations.

Research Papers and Advanced Concepts

- “Random Forests” by Breiman (2001)

- The original paper introducing Random Forests, explaining how bagging improves generalization and reduces variance.

- “A Tutorial on Boosting” by Yoav Freund and Robert Schapire

- A foundational work detailing boosting concepts and the development of AdaBoost.

- “XGBoost: A Scalable Tree Boosting System” by Chen and Guestrin

- The seminal paper introducing XGBoost, focusing on speed and efficiency in gradient boosting.

Online Communities and Forums

- Kaggle

- Offers datasets and kernels (notebooks) for practicing ensemble techniques. The forums are excellent for discussions on boosting and stacking strategies.

- Stack Overflow

- A go-to resource for troubleshooting implementation issues in ensemble methods.

- Reddit: r/MachineLearning

- A community for discussions about ensemble learning advancements, applications, and tutorials.

Practical Guides and Code Repositories

- GitHub: Ensemble Learning Repositories

- Search for repositories with pre-built examples of Random Forests, XGBoost, and stacking models.

- Example: Scikit-learn Ensemble Examples

- KDNuggets Ensemble Learning Guide

- Combines theoretical insights and Python code snippets to help beginners and professionals implement ensembles.
