Businesses need to process vast amounts of raw data to uncover actionable insights. Two crucial concepts—ETL pipelines and data mining—play a significant role in achieving this.

Let’s break down these topics and understand how they work together to fuel decision-making.

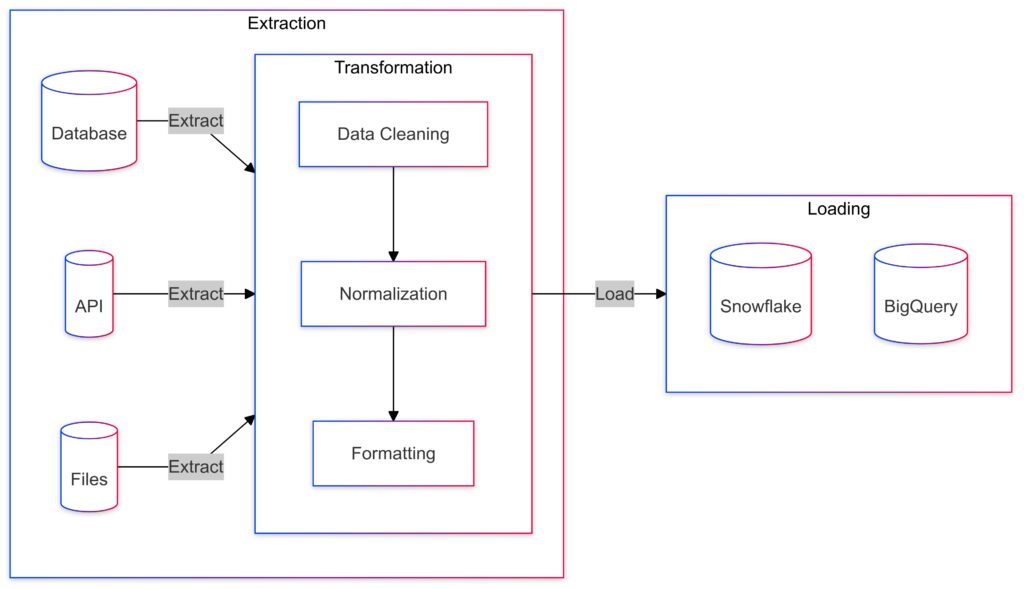

What Are ETL Pipelines? The Backbone of Data Processing

Extracting Data from Various Sources

ETL stands for Extract, Transform, Load. It starts with extracting data from diverse sources like databases, APIs, or flat files. These sources vary in format, from structured SQL tables and CSV files to semi-structured JSON logs and unstructured text.

- Extraction tools like Apache NiFi or Talend simplify this process.

- APIs are key for accessing real-time or external data.

The goal? To consolidate scattered information into a manageable format.
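
As a rough illustration of the extraction step, the sketch below reads a flat file and a JSON log into one list of records. It uses only Python's standard library; the sample data, the field names, and the `extract_all` helper are hypothetical stand-ins, not part of any specific tool.

```python
import csv
import io
import json

# Hypothetical sample inputs standing in for a flat file and an API/log feed.
CSV_TEXT = """order_id,customer,amount
1001,alice,25.50
1002,bob,12.00
"""

JSON_LINES = """{"order_id": 1003, "customer": "carol", "amount": 40.0}
{"order_id": 1004, "customer": "dave", "amount": 7.25}
"""


def extract_csv(text):
    """Read CSV text into a list of dicts (all values arrive as strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json_lines(text):
    """Read newline-delimited JSON into a list of dicts."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def extract_all():
    """Consolidate both sources into one list, tagging each record's origin."""
    records = []
    for row in extract_csv(CSV_TEXT):
        records.append({**row, "source": "csv"})
    for row in extract_json_lines(JSON_LINES):
        records.append({**row, "source": "json"})
    return records


raw_records = extract_all()
print(len(raw_records), "records extracted")
```

Note that the CSV values arrive as strings while the JSON values keep their types. That mismatch is exactly what the transform step has to resolve.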

Transforming Data for Consistency

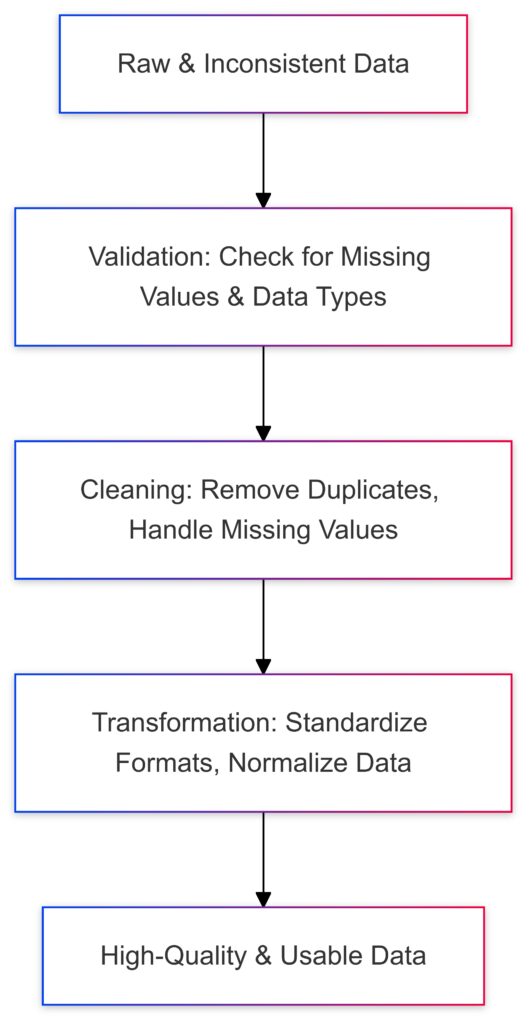

Once extracted, raw data is transformed. This involves cleaning, normalizing, and structuring the data to ensure compatibility. For example:

- Removing duplicates or incomplete records.

- Converting data types (e.g., turning text dates into timestamps).

Tools like Python’s Pandas library or specialized ETL software handle these tasks efficiently.
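
The two cleanup steps listed above can be sketched in a few lines. This version sticks to the standard library rather than Pandas so it runs without extra installs; the rows, the field names, and the `%Y-%m-%d` date format are assumptions made for the example.

```python
from datetime import datetime

# Hypothetical raw rows; field names are illustrative only.
raw_rows = [
    {"order_id": "1001", "order_date": "2024-01-15", "amount": "25.50"},
    {"order_id": "1001", "order_date": "2024-01-15", "amount": "25.50"},  # duplicate
    {"order_id": "1002", "order_date": "", "amount": "12.00"},            # incomplete
    {"order_id": "1003", "order_date": "2024-02-01", "amount": "40.00"},
]


def transform(rows):
    """Drop incomplete and duplicate rows, then convert types."""
    seen = set()
    cleaned = []
    for row in rows:
        # Skip records with any missing value.
        if not all(row.values()):
            continue
        # Skip records whose order_id was already processed.
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        cleaned.append({
            "order_id": int(row["order_id"]),
            "order_date": datetime.strptime(row["order_date"], "%Y-%m-%d"),
            "amount": float(row["amount"]),
        })
    return cleaned


clean_rows = transform(raw_rows)
print(clean_rows)
```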

Loading into Destination Systems

Finally, the transformed data is loaded into a destination system, like a data warehouse or a cloud-based platform (e.g., Snowflake or Google BigQuery). This ensures that it’s ready for analysis or further processing.
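
To make the load step concrete, here is a minimal sketch that writes transformed rows into a table. An in-memory SQLite database stands in for the warehouse; the `orders` table and its columns are hypothetical, and a real Snowflake or BigQuery load would go through that platform's own client or bulk loader.

```python
import sqlite3

# Hypothetical transformed rows ready for loading.
rows = [
    (1001, "2024-01-15", 25.50),
    (1003, "2024-02-01", 40.00),
]

# In-memory SQLite stands in for a warehouse such as Snowflake or BigQuery.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)"
)

# INSERT OR REPLACE keeps the load idempotent if the job is re-run.
conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
conn.commit()

total = conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
print(total)
```

Keying the table on `order_id` means a re-run of the same batch overwrites rows instead of duplicating them.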

Data Mining: Extracting Gold from Processed Data

Identifying Patterns and Trends

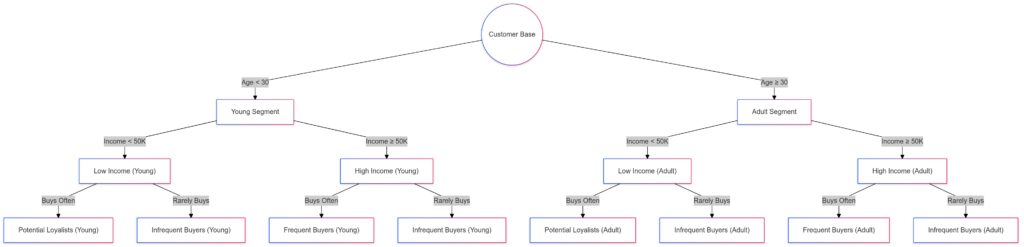

Data mining focuses on finding patterns, correlations, and trends in cleaned data. Think of it as digging through a treasure trove of information to discover gems like customer purchase behavior or operational inefficiencies.

- Algorithms like decision trees and clustering techniques power these discoveries.

- Tools like RapidMiner and KNIME streamline the mining process.

Predictive and Prescriptive Insights

The insights gained can be predictive (forecasting future outcomes) or prescriptive (recommending actions). For example:

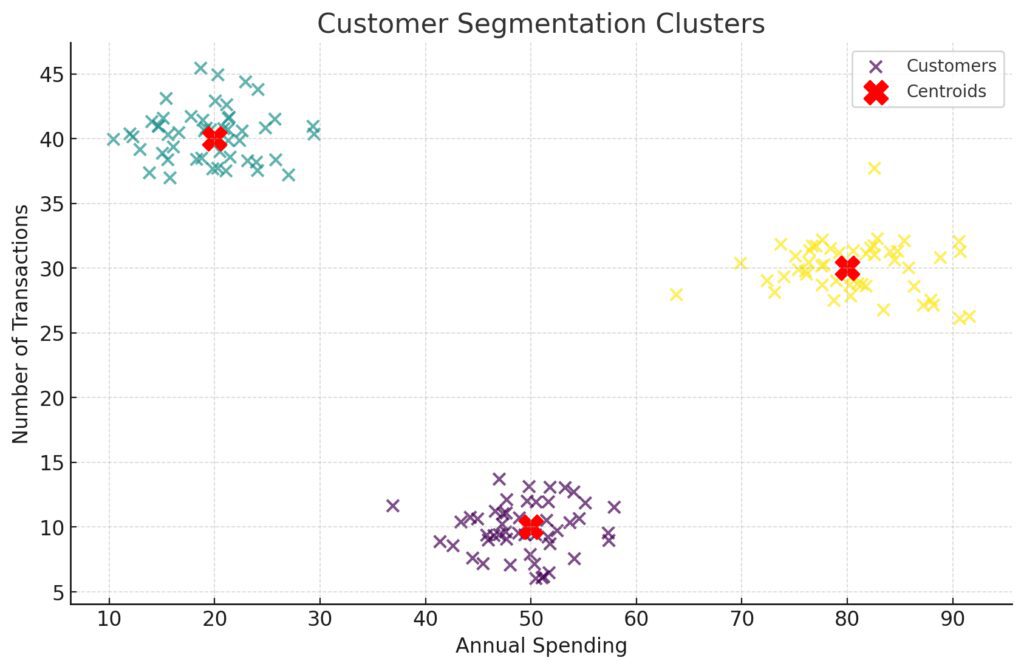

- Predictive: Analyzing customer churn likelihood based on past interactions.

- Prescriptive: Suggesting marketing strategies based on customer clusters.

Real-World Applications of Data Mining

From fraud detection in banking to personalization in e-commerce, data mining impacts industries globally. Its role is expanding as AI and machine learning integrate with mining techniques.

Why Combine ETL Pipelines and Data Mining?

Preparing the Stage for Discovery

ETL pipelines are essential for providing clean and structured data—an absolute prerequisite for effective data mining. Without robust ETL processes, raw data could lead to misleading results.

Automating Data Workflows

Integrating ETL pipelines with data mining creates an automated workflow. For instance:

- ETL pipelines handle ongoing data ingestion and transformation.

- Automated mining tools continuously update insights in real time.

By combining the two, businesses gain efficiency and accuracy.

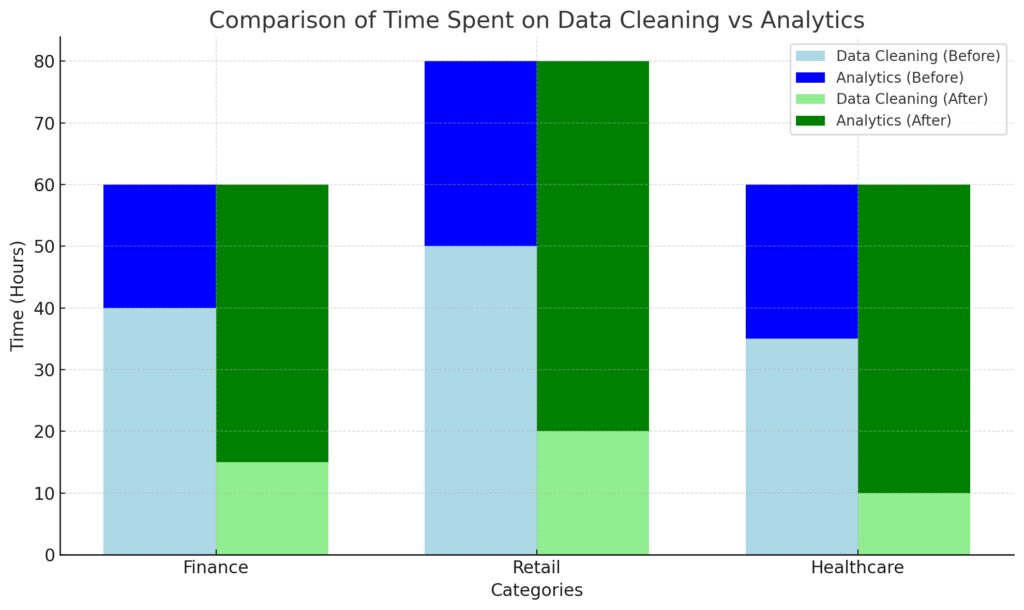

How ETL Pipelines Power Business Intelligence

Figure: Time in hours (y-axis), comparing “Before” and “After” states for data cleaning and analytics.

Centralizing Data for Analytics

ETL pipelines play a pivotal role in business intelligence (BI). By centralizing data from disparate sources into one repository, they simplify access for analytics teams. A unified database allows faster insights and smoother collaboration.

- BI tools like Tableau or Power BI thrive on well-organized data.

- ETL ensures metrics like revenue and customer engagement align across departments.

This step minimizes inconsistencies and maximizes trust in the data.

Supporting Real-Time Decision Making

Modern ETL pipelines often integrate real-time data processing. This capability enables businesses to respond quickly to changes, such as inventory shifts or market trends.

- Streaming platforms like Apache Kafka move and process data as it arrives.

- Businesses gain an edge with faster updates to dashboards or alert systems.
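
The idea of processing events as they arrive, rather than in nightly batches, can be sketched with a plain Python generator. This is only a toy model of the pattern: the event shape, the `process_stream` helper, and the stock threshold are hypothetical, and a production system would read from a broker such as Kafka instead.

```python
from collections import defaultdict


# Hypothetical event stream; in practice events would arrive from a broker.
def event_stream():
    yield {"sku": "A", "qty_change": -2}
    yield {"sku": "B", "qty_change": -1}
    yield {"sku": "A", "qty_change": -4}
    yield {"sku": "A", "qty_change": 10}


def process_stream(events, start_stock, low_threshold):
    """Update stock per event and collect an alert whenever stock runs low."""
    stock = defaultdict(int, start_stock)
    alerts = []
    for event in events:
        stock[event["sku"]] += event["qty_change"]
        if stock[event["sku"]] < low_threshold:
            alerts.append((event["sku"], stock[event["sku"]]))
    return dict(stock), alerts


final_stock, alerts = process_stream(event_stream(), {"A": 8, "B": 5}, low_threshold=3)
print(final_stock, alerts)
```

The alert fires at the moment stock for item A drops to 2, before the later restock arrives, which is the kind of timely signal a batch job would miss.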

Enhancing Scalability for Big Data

With the rise of big data, scalability is critical. Managed services like AWS Glue scale compute with data volume, while orchestrators like Apache Airflow schedule and coordinate a growing number of jobs. Both make it possible to add new data sources without overhauling existing systems.

Techniques and Algorithms Driving Data Mining

Supervised vs. Unsupervised Learning

Data mining algorithms fall into two broad categories, each with distinct strengths:

- Supervised learning: Uses labeled data for training. Popular for predictions like sales forecasting.

- Unsupervised learning: Identifies hidden patterns in unlabeled data. Great for customer segmentation or anomaly detection.
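
As a small unsupervised example, the sketch below segments customers by spend using a stripped-down, one-dimensional k-means. The `kmeans_1d` function, the spend figures, and the starting centroids are all illustrative assumptions; a library implementation such as scikit-learn's would be the usual choice for real data.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Minimal 1-D k-means: assign each value to nearest centroid, then re-average."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical monthly spend per customer (unlabeled data).
spend = [12, 15, 14, 90, 95, 88, 13, 92]
centers, groups = kmeans_1d(spend, centroids=[0, 100])
print(centers, groups)
```

No labels are supplied, yet the algorithm separates low spenders from high spenders on its own.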

Key Algorithms to Know

- Clustering (e.g., K-Means): Groups similar data points, revealing trends.

- Association Rules (e.g., Apriori Algorithm): Identifies relationships, like products often bought together.

- Decision Trees: Split data on feature values into a tree of if/then rules, which makes each prediction easy to trace.
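
To show what “products often bought together” means in numbers, here is a simplified sketch that computes support and confidence for item pairs. It is a pair-counting shortcut, not the full Apriori algorithm (which also prunes and grows larger itemsets); the baskets and the `pair_rules` helper are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
    {"bread", "butter", "jam"},
]


def pair_rules(transactions, min_support=0.4):
    """Return {(a, b): (support, confidence)} for rules a -> b over item pairs."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in t)
    pair_counts = Counter(
        pair for t in transactions for pair in combinations(sorted(t), 2)
    )
    rules = {}
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        # Confidence of a -> b is the share of a's baskets that also contain b.
        rules[(a, b)] = (support, count / item_counts[a])
        rules[(b, a)] = (support, count / item_counts[b])
    return rules


rules = pair_rules(baskets)
for (lhs, rhs), (support, confidence) in sorted(rules.items()):
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")
```

Support is the share of all baskets containing both items, while confidence is directional: every basket with milk also has butter, but only half the baskets with butter have milk.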

Importance of Feature Engineering

Effective data mining depends on feature engineering—choosing and creating the right variables. For instance:

- Deriving average purchase value from transaction history.

- Adding time-series features to capture seasonal effects.

Without meaningful features, even advanced algorithms struggle to produce actionable insights.
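
The two feature ideas above can be sketched as follows. The transaction tuples, the `build_features` helper, and the choice of December as the seasonal signal are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction history: (customer, purchase date, amount).
transactions = [
    ("alice", date(2024, 1, 5), 20.0),
    ("alice", date(2024, 12, 20), 60.0),
    ("bob", date(2024, 6, 1), 15.0),
    ("bob", date(2024, 12, 24), 45.0),
    ("bob", date(2024, 12, 26), 30.0),
]


def build_features(rows):
    """Derive per-customer average purchase value and share of December orders."""
    amounts = defaultdict(list)
    december = defaultdict(int)
    for customer, day, amount in rows:
        amounts[customer].append(amount)
        if day.month == 12:  # simple seasonal flag
            december[customer] += 1
    return {
        customer: {
            "avg_purchase": sum(vals) / len(vals),
            "december_share": december[customer] / len(vals),
        }
        for customer, vals in amounts.items()
    }


features = build_features(transactions)
print(features)
```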

Challenges and Best Practices for ETL and Data Mining

The data quality pipeline ensures clean, consistent datasets for reliable insights.

Common Challenges in ETL Processes

- Data silos: Isolated data sources make integration difficult.

- Data quality: Inconsistent or dirty data undermines reliability.

- Resource constraints: Large-scale ETL operations demand significant computing resources.

Best practices include:

- Automating validation checks for quality assurance.

- Regularly auditing pipeline performance for bottlenecks.
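
An automated validation check can be as simple as a function that scans a batch and reports every problem it finds. The sketch below is one possible shape; the rules, the field names, and the `validate` helper are hypothetical and would be replaced by whatever a given pipeline actually needs to enforce.

```python
def validate(rows, required=("order_id", "amount")):
    """Return a list of (row_index, problem) tuples; an empty list means clean."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            problems.append((i, "negative amount"))
        order_id = row.get("order_id")
        if order_id in seen_ids:
            problems.append((i, "duplicate order_id"))
        elif order_id not in (None, ""):
            seen_ids.add(order_id)
    return problems


# Hypothetical batch with three deliberate defects.
batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": -5.0},
    {"order_id": 1, "amount": 3.0},
    {"order_id": 3, "amount": None},
]
issues = validate(batch)
print(issues)
```

Returning the full list of problems, instead of stopping at the first one, lets a pipeline decide whether to reject the batch or quarantine only the bad rows.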

Tackling Data Mining Hurdles

- Overfitting: Models fitted too closely to their training data generalize poorly to new, real-world data.

- Scalability: Processing millions of rows demands efficient algorithms.

- Bias in data: Skewed input can lead to unfair predictions.

Combating these requires balanced datasets, thorough testing, and algorithm optimization.
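
One basic form of thorough testing is to hold back part of the data and score the model on rows it has never seen. The sketch below uses a deliberately bad “model” that memorizes its training rows, to show how a held-out set exposes overfitting. The data, the `train_test_split` and `accuracy` helpers, and the memorizer are all illustrative.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows with a fixed seed and split off a held-out test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def accuracy(predict, rows):
    """Fraction of (features, label) rows where predict(features) equals label."""
    return sum(predict(x) == y for x, y in rows) / len(rows)


# Hypothetical labeled data: label is 1 when the value exceeds 50.
data = [(v, int(v > 50)) for v in range(0, 100, 5)]
train, test = train_test_split(data)

# A "model" that memorizes training rows and guesses 0 for anything unseen.
memory = dict(train)
memorizer = lambda x: memory.get(x, 0)

print("train accuracy:", accuracy(memorizer, train))
print("test accuracy:", accuracy(memorizer, test))
```

The memorizer is perfect on its training rows by construction, so any gap between the two scores reflects memorization rather than learning.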

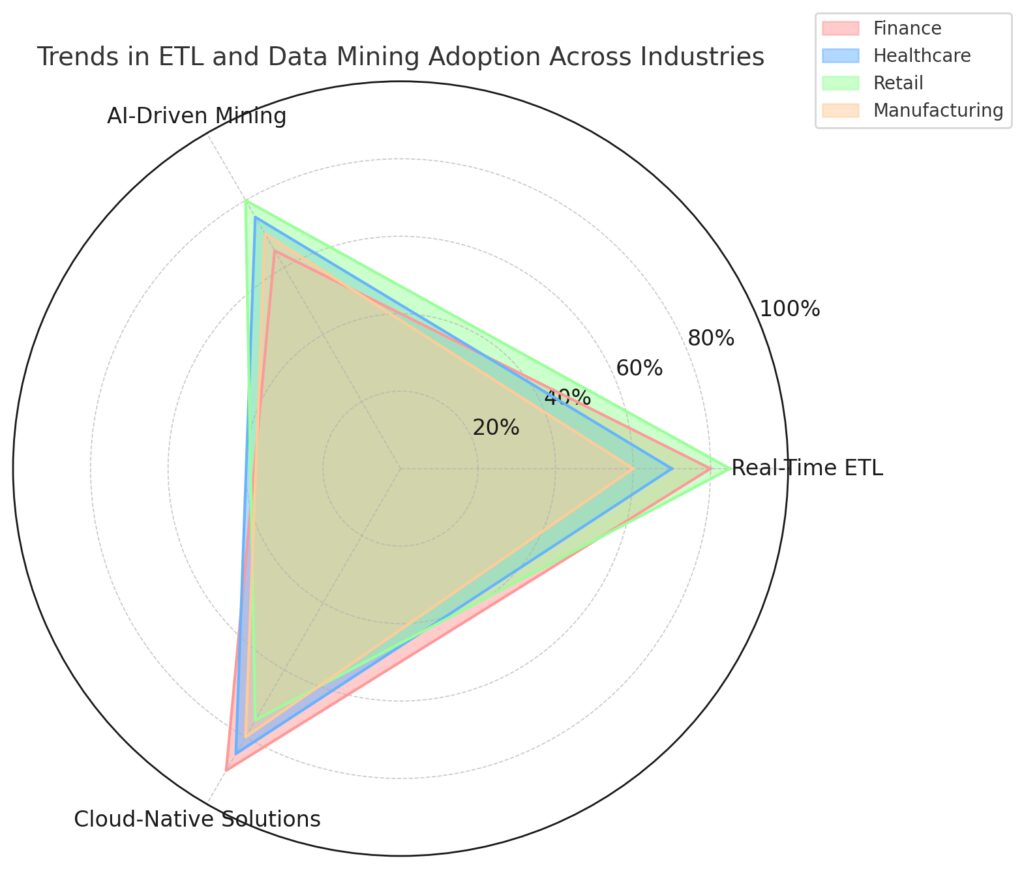

Emerging Trends in ETL and Data Mining

Cloud-Native ETL Solutions

The rise of cloud computing has transformed how businesses approach ETL processes. Modern cloud-native tools like AWS Glue, Google Dataflow, and Azure Data Factory offer:

- Scalability: Handle massive data volumes without overhauling infrastructure.

- Flexibility: Integrate with diverse data sources, from IoT devices to social media streams.

- Cost Efficiency: Pay-as-you-go models reduce upfront expenses.

Cloud-based ETL also accelerates innovation by supporting real-time and event-driven architectures.

Figure: Adoption levels (percent) of Real-Time ETL, AI-Driven Mining, and Cloud-Native Solutions, by industry.

AI-Driven Automation in Data Mining

Artificial Intelligence is reshaping data mining with capabilities like automated model selection and deep learning.

- AutoML tools (e.g., Google AutoML) simplify machine learning model training for non-experts.

- Neural networks enable mining unstructured data, such as text or images, for advanced insights.

AI integration can yield more accurate predictions and more personalized analytics, supporting smarter decision-making.

Data Privacy and Compliance

As businesses collect and process more data, regulatory compliance is becoming a focal point. Laws like GDPR and CCPA enforce strict rules on data handling, requiring:

- Built-in anonymization techniques in ETL pipelines.

- Transparent processes for how mined insights are generated.

Incorporating ethical frameworks ensures businesses maintain trust while deriving value from data.
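
As one way to build privacy protection into a transform step, the sketch below replaces an email address with a keyed hash and drops a name field entirely. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link records. The key, the field names, and the `anonymize_record` helper are hypothetical.

```python
import hashlib
import hmac

# Assumption: in a real pipeline the key comes from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value):
    """Replace an identifier with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_record(record, pii_fields=("email",), drop_fields=("full_name",)):
    """Hash identifying fields and drop fields that analysis does not need."""
    cleaned = {}
    for key, value in record.items():
        if key in drop_fields:
            continue
        cleaned[key] = pseudonymize(value) if key in pii_fields else value
    return cleaned


# Hypothetical customer record.
record = {"full_name": "Ada Example", "email": "ada@example.com", "order_total": 42.0}
safe = anonymize_record(record)
print(safe)
```

Because the same input always yields the same digest, records for one customer can still be joined and mined without exposing the address itself.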

How Businesses Gain Competitive Edge

Driving Innovation with Data

By combining ETL pipelines and data mining, companies create a feedback loop of continuous improvement:

- ETL pipelines ensure high-quality, unified data streams.

- Data mining transforms that data into game-changing insights for innovation.

For example:

- Retailers optimize inventory by analyzing purchasing trends.

- Healthcare providers predict patient outcomes, improving treatment plans.

Reducing Operational Costs

Automation in both ETL and data mining reduces manual effort, saving time and money. Efficient data processes cut down waste and improve resource allocation.

Enabling Proactive Strategies

Businesses equipped with robust ETL and data mining systems can anticipate challenges and opportunities, enabling proactive responses. This agility translates to a stronger market position.

Unlocking the Hidden Power of ETL and Data Mining

1. The Future Lies in Data Mesh Architectures

Traditional ETL pipelines rely on centralized data warehouses. However, data mesh architectures are gaining traction. They distribute data ownership across domain teams, making each team the owner of its own “data products.”

- Decentralization reduces bottlenecks and fosters agility.

- ETL pipelines adapt by operating at a microservice level, aligning with mesh principles.

This approach is particularly suited for businesses scaling quickly in complex environments.

2. Embracing Real-Time Analytics Through Streaming ETL

Static reports are being replaced by real-time dashboards. ETL pipelines are evolving to include streaming capabilities with tools like Apache Kafka and Amazon Kinesis.

- Streaming ETL enables instant insights from live data, crucial for industries like finance, retail, and IoT.

- Pairing streaming ETL with machine learning models ensures timely, actionable predictions.

3. The Role of Synthetic Data in Data Mining

When real-world data is scarce or sensitive, synthetic data is becoming an innovative solution. Generated by AI, synthetic data mimics the statistical properties of real datasets while reducing the privacy risk of exposing actual records.

- Accelerates model training for machine learning in fields like healthcare.

- Overcomes data imbalance, enhancing mining accuracy.

4. Explainable Data Mining for Transparency

As algorithms grow more complex, explainability is emerging as a key priority. Black-box models limit trust in data-driven decisions. To counter this, businesses are adopting explainable AI (XAI).

- Tools like LIME or SHAP clarify why a model made a specific decision.

- Transparency fosters user confidence and aids compliance with regulations.

5. DataOps: The Next Step in ETL Evolution

DataOps, a cousin of DevOps, emphasizes continuous delivery and collaboration in data pipeline management. By integrating CI/CD principles, it:

- Reduces errors in ETL pipeline development.

- Speeds up iterations for mining-ready datasets.

Organizations using DataOps frameworks find it easier to keep pace with rapidly changing business needs.

These insights highlight how ETL and data mining are evolving beyond their traditional roles, unlocking greater efficiency, scalability, and innovation. Businesses that embrace these trends will stay ahead in the competitive data landscape.

Conclusion: From Data to Action

ETL pipelines and data mining are the cornerstones of a data-driven organization. Together, they enable businesses to turn raw data into actionable insights, driving innovation and efficiency.

As technologies like cloud computing and AI evolve, the synergy between these two processes will

FAQs

What are the top tools for ETL pipelines?

Popular tools for building ETL pipelines include:

- Talend: User-friendly, suitable for beginners.

- Apache Airflow: Open-source, ideal for orchestrating complex workflows.

- Informatica: Enterprise-grade, with robust data governance features.

- AWS Glue: Serverless and integrates seamlessly with other AWS services.

Example: A startup may use Apache Airflow for cost-effective automation, while a large bank might prefer Informatica for its governance capabilities.

Why is data quality important in data mining?

Poor-quality data leads to unreliable insights, impacting decision-making. Ensuring data quality involves cleaning and validating data during the ETL process.

- Issues: Duplicates, missing values, or incorrect formatting can skew patterns.

- Best Practices: Use tools like OpenRefine or Python’s Pandas for preprocessing.

Example: A marketing campaign based on flawed customer segmentation data could lead to wasted ad spend and poor ROI.

Can data mining handle unstructured data?

Yes! Modern data mining techniques analyze unstructured data, including text, images, and videos.

- Tools: Natural language processing (NLP) for text, computer vision for images.

- Applications: Mining customer sentiment from reviews or detecting defects from product images.

Example: A fashion retailer uses sentiment analysis on social media comments to gauge customer opinions about a new collection.

How do ETL pipelines improve decision-making?

By transforming scattered, raw data into structured formats, ETL pipelines create a reliable foundation for analysis. This ensures that decisions are based on accurate and consistent information.

Example: A logistics company consolidates shipment tracking data from multiple vendors using ETL pipelines, enabling real-time insights into delivery performance.

What industries benefit the most from ETL and data mining?

Industries leveraging large datasets reap the most benefits, including:

- Healthcare: Predicting patient outcomes through mining historical data.

- Retail: Personalizing customer experiences with recommendation engines.

- Finance: Detecting fraudulent transactions via anomaly detection.

Example: A bank uses ETL pipelines to aggregate transaction data, then applies mining algorithms to flag suspicious activities in real time.
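
A very simple version of that flagging step is a z-score check, sketched below: any amount far from the account's average, measured in standard deviations, is marked for review. The amounts, the threshold of 2.0, and the `flag_anomalies` helper are illustrative assumptions, and real fraud systems use far richer signals.

```python
import statistics


def flag_anomalies(amounts, threshold=2.0):
    """Return (index, amount) pairs whose z-score magnitude exceeds threshold."""
    mean = statistics.mean(amounts)
    stdev = statistics.pstdev(amounts)
    if stdev == 0:
        return []
    return [
        (i, amount)
        for i, amount in enumerate(amounts)
        if abs(amount - mean) / stdev > threshold
    ]


# Hypothetical card transactions for one account; the last one is unusually large.
amounts = [20, 25, 22, 19, 24, 21, 23, 500]
suspicious = flag_anomalies(amounts)
print(suspicious)
```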

How secure are ETL and data mining processes?

Security is a critical concern for both ETL pipelines and data mining. Steps to ensure security include:

- Encryption: Protect data during transfer and storage.

- Access Control: Restrict who can access sensitive data.

- Anonymization: Mask personal information when mining sensitive datasets.

Example: A healthcare provider anonymizes patient records before applying data mining techniques to comply with HIPAA regulations.

What is the role of machine learning in data mining?

Machine learning amplifies data mining by automating pattern recognition and enhancing predictions. Algorithms like clustering, decision trees, and neural networks uncover deeper insights.

Example: An online platform uses ML-powered mining to predict user preferences, driving personalized content recommendations.

Can ETL pipelines handle real-time data?

Yes, streaming ETL pipelines process real-time data as it arrives. This is critical for industries that rely on up-to-the-minute insights.

Example: A stock trading platform uses streaming ETL to process live market data, enabling traders to make split-second decisions.

How does data mining differ from data analytics?

While they overlap, data mining and data analytics serve different purposes:

- Data Mining: Focuses on discovering patterns, relationships, or anomalies in data using algorithms. It’s exploratory in nature.

- Data Analytics: Uses insights (often derived from mining) to answer specific questions or guide decisions. It’s more goal-oriented.

Example: Data mining might reveal that customers aged 25–34 prefer a certain product. Analytics would assess the ROI of targeting this group with promotions.

Can small businesses benefit from ETL pipelines?

Absolutely! ETL pipelines are not just for enterprises. Affordable tools and cloud solutions make them accessible for small businesses too.

- Cloud platforms like Google BigQuery or Snowflake scale with usage, keeping costs manageable.

- Tools like Zapier or Matillion simplify integration for businesses without technical expertise.

Example: A local café uses an ETL pipeline to consolidate POS data and monitor customer trends for menu optimization.

How does data mining improve customer experience?

By uncovering hidden insights, data mining helps businesses tailor their services to customer needs.

- Personalization: Recommend products based on past purchases or browsing behavior.

- Customer Segmentation: Group customers by preferences to create targeted marketing campaigns.

- Sentiment Analysis: Use text mining to gauge customer satisfaction from reviews.

Example: A streaming service mines viewing patterns to suggest shows or movies, increasing user engagement.

What are the challenges of integrating ETL pipelines with legacy systems?

Legacy systems can complicate ETL processes due to outdated architectures and limited compatibility. Challenges include:

- Lack of APIs for seamless data extraction.

- Inconsistent data formats requiring extensive transformations.

- Performance issues when handling large data volumes.

Solutions: Use middleware tools or modern ETL platforms like Fivetran that adapt to legacy systems.

Example: A manufacturing company modernizes its ETL process by using APIs to pull data from an old ERP system into a cloud-based warehouse.

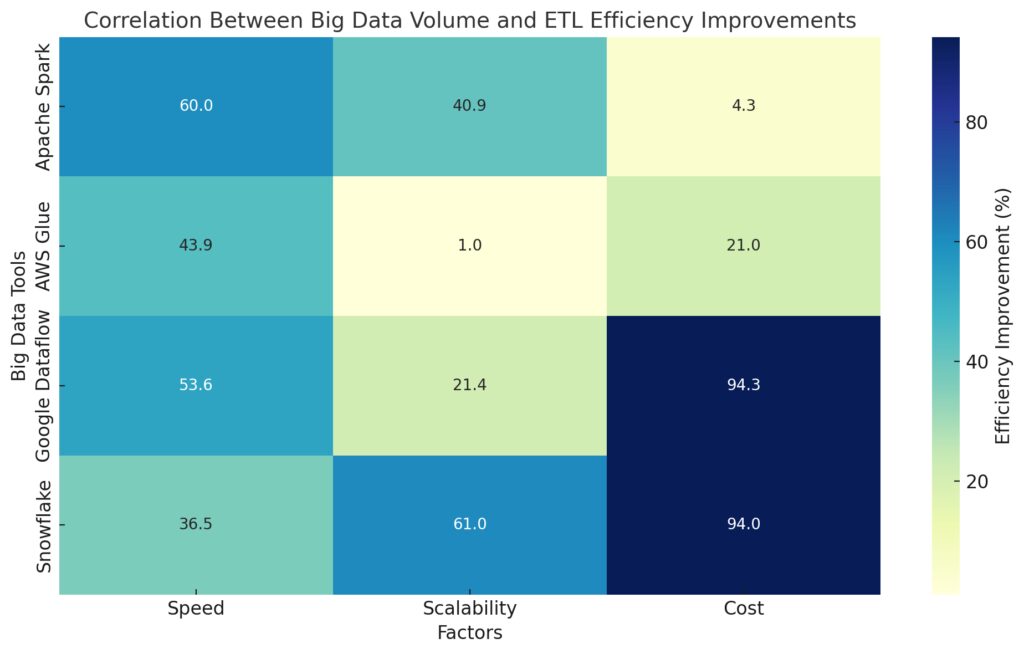

How does big data influence ETL and data mining?

Big data amplifies the need for efficient ETL pipelines and advanced mining techniques. In turn, big data technologies make ETL processing faster and more scalable.

- ETL tools now support distributed computing (e.g., Apache Spark) to process massive datasets.

- Data mining leverages machine learning to extract insights from petabytes of structured and unstructured data.

Example: Social media platforms mine user-generated content to track trending topics or identify viral content in real time.

Can data mining uncover bias in datasets?

Yes, data mining can identify and help mitigate biases. However, the mining process itself can introduce bias if not handled carefully.

- Detection: Algorithms like clustering can highlight underrepresented groups in datasets.

- Correction: Rebalancing datasets ensures fairer outcomes.

Example: A hiring algorithm trained on biased historical data might favor certain candidates. Mining and analysis reveal the issue, leading to corrective measures.
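
Rebalancing can take several forms. The sketch below shows one of the simplest, random oversampling, where rows from smaller groups are duplicated until every group matches the largest. The records and the `oversample` helper are hypothetical, and because duplicated rows add no new information, this approach should be paired with careful evaluation.

```python
import random
from collections import Counter


def oversample(rows, label_key, seed=0):
    """Duplicate random rows from smaller groups until every group matches the largest."""
    rng = random.Random(seed)
    groups = {}
    for row in rows:
        groups.setdefault(row[label_key], []).append(row)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced


# Hypothetical applicant records with an underrepresented group "B".
applicants = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
before = Counter(row["group"] for row in applicants)
after = Counter(row["group"] for row in oversample(applicants, "group"))
print(before, after)
```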

What is ETL orchestration, and why is it important?

ETL orchestration refers to automating and scheduling the various steps of an ETL pipeline to ensure seamless execution.

- Importance: Orchestration handles dependencies between tasks, like ensuring data extraction finishes before transformations start.

- Tools: Apache Airflow and Prefect are popular choices for building orchestrated workflows.

Example: A retail chain uses orchestration to schedule nightly ETL jobs, consolidating sales data from all stores for next-day analysis.
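
At its core, dependency handling is a topological sort of the task graph. The sketch below shows that idea with Python's built-in `graphlib` module (Python 3.9+). It is not how Airflow or Prefect are configured; the task names and functions are hypothetical placeholders.

```python
from graphlib import TopologicalSorter  # Python 3.9+

run_log = []


# Hypothetical task functions; each just records that it ran.
def extract():
    run_log.append("extract")


def transform():
    run_log.append("transform")


def load():
    run_log.append("load")


def refresh_dashboard():
    run_log.append("refresh_dashboard")


TASKS = {
    "extract": extract,
    "transform": transform,
    "load": load,
    "refresh_dashboard": refresh_dashboard,
}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "refresh_dashboard": {"load"},
}


def run_pipeline():
    """Run every task in an order that respects the declared dependencies."""
    for name in TopologicalSorter(DEPENDENCIES).static_order():
        TASKS[name]()


run_pipeline()
print(run_log)
```

Dedicated orchestrators add scheduling, retries, and monitoring on top of this ordering logic.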

How does cloud ETL compare to on-premises solutions?

Cloud ETL offers scalability, flexibility, and reduced maintenance, whereas on-premises ETL provides greater control over infrastructure and over where data is stored.

- Cloud Benefits: Automatic updates, pay-as-you-go pricing, and global accessibility.

- On-Premises Benefits: Complete control over hardware and data, critical for industries with strict compliance requirements.

Example: A tech startup opts for AWS Glue to reduce upfront costs, while a healthcare firm uses Informatica on-premises to maintain HIPAA compliance.

How is data governance integrated into ETL pipelines?

Data governance ensures that data processed by ETL pipelines remains secure, compliant, and high-quality.

- Features: Role-based access controls, audit logs, and data lineage tracking.

- Best Practices: Establish a data governance framework before building ETL processes.

Example: A financial institution integrates access controls into its ETL pipelines to ensure only authorized personnel can view sensitive customer data.

What’s the role of visualization tools in data mining?

Visualization tools help make complex mining insights understandable. They translate patterns and trends into actionable visuals like graphs or heatmaps.

- Tools: Tableau, Power BI, and D3.js.

- Impact: Visualization bridges the gap between technical insights and business strategy.

Example: A sales team uses a dashboard to visualize mined patterns in purchasing behavior, leading to more focused marketing campaigns.

Resources

Books for Deep Understanding

- “Data Warehousing in the Age of Big Data” by Krish Krishnan: Focuses on modern ETL approaches for big data environments.

- “Data Mining: Concepts and Techniques” by Jiawei Han, Micheline Kamber, and Jian Pei: A must-read for understanding the foundations of data mining.

- “The Data Warehouse Toolkit” by Ralph Kimball and Margy Ross: An essential resource for ETL design patterns and best practices.

Open-Source Tools and Frameworks

- Apache Airflow: A popular tool for ETL orchestration. Learn more at Apache Airflow.

- KNIME: A user-friendly platform for data mining and ETL. Explore at KNIME.

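- Talend Open Studio: An open-source ETL solution with an intuitive drag-and-drop interface. Find it at Talend. Note that Talend discontinued Open Studio in 2024, so check current availability before adopting it.

Apache Airflow and KNIME are free to use and backed by active communities for support.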

Communities and Forums

- Reddit: Subreddits like r/dataengineering and r/datascience host discussions on ETL, data mining, and real-world applications.

- Stack Overflow: A go-to platform for troubleshooting ETL pipelines and algorithm issues.

- LinkedIn Groups: Join professional groups like “Big Data, ETL, and Data Mining Professionals” for networking and industry insights.

Engaging in these communities can help you stay updated on trends and best practices.

Industry Reports and Whitepapers

- Gartner Magic Quadrant for Data Integration Tools: Provides insights into the leading ETL tools in the market. Access via Gartner.

- McKinsey & Company’s Data Analytics Insights: Regular reports on the role of analytics in business. Visit McKinsey Insights.

- IBM’s Data Science and AI Blogs: Articles and whitepapers on advanced data mining techniques. Explore IBM Blogs.

These resources are excellent for understanding the strategic impact of ETL and data mining on businesses.

Interactive Tools for Learning

- Google Colab: Free notebooks to practice ETL scripts and mining algorithms using Python.

- RapidMiner: Offers an interactive environment to test and apply data mining workflows.

- Tableau Public: A free version of Tableau for visualizing mined data insights.