Why Explainability Matters in AI

Demystifying the Black Box Problem

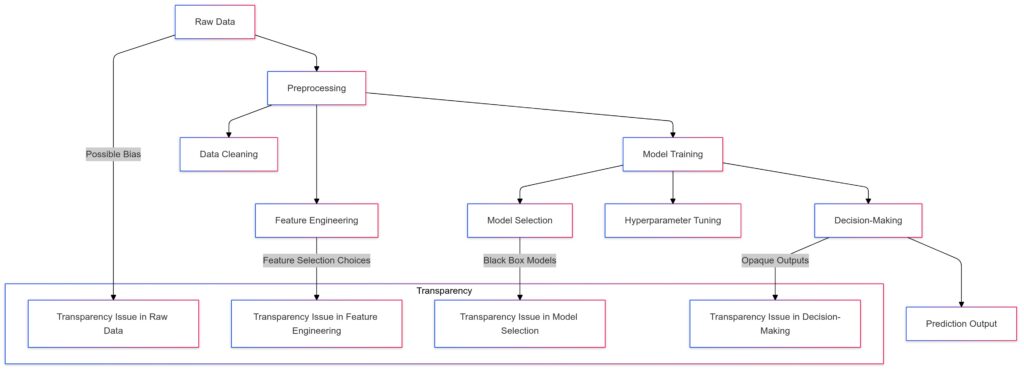

AI systems often operate as “black boxes,” where their inner workings are obscure, even to experts. This lack of transparency creates challenges for accountability, especially in critical areas like healthcare, finance, and criminal justice.

When decisions carry life-altering implications, stakeholders need clarity on how those decisions are made. Without explainability, AI risks losing public trust and may perpetuate biases or errors.

Bridging Trust Through Transparency

Explainability transforms the “black box” into a “glass box”, allowing users to understand an AI’s reasoning. Transparent systems instill confidence by showing that they operate fairly and effectively.

By fostering transparency, companies align with ethical principles and meet regulatory expectations, strengthening trust with both users and oversight bodies.

Enhancing Decision Accountability

Explainable AI (XAI) helps decision-makers justify outcomes when questions arise. This is crucial wherever regulations call for human oversight of automated decisions, as the EU’s General Data Protection Regulation (GDPR) does.

Key takeaway? Clear explanations enable accountability, bridging the gap between innovation and ethical responsibility.

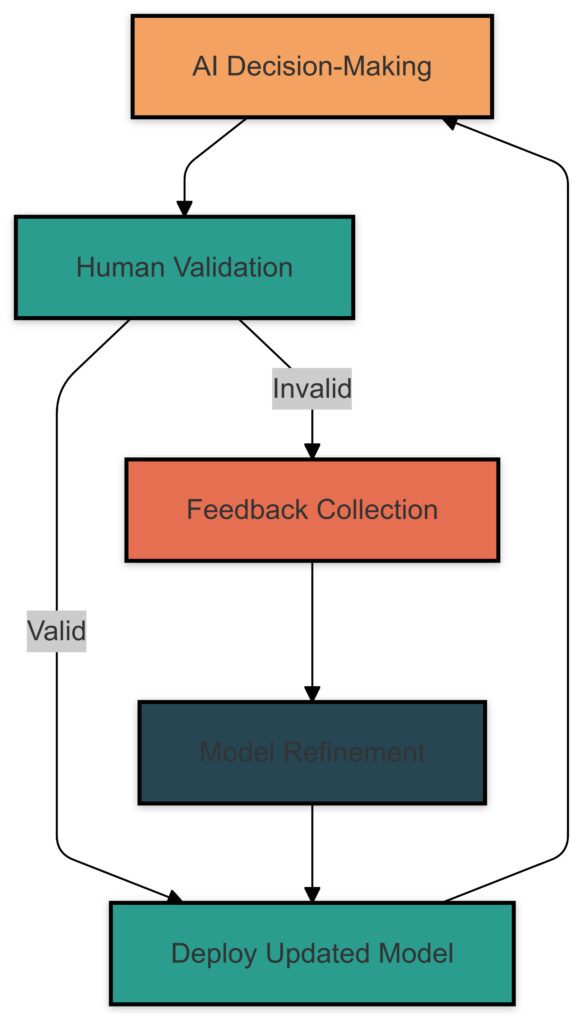

Human Validation: Humans validate the AI’s decisions. If valid, proceed to deploy the model; if invalid, collect feedback.

Feedback Collection: Gather insights to identify errors or areas of improvement.

Model Refinement: Refine the AI model using the feedback received.

Deploy Updated Model: Deploy the refined model and feed it back into the AI decision-making process.

This loop ensures that AI systems improve continuously, leveraging human insights to enhance performance and trustworthiness.
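
As a rough illustration of this loop, the sketch below uses a toy threshold "model" and a stand-in function for the human reviewer. The names `review_cycle` and `human_label`, the step size, and the sample cases are all assumptions made for this example; a real system would retrain a model rather than nudge a single threshold.

```python
def review_cycle(threshold, cases, human_label, step=5, max_rounds=10):
    """Repeat validate -> collect feedback -> refine -> redeploy until humans agree."""
    for round_no in range(max_rounds):
        # Human validation: find cases where the model and the reviewer disagree.
        disputed = [c for c in cases if (c >= threshold) != human_label(c)]
        if not disputed:
            return threshold, round_no  # valid: deploy as-is
        # Model refinement: raise the bar on false positives, lower it otherwise.
        if any(c >= threshold for c in disputed):
            threshold += step
        else:
            threshold -= step
    return threshold, max_rounds


# Hypothetical scores and a reviewer who accepts anything scoring 60 or more.
cases = [40, 55, 62, 70, 85]
final_threshold, rounds = review_cycle(50, cases, human_label=lambda c: c >= 60)
print(final_threshold, rounds)
```

Each pass through the loop corresponds to one trip around the diagram: disagreement triggers feedback and refinement, and agreement ends the cycle.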

The Evolution Toward Glass Box AI

Advances in Explainable Algorithms

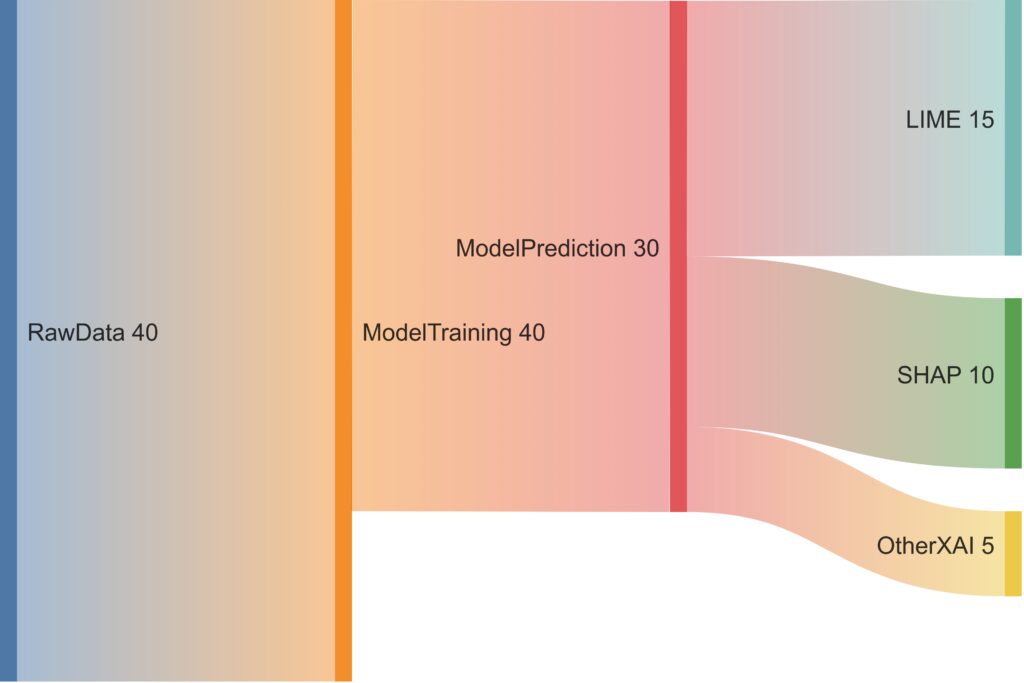

Emerging frameworks, like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (Shapley Additive Explanations), have revolutionized interpretability. These tools break down complex AI predictions into human-readable insights.

Such methods help developers identify biases and improve system performance, making explainability integral to iterative design.

Shifting from Accuracy to Explainability

Traditionally, AI has prioritized accuracy, often at the cost of transparency. Today, explainability is emerging as an equally critical metric, particularly in high-stakes applications like self-driving cars or medical diagnostics.

By balancing accuracy and interpretability, organizations can deliver robust AI solutions that are both effective and understandable.

Regulatory Pressures Demand Explainability

Governments and standards bodies worldwide are introducing laws and guidance on AI accountability. For instance, the U.S. National Institute of Standards and Technology (NIST) emphasizes explainability in its voluntary AI Risk Management Framework.

Organizations failing to adapt risk legal consequences and reputational damage—making explainability a competitive advantage.

Black-Box Models vs. Glass-Box Models

| Attribute | Black-Box Models | Glass-Box Models |
|---|---|---|
| Interpretability | ❌ Low: Difficult to understand how predictions are made due to complex internal structures. | ✅ High: Transparent and easy to interpret for stakeholders. |
| Accuracy | ✅ Often High: Excels in large datasets and complex tasks, leveraging advanced techniques. | ❌ Moderate: May sacrifice accuracy for simplicity and transparency. |
| Ease of Debugging | ❌ Difficult: Hard to trace errors due to lack of visibility into internal operations. | ✅ Easy: Simplified structure allows for quicker identification and resolution of issues. |
| Regulatory Compliance | ❌ Challenging: Difficult to meet strict transparency requirements in regulated industries. | ✅ Better Fit: Transparency aligns with regulatory standards, such as GDPR or explainability laws. |
| Training Complexity | ✅ High: Requires sophisticated algorithms and often substantial computational resources. | ❌ Low to Moderate: Simpler algorithms with reduced computational demands. |
| Scalability | ✅ Scalable: Adapts well to large datasets and complex problem domains. | ❌ Limited: May struggle with scaling in high-dimensional or large datasets. |

The Human-AI Collaboration Revolution

Empowering Non-Technical Stakeholders

One challenge in AI is the communication gap between technical teams and non-technical stakeholders. Explainable AI bridges this divide, offering clear, actionable insights.

For example, in finance, XAI can demystify loan decisions, enabling customers to understand why they were approved or denied.

Enhancing Trust in Automated Systems

When users grasp how AI works, they’re more likely to trust it. Imagine a healthcare provider explaining a diagnosis aided by AI. If patients see the logic, they’re more inclined to accept the outcome.

Transparency doesn’t just inform—it empowers users to feel confident in automated decisions.

From Control to Partnership

Explainability fosters a shift from viewing AI as a tool to treating it as a collaborative partner. By understanding AI’s reasoning, humans can guide systems to align better with ethical and operational goals.

This partnership paves the way for smarter, fairer, and more accountable AI deployments.

Challenges in Achieving Explainable AI

Balancing Complexity and Clarity

Deep learning models thrive on complexity, and simplifying them to make them explainable can reduce accuracy. This creates a trade-off: how much accuracy should be given up for transparency?

Over-simplification risks omitting key details, while overly complex explanations alienate users. Striking this balance remains a significant challenge.

Bias Detection and Correction

Explainability helps uncover hidden biases in data, but detecting them doesn’t automatically resolve them. Developers must pair explainability tools with strategies for bias correction and equitable outcomes.

This process requires ongoing vigilance and collaboration between technical and ethical experts.

Scalability of Explainable Models

Not all models are easily interpretable, and scaling explainable solutions across diverse applications can be daunting. Industries face the challenge of integrating XAI without compromising efficiency or performance.

Innovative tools and techniques are critical to overcoming this hurdle, making explainability more accessible at scale.

Tools and Techniques Driving Explainability

LIME and SHAP: Breaking Down Decisions

LIME (Local Interpretable Model-agnostic Explanations) and SHAP (Shapley Additive Explanations) are among the most widely used tools for understanding AI predictions.

- LIME generates simple, localized explanations by analyzing the behavior of a model in specific scenarios.

- SHAP assigns importance scores to input features, showing how each contributes to a prediction.

Both frameworks translate opaque algorithms into insights that non-technical users can grasp. However, they work best in controlled contexts and may struggle with real-time or large-scale applications.

Visualizing AI: From Decision Trees to Heatmaps

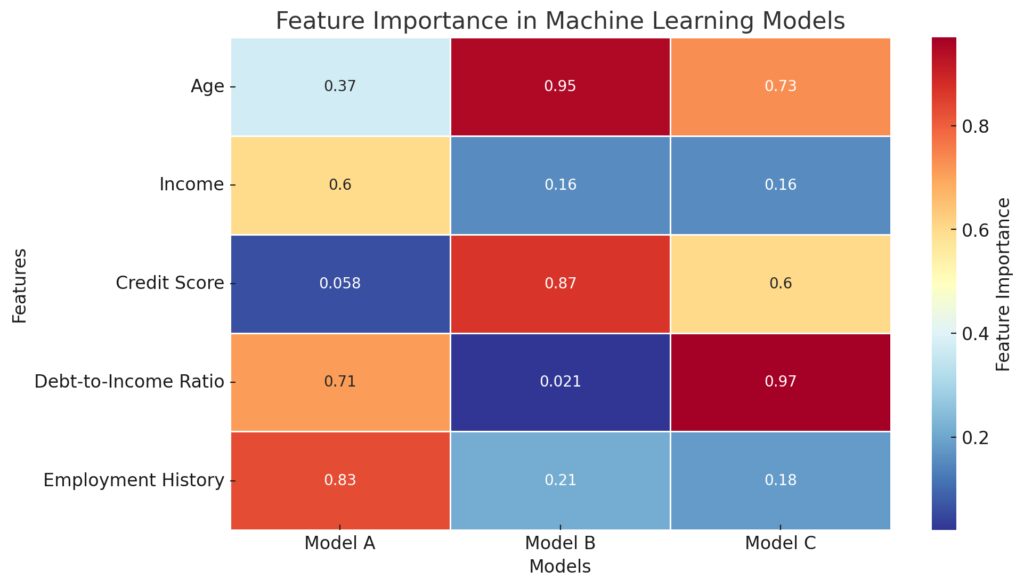

Visual tools like decision trees, feature attribution heatmaps, and attention mechanisms enhance explainability by making data visually intuitive. For instance:

- Decision trees provide step-by-step logic flows.

- Heatmaps highlight parts of an input (like a photo or sentence) that influenced the output.

These tools enable faster identification of anomalies, such as biases or faulty predictions, fostering accountability.
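
To make the "step-by-step logic flow" of a decision tree concrete, here is a minimal sketch that walks a hand-written tree and records each test it applies. The tree, its thresholds, and the `trace` helper are hypothetical and exist only for illustration; a trained tree from a real library would expose the same idea through its own API.

```python
# Hypothetical credit decision tree written as nested dictionaries.
tree = {
    "feature": "credit_score", "threshold": 700,
    "left": {"leaf": "deny"},
    "right": {
        "feature": "income", "threshold": 40000,
        "left": {"leaf": "review"},
        "right": {"leaf": "approve"},
    },
}


def trace(node, sample):
    """Follow the tree for one sample and return the outcome plus every test taken."""
    path = []
    while "leaf" not in node:
        feature, threshold = node["feature"], node["threshold"]
        if sample[feature] < threshold:
            path.append(f"{feature} = {sample[feature]} < {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} = {sample[feature]} >= {threshold}")
            node = node["right"]
    return node["leaf"], path


outcome, path = trace(tree, {"credit_score": 720, "income": 35000})
print(outcome)
for step in path:
    print(" ", step)
```

The recorded path is the explanation: every prediction comes with the exact sequence of comparisons that produced it.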

Rows: Input features (e.g., Age, Income, Credit Score).

Columns: Models (e.g., Model A, Model B, Model C).

Color Gradient: Blue indicates low predictive power (less importance); red indicates high predictive power (greater importance).
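
One way the numbers behind such a feature-by-model heatmap could be produced is permutation importance: shuffle one feature column and measure how much the model's error grows. The sketch below is a simplified version under stated assumptions; the data, the two models, and the helper name `permutation_importance` are invented for the example, and plotting is left out.

```python
import random


def permutation_importance(model, rows, targets, feature, seed=0):
    """Increase in mean squared error after shuffling one feature column."""
    def mse(data):
        return sum((model(r) - t) ** 2 for r, t in zip(data, targets)) / len(targets)

    values = [r[feature] for r in rows]
    random.Random(seed).shuffle(values)
    shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, values)]
    return mse(shuffled) - mse(rows)


# Hypothetical data: the target depends on income and credit score, not age.
rows = [
    {"age": 25, "income": 30, "credit_score": 600},
    {"age": 40, "income": 55, "credit_score": 720},
    {"age": 33, "income": 42, "credit_score": 680},
    {"age": 58, "income": 70, "credit_score": 750},
    {"age": 47, "income": 38, "credit_score": 640},
    {"age": 29, "income": 64, "credit_score": 700},
]
targets = [2 * r["income"] + r["credit_score"] / 10 for r in rows]

# Two hypothetical models: A uses income and credit score, B uses income only.
models = {
    "Model A": lambda r: 2 * r["income"] + r["credit_score"] / 10,
    "Model B": lambda r: 2 * r["income"] + 65,
}

# Rows are features, columns are models: the matrix a heatmap would color.
importance = {
    feature: {name: permutation_importance(m, rows, targets, feature)
              for name, m in models.items()}
    for feature in ["age", "income", "credit_score"]
}
for feature, scores in importance.items():
    print(feature, scores)
```

Features a model ignores score exactly zero here, which would show up as the "blue" end of the gradient.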

Hybrid Approaches: Combining Models with Simpler Layers

Complex models can integrate simpler, interpretable layers to provide transparent explanations without compromising accuracy. For example:

- In healthcare, hybrid models clarify diagnosis pathways while leveraging deep learning for prediction accuracy.

- In finance, they help identify fraud while providing rationale behind flagged transactions.

This fusion of complexity and clarity is key to explainability without sacrificing functionality.

Practical Applications of Explainable AI

Building Trust in Healthcare Decisions

AI tools in healthcare, such as diagnostic systems, demand explainability to ensure trust between patients and providers.

Imagine an AI system that identifies tumors in medical imaging. With transparent processes, doctors can validate the diagnosis, spot errors, and convey findings confidently to patients.

Regulators such as the FDA also place growing emphasis on transparency for AI-enabled medical tools, making XAI increasingly an operational necessity.

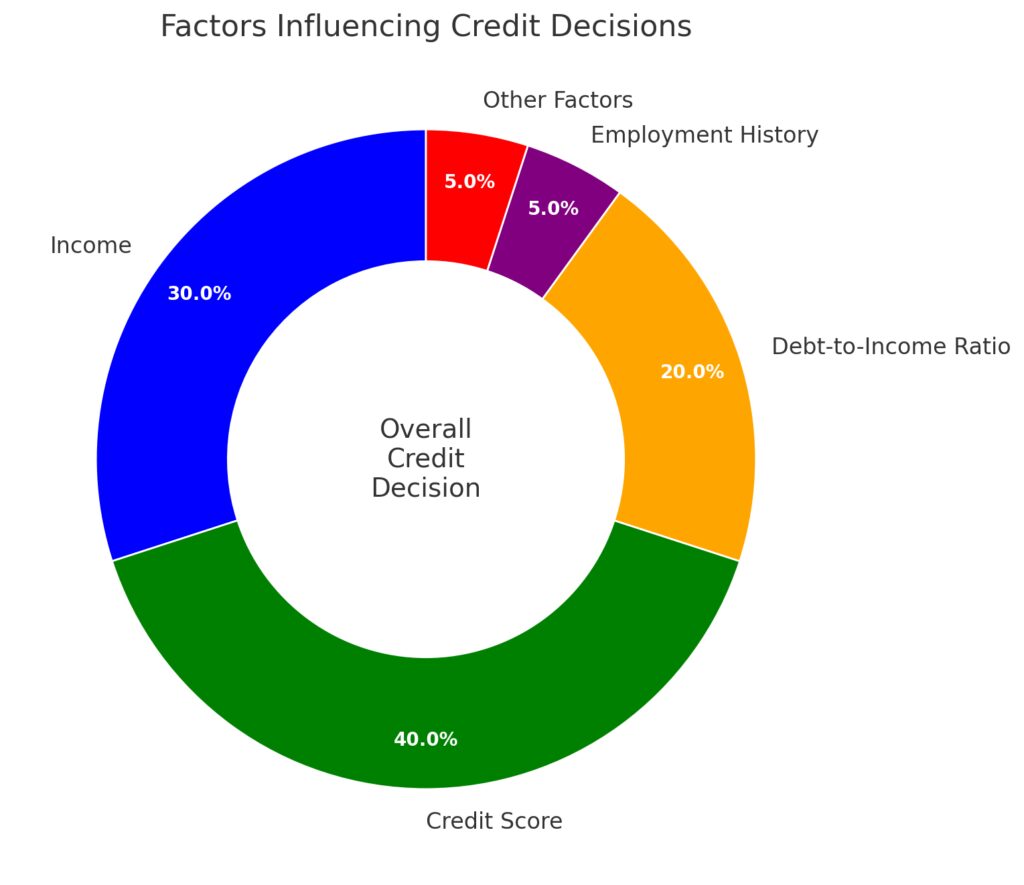

Transparency in Financial Services

Financial institutions rely on AI for tasks like credit scoring, fraud detection, and investment advice. Explainability is critical here, as decisions often directly impact individuals’ lives.

For example, when a loan application is denied, XAI tools can detail the reasons—credit history, income, or debt ratios—allowing consumers to take corrective actions.

This chart visually emphasizes the weight of each factor in determining creditworthiness.

Ethics in Criminal Justice Systems

AI-driven tools like predictive policing or risk assessments are reshaping the criminal justice landscape. Without explainability, these systems risk perpetuating racial and socioeconomic biases.

XAI frameworks allow transparency into how data is used, helping ensure fairness and reducing systemic inequality. By making processes visible, justice systems can align technology with their core values.

The Future of Explainable AI

AI Legislation and Global Standards

As AI adoption grows, governments and organizations are working toward unified explainability standards. Initiatives like the EU’s AI Act and ISO standards emphasize transparency, fairness, and accountability.

- Businesses that prioritize explainability today gain a competitive edge, avoiding legal pitfalls and fostering customer loyalty.

- Future regulations may require explainability for AI certifications, driving demand for compliance-ready models.

Democratizing AI for Everyday Users

As tools for XAI become more accessible, everyday users—teachers, students, employees—will interact confidently with AI in their daily tasks.

For instance, educational AI tools that clearly explain learning outcomes or job application systems that justify their recommendations can empower users across industries.

Toward Ethical AI Ecosystems

The ultimate goal of explainability is to create ethical AI systems that reflect human values. By prioritizing transparency, we can reduce bias, build trust, and pave the way for responsible AI innovation.

Explainability isn’t just a technical feature—it’s a cornerstone of ethical AI development, ensuring that humans remain at the heart of decision-making processes.

Exploring Specific XAI Tools

LIME (Local Interpretable Model-agnostic Explanations)

LIME is a powerful tool that helps explain predictions made by complex models.

- How it works: LIME approximates the behavior of a complex model by creating a simpler, interpretable model (like linear regression) for a specific instance.

- Strengths: It is model-agnostic, meaning it works across different types of AI algorithms, from neural networks to random forests.

- Limitations: LIME focuses on local interpretability, explaining individual predictions but not the model’s overall behavior.

Use case: A doctor using an AI tool to diagnose diseases can use LIME to see which symptoms contributed most to the AI’s diagnosis.
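
The idea behind LIME can be sketched without the library itself: perturb the instance, weight each perturbed sample by its closeness to the original, and fit a weighted linear model. The code below is a simplified illustration, not the `lime` package's implementation; the function names, the Gaussian sampling, the kernel width, and the toy black-box function are all assumptions.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(predict, instance, n_samples=500, scale=0.1, width=0.25, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]       # perturbed sample
        dist2 = sum((a - b) ** 2 for a, b in zip(z, instance))
        w = math.exp(-dist2 / width ** 2)                       # closer samples count more
        row = [1.0] + [a - b for a, b in zip(z, instance)]      # centered on the instance
        y = predict(z)
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[0], coef[1:]  # intercept, per-feature local slopes


# Hypothetical black box: nonlinear in the first feature, linear in the second.
def black_box(x):
    return x[0] ** 2 + 3 * x[1]


intercept, slopes = local_surrogate(black_box, [1.0, 2.0])
print(round(intercept, 2), [round(s, 2) for s in slopes])
```

Around the point (1.0, 2.0) the fitted slopes should land close to 2 and 3, the local sensitivities of the black box, which is exactly the "local, not global" behavior noted in the limitations above.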

SHAP (Shapley Additive Explanations)

SHAP is based on cooperative game theory, offering a mathematically sound way to explain AI predictions.

- How it works: SHAP assigns importance scores (Shapley values) to input features, showing their contribution to the model’s output.

- Strengths: It provides global and local explanations, making it versatile for both high-level insights and detailed analysis.

- Limitations: Calculating Shapley values can be computationally expensive, especially for large datasets.

Use case: In financial applications, SHAP can explain why a loan was approved or denied, helping customers and regulators understand decision criteria.
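
For a handful of features, Shapley values can be computed exactly by enumerating every coalition, which also shows why the cost grows so quickly. The sketch below is not the `shap` library; it assumes a hypothetical loan-scoring function and represents "missing" features by substituting baseline values, which is one common convention among several.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction (cost grows as 2^n in feature count)."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features outside the coalition are replaced by their baseline value.
        mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(mixed)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical scoring model with an interaction between income and credit.
def loan_score(x):
    score = 0.5 * x["income"] + 0.3 * x["credit"]
    if x["income"] > 0.5 and x["credit"] > 0.5:
        score += 0.2
    return score


applicant = {"income": 0.8, "credit": 0.9, "age": 0.4}
baseline = {"income": 0.3, "credit": 0.3, "age": 0.5}
phi = shapley_values(loan_score, applicant, baseline)
print({k: round(v, 3) for k, v in phi.items()})
```

The attributions add up to the difference between the applicant's score and the baseline score, and the unused `age` feature receives zero. With three features there are only eight coalitions; the doubling per extra feature is why practical tools rely on approximations.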

Counterfactual Explanations

Counterfactual explanations describe what changes in input data would lead to a different prediction.

- How it works: By tweaking certain variables (e.g., income or credit score), counterfactuals show how the output would shift.

- Strengths: These explanations are intuitive, as they resemble “what if” scenarios, offering actionable insights.

- Limitations: Generating meaningful counterfactuals requires careful consideration of the data’s real-world constraints.

Use case: A job applicant rejected by an AI hiring system can use counterfactuals to understand what qualifications would lead to success.
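
A very small counterfactual search might look like the following. It is a sketch under strong assumptions: the `approve` rule stands in for a trained model, only one feature is changed at a time, and "smallest change" is measured crudely in number of steps. Restricting the search to the features listed in `steps` is how this example respects real-world constraints (an applicant cannot change their age, for instance).

```python
def approve(applicant):
    """Hypothetical loan rule standing in for a trained model."""
    return applicant["credit_score"] >= 700 and applicant["income"] >= 40000


def find_counterfactual(model, applicant, steps):
    """Return the single-feature change with the fewest steps that flips the decision."""
    original = model(applicant)
    candidates = []
    for feature, (step, max_steps) in steps.items():
        for k in range(1, max_steps + 1):
            changed = dict(applicant, **{feature: applicant[feature] + k * step})
            if model(changed) != original:
                candidates.append((k, feature, changed[feature]))
                break
    if not candidates:
        return None
    _, feature, new_value = min(candidates)
    return feature, new_value


applicant = {"credit_score": 680, "income": 52000}
# Only features the applicant could realistically change: (step size, max steps).
steps = {"credit_score": (10, 10), "income": (1000, 20)}
result = find_counterfactual(approve, applicant, steps)
print(result)
```

The result reads directly as a "what if" statement: with a credit score of 700 instead of 680, this hypothetical application would have been approved.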

Saliency Maps and Attention Mechanisms

These techniques are primarily used in AI models processing images or text.

- Saliency maps: Highlight regions of an image that influenced the prediction.

- Attention mechanisms: Pinpoint words or phrases in text data that were most significant for the model’s decision.

Use case: A radiologist using an AI-powered image recognition tool can see the regions of a scan that led to a cancer diagnosis.
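
Saliency maps for neural networks are usually computed from gradients, but the underlying idea can be shown with a simpler occlusion test: blank out one pixel at a time and record how much the score drops. The sketch below uses that swapped-in technique on a 3x3 "image"; the `center_detector` model is a made-up stand-in for a real classifier.

```python
def occlusion_saliency(predict, image, fill=0.0):
    """Score drop caused by blanking each pixel in turn (larger means more influential)."""
    base = predict(image)
    saliency = []
    for r, row in enumerate(image):
        out_row = []
        for c in range(len(row)):
            occluded = [list(x) for x in image]
            occluded[r][c] = fill
            out_row.append(base - predict(occluded))
        saliency.append(out_row)
    return saliency


# Hypothetical model that mostly looks at the center pixel and a little at one corner.
def center_detector(img):
    return 0.8 * img[1][1] + 0.1 * img[0][0]


image = [[1.0, 1.0, 1.0],
         [1.0, 1.0, 1.0],
         [1.0, 1.0, 1.0]]
saliency = occlusion_saliency(center_detector, image)
for row in saliency:
    print([round(v, 2) for v in row])
```

The center cell dominates the resulting map, mirroring how a highlighted region on a scan points the radiologist to what drove the prediction.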

InterpretML

InterpretML is a comprehensive library offering multiple tools for explainability, including LIME, SHAP, and glass-box models like Explainable Boosting Machines (EBMs).

- How it works: InterpretML integrates multiple methods, allowing users to compare explainability approaches.

- Strengths: It provides a centralized platform for experimenting with different techniques.

- Limitations: Its broader scope may not be as optimized for specific tasks as specialized tools.

Use case: Data scientists evaluating multiple models can leverage InterpretML to assess which delivers the best balance of accuracy and transparency.

Integrating XAI Tools into Workflows

Choosing the Right Tool for the Job

Selecting the right XAI tool depends on the complexity of your model, the type of data, and your end-users’ needs.

- For tabular data (e.g., spreadsheets), SHAP or LIME are ideal for understanding feature importance.

- For image data, saliency maps or attention mechanisms provide intuitive visual explanations.

- For real-world “what if” scenarios, counterfactual explanations are best suited.

Automating Explainability in Deployment

To ensure seamless integration, many XAI tools can be embedded into machine learning pipelines. Libraries like PyCaret offer built-in SHAP-based interpretation, and models built with frameworks such as TensorFlow can be passed to LIME, SHAP, and similar tools.

Balancing Speed and Accuracy

Real-time applications, like fraud detection, require lightweight explainability tools. Hybrid approaches—simplifying explanations while maintaining core insights—help strike this balance.

Industries Leveraging Explainable AI Tools

Healthcare: Ensuring Trust in Medical Decisions

Healthcare organizations use XAI tools like LIME and SHAP to explain predictions in diagnostic models.

- Example: A hospital uses SHAP to interpret an AI model that predicts patient readmission risks. By showing which factors, such as age or previous conditions, contributed to the prediction, healthcare providers can personalize treatments.

- Impact: These insights help clinicians validate AI recommendations and strengthen patient trust in technology-assisted care.

Financial Services: Transparency in Credit and Risk Assessments

The financial sector depends on XAI tools to explain complex risk models and support regulatory compliance.

- Example: A bank uses counterfactual explanations to inform a customer why their loan was denied and suggests how improving their credit score or income could change the outcome.

- Impact: Transparent decision-making helps institutions meet legal requirements like the U.S. Equal Credit Opportunity Act while improving customer satisfaction.

Retail and E-commerce: Enhancing Customer Engagement

AI-driven recommendation engines in retail are more effective when explanations accompany suggestions.

- Example: An e-commerce platform uses attention mechanisms to explain why certain products were recommended, such as “customers who viewed this also bought that.”

- Impact: Transparency drives higher user engagement and fosters trust in AI-driven personalization.

Criminal Justice: Promoting Fairness and Accountability

The use of predictive analytics in criminal justice has faced criticism for perpetuating biases. XAI tools like SHAP are critical in auditing these systems.

- Example: A court system analyzes risk assessments using LIME to ensure that predictions about reoffending rates are based on relevant, unbiased factors.

- Impact: This fosters fairness and helps communities trust AI’s role in judicial processes.

Practical Tips for Developers Implementing XAI

Start with the Right Data

Explainability starts with quality data. Developers should ensure data is:

- Clean and unbiased: Avoid data that skews results or embeds historical inequalities.

- Comprehensive: Use datasets that cover all relevant scenarios to make the model robust and fair.

Pre-processing techniques like de-biasing or reweighting data can improve model transparency before deploying XAI tools.
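
One well-known form of reweighting assigns each training example the weight P(group) x P(label) / P(group, label), so that group membership and outcome become statistically independent in the weighted data. The sketch below assumes that scheme; the function name `reweigh` and the toy data are illustrative only.

```python
from collections import Counter


def reweigh(groups, labels):
    """Per-example weights that decorrelate group membership from the label."""
    n = len(labels)
    g_count = Counter(groups)
    y_count = Counter(labels)
    gy_count = Counter(zip(groups, labels))
    return [
        (g_count[g] * y_count[y]) / (n * gy_count[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical history: group "a" was approved far more often than group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweigh(groups, labels)


def weighted_positive_rate(group):
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


print(weighted_positive_rate("a"), weighted_positive_rate("b"))
```

After reweighting, both groups show the same weighted approval rate, so a model trained with these sample weights no longer sees group membership as predictive of the outcome in the training data.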

Test Multiple Tools for Context

Different tasks require different XAI approaches. Experiment with LIME, SHAP, and saliency maps to identify what works best for your model and audience.

- For predictive insights: Use SHAP for global and local explainability.

- For visual data: Integrate saliency maps to highlight influential regions in images.

Focus on User-Centric Explanations

Developers should tailor explanations to their audience. For example:

- Non-technical users: Simplify explanations using counterfactuals or visual tools.

- Data scientists: Provide detailed technical insights like Shapley values for deeper analysis.

Always prioritize clarity over complexity to ensure end-users trust and understand the outputs.

Integrate Explainability into Development Pipelines

Use platforms like InterpretML, Alibi Explain, or H2O.ai to embed explainability in your development workflow. These platforms automate aspects of XAI, ensuring consistent results across projects.

- Alibi Explain: Offers prebuilt tools for feature importance and counterfactual analysis, streamlining deployment.

- H2O.ai: Combines model building with built-in XAI features for end-to-end solutions.

Challenges in Industry Adoption

Balancing Trade-Offs

Developers often face a trade-off between model accuracy and explainability. Complex models like deep neural networks may resist simplification, requiring innovative hybrid approaches.

Scaling Explainability

Large organizations need explainability tools that work across multiple datasets and use cases. Deploying tools like SHAP at scale requires optimized infrastructure and robust computing power.

Managing Regulatory Requirements

AI explainability must align with evolving global standards, such as the EU’s AI Act or the proposed U.S. Algorithmic Accountability Act. Developers should stay informed about regulatory updates to ensure compliance.

Actionable Steps for Implementing Explainable AI

1. Start with Transparency in Mind

Begin every AI project by defining explainability goals. Ask:

- Who needs to understand the system?

- What level of detail is appropriate for the audience?

Incorporate XAI tools like LIME or SHAP early in the development cycle to ensure transparency from the outset.

2. Prioritize Ethical Data Practices

The foundation of explainable AI lies in unbiased, high-quality data.

- Regularly audit datasets to remove biases.

- Include diverse perspectives during data collection and pre-processing.

- Monitor real-world performance to detect emerging biases post-deployment.

3. Choose Tools Aligned with Your Needs

Select XAI tools based on your model type and application:

- Use SHAP or LIME for feature importance in tabular data.

- Apply counterfactual explanations for actionable insights in user-facing systems.

- Leverage saliency maps for visual data or attention mechanisms for text-based models.

Combine tools when necessary to address different layers of explainability.

4. Tailor Explanations for Your Audience

Adapt the depth and complexity of explanations to your stakeholders:

- For end-users: Provide simple, relatable justifications (e.g., “You were denied a loan due to insufficient income”).

- For regulators: Offer detailed, technical insights with supporting documentation.

- For internal teams: Share comprehensive model evaluations to improve system performance and accountability.
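
The same set of feature attributions can be rendered differently for each audience. The sketch below assumes attributions have already been computed by some XAI tool; the `explain` function, the audience labels, and the numbers are hypothetical.

```python
def explain(attributions, decision, audience):
    """Render one set of feature attributions at the level of detail an audience needs."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "end_user":
        top_feature, _ = ranked[0]
        return f"Your application was {decision} mainly because of your {top_feature}."
    if audience == "regulator":
        lines = [f"{name}: {value:+.2f}" for name, value in ranked]
        return f"Decision: {decision}\n" + "\n".join(lines)
    # Internal teams get the full structured record for further analysis.
    return {"decision": decision, "ranked_attributions": ranked}


# Hypothetical attribution scores for one loan decision.
attributions = {"income": -0.35, "credit history": -0.10, "debt ratio": 0.05}
print(explain(attributions, "denied", "end_user"))
print(explain(attributions, "denied", "regulator"))
```

Keeping one source of truth and several renderings helps ensure that the simple message shown to a customer never contradicts the detailed record shown to a regulator.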

5. Integrate Explainability Into Your Workflow

Build XAI into your development and deployment pipelines using platforms like:

- InterpretML for versatile model interpretability.

- H2O.ai for end-to-end machine learning with embedded XAI features.

- Alibi Explain for advanced explainability options, including counterfactual analysis.

Regularly update and validate these systems to keep up with evolving models and regulations.

6. Monitor and Iterate Post-Deployment

Explainability doesn’t end with deployment. Continuously assess how well your system provides clarity by:

- Gathering feedback from end-users on the usefulness of explanations.

- Auditing system outputs for consistency and fairness.

- Refining models to align better with user expectations and regulatory requirements.
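
A post-deployment fairness audit can start with something as simple as comparing positive-decision rates across groups. The sketch below computes that gap (often called the demographic parity difference); the function names and the logged decisions are assumptions for illustration, and a real audit would look at several metrics, not just this one.

```python
def selection_rates(groups, decisions):
    """Share of positive decisions within each group."""
    totals, positives = {}, {}
    for g, d in zip(groups, decisions):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if d else 0)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(groups, decisions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical log of deployed decisions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
print(selection_rates(groups, decisions), parity_gap(groups, decisions))
```

Tracking this gap over time gives a concrete signal for when outputs have drifted and the model needs another round of review.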

Explainability is no longer optional—it’s essential for building trust, meeting compliance, and ensuring ethical AI use. By implementing these steps, organizations can transition from “black box” systems to accountable, transparent AI solutions that empower users and drive innovation.


FAQs

Are there regulations that mandate explainable AI?

Yes, various frameworks and laws prioritize explainability, including:

- The EU’s AI Act, which emphasizes transparency and accountability.

- The proposed U.S. Algorithmic Accountability Act, which would call for audits of automated systems.

- The GDPR, which gives individuals a right to meaningful information about automated decisions that significantly affect them.

How can organizations implement XAI effectively?

Organizations can follow these steps:

- Use diverse, unbiased datasets.

- Select tools suited to their models and audiences.

- Integrate explainability into development pipelines using platforms like InterpretML or H2O.ai.

- Continuously monitor and refine systems post-deployment for consistency and fairness.

How does explainability differ from interpretability?

Interpretability is the ability to understand a model’s internal workings or logic, typically for simpler models like decision trees. Explainability, on the other hand, focuses on providing insights into complex models’ predictions, even when their internal mechanisms (like neural networks) are difficult to interpret.

Can explainability help detect biases in AI?

Yes, explainability tools like SHAP and counterfactual analysis can highlight biases in model predictions by revealing which features influence outcomes disproportionately. This insight helps developers identify and address systemic biases in their training data or algorithms.

Is explainability relevant for all types of AI models?

Explainability is critical for black-box models, such as deep neural networks and ensemble methods, where internal decision-making is not intuitive. Simpler models, like linear regression or decision trees, are naturally interpretable and may require less additional explanation.

What industries benefit the most from XAI?

Industries with high stakes and regulatory scrutiny benefit greatly, including:

- Healthcare: Explaining diagnostic predictions or treatment recommendations.

- Finance: Ensuring transparency in credit scoring and fraud detection.

- Criminal justice: Preventing biases in predictive policing or sentencing models.

- Retail: Increasing trust in personalized recommendations.

How does XAI improve user trust?

Explainability fosters trust by helping users understand why and how decisions are made. For example, if a customer understands the rationale behind a denied loan application, they’re more likely to trust the system—even if the outcome isn’t in their favor.

What role does human oversight play in explainable AI?

Human oversight ensures that AI operates ethically and aligns with societal values. Explainable AI enables this by providing the transparency needed for humans to validate decisions, question anomalies, and intervene when necessary.

What are “glass box” models?

“Glass box” models are designed to be inherently explainable, offering transparency in their structure and decision-making processes. Examples include linear regression, decision trees, and Explainable Boosting Machines (EBMs), which prioritize clarity alongside predictive performance.

Can explainability address ethical concerns in AI?

Yes, by making decision processes transparent, explainability highlights potential issues like biases, unfair outcomes, or misuse of sensitive data. This allows organizations to correct these concerns proactively, fostering more ethical AI practices.

How does explainability interact with privacy concerns?

Explainability and privacy often need to be balanced. Providing detailed explanations may inadvertently expose sensitive user data. Techniques like privacy-preserving XAI use methods like differential privacy to ensure transparency without compromising individual data security.

What are counterfactual explanations?

Counterfactual explanations show what changes in input data would result in a different outcome. For instance, “If you had a credit score of 750 instead of 680, your loan application would have been approved.” This approach provides actionable insights while enhancing system clarity.

How does explainable AI impact model deployment?

Explainable AI helps during deployment by ensuring that models meet regulatory and ethical standards. It also facilitates smoother adoption by stakeholders, as users and regulators can understand and trust the decisions made by the AI system.

What are saliency maps used for?

Saliency maps are visual tools used to explain predictions in image recognition models. They highlight regions of an image that the model considers most important for its decision. For example, in a model detecting cats, the saliency map might highlight ears or whiskers.

Are there frameworks for developing explainable AI?

Yes, several frameworks are designed to support XAI development:

- InterpretML: A library that includes glass-box models and interpretability techniques like SHAP and LIME.

- AI Explainability 360: Developed by IBM, this toolkit provides tools for interpretable and fair AI.

- Google’s What-If Tool: Helps users explore machine learning models interactively, analyzing feature importance and fairness.

Can explainable AI prevent AI misuse?

Explainable AI can reduce misuse by identifying problematic decisions or behaviors, such as discriminatory predictions. By exposing how a model reaches conclusions, organizations can detect and address intentional or unintentional misuse.

What are attention mechanisms in explainability?

Attention mechanisms are used in natural language processing (NLP) models, like transformers, to highlight which words or phrases had the most influence on the model’s prediction. This technique is particularly valuable in tasks like sentiment analysis or language translation.

Is explainable AI only for regulated industries?

While explainable AI is essential in regulated industries like healthcare and finance, it benefits all fields. Transparent systems foster trust and improve adoption across sectors, including retail, entertainment, and education.

What is model-agnostic explainability?

Model-agnostic methods, such as LIME and SHAP, are not tied to a specific type of AI model. They work with any algorithm to provide explanations, making them versatile tools for organizations working with diverse models.

How does XAI address the issue of fairness?

XAI helps uncover unfair patterns in model predictions, such as discriminatory outcomes based on race or gender. By analyzing feature importance and bias, developers can refine their models to ensure more equitable results.

What is the role of counterfactual fairness in XAI?

Counterfactual fairness evaluates whether a prediction would remain the same if sensitive attributes (like race or gender) were altered. For instance, an AI hiring system should yield the same recommendation for equally qualified candidates, regardless of demographic differences.
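
A simple first check in this spirit is a flip test: change only the sensitive attribute and see whether the prediction moves. This is a simplification, since counterfactual fairness in the formal sense relies on a causal model of how the attribute influences other features. The `flip_test` helper and the deliberately biased `hire` rule below are hypothetical.

```python
def flip_test(model, records, attribute, values):
    """Return records whose prediction changes when only `attribute` is swapped."""
    flagged = []
    for record in records:
        outcomes = {model(dict(record, **{attribute: v})) for v in values}
        if len(outcomes) > 1:
            flagged.append(record)
    return flagged


# Hypothetical, deliberately biased hiring rule used to show what the test catches.
def hire(candidate):
    if candidate["experience"] >= 5:
        return True
    return candidate["experience"] >= 3 and candidate["gender"] == "m"


candidates = [
    {"experience": 6, "gender": "f"},
    {"experience": 4, "gender": "f"},
    {"experience": 1, "gender": "m"},
]
flagged = flip_test(hire, candidates, "gender", ["f", "m"])
print(flagged)
```

Only the mid-experience candidate is flagged, because that is the one case where the rule's outcome depends on gender alone.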

How do explainability tools handle time-sensitive applications?

In real-time systems, such as fraud detection or autonomous driving, lightweight XAI methods are essential. Techniques like rule-based systems or hybrid models prioritize faster explanations while maintaining sufficient detail for accountability.

Resources

Research Papers and Articles

- “Why Should I Trust You?” Explaining the Predictions of Any Classifier (Ribeiro et al.)

This seminal paper introduces LIME and explains its use in making complex models more interpretable.

- A Unified Approach to Interpreting Model Predictions (SHAP) (Lundberg and Lee)

This paper lays the foundation for SHAP, discussing its advantages and mathematical principles.

- AI Fairness and Explainability Guidelines by NIST

A government-backed resource outlining best practices for ethical and explainable AI systems.

Open-Source Libraries and Tools

- InterpretML

A Microsoft-developed library that combines explainability methods like LIME, SHAP, and EBMs in one package.

- AI Explainability 360

IBM’s open-source toolkit offers tools for fairness and transparency across multiple AI models.

- SHAP Library

The official SHAP Python package for implementing Shapley Additive Explanations in your projects.

- LIME (Local Interpretable Model-agnostic Explanations)

Provides Python implementations for explaining any machine learning model.