Convolutional Neural Networks (CNNs) have achieved impressive results in tasks such as image recognition and object detection. However, one major drawback is that these neural networks often operate as “black boxes,” making it hard to understand how they reach their conclusions.

This lack of transparency has prompted the development of Explainable CNNs—a new wave of models and techniques that aim to shed light on the inner workings of CNNs.

In this article, we’ll dive into how explainable CNNs work, why they are important, and the methods used to make these neural networks more interpretable.

Why Do CNNs Need Explainability?

The Black Box Problem in CNNs

CNNs are powerful because they can learn intricate patterns from data, but they also lack transparency. The decision-making process in a CNN is hard to interpret, as it involves multiple layers of convolutions and non-linear activations.

This creates a black box problem where we know the input and output but have little understanding of how the network transforms the data in between. In critical applications like healthcare or autonomous driving, the inability to explain why a model made a certain decision can lead to distrust or even potential harm.

Trust and Accountability in AI Systems

For AI to be widely accepted, especially in high-stakes domains, we need to build trust. Explainable CNNs are key to achieving this trust by providing a clearer understanding of how models arrive at their conclusions. This is especially crucial in cases where decisions need to be justified, such as when diagnosing a medical condition or determining creditworthiness.

Accountability is another major reason why we need interpretable CNNs. If a model produces an incorrect output, understanding the model’s decision process can help in refining and improving the system.

Methods for Making CNNs More Interpretable

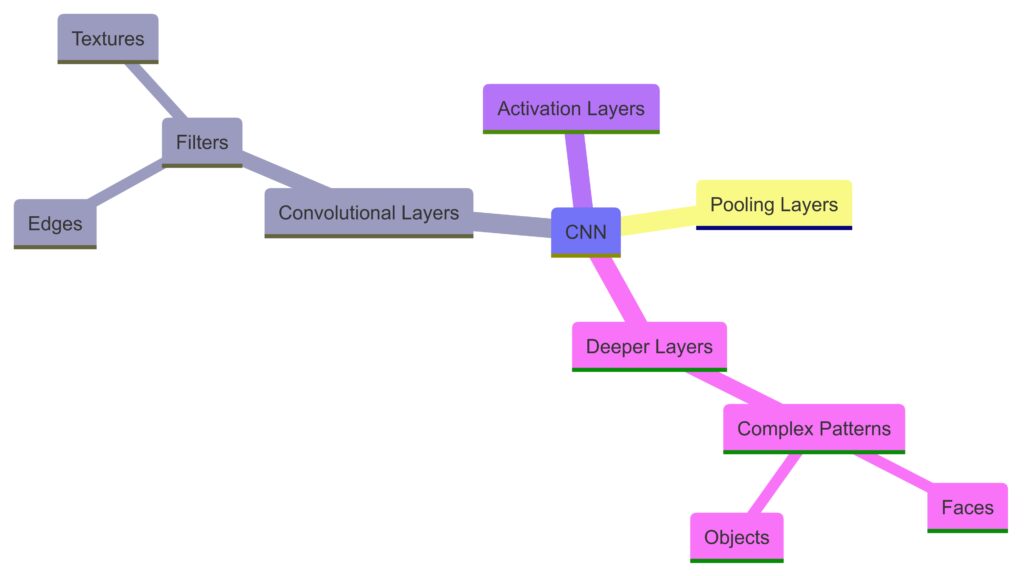

1. Visualization of Filters and Feature Maps

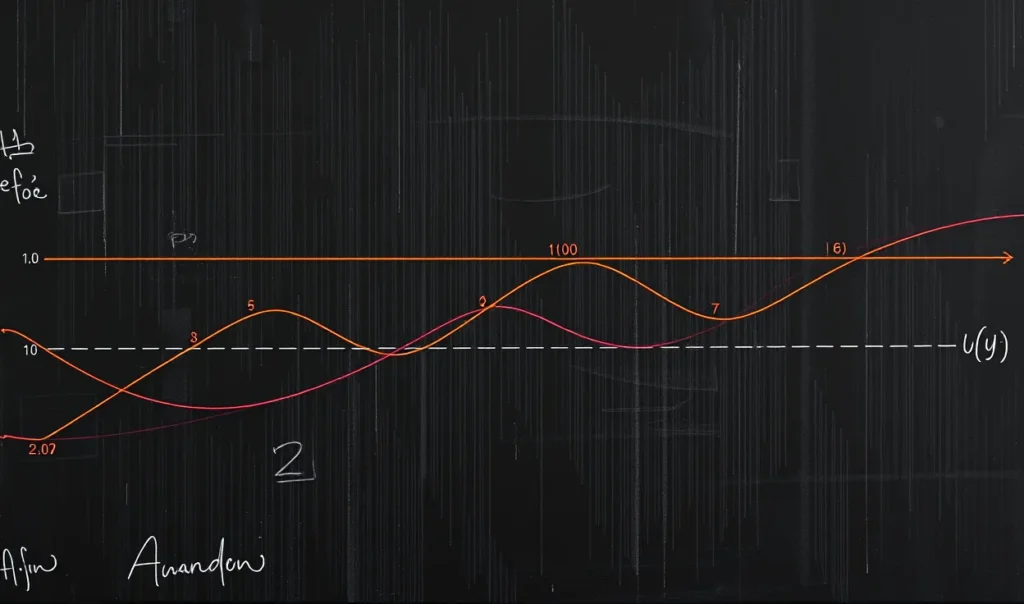

One way to explain CNNs is through the visualization of filters and feature maps. Filters in a CNN learn to recognize specific features in an image, like edges, textures, or even complex patterns like faces.

By visualizing the filters and feature maps from different layers, researchers can get a sense of what the network is focusing on as it processes an image. Lower layers typically detect simple patterns, while higher layers capture more abstract and complex features.
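
To make the relationship between a filter and its feature map concrete, here is a minimal NumPy sketch. It is not taken from a trained network: the 3x3 vertical-edge kernel is written by hand as a stand-in for a learned first-layer filter, the 6x6 image is a toy input, and `conv2d_valid` is a hypothetical helper name.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and stride 1.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter (assumption: stands in for a learned one).
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

# Feature map = filter response followed by ReLU.
feature_map = np.maximum(conv2d_valid(image, vertical_edge), 0.0)
print(feature_map)
```

The feature map is nonzero only in the columns where the window straddles the dark-to-bright boundary, which is what "this filter detects vertical edges" means in practice. Visualizing a real network works the same way, except that the kernels and feature maps are read out of the trained model rather than written by hand.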

2. Saliency Maps and Heatmaps

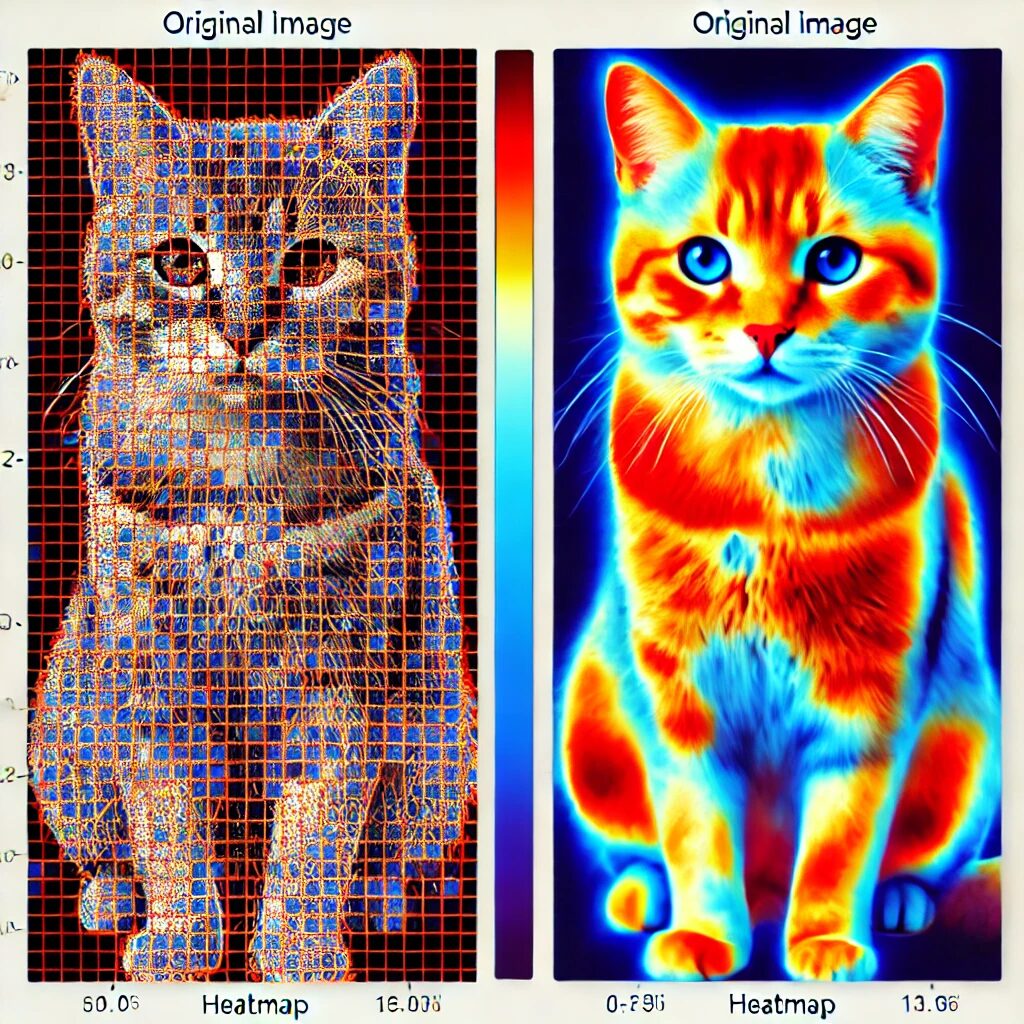

Saliency maps and heatmaps are common tools used to highlight which parts of the input image contribute the most to the CNN’s decision. These maps show which pixels had the highest impact on the model’s output, making it easier to understand what the network is focusing on.

This is particularly useful when a prediction needs to be checked against the image region that should justify it. For instance, in medical imaging, heatmaps can show whether the network is focusing on an abnormal region in a lung scan when diagnosing a disease.

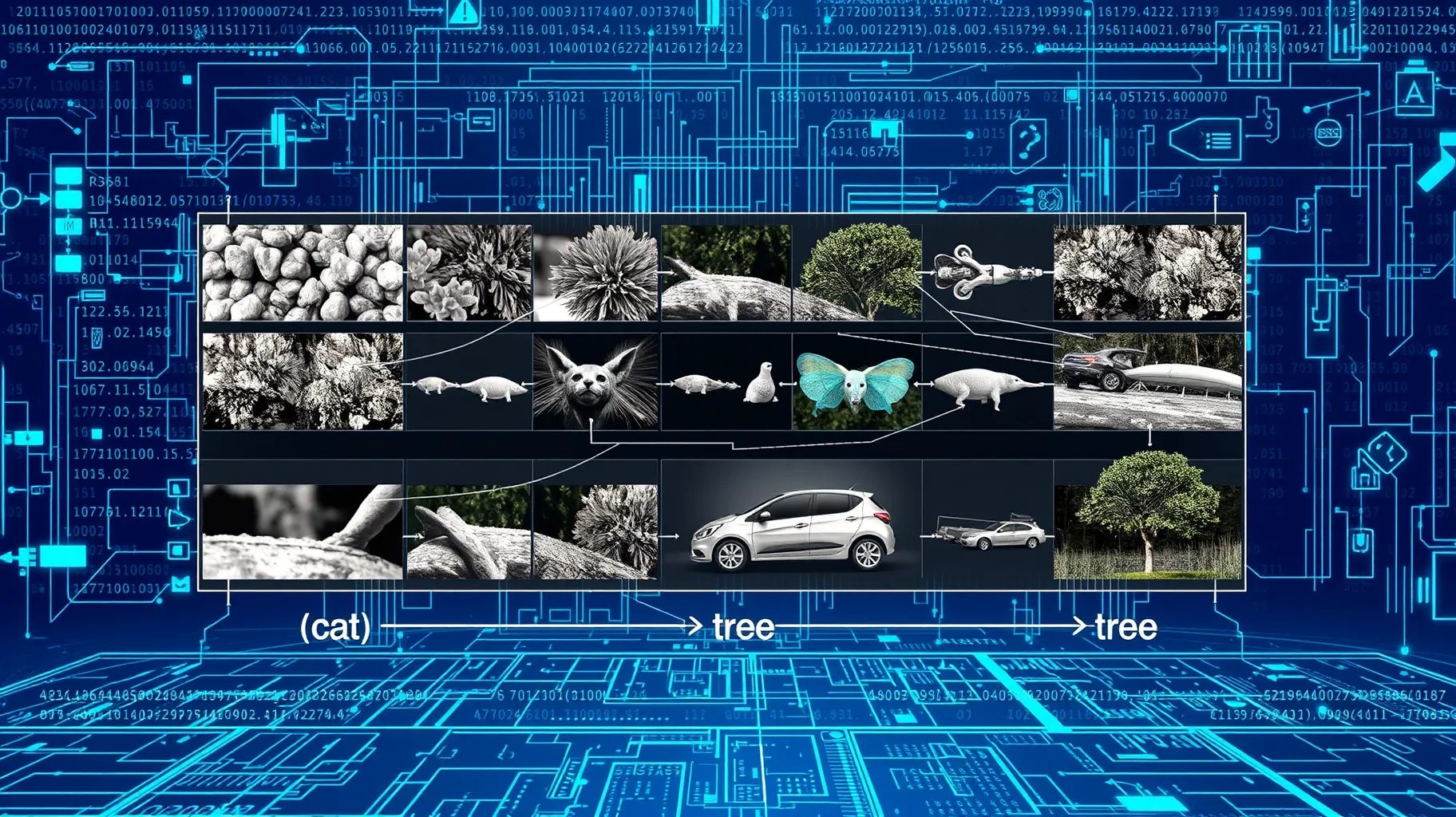

Saliency maps show which parts of an image influence a CNN’s classification decision.
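
A common way to build a saliency map is to take the absolute gradient of the model’s score with respect to each input pixel. The sketch below shows that idea on a deliberately tiny stand-in model with random weights (an assumption for illustration, not a trained CNN), with the gradient derived by hand. In practice the gradient would come from a framework’s automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Tiny stand-in "network" on a flattened 4x4 image (assumption: random
# weights, not a trained CNN): score = w2 . relu(W1 @ x + b1)
W1 = rng.normal(size=(8, 16))
b1 = rng.normal(size=8)
w2 = rng.normal(size=8)

def score(x):
    """Scalar output of the stand-in model for one flattened image."""
    return float(w2 @ np.maximum(W1 @ x + b1, 0.0))

def saliency(x):
    """Absolute gradient of the score with respect to each input pixel."""
    pre = W1 @ x + b1
    relu_mask = (pre > 0).astype(float)   # derivative of ReLU
    grad = W1.T @ (w2 * relu_mask)        # chain rule back to the input
    return np.abs(grad)

x = rng.normal(size=16)                   # toy input "image"
saliency_map = saliency(x).reshape(4, 4)
print(saliency_map.round(2))
```

Pixels with larger values are those where a small change would move the score the most. The map says nothing about the direction of that change, since the sign is discarded.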

3. Class Activation Maps (CAM)

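Class Activation Maps (CAMs) take saliency maps a step further. Instead of scoring individual pixels, a CAM weights the final convolutional feature maps by the classifier weights for one category, giving a spatial view of which parts of an image influence the model’s classification for that category. The original formulation requires the network to end in global average pooling followed by a single linear layer.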

For example, if a CNN classifies an image as a cat, the CAM will highlight the areas of the image that contributed most to this classification, like the shape of the ears or the texture of the fur. This helps in validating that the model is focusing on the right features for making decisions.

Class Activation Maps highlight regions of the image that strongly influence model classification.

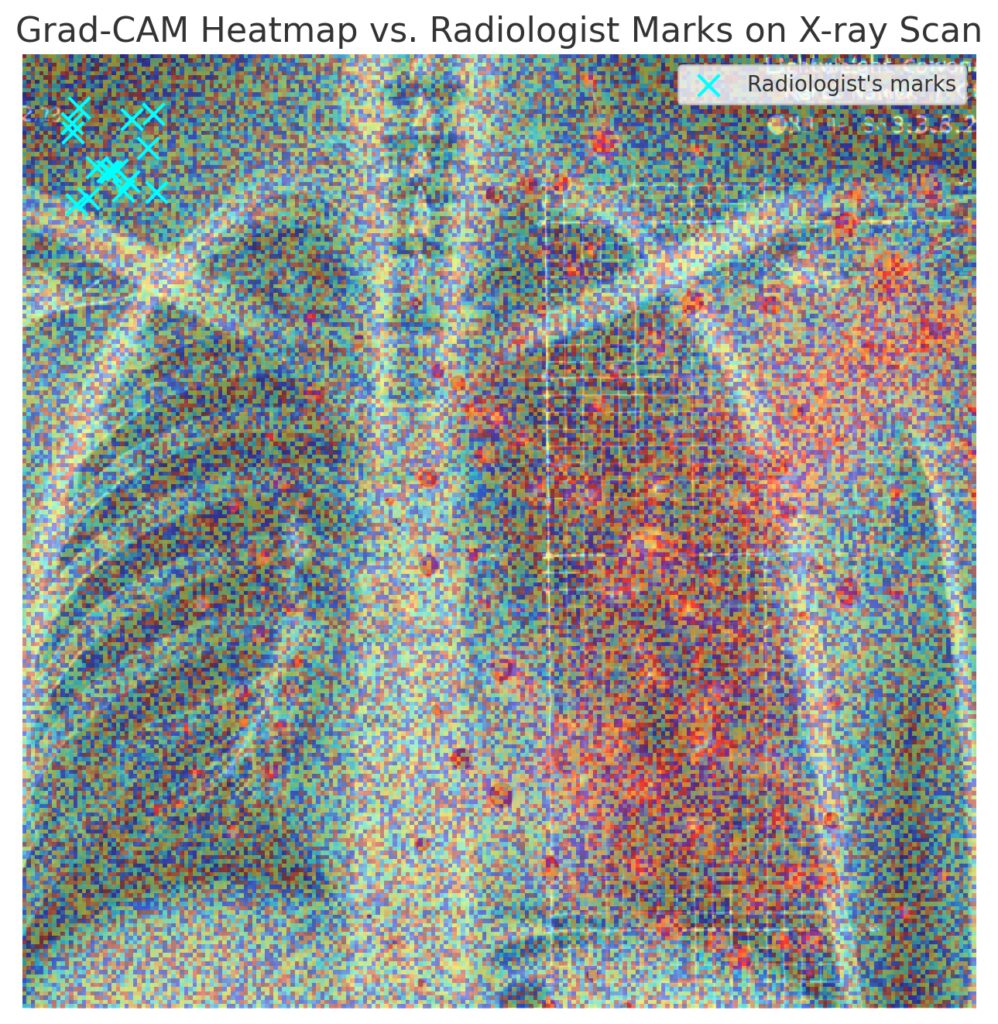
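
As a rough sketch of the computation, a CAM for one class is a weighted sum of the last convolutional layer’s feature maps, using that class’s weights from the final linear layer. The code below assumes a network that ends in global average pooling followed by a linear classifier. The shapes, random values, and the helper name `class_activation_map` are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed shapes: K=4 feature maps of size 5x5 from the last conv layer,
# and a linear classifier over C=3 classes applied after global average pooling.
feature_maps = rng.random(size=(4, 5, 5))   # (K, H, W), non-negative like post-ReLU
class_weights = rng.normal(size=(3, 4))     # (C, K)

def class_activation_map(feature_maps, class_weights, class_idx):
    """Weighted sum of feature maps using the classifier weights of one class."""
    return np.tensordot(class_weights[class_idx], feature_maps, axes=1)  # (H, W)

# Class scores of the GAP + linear head (bias omitted for simplicity).
pooled = feature_maps.mean(axis=(1, 2))     # (K,)
scores = class_weights @ pooled             # (C,)

predicted = int(np.argmax(scores))
cam = class_activation_map(feature_maps, class_weights, predicted)
print(cam.shape, np.isclose(cam.mean(), scores[predicted]))
```

The final check illustrates why the map is meaningful for this architecture: averaging the CAM over all positions recovers the class score, so each location’s value is its share of that score.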

4. Grad-CAM: Gradient-based Explanations

Grad-CAM is a more general technique that builds on CAM. It uses the gradients of a class score, flowing back to a convolutional layer, to weight that layer’s feature maps and generate an activation map. This makes it more flexible, as it can be applied to a broader range of CNN architectures without modifying or retraining them, including those that do not end in global average pooling.

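Grad-CAM++ is an extension that weights the gradient at each spatial position individually instead of averaging all positions equally. This tends to give better localization, particularly when several instances of the same object appear in one image, making it easier to interpret how different parts of the image contribute to the model’s decision.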

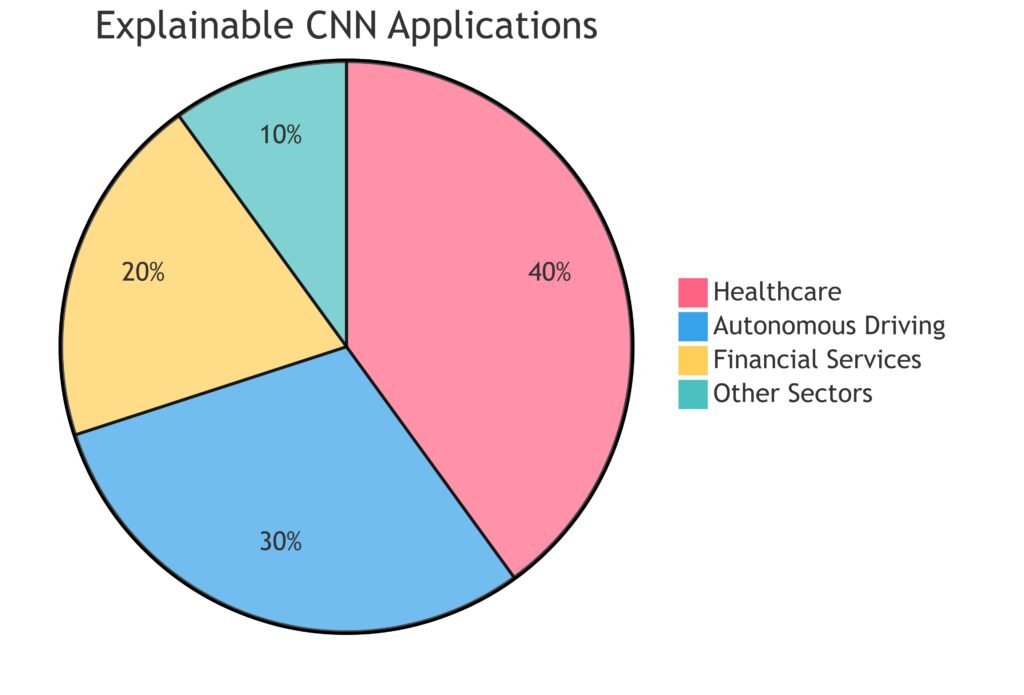
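
The core Grad-CAM computation can be sketched in a few lines of NumPy: average the gradients over each feature map to get one weight per channel, take the weighted sum of the feature maps, and apply a ReLU. The example below assumes the activations and gradients are already available as arrays. To keep it self-contained, the gradients are written out analytically for a global-average-pooling plus linear head with random weights, whereas a real network would obtain them from backpropagation.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one conv layer.

    activations: (K, H, W) feature maps of the chosen layer.
    gradients:   (K, H, W) gradient of the class score w.r.t. those maps.
    """
    alphas = gradients.mean(axis=(1, 2))                 # one weight per channel
    heatmap = np.tensordot(alphas, activations, axes=1)  # weighted sum over channels
    return np.maximum(heatmap, 0.0)                      # keep positive evidence only

rng = np.random.default_rng(0)
K, H, W = 4, 5, 5
activations = rng.random(size=(K, H, W))
class_w = rng.normal(size=K)   # weights of one class in a GAP + linear head

# For score = sum_k class_w[k] * mean(A_k), the gradient w.r.t. every
# position of A_k is class_w[k] / (H * W). This is an illustrative
# shortcut; a real network would compute it by backpropagation.
gradients = np.broadcast_to((class_w / (H * W))[:, None, None], (K, H, W))

heatmap = grad_cam(activations, gradients)
heatmap = heatmap / (heatmap.max() + 1e-8)   # scale to [0, 1] for display
print(heatmap.round(2))
```

For this particular head, the result is a rescaled, ReLU-clipped version of the CAM, which is the sense in which Grad-CAM generalizes CAM. The heatmap has the spatial size of the chosen layer, so it is usually upsampled to the input resolution before being overlaid on the image.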

Applications of Explainable CNNs

Healthcare and Medical Imaging

One of the most promising areas for explainable CNNs is in healthcare. CNNs are increasingly used to assist doctors in diagnosing diseases from medical images such as X-rays, MRIs, and CT scans. However, for these models to be trusted, doctors need to understand why the model is making a specific diagnosis.

Explainable CNNs help doctors visualize which areas of an image are most relevant to the diagnosis, ensuring the model is focusing on the right regions and not irrelevant factors. This not only builds trust but also provides additional insights into the disease itself.

Grad-CAM reveals critical regions in medical images, helping to interpret CNN-based diagnoses.

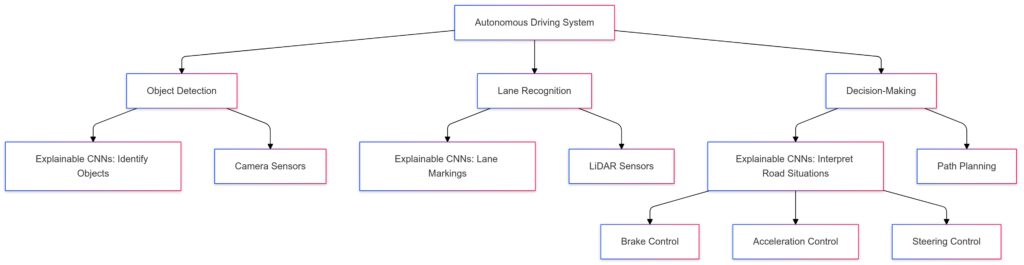
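
One simple way to check whether a heatmap is "focusing on the right regions" is to measure how much of its mass falls inside a region marked by an expert. The sketch below is only an illustration of that idea: the function name `fraction_inside_region`, the toy heatmap, and the mask are all made up, and real evaluations may use other measures.

```python
import numpy as np

def fraction_inside_region(heatmap, region_mask):
    """Share of total (non-negative) heatmap mass that falls inside the mask."""
    heatmap = np.clip(heatmap, 0.0, None)
    total = heatmap.sum()
    if total == 0:
        return 0.0
    return float(heatmap[region_mask].sum() / total)

# Toy 4x4 heatmap and a mask marking the top-left 2x2 block as the
# annotated region (both made up for illustration).
heatmap = np.array([[0.9, 0.8, 0.0, 0.0],
                    [0.7, 0.6, 0.0, 0.0],
                    [0.0, 0.0, 0.1, 0.0],
                    [0.0, 0.0, 0.0, 0.9]])
region_mask = np.zeros((4, 4), dtype=bool)
region_mask[:2, :2] = True

print(round(fraction_inside_region(heatmap, region_mask), 2))  # 0.75
```

A low value would suggest the model is relying on parts of the image outside the annotated region, such as the bright bottom-right corner in this toy example, which is worth investigating before the prediction is trusted.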

Autonomous Vehicles

In autonomous driving, CNNs are used for object detection, lane recognition, and decision-making. However, for self-driving cars to be fully trusted on the road, it’s crucial to understand their decision-making process.

Explainable CNNs can show which aspects of the environment—such as pedestrians, vehicles, or traffic signs—the model is focusing on, offering more transparency in how the car makes driving decisions.

Financial Services

In financial services, CNNs are used to analyze patterns in data for credit scoring, fraud detection, and risk assessment. An explainable CNN in this domain can provide clarity on how certain patterns in the data lead to a particular decision, making the system more trustworthy to regulators and consumers.

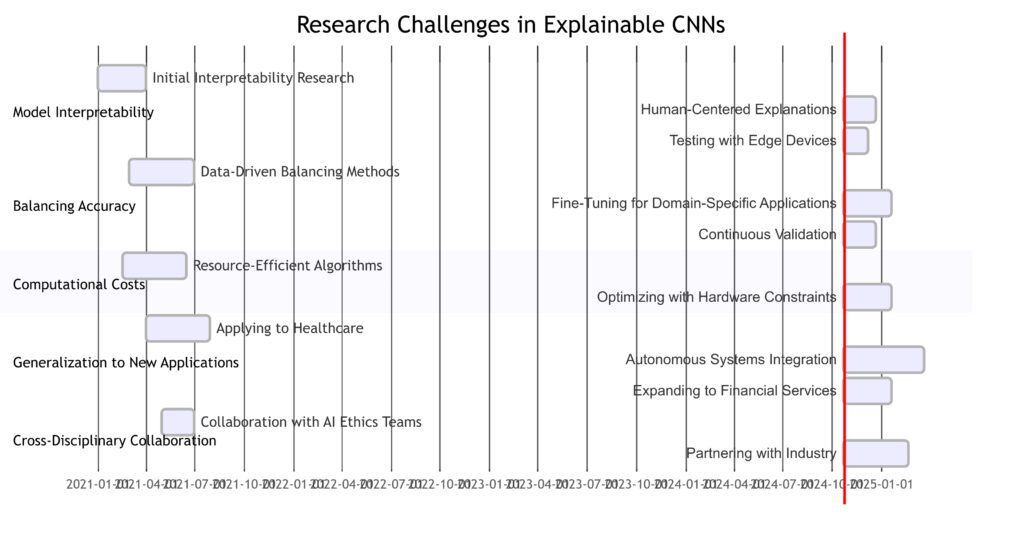

Challenges and Limitations of Explainable CNNs

Trade-offs Between Accuracy and Interpretability

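A common challenge with explainability in CNNs is the trade-off between accuracy and interpretability. Making a model more interpretable often involves simplifying the architecture or adding constraints, which can reduce the model’s overall accuracy. Post-hoc methods such as saliency maps and Grad-CAM leave the trained model unchanged, so this trade-off mainly concerns architectures designed to be interpretable from the start. Striking a balance between the two is still a work in progress.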

Complexity in Real-World Applications

While explainable CNNs are a step forward, they can still struggle with the complexity of real-world applications. Some explanations, especially from techniques like Grad-CAM, may not always be intuitive or easy to interpret for non-experts. Furthermore, in cases involving large datasets, the computational cost of generating explanations can be a significant limitation.

The Future of Explainable CNNs

As AI systems become more integrated into our daily lives, the need for explainable and transparent CNNs will only grow. Ongoing research aims to create models that are both powerful and interpretable, without sacrificing one for the other.

New techniques are being developed to make CNNs more explainable, ranging from better visualization tools to simpler, inherently interpretable architectures. The goal is to make AI systems that are not only accurate but also understandable, accountable, and trustworthy.

FAQs

What are explainable CNNs?

Explainable CNNs refer to convolutional neural networks that have been modified or paired with techniques to make their decision-making process more transparent. These models allow users to understand how and why certain predictions are made, offering insight into the internal workings of the network.

Why is explainability important for CNNs?

Explainability is crucial because it builds trust in AI systems. In fields like healthcare and finance, understanding why a model made a specific decision is essential for ensuring safety, fairness, and accountability. It also helps improve model reliability by identifying errors or biases.

How do CNNs typically operate as “black boxes”?

CNNs are often referred to as black boxes because their complex architectures make it difficult to understand how they transform input data into predictions. With multiple layers of convolutions, activations, and pooling, the decision-making process becomes obscure, leaving users unsure about how the model reached its conclusion.

What are some common methods for explaining CNNs?

Some widely used techniques for explaining CNNs include visualizing filters and feature maps, saliency maps, class activation maps (CAM), and Grad-CAM. These methods help identify which parts of an image or input data are influencing the network’s predictions, offering greater transparency.

What is the difference between saliency maps and Grad-CAM?

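Saliency maps typically use the gradient of the model’s output with respect to each input pixel, so they highlight the individual pixels that most influence the prediction. Grad-CAM instead uses gradients with respect to a convolutional layer’s feature maps to produce class-specific activation maps, showing which regions are most important for a particular prediction. Grad-CAM maps are coarser but usually easier to relate to objects in the image, whereas pixel-level saliency maps are finer but often noisier.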

Can explainable CNNs improve model performance?

While explainability primarily aims at increasing transparency, it can indirectly improve performance by identifying areas where the model may be making incorrect or biased decisions. Through better understanding, developers can fine-tune and adjust models to improve their accuracy.

Where are explainable CNNs commonly applied?

Explainable CNNs are widely applied in fields like healthcare, where they assist in interpreting medical images; autonomous driving, where understanding object detection and decision-making is critical; and financial services, where model transparency is essential for regulatory compliance and customer trust.

Are there any trade-offs between explainability and performance?

Yes, there can be trade-offs. Models that are designed to be more interpretable may be simpler in architecture, which could slightly reduce their overall accuracy. However, finding a balance between performance and interpretability is a key area of ongoing research.

What challenges remain in developing explainable CNNs?

Challenges include the complexity of generating human-understandable explanations, especially in real-world scenarios with vast amounts of data. There is also a need to make models interpretable without sacrificing too much accuracy. Furthermore, many explanation methods are still too complex for non-experts to grasp easily.

Can explainable CNNs detect biases in models?

Yes, explainable CNNs can help detect biases by revealing which features or patterns the model is focusing on. For example, if a model for loan approval is found to prioritize irrelevant features like race or gender, this bias can be identified and corrected by analyzing the model’s behavior using explainability techniques.

How does visualization of filters make CNNs more interpretable?

Visualizing filters helps in understanding what features the network is learning at different stages. Filters in early layers detect simple patterns like edges or textures, while deeper layers capture more complex features. By examining these filters, researchers can infer how the network processes images, which brings clarity to its decision-making process.

What is the role of Class Activation Maps (CAM) in explainability?

Class Activation Maps (CAM) play a critical role in explaining which regions of an image are contributing most to the final classification decision. CAMs help users verify whether the CNN is focusing on the correct features, like highlighting a dog’s ears when identifying a dog. This provides a spatial understanding of the network’s focus areas.

Can explainable CNNs be applied outside image data?

While CNNs are primarily used for image data, explainability techniques can be adapted to other domains. For instance, CNNs can be applied to text or time-series data, and explainability methods can be used to highlight important sequences, words, or patterns in the data that influence predictions.

What are some limitations of using explainable CNNs?

A key limitation of explainable CNNs is the complexity of generating understandable explanations, especially for large, high-dimensional datasets. Also, some explainability methods may produce results that are still too abstract for non-experts to interpret effectively. Moreover, explainability techniques can sometimes be computationally expensive, making them harder to implement in real-time applications.

How does Grad-CAM++ improve upon Grad-CAM?

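Grad-CAM++ enhances Grad-CAM by weighting the gradient at each spatial position separately instead of averaging all positions equally. While Grad-CAM’s maps can be coarse and may cover only part of an object, Grad-CAM++ tends to cover objects more completely and handles multiple instances of the same class better. Both methods produce maps at the resolution of the chosen convolutional layer, which are then upsampled to the input size. The improved localization makes Grad-CAM++ more useful in scenarios requiring high precision, such as medical diagnosis.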

Can explainable CNNs be integrated with other AI models?

Yes, explainable CNNs can be integrated with other AI models such as decision trees or attention-based networks to improve interpretability. Hybrid models, which combine the strengths of CNNs and interpretable architectures, can offer both high performance and transparency.

What role do explainable CNNs play in regulatory compliance?

In regulated industries like finance and healthcare, explainable CNNs are crucial for meeting compliance standards. For instance, in credit scoring or insurance, models need to justify their decisions to ensure they are fair and non-discriminatory. Explainable CNNs help organizations provide clear reasons for their AI-driven decisions, aligning with legal and ethical requirements.

How do explainable CNNs contribute to ethical AI development?

Explainable CNNs contribute to ethical AI by promoting transparency, accountability, and fairness. They allow developers and users to understand how a model makes decisions, making it easier to detect biases, ensure fairness, and avoid harm in sensitive applications. This contributes to building more responsible and trustworthy AI systems.

Are there alternatives to explainable CNNs for model interpretability?

Yes, other interpretable models, such as decision trees, rule-based systems, or linear models, offer inherent interpretability. However, these models might not match the accuracy of CNNs in complex tasks like image recognition. Explainable CNNs aim to bridge this gap by combining the high performance of deep learning with enhanced interpretability.