How you build an AI model matters as much as what you build with it. Should you fine-tune a pre-trained model or train one from scratch? Each path has distinct benefits and costs. In this guide, we’ll break down the key factors to help you make the best decision for your AI project.

Understanding AI Training Approaches

What is Fine-Tuning?

Fine-tuning involves taking an existing pre-trained model and adapting it to your specific needs.

- These models are often pre-trained on massive datasets (like GPT or BERT).

- You only adjust the model for a particular task or domain.

This method typically requires less data and computational power than training from scratch, which makes it the more efficient choice for most projects.

What is Training from Scratch?

Training from scratch means building a model from the ground up.

- You start with a blank slate (untrained model).

- All parameters are learned solely from your dataset.

While this method offers complete customization, it demands extensive resources, including large datasets and high-performance hardware.
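To make the contrast concrete, here is a toy sketch in plain Python. It fits a one-variable linear model with gradient descent twice: once from zeroed weights (standing in for training from scratch) and once from weights that are already close to the target (standing in for a pre-trained model). The data, the starting weights, and the step count are all made-up assumptions for illustration; real models and datasets behave far less neatly.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_descent(w, b, data, steps, lr=0.1):
    """Run plain batch gradient descent on the MSE loss."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy target task (assumption): y = 3x + 1 sampled on x in [-1, 1].
data = [(i / 4, 3.0 * (i / 4) + 1.0) for i in range(-4, 5)]

# "From scratch": start with no prior knowledge.
scratch = gradient_descent(0.0, 0.0, data, steps=10)

# "Fine-tuning": start from weights learned on a related, hypothetical task.
finetuned = gradient_descent(2.8, 0.9, data, steps=10)

scratch_loss = mse(*scratch, data)
finetuned_loss = mse(*finetuned, data)
print(f"scratch loss after 10 steps:    {scratch_loss:.4f}")
print(f"fine-tuned loss after 10 steps: {finetuned_loss:.4f}")
```

With the same ten update steps, the warm-started run ends at a much lower loss. That is the intuition behind fine-tuning needing less data and compute, provided the starting weights really are relevant to the new task.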

Key Considerations for Fine-Tuning

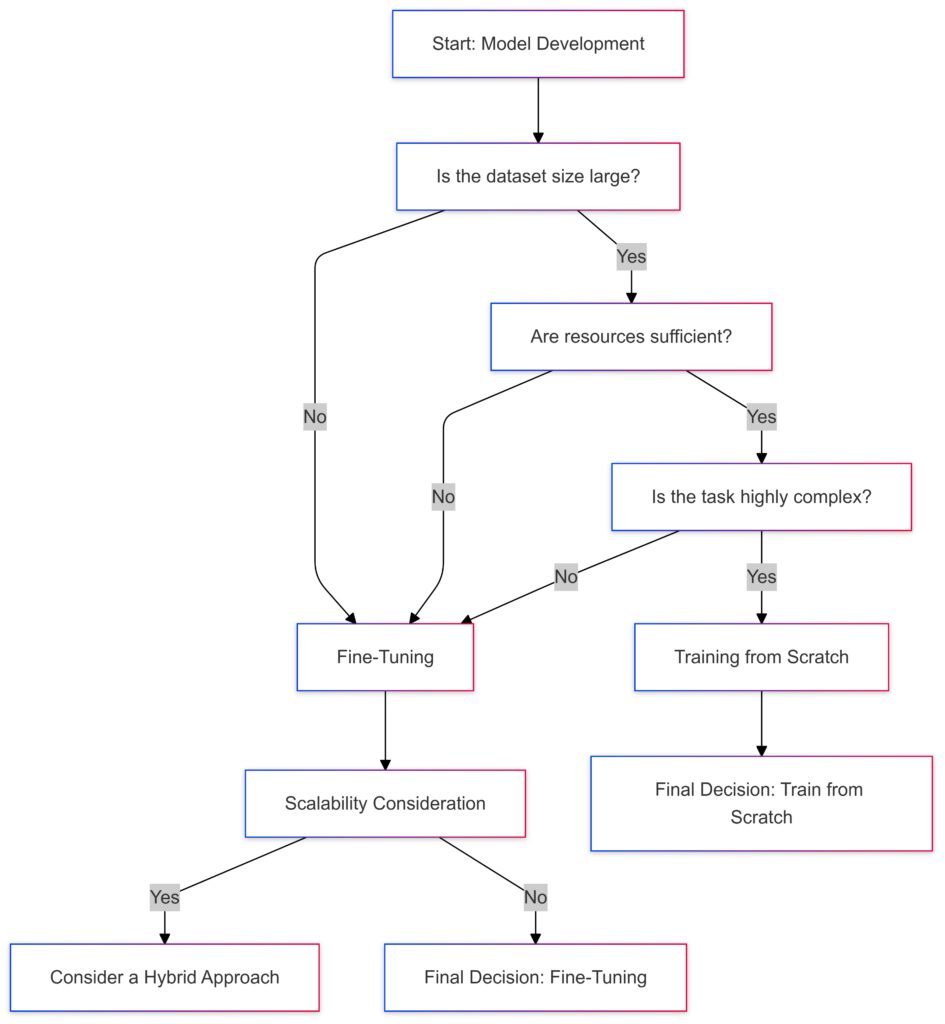

The decision flow shown in the figure:

- Start: The decision begins at model development.

- Dataset Size: If large, assess resources. If small, opt for fine-tuning.

- Resources: If sufficient, consider task complexity. If limited, prioritize fine-tuning.

- Task Complexity: Highly complex tasks benefit from training from scratch. Simpler tasks favor fine-tuning.

- Scalability: For scalable solutions, consider hybrid approaches.

Final Decisions:

- Fine-Tuning: Best for smaller datasets, limited resources, or simpler tasks.

- Training from Scratch: Optimal for large datasets, ample resources, and complex tasks.
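The decision flow above can be written as a small function. This is only a sketch of the flow as described: the function name, its parameters, and the one-million-example threshold for a "large" dataset are assumptions chosen for illustration, not fixed rules.

```python
def recommend_approach(num_examples, has_ample_resources, task_is_highly_complex,
                       large_dataset_threshold=1_000_000):
    """Walk the decision flow: dataset size, then resources, then complexity."""
    # Small dataset: fine-tuning, regardless of the other factors.
    if num_examples < large_dataset_threshold:
        return "fine-tuning"
    # Large dataset but limited resources: still fine-tuning.
    if not has_ample_resources:
        return "fine-tuning"
    # Large dataset and ample resources: task complexity decides.
    if task_is_highly_complex:
        return "training from scratch"
    return "fine-tuning"


print(recommend_approach(5_000, True, True))
print(recommend_approach(5_000_000, False, True))
print(recommend_approach(5_000_000, True, True))
```

Only the combination of a large dataset, ample resources, and a highly complex task leads to training from scratch; every other branch falls back to fine-tuning.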

Resource Efficiency

Fine-tuning is ideal when resources are limited.

- Why? Pre-trained models have already done the heavy lifting by learning general features.

- You can focus on specific tweaks, saving time and money.

Data Requirements

Fine-tuning typically needs smaller datasets than training from scratch.

- A dataset of a few thousand labeled samples is often enough to get useful results, depending on the task.

- This is particularly beneficial for niche industries with limited data availability.

Domain-Specific Adaptability

Pre-trained models can adapt to specific industries or applications.

- For instance, adapting a general language model for medical diagnostics or legal document analysis.

With fine-tuning, you gain domain relevance without losing general knowledge.

The Case for Training from Scratch

Maximum Control Over Architecture

Building from scratch gives you full control over the model design.

- Tailor the architecture to fit unique project requirements.

- Ideal for cutting-edge research or custom use cases with no pre-trained alternatives.

Handling Specialized Tasks

If your task is vastly different from mainstream AI applications, pre-trained models might not cut it.

- Example: Training a model for genomic data analysis may require scratch training, as there are fewer pre-trained options.

Scalability and Experimentation

Training from scratch allows for unlimited experimentation with:

- Model size.

- Layers and structures.

- Optimization algorithms.

This flexibility is key for projects pushing AI boundaries.

Evaluating Costs in AI Development

Hardware Demands

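Fine-tuning a small or mid-sized model often runs on a single GPU, and very small models can even be tuned on a CPU. Larger pre-trained models need correspondingly more memory.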

- Training from scratch? Be ready for multi-GPU setups or cloud infrastructure.

Time Commitment

Training a model from scratch can take weeks or months. Fine-tuning, on the other hand, can be done in hours to days, depending on the model and dataset size.

Financial Considerations

- Fine-tuning saves money on computational resources.

- Training from scratch is costlier, especially when factoring in team expertise and infrastructure.
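A back-of-the-envelope calculation makes the gap tangible. The GPU counts, durations, and hourly price below are hypothetical placeholders, not quotes from any provider; substitute your own figures.

```python
def compute_cost(num_gpus, hours, price_per_gpu_hour):
    """Estimated compute spend: GPUs x wall-clock hours x hourly price."""
    return num_gpus * hours * price_per_gpu_hour


PRICE = 2.0  # hypothetical dollars per GPU-hour

# Hypothetical fine-tuning run: one GPU for eight hours.
fine_tuning = compute_cost(num_gpus=1, hours=8, price_per_gpu_hour=PRICE)

# Hypothetical from-scratch run: 64 GPUs for three weeks.
from_scratch = compute_cost(num_gpus=64, hours=21 * 24, price_per_gpu_hour=PRICE)

print(f"fine-tuning:  ${fine_tuning:,.0f}")
print(f"from scratch: ${from_scratch:,.0f}")
print(f"ratio: {from_scratch / fine_tuning:,.0f}x")
```

Compute is only part of the bill. Data collection, engineering time, and failed runs are not included in this estimate.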

Real-World Examples of Fine-Tuning

Chatbots and Virtual Assistants

Fine-tuning is widely used in conversational AI systems.

- Example: OpenAI’s GPT models fine-tuned for specific industries, like healthcare or e-commerce.

- Why? These systems need to answer domain-specific queries without losing conversational fluency.

Fine-tuning allows businesses to personalize pre-trained chatbots with industry-relevant data, enhancing customer satisfaction.

Image Recognition in Niche Fields

Fine-tuning works wonders in specialized fields like medical imaging.

- For example, adapting pre-trained vision models like ResNet for detecting diseases in MRI or CT scans.

- Fine-tuning saves time, especially when collecting large datasets of labeled medical images is challenging.

Sentiment Analysis and Text Classification

Pre-trained language models (like BERT) are fine-tuned for sentiment analysis or spam detection.

- Large content platforms use classifiers of this kind to help filter spam and harmful content.

Fine-tuning ensures high accuracy without overburdening computational resources.

Success Stories of Training from Scratch

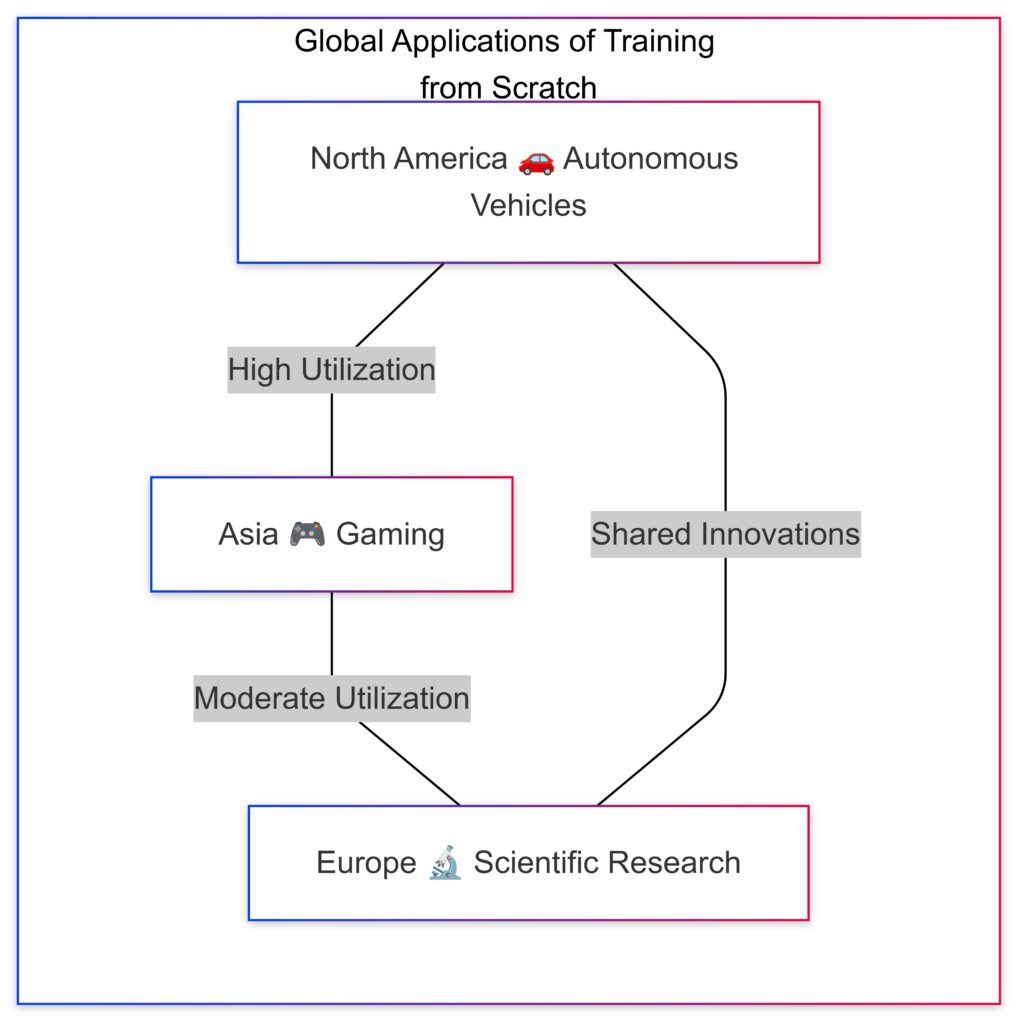

Figure: utilization gradient by region, with moderate utilization in Asia and Europe and high utilization in North America.

Autonomous Vehicles

Training AI from scratch is common in self-driving car systems.

- These models are trained on massive datasets with millions of miles of driving data.

- Custom architectures are developed to process sensor fusion, combining LIDAR, cameras, and radar data.

Here, scratch training is essential due to the unique complexity of real-time driving conditions.

Scientific Research

Researchers often train models from scratch to explore new frontiers in AI.

- Example: AI models for protein folding or quantum computing simulations.

- These fields require bespoke algorithms that aren’t covered by existing pre-trained models.

Gaming and Simulation

Game AI built on learned agents is often trained from scratch.

- Custom-built reinforcement learning models allow game developers to create adaptive, intelligent NPCs (non-player characters).

- Fine-tuning is rarely applicable since the tasks are too game-specific.

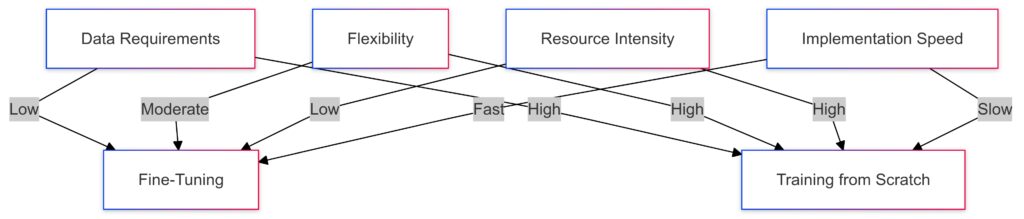

Common Challenges in Each Approach

| Factor | Fine-Tuning | Training from Scratch |
|---|---|---|
| Data Requirements | Low | High |
| Flexibility | Moderate | High |
| Resource Intensity | Low | High |
| Implementation Speed | Fast | Slower |

Challenges in Fine-Tuning

- Overfitting Risk: Fine-tuning on a small dataset can lead to overfitting, where the model performs well only on that dataset.

- Model Compatibility: Not all pre-trained models are suited for your use case. For example, adapting a text-based model for tabular data might not work well.

- Dependence on Pre-Trained Models: Fine-tuning limits innovation, as you rely on an existing model’s architecture and assumptions.

Challenges in Training from Scratch

- Data Requirements: Building a model from scratch often demands millions of labeled examples. For smaller organizations, this can be a major roadblock.

- Infrastructure Needs: Training large models from scratch requires specialized infrastructure, such as high-end GPUs or TPUs.

- High Costs: The combination of computational demands, longer training times, and larger datasets makes this approach costly.

How to Avoid Pitfalls

For Fine-Tuning

- Use data augmentation and regularization to reduce the risk of overfitting.

- Evaluate pre-trained models carefully to ensure relevance.

- Test with small batches of fine-tuned data to confirm performance gains.
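Confirming performance gains means scoring the model on data it never saw during fine-tuning. A minimal sketch of a reproducible hold-out split follows; the function names, the 20% validation fraction, and the accuracy figures are illustrative assumptions.

```python
import random


def train_val_split(examples, val_fraction=0.2, seed=0):
    """Shuffle with a fixed seed, then hold out a validation slice."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def overfitting_gap(train_accuracy, val_accuracy):
    """A large positive gap suggests the model memorized the training set."""
    return train_accuracy - val_accuracy


train, val = train_val_split(range(100))
print(len(train), len(val))

# Hypothetical accuracies measured after a fine-tuning run.
print(f"gap: {overfitting_gap(0.99, 0.80):.2f}")
```

If the gap keeps growing from one epoch to the next, that is usually the cue to stop training, add regularization, or collect more data.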

For Training from Scratch

- Start with a proof of concept before committing resources to full-scale training.

- Use synthetic data or simulations if real-world data is limited.

- Consider hybrid approaches, like pre-training with public datasets and fine-tuning for your unique needs.

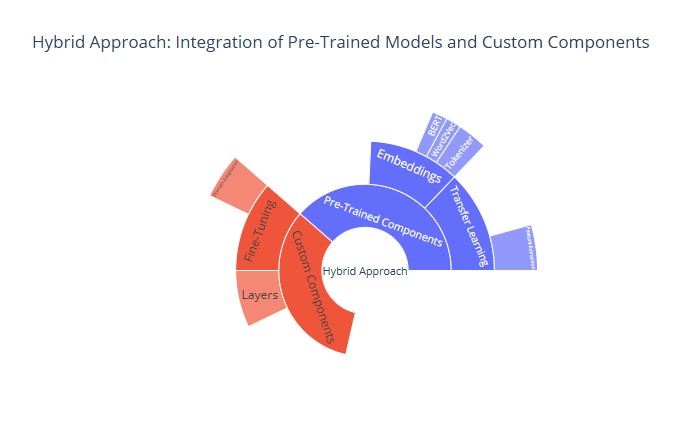

Exploring the Hybrid Approach

What is the Hybrid Approach?

The hybrid approach combines the strengths of both fine-tuning and training from scratch.

- Typically, you start with a pre-trained model and refine it by adding customized layers or tweaking its architecture.

- This approach allows for partial reuse of existing knowledge while incorporating task-specific innovations.

When to Use a Hybrid Strategy

- Unique Requirements: When pre-trained models don’t fully meet your needs but offer a solid starting point.

- Resource Constraints: When training a full model from scratch isn’t feasible but fine-tuning alone isn’t enough.

- Transfer Learning with Custom Layers: When additional features are required for specialized tasks.
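The core mechanic of a hybrid setup, a reused base that stays fixed plus new layers that are trained, can be sketched without any framework. Here `frozen_backbone` is a hypothetical stand-in for a pre-trained feature extractor and `train_head` fits a small linear layer on its outputs; the task data is invented for the example.

```python
def frozen_backbone(x):
    """Stand-in for a pre-trained feature extractor; it is never updated."""
    return [x, x * x]


def train_head(data, steps=2000, lr=0.1):
    """Fit a linear head (weights and bias) on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            feats = frozen_backbone(x)
            error = sum(w * f for w, f in zip(weights, feats)) + bias - y
            for i, f in enumerate(feats):
                grad_w[i] += 2 * error * f / n
            grad_b += 2 * error / n
        # Only the head's parameters receive updates.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Hypothetical task data generated from y = 2x^2 - x + 0.5.
data = [(x, 2 * x * x - x + 0.5) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
weights, bias = train_head(data)
print([round(w, 3) for w in weights], round(bias, 3))
```

In a real framework the same idea is expressed by marking the base model’s parameters as non-trainable and passing only the new layers’ parameters to the optimizer.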

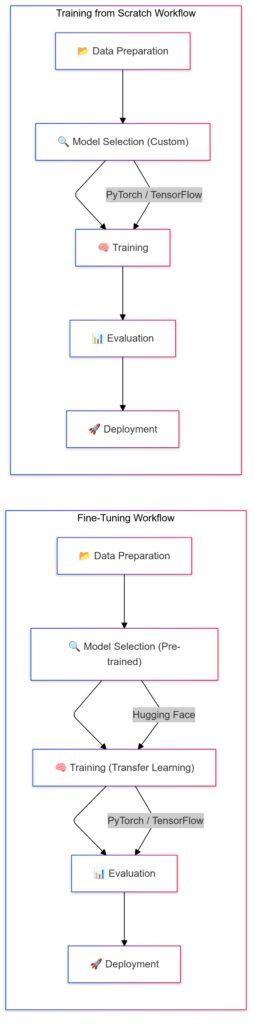

Tools and Frameworks for Fine-Tuning

Figure summary: PyTorch and TensorFlow handle training and evaluation in both workflows. The key difference is that fine-tuning leverages pre-trained models, reducing training complexity, while training from scratch requires extensive data and computational resources.

Hugging Face Transformers

One of the most popular libraries for fine-tuning pre-trained models.

- Offers support for a wide range of models like BERT, GPT, and RoBERTa.

- Features user-friendly APIs and pre-trained weights to jump-start projects.

TensorFlow and Keras

These frameworks allow for seamless integration of fine-tuning workflows.

- Use transfer learning features with pre-built models like MobileNet or EfficientNet.

- Ideal for image classification or object detection tasks.

PyTorch

PyTorch provides a highly flexible environment for fine-tuning.

- Its dynamic computation graphs make it easy to customize model layers.

- Pre-trained models available via TorchVision and Hugging Face.

Tools and Frameworks for Training from Scratch

PyTorch Lightning

A high-level wrapper for PyTorch that simplifies training pipelines.

- Reduces boilerplate code, allowing developers to focus on model architecture and innovation.

TensorFlow with TPU Support

Training from scratch often involves heavy computation, which TensorFlow optimizes for TPU accelerators.

- It’s perfect for large-scale projects requiring extensive resources.

DeepSpeed by Microsoft

A tool designed for efficiently training massive AI models.

- Features like ZeRO (Zero Redundancy Optimizer) and parallelism techniques make it well suited to resource-intensive training tasks.
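To see why ZeRO (the Zero Redundancy Optimizer in DeepSpeed) matters, it helps to count memory. The sketch below uses the accounting from the ZeRO work: with mixed-precision Adam, each parameter carries about 16 bytes of model state (2 for fp16 weights, 2 for fp16 gradients, 12 for fp32 optimizer state), and each ZeRO stage shards one more of those pieces across devices. The function name is hypothetical, and activations, buffers, and fragmentation are deliberately ignored, so treat the results as rough lower bounds.

```python
def model_state_gb(num_params, num_devices=1, zero_stage=0):
    """Approximate per-device model-state memory in GB (1 GB = 1e9 bytes)."""
    weights = 2 * num_params     # fp16 parameters
    gradients = 2 * num_params   # fp16 gradients
    optimizer = 12 * num_params  # fp32 weights copy + Adam momentum + variance

    if zero_stage >= 1:          # stage 1 shards optimizer state
        optimizer /= num_devices
    if zero_stage >= 2:          # stage 2 also shards gradients
        gradients /= num_devices
    if zero_stage >= 3:          # stage 3 also shards the parameters
        weights /= num_devices

    return (weights + gradients + optimizer) / 1e9


# Illustrative model size and device count.
for stage in range(4):
    gb = model_state_gb(7.5e9, num_devices=64, zero_stage=stage)
    print(f"ZeRO stage {stage}: {gb:.1f} GB per device")
```

Without sharding, the model state of a 7.5-billion-parameter model alone exceeds the memory of any single accelerator in common use, which is why from-scratch training at this scale depends on tooling of this kind.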

Practical Use Cases of the Hybrid Approach

Industrial Applications

For tasks like predictive maintenance in manufacturing, hybrid approaches shine.

- Pre-trained models on general sensor data are fine-tuned, while specialized layers are added for specific machinery.

Cross-Domain Tasks

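Models trained on natural language (e.g., GPT) can be adapted to code generation by further training on programming-specific datasets, with extra layers added where the task calls for them.

- Example: OpenAI Codex, a GPT model fine-tuned on source code and used for AI programming assistants.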

Innovative Research

The hybrid approach supports experimentation while leveraging existing knowledge.

- Example: Customizing language models for low-resource languages by combining base models and new linguistic layers.

Comparing Popular AI Workflows

Cost and Time Comparison

Comparing time, costs, and resources for fine-tuning, training from scratch, and the hybrid approach.

| Approach | Cost | Time Investment | Scalability |
|---|---|---|---|
| Fine-Tuning | Low to Moderate | Hours to Days | High |
| Training from Scratch | High | Weeks to Months | Moderate |
| Hybrid | Moderate | Days to Weeks | High |

Customization Potential

| Approach | Flexibility | Use Case Diversity |
|---|---|---|
| Fine-Tuning | Medium | Wide Range |
| Training from Scratch | High | Highly Specialized Tasks |
| Hybrid | Very High | Both General and Specific |

Actionable Steps to Choose the Right Approach

Step 1: Define Your Project’s Goals

Start by identifying what you want your AI to achieve.

- Is it a general-purpose application or a highly specialized task?

- Understanding this will help you choose between pre-trained versatility (fine-tuning) or full customization (training from scratch).

Step 2: Evaluate Data Availability

Assess the size and quality of your dataset.

- Limited Data: Opt for fine-tuning or a hybrid approach.

- Extensive Data: Training from scratch becomes feasible if you have millions of examples.

Step 3: Analyze Resource Constraints

Examine your available infrastructure, time, and budget.

- Low Resources: Fine-tuning is cost-effective and less resource-intensive.

- Generous Resources: Training from scratch allows for deeper experimentation and flexibility.

Step 4: Consider Task Complexity

Some tasks align better with one approach over another:

- Domain-Specific Tasks: Fine-tune pre-trained models for areas like sentiment analysis or medical diagnostics.

- Highly Novel Tasks: Train from scratch for fields like astrophysics simulations or autonomous robotics.

Step 5: Factor in Long-Term Scalability

Think about the future.

- Fine-tuning is faster to deploy but might limit long-term customization.

- Training from scratch offers complete control, but scalability could require ongoing investment.

- Hybrid approaches balance both, making them ideal for growing projects.

Making the Final Decision

If Fine-Tuning Is Best

- Choose a pre-trained model aligned with your task.

- Use tools like Hugging Face Transformers or Keras to streamline the process.

- Focus on small datasets and efficient workflows.

If Training from Scratch Is Best

- Invest in high-quality data collection and preprocessing.

- Use frameworks like PyTorch Lightning or TensorFlow with TPUs to optimize training.

- Be prepared for longer development cycles but reap the rewards of total customization.

If the Hybrid Approach Fits

- Leverage pre-trained models as a base.

- Add task-specific layers or modify architectures to meet unique needs.

- Combine the speed of fine-tuning with the innovation of scratch training.

By carefully weighing these factors, you’ll be equipped to make the best decision for your AI project. The right approach can save resources, enhance performance, and pave the way for groundbreaking results.

Final Summary

Deciding between fine-tuning and training from scratch boils down to your project’s goals, resources, and complexity. Each approach has its strengths:

- Fine-tuning is perfect for projects needing quick, cost-effective solutions tailored to specific tasks.

- Training from scratch offers unparalleled flexibility and is ideal for cutting-edge innovation or unique requirements.

- The hybrid approach combines the best of both worlds, enabling scalability, efficiency, and customization.

By defining your objectives, evaluating data availability, and considering your constraints, you can confidently choose the most effective path for your AI project. Whether you’re enhancing pre-trained models or building one from the ground up, your decision will shape the foundation for a successful AI solution.

Let the AI revolution in your project begin!

FAQs

Can I fine-tune a model if I have limited computing resources?

Yes! Fine-tuning is resource-friendly. It can often be performed on a single GPU or even a CPU for smaller models.

For instance, startups in e-commerce often fine-tune recommendation systems using only limited cloud-based hardware, saving time and costs.

What are the benefits of training a model from scratch?

Training from scratch provides:

- Complete control over architecture and parameters.

- The ability to tackle highly specialized tasks for which no pre-trained models exist.

Example: Scientists developing AI for protein folding simulations train their models from scratch since off-the-shelf models don’t fit this highly specific task.

Is it possible to combine fine-tuning and training from scratch?

Yes! The hybrid approach combines the best aspects of both. Start with a pre-trained model, then add custom layers or adjust its architecture to better fit your needs.

For example, in AI-powered legal tech, companies fine-tune language models for general text processing but train custom components from scratch for case law prediction.

How does data size affect my choice?

- Fine-tuning works well with small datasets because the model already has general knowledge.

- Training from scratch requires large datasets to learn effectively, often in the range of millions of labeled examples.

Example: Fine-tuning a language model for historical document analysis might only need a few thousand examples, but training a new one for the same task could require significantly more.

What are the main risks of fine-tuning?

Fine-tuning risks include:

- Overfitting if the dataset is too small.

- The potential for the model to retain biases from its original pre-training data.

Mitigation tip: Augment your data with varied examples and test the model extensively before deployment.

Can I use open-source tools for both approaches?

Absolutely! Open-source tools like Hugging Face Transformers and PyTorch support both fine-tuning and training from scratch.

Example: Developers can fine-tune BERT for document classification or build a fully custom architecture with PyTorch for real-time object detection in drones.

Which approach is faster to implement?

Fine-tuning is significantly faster. Pre-trained models reduce training time from weeks or months to just hours or days.

Example: A company deploying AI chatbots can fine-tune GPT for its domain-specific needs within days, compared to months of scratch training.

Is fine-tuning scalable for long-term projects?

Yes, fine-tuning is scalable but may require periodic updates as tasks evolve. For long-term scalability, the hybrid approach offers more flexibility by enabling deeper customization when needed.

Example: A music recommendation system might start with fine-tuning but incorporate custom-trained components later to address unique user behavior trends.

Can I switch from fine-tuning to training from scratch later?

Yes, you can start with fine-tuning and transition to training from scratch as your resources or requirements grow.

- Fine-tuning is great for prototyping and testing feasibility.

- Once you understand the limitations or need greater customization, training from scratch offers more freedom.

Example: A fintech startup fine-tunes a pre-trained model for fraud detection but later trains a custom model from scratch to handle more complex financial data.

What role does transfer learning play in fine-tuning?

Transfer learning is the foundation of fine-tuning. It allows you to:

- Leverage a model pre-trained on a general task and adapt it to a specific task.

Example: Using a model trained on Wikipedia articles to fine-tune for financial sentiment analysis, where the general language knowledge is invaluable.

What are the computational costs of training from scratch?

Training from scratch demands significantly higher computational resources, including:

- Multi-GPU setups or TPUs.

- Weeks or months of continuous processing time.

Example: OpenAI’s GPT models required thousands of GPUs and substantial energy to train from scratch, making it a costly endeavor.

Can fine-tuning handle real-time applications?

Yes, fine-tuning can create real-time solutions effectively. Once trained, these models can process tasks quickly, thanks to their pre-trained foundation.

- Applications: Real-time translation, fraud detection, or personalized recommendations.

Example: An AI-powered travel app fine-tunes a model to provide instant multilingual support for tourists.

What kind of expertise is required for each approach?

- Fine-Tuning: Requires basic knowledge of frameworks like Hugging Face or TensorFlow. Perfect for teams with limited AI expertise.

- Training from Scratch: Demands deep understanding of machine learning, optimization algorithms, and infrastructure management.

Example: A startup with general developers can use fine-tuning, but a research lab with ML PhDs might opt for training from scratch.

How do hybrid approaches improve project efficiency?

Hybrid approaches optimize both time and accuracy. By using pre-trained models as a base and adding customized elements, you achieve:

- Faster development than scratch training.

- Greater flexibility than pure fine-tuning.

Example: A retailer might fine-tune an existing recommendation system but train a custom price optimization layer to handle unique seasonal trends.

What are common mistakes in fine-tuning?

- Overfitting: Using too small a dataset or not enough regularization.

- Ignoring Pre-Trained Model Limitations: Assuming all models are equally adaptable.

- Insufficient Validation: Not testing thoroughly across diverse scenarios.

Example: A company fine-tuning a chatbot for tech support might find it failing to handle uncommon user queries due to over-reliance on specific examples.

Are hybrid approaches becoming the future of AI development?

Yes, hybrid methods are gaining traction. They offer the best balance of resource efficiency, scalability, and customization.

- Parameter-efficient techniques like LoRA (Low-Rank Adaptation) train small added matrices while the pre-trained weights stay frozen, so no full retraining is needed.

- Popular in industries that need scalable yet domain-specific solutions.

Example: An educational platform combines a pre-trained language model with custom layers for adaptive learning based on student performance.
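The savings from LoRA come down to simple arithmetic. Instead of updating a full weight matrix of shape d_out × d_in, LoRA trains two thin matrices of shapes d_out × r and r × d_in. The sketch below counts the trainable parameters for one such matrix; the layer size and rank are illustrative choices, not recommendations.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for the two low-rank factors (d_out x r, r x d_in)."""
    return rank * (d_out + d_in)


d_out, d_in, rank = 4096, 4096, 8  # illustrative sizes
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}):    {lora:,} trainable parameters")
print(f"fraction trained: {lora / full:.4%}")
```

For this layer, the low-rank update trains well under one percent of the parameters that full fine-tuning would touch, which is where the memory and storage savings come from.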

How can I measure success for each approach?

Success metrics vary depending on the approach:

- Fine-Tuning: Accuracy improvement on a small dataset with minimal computational cost.

- Training from Scratch: Model performance compared to state-of-the-art results in similar tasks.

- Hybrid: Balance between resource efficiency and task-specific innovation.

Example: A content platform fine-tunes a model to recommend articles. Success is measured by increased click-through rates, not necessarily achieving perfect accuracy.

Can I use pre-trained models for non-standard data types?

Yes, but it may require adaptations. Pre-trained models are often designed for specific data types (e.g., text, images). If your data is unconventional (e.g., genomic sequences, graphs), you can:

- Fine-tune pre-trained models adapted to related domains.

- Use hybrid approaches by combining pre-trained components with custom modules for non-standard data.

Example: A research lab fine-tunes a transformer model originally built for text to analyze DNA sequences by reformatting input data.

How does model size affect fine-tuning and training from scratch?

- Fine-Tuning: Works well with large pre-trained models (e.g., GPT-style language models or BERT). These models already encapsulate generalized knowledge, making them ideal for transfer learning.

- Training from Scratch: The larger the model, the more resources and data you’ll need to ensure effective training.

Example: Fine-tuning a large pre-trained language model for customer support is feasible, whether through a provider’s fine-tuning service or on openly available weights, but training a model of similar size from scratch requires millions of dollars in infrastructure.

Can fine-tuning reduce ethical risks in AI?

Yes, fine-tuning allows you to address ethical concerns by carefully curating your fine-tuning dataset:

- Bias Mitigation: Use balanced datasets to reduce bias present in the pre-trained model.

- Domain Relevance: Avoid unintended consequences by aligning the model with specific tasks and guidelines.

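Example: Fine-tuning a chatbot for healthcare can focus on non-discriminatory language and adherence to the organization’s ethical guidelines. Regulations such as HIPAA govern how patient data is handled, so compliance has to be addressed in the data pipeline and deployment as well, not by fine-tuning alone.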

How do pre-trained models handle multilingual tasks?

Pre-trained models like mBERT and XLM-R are designed for multilingual tasks and can be fine-tuned to improve performance in specific languages.

- Fine-tuning these models with a localized dataset enhances their performance in specific regions.

- Training from scratch for multilingual tasks is extremely resource-intensive and rarely necessary.

Example: A content platform fine-tunes a multilingual model to improve search relevance in underrepresented languages like Swahili.

What industries benefit most from fine-tuning?

Fine-tuning thrives in industries with specific needs but limited resources for scratch training:

- Healthcare: Diagnosing diseases from medical imaging.

- E-Commerce: Personalized product recommendations.

- Education: Adaptive learning systems for diverse topics.

Example: An online education platform fine-tunes a language model to assess and grade student essays efficiently.

Can transfer learning handle streaming or dynamic data?

Yes, transfer learning (the core of fine-tuning) can be adapted to handle dynamic data by incorporating online learning techniques:

- Fine-tune models periodically as new data becomes available.

- Combine pre-trained models with real-time updates to adapt to changing trends.

Example: A social media company fine-tunes a model to detect trending hashtags and updates it regularly to reflect current events.
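One simple way to decide when to fine-tune again is to watch accuracy over a rolling window of recent predictions and flag a refresh when it dips. The class below is a hypothetical sketch; the window size and accuracy threshold are assumptions you would tune for your own traffic.

```python
from collections import deque


class RetrainTrigger:
    """Signal a new fine-tuning round when rolling accuracy drops too low."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.results = deque(maxlen=window)  # keeps only the latest outcomes
        self.min_accuracy = min_accuracy

    def record(self, correct):
        """Store one outcome; return True when a refresh looks warranted."""
        self.results.append(bool(correct))
        window_full = len(self.results) == self.results.maxlen
        accuracy = sum(self.results) / len(self.results)
        return window_full and accuracy < self.min_accuracy


trigger = RetrainTrigger(window=4, min_accuracy=0.75)
outcomes = [True, True, True, True, False, False]
signals = [trigger.record(ok) for ok in outcomes]
print(signals)
```

Waiting for a full window before signaling avoids reacting to the first few noisy predictions after deployment.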

How does overfitting differ between fine-tuning and scratch training?

- Fine-Tuning: Overfitting is more common because pre-trained models might memorize a small fine-tuning dataset.

- Training from Scratch: Overfitting is less likely on large datasets but can occur if training continues for too many epochs.

Mitigation strategies:

- Use early stopping for scratch training.

- Apply data augmentation and dropout layers during fine-tuning.

Example: An AI model fine-tuned for stock market predictions must avoid overfitting to past trends that no longer apply.
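Early stopping is easy to express in code: track the best validation loss seen so far and stop once it has failed to improve for a set number of epochs. In this sketch the function name, the `patience` default, and the loss values are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch

    # Never ran out of patience: training used every epoch.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses, one per epoch.
losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.9]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(f"stop at epoch {stop_epoch}, restore weights from epoch {best_epoch}")
```

In practice you would save a checkpoint whenever a new best loss appears, so the weights from `best_epoch` can be restored after stopping.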

What role do cloud services play in both approaches?

Cloud services like AWS, Google Cloud, and Azure simplify both fine-tuning and scratch training by providing:

- Pre-trained models for quick adaptation.

- Scalable infrastructure for heavy computations in scratch training.

Example: A startup uses Google Cloud’s Vertex AI to fine-tune a text classification model with minimal setup, while a research lab uses AWS EC2 instances for scratch training on genomics data.

Is data preprocessing different for fine-tuning vs. training from scratch?

Yes, preprocessing varies:

- Fine-Tuning: Focus on ensuring the dataset aligns with the pre-trained model’s input requirements. For instance, tokenization for text or resizing for images.

- Training from Scratch: Requires comprehensive preprocessing to clean and format raw data for effective training.

Example: When fine-tuning a vision model, resizing images to match the model’s expected dimensions (e.g., 224×224) is key, whereas scratch training involves deeper exploration of pixel-level transformations.
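For text, matching a pre-trained model’s input requirements usually means bringing every tokenized sequence to one fixed length. The helper below is a minimal sketch of that step; the function name is hypothetical, and the padding ID of 0 is an assumption, since the right value depends on the tokenizer that ships with the model.

```python
def pad_or_truncate(token_ids, max_length, pad_id=0):
    """Clip or pad a token ID list to max_length and build its attention mask."""
    clipped = list(token_ids[:max_length])
    padding = max_length - len(clipped)
    input_ids = clipped + [pad_id] * padding
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = [1] * len(clipped) + [0] * padding
    return input_ids, attention_mask


short_ids, short_mask = pad_or_truncate([5, 6, 7], max_length=5)
long_ids, long_mask = pad_or_truncate([1, 2, 3, 4, 5, 6], max_length=4)
print(short_ids, short_mask)
print(long_ids, long_mask)
```

Tokenizer libraries normally handle padding and truncation for you; the point here is only to show what that preprocessing produces.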

How can I monitor and evaluate model performance in both approaches?

- Fine-Tuning: Use validation metrics like accuracy, F1 score, or BLEU scores to track improvements over the pre-trained baseline.

- Training from Scratch: Track loss functions and performance on both training and validation datasets across epochs.

Tools like TensorBoard or Weights & Biases simplify monitoring for both methods.

Example: Fine-tuning a translation model might focus on BLEU score improvements, while scratch training a classifier emphasizes reducing the validation loss over time.
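To track improvement over the pre-trained baseline, compute the same metric for both models on the same held-out labels. The sketch below implements precision, recall, and F1 for a binary task in plain Python; the labels and predictions are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical held-out labels and predictions.
y_true = [1, 1, 1, 0, 0, 0]
baseline_pred = [1, 0, 0, 1, 0, 0]   # pre-trained model, before fine-tuning
finetuned_pred = [1, 1, 0, 1, 0, 0]  # same model, after fine-tuning

_, _, baseline_f1 = precision_recall_f1(y_true, baseline_pred)
_, _, finetuned_f1 = precision_recall_f1(y_true, finetuned_pred)
print(f"baseline F1:   {baseline_f1:.3f}")
print(f"fine-tuned F1: {finetuned_f1:.3f}")
```

Comparing both models on identical data keeps the comparison fair; a metric computed on different samples says little about whether fine-tuning helped.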

Resources

Documentation and Tutorials

- Hugging Face Transformers: A go-to resource for fine-tuning language and vision models. Their documentation offers step-by-step guides for using pre-trained models like GPT and BERT. Tutorial: Fine-tune BERT for text classification.

- PyTorch: PyTorch’s official documentation provides a deep dive into training models from scratch, including dynamic computation graphs and pre-trained tools via TorchVision. Tutorial: Transfer Learning with PyTorch.

- TensorFlow/Keras: TensorFlow’s model garden includes pre-trained models for fine-tuning, while Keras simplifies custom model creation. Tutorial: Transfer learning for image classification.

Pre-Trained Model Repositories

- Model Zoo by PyTorch: Access pre-trained models for vision, natural language processing, and more.

- Hugging Face Model Hub: A massive library of pre-trained models for language, vision, and multimodal tasks.

- TensorFlow Hub: Offers pre-trained models for transfer learning and fine-tuning.

Open Datasets

- Kaggle: A treasure trove of datasets for both fine-tuning and training from scratch, spanning text, images, and structured data.

- Google Dataset Search: Find datasets for niche domains or specific tasks.

- Open Data Portal: Offers datasets from government, academic, and nonprofit sectors.

Computational Tools and Platforms

- Google Colab: Free access to GPUs/TPUs for fine-tuning and lightweight scratch training.

- AWS SageMaker: A robust platform for large-scale fine-tuning and training from scratch with scalable infrastructure.

- NVIDIA GPU Cloud (NGC): Pre-trained models and optimized GPU support for high-performance training.

Books and Courses

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: A foundational text for understanding model training and optimization.

- Fast.ai’s Practical Deep Learning for Coders: A hands-on course focused on transfer learning and building AI models efficiently.

- Coursera: Deep Learning Specialization by Andrew Ng: Covers the basics of training models, fine-tuning, and advanced architectures.

Tools for Experiment Tracking

- Weights & Biases (W&B): Simplifies tracking experiments, hyperparameters, and metrics for both fine-tuning and training from scratch.

- TensorBoard: Visualize training performance, losses, and model graphs.

- MLflow: Manage and track AI development workflows.

Communities and Forums

- Reddit: Machine Learning Subreddit (r/MachineLearning): Engage with an active community discussing fine-tuning, training, and emerging trends.

- Hugging Face Forums: Collaborate and troubleshoot with experts in model fine-tuning.

- Stack Overflow: Get coding help for PyTorch, TensorFlow, or other frameworks.